FADS: An Intelligent Fatigue and Age Detection System

Abstract

1. Introduction

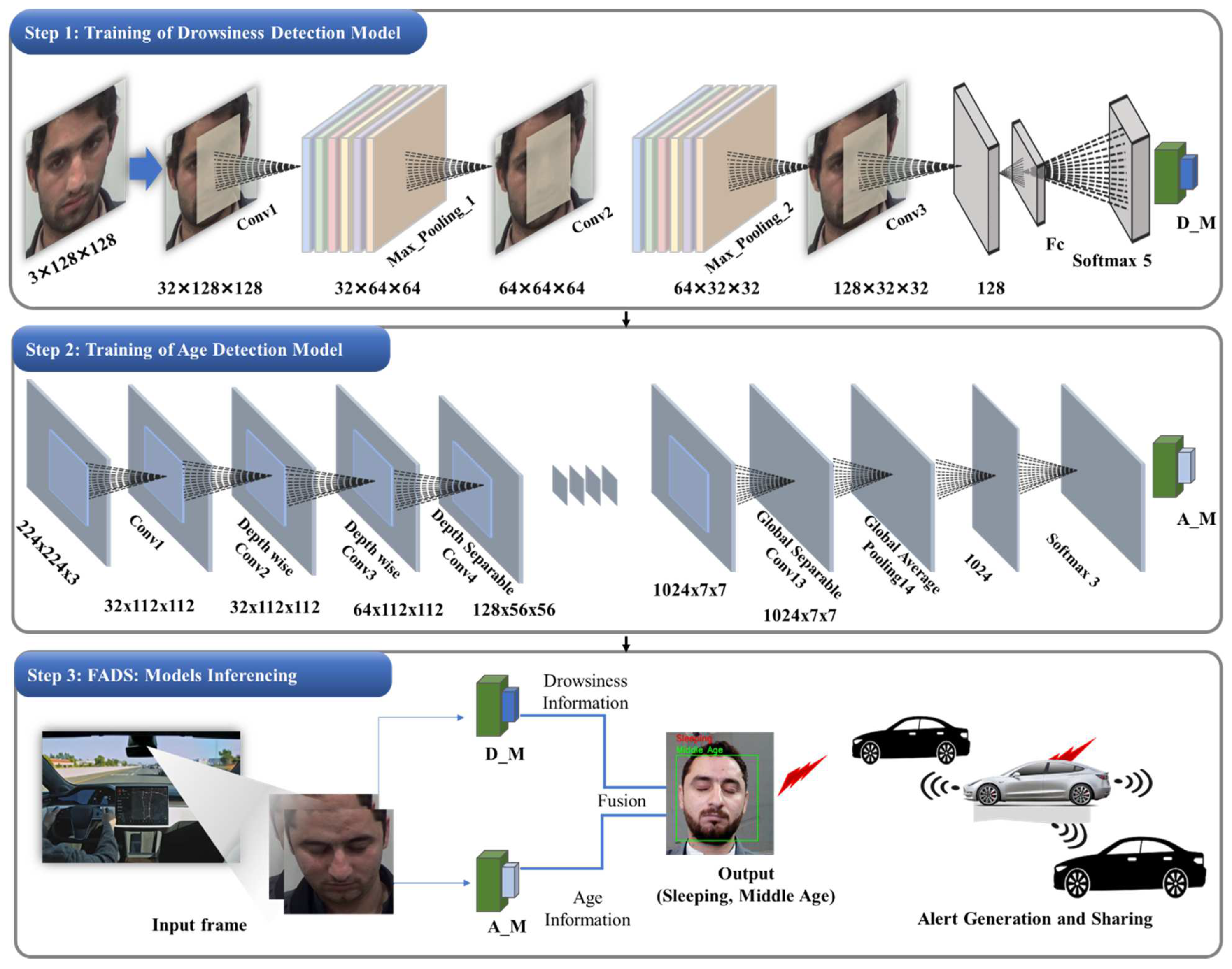

- We developed a deep learning (DL)-assisted FADS that detects the driver’s mood using an easy-to-deploy, resource-constrained vision sensor. By addressing the complexity of existing systems, it avoids high computational costs and enables real-time detection of the driver’s mood.

- Age is an important factor in road accidents, so the proposed FADS extracts facial features to classify the driver’s age. If the classified age falls outside the defined range (age <18 or age >60), an alert is generated to notify nearby vehicles and the authorized department. Drowsiness and driver moods such as anger or sadness are further factors that cause road accidents, so FADS also predicts them from facial features using a lightweight CNN. Monitoring these factors can help prevent most accidents and support safe driving.

- Because no suitable dataset was available, we created a new dataset for FADS as a step toward a smart system; it contains five classes (active, angry, sad, sleepy, and yawning). Furthermore, the UTKFace dataset was categorized into three classes (underage (age <18), middle-age (18 ≤ age ≤ 60), and overage (age >60)) for detailed analysis. This categorization further enhances FADS, which fuses dual features (driver state and age) to reach the optimal outcome needed for smart surveillance.

- Extensive experiments were conducted from different perspectives, and the results against baseline CNNs confirm that the proposed FADS achieves state-of-the-art performance on both the standard and the new dataset, with lower model complexity and good accuracy.
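The alert behavior described above can be illustrated as a simple decision-level combination of the two classifier outputs. This is a minimal sketch, not the authors' implementation (their fusion strategy is the subject of Section 3.4): the names `AgeClass`, `RISKY_STATES`, and `should_alert` are hypothetical, and treating every non-active driver state as alert-worthy is an assumption, since the text only states explicitly that an out-of-range age triggers an alert.

```python
from enum import Enum


class AgeClass(Enum):
    """The three age classes used for UTKFace (hypothetical enum name)."""
    UNDERAGE = "underage"      # age < 18
    MIDDLE_AGE = "middle-age"  # 18 <= age <= 60
    OVERAGE = "overage"        # age > 60


# The five driver states of the FADS dataset.
DRIVER_STATES = {"active", "angry", "sad", "sleepy", "yawning"}

# ASSUMPTION: every state other than "active" is treated as risky.
RISKY_STATES = DRIVER_STATES - {"active"}


def should_alert(age_class: AgeClass, driver_state: str) -> bool:
    """Combine the age and driver-state predictions into one alert decision."""
    if driver_state not in DRIVER_STATES:
        raise ValueError(f"unknown driver state: {driver_state!r}")
    # Age outside the 18-60 range triggers an alert, as stated in the text.
    age_risk = age_class is not AgeClass.MIDDLE_AGE
    # Drowsiness or a negative mood triggers an alert (assumption).
    state_risk = driver_state in RISKY_STATES
    return age_risk or state_risk


print(should_alert(AgeClass.MIDDLE_AGE, "active"))   # no risk factor
print(should_alert(AgeClass.OVERAGE, "active"))      # age outside the range
print(should_alert(AgeClass.MIDDLE_AGE, "yawning"))  # drowsiness
```

A rule of this form keeps the two CNNs independent, so either model can be swapped or retrained without changing the alert logic.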

2. Literature Review

3. Fatigue and Age Detection System

3.1. Face Detection

3.2. Driver Drowsiness Detection

3.3. Driver Age Classification

3.4. Fusion Strategy in FADS

4. Results and Discussion

4.1. System Configuration and Evaluation

4.2. Dataset Explanation

4.3. Performance Comparison of Different Edge Devices

4.4. Results of Drowsiness Detection

4.5. Results of Age Classification

4.6. Time Complexity Analysis

4.7. Qualitative Analysis of the Proposed System

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lin, C.; Han, G.; Du, J.; Xu, T.; Peng, Y. Adaptive traffic engineering based on active network measurement towards software defined internet of vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 22, 3697–3706.

2. Peden, M.; Scurfield, R.; Sleet, D.; Mohan, D.; Hyder, A.A.; Jarawan, E.; Mathers, C. World Report on Road Traffic Injury Prevention; World Health Organization: Geneva, Switzerland, 2004.

3. World Health Organization. Association for Safe International Road Travel. Faces behind Figures: Voices of Road Traffic Crash Victims and Their Families; World Health Organization: Geneva, Switzerland, 2007.

4. National Safety Council. Drivers are Falling Asleep Behind the Wheel. 2020. Available online: https://www.nsc.org/road/safety-topics/fatigued-driver (accessed on 1 January 2023).

5. Vennelle, M.; Engleman, H.M.; Douglas, N.J. Sleepiness and sleep-related accidents in commercial bus drivers. Sleep Breath. 2010, 14, 39–42.

6. de Castro, J.R.; Gallo, J.; Loureiro, H. Tiredness and sleepiness in bus drivers and road accidents in Peru: A quantitative study. Rev. Panam. Salud Publica (Pan Am. J. Public Health) 2004, 16, 11–18.

7. Lenné, M.G.; Jacobs, E.E. Predicting drowsiness-related driving events: A review of recent research methods and future opportunities. Theor. Issues Ergon. Sci. 2016, 17, 533–553.

8. Tefft, B.C. Prevalence of motor vehicle crashes involving drowsy drivers, United States, 1999–2008. Accid. Anal. Prev. 2012, 45, 180–186.

9. Armstrong, K.; Filtness, A.J.; Watling, C.N.; Barraclough, P.; Haworth, N. Efficacy of proxy definitions for identification of fatigue/sleep-related crashes: An Australian evaluation. Transp. Res. Part F Traffic Psychol. Behav. 2013, 21, 242–252.

10. Centers for Disease Control and Prevention. Drowsy driving: 19 states and the District of Columbia, 2009–2010. MMWR Morb. Mortal. Wkly. Rep. 2013, 61, 1033–1037.

11. Williamson, A.; Friswell, R. The effect of external non-driving factors, payment type and waiting and queuing on fatigue in long distance trucking. Accid. Anal. Prev. 2013, 58, 26–34.

12. Hassall, K. Do ‘safe rates’ actually produce safety outcomes? A decade of experience from Australia. In HVTT14: International Symposium on Heavy Vehicle Transport Technology, 14th ed.; HVTT Forum: Rotorua, New Zealand, 2016.

13. Kalra, N. Challenges and Approaches to Realizing Autonomous Vehicle Safety; RAND: Santa Monica, CA, USA, 2017.

14. Ballesteros, M.F.; Webb, K.; McClure, R.J. A review of CDC’s Web-based Injury Statistics Query and Reporting System (WISQARS™): Planning for the future of injury surveillance. J. Saf. Res. 2017, 61, 211–215.

15. Deng, W.; Wu, R. Real-time driver-drowsiness detection system using facial features. IEEE Access 2019, 7, 118727–118738.

16. Zhao, L.; Wang, Z.; Wang, X.; Liu, Q. Driver drowsiness detection using facial dynamic fusion information and a DBN. IET Intell. Transp. Syst. 2017, 12, 127–133.

17. Massoz, Q.; Langohr, T.; François, C.; Verly, J.G. The ULg multimodality drowsiness database (called DROZY) and examples of use. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–7.

18. Tsaur, W.J.; Yeh, L.Y. DANS: A Secure and Efficient Driver-Abnormal Notification Scheme with IoT Devices Over IoV. IEEE Syst. J. 2018, 13, 1628–1639.

19. Xing, Y.; Lv, C.; Zhang, Z.; Wang, H.; Na, X.; Cao, D.; Velenis, E.; Wang, F.-Y. Identification and analysis of driver postures for in-vehicle driving activities and secondary tasks recognition. IEEE Trans. Comput. Soc. Syst. 2017, 5, 95–108.

20. Yu, J.; Park, S.; Lee, S.; Jeon, M. Driver drowsiness detection using condition-adaptive representation learning framework. IEEE Trans. Intell. Transp. Syst. 2018, 20, 4206–4218.

21. Dua, M.; Singla, R.; Raj, S.; Jangra, A. Deep CNN models-based ensemble approach to driver drowsiness detection. Neural Comput. Appl. 2021, 33, 3155–3168.

22. Moujahid, A.; Dornaika, F.; Arganda-Carreras, I.; Reta, J. Efficient and compact face descriptor for driver drowsiness detection. Expert Syst. Appl. 2021, 168, 114334.

23. Karuna, Y.; Reddy, G.R. Broadband subspace decomposition of convoluted speech data using polynomial EVD algorithms. Multimed. Tools Appl. 2020, 79, 5281–5299.

24. Ji, Y.; Wang, S.; Zhao, Y.; Wei, J.; Lu, Y. Fatigue state detection based on multi-index fusion and state recognition network. IEEE Access 2019, 7, 64136–64147.

25. Ghoddoosian, R.; Galib, M.; Athitsos, V. A realistic dataset and baseline temporal model for early drowsiness detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; IEEE: Piscataway, NJ, USA, 2019.

26. Sai, P.-K.; Wang, J.-G.; Teoh, E.-K. Facial age range estimation with extreme learning machines. Neurocomputing 2015, 149, 364–372.

27. Lu, J.; Liong, V.E.; Zhou, J. Cost-sensitive local binary feature learning for facial age estimation. IEEE Trans. Image Process. 2015, 24, 5356–5368.

28. Huerta, I.; Fernández, C.; Segura, C.; Hernando, J.; Prati, A. A deep analysis on age estimation. Pattern Recognit. Lett. 2015, 68, 239–249.

29. Ranjan, R.; Zhou, S.; Chen, J.C.; Kumar, A.; Alavi, A.; Patel, V.M.; Chellappa, R. Unconstrained age estimation with deep convolutional neural networks. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 109–117.

30. Han, H.; Jain, A.K.; Wang, F.; Shan, S.; Chen, X. Heterogeneous face attribute estimation: A deep multi-task learning approach. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2597–2609.

31. Dornaika, F.; Arganda-Carreras, I.; Belver, C. Age estimation in facial images through transfer learning. Mach. Vis. Appl. 2019, 30, 177–187.

32. Rothe, R.; Timofte, R.; van Gool, L. Deep expectation of real and apparent age from a single image without facial landmarks. Int. J. Comput. Vis. 2018, 126, 144–157.

33. Shen, W.; Guo, Y.; Wang, Y.; Zhao, K.; Wang, B.; Yuille, A.L. Deep regression forests for age estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2304–2313.

34. Taheri, S.; Toygar, Ö. On the use of DAG-CNN architecture for age estimation with multi-stage features fusion. Neurocomputing 2019, 329, 300–310.

35. Lou, Z.; Alnajar, F.; Alvarez, J.M.; Hu, N.; Gevers, T. Expression-invariant age estimation using structured learning. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 365–375.

36. Liu, H.; Lu, J.; Feng, J.; Zhou, J. Group-aware deep feature learning for facial age estimation. Pattern Recognit. 2017, 66, 82–94.

37. Ullah, F.U.M.; Obaidat, M.S.; Ullah, A.; Muhammad, K.; Hijji, M.; Baik, S.W. A Comprehensive Review on Vision-based Violence Detection in Surveillance Videos. ACM Comput. Surv. 2022, 55, 1–44.

38. Sajjad, M.; Nasir, M.; Ullah, F.U.M.; Muhammad, K.; Sangaiah, A.K.; Baik, S.W. Raspberry Pi assisted facial expression recognition framework for smart security in law-enforcement services. Inf. Sci. 2019, 479, 416–431.

39. Sun, X.; Wu, P.; Hoi, S.C. Face detection using deep learning: An improved faster RCNN approach. Neurocomputing 2018, 299, 42–50.

40. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015; pp. 91–99.

41. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

42. Ullah, W.; Ullah, A.; Hussain, T.; Khan, Z.A.; Baik, S.W. An Efficient Anomaly Recognition Framework Using an Attention Residual LSTM in Surveillance Videos. Sensors 2021, 21, 2811.

43. Yar, H.; Hussain, T.; Khan, Z.A.; Koundal, D.; Lee, M.Y.; Baik, S.W. Vision Sensor-Based Real-Time Fire Detection in Resource-Constrained IoT Environments. Comput. Intell. Neurosci. 2021, 2021, 5195508.

44. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

45. Khan, S.U.; Hussain, T.; Ullah, A.; Baik, S.W. Deep-ReID: Deep features and autoencoder assisted image patching strategy for person re-identification in smart cities surveillance. Multimed. Tools Appl. 2021, 1–22.

46. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

47. Yar, H.; Hussain, T.; Agarwal, M.; Khan, Z.A.; Gupta, S.K.; Baik, S.W. Optimized Dual Fire Attention Network and Medium-Scale Fire Classification Benchmark. IEEE Trans. Image Process. 2022, 31, 6331–6343.

48. Redmon, J.; Farhadi, A. Darknet: Open Source Neural Networks in C. 2013. Available online: https://pjreddie.com/darknet/ (accessed on 1 January 2023).

49. Ullah, F.U.M.; Obaidat, M.S.; Muhammad, K.; Ullah, A.; Baik, S.W.; Cuzzolin, F.; Rodrigues, J.J.P.C.; de Albuquerque, V.H.C. An intelligent system for complex violence pattern analysis and detection. Int. J. Intell. Syst. 2021, 37, 10400–10422.

50. Ullah, F.U.M.; Muhammad, K.; Haq, I.U.; Khan, N.; Heidari, A.A.; Baik, S.W.; de Albuquerque, V.H.C. AI assisted Edge Vision for Violence Detection in IoT based Industrial Surveillance Networks. IEEE Trans. Ind. Inform. 2021, 18, 5359–5370.

51. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

52. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

53. Wang, H.; Lu, F.; Tong, X.; Gao, X.; Wang, L.; Liao, Z.J.E.R. A model for detecting safety hazards in key electrical sites based on hybrid attention mechanisms and lightweight MobileNet. Energy Rep. 2021, 7, 716–724.

54. Bi, C.; Wang, J.; Duan, Y.; Fu, B.; Kang, J.-R.; Shi, Y. MobileNet based apple leaf diseases identification. Mob. Netw. Appl. 2020, 27, 172–180.

55. Rothe, R.; Timofte, R.; van Gool, L. DEX: Deep expectation of apparent age from a single image. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 10–15.

56. Sajjad, M.; Zahir, S.; Ullah, A.; Akhtar, Z.; Muhammad, K. Human behavior understanding in big multimedia data using CNN based facial expression recognition. Mob. Netw. Appl. 2020, 25, 1611–1621.

57. Zhang, T.; Han, G.; Yan, L.; Peng, Y. Low-Complexity Effective Sound Velocity Algorithm for Acoustic Ranging of Small Underwater Mobile Vehicles in Deep-Sea Internet of Underwater Things. IEEE Internet Things J. 2022, 10, 563–574.

58. Sun, F.; Zhang, Z.; Zeadally, S.; Han, G.; Tong, S. Edge Computing-Enabled Internet of Vehicles: Towards Federated Learning Empowered Scheduling. IEEE Trans. Veh. Technol. 2022, 71, 10088–10103.

59. Rizzo, A.; Burresi, G.; Montefoschi, F.; Caporali, M.; Giorgi, R. Making IoT with UDOO. IxD&A 2016, 30, 95–112.

60. Nasir, M.; Muhammad, K.; Ullah, A.; Ahmad, J.; Baik, S.W.; Sajjad, M. Enabling automation and edge intelligence over resource constraint IoT devices for smart home. Neurocomputing 2022, 491, 494–506.

61. Nayyar, A.; Puri, V.A.; Puri, V. A review of Beaglebone Smart Board’s-A Linux/Android powered low cost development platform based on ARM technology. In Proceedings of the 9th International Conference on Future Generation Communication and Networking (FGCN), Jeju, Republic of Korea, 25–28 November 2015; IEEE: Geneva, Switzerland; pp. 55–63.

62. Yar, H.; Imran, A.S.; Khan, Z.A.; Sajjad, M.; Kastrati, Z. Towards smart home automation using IoT-enabled edge-computing paradigm. Sensors 2021, 21, 4932.

63. Jan, H.; Yar, H.; Iqbal, J.; Farman, H.; Khan, Z.; Koubaa, A. Raspberry pi assisted safety system for elderly people: An application of smart home. In Proceedings of the 2020 First International Conference of Smart Systems and Emerging Technologies (SMARTTECH), Riyadh, Saudi Arabia, 3–5 November 2020; IEEE: Geneva, Switzerland, 2020; pp. 155–160.

64. Cass, S. Nvidia makes it easy to embed AI: The Jetson nano packs a lot of machine-learning power into DIY projects-[Hands on]. IEEE Spectr. 2020, 57, 14–16.

65. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

| Component | Configuration |
|---|---|
| OS | Windows 10 |
| Programming language and IDE | Python 3.7.2, Jupyter Notebook |
| Libraries | TensorFlow, PyLab, NumPy, Keras, Matplotlib |
| Imaging libraries | OpenCV 4.0, scikit-image, scikit-learn |

| Class | Age Group (years) |
|---|---|
| Underage | 6–16 |
| Middle age | 18–60 |
| Overage | 60+ |
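The relabeling of UTKFace into these three classes can be sketched as a small mapping function. Because the table above leaves age 17 unassigned and lists 60 in two rows, this sketch uses the thresholds stated in the Introduction (age <18, 18 ≤ age ≤ 60, age >60) so that every age maps to exactly one class. It relies on the UTKFace file-naming convention `[age]_[gender]_[race]_[date&time].jpg`; the function names `age_to_class` and `label_from_utkface_name` are hypothetical and are not taken from the paper.

```python
from pathlib import Path


def age_to_class(age: int) -> str:
    """Map an integer age to one of the three FADS age classes.

    Thresholds follow the Introduction: underage (<18),
    middle-age (18-60 inclusive), overage (>60).
    """
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 18:
        return "underage"
    if age <= 60:
        return "middle-age"
    return "overage"


def label_from_utkface_name(filename: str) -> str:
    """Read the age from a UTKFace file name and return its class.

    UTKFace encodes labels as [age]_[gender]_[race]_[date&time].jpg,
    so the age is the first underscore-separated field.
    """
    age_field = Path(filename).name.split("_", 1)[0]
    return age_to_class(int(age_field))


for name in ["9_1_0_20170109204001137.jpg", "35_0_2_20170116174525125.jpg",
             "72_1_0_20170110183527520.jpg"]:
    print(name, "->", label_from_utkface_name(name))
```

The boundary ages 17, 18, 60, and 61 are the cases most worth checking when reproducing the split, since they are where the table and the Introduction could disagree.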

| Board | Chip | RAM | OS |
|---|---|---|---|
| UDOO [59] | ARM Cortex-A9 | 1 GB | Debian, Android |
| Phidgets [60] | SBC | 64 MB | Linux |
| BeagleBone [61] | ARM AM335 @ 1 GHz | 512 MB | Linux Angstrom |
| Raspberry Pi 4 [62,63] | Broadcom BCM2711 processor | 2 GB, 4 GB, 8 GB | Raspbian |
| Jetson Nano [64] | Quad-core ARM Cortex-A57 @ 1.43 GHz | 4 GB | All Linux distros |

| Driver State | Precision | Recall | F1-Measure |
|---|---|---|---|
| Active | 0.98 | 1.00 | 0.99 |
| Angry | 1.00 | 0.97 | 0.98 |
| Sad | 0.97 | 1.00 | 0.98 |
| Sleeping | 1.00 | 0.98 | 0.99 |
| Yawning | 0.99 | 1.00 | 0.99 |
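Each F1-measure in the table above is the harmonic mean of the corresponding precision P and recall R, F1 = 2PR / (P + R). The short check below recomputes that column from the reported precision and recall; it uses only the table values, not any model outputs.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per driver state, copied from the table above.
DROWSINESS_RESULTS = {
    "Active": (0.98, 1.00, 0.99),
    "Angry": (1.00, 0.97, 0.98),
    "Sad": (0.97, 1.00, 0.98),
    "Sleeping": (1.00, 0.98, 0.99),
    "Yawning": (0.99, 1.00, 0.99),
}

for state, (p, r, reported) in DROWSINESS_RESULTS.items():
    computed = f1_score(p, r)
    print(f"{state:<9} computed F1 = {computed:.4f}  reported = {reported:.2f}")
```

Rounded to two decimals, every recomputed value matches the reported F1-measure, so the column is internally consistent with the precision and recall columns.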

| Technique | Model Size (MB) | Parameters (Million) | Accuracy (%) |
|---|---|---|---|
| AlexNet [51] | 233 | 60 | 94.0 |
| VGG16 [52] | 528 | 138 | 98.3 |
| ResNet50 [65] | 98 | 20 | 88.0 |
| MobileNet [41] | 13 | 4.2 | 93.5 |
| The proposed system | 15 | 2.2 | 98.0 |
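The claim of lower model complexity with good accuracy can be quantified directly from the table above. The sketch below computes, for each baseline, the percentage of parameters saved by the proposed system and the accuracy difference in percentage points. It compares parameter counts only, because on disk the proposed system (15 MB) is slightly larger than MobileNet (13 MB); the helper name `parameter_reduction` is hypothetical.

```python
# (model size in MB, parameters in millions, accuracy in %), copied from the table above.
BASELINES = {
    "AlexNet": (233, 60.0, 94.0),
    "VGG16": (528, 138.0, 98.3),
    "ResNet50": (98, 20.0, 88.0),
    "MobileNet": (13, 4.2, 93.5),
}
PROPOSED_PARAMS_M = 2.2
PROPOSED_ACCURACY = 98.0


def parameter_reduction(baseline_params: float, proposed_params: float) -> float:
    """Percentage of parameters saved relative to a baseline model."""
    return 100.0 * (1.0 - proposed_params / baseline_params)


for name, (_size_mb, params_m, accuracy) in BASELINES.items():
    saved = parameter_reduction(params_m, PROPOSED_PARAMS_M)
    delta = PROPOSED_ACCURACY - accuracy
    print(f"{name:<10} {saved:5.1f}% fewer parameters, accuracy change {delta:+.1f} pp")
```

For example, against VGG16 the proposed system uses about 98.4% fewer parameters at a cost of 0.3 percentage points of accuracy.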

| Age Class | Precision | Recall | F1-Measure |
|---|---|---|---|
| Middle age | 0.88 | 0.84 | 0.86 |
| Overage | 0.90 | 0.97 | 0.93 |
| Underage | 0.92 | 0.88 | 0.90 |
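A single summary figure for the three age classes can be obtained by macro averaging, which takes the unweighted mean of each metric so that every class counts equally. The table above does not report how many test images each class contains, so a support-weighted average cannot be computed from it; the sketch below is therefore a macro average only, and the helper name `macro_average` is hypothetical.

```python
# (precision, recall, F1) per age class, copied from the table above.
AGE_RESULTS = {
    "Middle age": (0.88, 0.84, 0.86),
    "Overage": (0.90, 0.97, 0.93),
    "Underage": (0.92, 0.88, 0.90),
}


def macro_average(results: dict[str, tuple[float, float, float]]) -> tuple[float, ...]:
    """Unweighted mean of precision, recall, and F1 across all classes."""
    n = len(results)
    totals = [sum(row[i] for row in results.values()) for i in range(3)]
    return tuple(total / n for total in totals)


p, r, f1 = macro_average(AGE_RESULTS)
print(f"macro precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

Macro averaging is a reasonable choice here because the middle-age class has the weakest scores, and an unweighted mean keeps that weakness visible instead of letting a larger class dominate.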

| Technique | Accuracy (%) |
|---|---|
| AlexNet [51] | 77.0 |
| VGG16 [52] | 81.0 |
| ResNet50 [65] | 84.0 |
| The proposed system | 90.0 |

| Fused Models | CPU | GPU | Jetson Nano |
|---|---|---|---|
| AlexNet + MobileNet | 6.37 | 39.87 | 8.01 |
| VGG16 + MobileNet | 5.73 | 33.07 | 6.78 |
| ResNet50 + MobileNet | 8.90 | 42.50 | 13.12 |
| The proposed system | 13.88 | 55.03 | 18.43 |
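The table above does not state its unit. Since higher values favor the proposed system and the table belongs to the time complexity analysis, this sketch assumes the values are throughput in frames per second (FPS); under that assumption, the average processing time per frame is 1000 / FPS milliseconds. The dictionary name `FPS` and the helper `latency_ms` are hypothetical.

```python
# Values copied from the table above.
# ASSUMPTION: they are throughputs in frames per second (FPS).
FPS = {
    "AlexNet + MobileNet": {"CPU": 6.37, "GPU": 39.87, "Jetson Nano": 8.01},
    "VGG16 + MobileNet": {"CPU": 5.73, "GPU": 33.07, "Jetson Nano": 6.78},
    "ResNet50 + MobileNet": {"CPU": 8.90, "GPU": 42.50, "Jetson Nano": 13.12},
    "The proposed system": {"CPU": 13.88, "GPU": 55.03, "Jetson Nano": 18.43},
}


def latency_ms(fps: float) -> float:
    """Average time spent per frame, in milliseconds, for a given throughput."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


for method, per_device in FPS.items():
    cells = ", ".join(f"{device}: {latency_ms(value):.1f} ms"
                      for device, value in per_device.items())
    print(f"{method}: {cells}")
```

Under this reading, the proposed system would spend roughly 54 ms per frame on the Jetson Nano, compared with roughly 76 ms for the fastest baseline fusion (ResNet50 + MobileNet).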

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hijji, M.; Yar, H.; Ullah, F.U.M.; Alwakeel, M.M.; Harrabi, R.; Aradah, F.; Cheikh, F.A.; Muhammad, K.; Sajjad, M. FADS: An Intelligent Fatigue and Age Detection System. Mathematics 2023, 11, 1174. https://doi.org/10.3390/math11051174
