Price Prediction of Bitcoin Based on Adaptive Feature Selection and Model Optimization

Abstract

1. Introduction

- (a)

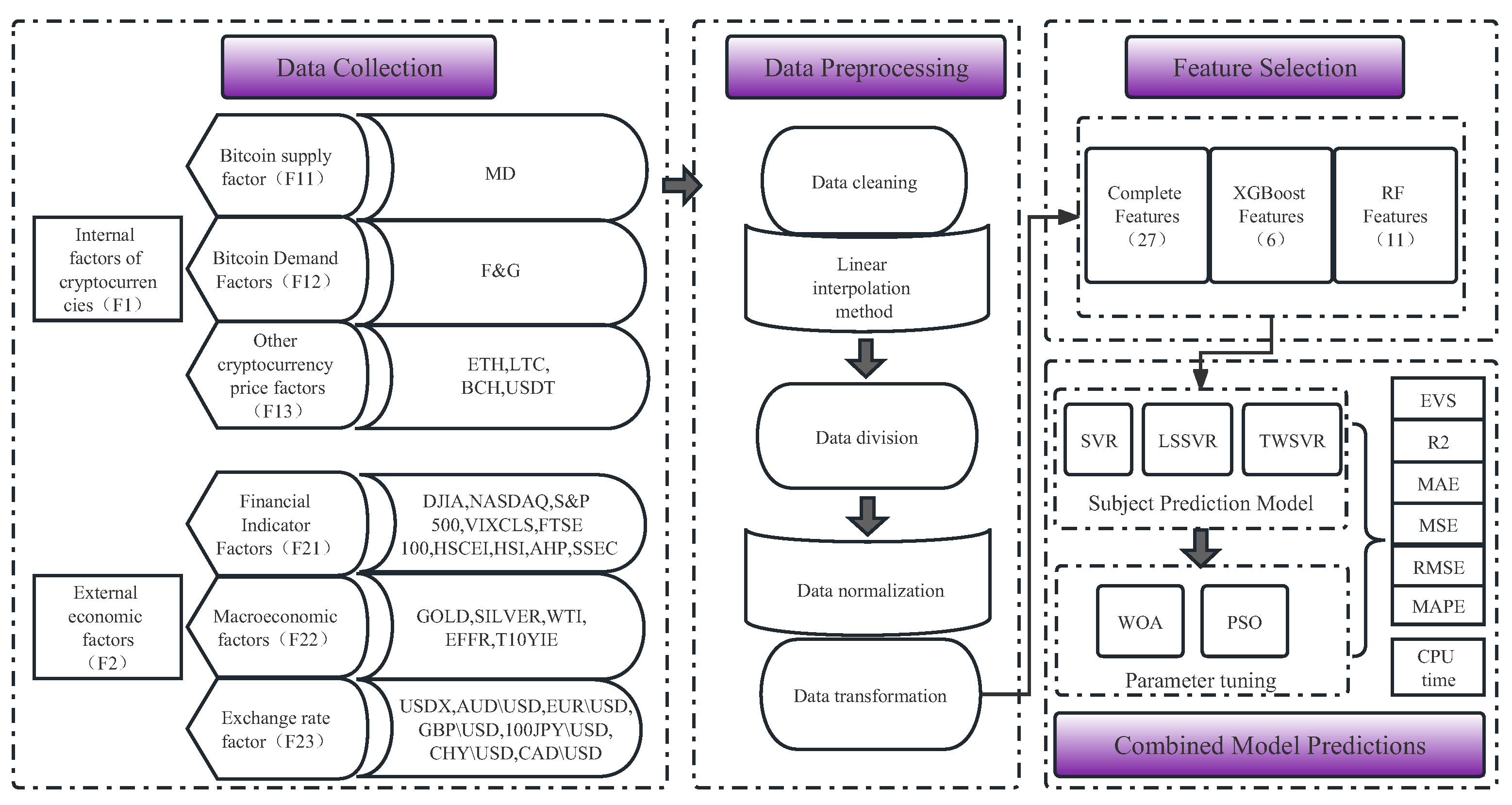

- Expanding the factors that influence Bitcoin price movements. This article considers 27 influencing factors—that is, the impact of other cryptocurrencies on Bitcoin’s price—and uses the Crypto Fear and Greed Index (F&G) as an indicator of the degree of user demand for Bitcoin. In this study, XGBoost and random forest models were used to select the influencing factors of the price of Bitcoin, the variables selected by the two methods were compared with the full variables in the prediction model, and the index system affecting the prediction of the Bitcoin price was obtained.

- (b) Three models, SVR, LSSVR, and TWSVR, were used as the main prediction models in this study. Because the prediction accuracy of these models is sensitive to their hyperparameters, two optimization algorithms, WOA and PSO, were used to optimize the hyperparameters of each prediction model.

2. Data Collection and Preprocessing

2.1. Data Collection

- Mining difficulty (MD) measures how difficult it is to successfully mine a new Bitcoin block, and it represents the level of Bitcoin supply.

- The Crypto Fear and Greed Index (F&G) analyzes the current sentiment in the Bitcoin market. Values range from 0 to 100, where 0 means "extreme fear" and 100 means "extreme greed". It takes into account the volatility of Bitcoin price changes (25%), the current volume of Bitcoin trading (25%), market momentum, and the public’s appreciation of Bitcoin (50%). The Crypto Fear and Greed Index represents public demand for Bitcoin.

- Ethereum Price (ETH), Litecoin Price (LTC), Bitcoin Cash Price (BCH), and the USDT Price Index (USDT) are the prices of other types of cryptocurrencies, which indirectly affect the price fluctuations of Bitcoin.

- The Dow Jones Industrial Average Index (DJIA), National Association of Securities Dealers Automated Quotations Index (NASDAQ), Standard and Poor’s 500 Index (S&P 500), and CBOE Volatility Index (VIXCLS) are important measures of U.S. stock market volatility.

- The Financial Times Stock Exchange 100 Index (FTSE 100) is an important measure of volatility in the UK stock market.

- The Hang Seng China Enterprises Index (HSCEI), Hang Seng Index (HSI), A/H share premium index (AHP), and Shanghai Securities Composite Index (SSEC) are important measures of volatility in China’s stock market.

- Gold price (GOLD) and silver price (SILVER) are important indicators of the price fluctuation of precious metals in the United States and are also macroeconomic factors affecting the Bitcoin price change.

- West Texas Intermediate (WTI) is an important index for measuring the price fluctuation of US crude oil and is a macroeconomic factor affecting the Bitcoin price change.

- The effective federal funds rate (EFFR) is an important index to measure the fluctuation of the funds rate in the United States, and it is a macroeconomic factor affecting the change in Bitcoin price.

- The 10-year break-even inflation rate (T10YIE) is an important index for measuring the inflation level in the United States and is a macroeconomic factor affecting the Bitcoin price change.

- The US Dollar Index (USDX) is an important index that comprehensively reflects the exchange rate of the US dollar in the international foreign exchange market.

- The Australian dollar to US dollar exchange rate (AUD/USD), euro to US dollar exchange rate (EUR/USD), British pound to US dollar exchange rate (GBP/USD), 100 Japanese yen to US dollar exchange rate (100 JPY/USD), Swiss franc to US dollar exchange rate (CHF/USD), and Canadian dollar to US dollar exchange rate (CAD/USD) represent the exchange rates of the US dollar in each foreign exchange market.

2.2. Data Preprocessing

2.2.1. Missing Data Completion through Linear Interpolation
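As an illustration of the technique named in this heading, the following is a minimal sketch of linear interpolation over a daily series with gaps, for example a stock index that has no quote on days when Bitcoin still trades. The function name `interpolate_missing`, the use of `None` as the missing-value marker, and the choice to copy the nearest observation at the two ends of the series are assumptions made for this example, not details taken from the study.

```python
def interpolate_missing(values):
    """Fill None entries by linear interpolation between the nearest observed neighbours."""
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    if not known:
        raise ValueError("need at least one observed value")

    # Assumption: gaps at either end have only one neighbour, so copy it.
    for i in range(known[0]):
        filled[i] = filled[known[0]]
    for i in range(known[-1] + 1, len(filled)):
        filled[i] = filled[known[-1]]

    # Interior gaps: walk along the straight line between the two observed endpoints.
    for left, right in zip(known, known[1:]):
        step = (filled[right] - filled[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = filled[left] + step * (i - left)
    return filled


# Hypothetical index series with a two-day gap.
print(interpolate_missing([1.0, None, None, 4.0]))
```

Each interior gap is filled independently, so a long gap becomes a straight line between its two observed endpoints.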

2.2.2. Data Normalization and Division
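The sketch below shows one common way to carry out the two steps named in this heading: a chronological split into training and test sets, followed by min-max scaling whose bounds are taken from the training set only. The helper names `chronological_split` and `fit_min_max`, the split fraction, and the choice of min-max scaling are assumptions for illustration; the scheme actually used in the study may differ.

```python
def chronological_split(rows, train_fraction):
    """Split a time-ordered sequence without shuffling, so the test set lies in the future."""
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


def fit_min_max(train_column):
    """Return a scaling function whose bounds come from the training data only."""
    lo, hi = min(train_column), max(train_column)
    span = hi - lo

    def scale(value):
        # A constant column carries no information; map it to 0.0.
        return 0.0 if span == 0 else (value - lo) / span

    return scale


# Hypothetical daily values of a single feature.
series = [10.0, 20.0, 30.0, 40.0]
train, test = chronological_split(series, train_fraction=0.75)
scale = fit_min_max(train)
print([scale(v) for v in train])  # training values fall in [0, 1]
print([scale(v) for v in test])   # later values may fall outside [0, 1]
```

Fitting the bounds on the training portion alone keeps information about future prices out of the scaled inputs, at the cost of test values that can exceed 1.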

2.2.3. Feature Selection for Price Prediction of Bitcoin

3. Price Prediction of Bitcoin

3.1. Main Forecasting Model

3.1.1. Support Vector Regression

3.1.2. Least-Squares Support Vector Regression

3.1.3. Optimization Model

- (1) Whale optimization algorithm

| Algorithm 1 General framework of the WOA algorithm. |
|---|
| Require: Fitness function, whale swarm. Ensure: Global optimal solution. |

- ① Surrounding prey behavior

- ② Spiral bubble hunting behavior

- (2) Particle swarm optimization algorithm
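The two optimizers listed above can be sketched as follows. This is a minimal illustration based on the commonly published update rules of WOA (encircling the best solution, a random-whale search step, and the spiral bubble-net move) and of PSO (inertia plus cognitive and social terms), not a reproduction of the implementation used in the study. The `sphere` objective is a stand-in; in a hyperparameter search the fitness function would instead return the validation error of SVR, LSSVR, or TWSVR for a candidate parameter vector. All function names, default settings, and the use of one scalar coefficient per whale are assumptions.

```python
import math
import random


def sphere(x):
    """Stand-in objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


def clip(x, lo, hi):
    return [min(max(v, lo), hi) for v in x]


def woa(fitness, dim, lo, hi, n_whales=20, n_iter=100, b=1.0, seed=0):
    rng = random.Random(seed)
    whales = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_whales)]
    best = min(whales, key=fitness)[:]
    for t in range(n_iter):
        a = 2.0 * (1 - t / n_iter)  # decreases linearly from 2 towards 0
        for i, x in enumerate(whales):
            A = 2 * a * rng.random() - a
            C = 2 * rng.random()
            if rng.random() < 0.5:
                # Surround the best whale when |A| < 1, otherwise explore around a random whale.
                ref = best if abs(A) < 1 else rng.choice(whales)
                new = [r - A * abs(C * r - v) for r, v in zip(ref, x)]
            else:
                # Spiral bubble-net move around the best whale.
                l = rng.uniform(-1, 1)
                k = math.exp(b * l) * math.cos(2 * math.pi * l)
                new = [abs(r - v) * k + r for r, v in zip(best, x)]
            whales[i] = clip(new, lo, hi)
            if fitness(whales[i]) < fitness(best):
                best = whales[i][:]
    return best, fitness(best)


def pso(fitness, dim, lo, hi, n_particles=20, n_iter=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    gbest = min(pbest, key=fitness)[:]
    for _ in range(n_iter):
        for i in range(n_particles):
            # Velocity = inertia + pull towards personal best + pull towards global best.
            vs[i] = [w * v + c1 * rng.random() * (p - x) + c2 * rng.random() * (g - x)
                     for v, x, p, g in zip(vs[i], xs[i], pbest[i], gbest)]
            xs[i] = clip([x + v for x, v in zip(xs[i], vs[i])], lo, hi)
            if fitness(xs[i]) < fitness(pbest[i]):
                pbest[i] = xs[i][:]
                if fitness(pbest[i]) < fitness(gbest):
                    gbest = pbest[i][:]
    return gbest, fitness(gbest)


print(woa(sphere, dim=2, lo=-5.0, hi=5.0))
print(pso(sphere, dim=2, lo=-5.0, hi=5.0))
```

Both routines only ever replace the stored best solution with a strictly better one, so the returned fitness can never be worse than that of the initial population.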

4. Results and Discussion

4.1. Experiment Settings

4.2. Feature Selection

- (a) XGBoost screened out six important factors (Figure 8). The S&P 500 index is the most important, and the ETH, LTC, MD, F&G, and GOLD indices are also very important. These six factors cover the supply factor of Bitcoin, the demand factor of Bitcoin, the price factor of other cryptocurrencies, the financial-indicator factor, and the macroeconomic factor; no exchange rate factor was selected. The random forest algorithm screened out eleven important factors, concentrated in internal cryptocurrency factors and U.S. stock market factors. In the random forest ranking, the importance of the S&P 500 index is significantly higher than that of the other ten indicators, which are BCH, DJIA, ETH, F&G, GOLD, HSCEI, LTC, MD, NASDAQ, and WTI. The eleven factors screened by the random forest algorithm include all six factors screened by the XGBoost algorithm. Comparing the indicators extracted by the two methods, those extracted by the XGBoost algorithm are more important and representative.

- (b) From the perspective of economics, the internal factors of cryptocurrency and the U.S. stock market are the most important factors affecting the price of Bitcoin. The internal factors of cryptocurrency directly affect the price of Bitcoin from the perspective of supply and demand. Bitcoin is also popular in Western countries, so its volatile price is indirectly influenced by the U.S. stock market.
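The relationship between the two selected feature sets described in (a) can be checked directly with set operations. The sketch below uses only the indicator names listed in the text; the variable names and the short category labels (which paraphrase the descriptions in Section 2.1) are assumptions for this example.

```python
# Indicators reported as selected by each method.
XGBOOST_FEATURES = {"S&P 500", "ETH", "LTC", "MD", "F&G", "GOLD"}
RANDOM_FOREST_FEATURES = {
    "S&P 500", "BCH", "DJIA", "ETH", "F&G", "GOLD",
    "HSCEI", "LTC", "MD", "NASDAQ", "WTI",
}

# Shorthand category labels for the six XGBoost indicators (labels are illustrative).
CATEGORY = {
    "MD": "supply",
    "F&G": "demand",
    "ETH": "other cryptocurrencies",
    "LTC": "other cryptocurrencies",
    "S&P 500": "financial indicator",
    "GOLD": "macroeconomic",
}

# Every XGBoost indicator also appears in the random forest selection.
print(XGBOOST_FEATURES <= RANDOM_FOREST_FEATURES)

# Indicators chosen only by the random forest.
print(sorted(RANDOM_FOREST_FEATURES - XGBOOST_FEATURES))

# Six indicators fall into five categories because ETH and LTC share one.
print(sorted(set(CATEGORY.values())))
```

The difference set shows that the five additional random forest indicators are one cryptocurrency price (BCH), three stock indices (DJIA, NASDAQ, HSCEI), and crude oil (WTI).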

4.3. Combined Model Prediction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |
|---|---|
| SVR | Support Vector Regression |
| LSSVR | Least-Squares Support Vector Regression |
| TWSVR | Twin Support Vector Regression |
| WOA | Whale Optimization Algorithm |
| PSO | Particle Swarm Optimization Algorithm |
| ARIMA | Autoregressive Integrated Moving Average |
| SVM | Support Vector Machine |
| RF | Random Forest |
| ANN | Artificial Neural Network |
| LSTM | Long Short-Term Memory Neural Network |
| ANFIS | Adaptive Network-Based Fuzzy Inference System |
| MD | Mining Difficulty |
| F&G | Crypto Fear and Greed Index |
| ETH | Ethereum Price |
| LTC | Litecoin Price |
| BCH | Bitcoin Cash Price |
| USDT | USDT Price Index |
| DJIA | Dow Jones Industrial Average Index |
| NASDAQ | National Association of Securities Dealers Automated Quotations Index |
| S&P 500 | Standard & Poor’s 500 Index |
| VIXCLS | CBOE Volatility Index |
| FTSE 100 | Financial Times Stock Exchange 100 Index |
| HSCEI | Hang Seng China Enterprises Index |
| HSI | Hang Seng Index |
| AHP | A/H Share Premium Index |
| SSEC | Shanghai Securities Composite Index |
| GOLD | Gold Price |
| SILVER | Silver Price |
| WTI | West Texas Intermediate |
| EFFR | Effective Federal Funds Rate |
| T10YIE | 10-Year Breakeven Inflation Rate |
| AUD/USD | Australian Dollar to US Dollar Exchange Rate |
| EUR/USD | Euro to US Dollar Exchange Rate |
| GBP/USD | British Pound to US Dollar Exchange Rate |
| 100 JPY/USD | 100 Japanese Yen to US Dollar Exchange Rate |
| CHF/USD | Swiss Franc to US Dollar Exchange Rate |
| CAD/USD | Canadian Dollar to US Dollar Exchange Rate |
| EVS | Explained Variance Score |
| R² | Coefficient of Determination |
| MSE | Mean Square Error |
| RMSE | Root Mean Square Error |
| MAPE | Mean Absolute Percentage Error |


| CATEGORY | MODEL | EVS | R² | MAE | MSE | RMSE | MAPE | CPU Time (s) |
|---|---|---|---|---|---|---|---|---|
| Complete Features | ARIMA | 0.723 | 0.691 | 0.091 | 0.008 | 0.091 | 0.132 | 1.173 |
| | WOA-SVR | 0.9022 | 0.879 | 0.042 | 0.003 | 0.055 | 0.0602 | 2.5631 |
| | WOA-LSSVR | 0.8868 | 0.869 | 0.043 | 0.003 | 0.057 | 0.0622 | 0.5268 |
| | WOA-TWSVR | 0.9484 | 0.923 | 0.032 | 0.002 | 0.044 | 0.0442 | 0.6736 |
| | PSO-SVR | 0.8964 | 0.876 | 0.042 | 0.003 | 0.056 | 0.0614 | 2.6417 |
| | PSO-LSSVR | 0.8835 | 0.867 | 0.043 | 0.003 | 0.057 | 0.0625 | 0.5416 |
| | PSO-TWSVR | 0.9484 | 0.923 | 0.032 | 0.002 | 0.044 | 0.0442 | 0.6736 |
| XGBoost Features | WOA-SVR | 0.9161 | 0.894 | 0.036 | 0.002 | 0.049 | 0.0512 | 1.9301 |
| | WOA-LSSVR | 0.9041 | 0.887 | 0.042 | 0.003 | 0.054 | 0.0594 | 0.3671 |
| | WOA-TWSVR | 0.9547 | 0.929 | 0.031 | 0.002 | 0.042 | 0.0433 | 0.4743 |
| | PSO-SVR | 0.9074 | 0.89 | 0.041 | 0.003 | 0.052 | 0.0571 | 1.9319 |
| | PSO-LSSVR | 0.9041 | 0.887 | 0.042 | 0.003 | 0.054 | 0.0594 | 0.3671 |
| | PSO-TWSVR | 0.9239 | 0.896 | 0.035 | 0.002 | 0.046 | 0.0475 | 0.4876 |
| RF Features | WOA-SVR | 0.8067 | 0.78 | 0.054 | 0.005 | 0.072 | 0.0786 | 2.3297 |
| | WOA-LSSVR | 0.9015 | 0.881 | 0.042 | 0.003 | 0.055 | 0.0607 | 0.3847 |
| | WOA-TWSVR | 0.9502 | 0.919 | 0.031 | 0.002 | 0.042 | 0.0438 | 0.5113 |
| | PSO-SVR | 0.8041 | 0.771 | 0.055 | 0.005 | 0.073 | 0.0792 | 2.2744 |
| | PSO-LSSVR | 0.8994 | 0.878 | 0.042 | 0.003 | 0.055 | 0.0611 | 0.4228 |
| | PSO-TWSVR | 0.9491 | 0.924 | 0.032 | 0.002 | 0.044 | 0.0441 | 0.4943 |
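For readers who want to reproduce the kind of scores reported in the table, the following sketch computes the error measures from a vector of true and predicted values using their standard textbook definitions. The function name `regression_metrics`, the dictionary keys, and the example data are assumptions for illustration; MAPE is returned as a fraction rather than a percentage.

```python
import math


def regression_metrics(y_true, y_pred):
    """Standard regression scores; y_true must be non-constant and contain no zeros."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n
    mean_err = sum(errors) / n

    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)      # total sum of squares
    var_err = sum((e - mean_err) ** 2 for e in errors) / n  # variance of the residuals

    return {
        "EVS": 1.0 - var_err / (ss_tot / n),  # ignores a constant bias in the predictions
        "R2": 1.0 - (mse * n) / ss_tot,       # penalizes a constant bias
        "MAE": mae,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "MAPE": sum(abs(e / t) for e, t in zip(errors, y_true)) / n,
    }


# Hypothetical predictions that are all too high by exactly 1.
scores = regression_metrics([2.0, 4.0, 6.0, 8.0], [3.0, 5.0, 7.0, 9.0])
for name, value in scores.items():
    print(name, round(value, 4))
```

In this example EVS equals 1 while the coefficient of determination is lower, because EVS removes the mean of the residuals before measuring their spread. A systematic offset in the predictions is therefore one possible reason for an EVS that exceeds the coefficient of determination.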

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, Y.; Ma, J.; Gu, F.; Wang, J.; Li, Z.; Zhang, Y.; Xu, J.; Li, Y.; Wang, Y.; Yang, X. Price Prediction of Bitcoin Based on Adaptive Feature Selection and Model Optimization. Mathematics 2023, 11, 1335. https://doi.org/10.3390/math11061335
