LCAM: Low-Complexity Attention Module for Lightweight Face Recognition Networks

Abstract

1. Introduction

- To propose a low-complexity attention module, the Low-Complexity Attention Module (LCAM). LCAM requires significantly fewer FLOPs and parameters than other modules that combine channel and spatial attention, while delivering comparable or better performance.

- To preserve and enhance the information interaction in the spatial (vertical and horizontal) branches so as to avoid information loss in LCAM.
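
The contributions above name three branches (channel, vertical, horizontal) but this section does not give their equations. The following is only a generic sketch of axis-wise attention, not the authors' implementation. It assumes each branch averages the feature map over the other two axes, applies a small 1D convolution, and squashes the result with a sigmoid; the averaging of the three gated outputs is likewise an assumption. All function names and the toy kernel values are hypothetical.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def conv1d_same(signal, kernel):
    """1D cross-correlation with zero padding; same length out for odd kernel sizes."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [sum(w * padded[i + j] for j, w in enumerate(kernel))
            for i in range(len(signal))]

def axis_descriptor(x, axis):
    """Average a C x H x W nested list over the two axes other than `axis`."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    sums = [0.0] * (C, H, W)[axis]
    for c in range(C):
        for h in range(H):
            for w in range(W):
                sums[(c, h, w)[axis]] += x[c][h][w]
    count = C * H * W / len(sums)
    return [s / count for s in sums]

def axis_gate(x, axis, kernel):
    """Per-position gate in (0, 1) along one axis (assumed: pool, 1D conv, sigmoid)."""
    return [sigmoid(v) for v in conv1d_same(axis_descriptor(x, axis), kernel)]

def three_branch_attention(x, kernels):
    """Gate x along channel, height and width, then average the three results (assumed fusion)."""
    gc, gh, gw = (axis_gate(x, axis, kernels[axis]) for axis in range(3))
    return [[[v * (gc[c] + gh[h] + gw[w]) / 3.0
              for w, v in enumerate(row)]
             for h, row in enumerate(plane)]
            for c, plane in enumerate(x)]

# Toy 2 x 3 x 4 input and one hypothetical 3-tap kernel per branch.
x = [[[float(c + h + w) for w in range(4)] for h in range(3)] for c in range(2)]
kernels = [[0.25, 0.5, 0.25]] * 3
y = three_branch_attention(x, kernels)
learnable_weights = sum(len(k) for k in kernels)  # 9, independent of C, H and W
print(len(y), len(y[0]), len(y[0][0]), learnable_weights)
```

Under these assumptions the weight count depends only on the kernel sizes, not on the feature-map dimensions, which is one way a module of this kind can stay cheap.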

2. Related Work

2.1. General Face Recognition Pipeline

2.2. Lightweight Face Recognition Models

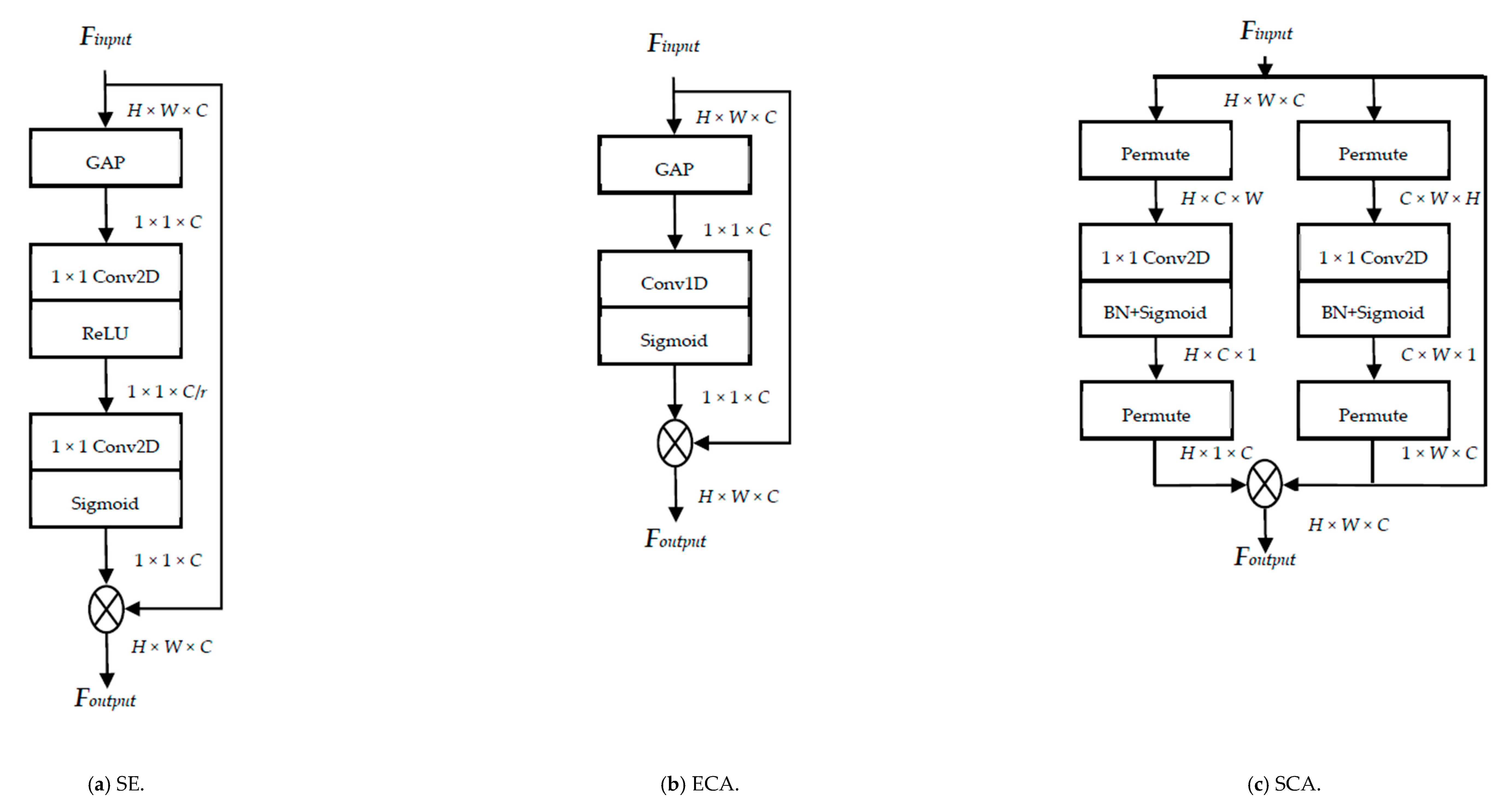

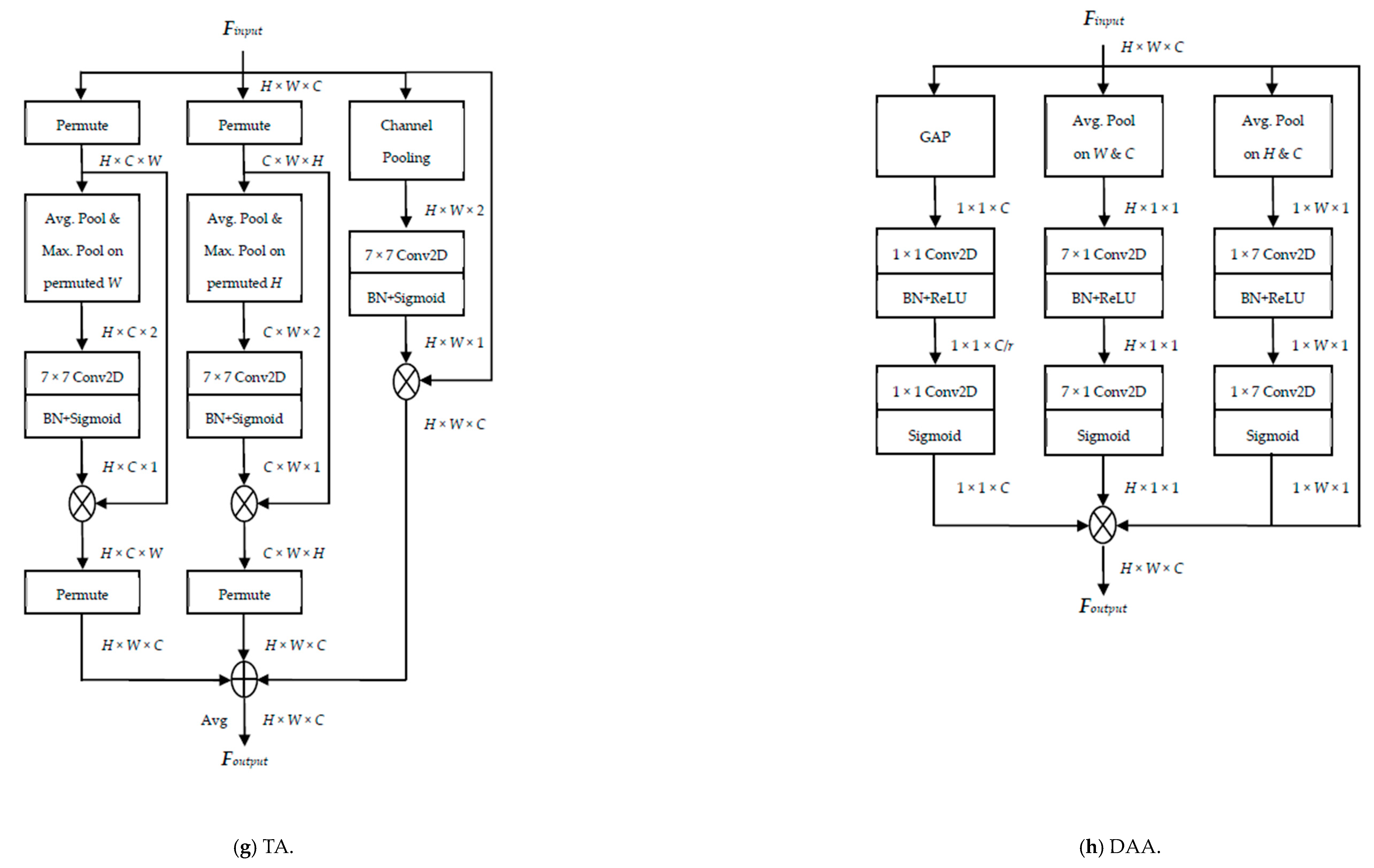

2.3. Attention Modules

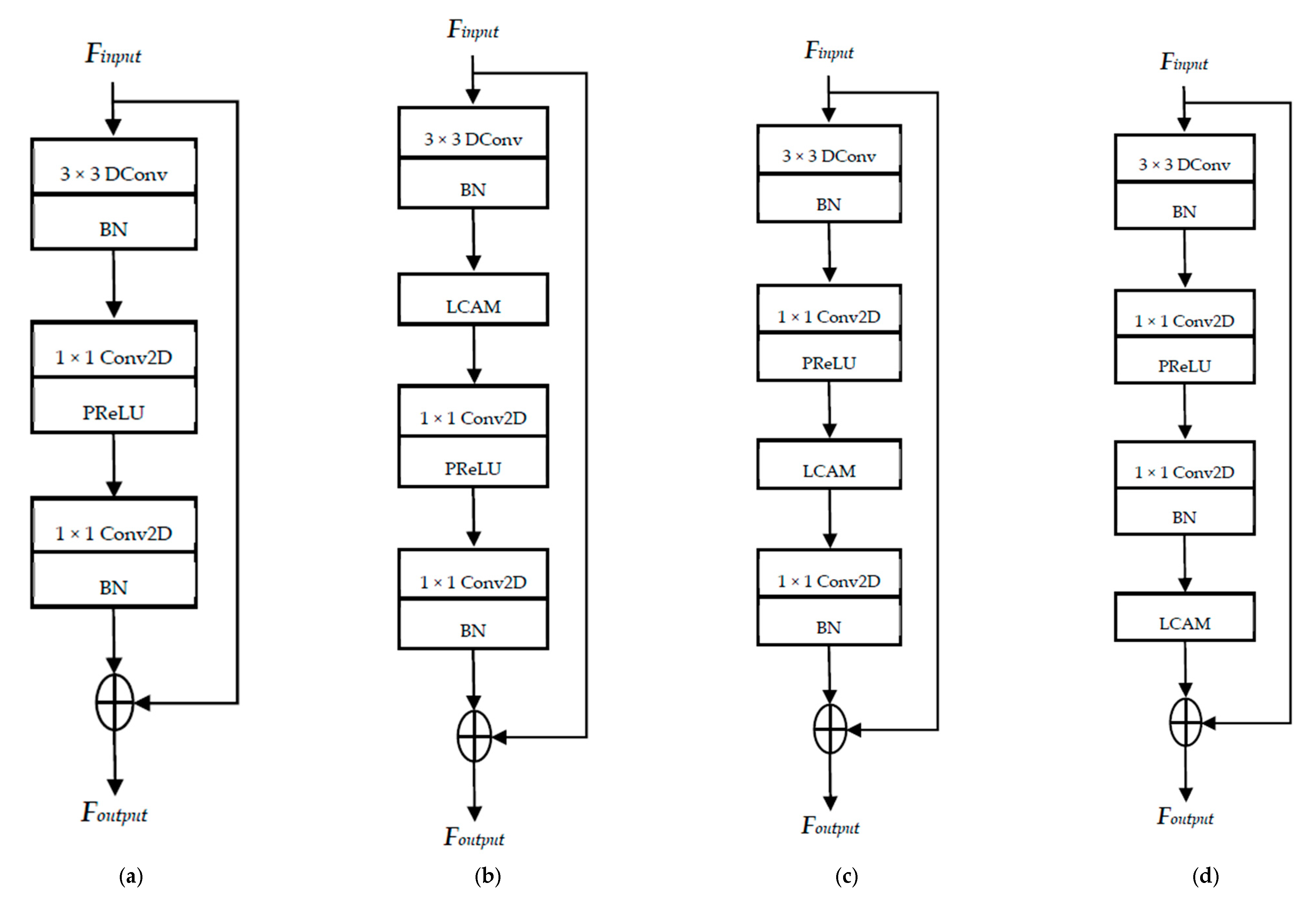

3. Proposed Approach

3.1. Channel Attention Branch

3.2. Vertical Attention Branch

3.3. Horizontal Attention Branch

4. Experiments and Analysis

4.1. Dataset

4.2. Experimental Settings

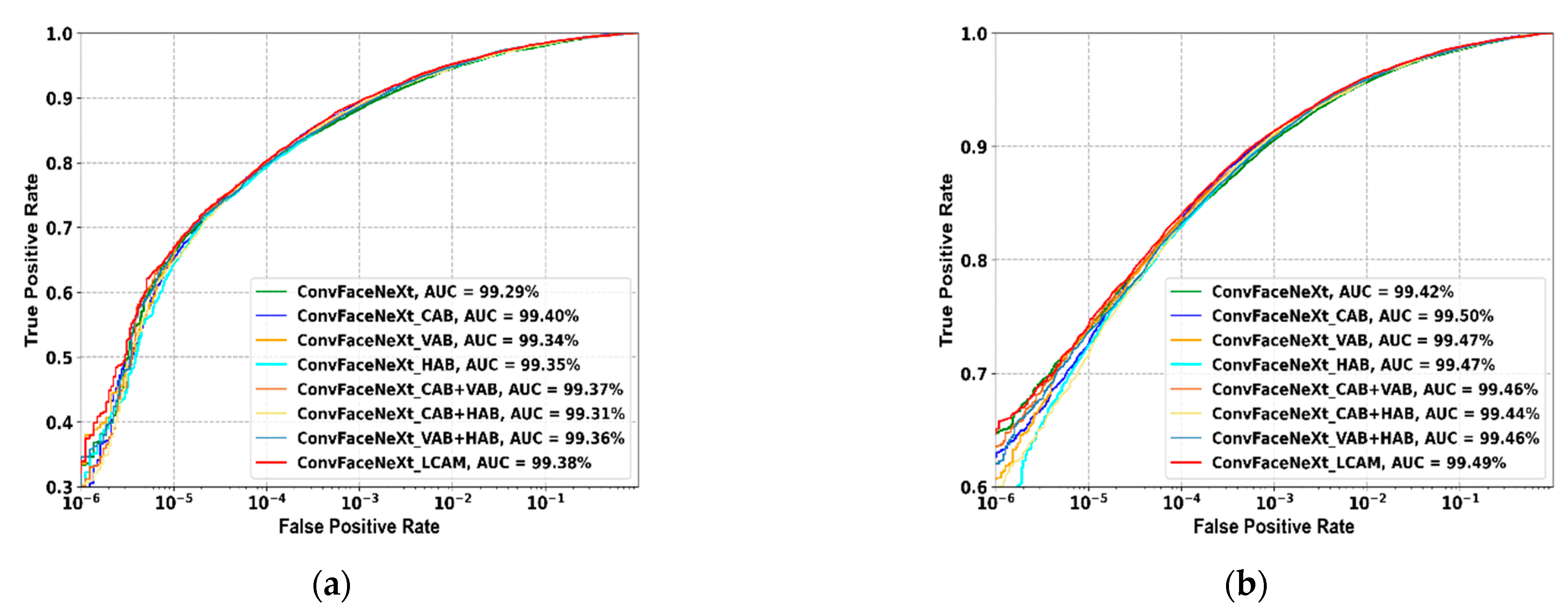

4.3. Ablation Studies

4.3.1. Effect of Different Kernel Size

4.3.2. Effect on Different Combination of Branches

4.3.3. Effect on Different Integration Strategy

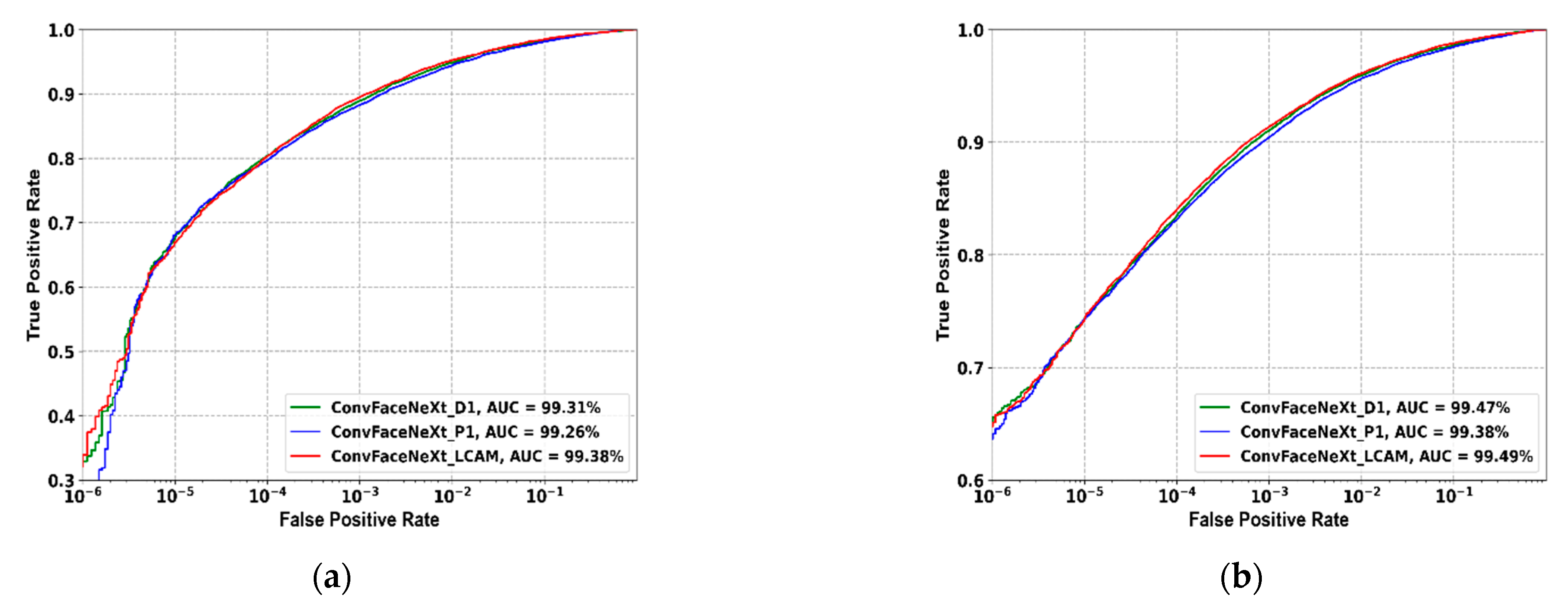

4.4. Quantitative Analysis

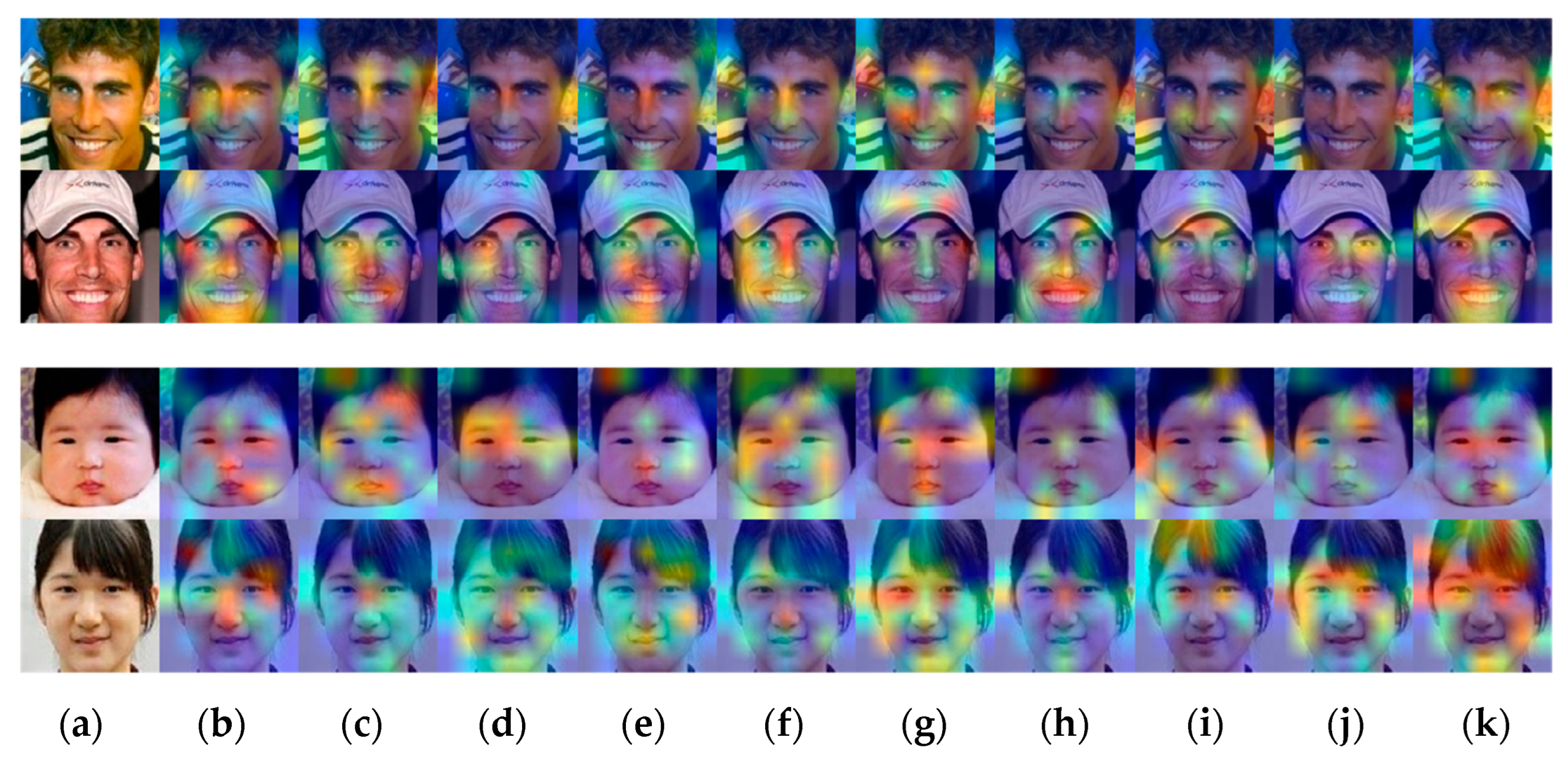

4.5. Qualitative Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Param. (M) | FLOPs (M) | LFW | CALFW | CPLFW | CFP-FF | CFP-FP | AgeDB-30 | VGG2-FP | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| ConvFaceNeXt_LCAM | 1.05 | 406.56 | 99.20 | 93.47 | 86.40 | 98.90 | 89.49 | 93.70 | 90.78 | 93.13 |
| ConvFaceNeXt_L5K | 1.05 | 406.60 | 99.23 | 93.73 | 86.97 | 99.01 | 89.61 | 93.65 | 90.04 | 93.18 |
| ConvFaceNeXt_L7K | 1.05 | 406.65 | 99.15 | 93.67 | 86.33 | 99.11 | 89.06 | 93.65 | 89.80 | 92.97 |
| ConvFaceNeXt_L9K | 1.05 | 406.69 | 99.27 | 93.50 | 85.98 | 98.90 | 88.94 | 93.48 | 90.60 | 92.95 |
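
The Average column appears to be the unweighted mean of the seven verification accuracies (LFW through VGG2-FP). The short check below, using values copied from the rows above, is consistent with that reading; the variable names are illustrative only.

```python
from statistics import mean

# Seven verification accuracies (%) per model, copied from the table above,
# in column order: LFW, CALFW, CPLFW, CFP-FF, CFP-FP, AgeDB-30, VGG2-FP.
accuracies = {
    "ConvFaceNeXt_LCAM": [99.20, 93.47, 86.40, 98.90, 89.49, 93.70, 90.78],
    "ConvFaceNeXt_L5K": [99.23, 93.73, 86.97, 99.01, 89.61, 93.65, 90.04],
    "ConvFaceNeXt_L7K": [99.15, 93.67, 86.33, 99.11, 89.06, 93.65, 89.80],
    "ConvFaceNeXt_L9K": [99.27, 93.50, 85.98, 98.90, 88.94, 93.48, 90.60],
}
# Average column as reported in the table.
reported = {
    "ConvFaceNeXt_LCAM": 93.13,
    "ConvFaceNeXt_L5K": 93.18,
    "ConvFaceNeXt_L7K": 92.97,
    "ConvFaceNeXt_L9K": 92.95,
}

recomputed = {name: mean(values) for name, values in accuracies.items()}
for name, value in recomputed.items():
    print(f"{name}: recomputed {value:.2f}, reported {reported[name]:.2f}")
```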

| Model | IJB-B TAR@FAR=10⁻⁵ | IJB-B TAR@FAR=10⁻⁴ | IJB-B TAR@FAR=10⁻³ | IJB-C TAR@FAR=10⁻⁵ | IJB-C TAR@FAR=10⁻⁴ | IJB-C TAR@FAR=10⁻³ |
|---|---|---|---|---|---|---|
| ConvFaceNeXt_LCAM | 66.97 | 80.27 | 89.49 | 74.19 | 84.01 | 91.37 |
| ConvFaceNeXt_L5K | 41.61 | 77.22 | 88.77 | 51.57 | 80.23 | 90.77 |
| ConvFaceNeXt_L7K | 66.80 | 80.14 | 89.06 | 74.21 | 83.78 | 91.10 |
| ConvFaceNeXt_L9K | 67.64 | 79.86 | 89.04 | 73.60 | 83.32 | 91.01 |
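
For readers unfamiliar with the metric, TAR@FAR reports the true accept rate (fraction of genuine pairs accepted) at the score threshold where the false accept rate (fraction of impostor pairs accepted) does not exceed a target. The sketch below shows one common way to compute it from similarity scores; the function name, the strict `>` acceptance rule, and the toy scores are assumptions for illustration and are not taken from the IJB-B or IJB-C evaluation code.

```python
import math

def tar_at_far(genuine, impostor, target_far):
    """Return (threshold, TAR) at the loosest threshold whose FAR <= target_far.

    A pair is accepted when its similarity score is strictly above the threshold.
    """
    ranked = sorted(impostor, reverse=True)
    # Number of impostor pairs we may accept without exceeding the target FAR.
    allowed = math.floor(target_far * len(ranked))
    # Thresholding at the (allowed + 1)-th highest impostor score accepts
    # at most `allowed` impostor pairs.
    threshold = ranked[allowed] if allowed < len(ranked) else -math.inf
    tar = sum(score > threshold for score in genuine) / len(genuine)
    return threshold, tar

# Toy similarity scores (hypothetical).
genuine = [0.9, 0.8, 0.75, 0.6, 0.3]
impostor = [0.7, 0.5, 0.4, 0.35, 0.2, 0.1, 0.1, 0.05, 0.0, -0.1]
threshold, tar = tar_at_far(genuine, impostor, target_far=0.1)
print(threshold, tar)
```

Note that a target such as FAR = 10⁻⁵ only allows a non-zero number of false accepts when there are at least 10⁵ impostor pairs, so the strictest operating point is the most sensitive to a handful of high-scoring impostors.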

| Model | Param. (M) | FLOPs (M) | LFW | CALFW | CPLFW | CFP-FF | CFP-FP | AgeDB-30 | VGG2-FP | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| ConvFaceNeXt | 1.05 | 404.57 | 99.10 | 93.32 | 85.45 | 98.87 | 87.40 | 92.95 | 88.92 | 92.29 |
| ConvFaceNeXt_CAB | 1.05 | 405.53 | 99.13 | 93.55 | 86.18 | 98.87 | 89.63 | 93.05 | 89.46 | 92.84 |
| ConvFaceNeXt_VAB | 1.05 | 405.54 | 99.20 | 93.37 | 86.02 | 98.93 | 89.61 | 92.92 | 90.00 | 92.86 |
| ConvFaceNeXt_HAB | 1.05 | 405.54 | 99.10 | 93.15 | 85.68 | 99.01 | 88.30 | 93.35 | 89.94 | 92.65 |
| ConvFaceNeXt_CAB+VAB | 1.05 | 406.06 | 99.05 | 93.00 | 86.33 | 98.84 | 88.91 | 93.02 | 89.74 | 92.70 |
| ConvFaceNeXt_CAB+HAB | 1.05 | 406.06 | 99.22 | 93.28 | 86.40 | 98.96 | 89.31 | 93.37 | 90.46 | 93.00 |
| ConvFaceNeXt_VAB+HAB | 1.05 | 405.58 | 99.10 | 93.58 | 85.47 | 98.99 | 87.91 | 93.28 | 90.10 | 92.63 |
| ConvFaceNeXt_LCAM | 1.05 | 406.56 | 99.20 | 93.47 | 86.40 | 98.90 | 89.49 | 93.70 | 90.78 | 93.13 |

| Model | IJB-B TAR@FAR=10⁻⁵ | IJB-B TAR@FAR=10⁻⁴ | IJB-B TAR@FAR=10⁻³ | IJB-C TAR@FAR=10⁻⁵ | IJB-C TAR@FAR=10⁻⁴ | IJB-C TAR@FAR=10⁻³ |
|---|---|---|---|---|---|---|
| ConvFaceNeXt | 66.11 | 79.77 | 88.22 | 73.75 | 83.27 | 90.56 |
| ConvFaceNeXt_CAB | 65.12 | 80.16 | 89.15 | 72.54 | 83.58 | 91.23 |
| ConvFaceNeXt_VAB | 65.50 | 79.94 | 88.97 | 73.85 | 83.53 | 90.96 |
| ConvFaceNeXt_HAB | 64.37 | 79.29 | 88.71 | 72.45 | 82.92 | 90.81 |
| ConvFaceNeXt_CAB+VAB | 66.59 | 80.03 | 88.93 | 74.06 | 83.63 | 91.16 |
| ConvFaceNeXt_CAB+HAB | 64.85 | 79.77 | 89.06 | 71.55 | 83.23 | 91.14 |
| ConvFaceNeXt_VAB+HAB | 66.79 | 79.77 | 88.66 | 73.64 | 83.21 | 90.83 |
| ConvFaceNeXt_LCAM | 66.97 | 80.27 | 89.49 | 74.19 | 84.01 | 91.37 |

| Model | Param. (M) | FLOPs (M) | LFW | CALFW | CPLFW | CFP-FF | CFP-FP | AgeDB-30 | VGG2-FP | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| ConvFaceNeXt_D1 | 1.05 | 406.51 | 99.12 | 93.28 | 86.38 | 99.01 | 90.30 | 92.88 | 90.62 | 93.08 |
| ConvFaceNeXt_P1 | 1.05 | 408.54 | 99.20 | 93.32 | 86.00 | 99.07 | 88.80 | 93.10 | 89.60 | 92.73 |
| ConvFaceNeXt_LCAM | 1.05 | 406.56 | 99.20 | 93.47 | 86.40 | 98.90 | 89.49 | 93.70 | 90.78 | 93.13 |

| Model | IJB-B TAR@FAR=10⁻⁵ | IJB-B TAR@FAR=10⁻⁴ | IJB-B TAR@FAR=10⁻³ | IJB-C TAR@FAR=10⁻⁵ | IJB-C TAR@FAR=10⁻⁴ | IJB-C TAR@FAR=10⁻³ |
|---|---|---|---|---|---|---|
| ConvFaceNeXt_D1 | 67.56 | 80.39 | 88.91 | 74.34 | 83.45 | 91.04 |
| ConvFaceNeXt_P1 | 68.09 | 79.62 | 88.27 | 74.27 | 83.11 | 90.42 |
| ConvFaceNeXt_LCAM | 66.97 | 80.27 | 89.49 | 74.19 | 84.01 | 91.37 |

| Model | Atten. Module | Param. (M) | FLOPs (M) | LFW | CALFW | CPLFW | CFP-FF | CFP-FP | AgeDB-30 | VGG2-FP | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ConvFaceNeXt | Baseline | 1.05 | 404.57 | 99.10 | 93.32 | 85.45 | 98.87 | 87.40 | 92.95 | 88.92 | 92.29 |
| ConvFaceNeXt | SE | 1.06 | 405.54 | 99.03 | 93.05 | 85.63 | 98.80 | 87.96 | 93.00 | 89.70 | 92.45 |
| ConvFaceNeXt | ECA | 1.05 | 405.52 | 99.05 | 93.48 | 86.33 | 98.83 | 88.86 | 93.32 | 89.78 | 92.81 |
| ConvFaceNeXt | CBAM | 1.07 | 407.61 | 99.07 | 93.43 | 86.48 | 99.06 | 88.20 | 93.57 | 89.34 | 92.74 |
| ConvFaceNeXt | ECBAM | 1.05 | 407.58 | 99.27 | 93.47 | 86.22 | 98.81 | 88.99 | 93.13 | 89.10 | 92.71 |
| ConvFaceNeXt | CA | 1.07 | 407.19 | 99.10 | 93.43 | 85.82 | 98.91 | 87.89 | 93.32 | 88.92 | 92.48 |
| ConvFaceNeXt | SCA | 1.05 | 407.39 | 99.25 | 93.50 | 86.33 | 99.03 | 88.87 | 93.77 | 90.48 | 93.03 |
| ConvFaceNeXt | TA | 1.05 | 419.15 | 99.23 | 93.52 | 86.25 | 99.01 | 88.60 | 93.73 | 90.02 | 92.91 |
| ConvFaceNeXt | DAA | 1.10 | 407.50 | 99.13 | 93.55 | 86.43 | 99.01 | 89.56 | 93.35 | 89.88 | 92.99 |
| ConvFaceNeXt | LCAM | 1.05 | 406.56 | 99.20 | 93.47 | 86.40 | 98.90 | 89.49 | 93.70 | 90.78 | 93.13 |
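
To make the complexity comparison concrete, the overhead each attention module adds to the ConvFaceNeXt baseline can be read off the Param. and FLOPs columns above. The snippet below is a small illustrative calculation on those reported figures only; the dictionary layout and names are hypothetical.

```python
# (Param. in M, FLOPs in M) for ConvFaceNeXt, copied from the table above.
BASELINE_PARAMS, BASELINE_FLOPS = 1.05, 404.57
modules = {
    "SE": (1.06, 405.54),
    "ECA": (1.05, 405.52),
    "CBAM": (1.07, 407.61),
    "ECBAM": (1.05, 407.58),
    "CA": (1.07, 407.19),
    "SCA": (1.05, 407.39),
    "TA": (1.05, 419.15),
    "DAA": (1.10, 407.50),
    "LCAM": (1.05, 406.56),
}

# Overhead relative to the baseline, rounded to the table's two-decimal precision.
overhead = {
    name: (round(params - BASELINE_PARAMS, 2), round(flops - BASELINE_FLOPS, 2))
    for name, (params, flops) in modules.items()
}

# List modules from lowest to highest added FLOPs.
for name, (extra_params, extra_flops) in sorted(overhead.items(), key=lambda kv: kv[1][1]):
    print(f"{name:6s} +{extra_params:.2f} M params  +{extra_flops:.2f} M FLOPs")
```

On these figures LCAM adds about 1.99 M FLOPs and no parameters at the table's precision. Only SE and ECA, which are channel-only modules, add fewer FLOPs (0.97 M and 0.95 M respectively).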

| Model | Atten. Module | IJB-B TAR@FAR=10⁻⁵ | IJB-B TAR@FAR=10⁻⁴ | IJB-B TAR@FAR=10⁻³ | IJB-C TAR@FAR=10⁻⁵ | IJB-C TAR@FAR=10⁻⁴ | IJB-C TAR@FAR=10⁻³ |
|---|---|---|---|---|---|---|---|
| ConvFaceNeXt | Baseline | 66.11 | 79.77 | 88.22 | 73.75 | 83.27 | 90.56 |
| ConvFaceNeXt | SE | 65.57 | 79.95 | 88.63 | 73.52 | 83.44 | 90.76 |
| ConvFaceNeXt | ECA | 67.16 | 79.69 | 88.81 | 73.69 | 83.38 | 90.90 |
| ConvFaceNeXt | CBAM | 61.23 | 79.19 | 88.99 | 71.45 | 83.02 | 91.01 |
| ConvFaceNeXt | ECBAM | 61.71 | 79.20 | 88.81 | 71.15 | 82.63 | 90.74 |
| ConvFaceNeXt | CA | 64.44 | 79.90 | 88.57 | 73.68 | 83.21 | 90.80 |
| ConvFaceNeXt | SCA | 68.12 | 80.90 | 89.28 | 74.90 | 84.41 | 91.48 |
| ConvFaceNeXt | TA | 64.68 | 80.09 | 88.86 | 71.50 | 83.01 | 90.95 |
| ConvFaceNeXt | DAA | 66.64 | 79.86 | 88.84 | 73.48 | 83.33 | 91.06 |
| ConvFaceNeXt | LCAM | 66.97 | 80.27 | 89.49 | 74.19 | 84.01 | 91.37 |

| Model | Atten. Module | Param. (M) | FLOPs (M) | LFW | CALFW | CPLFW | CFP-FF | CFP-FP | AgeDB-30 | VGG2-FP | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MobileFaceNet | Baseline | 1.03 | 473.15 | 99.03 | 93.18 | 85.52 | 98.91 | 87.51 | 93.35 | 88.40 | 92.27 |
| MobileFaceNet | SE | 1.04 | 473.83 | 99.07 | 93.75 | 85.80 | 98.87 | 88.06 | 93.40 | 88.80 | 92.54 |
| MobileFaceNet | ECA | 1.03 | 473.82 | 99.15 | 93.48 | 86.38 | 99.17 | 89.61 | 93.50 | 90.26 | 93.08 |
| MobileFaceNet | CBAM | 1.04 | 475.33 | 99.15 | 93.48 | 86.42 | 98.96 | 89.37 | 93.13 | 90.08 | 92.94 |
| MobileFaceNet | ECBAM | 1.03 | 475.31 | 99.18 | 93.33 | 86.65 | 98.93 | 89.86 | 93.50 | 90.26 | 93.10 |
| MobileFaceNet | CA | 1.04 | 474.94 | 99.12 | 93.12 | 85.60 | 99.01 | 87.87 | 93.15 | 88.74 | 92.37 |
| MobileFaceNet | SCA | 1.03 | 475.14 | 99.20 | 93.42 | 85.87 | 99.21 | 88.29 | 93.80 | 89.46 | 92.75 |
| MobileFaceNet | TA | 1.03 | 482.97 | 99.23 | 93.90 | 86.63 | 99.06 | 90.94 | 93.68 | 90.30 | 93.39 |
| MobileFaceNet | DAA | 1.06 | 475.21 | 99.27 | 93.43 | 86.98 | 99.03 | 90.51 | 93.88 | 90.40 | 93.36 |
| MobileFaceNet | LCAM | 1.03 | 474.56 | 99.18 | 93.38 | 87.10 | 99.10 | 90.83 | 93.42 | 90.94 | 93.42 |

| Model | Atten. Module | IJB-B TAR@FAR=10⁻⁵ | IJB-B TAR@FAR=10⁻⁴ | IJB-B TAR@FAR=10⁻³ | IJB-C TAR@FAR=10⁻⁵ | IJB-C TAR@FAR=10⁻⁴ | IJB-C TAR@FAR=10⁻³ |
|---|---|---|---|---|---|---|---|
| MobileFaceNet | Baseline | 38.53 | 73.92 | 87.74 | 55.10 | 78.17 | 89.79 |
| MobileFaceNet | SE | 40.06 | 74.27 | 87.58 | 53.46 | 78.38 | 89.65 |
| MobileFaceNet | ECA | 64.91 | 79.97 | 88.97 | 73.73 | 83.96 | 91.14 |
| MobileFaceNet | CBAM | 37.48 | 75.47 | 88.60 | 60.21 | 79.97 | 90.51 |
| MobileFaceNet | ECBAM | 59.01 | 79.45 | 89.19 | 71.12 | 83.03 | 91.02 |
| MobileFaceNet | CA | 19.10 | 63.85 | 86.40 | 28.79 | 67.03 | 87.92 |
| MobileFaceNet | SCA | 38.82 | 72.73 | 87.87 | 48.68 | 76.74 | 89.86 |
| MobileFaceNet | TA | 53.45 | 78.08 | 89.04 | 62.64 | 81.23 | 90.78 |
| MobileFaceNet | DAA | 60.66 | 79.86 | 89.52 | 71.88 | 83.75 | 91.48 |
| MobileFaceNet | LCAM | 66.78 | 80.83 | 89.26 | 73.66 | 83.79 | 91.14 |

| Model | Atten. Module | Param. (M) | FLOPs (M) | LFW | CALFW | CPLFW | CFP-FF | CFP-FP | AgeDB-30 | VGG2-FP | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ProxylessFaceNAS | Baseline | 3.01 | 873.95 | 98.82 | 92.63 | 84.32 | 98.76 | 86.13 | 92.23 | 87.66 | 91.51 |
| ProxylessFaceNAS | SE | 3.03 | 875.03 | 98.97 | 92.98 | 84.68 | 98.84 | 87.46 | 92.92 | 88.18 | 92.00 |
| ProxylessFaceNAS | ECA | 3.01 | 875.01 | 98.98 | 93.07 | 85.28 | 99.03 | 88.21 | 92.57 | 88.68 | 92.26 |
| ProxylessFaceNAS | CBAM | 3.03 | 878.60 | 98.97 | 92.75 | 84.78 | 98.79 | 86.73 | 93.03 | 87.58 | 91.80 |
| ProxylessFaceNAS | ECBAM | 3.02 | 878.56 | 99.15 | 92.95 | 85.50 | 98.83 | 88.84 | 92.67 | 88.64 | 92.37 |
| ProxylessFaceNAS | CA | 3.03 | 876.51 | 98.85 | 92.52 | 84.75 | 98.66 | 86.23 | 92.42 | 87.34 | 91.54 |
| ProxylessFaceNAS | SCA | 3.01 | 877.10 | 99.10 | 93.22 | 84.85 | 99.03 | 86.47 | 92.90 | 89.18 | 92.11 |
| ProxylessFaceNAS | TA | 3.02 | 887.77 | 99.12 | 93.23 | 85.75 | 98.79 | 88.66 | 92.75 | 89.64 | 92.56 |
| ProxylessFaceNAS | DAA | 3.06 | 877.21 | 98.92 | 92.85 | 85.17 | 98.83 | 87.36 | 92.30 | 88.84 | 92.04 |
| ProxylessFaceNAS | LCAM | 3.02 | 876.23 | 98.93 | 93.23 | 85.65 | 98.91 | 87.71 | 93.22 | 88.96 | 92.37 |

| Model | Atten. Module | IJB-B TAR@FAR=10⁻⁵ | IJB-B TAR@FAR=10⁻⁴ | IJB-B TAR@FAR=10⁻³ | IJB-C TAR@FAR=10⁻⁵ | IJB-C TAR@FAR=10⁻⁴ | IJB-C TAR@FAR=10⁻³ |
|---|---|---|---|---|---|---|---|
| ProxylessFaceNAS | Baseline | 53.33 | 75.79 | 86.87 | 63.18 | 78.66 | 88.90 |
| ProxylessFaceNAS | SE | 44.14 | 74.34 | 87.01 | 57.90 | 77.85 | 88.91 |
| ProxylessFaceNAS | ECA | 55.55 | 77.23 | 88.19 | 68.18 | 80.96 | 90.06 |
| ProxylessFaceNAS | CBAM | 53.89 | 75.92 | 87.35 | 65.13 | 79.72 | 89.56 |
| ProxylessFaceNAS | ECBAM | 63.02 | 78.29 | 88.10 | 69.77 | 81.70 | 90.23 |
| ProxylessFaceNAS | CA | 54.74 | 75.23 | 87.02 | 66.41 | 79.47 | 89.13 |
| ProxylessFaceNAS | SCA | 21.12 | 64.65 | 86.48 | 30.38 | 66.55 | 87.86 |
| ProxylessFaceNAS | TA | 51.33 | 76.27 | 87.65 | 62.94 | 79.44 | 89.74 |
| ProxylessFaceNAS | DAA | 40.51 | 74.34 | 87.33 | 52.39 | 77.17 | 89.17 |
| ProxylessFaceNAS | LCAM | 60.03 | 78.52 | 88.78 | 69.77 | 81.79 | 90.72 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hoo, S.C.; Ibrahim, H.; Suandi, S.A.; Ng, T.F. LCAM: Low-Complexity Attention Module for Lightweight Face Recognition Networks. Mathematics 2023, 11, 1694. https://doi.org/10.3390/math11071694
