3.1. The Intuition

The two proposed data transformations focus on the regression task for categorical e-commerce data. The motivation of the proposed work is to increase the degree of linearity between the different product categories (groups). For linear data, two different values of $x$ should be mapped to two different values of $y$. For non-linear data, this condition does not hold. For instance, if the data follow a quadratic curve, then two different $x$ values can have the same $y$ value. In this vein, the statistical analysis of variance (ANOVA) is used to compare the variation between a set of groups with the variation within them. ANOVA produces the F-value using Equation (1) [23]:

$$F = \frac{MS_B}{MS_W} = \frac{\frac{1}{k-1}\sum_{i=1}^{k} n_i\left(\bar{y}_i - \bar{y}\right)^2}{\frac{1}{n-k}\sum_{i=1}^{k}\sum_{j=1}^{n_i}\left(y_{ij} - \bar{y}_i\right)^2} \tag{1}$$

where $MS_B$ represents the between-group variation and $MS_W$ represents the within-group variation; $k$ is the number of groups, $n_i$ is the number of observations in group $i$, $n$ is the total number of observations, $y_{ij}$ is the dependent value of observation $j$ in group $i$, $\bar{y}_i$ is the mean of group $i$, and $\bar{y}$ is the grand mean. The higher the F-value, the more distinct each group's response is from the responses of the other groups. In other words, the observations of each group behave similarly to one another, whereas observations from different groups behave distinctively.
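To check Equation (1) numerically, the sketch below computes the one-way ANOVA F-value in plain Python. The function name `anova_f_value` and its list-of-lists input (one inner list of dependent values per group) are illustrative assumptions and not part of the original method.

```python
from statistics import mean


def anova_f_value(groups):
    """One-way ANOVA F-value: between-group mean square / within-group mean square.

    `groups` is a list of lists; each inner list holds the dependent values
    (e.g., prices) of one product category. Requires n > k and a nonzero
    within-group spread, otherwise the denominator is zero.
    """
    k = len(groups)                       # number of groups
    n = sum(len(g) for g in groups)       # total number of observations
    grand_mean = mean(v for g in groups for v in g)

    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)

    ms_between = ss_between / (k - 1)     # numerator of Equation (1)
    ms_within = ss_within / (n - k)       # denominator of Equation (1)
    return ms_between / ms_within
```

For example, `anova_f_value([[1, 2, 3], [4, 5, 6]])` evaluates to 13.5. Moving the second group farther away, as in `[[1, 2, 3], [7, 8, 9]]`, raises it to 54.0 while the within-group spread stays the same. If SciPy is available, `scipy.stats.f_oneway` should return the same statistic.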

We argue that the regression task for categorical data should be easier when the F-value is higher. We therefore propose two data transformations that decrease the denominator (the within-group variation) and increase the numerator (the between-group variation) of Equation (1), which increases the F-value. In other words, the total variation in the dependent variable can be decomposed into two parts: the variation between groups and the variation within groups. We argue that the smaller the within-group variation and/or the larger the between-group variation, the more useful the data categories are for understanding and predicting the data. The following motivational examples illustrate the idea.

The first motivational example discusses the effect of reducing the within-group variation. In this example, the task is to predict the price of a given product from its description, i.e., a regression task. If we have four observations from two different product types, e.g., car audio systems and men's suits, then the observations belong to two different groups. The features of the observations include the term frequency–inverse document frequency (TF–IDF) scores of the product description, the product type, and the product price.

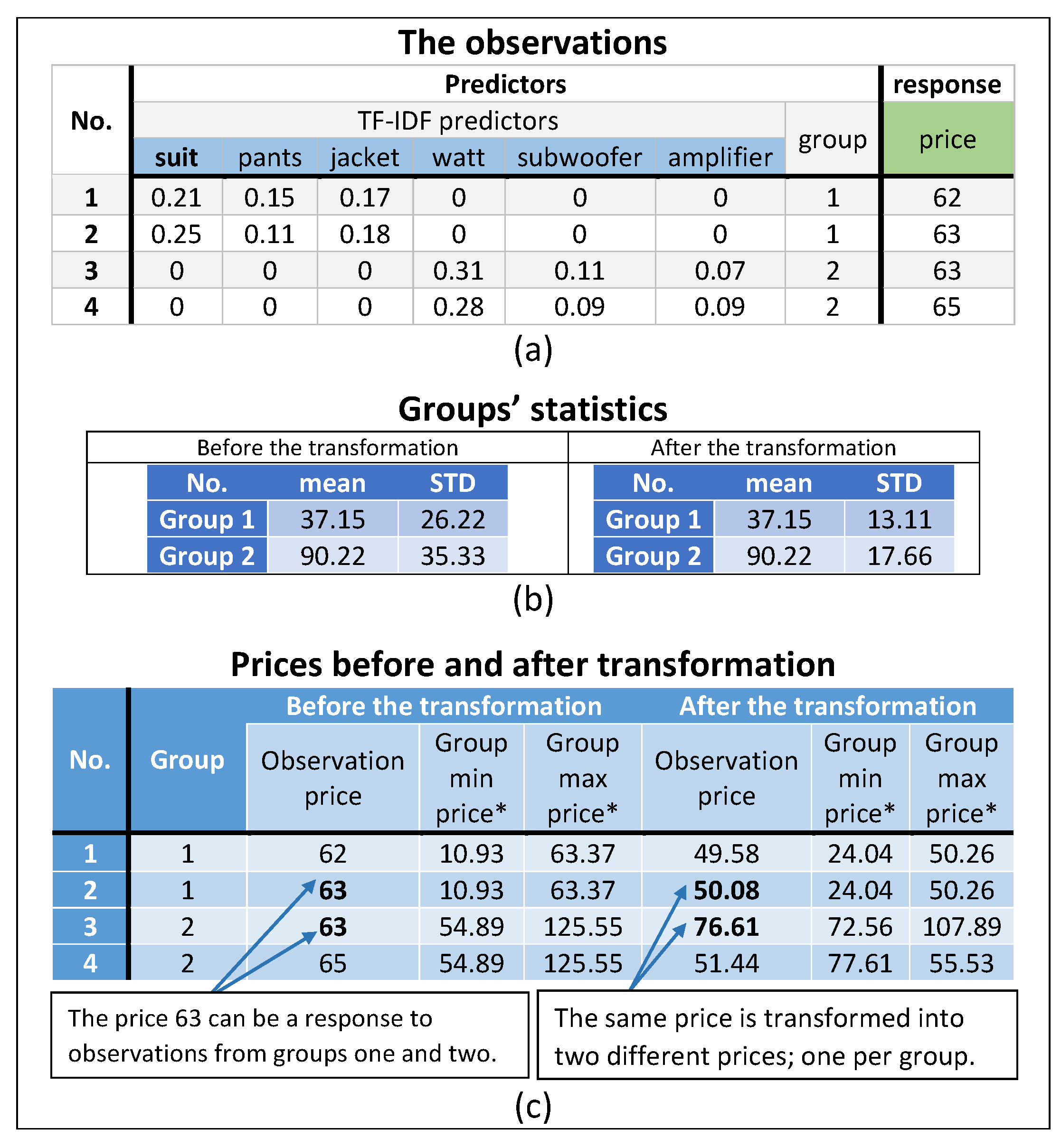

Figure 1 shows this example.

Figure 1a contains four observations from two different groups, with two observations per group. Each observation has two features: the product description, i.e., textual data, and the group identifier. In addition, each observation has a price, which is the dependent variable (the response vector). The description feature is converted into six features using the TF–IDF technique. The first three features represent words that may appear in the descriptions of the men's suits group, and the last three represent words that may appear in the descriptions of the car audio systems group.

In Figure 1a, the second and third observations belong to two different groups, yet both have the same price. This case motivates the proposed work of separating the data of different groups as much as possible based on the outcome value, i.e., the price. We argue that observations are more closely related to observations in the same group than to observations in other groups, so this relationship should be reflected in the outcome variable as well.

Figure 1b shows the mean and standard deviation of the men's suits and car audio systems groups before and after a possible data transformation. The proposed within-group reduction transformation reduces the variability within each group and keeps the group means unchanged. The intuition behind the proposed data transformations is to reduce the overlap between the responses of observations from different groups.

Finally, Figure 1c shows that the price of 63 is the response of two observations (the second and third) from two different groups. Each group has its own distribution, i.e., its own mean and variance. Because these two observations belong to different distributions, the proposed within-group variation reduction transformation reshapes each group's distribution so that the overlap between the two groups is minimized. Thus, the price of 63 is changed to 50.08 for the first group and to 76.61 for the second group. Moreover, considering the central 68% of the data, i.e., one standard deviation around each group mean, the minimum value of the second group, $\bar{y}_2 - \sigma_2$, is larger than the maximum value of the first group, $\bar{y}_1 + \sigma_1$. The proposed within-group reduction transformation thus forces the data to have a smaller within-group variation.

The second motivational example discusses the effect of increasing the between-group variation.

Figure 2 includes data spread over four product categories, where each product category (group) has a different color. The $x$ values (predictor values) of each group are closer to one another than to the $x$ values of the other groups. In Figure 2a, the regression line (the green line) connects the averages of the groups. Clearly, a regression line with acceptable performance cannot be a linear equation. On the other hand, the data in Figure 2b can be addressed as a linear regression problem, i.e., using a polynomial equation that is linear in its coefficients. However, a polynomial equation is impractical for a regression problem with many independent variables, as discussed in detail at the end of Section 4.1.1.

In addition, we argue that observations of different groups that have different values of the independent variable $x$ but similar or very close values of the dependent variable $y$ might be ambiguous for the regressor. We call a region of the dependent variable $y$ that contains observations of different groups an ambiguity region. Figure 2b depicts these regions as red rectangles.

The proposed between-group increase transformation reduces or eliminates these ambiguity regions. This transformation assigns a shift value to each group's data so that the group means are aligned on a straight line. In other words, the dependent variable $y$ of each observation is shifted by an amount that is fixed per group.

Figure 3 depicts the data in Figure 2 with two different shift values. Figure 3a depicts the data after shifting each group's observations separately by a small amount (increasing the dependent variable values). The data in Figure 3a still contain a small ambiguity region, smaller than those in Figure 2b, and the regression line is linear. In contrast, Figure 3b depicts the same data with a large shift value. We call this data transformation the group means linear alignment.

3.2. The Proposed Data Transformation: Ambiguity Reduction

The proposed method has two parts: decreasing the within-group variability and increasing the between-group variability. First, the variance of the dependent variable values is reduced per data group (cluster or category), as shown in Figure 1. Second, the values of the dependent variable are shifted in one direction, i.e., upward or downward, to align the group averages on a straight line, as shown in Figure 3. The following subsections discuss the proposed method in detail. Note that the proposed data transformations are applied to the dependent variable only, since it is the variable used in calculating the F-value.

3.2.1. Within-Group Reduction

One aspect of improving the F-value is to decrease the denominator of Equation (1). We therefore propose moving each observation's dependent value toward its group average to reduce the group variance (we also refer to this transformation as the variance reduction transformation). The farther an observation is from the group average, the more its dependent value moves toward the average, and vice versa. The observation value is changed by applying Equation (2):

where $y_{ij}$ is the original dependent value of observation $j$ belonging to group $i$, $r$ represents the variance reduction ratio of the data, $\bar{y}_i$ is the mean of group $i$, and $y'_{ij}$ is the transformed (new) dependent value of observation $j$ belonging to group $i$. In Equation (2), the higher the value of $r$, the smaller the group variance. In other words, a higher value of $r$ forces the dependent values of the observations of group $i$ closer to $\bar{y}_i$. For instance, suppose a group's mean equals five, $\bar{y}_i = 5$, an observation's dependent value equals ten, $y_{ij} = 10$, and $r$ takes a given value. Applying Equation (2) gives $y'_{ij} \approx 8.3$, so $y_{ij}$ moved approximately 1.7 units toward the average. On the other hand, if the dependent value of an observation equals six, $y_{ij} = 6$, then $y'_{ij} \approx 5.7$, so $y_{ij}$ moved only approximately 0.3 units toward the average.
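The following sketch illustrates the within-group reduction transformation. It assumes a linear shrinkage toward the group mean, $y'_{ij} = \bar{y}_i + (1 - r)(y_{ij} - \bar{y}_i)$ with $0 \le r < 1$. This form is consistent with the worked example above, because $r = 1/3$ reproduces the 1.7-unit and 0.3-unit moves. It is an assumption, however, and not necessarily the exact form of Equation (2). The function name `within_group_reduce` is likewise hypothetical.

```python
from statistics import mean


def within_group_reduce(groups, r):
    """Move every dependent value toward its group mean.

    ASSUMPTION: linear shrinkage y' = mean_i + (1 - r) * (y - mean_i),
    0 <= r < 1, used as a hypothetical stand-in for Equation (2).
    """
    if not 0 <= r < 1:
        raise ValueError("r must satisfy 0 <= r < 1")
    reduced = []
    for g in groups:
        m = mean(g)  # group mean; unchanged by the transformation
        # The farther y is from m, the larger the absolute move, r * |y - m|.
        reduced.append([m + (1 - r) * (y - m) for y in g])
    return reduced
```

For the group `[0, 4, 5, 6, 10]` (mean 5) and `r = 1/3`, the values 10 and 6 become approximately 8.33 and 5.67, and the group mean stays at 5. Under this assumed form, the group variance shrinks by a factor of (1 - r)^2.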

3.2.2. Between-Group Increase

Another aspect of improving the F-value is to increase the numerator of Equation (1). We therefore propose increasing the between-group variability. We shift each group's observations separately so that the averages of all the groups are aligned on a straight line (we also refer to this transformation as the means linear alignment transformation). In other words, all the observations of the same group are shifted by the same value. Note that only the dependent value is shifted, whereas the independent values remain fixed. Shifting the observations of each group in this way serves two purposes: first, it increases the between-group variability; second, it increases the linearity of the data.

Algorithm 1 lists the procedure for increasing the between-group variation. The algorithm aligns the set of group means on a straight line. It first computes the line equation (lines 1 to 4). It then computes the shift of each group's mean so that the shifted mean lies on the obtained line (lines 5 to 8).

The algorithm has two inputs: a list of the group means sorted in ascending order, and the slope percentage, which controls the degree of the slope. The output is a list of shifts, one per group. For example, if the data have five groups, the algorithm produces a list of five shifts. Lines 1 and 2 extract the maximum and minimum group means, i.e., the last and first means in the sorted input list, respectively. Line 3 then computes the slope by treating the first and last means as two successive points whose difference on the x-axis is one. The slope is therefore the maximum group mean minus the minimum group mean, divided by one. The slope is then multiplied by the variable slope_perc, which represents the slope percentage. This variable can increase or decrease the slope depending on the nature of the data at hand. Finally, the algorithm computes the shift required to move the mean of each group onto the line (lines 5 to 8).

Shifting the dependent values in one direction, e.g., upward for positive prices, guarantees that the sign of the shifted values is not flipped. The proposed data transformation amounts to determining, independently for each group, the proper parameters of the two aforementioned steps.

| Algorithm 1: Increasing the between-group variability |
|---|
| Input: |
| Means: a list of product category (group) means sorted in ascending order |
| slope_perc: a ratio to modify the slope |
| Output: |
| GroupShift: a list of group shift values |
| ShiftData(Means, slope_perc) |
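The Python sketch below follows the description of Algorithm 1 for lines 1 to 4. For lines 5 to 8, it makes two hypothetical assumptions: group i (its 0-based position in the sorted list) sits at x = i, and the line passes through the smallest mean at x = 0. The actual algorithm may place the line differently. The name `shift_data` mirrors `ShiftData`, but the implementation is illustrative.

```python
def shift_data(means, slope_perc=1.0):
    """Hypothetical sketch of Algorithm 1 (ShiftData): one shift per group.

    ASSUMPTIONS: group i (0-based position in the ascending list) sits at
    x = i, and the line passes through the smallest mean at x = 0.
    """
    if list(means) != sorted(means):
        raise ValueError("means must be sorted in ascending order")
    max_mean = means[-1]                  # line 1: largest mean (last element)
    min_mean = means[0]                   # line 2: smallest mean (first element)
    slope = (max_mean - min_mean) / 1     # line 3: first/last means one x-unit apart
    slope *= slope_perc                   # scale the slope by slope_perc
    # Lines 5-8 (assumed): shift each mean onto y = min_mean + slope * x.
    return [min_mean + slope * i - m for i, m in enumerate(means)]
```

For example, `shift_data([10, 20, 25, 40])` gives a slope of 30 and the shifts `[0.0, 20.0, 45.0, 60.0]`, which place the shifted means at 10, 40, 70, and 100. Under these assumptions, any `slope_perc >= 1` yields non-negative shifts. All values then move upward, which matches the one-direction shift discussed above.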

3.2.3. Ambiguity-Value: A Metric to Measure Ambiguity of the Dependent Variable

We define the data ambiguity of a regression model as observations of different groups having the same or close outcome values. For instance, suppose observation a belongs to group 1 and observation b belongs to group 2. If the outcome of observation b is closer to that of observation a than the outcomes of the other observations belonging to group 1 are, then observation b causes ambiguity.

In this context, we propose a metric that measures the degree of data ambiguity as the percentage of observations whose outcome values are located within the boundaries of multiple groups. The metric counts the observations whose outcome value lies in the range of groups other than the group to which the observation belongs, and then divides this count by the total number of observations, $n$. We call this metric the ambiguity-value and define it in Equation (3):

where $i$ represents the group number out of $k$ groups, $1 \le i \le k$; $j$ represents the observation number out of the $n_i$ observations in group $i$, $1 \le j \le n_i$; $l$ represents the ambiguity level; $g$ represents the group numbers other than the group the observation belongs to, $g \ne i$; $L_g$ and $U_g$ represent the lower and upper bounds of the outcomes of group $g$, respectively; $y_{ij}$ represents the outcome of observation $j$ belonging to group $i$; and $n$ represents the number of all observations in the $k$ groups.

The intuition behind the proposed metric is that an observation a belonging to group i is easier to handle when its outcome value is located only within group i's boundaries than when it is located within the boundaries of more than one group. The ambiguity-value metric calculates the percentage of observations whose outcome values are located within the boundaries of at least l groups. The ambiguity level l tunes the metric. For instance, when l equals four, only observations whose outcomes fall within the boundaries of four or more different groups are counted.
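A minimal sketch of the ambiguity-value follows. It makes two hypothetical assumptions. First, by default, a group's lower and upper bounds are the minimum and maximum of its outcomes; other choices, such as the mean plus or minus one standard deviation, can be passed through the `bounds` argument. Second, an observation is counted when its outcome lies inside the bounds of at least `level` groups other than its own. Equation (3) may define the bounds or the threshold differently, and the function name is illustrative.

```python
def ambiguity_value(groups, level=1, bounds=None):
    """Fraction of observations whose outcome lies within the bounds of at
    least `level` groups other than the observation's own group.

    ASSUMPTION: by default, a group's (lower, upper) bounds are the min and
    max of its outcomes; pass `bounds` to use another definition.
    """
    if bounds is None:
        bounds = [(min(g), max(g)) for g in groups]
    n = sum(len(g) for g in groups)   # total number of observations
    ambiguous = 0
    for i, g in enumerate(groups):
        for y in g:
            # Count the *other* groups (g != i) whose range contains y.
            hits = sum(
                1
                for other, (lo, hi) in enumerate(bounds)
                if other != i and lo <= y <= hi
            )
            if hits >= level:
                ambiguous += 1
    return ambiguous / n
```

Consider the groups `[[10, 20, 30], [25, 35, 45], [60, 70]]`. Only the outcomes 30 and 25 fall inside another group's range, so the ambiguity-value is 2/8 = 0.25 at level 1 and 0.0 at level 2.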

3.2.4. Back-Transformation

The back (inverse) transformation of the proposed method has two steps. First, the shifted outcome values are shifted back by the same shift value but in the opposite direction. Second, because the data variance was reduced using Equation (2), the predicted value of the regression model is transformed back using the inverse equation, Equation (4):

where $\hat{y}'_{ij}$ represents the output of the regression model for an input of group $i$ and $\hat{y}_{ij}$ represents the back-transformed value, which is reported as the final value. Another approach to back-transformation is to treat it as a regression task; we refer to this model as regression model-II. In this task, the predicted transformed values are the independent variable and the actual outcome is the dependent variable. The overall model can thus be seen as a two-level regression: the first level predicts the outcome in the transformed form, and the second level predicts the final reported value from the predicted transformed value. More specifically, this back-transformation approach (i.e., regression model-II) may be thought of as a boosting-type ensemble (as a general concept), in which subsequent models correct the predictions of prior models.
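The two back-transformation options can be sketched as follows. `back_transform` undoes the group shift and then inverts an assumed linear shrinkage, $y' = m + (1 - r)(y - m)$, which gives $y = m + (y' - m)/(1 - r)$. This is a hypothetical stand-in for Equation (4), so the function is only as accurate as that assumption. `fit_model_ii` is a hypothetical regression model-II implemented as single-feature ordinary least squares, because this subsection does not specify the form of model-II. Both names are illustrative.

```python
from statistics import mean


def back_transform(pred, group_mean, shift, r):
    """Analytic back-transformation of one model-I prediction for group i.

    Step 1: undo the group shift. Step 2: undo the ASSUMED linear shrinkage
    y' = m + (1 - r) * (y - m), i.e., y = m + (y' - m) / (1 - r).
    `group_mean` is the group mean before shifting (the shrinkage keeps it).
    """
    unshifted = pred - shift
    return group_mean + (unshifted - group_mean) / (1 - r)


def fit_model_ii(pred_transformed, y_true):
    """Hypothetical model-II: ordinary least squares y_true ~ a + b * pred."""
    mx, my = mean(pred_transformed), mean(y_true)
    sxx = sum((x - mx) ** 2 for x in pred_transformed)
    sxy = sum((x - mx) * (y - my) for x, y in zip(pred_transformed, y_true))
    b = sxy / sxx          # slope of the correction line
    a = my - b * mx        # intercept of the correction line
    return lambda x: a + b * x
```

Take a group mean of 5, r = 1/3, and a shift of 20. The transformed value 25/3 + 20 then maps back to 10. Fitting `fit_model_ii` on the shrunk values of the group `[0, 4, 5, 6, 10]` against the original values recovers a slope of 1.5 and an intercept of -2.5, which is exactly the inverse line for that group. When several groups have different means, a single line can only approximate the group-specific inverses.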

3.2.5. Putting It Together: Ambiguity Reduction Transformation

Figure 4 depicts the block diagram of the proposed service, which combines the two proposed data transformations with the regression model(s). The block diagram describes the complete regression task from the input observations to the predicted prices. First, the original prices of the observations are transformed by applying Equation (2) to reduce the data variance per product category. A second transformation then aligns the product category means on a straight line. Next, the regression model, model-I, is trained on the transformed prices and produces predicted values. The two back-transformations are then applied to the predicted prices in reverse order. The predicted prices are first shifted back. Then, to reverse the effect of Equation (2), one possible solution is to apply the inverse of Equation (2). Another solution is to train a second regression model, model-II, to reverse the transformation effect (back-transformation). Regression model-II takes the predicted prices as input (independent variable) and the true prices as output (dependent variable). In this way, regression model-II learns to reverse the effect of Equation (2).

As with any regression model, regression model-I produces predicted values that deviate from the true outcomes. Inverting such a predicted value with the inverse of Equation (2) might increase the error. We therefore propose using regression model-II to reduce this error.
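The end-to-end flow of Figure 4 can be sketched as below under several hypothetical assumptions. The sketch uses a linear shrinkage, $y' = m + (1 - r)(y - m)$, as a stand-in for Equation (2), together with its exact inverse for the analytic back-transformation. It places each group on the alignment line at x = rank of its mean among the sorted group means. It also replaces a trained model-I with a constant `model_error`. The names `R`, `SLOPE_PERC`, and `pipeline_demo` are illustrative. Under these assumptions, the demo shows the point made above: an error e in the transformed space becomes e / (1 - r) after the analytic inverse.

```python
from statistics import mean

R = 1 / 3          # hypothetical variance reduction ratio
SLOPE_PERC = 1.0   # hypothetical slope percentage


def shrink(y, m, r=R):
    # ASSUMED linear form standing in for Equation (2).
    return m + (1 - r) * (y - m)


def unshrink(y, m, r=R):
    # Exact inverse of the assumed form (analytic back-transformation).
    return m + (y - m) / (1 - r)


def pipeline_demo(groups, model_error):
    """Shrink, shift, add a constant model-I error, then back-shift and unshrink.

    Returns the largest absolute error on the original price scale.
    """
    means = [mean(g) for g in groups]
    order = sorted(range(len(groups)), key=lambda i: means[i])
    lo, hi = means[order[0]], means[order[-1]]
    slope = (hi - lo) * SLOPE_PERC
    # Assumed alignment line: the group with rank x is moved to lo + slope * x.
    shift = {i: lo + slope * rank - means[i] for rank, i in enumerate(order)}
    worst = 0.0
    for i, g in enumerate(groups):
        for y in g:
            transformed = shrink(y, means[i]) + shift[i]
            predicted = transformed + model_error        # stand-in for model-I output
            restored = unshrink(predicted - shift[i], means[i])
            worst = max(worst, abs(restored - y))
    return worst
```

For example, with r = 1/3 and a constant transformed-space error of 0.5, `pipeline_demo([[10, 20, 30], [25, 35, 45], [60, 70]], 0.5)` returns 0.75 up to floating-point rounding. The error therefore grows by a factor of 1.5, which is the kind of amplification that regression model-II is meant to reduce.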