Dynamic Learning Rate of Template Update for Visual Target Tracking

Abstract

1. Introduction

- A new learning rate adjustment strategy is proposed, in which the learning rate of the template update is adjusted dynamically according to the motion state of the target. Experiments in which the strategy is added to four existing trackers (KCF, SRDCF, DSST, and ECO) demonstrate its effectiveness and portability.

- The method shows that the motion state of the target carries useful information and that using this information improves tracker performance.
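The core idea of the first contribution can be illustrated with a small sketch. DCF trackers typically blend the template (or filter) learned in the current frame into the running model by linear interpolation with a learning rate η. A dynamic scheme recomputes η every frame from the target's motion. In the sketch below, the motion state is assumed to be the displacement of the target centre between consecutive frames, and η is assumed to grow linearly with that displacement between two bounds. These choices, the helper names, and all parameter values are illustrative assumptions, not the authors' definitions.

```python
import numpy as np


def motion_state(prev_center, curr_center):
    """Assumed motion-state measure (hypothetical): Euclidean displacement,
    in pixels, of the target centre between two consecutive frames."""
    delta = np.asarray(curr_center, dtype=float) - np.asarray(prev_center, dtype=float)
    return float(np.linalg.norm(delta))


def dynamic_learning_rate(speed, eta_min=0.005, eta_max=0.02, v_ref=20.0):
    """Assumed mapping (hypothetical): eta is linear in the speed and
    saturates at eta_max once speed >= v_ref."""
    ratio = min(max(speed, 0.0) / v_ref, 1.0)
    return eta_min + (eta_max - eta_min) * ratio


def update_template(model, new_sample, eta):
    """Linear-interpolation update common to DCF trackers:
    keep (1 - eta) of the old model and take eta of the new sample."""
    return (1.0 - eta) * model + eta * new_sample


# Usage: one update step with made-up values.
model = np.zeros((4, 4))
sample = np.ones((4, 4))
speed = motion_state((50.0, 50.0), (53.0, 54.0))  # 5-pixel displacement
eta = dynamic_learning_rate(speed)
model = update_template(model, sample, eta)
```

Whether faster motion should raise or lower η is itself a modelling choice. Raising it lets the model follow rapid appearance change, but it also absorbs more background if the bounding box has drifted.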

2. Related Work

2.1. The Strategy of Template Update

2.2. The Application of Motion State

3. Method

3.1. Overview of DCF-Based Trackers

3.2. Definition of Motion State

3.3. Strategy of Dynamic Learning Rate

4. Experimental Results

4.1. Baseline Trackers

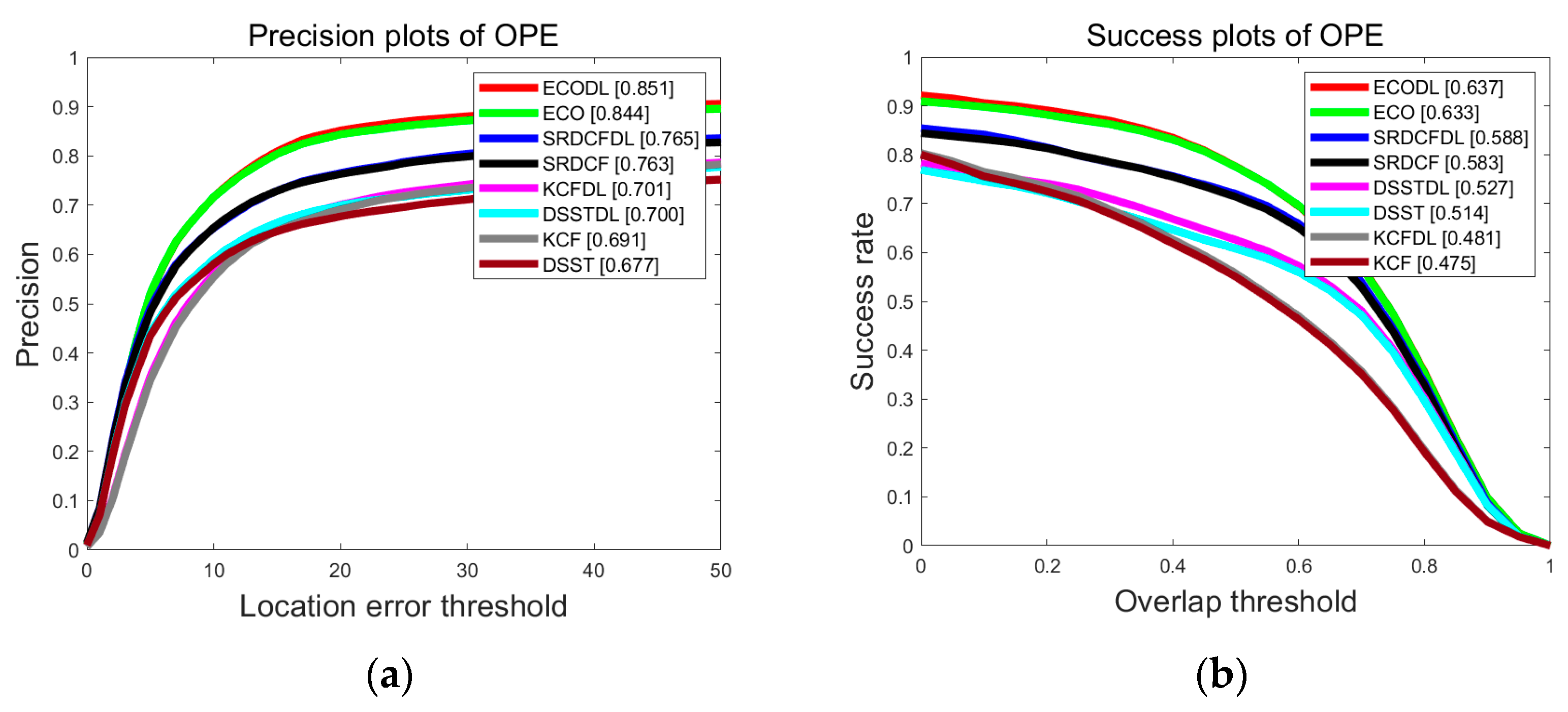

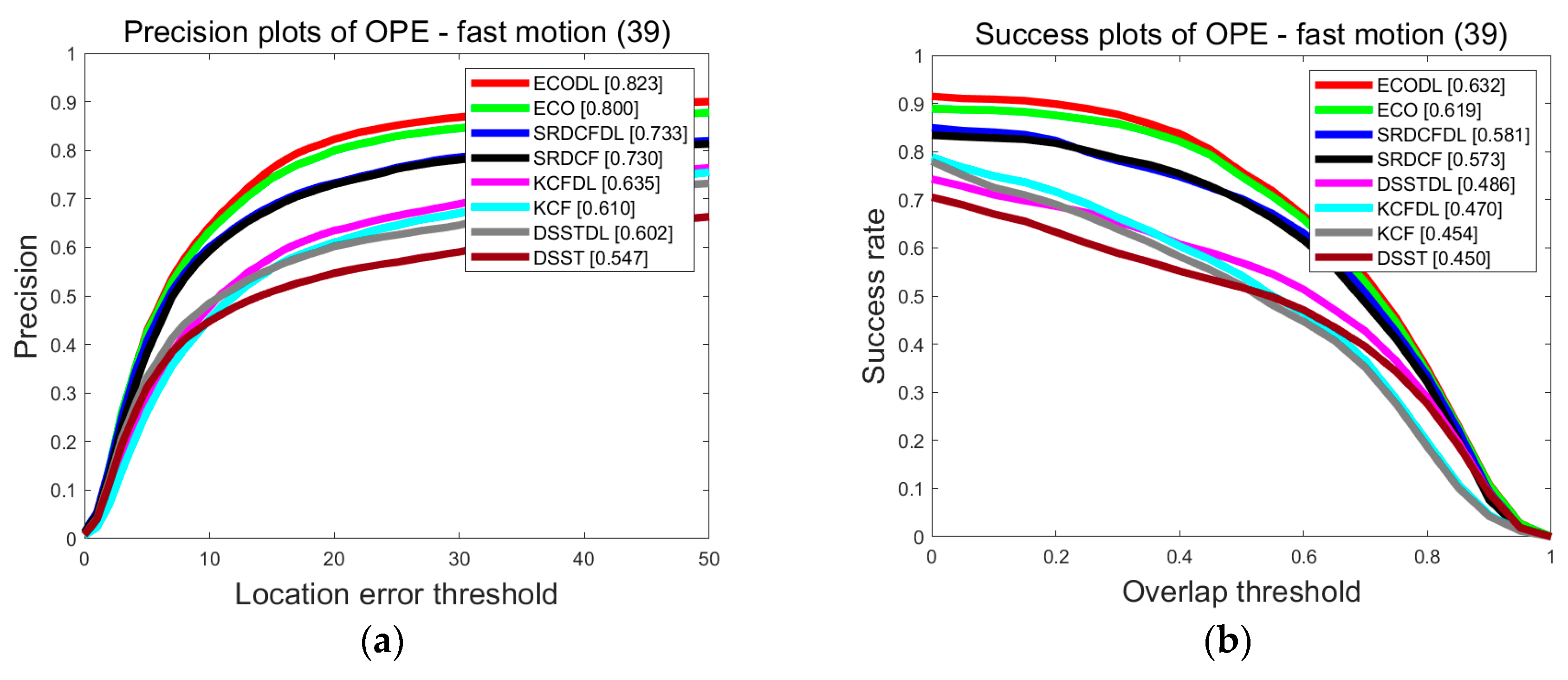

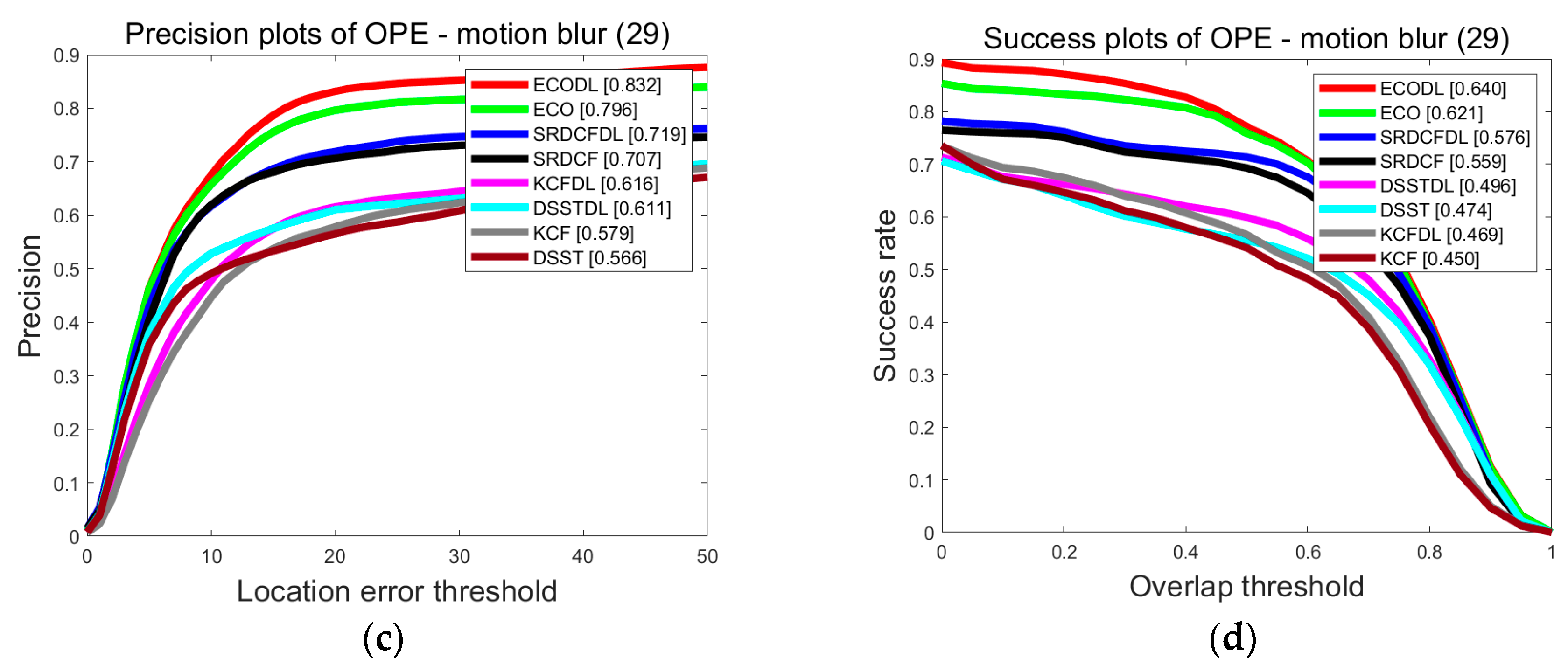

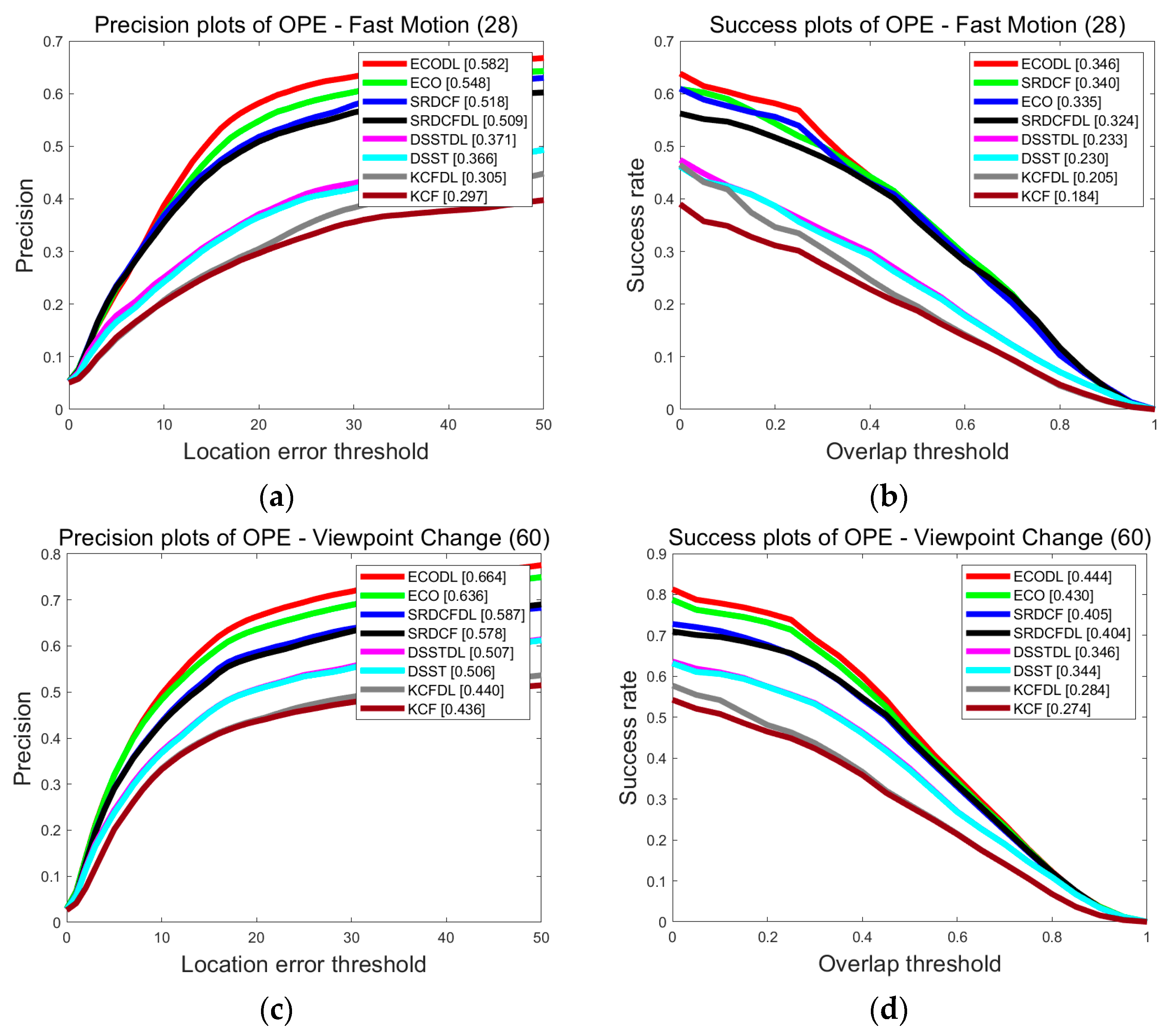

4.2. Test on OTB100

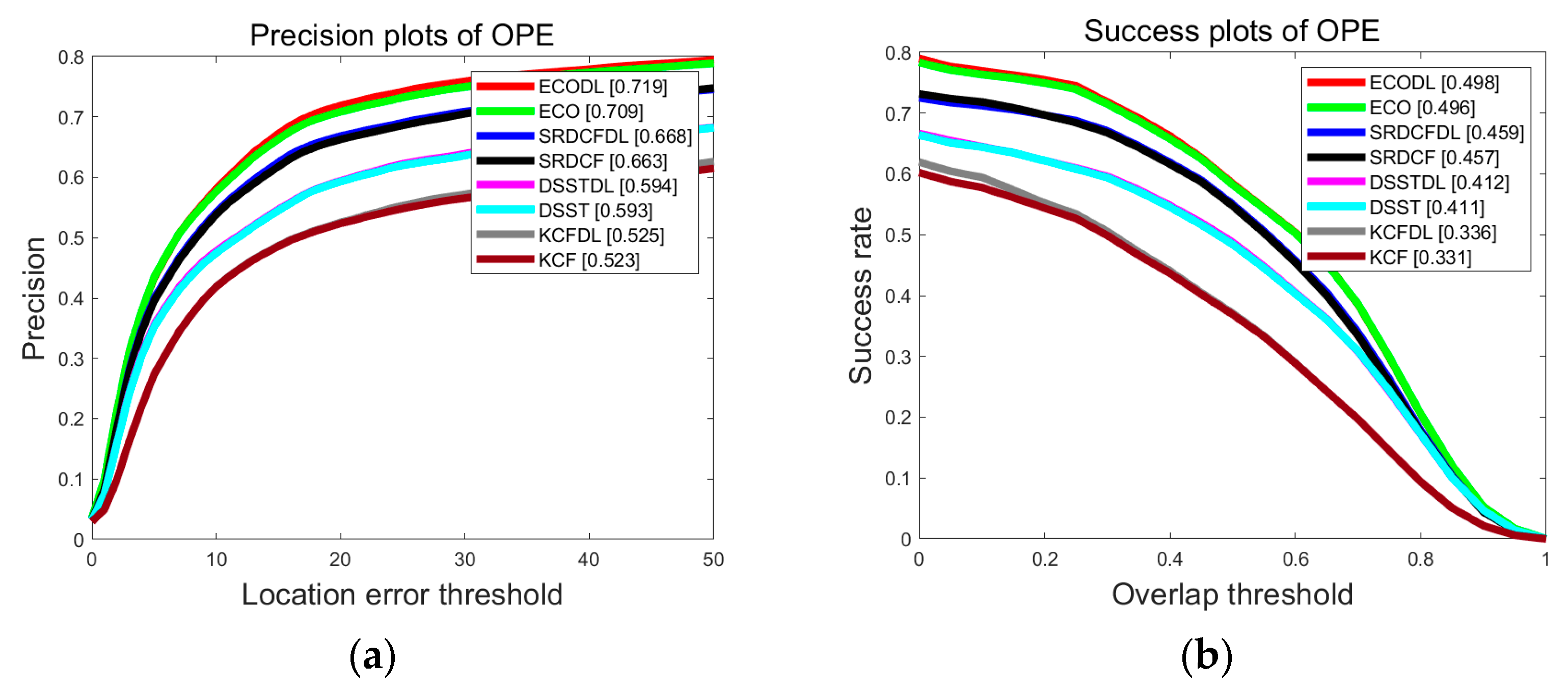

4.3. Test on UAV123

4.4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wu, Y.; Lim, J.; Yang, M.H. Online object tracking: A benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

2. Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

3. Kristan, M.; Matas, J.; Leonardis, A.; Vojir, T.; Pflugfelder, R.; Fernandez, G.; Nebehay, G.; Porikli, F.; Cehovin, L. A Novel Performance Evaluation Methodology for Single-Target Trackers. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2137–2155.

4. Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I. Springer International Publishing: Cham, Switzerland, 2016; pp. 445–461.

5. Liu, S.; Liu, D.; Srivastava, G.; Połap, D.; Woźniak, M. Overview and methods of correlation filter algorithms in object tracking. Complex Intell. Syst. 2021, 7, 1895–1917.

6. Du, S.; Wang, S. An overview of correlation-filter-based object tracking. IEEE Trans. Comput. Soc. Syst. 2021, 9, 18–31.

7. Jiao, L.; Wang, D.; Bai, Y.; Chen, P.; Liu, F. Deep learning in visual tracking: A review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–20.

8. Zhang, Z.; Lin, Z.; Xu, J.; Jin, W.-D.; Lu, S.-P.; Fan, D.-P. Bilateral attention network for RGB-D salient object detection. IEEE Trans. Image Process. 2021, 30, 1949–1961.

9. Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese box adaptive network for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6668–6677.

10. Voigtlaender, P.; Luiten, J.; Torr, P.H.; Leibe, B. Siam R-CNN: Visual tracking by re-detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6578–6588.

11. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

12. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the circulant structure of tracking-by-detection with kernels. In Proceedings of the Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part IV. Springer: Berlin/Heidelberg, Germany, 2012; pp. 702–715.

13. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

14. Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Discriminative scale space tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1561–1575.

15. Mueller, M.; Smith, N.; Ghanem, B. Context-aware correlation filter tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1396–1404.

16. Danelljan, M.; Bhat, G.; Shahbaz Khan, F.; Felsberg, M. ECO: Efficient convolution operators for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6638–6646.

17. Dai, K.; Wang, D.; Lu, H.; Sun, C.; Li, J. Visual tracking via adaptive spatially-regularized correlation filters. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 4670–4679.

18. Danelljan, M.; Häger, G.; Shahbaz Khan, F.; Felsberg, M. Learning spatially regularized correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

19. Li, Y.; Fu, C.; Ding, F.; Huang, Z.; Lu, G. AutoTrack: Towards high-performance visual tracking for UAV with automatic spatio-temporal regularization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11923–11932.

20. Ma, F.; Sun, X.; Zhang, F.; Zhou, Y.; Li, H.C. What Catch Your Attention in SAR Images: Saliency Detection Based on Soft-Superpixel Lacunarity Cue. IEEE Trans. Geosci. Remote Sens. 2022, 61, 1–17.

21. Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-end representation learning for correlation filter based tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2805–2813.

22. Wang, M.; Liu, Y.; Huang, Z. Large margin object tracking with circulant feature maps. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4021–4029.

23. Danelljan, M.; Robinson, A.; Shahbaz Khan, F.; Felsberg, M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part V. Springer International Publishing: Cham, Switzerland, 2016; pp. 472–488.

24. Zhai, M.; Xiang, X.; Lv, N.; Kong, X. Optical flow and scene flow estimation: A survey. Pattern Recognit. 2021, 114, 107861.

25. Khodarahmi, M.; Maihami, V. A review on Kalman filter models. Arch. Comput. Methods Eng. 2023, 30, 727–747.

26. Chen, X.; Li, D.; Zou, Q. Exploiting Acceleration of the Target for Visual Object Tracking. IEEE Access 2021, 9, 73818–73825.

| Tracker | Learning Rate | Published |
|---|---|---|
| KCF [13] | Static | 2014 (TPAMI) |
| SRDCF [18] | Static | 2015 (ICCV) |
| DSST [14] | Static | 2016 (TPAMI) |
| ECO [16] | Static | 2017 (CVPR) |
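All four baselines above update their model with a fixed learning rate. The simplest instance of that update is the MOSSE filter of Bolme et al. [11], which keeps running averages of the filter's numerator and denominator in the Fourier domain. The single-channel sketch below follows that scheme but omits MOSSE's preprocessing (log transform, cosine window) and its augmented initial training set; the class name `MosseSketch` and the default parameters are illustrative assumptions. It shows where the static η enters, which is the quantity a dynamic strategy would replace.

```python
import numpy as np


def gaussian_response(shape, sigma=2.0):
    """Desired correlation output: a 2-D Gaussian peaked at the patch centre."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    cy, cx = h // 2, w // 2
    return np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2.0 * sigma ** 2))


class MosseSketch:
    """Single-channel MOSSE-style correlation filter with a static learning rate."""

    def __init__(self, patch, eta=0.125, lam=1e-4, sigma=2.0):
        self.eta = eta  # static learning rate of the model update
        self.lam = lam  # small regulariser to avoid division by zero
        self.G = np.fft.fft2(gaussian_response(patch.shape, sigma))
        F = np.fft.fft2(patch)
        self.A = self.G * np.conj(F)  # numerator: G . conj(F)
        self.B = F * np.conj(F)       # denominator: F . conj(F)

    def respond(self, patch):
        """Correlation response for a new patch; its peak marks the target."""
        H_conj = self.A / (self.B + self.lam)
        return np.real(np.fft.ifft2(H_conj * np.fft.fft2(patch)))

    def update(self, patch):
        """Running-average update of numerator and denominator with a fixed eta."""
        F = np.fft.fft2(patch)
        self.A = self.eta * self.G * np.conj(F) + (1.0 - self.eta) * self.A
        self.B = self.eta * F * np.conj(F) + (1.0 - self.eta) * self.B


# Usage: train on a random patch, then locate a shifted copy of it.
rng = np.random.default_rng(0)
patch = rng.standard_normal((32, 32))
tracker = MosseSketch(patch)
shifted = np.roll(patch, shift=(3, -2), axis=(0, 1))
peak = tuple(int(i) for i in np.unravel_index(np.argmax(tracker.respond(shifted)), shifted.shape))
tracker.update(shifted)
```

Because the Gaussian target is centred at (16, 16), a shift of (3, -2) moves the response peak to (19, 14). Every call to `update` blends in the new frame with the same weight `eta`, regardless of how the target is moving.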

| Tracker | Precision | Rel. Improvement | Success | Rel. Improvement |
|---|---|---|---|---|
| ECODL | 85.1 | 0.83% | 63.7 | 0.63% |
| ECO | 84.4 | — | 63.3 | — |
| SRDCFDL | 76.5 | 0.26% | 58.8 | 0.85% |
| SRDCF | 76.3 | — | 58.3 | — |
| DSSTDL | 70.0 | 3.4% | 52.7 | 2.5% |
| DSST | 67.7 | — | 51.4 | — |
| KCFDL | 70.1 | 1.4% | 48.1 | 1.2% |
| KCF | 69.1 | — | 47.5 | — |
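The improvement entries in the table above can be reproduced from the scores: each is the relative gain of the DL variant over its baseline, (DL - baseline) / baseline x 100. For example, ECODL's precision gain is (85.1 - 84.4) / 84.4 ≈ 0.83%. The short script below recomputes all eight entries. A few recomputed values differ from the printed ones in the last reported digit (for example 0.86% versus 0.85% for SRDCFDL success).

```python
# Scores copied from the table above:
# tracker -> (precision DL, precision baseline, success DL, success baseline)
SCORES = {
    "ECO": (85.1, 84.4, 63.7, 63.3),
    "SRDCF": (76.5, 76.3, 58.8, 58.3),
    "DSST": (70.0, 67.7, 52.7, 51.4),
    "KCF": (70.1, 69.1, 48.1, 47.5),
}


def relative_improvement(new, base):
    """Relative gain of `new` over `base`, in percent."""
    return (new - base) / base * 100.0


for name, (p_dl, p_base, s_dl, s_base) in SCORES.items():
    p_gain = relative_improvement(p_dl, p_base)
    s_gain = relative_improvement(s_dl, s_base)
    print(f"{name}DL: precision +{p_gain:.2f}%, success +{s_gain:.2f}%")
```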

| Attribute | ECO | SRDCF | DSST | KCF |
|---|---|---|---|---|
| IV (illumination variation) | 62.7/61.5 | 59.8/58.4 | 56.5/56.2 | 47.3/47.6 |
| OPR (out-of-plane rotation) | 60.6/59.7 | 54.0/53.7 | 48.1/47.0 | 44.9/45.0 |
| IPR (in-plane rotation) | 57.0/56.2 | 52.7/52.0 | 51.7/49.9 | 47.1/46.5 |
| SV (scale variation) | 61.0/60.1 | 54.9/54.5 | 48.3/46.6 | 40.0/39.2 |
| OV (out of view) | 57.6/55.7 | 46.3/44.5 | 41.4/39.2 | 38.7/38.9 |
| MB (motion blur) | 64.0/62.1 | 57.6/55.9 | 49.6/47.4 | 46.9/45.0 |
| DEF (deformation) | 59.3/59.6 | 53.4/53.2 | 44.3/42.0 | 44.5/43.9 |
| FM (fast motion) | 63.2/61.9 | 58.1/57.3 | 48.6/45.0 | 47.0/45.4 |
| OCC (occlusion) | 62.1/61.2 | 54.4/53.8 | 46.2/45.0 | 43.8/44.0 |
| BC (background clutter) | 62.2/61.8 | 55.5/54.9 | 52.5/53.1 | 49.2/49.0 |
| LR (low resolution) | 56.0/53.4 | 49.0/49.2 | 40.0/39.8 | 30.7/30.7 |

| Tracker | Precision | Rel. Improvement | Success | Rel. Improvement |
|---|---|---|---|---|
| ECODL | 71.9 | 1.4% | 49.8 | 0.40% |
| ECO | 70.9 | — | 49.6 | — |
| SRDCFDL | 66.8 | 0.75% | 45.9 | 0.44% |
| SRDCF | 66.3 | — | 45.7 | — |
| DSSTDL | 59.4 | 0.17% | 41.2 | 0.24% |
| DSST | 59.3 | — | 41.1 | — |
| KCFDL | 52.5 | 0.38% | 33.6 | 1.5% |
| KCF | 52.3 | — | 33.1 | — |

| Attribute | ECO | SRDCF | DSST | KCF |
|---|---|---|---|---|
| ARC (aspect ratio change) | 42.4/42.4 | 38.4/38.2 | 33.6/33.4 | 27.4/26.7 |
| BC (background clutter) | 35.7/35.7 | 31.2/30.7 | 30.4/30.5 | 27.2/27.2 |
| CM (camera motion) | 47.6/47.3 | 43.9/44.6 | 37.9/37.7 | 31.9/31.0 |
| FM (fast motion) | 34.6/33.5 | 32.4/34.0 | 23.3/23.0 | 20.5/18.4 |
| FO (full occlusion) | 27.7/28.1 | 24.8/24.8 | 20.9/21.0 | 18.5/18.5 |
| IV (illumination variation) | 40.9/41.6 | 39.6/39.5 | 35.6/35.0 | 28.3/27.0 |
| LR (low resolution) | 35.6/35.6 | 30.9/30.1 | 26.4/26.4 | 18.0/18.0 |
| OV (out of view) | 41.6/40.2 | 38.3/39.7 | 33.7/33.7 | 25.7/25.6 |
| PO (partial occlusion) | 43.4/43.0 | 38.7/38.4 | 34.4/34.2 | 28.4/28.2 |
| SO (similar object) | 49.1/48.8 | 44.2/44.1 | 42.2/42.2 | 35.0/34.2 |
| SV (scale variation) | 46.6/46.4 | 42.8/42.7 | 37.4/37.3 | 29.6/29.1 |
| VC (viewpoint change) | 44.4/43.0 | 40.4/40.5 | 34.6/34.4 | 28.4/27.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, D.; Li, S.; Wei, Q.; Chai, H.; Han, T. Dynamic Learning Rate of Template Update for Visual Target Tracking. Mathematics 2023, 11, 1988. https://doi.org/10.3390/math11091988

Li D, Li S, Wei Q, Chai H, Han T. Dynamic Learning Rate of Template Update for Visual Target Tracking. Mathematics. 2023; 11(9):1988. https://doi.org/10.3390/math11091988

Chicago/Turabian Style: Li, Da, Song Li, Qin Wei, Haoxiang Chai, and Tao Han. 2023. "Dynamic Learning Rate of Template Update for Visual Target Tracking" Mathematics 11, no. 9: 1988. https://doi.org/10.3390/math11091988

APA Style: Li, D., Li, S., Wei, Q., Chai, H., & Han, T. (2023). Dynamic Learning Rate of Template Update for Visual Target Tracking. Mathematics, 11(9), 1988. https://doi.org/10.3390/math11091988