1. Introduction

The computational knowledge revolution has brought many developments in different fields, such as technical sciences, industrial engineering, economics, operations research, networks, and chemical engineering. As a result, new problems that need to be solved are continuously being generated. Mathematical modelling is a critical tool for formulating these problems as mathematical problems [1].

As a result, these problems are formulated as unconstrained (UCN), constrained (CON), and multi-objective optimization problems (MOOP), or as systems of non-linear equations (NE).

In recent years, there has been considerable progress in the study of non-linear equations.

Finding solutions to non-linear systems that include a large number of variables is a hard task. Hence, several derivative-free methods have been suggested to address this challenge [2]. Systems of non-linear equations arise in many fields of science and technology and model a wide variety of applications [3,4].

Applications of systems of non-linear equations arise in different fields, such as compressive sensing, chemical equilibrium systems, optimal power flow equations, and financial forecasting problems [5,6].

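The non-linear system of equations is defined by

$$F(x) = 0, \quad x \in \mathbb{R}^n, \qquad (1)$$

where the function $F: \mathbb{R}^n \to \mathbb{R}^n$ is monotone and continuously differentiable, satisfying

$$(F(x) - F(y))^T (x - y) \ge 0, \quad \forall x, y \in \mathbb{R}^n.$$

The solution to Problem (1) can be generated by classical iterative methods, in which the new point is obtained by

$$x_{k+1} = x_k + \alpha_k d_k,$$

where $\alpha_k > 0$ is the step-size obtained by an appropriate line search method and $d_k$ is the search direction.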
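As a small illustration of the monotonicity condition and the update $x_{k+1} = x_k + \alpha_k d_k$, the Python sketch below uses the map $F_i(x) = e^{x_i} - 1$, an example assumed here for illustration (it is monotone because each component is increasing in its own variable). The random check can only detect violations of monotonicity, not prove it, and the fixed step and residual direction are placeholders for a real line search and search direction. All helper names are hypothetical.

```python
import math
import random


def F(x):
    """Illustrative monotone map (assumed): F_i(x) = exp(x_i) - 1, with solution x = 0."""
    return [math.exp(xi) - 1.0 for xi in x]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def monotonicity_gap(F, x, y):
    """Return (F(x) - F(y))^T (x - y); a monotone map gives a value >= 0."""
    fx, fy = F(x), F(y)
    return dot([a - b for a, b in zip(fx, fy)], [a - b for a, b in zip(x, y)])


def smallest_gap(F, n=5, trials=1000, seed=0):
    """Smallest gap over random pairs; a negative result would disprove monotonicity."""
    rng = random.Random(seed)
    worst = math.inf
    for _ in range(trials):
        x = [rng.uniform(-3.0, 3.0) for _ in range(n)]
        y = [rng.uniform(-3.0, 3.0) for _ in range(n)]
        worst = min(worst, monotonicity_gap(F, x, y))
    return worst


def update(x, alpha, d):
    """Generic iteration x_{k+1} = x_k + alpha_k * d_k."""
    return [xi + alpha * di for xi, di in zip(x, d)]


x0 = [1.0, -1.0]
d0 = [-v for v in F(x0)]   # residual direction d = -F(x), used only as an example
x1 = update(x0, 0.5, d0)   # fixed step alpha = 0.5, standing in for a line search
print(smallest_gap(F) >= 0.0, dot(F(x1), F(x1)) < dot(F(x0), F(x0)))
```

Here a single step already reduces the squared residual $\|F(x)\|^2$; in practice the step-size is chosen by a line search rather than fixed.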

Many ideas have been implemented to design different formulas by which the step-size $\alpha_k$ and the search direction $d_k$ are generated, for example, the Newton method [7], quasi-Newton methods [8], and the conjugate gradient (CG) method [9,10,11,12].

The Newton method, the quasi-Newton method, and related methods are widely used to solve Problem (1) because of their fast convergence; see [13,14,15,16,17]. However, these methods require computing the Jacobian matrix or an approximation of it at every iteration, which makes them very expensive.

Thus, it is preferable to avoid these methods when dealing with large-scale non-linear systems of equations.

The CG algorithm is a popular classical method because of its simplicity, low storage requirements, efficiency, and good convergence properties, and it has become increasingly popular for solving non-linear equations [18,19,20,21,22,23].

Recently, many derivative-free iterative methods have been proposed to deal with large-scale instances of Problem (1) [24].

Cruz [25] proposed a new spectral method to deal with large-scale systems of non-linear monotone equations. The core idea of the method presented in [25] can be briefly described as follows.

where

is the spectral parameter defined by

where

and

, with

sufficiently large, the author of [25] set

. Furthermore, the author of [25] defined the step-size

as follows

, where

is selected by a backtracking line search algorithm so that the following inequality holds:

where

for

.

The author of [25] used Lemma 3.3 from [26] to prove that the sequence

converges. Furthermore, the author of [25] presented a convergence analysis of the algorithm, and the reported numerical results show that the algorithm in [25] is computationally efficient for solving Problem (1).
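The exact spectral parameter and line search of [25] are not reproduced above, so the following Python sketch shows only the classical Barzilai–Borwein-type quotient $\lambda_k = s^T s / s^T y$, with $s = x_k - x_{k-1}$ and $y = F(x_k) - F(x_{k-1})$, clipped to a safeguard interval, together with the direction $d_k = -\lambda_k F(x_k)$. The bounds, the fallback value, and the function names are assumptions, and this is not claimed to be the formula used in [25].

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def spectral_parameter(s, y, lam_min=1e-10, lam_max=1e10, fallback=1.0):
    """Barzilai-Borwein-type quotient s^T s / s^T y, clipped to [lam_min, lam_max].

    For a monotone F, s^T y >= 0; the fallback (an assumed safeguard) covers s^T y = 0.
    """
    sty = dot(s, y)
    if sty <= 0.0:
        return fallback
    return min(max(dot(s, s) / sty, lam_min), lam_max)


def spectral_direction(Fx, lam):
    """Spectral direction d_k = -lambda_k * F(x_k)."""
    return [-lam * v for v in Fx]


# For the linear map F(x) = 2x we have y = 2s, so the quotient recovers 1/2.
s = [1.0, -2.0]
y = [2.0, -4.0]
lam = spectral_parameter(s, y)
print(lam, spectral_direction([2.0, -4.0], lam))
```

For a linear map $F(x) = Ax$ with $A = aI$, the quotient equals $1/a$ exactly, which is why spectral parameters are often viewed as cheap scalar approximations of an inverse Jacobian.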

Stanimirovic et al. [27] proposed new improved gradient descent iterations to solve Problem (1). They proposed new search directions and step-size gradient methods by defining various parameters, and they presented a theoretical convergence analysis of their methods under standard assumptions. In all four cases in [27], the direction vector is computed by using the system vector, i.e., they set

, with several step-sizes and parameters proposed by the authors.

Waziri et al. [28] proposed some hybrid derivative-free algorithms to solve Problem (1). They introduced several parameters with which two methods were designed to deal with a non-linear system of equations. In both proposed methods, the direction vector

is computed depending on the

, i.e., the values of the non-linear system of equations (vector

) are used to represent the search direction in some cases, combined with several proposed parameters.

The performance of both methods was examined by solving a set of test problems of non-linear equations. These numerical experiments show the efficiency of both proposed methods.

Abubakar et al. [5] presented a new hybrid spectral-CG algorithm to find approximate solutions to Problem (1).

In all four cases used in [5], the vector

was utilized to compute the search direction

.

Kumam et al. [29] proposed a new hybrid approach to deal with systems of non-linear equations, applied to signal processing. The approach suggested in [29] hybridizes several parameters of the classical CG method by using a convex combination. Under reasonable hypotheses, the authors of [29] proved the global convergence of their hybrid technique, and their results show that it performs well in solving monotone operator equations compared with other methods.

Dai and Zhu [19] proposed a modified Hestenes–Stiefel-type derivative-free method (HS-CG method) to solve large-scale non-linear monotone equations. The HS-CG method was originally proposed by Hestenes and Stiefel [10]. The modified method presented in [19] is similar to the method of Dai and Wen [30] for unconstrained optimization.

Furthermore, the authors of [19] presented a new derivative-free line search and step-size. They established the convergence of their method when the system of non-linear equations is Lipschitz continuous and monotone. Their numerical results show that the approach proposed in [19] is more efficient than the other methods it was compared with.

Ibrahim et al. [31] proposed a derivative-free iterative method to deal with non-linear equations with convex constraints using the projection technique. They also established the convergence of the method under a few assumptions.

Thus, there appears to be a consensus on estimating the gradient vector in terms of the system vector, without using approximate values of the Jacobian matrix.

The hybridization of the gradient method with random parameters (a stochastic method) has been shown to be highly efficient in solving unconstrained and constrained optimization problems; see, for example, [32,33].

The above review briefly discusses the different ideas from the previous literature by which various formulas for the step-size and search direction were designed. These ideas motivated and guided the current research.

This study aims to use numerical differentiation methods to estimate the gradient vector by which the step-size can be computed.

Many studies estimate the gradient vector numerically; see, for example, [34,35,36,37]. The most common numerical differentiation approaches are the forward difference and the central difference [38,39,40,41,42].
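The two classical schemes can be sketched in a few lines of Python. The test function $f(x) = \sum_i x_i^3$ and the default intervals are assumptions for illustration. The forward difference has truncation error $O(h)$ and needs $n + 1$ evaluations of $f$; the central difference has truncation error $O(h^2)$ and needs $2n$ evaluations.

```python
def f(x):
    """Illustrative smooth function (assumed): f(x) = sum of x_i^3, gradient 3 x_i^2."""
    return sum(xi ** 3 for xi in x)


def forward_diff_grad(f, x, h=1e-6):
    """Forward difference: g_i ~ (f(x + h e_i) - f(x)) / h; needs n + 1 evaluations of f."""
    fx = f(x)
    g = []
    for i in range(len(x)):
        xp = list(x)
        xp[i] += h
        g.append((f(xp) - fx) / h)
    return g


def central_diff_grad(f, x, h=1e-4):
    """Central difference: g_i ~ (f(x + h e_i) - f(x - h e_i)) / (2h); needs 2n evaluations."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        g.append((f(xp) - f(xm)) / (2.0 * h))
    return g


x = [1.0, 2.0]
exact = [3.0 * xi ** 2 for xi in x]   # analytic gradient
print(forward_diff_grad(f, x), central_diff_grad(f, x), exact)
```

The central scheme is more accurate for a given $h$ but roughly doubles the number of function evaluations, which is the trade-off that matters for large $n$.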

Several advanced methods have provided reasonable results when numerically calculating the gradient vector; see, for example, [34,35,36,37,43,44,45].

Obtaining a good estimate of the gradient vector depends on the optimal selection of the finite-difference interval h.

For example, the authors of [43,44] proposed a random mechanism for selecting the optimal finite-difference interval h and reported good results when solving unconstrained minimization problems.
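Why the choice of h matters can be seen from a one-dimensional experiment: a large h gives a large truncation error, while a very small h amplifies floating-point round-off. The Python sketch below measures the forward-difference error for $f = \exp$ at $x = 1$. The rule of thumb $h = \sqrt{\varepsilon_{\text{mach}}}\,\max(1, |x|)$ is a common heuristic included for comparison; it is not the random mechanism of [43,44].

```python
import math
import sys


def forward_diff(f, x, h):
    """Scalar forward difference (f(x + h) - f(x)) / h."""
    return (f(x + h) - f(x)) / h


def fd_error(h, x=1.0):
    """Absolute error of the forward difference for f = exp, whose exact derivative is exp(x)."""
    return abs(forward_diff(math.exp, x, h) - math.exp(x))


def rule_of_thumb_step(x):
    """Common heuristic h = sqrt(machine epsilon) * max(1, |x|)."""
    return math.sqrt(sys.float_info.epsilon) * max(1.0, abs(x))


for h in (1e-1, 1e-4, 1e-8, 1e-14, rule_of_thumb_step(1.0)):
    print(f"h = {h:.1e}   error = {fd_error(h):.1e}")
```

The error first decreases with h and then grows again once round-off dominates, so an intermediate h (around $10^{-8}$ in double precision for this function) gives the smallest error.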

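However, when solving Problem (1), using numerical differentiation methods to compute approximate values of the Jacobian matrix is extremely expensive, since a forward-difference approximation of the $n \times n$ Jacobian requires $n$ additional evaluations of F at every iteration.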

Thus, we use the core idea of the forward difference method without computing the Jacobian matrix or the gradient vector; that is, the suggested method benefits from the essence of the forward difference method while using at most one function evaluation per iteration.
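To make the cost argument concrete, the Python sketch below counts evaluations of F. Once $F(x)$ is known, a forward-difference Jacobian needs $n$ extra evaluations, whereas the Jacobian-vector product $J(x)d \approx (F(x + hd) - F(x))/h$ needs only one. This directional formula is a standard Jacobian-free device shown for comparison, under the assumption that it conveys the spirit of the one-evaluation forward-difference idea described above; it is not the step-size rule of HRSG. The counting wrapper and the example map are hypothetical.

```python
import math


class CountingMap:
    """Wraps a map F and counts how many times it is evaluated."""

    def __init__(self, F):
        self.F = F
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return self.F(x)


def fd_jacobian(F, x, Fx, h=1e-7):
    """Forward-difference Jacobian, column j ~ (F(x + h e_j) - F(x)) / h: n extra evaluations."""
    n = len(x)
    J = [[0.0] * n for _ in range(n)]
    for j in range(n):
        xp = list(x)
        xp[j] += h
        Fp = F(xp)
        for i in range(n):
            J[i][j] = (Fp[i] - Fx[i]) / h
    return J


def fd_jvp(F, x, Fx, d, h=1e-7):
    """Jacobian-free approximation J(x) d ~ (F(x + h d) - F(x)) / h: one extra evaluation."""
    Fp = F([xi + h * di for xi, di in zip(x, d)])
    return [(a - b) / h for a, b in zip(Fp, Fx)]


def F_exp(x):
    """Illustrative map (assumed): F_i(x) = exp(x_i) - 1, whose Jacobian at 0 is the identity."""
    return [math.exp(v) - 1.0 for v in x]


n = 50
x = [0.0] * n
G = CountingMap(F_exp)
Fx = G(x)              # F(x_k) is needed by the iteration anyway
G.calls = 0
J = fd_jacobian(G, x, Fx)
jac_calls = G.calls    # n extra evaluations
G.calls = 0
jv = fd_jvp(G, x, Fx, [1.0] * n)
jvp_calls = G.calls    # one extra evaluation
print(jac_calls, jvp_calls)
```

The Jacobian also needs $O(n^2)$ storage, while the directional estimate needs only $O(n)$, which is the main reason Jacobian-free ideas scale to large systems.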

Therefore, the primary aim of this article is to develop a new algorithm that solves Problem (1).

The contributions of this study are as follows:

Suggesting a two-step-size gradient method.

Designing two search directions and with different trust regions that possess the sufficient descent property, i.e., and with .

Designing an iterative formula using the -step-size and direction by which the first candidate solution can be generated.

Hybridizing the two-step-size gradient method, and with the direction to generate the second candidate solution.

Presenting a modified line search technique that makes the hybrid method globally convergent.

Establishing a convergence analysis of the proposed algorithm.

Conducting numerical experiments on a set of test problems formulated as non-linear systems.

The rest of this paper is organized as follows. In the next section, we introduce the proposed method.

Section 3 presents the sufficient descent property and convergence analysis of the proposed method.

Section 4 shows the numerical results, followed by the conclusions in the last section.

3. Convergence Analysis of the Proposed Algorithm

The following assumptions, lemmas and theorems are required to establish the global convergence of Algorithm 1.

Algorithm 1 New proposed algorithm “HRSG”

Input: , , , , a starting point and .

Output: the approximate solution of the system F, .

1: Compute , and set .

2: while do

3: Compute . ▹ defined by (8).

4: Compute . ▹ and defined by (11) and (12), respectively.

5: Pick as the best solution to .

6: Set and . ▹ Accepted as the point.

7: while do

8: Set . ▹ computed by (17).

9: Compute .

10: Compute .

11: end while

12: Set and . ▹ Update the solution.

13: Set

14: end while

15: return and .
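The formulas (8), (11), (12), and (17) used by Algorithm 1 are not reproduced in this section, so HRSG itself cannot be implemented from it. As a hedged illustration of the overall structure (an outer loop, an inner backtracking loop that produces a trial point, and an update of the solution), the Python sketch below implements a generic derivative-free hyperplane projection method in the spirit of Solodov and Svaiter [16]. The direction $d_k = -F(x_k)$, the line-search test $-F(z_k)^T d_k \ge \sigma \alpha_k \|d_k\|^2$ (one common variant), the parameter values, and the example map are all assumptions.

```python
import math


def norm(v):
    return math.sqrt(sum(t * t for t in v))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def F_exp(x):
    """Illustrative monotone map (assumed): F_i(x) = exp(x_i) - 1, solution x = 0."""
    return [math.exp(v) - 1.0 for v in x]


def projection_method(F, x0, tol=1e-6, max_iter=1000, beta=0.5, sigma=1e-4, max_backtracks=60):
    """Generic derivative-free hyperplane-projection method (NOT the HRSG algorithm).

    The direction d_k = -F(x_k) and the line-search test below are simple stand-ins.
    Returns (x, iterations, number of F evaluations).
    """
    x = list(x0)
    Fx = F(x)
    nfev = 1
    for k in range(max_iter):
        if norm(Fx) <= tol:                      # stopping test on the residual
            return x, k, nfev
        d = [-v for v in Fx]                     # stand-in search direction
        dd = dot(d, d)
        alpha = 1.0
        for _ in range(max_backtracks):          # backtracking: alpha = beta^m
            z = [xi + alpha * di for xi, di in zip(x, d)]
            Fz = F(z)
            nfev += 1
            if -dot(Fz, d) >= sigma * alpha * dd:
                break
            alpha *= beta
        if norm(Fz) <= tol:                      # the trial point already solves F(z) = 0
            return z, k + 1, nfev
        # Project x_k onto {u : F(z_k)^T (u - z_k) = 0}; after a successful line search
        # this hyperplane separates x_k from the solution set of a monotone F.
        step = dot(Fz, [xi - zi for xi, zi in zip(x, z)]) / dot(Fz, Fz)
        x = [xi - step * fi for xi, fi in zip(x, Fz)]
        Fx = F(x)
        nfev += 1
    return x, max_iter, nfev


x_sol, iters, nfev = projection_method(F_exp, [1.0] * 5)
print(iters, nfev, norm(F_exp(x_sol)))
```

Replacing the stand-in direction and step-size with the formulas referenced in Algorithm 1 would keep the same loop structure; only the lines marked as stand-ins would change.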

Assumption 1.

(1a) The solution set of System (1) is non-empty. (1b) The mapping F satisfies the Lipschitz condition, i.e., there exists a positive constant L such that

$$\|F(x) - F(y)\| \le L\|x - y\| \quad \text{for all } x, y \in \mathbb{R}^n.$$

The next lemma shows that the proposed method “HRSG” has the sufficient descent property and the trust region feature.

Lemma 1. Let the sequence be generated by Algorithm 1. Then, we have

and

where and .

Proof. If

,

, then

and

, implying (20) and (21).

Since

,

,

and

, then

Therefore, (20) is true. Since

,

,

and

, then

. Therefore, the proof is complete. □

Lemma 2. The sequence of search directions defined by (12) satisfies the following properties:

and

Proof. Since , then .

From (20), we obtain

.

According to (10) and (13),

for

, while

has two cases.

Case 1: If , then .

We set ; hence, , with .

Case 2: If , then

In this case, since

and

, then

. Hence, we have

Therefore, the following inequality is true.

We set ; hence, in this case, , with .

Both cases satisfy with . Then the direction satisfies the sufficient descent condition.

Since

, by using (21), we obtain

, and set

. Then, (23) is true, with

.

Therefore, the proof is complete. □

We adopt the concept from [16] in the following lemmas.

Lemma 3. Solodov and Svaiter [16] assumed that satisfies . Thus, let the sequence be generated by Algorithm 1. Then,

Corollary 1. Lemma 3 implies that the sequence is non-increasing, convergent, and therefore bounded. Furthermore, is bounded and

Corollary 2. From (26), it is easy to prove that the following limit holds:

where the step length is defined by (17).

Proof. By using the result in (26), since

, then

Therefore, (27) is true. □
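Inequality (26) is not reproduced above. For orientation, the classical Solodov–Svaiter argument for a hyperplane projection step is sketched below, assuming (as in [16]) that $z_k = x_k + \alpha_k d_k$ is the trial point, that $x_{k+1}$ is the projection of $x_k$ onto $H_k = \{u : F(z_k)^T (u - z_k) \le 0\}$, and that $F(x^*) = 0$. Whether (26) takes exactly this form is an assumption.

$$
\begin{aligned}
F(z_k)^T (x^* - z_k) &\le F(x^*)^T (x^* - z_k) = 0 && \text{(monotonicity of } F\text{, so } x^* \in H_k),\\
\|x_{k+1} - x^*\|^2 &\le \|x_k - x^*\|^2 - \|x_{k+1} - x_k\|^2 && (x_{k+1} = P_{H_k}(x_k)),\\
\sum_{k=0}^{\infty} \|x_{k+1} - x_k\|^2 &\le \|x_0 - x^*\|^2 < \infty && \text{(telescoping)}.
\end{aligned}
$$

Hence $\{\|x_k - x^*\|\}$ is non-increasing and $\lim_{k\to\infty}\|x_{k+1} - x_k\| = 0$, which is the type of conclusion stated in Corollaries 1 and 2.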

Lemma 4. Let the sequence be generated by Algorithm 1. Then

Proof. By using (7)–(9), (21) and (26), since

, then

.

From (8), we have

for

, where

. Then

Since and , then .

Therefore, the following is true

From (29) and (30), the following holds.

By using (26), we have

.

Since

for all

k (where

is bounded), then (28) is true.

Therefore, Lemma 4 holds. □

Theorem 1. Let the sequence be generated by Algorithm 1. Then

Proof. We argue by contradiction and assume that (32) does not hold, that is, there exists a positive constant

such that

By combining the sufficient descent condition (20) and the Cauchy–Schwarz inequality, we obtain

By using the result in (28) with

, (36) leads to a contradiction.

Therefore, Theorem 1 holds. □
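The intermediate inequality obtained from the Cauchy–Schwarz step is not reproduced above. Assuming, for illustration, that the sufficient descent condition (20) has the usual form $F_k^T d_k \le -c\|F_k\|^2$ with $c > 0$ and $F_k = F(x_k)$, and that the contradiction hypothesis gives $\|F_k\| \ge \varepsilon$ for all $k$, the step reads:

$$
c\,\|F_k\|^2 \;\le\; -F_k^T d_k \;\le\; \|F_k\|\,\|d_k\|
\quad\Longrightarrow\quad
\|d_k\| \;\ge\; c\,\|F_k\| \;\ge\; c\,\varepsilon > 0 .
$$

Thus the search directions stay bounded away from zero, and it is a lower bound of this kind that the proof then combines with (28).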

Note 1: Both search directions and satisfy the sufficient descent condition, i.e., and with .

Note 2: , with .

Note 3: Algorithm 1 includes two different search directions

and

and three step-sizes

,

and

with a mix of random and deterministic parameters, which enables Algorithm 1 to approach an approximate solution to Problem (1). The difference between the trust-region sizes of the two search directions helps the proposed method search for the solution set (the zeros of F) over the entire feasible region.

Note 4: Theorem 1 confirms that sequence

obtained by Algorithm 1 makes System (1) approach 0 as long as

, i.e.,

4. Numerical Experiments

In this section, nine test problems are considered to illustrate the convergence of the proposed Algorithm 1.

These test problems are taken from [46]. All codes were programmed in MATLAB version 8.5.0.197613 (R2015a) and run on a PC with an Intel(R) Core(TM) i5-3230M CPU @ 2.60 GHz and 4.00 GB of RAM under the Windows 10 operating system.

The parameters of Algorithm 1 are listed as follows.

, , , , and .

Numerical Results for a Non-Linear System

This section gives the numerical results for systems of non-linear equations solved by Algorithm 1, which is implemented in two approaches (App1 and App2).

The performance of the two approaches is tested by a set of test problems (a system of non-linear equations).

The dimensions of the test problems (systems of non-linear equations) are as follows: .

The HRSG method described by Algorithm 1 comprises two algorithms (HRSG1 and HRSG2). The HRSG1 algorithm denotes the first approach (App1) described in Section 2.1.1, while the HRSG2 algorithm denotes the second approach (App2) described in Section 2.1.2.

The results of both algorithms listed in Tables 1–18 were obtained by running each algorithm 51 times on every test problem, for five problem dimensions and seven initial points.

The stopping criterion and evaluation criteria of Algorithm 1 are as follows. The stopping criterion is or ; if the final result of a test problem is , the algorithm is considered unable to solve this test problem, with setting .

The evaluation criteria used to test the performance of the proposed method are the number of iterations (Itr), the number of function evaluations (EFs), and the CPU time (Tcpu). For each of these three criteria, the results obtained by 51 runs of Algorithm 1 (HRSG1 and HRSG2) are classified into three types, as follows.

The best results for the number of iterations (BItr), function evaluations (BEFs), and run time (BTcpu).

The worst results for the number of iterations (WItr), function evaluations (WEFs), and run time (WTcpu).

The mean results for the number of iterations (MItr), function evaluations (MEFs), and run time (MTcpu).

All three types of results are listed in Tables 1–18.
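The best/worst/mean classification amounts to taking the minimum, the maximum, and the arithmetic mean of each criterion over the 51 runs. The Python sketch below shows this computation with made-up run data (not results from Tables 1–18). It also computes the run count per test problem under the assumption that every combination of the five dimensions and seven initial points is run 51 times.

```python
from statistics import mean


def summarize_runs(values):
    """Best (minimum), worst (maximum) and mean of one criterion over repeated runs."""
    return {"best": min(values), "worst": max(values), "mean": mean(values)}


# Hypothetical iteration counts from a few runs (illustrative only).
itr = [12, 15, 9, 12]
stats = summarize_runs(itr)

# Runs per test problem and algorithm, if every (dimension, initial point) pair is run 51 times.
DIMENSIONS, INITIAL_POINTS, RUNS = 5, 7, 51
runs_per_problem = DIMENSIONS * INITIAL_POINTS * RUNS   # 1785
print(stats, runs_per_problem)
```

The same function applies unchanged to the EFs and Tcpu values, giving the B, W, and M variants of each criterion.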

We compare the performance of Algorithm 1 (HRSG1 and HRSG2) with that of the Algo algorithm proposed in [46].

Table 1 gives the best results (BItr, BEFs, and BTcpu) of the HRSG1 algorithm versus those of the HRSG2 and Algo algorithms. Column 1 of Table 1 contains the dimensions of Problem 1,

.

Column 2 of Table 1 presents the initial points (INP) of Problem 1. Columns 3–5 give the results of the HRSG1 algorithm in terms of BItr, BEFs, and BTcpu, and Columns 6–8 give the corresponding results of the HRSG2 algorithm. The results of the Algo algorithm in terms of BItr, BEFs, and BTcpu are listed in Columns 9–12.

Tables 2–9 are similar to Table 1 for Problems 2–9.

For the Algo algorithm, we present the best results (BItr, BEFs, and BTcpu) reported in [46], taken from Columns 3–6 of Tables A1–A9 in [46], because no other results of the Algo algorithm are presented in [46].

Tables 10–18 present the worst results (WItr, WEFs, and WTcpu) and the mean results (MItr, MEFs, and MTcpu) of the HRSG1 and HRSG2 algorithms.

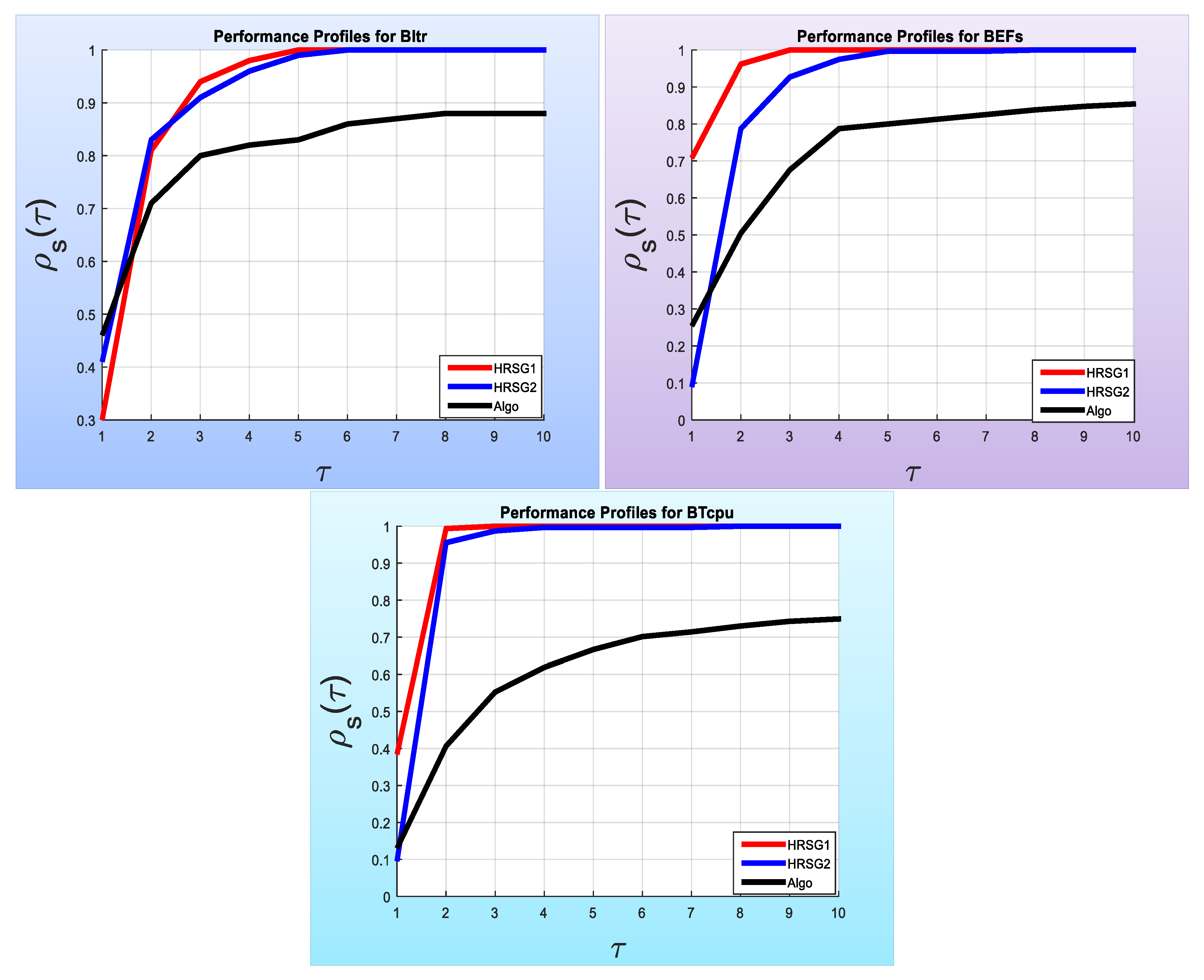

Performance profiles are used to show how successfully the proposed algorithms solve the test problems [47,48,49,50,51]. Thus, the numerical results are presented as performance profiles, which display the performance of the proposed algorithms in a simple form [49].

The most important characteristic of the performance profiles is that the results listed in many tables can be shown in one figure.

This is achieved by plotting, for each solver, the cumulative distribution function .
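In the standard Dolan–Moré construction, this cumulative distribution function is $\rho_s(\tau) = \frac{1}{n_p}\,\bigl|\{p : r_{p,s} \le \tau\}\bigr|$, where $r_{p,s} = t_{p,s} / \min_{s'} t_{p,s'}$ is the ratio of the cost $t_{p,s}$ (e.g., BItr) of solver $s$ on problem $p$ to the best cost on that problem, and a failure gets $r_{p,s} = \infty$. The minimal Python sketch below assumes positive costs; the cost matrix is made up for illustration and is not taken from the tables.

```python
import math


def performance_profile(costs, taus):
    """Dolan-More performance profile.

    costs[p][s]: positive cost (e.g. iterations) of solver s on problem p; math.inf marks a failure.
    Returns rho[s][t] = fraction of problems with ratio r_{p,s} <= taus[t], where
    r_{p,s} = costs[p][s] / (minimum cost on problem p over all solvers).
    """
    n_problems = len(costs)
    n_solvers = len(costs[0])
    ratios = []
    for row in costs:
        best = min(row)
        ratios.append([c / best if math.isfinite(c) else math.inf for c in row])
    return [
        [sum(1 for r in ratios if r[s] <= tau) / n_problems for tau in taus]
        for s in range(n_solvers)
    ]


# Made-up costs for three solvers on three problems (illustrative only).
costs = [
    [10.0, 20.0, 15.0],
    [30.0, 15.0, math.inf],   # solver 2 fails on problem 1
    [8.0, 8.0, 16.0],
]
for s, rho in enumerate(performance_profile(costs, [1.0, 2.0])):
    print(f"solver {s}: rho(1) = {rho[0]:.2f}, rho(2) = {rho[1]:.2f}")
```

$\rho_s(1)$ is the fraction of problems on which solver $s$ is the best (ties count for every tied solver), and $\lim_{\tau\to\infty}\rho_s(\tau)$ is the fraction of problems it solves at all, which is how a failure such as the (-) entries appears in a profile.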

For example, the information listed in Tables 1–9 is summarized in Figure 1 to give a clear picture of the performance of the three algorithms, HRSG1, HRSG2, and Algo. The first graph (left of Figure 1) shows the performance profiles of the BItr for the three algorithms. At

,

of test problems were solved by the Algo method against

and

of the test problems solved by the HRSG1 and HRSG2 methods, respectively. Thus, for the BItr, the three algorithms are ranked as follows: Algo, HRSG2, and HRSG1. When

,

of test problems were solved by the HRSG2 method against

and

of the test problems solved by the HRSG1 and Algo methods, respectively. Thus, for the BItr, the three algorithms are ranked as follows: HRSG2, HRSG1, and Algo.

If , of the test problems were solved by the HRSG1 method against and of the test problems solved by the HRSG2 and Algo methods, respectively. Thus, for the BItr, the three algorithms are ranked as follows: HRSG1, HRSG2, and Algo.

At , all the test problems were solved by the HRSG1 method against and of the test problems solved by the HRSG2 and Algo methods, respectively. Thus, for the BItr, the three algorithms are ranked as follows: HRSG1, HRSG2, and Algo.

Finally, at

, all the test problems were solved by the HRSG1 and HRSG2 methods against

of the test problems solved by the Algo method. This means that the Algo method failed to solve

of the test problems. This failure is indicated by (-) in Table 3 (Table A3 of [46]).

The performance profiles for the BEFs and BTcpu are illustrated by the second and third graphs of Figure 1. These graphs indicate that the HRSG1 algorithm performs best with respect to these two criteria.

Furthermore, the results listed in Tables 10–18 are presented in Figure 2 (WItr, WEFs, and WTcpu) and Figure 3 (MItr, MEFs, and MTcpu).

In general, the HRSG1 algorithm performs better than the HRSG2 algorithm.

Note: Both proposed algorithms (HRSG1 and HRSG2) solved all the test problems at low cost: the WItr did not exceed 100, the WEFs did not exceed 1000, and the WTcpu did not exceed 10 s. In contrast, the Algo algorithm failed to solve

of the test problems, as indicated by (-) in Table 3 (Table A3 of [46]).

Thus, in general, the proposed algorithm (HRSG) is competitive with, and in most cases superior to, the Algo algorithm in terms of efficiency, reliability, and effectiveness in finding approximate solutions of the test systems of non-linear equations.

5. Conclusions and Future Work

In this paper, we proposed a new adaptive hybrid two-step-size gradient method to solve large-scale systems of non-linear equations.

The proposed algorithm includes a mix of random and deterministic parameters with which two directions are designed. By imitating the forward difference method, the (, ) step-sizes are generated iteratively. The two search directions possess different trust regions and satisfy the sufficient descent condition. The convergence analysis of the HRSG method was established.

The diversity and multiplicity of the parameters give the proposed algorithm several opportunities to reach the feasible region. This enabled the proposed algorithm to rapidly approach an approximate solution of every test problem in each run.

In general, the numerical results demonstrated that the HRSG method outperforms the Algo method.

Several evaluation criteria were used to test the performance of the proposed algorithm, providing a fair assessment of the HRSG method.

The proposed HRSG method is represented by two algorithms, HRSG1 and HRSG2.

It is noteworthy that the HRSG1 algorithm is more efficient and effective than the HRSG2 algorithm. This is attributed to the errors that result from calculating the step-size (interval).

The combination of the suggested method with the new line search approach results in a new globally convergent method, which is capable of dealing with local minimization problems. Therefore, combining a meta-heuristic technique, as a global optimization algorithm, with the HRSG method, as a globally convergent method, would result in a new hybrid semi-gradient meta-heuristic algorithm. The idea behind this hybridization is to exploit the advantages of both techniques, and implementing it will be the focus of future work.

Such a hybrid semi-gradient meta-heuristic algorithm could deal with unconstrained, constrained, and multi-objective optimization problems.

It is also attractive to modify and adapt the HRSG method to make it capable of dealing with image restoration problems.

Moreover, it is possible to hybridise the HRSG method with new versions of the CG method, which could yield a new hybrid method suitable for training deep neural networks.

The HRSG method imitates the forward difference method by using one point to estimate the values of the gradient vector at each iteration, so that at most one function evaluation is used per iteration. The HRSG method implements this idea through two approaches, namely HRSG1 and HRSG2. In future work, both approaches can be extended to use the forward and central differences with more than one point.

Furthermore, the approximate values of the gradient vectors can be calculated using the forward and central differences randomly in this case, where the number of function evaluations is zero.

Hence, the proposed method obtained from the above proposals can be applied to deal with systems of non-linear equations, unconstrained, constrained, and multi-objective optimization problems, image restoration, and training deep neural networks.

List of Test Problems

Problem 1: Exponential Function [27,46].

Problem 2: Exponential Function.

Problem 3: Non-Smooth Function [27,46].

Problem 5: Strictly Convex Function I [27,46].

Problem 6: Strictly Convex Function II [46].

Problem 7: Tri-Diagonal Exponential Function [27,46].

where

.

Problem 8: Non-Smooth Function [27,46].

Problem 9: The Trig Exponential Function [27].

Initial Points (INP): , , , , , and .