Semi-Supervised Medical Image Classification with Pseudo Labels Using Coalition Similarity Training

Abstract

1. Introduction

- We develop a collaborative similarity learning strategy that refines pseudo-labels to improve accuracy and speed up model convergence.

- To ensure the quality of pseudo-labels early in training, we let semantic similarity and instance similarity correct each other. In addition, the similarity score is used as a weight that keeps samples at an appropriate distance from misclassified results during classification, which improves model performance.

- We incorporate adaptive consistency constraints into the loss function to improve the model’s generalization ability and thus its performance on unseen data.

2. Related Work

2.1. Semi-Supervised Learning

2.2. Similarity Learning

3. Materials and Methods

3.1. Preliminaries

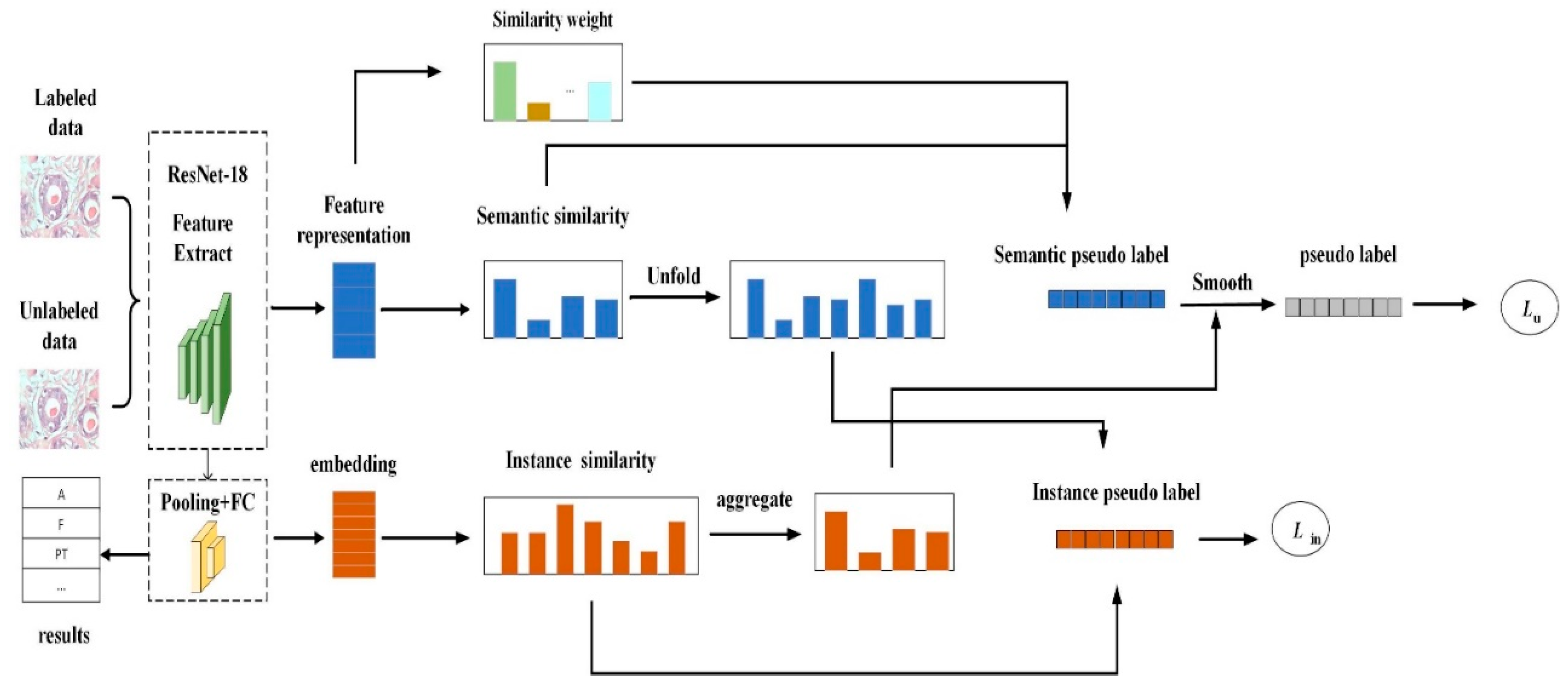

3.2. Coalition Similarity Training Framework

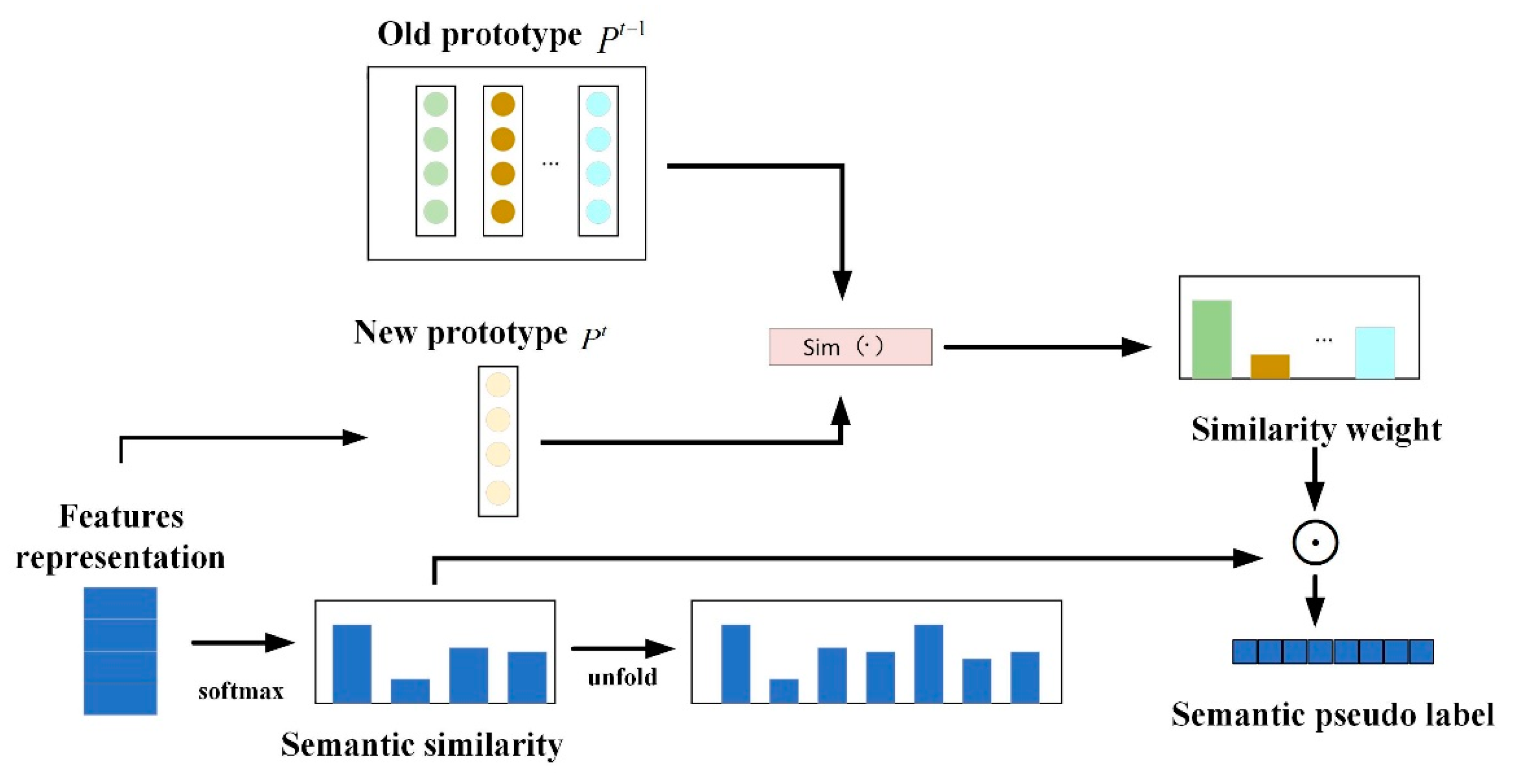

3.2.1. Semantic Pseudo Labels

3.2.2. Instance Pseudo Labels

3.3. Loss Functions

3.4. Model Training

1. Load the network structure and randomly initialize the network parameters.

2. Process both labeled and unlabeled samples with the ResNet-18 network. Pass the resulting feature representations through the Softmax layer to obtain the predicted labels for the labeled samples and the pseudo-labels for the unlabeled samples.

3. Use the outputs of the fully connected layer to compute the class prototypes, then calculate the cosine similarity between the new and old prototypes as the similarity weight. Combine the similarity weights with the pseudo-labels to generate semantic pseudo-labels.

4. Compute the instance similarity separately for the weakly and strongly augmented embeddings. Calibrate the weakly augmented embeddings with the semantic pseudo-labels to generate instance pseudo-labels, and pass them, together with the strongly augmented embeddings, to the cross-entropy loss function for optimization.

5. Smooth the semantic pseudo-labels with the instance similarity to obtain the final pseudo-labels. Select samples according to the given threshold and use them to compute the MMD loss. Finally, optimize the network parameters by minimizing the overall loss function.

6. Repeat steps (2)–(5) for each training iteration.

7. Assess the trained model on the test dataset: feed each test sample to the trained ResNet-18 network and compare the predicted classification output with the ground-truth labels to compute the accuracy.
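The prototype and similarity-weight step described above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the per-class mean used for the prototypes, the clipping of the cosine similarity to [0, 1], and the multiply-and-renormalize rule for combining weights with pseudo-labels are all assumptions made for illustration, and the function names are hypothetical.

```python
import numpy as np


def class_prototypes(features, labels, num_classes):
    """Assumed prototype rule: mean feature vector per class (zeros if a class is empty)."""
    protos = np.zeros((num_classes, features.shape[1]))
    for k in range(num_classes):
        mask = labels == k
        if mask.any():
            protos[k] = features[mask].mean(axis=0)
    return protos


def prototype_similarity(new_protos, old_protos, eps=1e-12):
    """Row-wise cosine similarity between new and old prototypes, clipped to [0, 1] (assumption)."""
    num = (new_protos * old_protos).sum(axis=1)
    den = np.linalg.norm(new_protos, axis=1) * np.linalg.norm(old_protos, axis=1)
    return np.clip(num / np.maximum(den, eps), 0.0, 1.0)


def semantic_pseudo_labels(probs, sim_weight, eps=1e-12):
    """Assumed combination rule: scale each class probability by its weight, then renormalize."""
    weighted = probs * sim_weight[None, :]
    return weighted / np.maximum(weighted.sum(axis=1, keepdims=True), eps)


if __name__ == "__main__":
    feats = np.array([[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]])  # toy fully connected outputs
    labels = np.array([0, 0, 1])                             # toy (pseudo-)labels
    old = np.array([[1.0, 0.0], [0.0, 1.0]])                 # prototypes from the previous step
    new = class_prototypes(feats, labels, num_classes=2)
    weights = prototype_similarity(new, old)
    print(semantic_pseudo_labels(np.array([[0.5, 0.5]]), weights))
```

In this toy case the class whose prototype moved less between steps receives the larger weight, so an otherwise tied prediction is nudged toward it.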

| Algorithm 1. Coalition similarity framework. |
|---|
| 1: Require: a batch B of labeled and unlabeled samples; the total number of training steps. |
| 2: while the total number of training steps has not been reached do |
| 3: compute semantic pseudo-labels by Equations (4) and (5) |
| 4: compute instance pseudo-labels by Equations (6)–(8) |
| 5: optimize the model by Equation (12) |
| 6: update the model’s parameters |
| 7: update the K-type prototypes by Equation (2) |
| 8: advance to the next training step |
| 9: end |
| 10: Output: the well-trained model |
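The overall loss optimized in Algorithm 1 includes, according to the training steps, an MMD term computed on samples chosen by a threshold. The sketch below shows one common way to compute such a term. It is only an illustration under stated assumptions: the Gaussian (RBF) kernel, the bandwidth, the biased estimator, the confidence threshold of 0.95, and the function names are all hypothetical choices that the text does not specify.

```python
import numpy as np


def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian kernel matrix between rows of x (n, d) and rows of y (m, d)."""
    sq_dist = (x ** 2).sum(axis=1)[:, None] + (y ** 2).sum(axis=1)[None, :] - 2.0 * x @ y.T
    return np.exp(-np.maximum(sq_dist, 0.0) / (2.0 * bandwidth ** 2))


def mmd_squared(x, y, bandwidth=1.0):
    """Biased estimate of the squared maximum mean discrepancy between two sample sets."""
    k_xx = rbf_kernel(x, x, bandwidth).mean()
    k_yy = rbf_kernel(y, y, bandwidth).mean()
    k_xy = rbf_kernel(x, y, bandwidth).mean()
    return k_xx + k_yy - 2.0 * k_xy


def select_confident(probs, threshold=0.95):
    """Boolean mask of samples whose top pseudo-label probability reaches the threshold (assumed rule)."""
    return probs.max(axis=1) >= threshold


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    weak = rng.normal(size=(8, 3))                    # stand-in for weakly augmented embeddings
    strong = weak + 0.1 * rng.normal(size=(8, 3))     # stand-in for strongly augmented embeddings
    probs = rng.dirichlet(alpha=[0.1, 0.1], size=8)   # stand-in for final pseudo-labels
    mask = select_confident(probs)
    if mask.any():
        print(mmd_squared(weak[mask], strong[mask]))
```

Comparing weakly and strongly augmented embeddings of the selected samples is itself an assumption here; the same functions apply to whichever two distributions the loss is meant to align.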

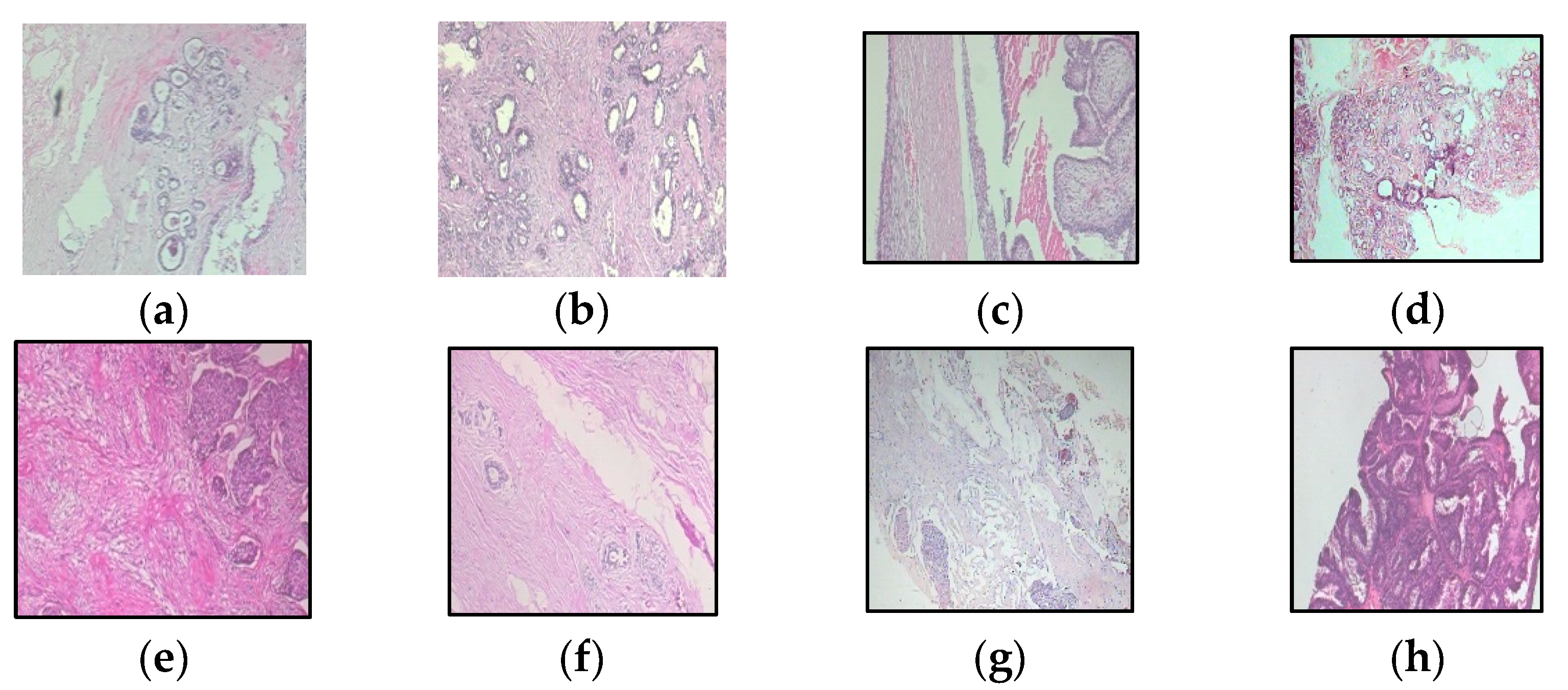

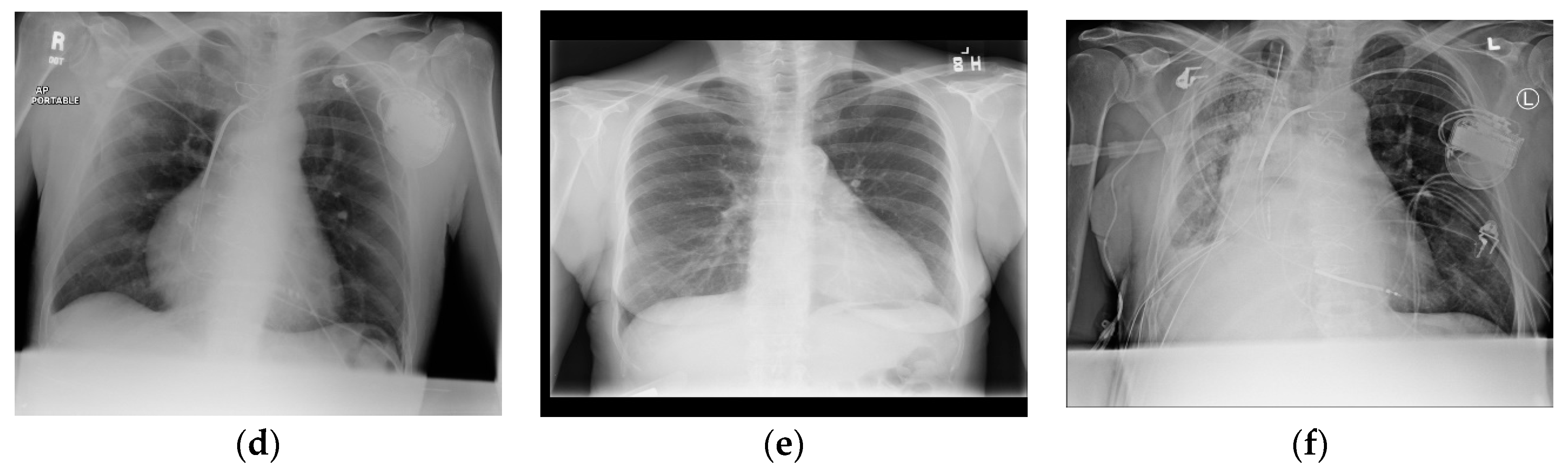

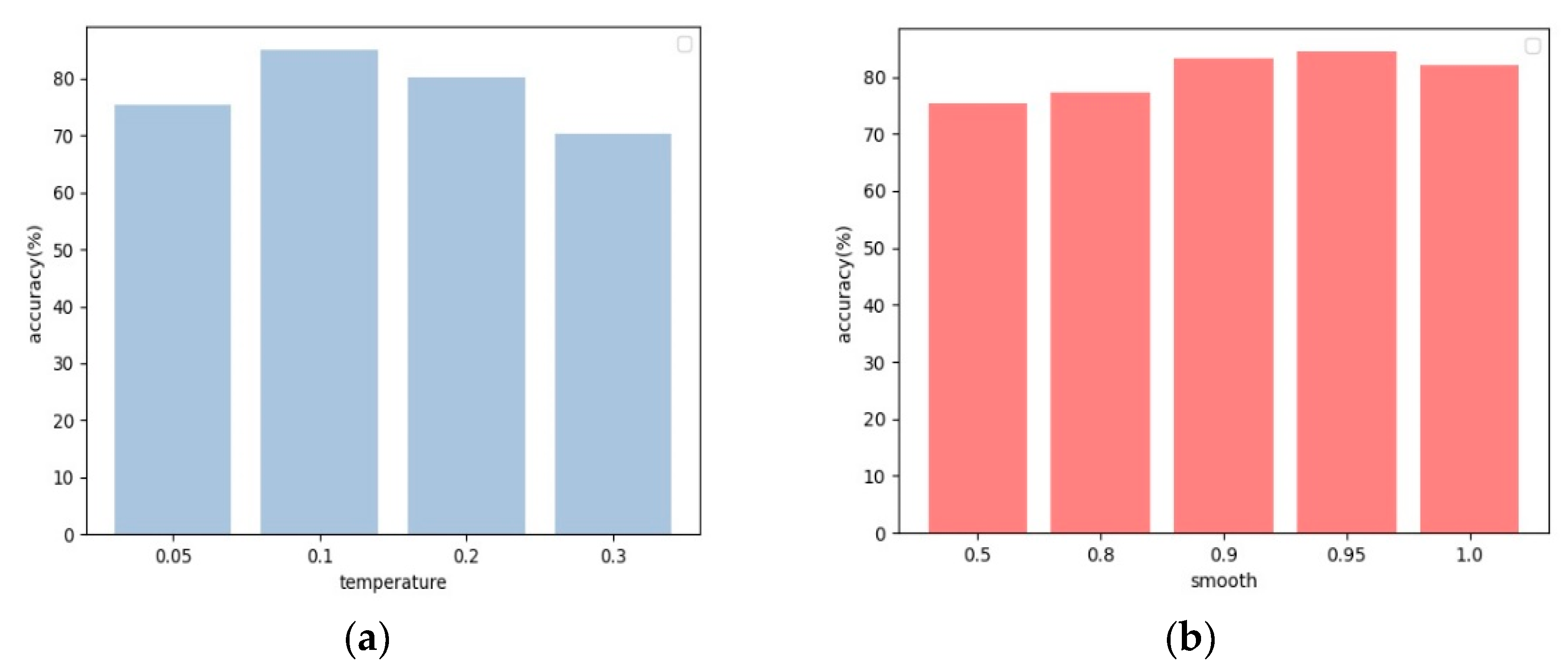

4. Experiments

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Huynh, T.; Nibali, A.; He, Z. Semi-supervised learning for medical image classification using imbalanced training data. Comput. Methods Programs Biomed. 2022, 216, 106628.

2. Kostopoulos, G.; Karlos, S.; Kotsiantis, S.; Ragos, O. Semi-supervised regression: A recent review. J. Intell. Fuzzy Syst. 2018, 35, 1483–1500.

3. Wang, K.; Wang, X.S.; Cheng, Y.H. Few-shot learning based on enhanced pseudo-labels and graded pseudo-labeled data selection. Int. J. Mach. Learn. Cybern. 2023, 14, 1783–1795.

4. Zhou, S.F.; Tian, S.W.; Yu, L.; Wu, W.D.; Zhang, D.Z.; Peng, Z.; Zhou, Z.C. Growth threshold for pseudo labeling and pseudo label dropout for semi-supervised medical image classification. Eng. Appl. Artif. Intell. 2024, 130, 107777.

5. Wang, P.; Wang, X.X.; Wang, Z.; Dong, Y.F. Learning Accurate Pseudo-Labels via Feature Similarity in the Presence of Label Noise. Appl. Sci. 2024, 14, 2759.

6. Bai, T.; Zhang, Z.; Guo, S.; Zhao, C.; Luo, X. Semi-supervised cell detection with reliable pseudo-labels. J. Comput. Biol. 2022, 29, 1061–1073.

7. Zheng, M.; You, S.; Huang, L.; Wang, F.; Qian, C.; Xu, C. SimMatch: Semi-supervised learning with similarity matching. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 14451–14461.

8. Liu, F.; Tian, Y.; Chen, Y.; Liu, Y.; Belagiannis, V.; Carneiro, G. ACPL: Anti-curriculum pseudo-labelling for semi-supervised medical image classification. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 20665–20674.

9. Komodakis, N.; Zagoruyko, S. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. In Proceedings of the 5th International Conference on Learning Representations (ICLR) 2017, Toulon, France, 24–26 April 2017.

10. Li, X.; Grandvalet, Y.; Davoine, F. Explicit inductive bias for transfer learning with convolutional networks. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 2825–2834.

11. Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A dataset for breast cancer histopathological image classification. IEEE Trans. Biomed. Eng. 2016, 63, 1455–1462.

12. Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-Ray8: Hospital-scale Chest X-Ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3462–3471.

13. Gui, Q.; Zhou, H.; Guo, N.; Niu, B. A survey of class-imbalanced semi-supervised learning. Mach. Learn. 2023, 1–30.

14. Zhang, X.; Jing, X.-Y.; Zhu, X.; Ma, F. Semi-supervised person re-identification by similarity-embedded cycle GANs. Neural Comput. Appl. 2020, 32, 14143–14152.

15. Laine, S.; Aila, T. Temporal ensembling for semi-supervised learning. arXiv 2016, arXiv:1610.02242.

16. Zheng, F.; Liu, Z.; Chen, Y.; An, J.; Zhang, Y. A novel adaptive multi-view non-negative graph semi-supervised ELM. IEEE Access 2020, 8, 116350–116362.

17. Shaik, R.U.; Unni, A.; Zeng, W. Quantum based pseudo-labelling for hyperspectral imagery: A simple and efficient semi-supervised learning method for machine learning classifiers. Rem. Sens. 2022, 14, 5774.

18. Zhu, P.; Zhang, L.; Wang, Y.; Mei, J.; Zhou, G.; Liu, F.; Liu, W.; Takis Mathiopoulos, P. Projection learning with local and global consistency constraints for scene classification. ISPRS J. Photogramm. Remote Sens. 2018, 144, 202–216.

19. Lee, D.-H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Proceedings of the ICML 2013 Workshop: Challenges in Representation Learning (WREPL), Atlanta, GA, USA, 21 June 2013; Goodfellow, I., Erhan, D., Bengio, Y., Eds.; p. 896.

20. Shi, W.; Gong, Y.; Ding, C.; Ma, Z.; Tao, X.; Zheng, N. Transductive semi-supervised deep learning using min-max features. In Proceedings of the Computer Vision–ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Berlin/Heidelberg, Germany; pp. 311–327.

21. Wu, D.; Shang, M.; Luo, X.; Xu, J.; Yan, H.; Deng, W.; Wang, G. Self-training semi-supervised classification based on density peaks of data. Neurocomputing 2018, 275, 180–191.

22. Li, X.; Huang, J.; Liu, Y.; Zhou, Q.; Zheng, S.; Schiele, B.; Sun, Q. Learning to teach and learn for semi-supervised few-shot image classification. Comput. Vision Image Underst. 2021, 212, 103270.

23. Pham, H.; Dai, Z.; Xie, Q.; Le, Q.V. Meta pseudo labels. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11552–11563.

24. Liu, K.; Liu, Z.; Liu, S. Semi-supervised breast histopathological image classification with self-training based on non-linear distance metric. IET Image Process. 2022, 16, 3164–3176.

25. Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.-L. FixMatch: Simplifying semi-supervised learning with consistency and confidence. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Online Conference, 6–12 December 2020; p. 51.

26. Wang, X.; Chen, H.; Xiang, H.; Lin, H.; Lin, X.; Heng, P.-A. Deep virtual adversarial self-training with consistency regularization for semi-supervised medical image classification. Med. Image Anal. 2021, 70, 102010.

27. Zhou, Y.; Huang, L.; Zhou, T.; Sun, H. Combating medical noisy labels by disentangled distribution learning and consistency regularization. Future Gener. Comput. Syst. 2023, 141, 567–576.

28. Xia, P.; Zhang, L.; Li, F. Learning similarity with cosine similarity ensemble. Inf. Sci. 2015, 307, 39–52.

29. Ye, H.-J.; Zhan, D.-C.; Jiang, Y.; Zhou, Z.-H. What makes objects similar: A unified multi-metric learning approach. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1257–1270.

30. Zhang, B.; Zheng, W.; Zhou, J.; Lu, J. Attributable visual similarity learning. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 7522–7531.

31. Wang, Y.; Huang, Y.; Wang, Q.; Zhao, C.; Zhang, Z.; Chen, J. Graph-based self-training for semi-supervised deep similarity learning. Sensors 2023, 23, 3944.

32. Hamrouni, L.; Kherfi, M.L.; Aiadi, O.; Benbelghit, A. Plant Leaves Recognition Based on a Hierarchical One-Class Learning Scheme with Convolutional Auto-Encoder and Siamese Neural Network. Symmetry 2021, 13, 1705.

33. Huang, L.B.; Chen, Y.S. Dual-Path Siamese CNN for Hyperspectral Image Classification With Limited Training Samples. IEEE Geosci. Remote Sens. Lett. 2021, 18, 518–522.

34. Xiao, Y.C.; Zhu, F.; Zhuang, S.X.; Yang, Y. Identification of Unknown Electromagnetic Interference Sources Based on Siamese-CNN. J. Electron. Test. 2023, 39, 597–609.

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

36. Wu, H.; Prasad, S. Semi-supervised deep learning using pseudo labels for hyperspectral image classification. IEEE Trans. Image Process. 2018, 27, 1259–1270.

37. Northcutt, C.; Jiang, L.; Chuang, I. Confident learning: Estimating uncertainty in dataset labels. J. Artif. Intell. Res. 2021, 70, 1373–1411.

38. Cascante-Bonilla, P.; Tan, F.; Qi, Y.; Ordonez, V. Curriculum labeling: Revisiting pseudo-labeling for semi-supervised learning. Proc. AAAI Conf. Artif. Intell. 2021, 35, 6912–6920.

39. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Kai, L.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

40. Phan, M.H.; Ta, T.-A.; Phung, S.L.; Tran-Thanh, L.; Bouzerdoum, A. Class similarity weighted knowledge distillation for continual semantic segmentation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 16845–16854.

41. Verma, V.; Kawaguchi, K.; Lamb, A.; Kannala, J.; Solin, A.; Bengio, Y.; Lopez-Paz, D. Interpolation consistency training for semi-supervised learning. Neural Netw. 2022, 145, 90–106.

42. Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 1195–1204.

43. Mi, W.; Li, J.; Guo, Y.; Ren, X.; Liang, Z.; Zhang, T.; Zou, H. Deep learning-based multi-class classification of breast digital pathology images. Cancer Manag. Res. 2021, 13, 4605–4617.

44. Boumaraf, S.; Liu, X.; Zheng, Z.; Ma, X.; Ferkous, C. A new transfer learning based approach to magnification dependent and independent classification of breast cancer in histopathological images. Biomed. Signal Process. Control 2021, 63, 102192.

45. Litrico, M.; Del Bue, A.; Morerio, P. Guiding pseudo-labels with uncertainty estimation for source-free unsupervised domain adaptation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7640–7650.

| Method\AP | 5% | 10% | 20% | 50% | 80% | 95% |
|---|---|---|---|---|---|---|
| Pseudo-Labeling [19] | 65.35% | 73.98% | 79.26% | 87.69% | 89.99% | 91.02% |
| ICT [41] | 69.57% | 73.75% | 81.36% | 89.03% | 90.65% | 93.44% |
| FixMatch [25] | 71.63% | 74.04% | 82.31% | 90.35% | 92.69% | 94.04% |
| Mean Teacher [42] | 70.23% | 73.66% | 81.50% | 88.93% | 90.89% | 93.29% |
| Ours | 74.28% | 77.43% | 85.02% | 92.72% | 93.65% | 95.21% |
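To read the table above at a glance, the margin of the proposed method over the strongest baseline in each column can be computed directly from the reported accuracies. The short script below is only a reading aid: the numbers are copied from the table, while the variable and function names are hypothetical.

```python
# Accuracies (%) copied from the table above, one list entry per column.
columns = ["5%", "10%", "20%", "50%", "80%", "95%"]
accuracy = {
    "Pseudo-Labeling": [65.35, 73.98, 79.26, 87.69, 89.99, 91.02],
    "ICT": [69.57, 73.75, 81.36, 89.03, 90.65, 93.44],
    "FixMatch": [71.63, 74.04, 82.31, 90.35, 92.69, 94.04],
    "Mean Teacher": [70.23, 73.66, 81.50, 88.93, 90.89, 93.29],
    "Ours": [74.28, 77.43, 85.02, 92.72, 93.65, 95.21],
}


def margin_over_best_baseline(table, ours="Ours"):
    """For each column, return (best baseline name, ours minus best baseline) in percentage points."""
    baselines = {name: vals for name, vals in table.items() if name != ours}
    margins = []
    for i, ours_val in enumerate(table[ours]):
        best_name, best_val = max(
            ((name, vals[i]) for name, vals in baselines.items()),
            key=lambda pair: pair[1],
        )
        margins.append((best_name, round(ours_val - best_val, 2)))
    return margins


if __name__ == "__main__":
    for col, (name, gap) in zip(columns, margin_over_best_baseline(accuracy)):
        print(f"{col}: +{gap:.2f} points over {name}")
```

FixMatch is the strongest baseline in every column, and the margin ranges from 0.96 points (80% column) to 3.39 points (10% column).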

| Method | Type | Percentage | Accuracy |
|---|---|---|---|
| Mi et al. [43] | Supervised | 100% | 89.67% |
| Boumaraf et al. [44] | Supervised | 100% | 92.41% |
| Ours | Semi-supervised | 80% | 93.65% |
| Litrico et al. [45] | Unsupervised | 0% | 72.98% |

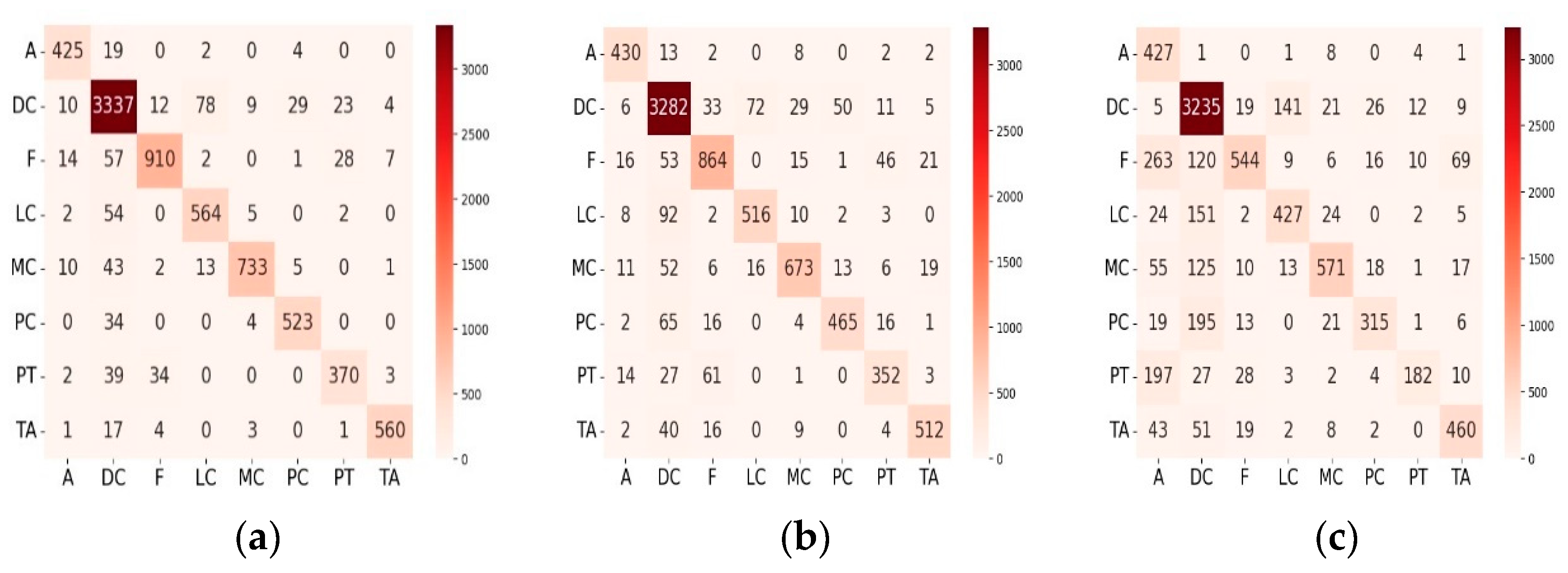

| Type of Disease | FixMatch | Ours |
|---|---|---|
| Adenosis | 90.23% | 91.59% |
| Ductal carcinoma | 90.68% | 92.69% |
| Fibroadenoma | 92.36% | 94.79% |
| Lobular carcinoma | 78.03% | 85.59% |
| Mucinous carcinoma | 93.00% | 97.21% |
| Papillary carcinoma | 90.10% | 93.06% |
| Phyllodes tumor | 89.27% | 87.67% |
| Tubular adenoma | 94.63% | 97.39% |
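The per-class comparison above is easier to interpret as differences. The snippet below, a reading aid with hypothetical variable names and values copied from the table, computes the gap between the two methods for each disease type and the unweighted mean gap.

```python
# Per-class accuracies (%) copied from the table above.
fixmatch = {
    "Adenosis": 90.23, "Ductal carcinoma": 90.68, "Fibroadenoma": 92.36,
    "Lobular carcinoma": 78.03, "Mucinous carcinoma": 93.00,
    "Papillary carcinoma": 90.10, "Phyllodes tumor": 89.27, "Tubular adenoma": 94.63,
}
ours = {
    "Adenosis": 91.59, "Ductal carcinoma": 92.69, "Fibroadenoma": 94.79,
    "Lobular carcinoma": 85.59, "Mucinous carcinoma": 97.21,
    "Papillary carcinoma": 93.06, "Phyllodes tumor": 87.67, "Tubular adenoma": 97.39,
}

# Difference in percentage points (ours minus FixMatch) for each class.
delta = {name: round(ours[name] - fixmatch[name], 2) for name in fixmatch}
# Unweighted mean over classes; class sizes are not given in the table.
mean_delta = sum(delta.values()) / len(delta)

if __name__ == "__main__":
    for name, gap in sorted(delta.items(), key=lambda item: item[1], reverse=True):
        print(f"{name}: {gap:+.2f}")
    print(f"mean: {mean_delta:+.2f}")
```

The largest gain is on lobular carcinoma (+7.56 points), and phyllodes tumor is the only class where FixMatch scores higher (by 1.60 points).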

| Method\AP | 5% | 10% | 20% | 50% | 80% | 95% |
|---|---|---|---|---|---|---|
| Pseudo-Labeling | 57.64% | 65.27% | 72.46% | 87.69% | 85.21% | 88.92% |
| ICT | 65.25% | 73.75% | 78.22% | 82.77% | 87.34% | 93.44% |
| FixMatch | 68.09% | 72.96% | 80.34% | 84.31% | 89.56% | 92.08% |
| Mean Teacher | 67.96% | 73.01% | 79.07% | 83.13% | 88.23% | 92.47% |
| Ours | 69.93% | 74.20% | 80.21% | 84.30% | 89.77% | 93.15% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, K.; Ling, S.; Liu, S. Semi-Supervised Medical Image Classification with Pseudo Labels Using Coalition Similarity Training. Mathematics 2024, 12, 1537. https://doi.org/10.3390/math12101537
