Abstract

In this paper, we consider a novel vector-valued rational interpolation algorithm and its application. Compared to the classic vector-valued rational interpolation algorithm, the proposed algorithm relaxes the constraint that the denominators of the components of the interpolation function must be identical. Furthermore, the algorithm constructs the vector-valued interpolation function component-wise, exploiting the common divisors among the denominators of the components. In experimental comparisons with the classic vector-valued rational interpolation algorithm, the proposed algorithm exhibits lower construction cost, lower degree of the interpolation function, and higher approximation accuracy on the tested problems.

MSC: 41A20

1. Introduction

Vector-valued rational interpolation, a classic topic in mathematics, has garnered significant attention due to its extensive applications in fields such as mechanical vibration, data analysis, automatic control, and image processing [1,2,3,4,5]. In this paper, we primarily focus on the univariate vector-valued rational interpolation problem. The classical vector-valued rational interpolation can be stated as follows: Given a dataset

where each vector value is associated with a distinct point . We construct a vector-valued rational function

such that

where is an s-dimensional vector of polynomials, and is a real algebraic polynomial.

For a classical univariate vector-valued rational interpolation problem, Graves-Morris [6,7] proposed a Thiele-type algorithm based on the Samelson inverse transformation for vectors. They also proved the characteristic theorem and uniqueness of this kind of vector-valued rational interpolation. Subsequently, Graves-Morris and Jenkins [8] presented a Lagrange determinant algorithm for solving the classical vector-valued rational interpolation problems. Levrie and Bultheel [9] introduced a definition of generalized continued fractions and a Thiele n-fraction algorithm designed for the classical vector-valued rational interpolation. Zhu and Zhu [10] further contributed a recursive algorithm with inheritance properties for this type of interpolation.

Among various methods, the Thiele-type vector-valued rational interpolation algorithm [6] stands out as the most classic and widely used. It is discussed under the assumption of . However, there are examples in which the rational interpolation functions constructed with this algorithm do not satisfy this assumption [11]. Therefore, this assumption limits the applicability of the algorithm and of its uniqueness theorem. Furthermore, the algorithm may stall during the computation of inverse differences. In such cases, the points must be adjusted or the vector components perturbed before recalculation, which increases the computational cost.

To make up for this deficiency, we propose a new vector-valued rational interpolation algorithm. Recognizing that vector-valued rational interpolation can be seen as a combination of multiple scalar rational interpolation problems, we construct a vector rational function in the form of

to satisfy the interpolation conditions

where a non-trivial common divisor may exist between and . This modification can expand the applicability of univariate vector-valued rational interpolation.

Based on the Fitzpatrick algorithm for univariate scalar rational interpolation, this paper develops a corresponding vector-valued version that takes full advantage of common divisors among denominators. The algorithm not only removes restrictions on the denominators of the interpolation function but also avoids the complicated calculation of inverse differences. When solving problems component-wise, we obtain denominators from the components already calculated. These denominators are then used to reduce the degree of subsequent components. This inheritance gives the algorithm significant advantages in solving vector-valued rational function recovery problems and certain applications.

The paper is organized as follows: In Section 2, we provide a detailed introduction to the algorithm established for the univariate vector-valued rational interpolation function in the form of (1). In Section 3, we discuss how to handle the vector-valued rational function recovery problem by introducing termination conditions. Finally, the application to solving positive-dimensional polynomial systems is considered.

2. Univariate Vector-Valued Rational Interpolation

In this section, we propose a univariate vector-valued rational interpolation algorithm based on the Fitzpatrick algorithm. The recursive property of the Fitzpatrick algorithm enables us to make full use of the common factors in the denominators of the already computed interpolation components, thereby reducing the degrees of the interpolation results for subsequent components. Before detailing our algorithm, we provide a brief overview of the Fitzpatrick algorithm; more details can be found in [12].

2.1. Fitzpatrick Algorithm for Univariate Scalar Rational Interpolation

Let be a field of characteristic 0. is the univariate polynomial ring over . Given a dataset

where each value is associated with a distinct point . We construct a rational function

such that

where and , for . The problem is referred to as the Cauchy-type rational interpolation problem [12].

This problem can be solved with the help of free modules and Gröbner basis methods. Let be a free module with standard basis vectors and . If for , then is called a monomial in .

Definition 1.

For the order on , the following holds:

(1) if and only if and if and only if ;

(2) if and only if ;

where ξ is a given integer.

Fixing a monomial order in , each can be expressed as a -linear combination of monomials:

where , , and . The leading term of is defined as . Additionally, the degree of the rational function is defined as , where denotes the degree of the polynomial.

Let , be the ideals in . If there exist such that

then the pair is called a weak interpolation for the univariate Cauchy-type rational interpolation problem. It is easy to verify that for each positive integer k, the subset

is a -submodule. Let . Then, we have . If a fixed monomial order in is given, and it is known that the Gröbner basis of is , then the Gröbner basis of for can be recursively computed from that of with the Fitzpatrick algorithm (Algorithm 1).

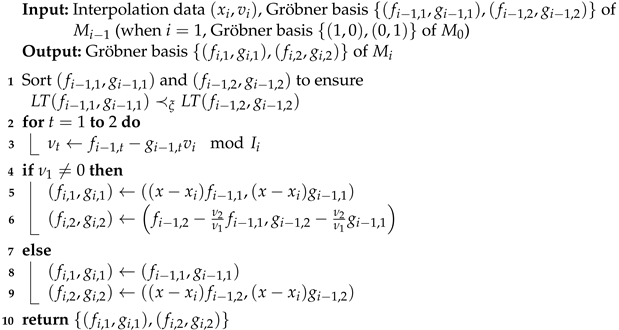

Algorithm 1: Fitzpatrick Algorithm [12].
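The recursive update can be sketched in Python as follows. This is a minimal illustration rather than a transcription of Algorithm 1: it assumes the two-generator form of the update (the generator with the smallest leading term among those violating the new condition is multiplied by the linear factor, and the other is corrected by a scalar multiple of it), and it assumes the term order "(x^i, 0) < (0, x^j) if and only if i ≤ j + ξ". The names `fitzpatrick`, `lead_key`, and the remaining helpers are hypothetical.

```python
from fractions import Fraction

def deg(p):
    """Degree of a coefficient list (lowest order first); -1 for the zero polynomial."""
    for i in range(len(p) - 1, -1, -1):
        if p[i] != 0:
            return i
    return -1

def evaluate(p, x):
    """Horner evaluation of p at x."""
    acc = Fraction(0)
    for c in reversed(p):
        acc = acc * x + c
    return acc

def axpy(p, c, q):
    """Return p - c*q as a coefficient list."""
    n = max(len(p), len(q))
    p = p + [Fraction(0)] * (n - len(p))
    q = q + [Fraction(0)] * (n - len(q))
    return [pi - c * qi for pi, qi in zip(p, q)]

def times_linear(p, x0):
    """Return (x - x0) * p."""
    out = [Fraction(0)] * (len(p) + 1)
    for i, c in enumerate(p):
        out[i + 1] += c
        out[i] -= x0 * c
    return out

def lead_key(g, xi):
    """Sort key of the leading term of the pair g = (a, b).

    Assumed order: (x^i, 0) < (0, x^j) iff i <= j + xi.
    """
    a, b = g
    da, db = deg(a), deg(b)
    if db >= 0 and (da < 0 or da <= db + xi):
        return (db + xi, 1)   # leading term lies in the second component
    return (da, 0)            # leading term lies in the first component

def fitzpatrick(points, xi=0):
    """Return two generators (a, b) with a(x_i) - y_i*b(x_i) = 0 at every point."""
    one, zero = [Fraction(1)], [Fraction(0)]
    basis = [(one, zero), (zero, one)]          # generators of the full module
    for x, y in points:
        x, y = Fraction(x), Fraction(y)
        res = [evaluate(a, x) - y * evaluate(b, x) for a, b in basis]
        active = [j for j, r in enumerate(res) if r != 0]
        if not active:
            continue                            # both generators already fit
        js = min(active, key=lambda j: lead_key(basis[j], xi))
        a_s, b_s = basis[js]
        new_basis = []
        for j, (a, b) in enumerate(basis):
            if j == js:
                new_basis.append((times_linear(a, x), times_linear(b, x)))
            else:
                c = res[j] / res[js]
                new_basis.append((axpy(a, c, a_s), axpy(b, c, b_s)))
        basis = new_basis
    return basis

# Usage: three samples of 1/(x + 1); the generator with the smaller leading
# term is taken as the interpolant.
pts = [(0, 1), (1, Fraction(1, 2)), (2, Fraction(1, 3))]
a, b = min(fitzpatrick(pts), key=lambda g: lead_key(g, 0))
print(a, b)
```

Exact rational arithmetic (`fractions.Fraction`) is used so that the test "residual equals zero" is meaningful; with floating-point data a tolerance would be needed.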

If the Gröbner basis of M is , then every pair can be expressed as

where for . For appropriate such that for ,

is a general solution to the univariate Cauchy-type rational interpolation problem.

If for all , then we can choose and . If there exists an index i such that , we can select a polynomial with degree equal to as . Additionally, we can choose an arbitrary with the restriction for all . If and are chosen in this manner, the degree can be minimized [12].

2.2. Fitzpatrick Algorithm for Univariate Vector-Valued Rational Interpolation

In the following, we discuss the univariate vector-valued rational interpolation problem where the denominators of different components share a common divisor, specifically, the vector-valued rational interpolation function in the form of (1).

First, we use a classical algorithm, such as the Fitzpatrick algorithm [12], the continued fraction method [13], or a method based on solving linear systems of equations [14,15,16], to compute . Then, computation of for can be conducted as follows. We first select such that

If there are multiple polynomials satisfying (2), we choose the one with the largest index. Assuming that the greatest common divisor of and is , there exist and such that and . Therefore, can be expressed as

Let . Then, we can establish a new rational interpolation problem:

For the interpolation problem (3), we denote its corresponding weak interpolation module by , where . Further, set

It can be easily verified that is a Gröbner basis for the module

w.r.t. , where . Clearly, , so for each , there exist , such that

If , the following expression is meaningful:

Noting that , we also have

Hence we can have

This represents a simpler rational interpolation problem. We can use the Fitzpatrick algorithm to solve this interpolation problem (6). Assuming that the solution is , we substitute and into (5) and obtain . Therefore,

Thus,

is the k-th component of the vector-valued rational interpolation function. Further observation shows that, if , the degree of the rational function is lower than that of . This property enables us to obtain a higher-degree rational function by computing the lower-degree rational interpolation function . For specific implementation details, see Algorithm 2.
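The degree reduction can be checked numerically on a small case. The sketch below is not Algorithm 2; it only verifies the substitution idea, under the assumption that the data of the k-th component are scaled by the values of an already computed denominator before interpolation. The target functions, the pair (f, g), and all helper names are hypothetical.

```python
from fractions import Fraction

def ev(p, x):
    """Horner evaluation of a coefficient list (lowest order first)."""
    acc = Fraction(0)
    for c in reversed(p):
        acc = acc * x + c
    return acc

def scaled_data(xs, ys, b):
    """Assumed transformation: multiply each sample by the known denominator b(x_i)."""
    return [y * ev(b, x) for x, y in zip(xs, ys)]

def rebuild(f, g, b, x):
    """Value of f / (g * b) at x, i.e. the reconstructed component."""
    return ev(f, x) / (ev(g, x) * ev(b, x))

# Hypothetical target: r1 = 1/(x+1), r2 = (x+3)/((x+1)(x+2)).
b1 = [1, 1]                                   # denominator x + 1 found for r1

def r2(x):
    return Fraction(x + 3) / ((x + 1) * (x + 2))

xs = [Fraction(i) for i in range(5)]
ys = [r2(x) for x in xs]
ys_scaled = scaled_data(xs, ys, b1)

# The scaled data are matched by the lower-degree pair (f, g) = (x+3, x+2),
# whose denominator has degree 1 instead of 2.
f, g = [3, 1], [2, 1]
fits_scaled = all(ev(f, x) == yt * ev(g, x) for x, yt in zip(xs, ys_scaled))
fits_original = all(rebuild(f, g, b1, x) == y for x, y in zip(xs, ys))
print(fits_scaled, fits_original)
```

In this instance the shared factor x + 1 is absorbed by the scaling, so the remaining interpolation problem has a denominator of degree one.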

Remark 1.

Due to the uncertainty of , selecting for maximum degree reduction is challenging. However, once the highest degree is chosen, the degree of the greatest common divisor between and may still be higher than that between and other denominators. From this perspective, this selection may not be optimal, but it remains reasonable.

Next, we compare our algorithm with the classical vector-valued rational interpolation algorithm in two aspects: the degree of the interpolation result and the approximation quality (see Examples 1 and 2). Since the majority of vector-valued rational interpolation algorithms are built upon Thiele’s algorithm, i.e., the univariate Thiele vector-valued rational interpolation algorithm [6], we compare our approach with Thiele’s algorithm. To evaluate the accuracy of the interpolation, we introduce the following error metric: Let the interpolation function be and the original function be . Then, the error for the k-th component is defined as . Consequently, the interpolation error vector can be expressed as

In order to quantify the error further, we define the error bound as follows:

where , for , represents the error bound for the k-th component.

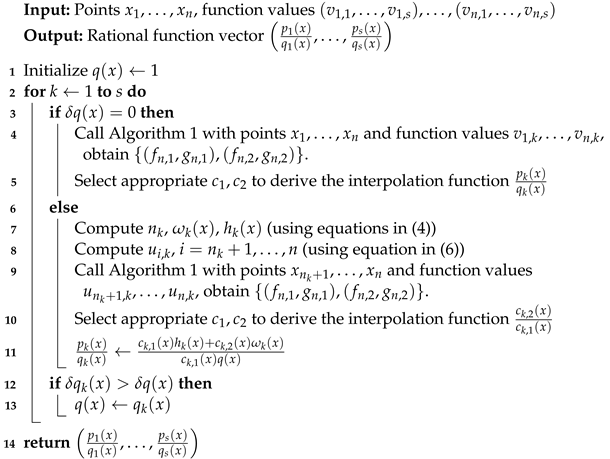

Algorithm 2: Fitzpatrick Algorithm for univariate vector-valued rational interpolation.

Example 1.

Given the vector function and its data at the points as shown in Table 1, we seek a rational interpolation function that satisfies the data in the table.

Applying Algorithm 2, we then obtain the interpolation result as follows:

In contrast, the Thiele’s algorithm gives the following interpolation result:

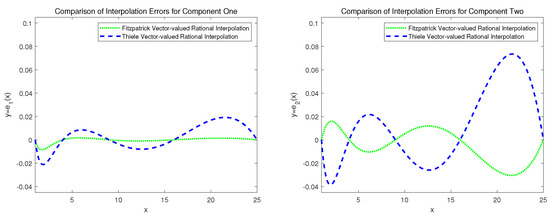

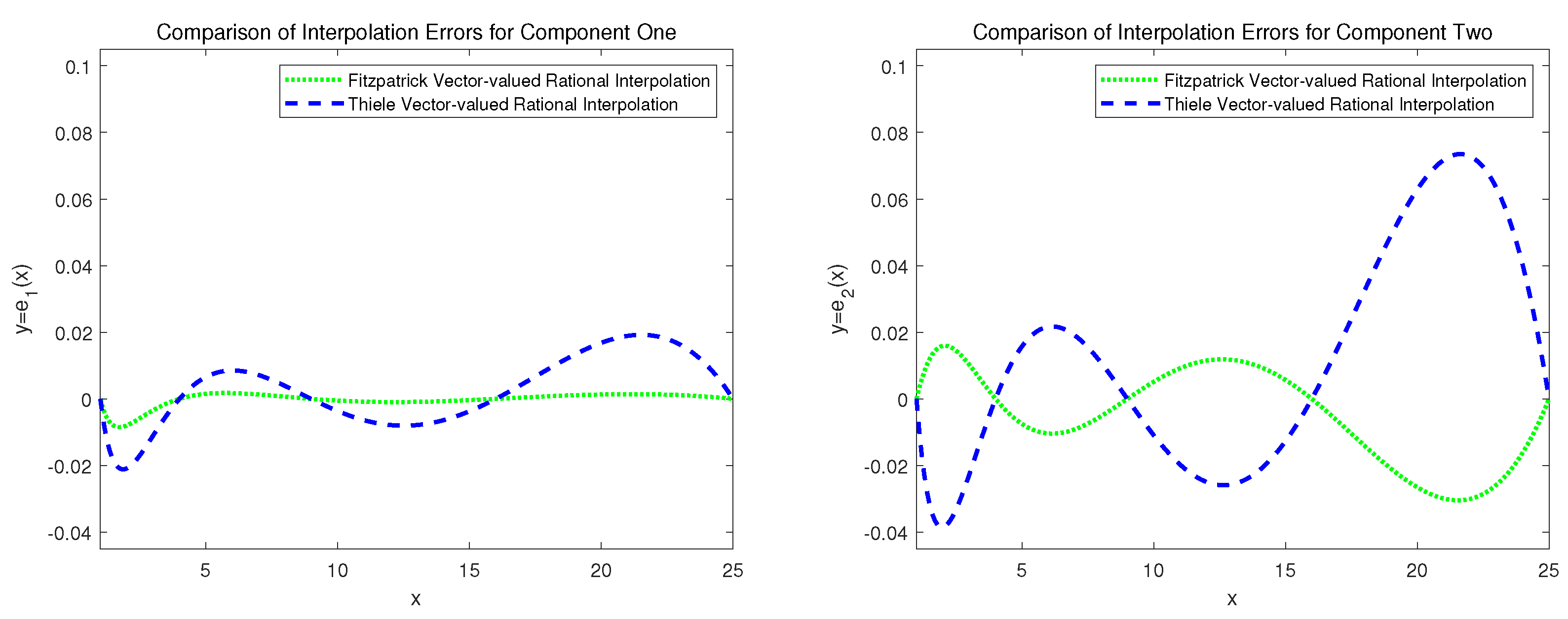

By (8), the error bound for Algorithm 2 is calculated as , and the error bound for Thiele’s algorithm is . Furthermore, we present a graphical analysis contrasting the error functions of the results produced by both algorithms for each individual component, with detailed visualizations provided in Figure 1. For this problem, Algorithm 2 outperforms the univariate Thiele’s algorithm in both solution degree and computational accuracy.

Table 1.

Vector-valued rational interpolation data.

Figure 1.

Comparison of interpolation result errors between the two algorithms.

Example 2.

For the vector functions listed in Table 2, we sample the points at the provided knot sequences (denoted as , which means a row vector starting from a and ending at b with step size h). Subsequently, we apply both Algorithm 2 and the univariate Thiele’s algorithm to calculate the interpolation functions and compare their approximation performance.
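As a small illustration of the knot notation, the following sketch expands a string of the form `a:h:b` into the corresponding list of sample points. The function name `knots` is hypothetical, and exact rational arithmetic is used so that the end point b is not lost to rounding.

```python
from fractions import Fraction

def knots(spec):
    """Expand 'a:h:b' into the list a, a+h, a+2h, ..., not exceeding b."""
    a, h, b = (Fraction(t) for t in spec.split(":"))
    if h <= 0:
        raise ValueError("step size must be positive")
    out = []
    x = a
    while x <= b:
        out.append(x)
        x += h
    return out

# Usage: the first knot sequence of Table 2.
print([float(x) for x in knots("0.4:0.4:2.8")])
```

Each knot sequence in Table 2 expands to seven sample points under this reading.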

Using (8), we determine the error bounds with respect to the results of this problem for both Algorithm 2 and the univariate Thiele’s algorithm. These error bounds are presented in the 2nd and 3rd columns of Table 3, while the degrees of the interpolation functions are listed in the 4th and 5th columns. The data indicate that, in terms of both interpolation errors and function degrees, Algorithm 2 typically exhibits superior performance compared to the univariate Thiele’s algorithm for the current problem.

Table 2.

Original functions and points.

| i | Knots | |
|---|---|---|
| 1 | 0.4:0.4:2.8 | |
| 2 | 0.2:0.2:1.4 | |
| 3 | 2.0:1.0:8.0 | |
| 4 | 0.2:0.2:1.4 | |
| 5 | 0.2:0.2:1.4 | |
| 6 | 3.0:1.0:9.0 | |
| 7 | 0.2:0.2:1.4 | |
| 8 | 2.0:1.0:8.0 | |
| 9 | 0.2:0.2:1.4 | |
| 10 | 2.0:1.0:8.0 | |

Remark 2.

The computation of is performed with built-in functions in Maple.

Example 3.

Given

let , for , and 5, be a set of vector-valued rational functions. We take , and 70, respectively. Subsequently, we apply Algorithm 2 and the univariate Thiele’s algorithm to perform the calculations and record the respective runtime (in seconds) in detail.

For various values of n, the runtime of Algorithm 2 is presented in the 2nd, 4th, 6th, and 8th columns of Table 4, while the runtime of Thiele’s algorithm is shown in the 3rd, 5th, 7th, and 9th columns. For this problem, Algorithm 2 runs faster than Thiele’s algorithm for every tested pair of s and n, and by a wide margin once n ≥ 30. Notably, as the number of sampling points, n, increases, the absolute gap in runtime between the two algorithms widens.

Table 3.

Comparison of degrees and errors between two algorithm results.

| Function | ERR (Algorithm 2) | ERR (Thiele) | Degree (Algorithm 2) | Degree (Thiele) |
|---|---|---|---|---|

Table 4.

Comparison of runtime between two algorithms.

| s | n = 10, Algorithm 2 (s) | n = 10, Thiele (s) | n = 30, Algorithm 2 (s) | n = 30, Thiele (s) | n = 50, Algorithm 2 (s) | n = 50, Thiele (s) | n = 70, Algorithm 2 (s) | n = 70, Thiele (s) |
|---|---|---|---|---|---|---|---|---|
| 2 | 0.015000 | 0.016000 | 0.062000 | 1.265000 | 0.328000 | 13.750000 | 1.578000 | 95.891000 |
| 3 | 0.016000 | 0.032000 | 0.141000 | 2.422000 | 1.391000 | 28.281000 | 10.640000 | 196.828000 |
| 4 | 0.031000 | 0.047000 | 0.250000 | 4.078000 | 4.109000 | 51.172000 | 40.469000 | 318.484000 |
| 5 | 0.032000 | 0.047000 | 0.563000 | 4.968000 | 7.938000 | 70.453000 | 108.813000 | 432.188000 |
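The runtime comparison in Table 4 can be summarized by the ratio and the absolute gap between the two algorithms. The short script below simply re-tabulates the reported runtimes; the names `RUNTIMES` and `summarize` are hypothetical, and no new measurements are involved.

```python
# Runtimes in seconds copied from Table 4: (Algorithm 2, Thiele) for n = 10, 30, 50, 70.
N_VALUES = [10, 30, 50, 70]
RUNTIMES = {
    2: [(0.015, 0.016), (0.062, 1.265), (0.328, 13.750), (1.578, 95.891)],
    3: [(0.016, 0.032), (0.141, 2.422), (1.391, 28.281), (10.640, 196.828)],
    4: [(0.031, 0.047), (0.250, 4.078), (4.109, 51.172), (40.469, 318.484)],
    5: [(0.032, 0.047), (0.563, 4.968), (7.938, 70.453), (108.813, 432.188)],
}

def summarize(runtimes, n_values):
    """Map (s, n) to (Thiele / Algorithm 2 ratio, Thiele - Algorithm 2 gap in seconds)."""
    out = {}
    for s, row in runtimes.items():
        for n, (alg2, thiele) in zip(n_values, row):
            out[(s, n)] = (thiele / alg2, thiele - alg2)
    return out

stats = summarize(RUNTIMES, N_VALUES)
for (s, n), (ratio, gap) in sorted(stats.items()):
    print(f"s={s} n={n:2d} ratio={ratio:6.2f} gap={gap:8.3f}s")
```

Read this way, the absolute gap grows with n in every row, whereas the ratio is not monotone in n for s = 4 and s = 5; at n = 70 it is roughly 60.8 for s = 2 and roughly 4.0 for s = 5.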

Remark 3.

All computations in this paper are performed on a machine with a 64-bit 1.8 GHz Intel Core i7 processor and 8 GB of RAM, running Windows 10 Enterprise and Maple 2016.

3. Univariate Vector-Valued Rational Recovery

In this section, we discuss an application of univariate vector-valued rational interpolation, specifically focusing on the problem of recovering univariate vector-valued rational functions. Let be a univariate vector-valued rational function provided by a black box with an unknown specific form. Once some is input, the black box outputs the function value . Suppose the black box function gives sufficient data

we aim to reconstruct a vector-valued rational function

such that

where for all , , , and . In this case, we consider to be the function obtained by recovering .

A common approach to solving this problem involves two procedures: applying a recursive vector-valued rational interpolation algorithm to compute for and verifying if it equals at all data points. However, this approach suffers from computational redundancy.

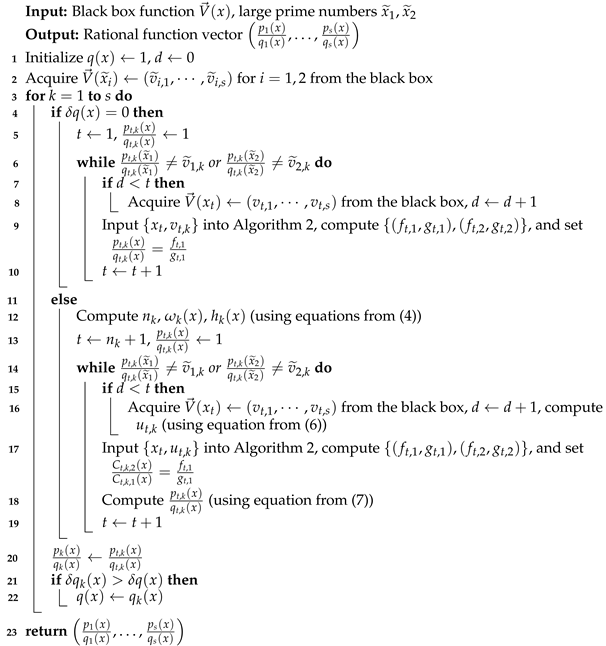

To reduce computational redundancy, we propose a novel strategy to deal with the recovery of vector-valued rational functions. Specifically, we sample at two distinct sets of points with the black-box function : a computation set , where t is adjustable; and a validation set , where and are large prime numbers. On , we apply a recursive vector-valued rational interpolation algorithm to compute the k-th component of the interpolation function. Subsequently, on , we verify if equals . If holds for , we consider that the k-th component is successfully recovered and proceed to compute the -th component with the same method. Otherwise, we increase the number of points and continue to compute based on . As our algorithm processes each component individually, we can modify Algorithm 2 accordingly to implement this procedure, which yields Algorithm 3, as follows.

Algorithm 3: Fitzpatrick Algorithm for univariate vector-valued rational recovery.
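For a single component, the termination strategy can be sketched as follows. This is an illustration under stated assumptions rather than Algorithm 3 itself: it handles one scalar component, uses a compact Fitzpatrick-style two-generator update with an assumed term order (shift ξ = 0), and does not reuse denominators from earlier components. The names `recover`, `update`, and the example black box are hypothetical.

```python
from fractions import Fraction

def ev(p, x):
    """Horner evaluation of a coefficient list (lowest order first)."""
    acc = Fraction(0)
    for c in reversed(p):
        acc = acc * x + c
    return acc

def deg(p):
    return max((i for i, c in enumerate(p) if c != 0), default=-1)

def key(g):
    """Leading-term key of the pair g = (a, b); assumed order with shift 0."""
    da, db = deg(g[0]), deg(g[1])
    return (db, 1) if da <= db else (da, 0)

def update(basis, x, y):
    """One update step enforcing a(x) - y*b(x) = 0 on both generators."""
    res = [ev(a, x) - y * ev(b, x) for a, b in basis]
    active = [j for j in (0, 1) if res[j] != 0]
    if not active:
        return basis
    s = min(active, key=lambda j: key(basis[j]))
    o = 1 - s
    c = res[o] / res[s]

    def sub(p, q):      # p - c*q
        n = max(len(p), len(q))
        return [(p[i] if i < len(p) else 0) - c * (q[i] if i < len(q) else 0)
                for i in range(n)]

    def shift(p):       # (X - x) * p
        return [(p[i - 1] if i > 0 else 0) - x * (p[i] if i < len(p) else 0)
                for i in range(len(p) + 1)]

    new = [None, None]
    new[s] = (shift(basis[s][0]), shift(basis[s][1]))
    new[o] = (sub(basis[o][0], basis[s][0]), sub(basis[o][1], basis[s][1]))
    return new

def recover(black_box, sample_points, check_points):
    """Add samples one at a time until the candidate agrees with the black box
    at every validation point; return (a, b, number of samples used)."""
    checks = [(p, black_box(p)) for p in check_points]
    basis = [([Fraction(1)], [Fraction(0)]), ([Fraction(0)], [Fraction(1)])]
    for used, x in enumerate(sample_points, start=1):
        x = Fraction(x)
        basis = update(basis, x, black_box(x))
        a, b = min(basis, key=key)          # candidate with the smaller leading term
        if all(ev(b, p) != 0 and ev(a, p) == v * ev(b, p) for p, v in checks):
            return a, b, used
    raise ValueError("not recovered with the supplied sample points")

# Usage: recover 1/(x + 1), validating at two primes.
a, b, used = recover(lambda x: Fraction(1) / (1 + x), range(10), [10007, 10009])
print(used)
```

Because the update is incremental, each additional sample costs one step, and the validation points are evaluated only on the current candidate rather than being added to the interpolation data.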

Next, we will select several sets of black box functions to test Algorithm 3. Additionally, we will compare its performance with a vector-valued rational recovery algorithm, which is derived from Thiele’s algorithm together with the termination conditions (referred to as the Thiele-type algorithm).

Example 4.

Let the black box rational function be . We perform rational recovery with Algorithm 3.

(1) Choose two large prime numbers at random. Let , , and obtain the function values

(2) Select points , and input the points sequentially into Algorithm 2 for interpolation. For , we have . One can easily check that and , then (data used is shown in Table 5).

(3) Let , then we obtain , , .

For , input the points sequentially, calculate , and interpolate with Algorithm 2, then compute . For , we have , and then compute which satisfies and , thus (data used are shown in Table 5).

Finally, the rational recovery result is

Table 5.

Vector-valued rational restoration data.

Example 5.

Given

let , for , be a set of black box vector-valued rational functions.

We compute the recovery of rational functions using both Algorithm 3 and the Thiele-type algorithm. First, two large prime numbers, and , are selected and their corresponding function values are obtained. Additionally, points for , are selected and input into the algorithms. Table 6 lists the number of points and runtime consumed by Algorithm 3 in the 2nd and 4th columns, respectively. The respective information for the Thiele-type algorithm is presented in the 3rd and 5th columns. As the vector dimension increases, the experimental results show that, in this problem, Algorithm 3 exhibits significant advantages over the Thiele-type algorithm in terms of both runtime and the number of points required.

Table 6.

Comparison of points and runtime between the two algorithms.

If there are no non-trivial common divisors among the denominators of the components, such as

Algorithm 3 can still successfully recover these functions. This indicates that Algorithm 3 is applicable not only to the specific cases where the denominators of vector-valued interpolation functions have non-trivial common divisors, but also to general vector-valued rational function recovery problems.

Next, we consider the application of the vector-valued rational recovery algorithm in solving positive-dimensional polynomial systems. Let I be a given positive-dimensional ideal, and suppose that a maximally independent set modulo I is . Let . Denote the extension of I over by ; then is a zero-dimensional ideal over . According to [17], solving for is the most critical step in solving positive-dimensional polynomial systems. Therefore, we focus on discussing the solution process of here. Let t be the separating element of the extension ideal . According to the RUR (Rational Univariate Representation) method for polynomial systems [18], the solutions of can be represented by a set of univariate polynomials over in terms of T:

That is, the solutions of I can be expressed as

The coefficients of these univariate polynomials are rational functions over , which need to be computed. To this end, let be the vector composed of all coefficients of these polynomials:

Direct symbolic computation of these rational functions is expensive. To compute them, we select appropriate points , and evaluate the generators of I at these points to obtain zero-dimensional ideals . Under certain conditions, t remains a separating element of the zero-dimensional ideal . Then, RUR of is calculated as follows:

These are univariate polynomials over in terms of T. According to reference [17], under appropriate conditions, , and if the vector of their coefficients is

then

This is equivalent to obtaining the values of the rational functions , , at the points . By applying the previous rational function recovery method, we can obtain , .

In the following examples, we incorporate the vector-valued rational function recovery method into the algorithm described in [17] in order to obtain solutions to polynomial systems.

Example 6.

Given

where I is a positive-dimensional ideal, and the maximally independent set modulo I is , thus . We first arbitrarily select two large prime numbers, and , to obtain function values. We then choose points for , and substitute into the ideal I to obtain . Taking as the separating element, we obtain the RUR of as follows:

and then extract the coefficients to form the vector

which represents the function values at point . The relevant data at point are then input into the interpolation algorithm, and and are used for validation; the data for recovery are presented in Table 7.

The recovered result is

This solution agrees with the one obtained using a different method in reference [19].

Table 7.

Coefficient data of RUR of .

| i | | |
|---|---|---|
| 1 | 3 | |
| 2 | 5 | |
| 3 | 7 | |
| 4 | 9 | |
| 5 | 11 | |

SHEPWM (Selective Harmonic Elimination Pulse Width Modulation) stands as a vital technology in medium- and high-voltage, high-power inverters, characterized not only by low switching losses but also by its ability to achieve linear control of the fundamental voltage to optimize the quality of the output voltage. Harmonic elimination theory allows us to derive a set of trigonometric algebraic equations with N switching angles, known as the SHEPWM equations. In 2004, Chiasson et al. [20] were the first to attempt transforming such trigonometric algebraic equations into a polynomial system for solution. In 2017, Shang et al. [21] further simplified these polynomial systems and proposed a method for solving them with the rational representation theory of positive-dimensional ideals.

Example 7.

The SHEPWM problem with can be solved by finding the zeros of the ideal

where I is a positive-dimensional ideal, and the maximally independent set modulo I is and . We first select two large prime numbers, and , to obtain function values. Choosing for , and taking as the separating element, we obtain the RUR of as follows:

all the coefficients form the vector

which represents the function values at point . The relevant data at point are then input into the interpolation algorithm, and and are used for validation. The data used for recovery are provided in Appendix A. The recovered result is

The solution obtained from this result agrees with the one found using a different method in reference [21].

4. Conclusions

This paper deals with univariate vector-valued rational interpolation and recovery problems in which the denominators share common divisors. We propose a univariate vector-valued rational interpolation algorithm that takes advantage of these common divisors. Specifically, it leverages the denominators obtained from previously interpolated components to reduce the degree of interpolation for subsequent ones. By incorporating termination conditions into this algorithm, a vector-valued rational recovery algorithm is derived. In the numerical experiments, the algorithm performs reliably in interpolation and recovery and offers advantages over traditional univariate Thiele-type algorithms. Additionally, we explore its application in solving positive-dimensional polynomial systems, confirming its feasibility in practical scenarios.

For bivariate or multivariate vector-valued rational interpolation problems, however, constructing the algorithm is more challenging because of the complexity of the interpolation points and function definitions. Future work aims to investigate bivariate vector-valued rational interpolation and recovery. In addition, we find in [22] that interpolation calculations can be applied to the solution of differential equations [23]; consequently, we plan to use our method to solve differential equations in the future. Finally, the idea of mapping is used in [24] in the process of solving the problem, and the method proposed in this paper for positive-dimensional ideals establishes a homomorphic image mapping between solutions in zero-dimensional space and positive-dimensional space. Our next step is therefore to explore the application of this mapping concept to other types of problems.

Author Contributions

Conceptualization, P.X. and L.X.; methodology, P.X. and L.X.; software, L.X.; validation, L.X.; formal analysis, L.X.; writing—original draft preparation, L.X.; writing—review and editing, S.Z. and P.X.; funding acquisition, P.X. and L.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Scientific Research Fund of Liaoning Provincial Education Department (No. LZD202003), the Inner Mongolia Natural Science Foundation (No. 2022MS01015), and the Doctoral Research Launch Fund of Inner Mongolia Minzu University of China (No. BS650).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Coefficient Data of RUR of

The appendix provides supplementary coefficient data related to the RUR of for Example 7.

Table A1.

Coefficient data of RUR of .

| i | | |
|---|---|---|
| 1 | 1 | |
| 2 | 2 | |
| 3 | 3 | |
| 4 | 4 | |
| 5 | 5 | |
| 6 | 6 | |
| 7 | 7 | |
| 8 | 8 | |
| 9 | 9 | |
| 10 | 10 | |
| 11 | 11 | |
| 12 | 12 | |
| 13 | 13 | |
| 14 | 14 | |
| 15 | 15 | |
| 16 | 16 | |
| 17 | 17 | |
| 18 | 18 | |

References

1. Graves-Morris, P.R.; Beckermann, B. The compass (star) identity for vector-valued rational interpolants. Adv. Comput. Math. 1997, 7, 279–294.

2. Wu, B.; Li, Z.; Li, S. The implementation of a vector-valued rational approximate method in structural reanalysis problems. Comput. Methods Appl. Mech. Eng. 2003, 192, 1773–1784.

3. Tsekeridou, S.; Cheikh, F.A.; Gabbouj, M.; Pitas, I. Vector rational interpolation schemes for erroneous motion field estimation applied to MPEG-2 error concealment. IEEE Trans. Multimed. 2004, 6, 876–885.

4. Hu, G.; Qin, X.; Ji, X.; Wei, G.; Zhang, S. The construction of λμ-B-spline curves and its application to rotational surfaces. Appl. Math. Comput. 2015, 266, 194–211.

5. He, L.; Tan, J.; Huo, X.; Xie, C. A novel super-resolution image and video reconstruction approach based on Newton-Thiele’s rational kernel in sparse principal component analysis. Multimed. Tools Appl. 2017, 76, 9463–9483.

6. Graves-Morris, P.R. Vector valued rational interpolants I. Numer. Math. 1983, 42, 331–348.

7. Graves-Morris, P.R. Vector-valued rational interpolants II. IMA J. Numer. Anal. 1984, 4, 209–224.

8. Graves-Morris, P.R.; Jenkins, C.D. Vector-valued rational interpolants III. Constr. Approx. 1986, 2, 263–289.

9. Levrie, P.; Bultheel, A. A note on Thiele n-fractions. Numer. Algor. 1993, 4, 225–239.

10. Zhu, X.; Zhu, G. A recurrence algorithm for vector valued rational interpolation. J. Univ. Sci. Technol. China 2003, 33, 15–25.

11. Wang, R.; Zhu, G. Rational Function Approximation and Its Applications; Science Press: Beijing, China, 2004; pp. 117–146.

12. Fitzpatrick, P. On the scalar rational interpolation problem. Math. Control Signal. Systems 1996, 9, 352–369.

13. Jones, W.B.; Thron, W.J. Continued Fractions: Analytic Theory and Applications; Addison-Wesley Pub. Co.: Glenview, IL, USA, 1980.

14. Kailath, T.; Kung, S.Y.; Morf, M. Displacement ranks of matrices and linear equations. J. Math. Anal. Appl. 1979, 68, 395–407.

15. Löwner, K. Über monotone Matrixfunktionen. Math. Z. 1934, 38, 177–216.

16. Gohberg, I.; Kailath, T.; Olshevsky, V. Fast Gaussian elimination with partial pivoting for matrices with displacement structure. Math. Comput. 1995, 64, 1557–1576.

17. Tan, C.; Zhang, S. Computation of the rational representation for solutions of high-dimensional systems. Commun. Math. Res. 2010, 26, 119–130.

18. Rouillier, F. Solving zero-dimensional systems through the rational univariate representation. Appl. Algebra Eng. Commun. Comput. 1999, 9, 433–461.

19. Faugère, J.C. A new efficient algorithm for computing Gröbner basis (F4). J. Pure Appl. Algebra 1999, 139, 61–88.

20. Chiasson, J.N.; Tolbert, L.M.; McKenzie, K.J.; Du, Z. A complete solution to the harmonic elimination problem. IEEE Trans. Power Electron. 2004, 19, 491–499.

21. Shang, B.; Zhang, S.; Tan, C.; Xia, P. A simplified rational representation for positive-dimensional polynomial systems and SHEPWM equations solving. J. Syst. Sci. Complex. 2017, 30, 1470–1482.

22. Djellab, N.; Boureghda, A. A moving boundary model for oxygen diffusion in a sick cell. Comput. Methods Biomech. Biomed. Eng. 2022, 25, 1402–1408.

23. Abbaszadeh, M.; Dehghan, M. A meshless numerical procedure for solving fractional reaction subdiffusion model via a new combination of alternating direction implicit (ADI) approach and interpolating element free Galerkin (EFG) method. Comput. Math. Appl. 2015, 70, 2493–2512.

24. Boureghda, A. Solution of an ice melting problem using a fixed domain method with a moving boundary. Bull. Math. Soc. Sci. Math. Roumanie 2019, 62, 341–353.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).