Stochastic Evolutionary Analysis of an Aerial Attack–Defense Game in Uncertain Environments

Abstract

1. Introduction

- (1) The micro-evolutionary mechanism of the aerial attack–defense game in uncertain environments is studied. A mathematical game model of aerial attack–defense confrontation behavior is constructed to analyze how multiple individual performance factors affect strategy selection, to explore how random uncertainties in the complex battlefield environment create risk for the game players' maneuver decisions, and to uncover how asymmetric performance and random environmental disturbances shape the micro-evolution of attack–defense decision-making.

- (2) The differences in performance and value between the two game players are analyzed, and the operational effectiveness evaluation system of the equipment is improved. Building on traditional operational effectiveness indices, dynamic factors such as time sensitivity and equipment load weight coefficients are introduced, which helps decision-makers assess equipment effectiveness more accurately and optimize resource allocation in a complex and changing battlefield environment.

- (3) This study supports the construction of a decision-making response mechanism and a game system model for aerial game missions. Beyond building and analyzing the theoretical model, it emphasizes guidance for practical management: by examining in depth how key variables influence the game players' behavior and the stable state of the system's evolution, it provides a scientific basis and theoretical support for improving the decision-making and response mechanisms of future aerial game missions.
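Item (2) describes extending a traditional effectiveness index with time-sensitivity and load-weight factors. The snippet below is a minimal sketch of that idea under stated assumptions: it treats the basic probability of the whole operational process as the product of the detection, penetration-survival, hit, and destruction probabilities listed in the parameter table, and it models time sensitivity as an exponential discount on reaction time. Both functional forms, the function names, and all numbers are illustrative assumptions, not the paper's equations.

```python
import math


def whole_process_probability(p_detect: float, p_survive: float,
                              p_hit: float, p_destroy: float) -> float:
    """Chain the four stage probabilities of one engagement.

    Assumption: the stages (detection, penetration survival, hit after
    penetration, destruction after hit) are independent, so the overall
    probability is their product.
    """
    for p in (p_detect, p_survive, p_hit, p_destroy):
        if not 0.0 <= p <= 1.0:
            raise ValueError("stage probabilities must lie in [0, 1]")
    return p_detect * p_survive * p_hit * p_destroy


def effectiveness_index(p_process: float, sensitivity: float,
                        reaction_time: float, load_weight: float) -> float:
    """Hypothetical time-sensitive, load-weighted effectiveness index.

    Assumption: effectiveness decays exponentially with reaction time at
    rate `sensitivity`, and the load weight coefficient scales the result.
    """
    time_factor = math.exp(-sensitivity * reaction_time)
    return load_weight * p_process * time_factor


# Made-up values for an air-to-air missile, for illustration only.
p_missile = whole_process_probability(0.9, 0.8, 0.7, 0.9)
e_missile = effectiveness_index(p_missile, sensitivity=0.1,
                                reaction_time=5.0, load_weight=0.6)
print(f"process probability {p_missile:.4f}, effectiveness {e_missile:.4f}")
```

The exponential form is only one possible choice; a linear or threshold penalty on reaction time would slot into `effectiveness_index` in the same way.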

2. Evolutionary Game Modeling

2.1. Problem Description and Parameterization

2.2. Model Assumptions and Payment Matrix

- (1) If the red player chooses independent defense and the blue player also attacks independently, the two players are symmetric and equal at the strategy level. The blue player obtains the equal gain from independent attack and incurs the flight basis cost and the single missile strike cost; the red player obtains the equal gain from independent defense and incurs the flight basis cost and the single laser strike cost.

- (2) If the red player chooses independent defense and the blue player chooses coordinated attack, the two players are asymmetric and unequal at the strategy level, with the blue player in an advantageous position. The blue player obtains the advantageous gain from coordinated attack and incurs the flight basis cost, the network collaboration cost, and the single missile strike cost; the red player obtains the disadvantage gain from independent defense and incurs the flight basis cost and the single laser strike cost.

- (3) If the red player chooses coordinated defense and the blue player attacks independently, the two players are asymmetric and unequal at the strategy level, with the red player in a dominant position. The blue player obtains the disadvantage gain from independent attack and incurs the flight basis cost and the single missile strike cost; the red player obtains the advantageous gain from coordinated defense and incurs the flight basis cost, the network collaboration cost, and the single laser strike cost.

- (4) If the red player chooses coordinated defense and the blue player chooses coordinated attack, the two players are symmetric and equal at the strategy level. The blue player obtains the equal gain from coordinated attack and incurs the flight basis cost, the network collaboration cost, and the single missile strike cost; the red player obtains the equal gain from coordinated defense and incurs the flight basis cost, the network collaboration cost, and the single laser strike cost.
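The four strategy profiles above can be assembled into a 2×2 payoff matrix and fed into the standard two-population replicator dynamics. The sketch below is a minimal illustration under stated assumptions: each net payoff is taken, as an assumption, to be the profile's gain minus its costs (independent strategies pay the flight basis and single strike costs, coordinated strategies additionally pay the network collaboration cost); `x` and `y` are hypothetical names for the shares of blue players choosing coordinated attack and red players choosing coordinated defense; and every numerical value is made up. It covers only the deterministic dynamics and does not reproduce the paper's own expected-payoff expressions.

```python
from typing import Dict, Tuple

# Strategy labels: "I" = independent, "C" = coordinated.
# A profile is (blue strategy, red strategy).
Profile = Tuple[str, str]


def blue_payoffs(eq_ind: float, adv_coord: float, dis_ind: float,
                 eq_coord: float, flight: float, collab: float,
                 strike: float) -> Dict[Profile, float]:
    """Net payoff (gain minus costs) of the blue player for each profile."""
    ind_cost = flight + strike             # independent attack
    coord_cost = flight + collab + strike  # coordination adds collaboration cost
    return {
        ("I", "I"): eq_ind - ind_cost,       # symmetric, both independent
        ("C", "I"): adv_coord - coord_cost,  # blue holds the advantage
        ("I", "C"): dis_ind - ind_cost,      # red holds the advantage
        ("C", "C"): eq_coord - coord_cost,   # symmetric, both coordinated
    }


def red_payoffs(eq_ind: float, adv_coord: float, dis_ind: float,
                eq_coord: float, flight: float, collab: float,
                strike: float) -> Dict[Profile, float]:
    """Net payoff of the red player, keyed the same way as blue_payoffs."""
    ind_cost = flight + strike
    coord_cost = flight + collab + strike
    return {
        ("I", "I"): eq_ind - ind_cost,
        ("C", "I"): dis_ind - ind_cost,      # red independent, disadvantaged
        ("I", "C"): adv_coord - coord_cost,  # red coordinated, dominant
        ("C", "C"): eq_coord - coord_cost,
    }


def replicator(x: float, y: float, B: Dict[Profile, float],
               R: Dict[Profile, float]) -> Tuple[float, float]:
    """Two-population replicator dynamics, returning (dx/dt, dy/dt).

    x: share of blue choosing coordinated attack.
    y: share of red choosing coordinated defense.
    """
    blue_gap = ((y * B[("C", "C")] + (1 - y) * B[("C", "I")])
                - (y * B[("I", "C")] + (1 - y) * B[("I", "I")]))
    red_gap = ((x * R[("C", "C")] + (1 - x) * R[("I", "C")])
               - (x * R[("C", "I")] + (1 - x) * R[("I", "I")]))
    return x * (1 - x) * blue_gap, y * (1 - y) * red_gap


def simulate(x0: float, y0: float, B: Dict[Profile, float],
             R: Dict[Profile, float], dt: float = 0.01,
             steps: int = 2000) -> Tuple[float, float]:
    """Forward-Euler integration of the replicator dynamics."""
    x, y = x0, y0
    for _ in range(steps):
        dx, dy = replicator(x, y, B, R)
        x, y = x + dt * dx, y + dt * dy
    return x, y


# Hypothetical gains and costs, chosen only for illustration.
B = blue_payoffs(6, 10, 4, 7, flight=1, collab=1, strike=1)
R = red_payoffs(5, 8, 3, 6, flight=1, collab=1.5, strike=1)
print(simulate(0.5, 0.5, B, R))
```

With these made-up numbers coordination pays for both sides at every population mixture, so the trajectory starting from (0.5, 0.5) approaches (1, 1); other parameter choices can make independent strategies, or mixed outcomes, stable instead.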

3. Stochastic Evolutionary Game Modeling

3.1. Stochastic Evolutionary Game

3.2. Equilibrium Solving

3.3. System Stability Analysis

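- (1) Let $V(t,x)$ satisfy $c_1|x|^p \le V(t,x) \le c_2|x|^p$ for positive constants $c_1, c_2$ and all $t \ge 0$. If there is a positive constant $\lambda$ such that $LV(t,x) \le -\lambda V(t,x)$ for all $t \ge 0$, then the zero solution of the stochastic differential Equation (10) is $p$th moment exponentially stable, and $E|x(t,x_0)|^p \le \frac{c_2}{c_1}|x_0|^p e^{-\lambda t}$ holds.

- (2) For the same $V(t,x)$, if there is a positive constant $\lambda$ such that $LV(t,x) \ge \lambda V(t,x)$ for all $t \ge 0$, then the zero solution of the stochastic differential Equation (10) is $p$th moment exponentially unstable, and $E|x(t,x_0)|^p \ge \frac{c_1}{c_2}|x_0|^p e^{\lambda t}$ holds.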

- (a) When , then , and .

- (b) When , then , and .

- (c) When , then , and .

- (d) When , then , and .
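The $p$th moment stability criterion in items (1) and (2) can be checked numerically on the simplest possible case. The sketch below assumes that, near an equilibrium, the stochastic replicator equation behaves like the linear equation $dx = a x\,dt + \sigma x\,d\omega(t)$ with multiplicative Gaussian white noise; this linearization, the function names, and all parameter values are illustrative assumptions, not the paper's Equation (10). Taking the Lyapunov function $V(x) = |x|$ with $p = 1$ (so both bracketing constants equal 1) gives $LV = aV$ for $x \ne 0$, hence any $a < 0$ satisfies the stability condition with $\lambda = -a$, and an Euler–Maruyama estimate of $E|x(T)|$ should stay close to $|x_0| e^{aT}$.

```python
import math
import random


def euler_maruyama_linear(a: float, sigma: float, x0: float, t_end: float,
                          dt: float, rng: random.Random) -> float:
    """Simulate dx = a*x dt + sigma*x dW with Euler-Maruyama; return x(t_end)."""
    x = x0
    steps = int(round(t_end / dt))
    sqrt_dt = math.sqrt(dt)
    for _ in range(steps):
        dw = rng.gauss(0.0, sqrt_dt)  # Brownian increment ~ N(0, dt)
        x += a * x * dt + sigma * x * dw
    return x


def first_moment(a: float, sigma: float, x0: float, t_end: float,
                 dt: float = 2e-3, paths: int = 2000, seed: int = 1) -> float:
    """Monte Carlo estimate of E|x(t_end)| over independent paths."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(paths):
        total += abs(euler_maruyama_linear(a, sigma, x0, t_end, dt, rng))
    return total / paths


# V(x) = |x|, p = 1, c1 = c2 = 1: LV = a*V for x != 0, so a < 0 meets the
# stability condition with lambda = -a.
a, sigma, x0, t_end = -1.0, 0.5, 0.5, 1.0
estimate = first_moment(a, sigma, x0, t_end)
bound = abs(x0) * math.exp(a * t_end)
print(f"E|x(T)| ~ {estimate:.4f}; bound |x0|*exp(a*T) = {bound:.4f}")
```

For this linear equation the bound is attained in expectation ($E\,x(T) = x_0 e^{aT}$ for $x_0 > 0$), so the Monte Carlo estimate should agree with it up to sampling and discretization error. Rerunning with $a > 0$ illustrates the unstable case of item (2) instead.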

4. Numerical Simulation Analysis

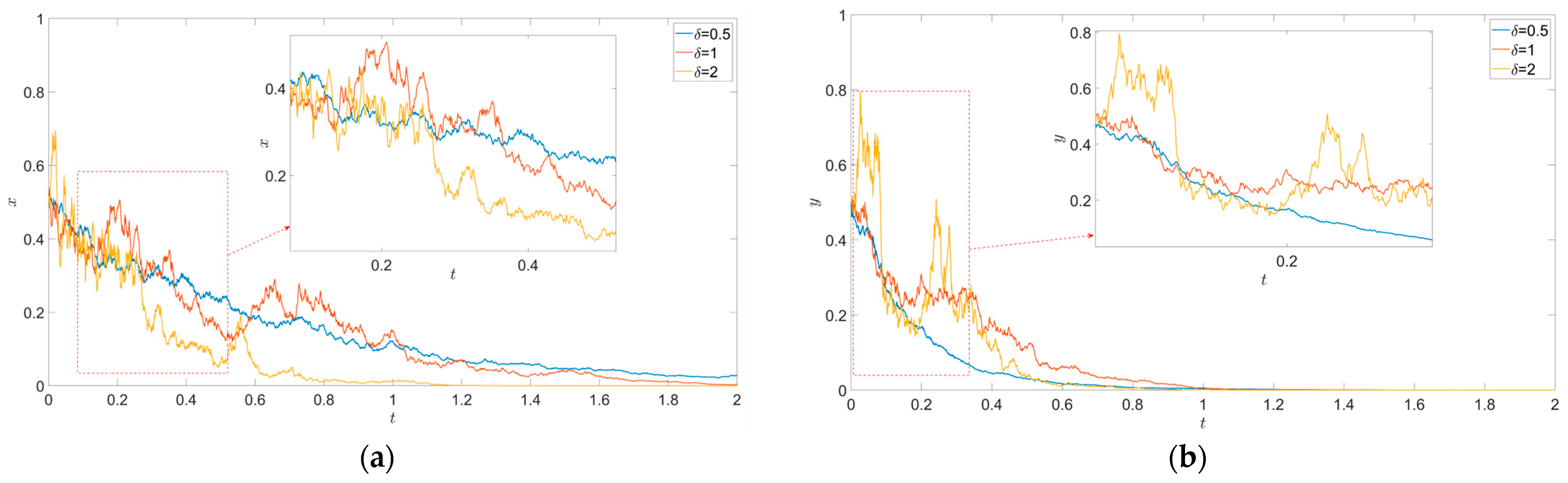

4.1. System Stability Verification

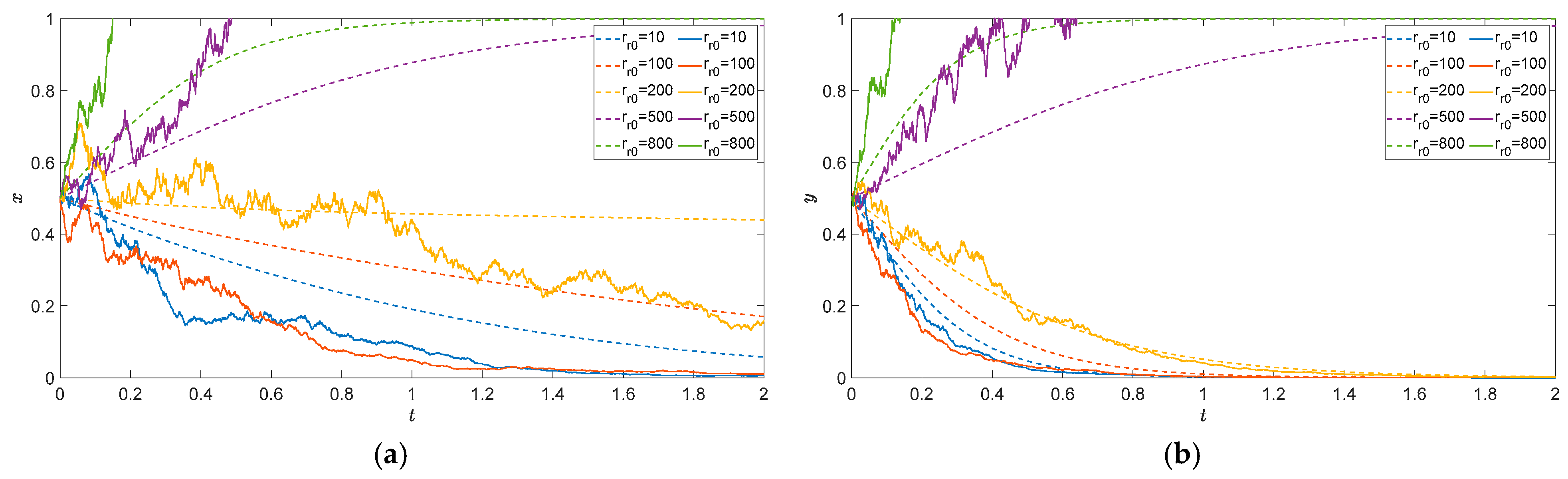

4.2. System Sensitivity Analysis

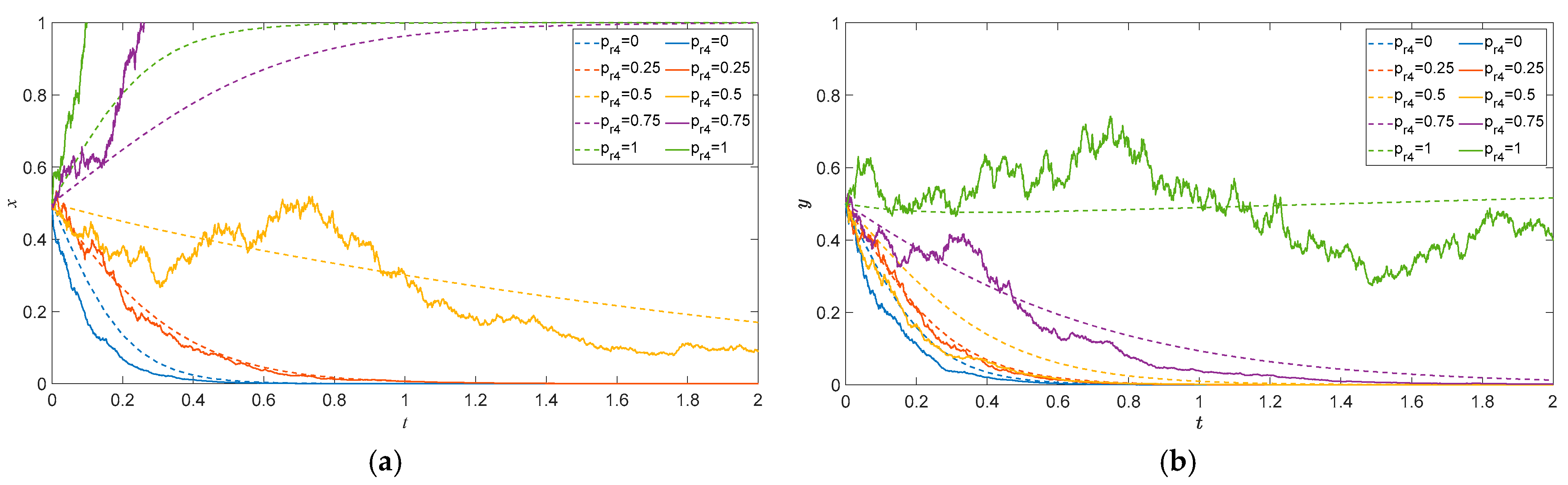

- (1)

- Basic probability of equipment operation in total process .

- (2)

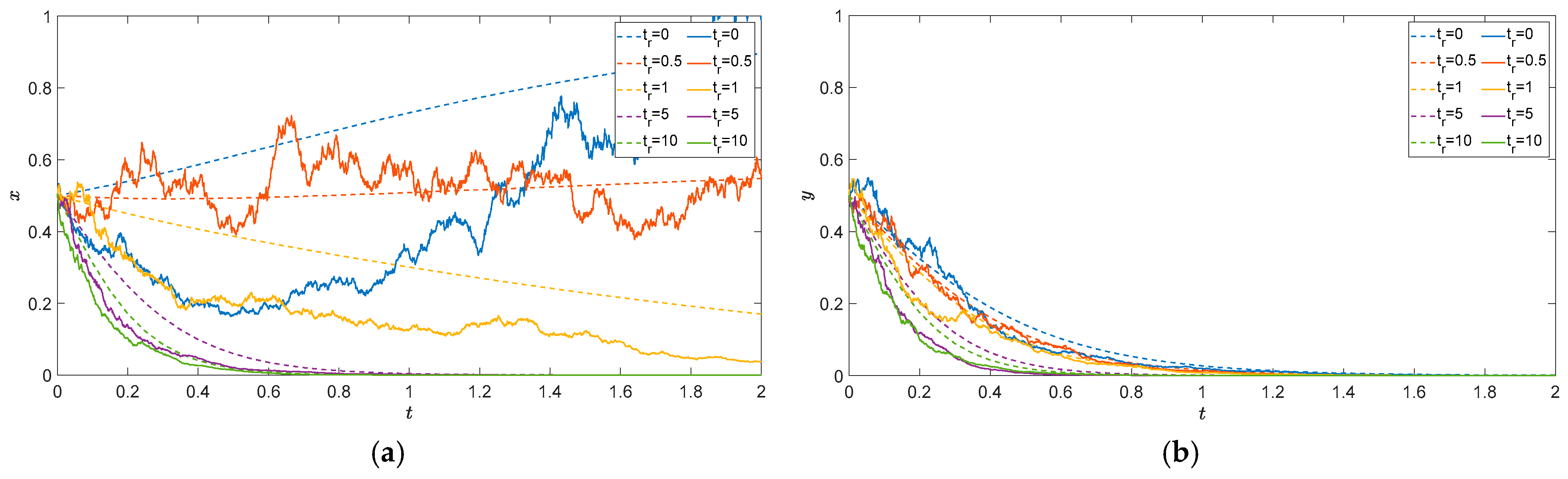

- Equipment reaction time, .

- (3)

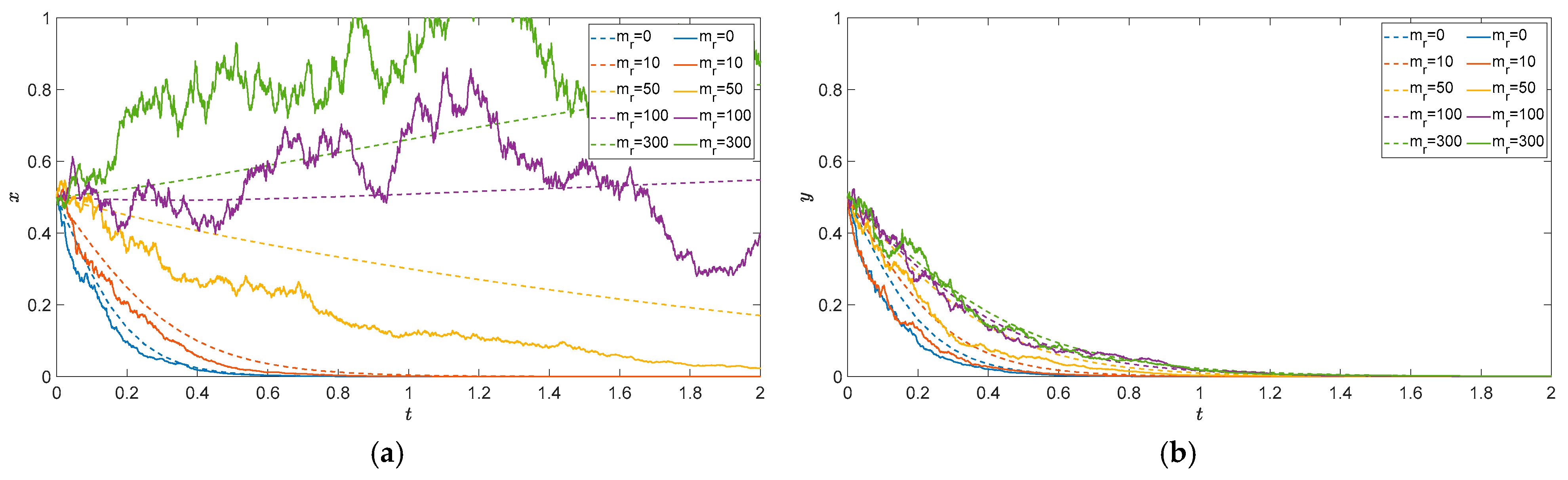

- Sustained Strike Capability, , of the Equipment

- (4)

- The inherent value of the target .

- (5)

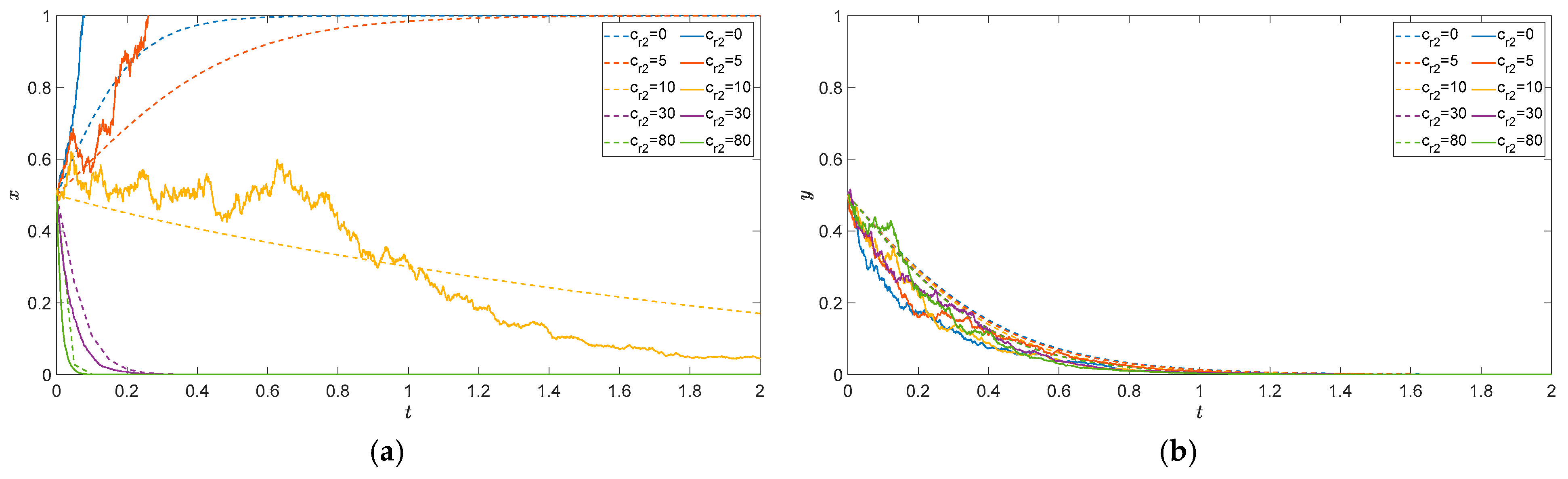

- Network collaboration cost .
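A one-at-a-time sensitivity sweep of the kind listed above can be sketched as follows. The example varies only the blue player's network collaboration cost and records how long the blue player's share of coordinated attack takes to reach 0.99 under deterministic replicator dynamics; a `None` result marks a reversal of blue's strategy choice. The payoff values, the constant red-side payoff gap, the threshold, and the function names are all hypothetical and chosen only to make the effect visible.

```python
from typing import Optional


def blue_gap(y: float, collab_cost: float) -> float:
    """Payoff advantage of coordinated over independent attack for blue.

    Hypothetical payoffs: gains 6 (equal, independent), 10 (advantageous,
    coordinated), 4 (disadvantaged, independent), 7 (equal, coordinated);
    flight + strike cost 2; coordination adds `collab_cost`.
    y is the share of red choosing coordinated defense.
    """
    coord = y * (7 - 2 - collab_cost) + (1 - y) * (10 - 2 - collab_cost)
    indep = y * (4 - 2) + (1 - y) * (6 - 2)
    return coord - indep


RED_GAP = 1.5  # hypothetical constant advantage of coordinated defense


def time_to_converge(collab_cost: float, x0: float = 0.5, y0: float = 0.5,
                     dt: float = 0.01, t_max: float = 50.0,
                     threshold: float = 0.99) -> Optional[float]:
    """Time for blue's coordination share x to reach `threshold`.

    Returns None when x never gets there within t_max, which signals that
    the parameter change has reversed blue's strategy choice.
    """
    x, y, t = x0, y0, 0.0
    while t < t_max:
        if x >= threshold:
            return t
        dx = x * (1 - x) * blue_gap(y, collab_cost)
        dy = y * (1 - y) * RED_GAP
        x, y, t = x + dt * dx, y + dt * dy, t + dt
    return None


for c in (0.5, 1.0, 2.0, 3.5):
    print(c, time_to_converge(c))
```

With these made-up numbers, raising the collaboration cost lengthens the convergence time and, past a certain level, reverses blue's choice: qualitatively the kind of change in outcome and rate that the sensitivity analysis examines.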

5. Conclusions

- (1) The performance and value indices of the two players strongly influence the strategy evolution of both game players, changing not only the outcome of strategy selection but also the rate of strategy evolution. In terms of outcomes, the basic probability of the equipment's whole operational process and the inherent value of the target significantly affect the evolution results of both players and can reverse their strategy choices, whereas equipment reaction time, sustained strike capability, and network collaboration cost mainly affect the player that owns the parameter and have less effect on the opponent. In terms of evolution rate, the basic probability of the whole operational process slows the evolution of both players; equipment reaction time, sustained strike capability, and target inherent value comparatively slow the evolution of the parameter owner; and equipment reaction time, sustained strike capability, and network collaboration cost comparatively accelerate the evolution of the opposing player.

- (2) Random environmental factors disturb the strategy evolution of the game players to some degree. They usually accelerate strategy evolution, driving the players toward equilibrium sooner, and they strongly shape the evolution process. Near the transition point where a strategy is about to change, random environmental factors cause sharp fluctuations in strategy choice, seriously affecting the players' decisions.

- (3) Traditional evolutionary game theory does not describe random dynamic environments and therefore cannot reflect the uncertainty and random dynamics of a complex battlefield. The stochastic evolutionary game model captures the random external environment and describes the evolution of the players' behavioral strategies more accurately, giving it broader applicability and greater practical value.

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Definition | Parameter | Definition |
|---|---|---|---|
| | basic probability of the whole process of air-to-air missile operations | | basic probability of the whole process of laser operations |
| | probability of the red target being detected by the blue player | | probability of the blue target being detected by the red player |
| | penetration survival probability of the air-to-air missile | | penetration survival probability of the laser |
| | probability of the air-to-air missile hitting the target after penetration | | probability of the laser hitting the target after penetration |
| | destruction probability of the air-to-air missile after hitting the target | | destruction probability of the laser after hitting the target |
| | response time sensitivity parameter of the air-to-air missile | | response time sensitivity parameter of the laser |
| | reaction time of the air-to-air missile striking the target | | reaction time of the laser striking the target |
| | load weight coefficient of the air-to-air missile | | load weight coefficient of the laser |
| | ammunition-carrying capacity of the blue player | | sustained strike capability of the red player |
| | strategy revenue correction factor | | discount rate for independent strategy returns |
| | flight basis cost of the blue player | | flight basis cost of the red player |
| | network collaboration cost of the blue player cluster | | network collaboration cost of the red player cluster |
| | single missile strike cost of the blue player | | single laser strike cost of the red player |
| | inherent value income of the blue player | | inherent value income of the red player |
| | equal gain from independent attack of the blue player | | equal gain from independent defense of the red player |
| | advantageous gain from coordinated attack of the blue player | | advantageous gain from coordinated defense of the red player |
| | disadvantage gain from independent attack of the blue player | | disadvantage gain from independent defense of the red player |
| | equal gain from coordinated attack of the blue player | | equal gain from coordinated defense of the red player |

| Strategy Combination | Blue Player | ||

|---|---|---|---|

| red player | |||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, S.; Ru, L.; Lu, B.; Wang, Z.; Wang, W.; Xi, H. Stochastic Evolutionary Analysis of an Aerial Attack–Defense Game in Uncertain Environments. Mathematics 2024, 12, 3050. https://doi.org/10.3390/math12193050

Hu S, Ru L, Lu B, Wang Z, Wang W, Xi H. Stochastic Evolutionary Analysis of an Aerial Attack–Defense Game in Uncertain Environments. Mathematics. 2024; 12(19):3050. https://doi.org/10.3390/math12193050

Chicago/Turabian Style: Hu, Shiguang, Le Ru, Bo Lu, Zhenhua Wang, Wenfei Wang, and Hailong Xi. 2024. "Stochastic Evolutionary Analysis of an Aerial Attack–Defense Game in Uncertain Environments" Mathematics 12, no. 19: 3050. https://doi.org/10.3390/math12193050

APA Style: Hu, S., Ru, L., Lu, B., Wang, Z., Wang, W., & Xi, H. (2024). Stochastic Evolutionary Analysis of an Aerial Attack–Defense Game in Uncertain Environments. Mathematics, 12(19), 3050. https://doi.org/10.3390/math12193050