Abstract

This paper explores the iterative approximation of solutions to equilibrium problems and proposes a simple proximal point method for addressing them. We incorporate the golden ratio technique as an extrapolation method, resulting in a two-step iterative process. This method is self-adaptive and does not require any Lipschitz-type conditions for implementation. We present and prove a weak convergence theorem along with a sublinear convergence rate for our method. The results extend some previously published findings from Hilbert spaces to 2-uniformly convex Banach spaces. To demonstrate the effectiveness of the method, we provide several numerical illustrations and compare the results with those from other methods available in the literature.

Keywords:

variational inequalities; golden ratio technique; Lyapunov function; Banach space; self-adaptive stepsize

MSC:

47H09; 49J25; 65K10; 90C25

1. Introduction

In this paper, we focus on studying an iterative approximation of the Equilibrium Problem (EP), which is defined by the following condition:

where is a bifunction, and is a closed and convex subset of a real Banach space E. We denote the solution set of the EP by whenever the problem is consistent. The introduction of the EP is attributed to Blum and Oettli [], with additional contributions from [] in the early 1990s. Both of these works are based on the minimax inequality considered by Ky Fan []. As presented in this form, the EP serves as a unified framework for studying several optimization and fixed-point problems, including variational inequalities, complementarity problems, saddle point problems, and Nash equilibrium problems, among others.
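For orientation, the condition referred to above is usually written as follows in the formulation of Blum and Oettli. The symbols $C$ for the feasible set, $f$ for the bifunction, $x^*$ for a solution, and $A$ for an operator are names assumed here for illustration. The second display sketches how a variational inequality arises as a special case.

```latex
% Equilibrium problem in its standard form (symbol names are assumptions)
\text{find } x^* \in C \ \text{ such that } \ f(x^*, y) \ge 0 \quad \text{for all } y \in C,
\qquad f : C \times C \to \mathbb{R}.

% Special case: taking f(x, y) = \langle A x, y - x \rangle for an operator A : C \to E^*
% turns the condition into the variational inequality
\langle A x^*, y - x^* \rangle \ge 0 \quad \text{for all } y \in C.
```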

In recent years, the EP has attracted significant interest from various researchers, leading to the development of several prominent solution methods. Notable among these are gap function methods [], auxiliary problem principle methods [], and proximal point methods [,]. The proximal method, in particular, has garnered considerable attention as a means of obtaining approximate solutions to the EP [,]. This method is based on the auxiliary problem principle initially introduced by Cohen [] and later adapted to the EP in real Banach space by Mastroeni []. It is important to note that the proximal method is particularly effective when the bifunction is strongly monotone or monotone. In 2008, Tran et al. [] extended the proximal point method for solving the equilibrium problem (EP) under the assumption that the bifunction is pseudomonotone and satisfies a Lipschitz-type condition. This proposed method, also known as the extragradient method, is a two-step proximal point method that builds on Korpelevich’s extragradient method (see []) within the EP framework.

It is worth mentioning that the construction of the extragradient method by Tran et al. [] requires satisfying a Lipschitz-type condition, particularly regarding the selection of the scaling parameter , which must fall in the range of , where and are the Lipschitz-type constants of the bifunction. Since then, numerous extensions and modifications of this method have emerged in various directions (see [,,,,,]).

Hieu [], drawing from the subgradient technique developed by Censor et al. [,], introduced a subgradient extragradient method to solve the EP associated with a pseudomonotone operator. In this approach, the second optimization subproblem is altered by replacing the feasible set with a constructible half-space. Similar to the method proposed by Tran et al. [], Hieu’s method [] also relies on knowledge of the Lipschitz condition of the cost function. In the context of 2-uniformly convex Banach spaces, Ogbuisi [] proposed a Popov subgradient extragradient method for approximating solutions to the equilibrium problem (EP). He established a weak convergence theorem under the condition that the scaling parameter satisfies specific requirements related to Lipschitz-type constants and . However, the reliance of these methods on the Lipschitz constant poses significant limitations, especially due to the nonlinear nature of the function, thus restricting the applicability of these methods.

To address this limitation, Yang and Liu [,] introduced a self-adaptive subgradient extragradient method. In their approach, the scaling parameter is updated using a formula that begins with a known initial point, thereby enhancing the scope of the subgradient extragradient method. Jolaoso and Aphane [] proposed two subgradient extragradient methods for solving the EP. The first method, similar to Ogbuisi’s, relies on the Lipschitz-type condition. However, in their second method, they utilized the self-adaptive technique, extending the work of Yang and Liu [,] to the framework of 2-uniformly convex Banach spaces. It is important to note that both the extragradient method and its subgradient versions require solving a strongly convex optimization problem twice per iteration, which can be time-consuming and memory-intensive.

Recently, Hoai [] introduced a modified proximal point algorithm based on the golden ratio technique within the framework of a real Hilbert space. A noteworthy feature of this method is that it requires solving only one strongly convex optimization problem per iteration. This improvement over the original proximal point method is attributed to the incorporation of the golden ratio. The golden ratio method was initially proposed by Malitsky [] for solving mixed variational inequalities, and it has recently been studied as an extrapolation technique to enhance the convergence properties of iterative algorithms (see [,] and the references therein). To generate a sequence using this method, an extra step , which is a weighted average of the current and the previous scaled by , is introduced. Due to the improvements observed in the convergence properties of iterative methods, researchers have increasingly considered the application of this technique (see [,,,]).
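The extra averaging step described above can be illustrated with a minimal Euclidean sketch of the fixed-step golden ratio iteration for a monotone variational inequality. This is not Algorithm 1 of this paper: it works in a Hilbert space, uses a fixed step instead of a self-adaptive one, and the names `golden_ratio_vi`, `F`, `project`, and `step` are assumptions made for the illustration. The step is assumed not to exceed $\varphi/(2L)$ for an $L$-Lipschitz monotone operator.

```python
import numpy as np

PHI = (1 + np.sqrt(5)) / 2  # golden ratio


def golden_ratio_vi(F, project, x0, step, iters=1000):
    """Fixed-step golden ratio iteration for a monotone variational inequality.

    Illustrative Euclidean sketch only: F, project, and step are assumed names,
    and step should not exceed PHI / (2 * L) for an L-Lipschitz monotone F.
    """
    x = np.asarray(x0, dtype=float)
    x_bar = x.copy()  # previous averaged point, initialised at x0
    for _ in range(iters):
        # Extra step: convex combination of the current iterate and the
        # previous averaged point, with weights (PHI - 1)/PHI and 1/PHI.
        x_bar = ((PHI - 1) * x + x_bar) / PHI
        # Only one projection (one strongly convex subproblem) per iteration.
        x = project(x_bar - step * F(x))
    return x


if __name__ == "__main__":
    A = np.array([[0.0, 1.0], [-1.0, 0.0]])  # skew matrix: monotone, L = 1
    sol = golden_ratio_vi(
        F=lambda x: A @ x,
        project=lambda x: np.clip(x, -5.0, 5.0),  # projection onto a box
        x0=[1.0, 0.5],
        step=PHI / 2,
    )
    print(np.linalg.norm(sol))  # distance to the solution x* = 0
```

The skew operator in the demo is a convenient test case because the plain forward (projected gradient) iteration does not converge for it, whereas the averaged iteration does.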

Building upon the works of Hoai [], Ogbuisi [], and Jolaoso [], we introduce a simple proximal point method based on the golden ratio technique for solving the EP in the context of 2-uniformly convex Banach spaces. This method, which combines proximal point and golden ratio approaches, is self-adaptive and eliminates the need for prior knowledge of the Lipschitz-type constants of the associated pseudomonotone operator. Using the proposed method, we establish and prove a weak convergence theorem, along with a sublinear rate of convergence for the method.

The manuscript is structured as follows: In Section 2, we review important definitions and results, and we derive analogs of two well-known results from Hilbert spaces. The main result is presented in Section 3, which begins with key assumptions and an introduction to the methodology. Section 4 contains numerical illustrations that demonstrate the performance of the method, along with comparisons to previously published results. Finally, we conclude the manuscript with a summary in the last section.

2. Preliminary Results

In this section, we revisit several foundational definitions and relevant lemmas. Let be a closed and convex subset of a real Banach space E with norm . We denote the dual space of E as and the duality pairing between elements of E and as . The weak convergence of a sequence to a point is expressed as .

Let be the normalized duality mapping defined by

We also define the Lyapunov functional as follows (see []):

From the definition of , it is evident that

We will also require the following property of the functional (see [,]):

Also in [], Alber introduced the generalized projection operator defined by

The generalized projection operator satisfies the following property as given in the next result.

Lemma 1

([]). Let be a closed and convex subset of a smooth, strictly convex, and reflexive real Banach space If and then

and

In the case where is a real Hilbert space, then and reduces to the metric projection (see []).
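The Hilbert-space reduction can be checked numerically in a finite-dimensional $\ell_p$ space. The sketch below assumes the standard forms of the normalized duality mapping, $J(x)_i = \|x\|_p^{2-p}\,|x_i|^{p-1}\operatorname{sign}(x_i)$, and of the Lyapunov functional, $\phi(x, y) = \|x\|^2 - 2\langle x, Jy\rangle + \|y\|^2$. The function names `p_norm`, `duality_map`, and `lyapunov` are hypothetical.

```python
import numpy as np


def p_norm(x, p):
    """l_p norm of a finite vector."""
    return float(np.sum(np.abs(x) ** p) ** (1.0 / p))


def duality_map(x, p):
    """Normalized duality mapping of finite-dimensional l_p, 1 < p < infinity."""
    n = p_norm(x, p)
    if n == 0.0:
        return np.zeros_like(x)
    return n ** (2 - p) * np.abs(x) ** (p - 1) * np.sign(x)


def lyapunov(x, y, p):
    """phi(x, y) = ||x||^2 - 2 <x, Jy> + ||y||^2 in l_p (assumed standard form)."""
    return p_norm(x, p) ** 2 - 2.0 * float(x @ duality_map(y, p)) + p_norm(y, p) ** 2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x, y = rng.normal(size=6), rng.normal(size=6)
    # p = 2: J is the identity and phi(x, y) equals ||x - y||^2.
    print(lyapunov(x, y, 2), np.linalg.norm(x - y) ** 2)
    # p = 3: phi lies between (||x|| - ||y||)^2 and (||x|| + ||y||)^2.
    print(lyapunov(x, y, 3))
```

For $p = 2$ the functional coincides with the squared distance, which is why the generalized projection becomes the metric projection in that case.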

Definition 1.

Let be a closed and convex subset of E and let be a bifunction. Then, f is

- (1)

- strongly monotone, if there exists a positive constant γ, such that

- (2)

- monotone, if

- (3)

- strongly pseudomonotone, if there exists a positive constant γ, such that

- (4)

- pseudomonotone, if

- (5)

- (see Theorem 3.1(v), []). Lipschitz-type on if there exists a positive constant We note that the above inequality corresponds to where

- Clearly
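For reference, the standard forms of conditions (1) to (4) are collected below, together with the two-constant Lipschitz-type condition of the kind mentioned in the Introduction. The symbols $C$, $f$, $\gamma$, $c_1$, and $c_2$ are assumed names, and the exact form of the Lipschitz-type condition used in item (5) may differ from the one shown.

```latex
% Standard monotonicity notions for a bifunction f : C \times C \to \mathbb{R};
% each line is required for all x, y \in C (symbol names are assumptions).
\begin{aligned}
&\text{(1) strongly monotone:}       && f(x,y) + f(y,x) \le -\gamma \|x - y\|^2, \\
&\text{(2) monotone:}                && f(x,y) + f(y,x) \le 0, \\
&\text{(3) strongly pseudomonotone:} && f(x,y) \ge 0 \ \Longrightarrow\ f(y,x) \le -\gamma \|x - y\|^2, \\
&\text{(4) pseudomonotone:}          && f(x,y) \ge 0 \ \Longrightarrow\ f(y,x) \le 0.
\end{aligned}

% Implications among the four notions
(1) \Rightarrow (2) \Rightarrow (4), \qquad (1) \Rightarrow (3) \Rightarrow (4).

% Two-constant Lipschitz-type condition, for all x, y, z \in C
f(x,y) + f(y,z) \ \ge\ f(x,z) - c_1 \|x - y\|^2 - c_2 \|y - z\|^2 .
```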

Denote the unit sphere and unit ball of a real Banach space E by and , respectively. The modulus of convexity of E is the function defined by

A Banach space E is said to be uniformly convex if for any and 2-uniformly convex if there exists a constant , such that for any It is obvious that every 2-uniformly convex Banach space is uniformly convex. The Banach space E is said to be strictly convex if for every with The modulus of smoothness of E is the function defined by

The Banach space E is said to be uniformly smooth if and 2-uniformly smooth if there exists a constant , such that Also, E is said to be smooth if the limit

exists for all It is well known that every 2-uniformly smooth Banach space is uniformly smooth. See [,] for more on the geometry of Banach spaces.
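The two moduli can be made concrete with their standard definitions and a worked Hilbert-space example. The symbols $S_E$, $\delta_E$, $\rho_E$, and $H$ are assumed names. The example shows that every Hilbert space is both 2-uniformly convex and 2-uniformly smooth.

```latex
% Standard definitions (S_E denotes the unit sphere of E)
\delta_E(\epsilon) = \inf\Big\{ 1 - \Big\| \frac{x + y}{2} \Big\| : x, y \in S_E,\ \|x - y\| \ge \epsilon \Big\},
\qquad \epsilon \in [0, 2],

\rho_E(\tau) = \sup\Big\{ \frac{\|x + \tau y\| + \|x - \tau y\|}{2} - 1 : x, y \in S_E \Big\},
\qquad \tau \ge 0.

% Worked example in a Hilbert space H: by the parallelogram law,
% \|x + y\|^2 = 4 - \|x - y\|^2 for unit vectors x, y, hence
\delta_H(\epsilon) = 1 - \sqrt{1 - \epsilon^2/4} \ \ge\ \frac{\epsilon^2}{8},
\qquad
\rho_H(\tau) = \sqrt{1 + \tau^2} - 1 \ \le\ \frac{\tau^2}{2}.
```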

Lemma 2

([]). Let E be a smooth and uniformly convex real Banach space, let and be sequences in If either or is bounded and as then as

Lemma 3

([]). Let E be a 2-uniformly convex Banach space. Then, there exists a constant , such that

Lemma 4

([]). Let The space E is q-uniformly smooth if and only if its dual is p-uniformly convex.

We use the following definitions in the sequel. The normal cone to a set at a point is given by

Let be a function. The subdifferential of h at s is defined by

Remark 1.

Observe from the definitions of the subdifferential and that

Lemma 5

(Chapter 7, Section 7.2, []). Let be a convex subset of a Banach space E and let be a convex, subdifferentiable and lower semicontinuous function. Furthermore, suppose that the function h satisfies the following regularity condition:

Then, is a solution to the following convex optimization problem

if and only if

Let be a closed and convex subset of a real Banach space and let be a convex function. Let be a scaling parameter. The proximal mapping of h is defined by

Remark 2.

From (Chapter 3, Section 3.2, []) it is known that is single-valued. By the definition of and Lemma 5, we obtain

Indeed, by Remark 1 and Lemma 5, we have by setting that

Then, there exists and , such that

As we have for all It follows from (3), that

Then, from we obtain
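In the Hilbert-space special case, the characterization of the proximal point can be checked numerically. The sketch below assumes the Euclidean proximal mapping $\operatorname{prox}_{\lambda h}(x) = \arg\min_y \{\lambda h(y) + \tfrac12\|y - x\|^2\}$ with $h = \|\cdot\|_1$ and an unconstrained feasible set. It verifies the inequality $\lambda\,(h(y) - h(z)) \ge \langle x - z,\, y - z\rangle$ for $z = \operatorname{prox}_{\lambda h}(x)$. The function names are hypothetical, and the Banach-space version in Remark 2 involves the duality mapping, which is not modeled here.

```python
import numpy as np


def prox_l1(x, lam):
    """Euclidean proximal mapping of h(y) = ||y||_1 (soft-thresholding):
    argmin_y { lam * ||y||_1 + 0.5 * ||y - x||^2 }."""
    return np.sign(x) * np.maximum(np.abs(x) - lam, 0.0)


def prox_inequality_gap(x, y, lam):
    """Return lam * (h(y) - h(z)) - <x - z, y - z> with z = prox_l1(x, lam).

    The variational characterization of the proximal point says that this
    quantity is nonnegative for every y.
    """
    z = prox_l1(x, lam)
    return lam * (np.abs(y).sum() - np.abs(z).sum()) - float((x - z) @ (y - z))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    x = rng.normal(size=5)
    gaps = [prox_inequality_gap(x, rng.normal(size=5), 0.7) for _ in range(1000)]
    print(min(gaps) >= -1e-12)
```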

To establish the weak convergence, we need the following lemmas, which are analogs of results obtained previously in Hilbert spaces []. First, we obtain the analog of the renowned Opial's Lemma for the Lyapunov functional.

Lemma 6.

Let be a sequence in E such that Then for all

Proof.

From (1), we have that

As for all it follows from the fact that J is weakly-weakly continuous that thus

as required. □

Lemma 7.

Let be a nonempty, closed, and convex subset of a 2-uniformly convex Banach space E and let be a sequence in E such that

- (i)

- exists;

- (ii)

- every sequential weak limit point of belongs to

Then, converges weakly to a point in

Proof.

We proceed by contradiction. Assume that there exist at least two weak accumulation points p and q, such that Let be a subsequence of such that Then, by Lemma 6, we have that

In a similar manner, we can show that , which is a contradiction. Hence and thus we conclude that converges weakly to a unique point of □

Lemma 8

([]). Let and be nonnegative sequences satisfying

where m is some nonnegative integer. Then as and the limit of exists.

3. Main Result

In this section, we present our main results. First, we prove the following crucial result.

Lemma 9.

Let and and let then

Proof.

From the definition of and we have

Now observe that

Similarly,

The conclusion follows. □

Remark 3.

If and is a real Hilbert space, then the conclusion of Lemma 9 simplifies to the Euclidean identity
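For reference, a convex-combination identity of this kind, routinely used in golden ratio analyses in Hilbert spaces, is recorded below with its one-line verification. The symbols $x$, $y$, and $\alpha$ are assumed names, and whether this is exactly the form intended in Remark 3 is an assumption.

```latex
% Identity valid in any real Hilbert space, for all x, y and all real \alpha
\|\alpha x + (1 - \alpha) y\|^2
  = \alpha \|x\|^2 + (1 - \alpha) \|y\|^2 - \alpha (1 - \alpha) \|x - y\|^2 .

% Verification: expand both sides; each equals
\alpha^2 \|x\|^2 + 2 \alpha (1 - \alpha) \langle x, y \rangle + (1 - \alpha)^2 \|y\|^2 .
```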

Next, we state the following iterative method (see Algorithm 1) and assumptions.

Algorithm 1: Self-adaptive inertial GRAAL for EP

Assumption 1.

Suppose that the following conditions hold:

- (A1)

- The feasible set is a closed and convex subset of

- (A2)

- The bifunction is pseudomonotone with and satisfies the Lipschitz-type condition (2).

- (A3)

- is convex, lower semicontinuous and subdifferentiable on for each

- (A4)

- The solution set .

Lemma 10.

Let be the sequence of iterates generated by Algorithm 1 and suppose that condition (A2) holds. Then and are bounded and bounded away from 0.

Proof.

It is clear from (12), that for all From condition A2, we have that there exists , such that

Let and such that Now, assume that this holds for It follows from that

or

We infer from both cases that is bounded by and separated from zero. Clearly, □

Lemma 11.

Suppose the conditions of Assumption 1 hold. Then,

- (i)

- the limit exists;

- (ii)

- the sequences and are bounded.

Proof.

From Remark 2 and (11), we have

Similarly, we have

Again from (11), we have which implies

Thus,

Multiplying this through by and adding to (15), we obtain

Using this estimate in (19), we obtain

As and we have that which implies by the pseudomonotonicity of that Hence, we obtain

Hence,

Using the update (12), one has

Again from (11), we have that

It follows from Lemma 9, that

and thus

By combining this with (24), we obtain

Rearranging the above, we have

Again from Lemma 9 and we have that

From this and the last two terms of (26), one obtains

Thus, we obtain from (26), that

It follows from Lemma 10, that for all Now, since then there exists such that for all Thus, there exists such that Now, set

We obtain easily that (28) becomes

Next, we claim that is bounded. To see this, we obtain from (1), that

Observe that

Hence,

which implies that Therefore, the sequence is bounded and as It follows from this and (27), that as We obtain by Lemma 3, that as From Lemma 9 and we have

Again by Lemma 3, we obtain

As is bounded, we infer that

Thus, we have established that the limits of exist. Therefore, is bounded. Consequently, the sequences and are bounded. □

In the next result, we shall show that each weak subsequential limit of is in

Lemma 12.

Let and be the sequences generated by Algorithm 1 under Assumption 1. Assume is a subsequence of , such that then

Proof.

By Lemma 11, we have that is bounded, thus there exists a sub-sequence of such that Recall from (13), that

As f has a Lipschitz-type property, one has

We obtain by this and multiplying (18) by that

As we obtain by passing to the limit as in (36), that Hence, □

Theorem 1.

Let and be sequences generated by Algorithm 1. Then, and converge weakly to a point in

Proof.

From Lemma 12, we have that the set of weak accumulation points of lies in We have also established in Lemma 11 that the limit exists. We conclude by Lemma 7 and (31), that and both converge weakly to □

Sublinear Rate

As part of our convergence result, we show that our method converges sublinearly by using as the measure of the rate of convergence.

Theorem 2.

Under the conditions of Assumption 1 and

Proof.

It follows from that

This implies

with

We obtain from and that

Hence,

and the conclusion follows. □
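The passage from a summable bound to a rate of order $1/n$ is the standard averaging argument, sketched below in generic form. The nonnegative quantities $a_k$ and the constant $M$ are placeholder symbols assumed here; they stand for the chosen convergence measure and the bound obtained from the telescoped inequality.

```latex
% If a_k \ge 0 and the partial sums are bounded by a constant M, then
\sum_{k=1}^{n} a_k \le M
\quad\Longrightarrow\quad
\min_{1 \le k \le n} a_k \ \le\ \frac{1}{n} \sum_{k=1}^{n} a_k \ \le\ \frac{M}{n},
% that is, the best iterate among the first n satisfies an O(1/n) bound.
```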

4. Numerical Example

In this section, we report some numerical illustrations of our proposed method in comparison with some previously announced methods in the literature. All codes were written in MATLAB 2024a and run on a personal Dell laptop with 8 GB of RAM and 256 GB of storage.

Example 1.

We consider the following Nash Equilibrium problem initially solved in [], with the bifunction defined by defined on a feasible set given by

Let the matrices and c be defined, respectively, by

Then, one can see easily that the matrix B is positive semidefinite and is negative semidefinite. Hence, f is monotone and thus pseudomonotone with a Lipschitz constant We will compare the performance of our proposed Algorithm 1 with that of Hoai et al. [] (Algorithm 3.1, HTV Alg. 3.1), Jolaoso [] (Algorithm 3.1), Vinh and Muu [] (Algorithm 1, VM Alg. 1), and Xie et al. [] (Algorithm 3, XCT Alg. 3). We consider the following set of initial parameters and points:

- IP 1.

- First set of initial parameters and points for each algorithm.

- Algorithm 1: , and .

- HTV Alg. 3.1 , and .

- J Alg. 3.1 and .

- VM Alg. 1 and .

- XCT Alg. 3 and .

- IP 2.

- Second set of initial parameters and points for each algorithm.

- Algorithm 1: were same as in IP 1 and, and .

- HTV Alg. 3.1 , and .

- J Alg. 3.1 were same as in IP 1 and, .

- VM Alg. 1 were same as in IP 1 and, .

- XCT Alg. 3 were same as in IP 1 and, .

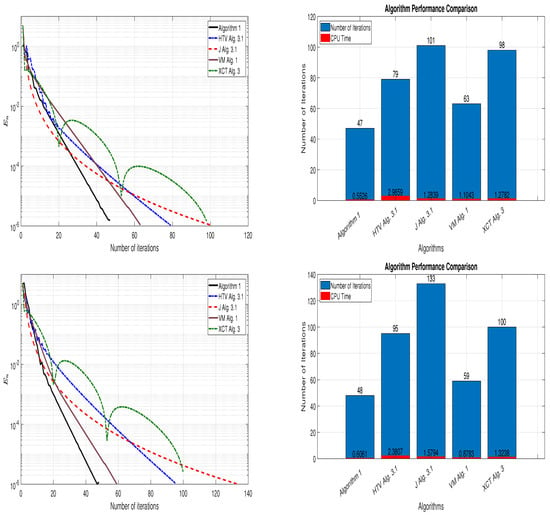

Setting a maximum number of iterations 3000, for each algorithm, the simulation is continued until or is not satisfied. The results of the numerical experiment are presented in Table 1 and Figure 1.
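The monotonicity claim in Example 1 can be checked numerically under the assumption that the bifunction has the affine form $f(x, y) = \langle Ax + By + c,\, y - x\rangle$ commonly used for this Nash equilibrium model. In that case $f(x, y) + f(y, x) = \langle (B - A)(x - y),\, x - y\rangle$, so $f$ is monotone when $B - A$ is negative semidefinite. The matrices below are small hypothetical data chosen for illustration, not the data of the experiment, and the function names are assumptions.

```python
import numpy as np


def bifunction(x, y, A, B, c):
    """Affine-type bifunction f(x, y) = <A x + B y + c, y - x> (assumed form)."""
    return float((A @ x + B @ y + c) @ (y - x))


def is_negative_semidefinite(M, tol=1e-12):
    """Check negative semidefiniteness through the symmetric part of M."""
    sym = 0.5 * (M + M.T)
    return bool(np.max(np.linalg.eigvalsh(sym)) <= tol)


if __name__ == "__main__":
    # Hypothetical 2 x 2 data (not the matrices used in the experiment).
    A = np.array([[3.0, 1.0], [1.0, 3.0]])
    B = np.array([[1.5, 0.5], [0.5, 1.5]])
    c = np.array([1.0, -1.0])
    print(is_negative_semidefinite(B - A))  # sufficient for monotonicity
    rng = np.random.default_rng(2)
    x, y = rng.normal(size=2), rng.normal(size=2)
    # Monotonicity: f(x, y) + f(y, x) should be nonpositive.
    print(bifunction(x, y, A, B, c) + bifunction(y, x, A, B, c) <= 0.0)
```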

Table 1.

Numerical performance of all algorithms in Example 1.

Figure 1.

Norm of successive iterates () for the algorithms and the data in Table 1. Top: IP 1 and Bottom: IP 2.

Next, we will consider an example in the setting of the classical Banach space to support our main theorem.

Example 2.

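Let $E = \ell_3(\mathbb{R})$ be the space of cubic summable sequences given by

$$\ell_3(\mathbb{R}) := \Big\{ x = (x_1, x_2, \ldots),\ x_i \in \mathbb{R} : \sum_{i=1}^{\infty} |x_i|^3 < \infty \Big\},$$

with norm $\|x\| = \big(\sum_{i=1}^{\infty} |x_i|^3\big)^{1/3}$ for all $x \in E$. It is known that E is a Banach space, which is not a Hilbert space. Define the feasible set by and let the bifunction be defined by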

It is clear that f is pseudomonotone but not monotone and f satisfies the Lipschitz-type condition with In this example, we will only compare the performance of our proposed Algorithm 1 and Jolaoso [] (Algorithm 3.1) because other algorithms were established in the setting of Hilbert spaces. For Algorithm 1 and J Alg. 3.1, we use the same set of parameters considered in Example 1. We consider the following initial points:

- IP 1.

- .

- IP 2.

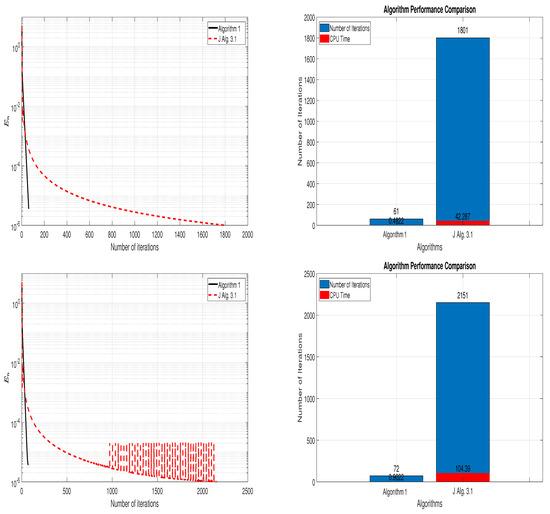

Table 2. Numerical performance of all algorithms in Example 2.


Figure 2. Norm of successive iterates () for the algorithms and the data in Table 2. Top: IP 1 and Bottom: IP 2.

5. Conclusions

In this manuscript, we address the problem of finding approximate solutions to equilibrium problems in Banach spaces. We propose a modified proximal point algorithm that utilizes the golden ratio technique when the cost mapping is pseudomonotone. This method is self-adaptive, meaning that it does not require prior knowledge of the Lipschitz property of the operator for its control parameter. The construction of our method involves solving a single convex optimization problem in each iteration. We present and prove two convergence results: one weak convergence result and one sublinear convergence result. Lastly, we provide two numerical examples, including one conducted in a Banach space, to demonstrate the advantages of our proposed method compared to previous approaches in the literature.

Author Contributions

Conceptualization, O.K.O.; Investigation, H.A.A. and S.P.M.; Methodology, O.K.O.; Software, O.K.O. and A.A.; Validation, H.A.A. and S.P.M.; Writing—review & editing, O.K.O. and A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no competing interests.

References

- Blum, E.; Oettli, W. From optimization and variational inequalities to equilibrium problems. Math. Stud. 1994, 63, 123–145.

- Muu, L.; Oettli, W. Convergence of an adaptive penalty scheme for finding constrained equilibria. Nonlinear Anal. 1992, 18, 1159–1166.

- Fan, K. A Minimax Inequality and Its Application. In Inequalities; Shisha, O., Ed.; Academic: New York, NY, USA, 1972; Volume 3, pp. 103–113.

- Mastroeni, G. Gap function for equilibrium problems. J. Glob. Optim. 2003, 27, 411–426.

- Mastroeni, G. On Auxiliary Principle for Equilibrium Problems; Series Nonconvex Optimization and Its Applications; Springer: Berlin/Heidelberg, Germany, 2003; Volume 68, pp. 289–298.

- Moudafi, A. Proximal point algorithm extended to equilibrium problem. J. Nat. Geom. 1999, 15, 91–100.

- Flam, S.D.; Antipin, A.S. Equilibrium programming and proximal-like algorithms. Math. Program. 1997, 78, 29–41.

- Cohen, G. Auxiliary problem principle and decomposition of optimization problems. J. Optim. Theory Appl. 1980, 32, 277–305.

- Tran, D.Q.; Muu, L.D.; Hien, N.V. Extragradient algorithms extended to equilibrium problems. Optimization 2008, 57, 749–776.

- Korpelevich, G. An extragradient method for finding saddle points and for other problems. Ekon. Mat. Metody 1976, 12, 747–756.

- Anh, P.N.; An, L.T.H. New subgradient extragradient methods for solving monotone bilevel equilibrium problems. Optimization 2019, 68, 2099–2124.

- Anh, P.N.; An, L.T.H. The subgradient extragradient method extended to equilibrium problems. Optimization 2015, 64, 225–248.

- Hieu, D.V. Halpern subgradient extragradient method extended to equilibrium problems. Rev. Real Acad. Cienc. Exactas Físicas Nat. Ser. Matemáticas 2017, 111, 823–840.

- Khanh, P.Q.; Thong, D.V.; Vinh, N.T. Versions of the subgradient extragradient method for pseudomonotone variational inequalities. Acta Appl. Math. 2020, 170, 319–345.

- Yang, J.; Liu, H.; Liu, Z.X. Modified subgradient extragradient algorithms for solving monotone variational inequalities. Optimization 2018, 67, 2247–2258.

- Yang, J.; Liu, H. The subgradient extragradient method extended to pseudomonotone equilibrium problems and fixed point problems in Hilbert space. Optim. Lett. 2019, 14, 1803–1816.

- Censor, Y.; Gibali, A.; Reich, S. Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 2012, 61, 1119–1132.

- Censor, Y.; Gibali, A.; Reich, S. Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 2011, 26, 827–845.

- Ogbuisi, F. Popov subgradient extragradient algorithm for pseudomonotone equilibrium problem in Banach spaces. J. Nonlinear Funct. Anal. 2019, 44.

- Jolaoso, L.O.; Aphane, M. An explicit subgradient extragradient with self-adaptive stepsize for pseudomonotone equilibrium problems in Banach spaces. Numer. Algorithms 2022, 89, 583–610.

- Pham Thi, H.A.; Ngo Thi, T.; Nguyen, V. The Golden ratio algorithms for solving equilibrium problems in Hilbert spaces. J. Nonlinear Var. Anal. 2021, 5, 493–518.

- Malitsky, Y. Golden ratio algorithms for variational inequalities. Math. Program. 2020, 184, 383–410.

- Chang, X.K.; Yang, J.F. A golden ratio primal-dual algorithm for structured convex optimization. J. Sci. Comput. 2021, 87, 47.

- Chang, X.K.; Yang, J.F.; Zhang, H.C. Golden ratio primal-dual algorithm with linesearch. SIAM J. Optim. 2022, 32, 1584–1613.

- Oyewole, O.K.; Reich, S. Two subgradient extragradient methods based on the golden ratio technique for solving variational inequality problems. Numer. Algorithms 2024, 97, 1215–1236.

- Zhang, C.; Chu, Z. New extrapolation projection contraction algorithms based on the golden ratio for pseudo-monotone variational inequalities. AIMS Math. 2023, 8, 23291–23312.

- Alber, Y.I. Metric and Generalized Projection Operators in Banach Spaces: Properties and Applications; Kartsatos, A.G., Ed.; Dekker: New York, NY, USA, 1996.

- Reem, D.; Reich, S.; De Pierro, A. Re-examination of Bregman functions and new properties of their divergences. Optimization 2019, 68, 279–348.

- Reich, S. A weak convergence theorem for alternating method with Bregman distance. In Theory and Applications of Nonlinear Operators of Accretive and Monotone Type; Kartsatos, A.G., Ed.; Marcel Dekker: New York, NY, USA, 1996; pp. 313–318.

- Goebel, K.; Reich, S. Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings; Marcel Dekker: New York, NY, USA; Basel, Switzerland, 1984.

- Cioranescu, I. Geometry of Banach Spaces, Duality Mappings, and Nonlinear Problems; Kluwer: Dordrecht, The Netherlands, 1990.

- Kamimura, S.; Takahashi, W. Strong convergence of a proximal-type algorithm in Banach space. SIAM J. Optim. 2002, 13, 938–945.

- Nakajo, K. Strong convergence for gradient projection method and relatively nonexpansive mappings in Banach spaces. Appl. Math. Comput. 2015, 271, 251–258.

- Avetisyan, K.; Djordjević, K.; Pavlović, M. Littlewood–Paley inequalities in uniformly convex and uniformly smooth Banach spaces. J. Math. Anal. Appl. 2007, 336, 31–43.

- Tiel, J.V. Convex Analysis: An Introductory Text; Wiley: New York, NY, USA, 1984.

- Parikh, N.; Boyd, S. Proximal Algorithms. Found. Trends Optim. 2013, 1, 123–231.

- Acedo, G.L.; Xu, H.K. Iterative methods for strict pseudo-contractions in Hilbert spaces. Nonlinear Anal. 2007, 67, 2258–2271.

- Jolaoso, L.O. The subgradient extragradient method for solving pseudomonotone equilibrium and fixed point problems in Banach spaces. Optimization 2022, 71, 4051–4081.

- Vinh, N.T.; Muu, L.D. Inertial extragradient algorithms for solving equilibrium problems. Acta Math. Vietnam. 2019, 44, 639–663.

- Xie, Z.; Cai, G.; Tan, B. Inertial subgradient extragradient method for solving pseudomonotone equilibrium problems and fixed point problems in Hilbert spaces. Optimization 2022, 73, 1329–1354.


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).