A Q-Learning Based Target Coverage Algorithm for Wireless Sensor Networks

Abstract

1. Introduction

2. Related Works

3. Q-Learning Principles

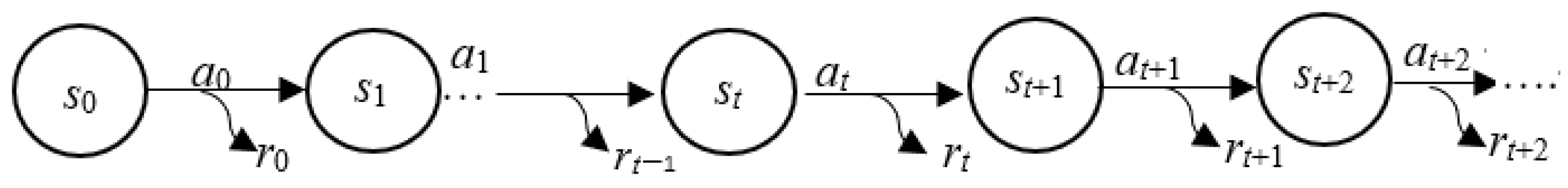

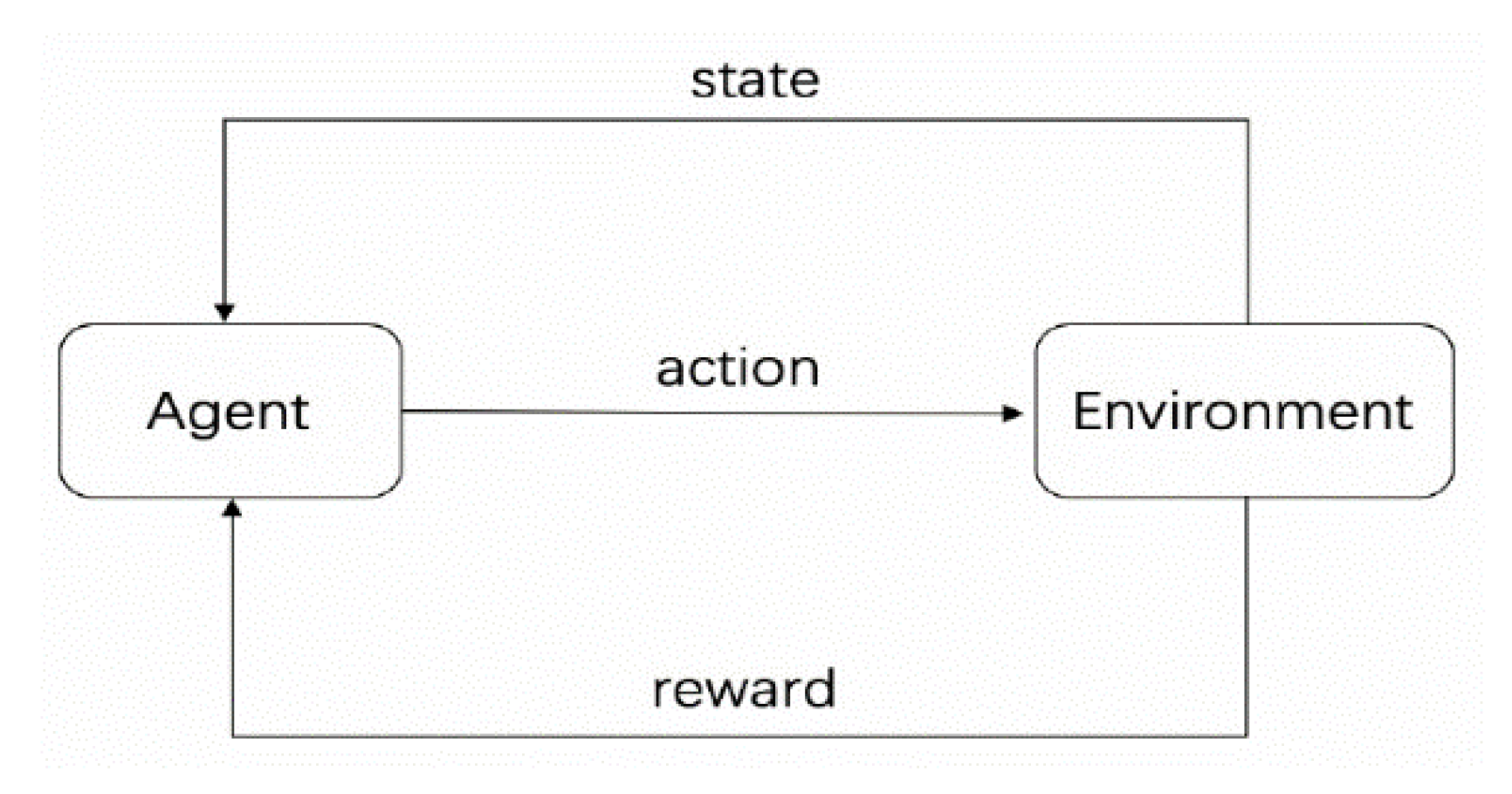

3.1. Markov Decision Process

3.2. Q-Learning Based on MDPs

4. Modeling and Problem Description

4.1. Network Model

4.2. Sensor Model

4.3. Energy Model

4.4. Related Definitions and Theorems

5. Algorithm Description

5.1. Modeling

5.1.1. States

Depending on the state of sensor $p_i$ when the action is applied to it, the environment state changes from $S_t$ to $S_{t+1}$ in one of four cases:

- (1) $p_i$ is sleeping and is switched to the active state, but the active sensor nodes in the network do not yet constitute a feasible solution, i.e., $\exists\, j \in \{1, 2, \ldots, m\}$ such that $tc_j = 0$. In this case $state_i$ and the target coverage are updated in $S_{t+1}$: $state_i = \{e_i - 1, 1\}$, and the coverage of the targets is updated accordingly.

- (2) $p_i$ is already active; the environment state is unchanged, i.e., $S_{t+1} = S_t$.

- (3) $p_i$ is dead; the environment state is unchanged, i.e., $S_{t+1} = S_t$.

- (4) $p_i$ is sleeping and is switched to the active state, and the active sensor nodes in the network now constitute a feasible solution. $S_{t+1}$ is first updated as in case (1), and then the states of all sensors and the target coverage are updated.
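The four state-transition cases can be sketched in Python as a single transition function. This is a minimal sketch under assumptions not stated in the text: $state_i$ is stored as an (energy, status) pair with status 1 meaning active, a sensor counts as dead once its energy reaches 0, and $tc_j$ is taken to be the number of active sensors covering target $t_j$. The text does not say exactly how case (4) updates all sensor states, so the reset used below (every sensor returns to sleep and every $tc_j$ returns to 0) is hypothetical, as are the names `Sensor` and `transition`.

```python
from dataclasses import dataclass

SLEEPING, ACTIVE = 0, 1


@dataclass
class Sensor:
    energy: int               # e_i, remaining energy units
    status: int = SLEEPING    # second component of state_i

    @property
    def dead(self) -> bool:
        # Assumption: a sensor is dead once its energy is exhausted.
        return self.energy <= 0


def is_feasible(tc):
    """Active sensors form a feasible solution iff no target has tc_j == 0."""
    return all(count > 0 for count in tc)


def transition(sensors, cover, tc, i):
    """Apply 'activate p_i' to the state (sensors, tc) in place; return the case number.

    cover[i] is c_i, the set of target indices p_i can sense;
    tc[j] is assumed to count the active sensors covering t_j.
    """
    s = sensors[i]
    if s.dead:
        return 3              # case (3): S_{t+1} = S_t
    if s.status == ACTIVE:
        return 2              # case (2): S_{t+1} = S_t
    s.energy -= 1             # case (1) update: state_i = {e_i - 1, 1}
    s.status = ACTIVE
    for j in cover[i]:
        tc[j] += 1
    if not is_feasible(tc):
        return 1              # case (1): some tc_j is still 0
    # Case (4): a feasible solution has formed. HYPOTHETICAL update rule:
    # all sensors go back to sleep and the coverage counts are cleared.
    for other in sensors:
        other.status = SLEEPING
    for j in range(len(tc)):
        tc[j] = 0
    return 4


if __name__ == "__main__":
    sensors = [Sensor(2), Sensor(2), Sensor(1)]
    cover = [{0}, {1}, {0, 1}]
    tc = [0, 0]
    print([transition(sensors, cover, tc, i) for i in (0, 0, 1, 2, 2)])  # [1, 2, 4, 4, 3]
```

Checking "dead" before "active" is a design choice: both cases leave $S_t$ unchanged, so the order only affects which case number is reported for an exhausted sensor that is still active.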

5.1.2. Action

5.1.3. Reward

5.2. Network Structure and Algorithm

| Algorithm 1. Q-Learning Algorithm for Target Coverage |
|---|
| Require: the number of training rounds e, the synchronization interval C, the memory pool capacity M, and the batch size b. |
| Ensure: the cumulative rewards and the network lifetime. |
| 1: Initialize the Q-network parameters ω, set the target network parameters equal to ω, and initialize the memory pool. |
| 2: repeat |
| 3: Initialize the cumulative rewards and the network lifetime to 0. |
| 4: The agent observes the environment to obtain the state $s_t$. |
| 5: e = e − 1 |
| 6: repeat |
| 7: The network selects the current action $a_t$ according to the ε-greedy policy. |
| 8: Execute $a_t$, obtain the instantaneous reward $r_t$, and update $s_{t+1}$. |
| 9: Store $(s_t, a_t, r_t, s_{t+1})$ in the memory pool. |
| 10: Update the cumulative rewards. |
| 11: Predict the action value according to Formula (18). |
| 12: Randomly sample a batch of b transitions from the memory pool to train the Q-network and update its parameters ω. |
| 13: Every C steps, synchronize the target network parameters with ω. |
| 14: until no further feasible solution can be formed. |
| 15: Output the cumulative rewards and network lifetime obtained in this round. |
| 16: until e = 0 |
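The structure of Algorithm 1 (ε-greedy selection, a memory pool of capacity M, batch training on b samples, and target synchronization every C steps) can be sketched as the following Python loop. This is a minimal, self-contained illustration, not the authors' implementation: a Q-table stands in for both the Q-network and the target network, the reward (+1 when a feasible solution forms, -0.1 for any other activation) is a hypothetical choice because the reward design is not given here, and the update uses the standard DQN-style target $r + \gamma \max_{a'} Q_{\text{target}}(s', a')$, which may differ from Formula (18). `CoverageEnv`, `train`, and every default hyperparameter are hypothetical.

```python
import random
from collections import defaultdict, deque


class CoverageEnv:
    """Toy target-coverage environment; action i means 'activate sensor p_i'."""

    def __init__(self, cover, m, energy):
        self.cover, self.m, self.E = cover, m, energy

    def reset(self):
        self.energy = [self.E] * len(self.cover)
        self.active = [False] * len(self.cover)
        self.lifetime = 0
        return self.state()

    def state(self):
        return tuple(self.energy), tuple(self.active)

    def valid_actions(self):
        # Only sleeping sensors with energy left can be activated.
        return [i for i, e in enumerate(self.energy) if e > 0 and not self.active[i]]

    def _covers_all(self, sensors):
        return len(set().union(*(self.cover[i] for i in sensors))) == self.m

    def done(self):
        # Episode ends when no feasible solution can be formed any more.
        usable = [i for i, e in enumerate(self.energy) if e > 0 or self.active[i]]
        return not self._covers_all(usable)

    def step(self, i):
        self.energy[i] -= 1          # state_i = {e_i - 1, 1}
        self.active[i] = True
        if self._covers_all([k for k, on in enumerate(self.active) if on]):
            # HYPOTHETICAL: the feasible solution runs one time unit, then all sensors sleep.
            self.lifetime += 1
            self.active = [False] * len(self.cover)
            return self.state(), 1.0
        return self.state(), -0.1    # HYPOTHETICAL small cost per activation


def train(env, episodes=200, C=20, M=500, b=16, gamma=0.9, alpha=0.1, eps=0.1, seed=0):
    """DQN-style loop shaped like Algorithm 1, with a Q-table standing in for the Q-network."""
    rng = random.Random(seed)
    q = defaultdict(float)           # stands in for the Q-network parameters ω
    q_target = {}                    # target network, synchronized every C steps
    memory = deque(maxlen=M)         # memory pool with capacity M
    steps, history = 0, []
    for _ in range(episodes):        # outer loop: until e = 0
        s = env.reset()
        total = 0.0
        while not env.done():        # until no feasible solution can be formed
            actions = env.valid_actions()
            if rng.random() < eps:   # ε-greedy action selection
                a = rng.choice(actions)
            else:
                a = max(actions, key=lambda x: q[(s, x)])
            s2, r = env.step(a)
            done = env.done()
            memory.append((s, a, r, s2, done, tuple(env.valid_actions())))
            total += r
            if len(memory) >= b:     # train on a random batch of b transitions
                for bs, ba, br, bs2, bdone, bnext in rng.sample(list(memory), b):
                    # Standard DQN-style target (an assumption, not necessarily Formula (18)).
                    y = br if bdone else br + gamma * max(q_target.get((bs2, x), 0.0) for x in bnext)
                    q[(bs, ba)] += alpha * (y - q[(bs, ba)])
            steps += 1
            if steps % C == 0:
                q_target = dict(q)   # synchronize the target table with ω
            s = s2
        history.append((total, env.lifetime))
    return q, history
```

For example, `train(CoverageEnv([{0}, {1}, {0, 1}], m=2, energy=2), episodes=50)` returns the learned table and a per-episode list of (cumulative reward, lifetime) pairs; on this toy instance every episode's lifetime lies between 2 and 4. Restricting the action set to sleeping sensors with energy left keeps every episode finite, since each step spends one energy unit.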

6. Experiments and Analysis of Results

6.1. Convergence Proof

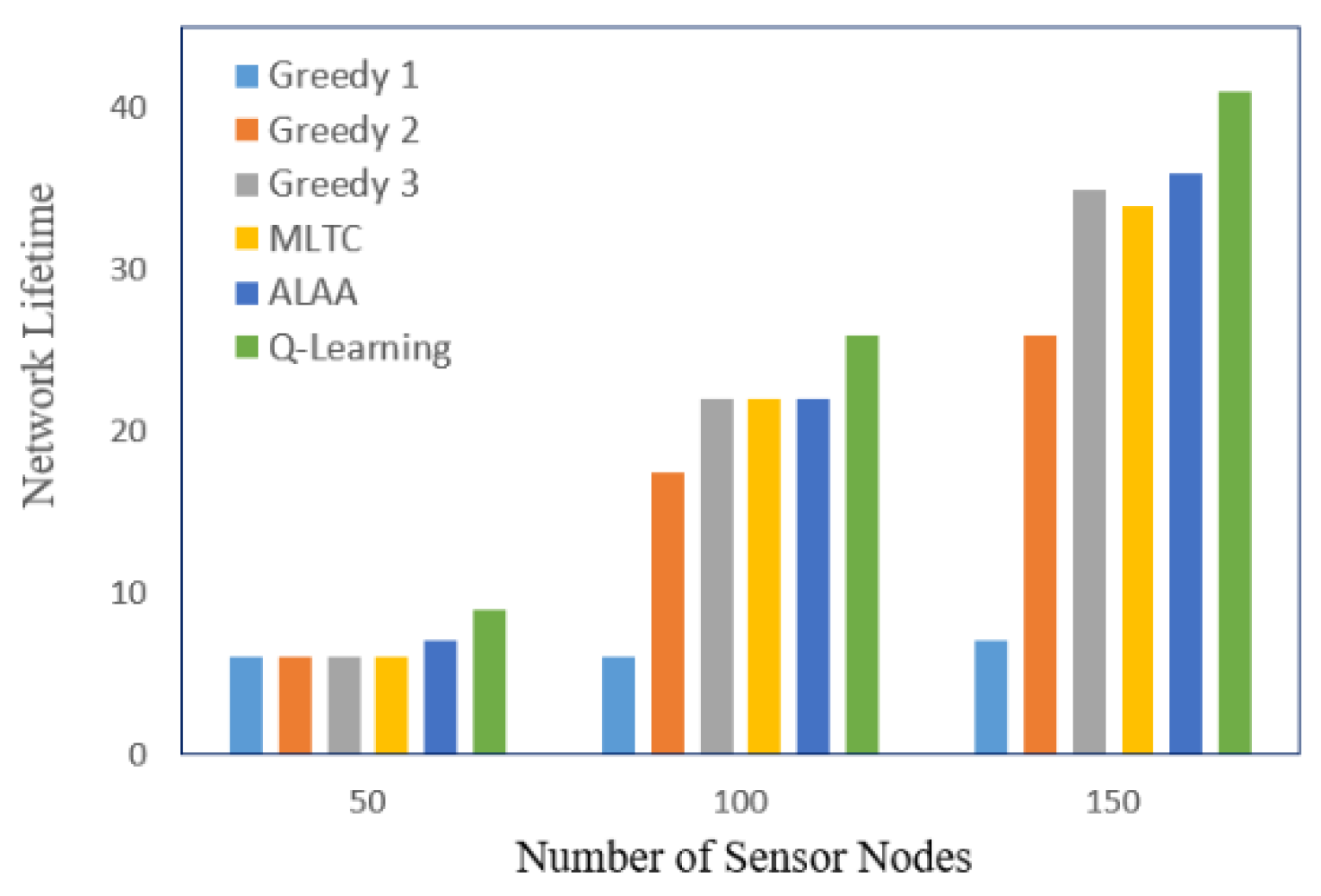

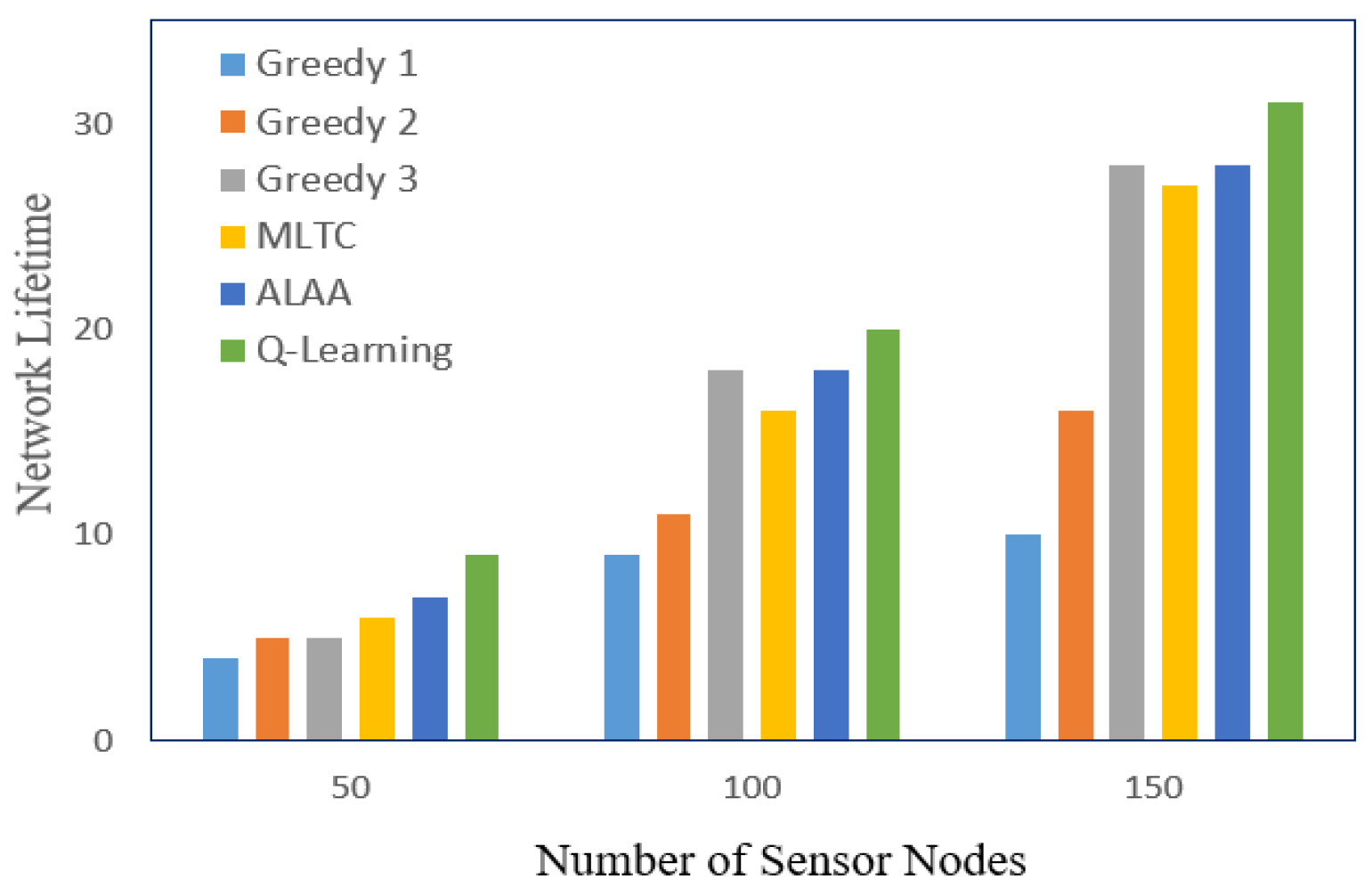

6.2. Comparison with Similar Algorithms

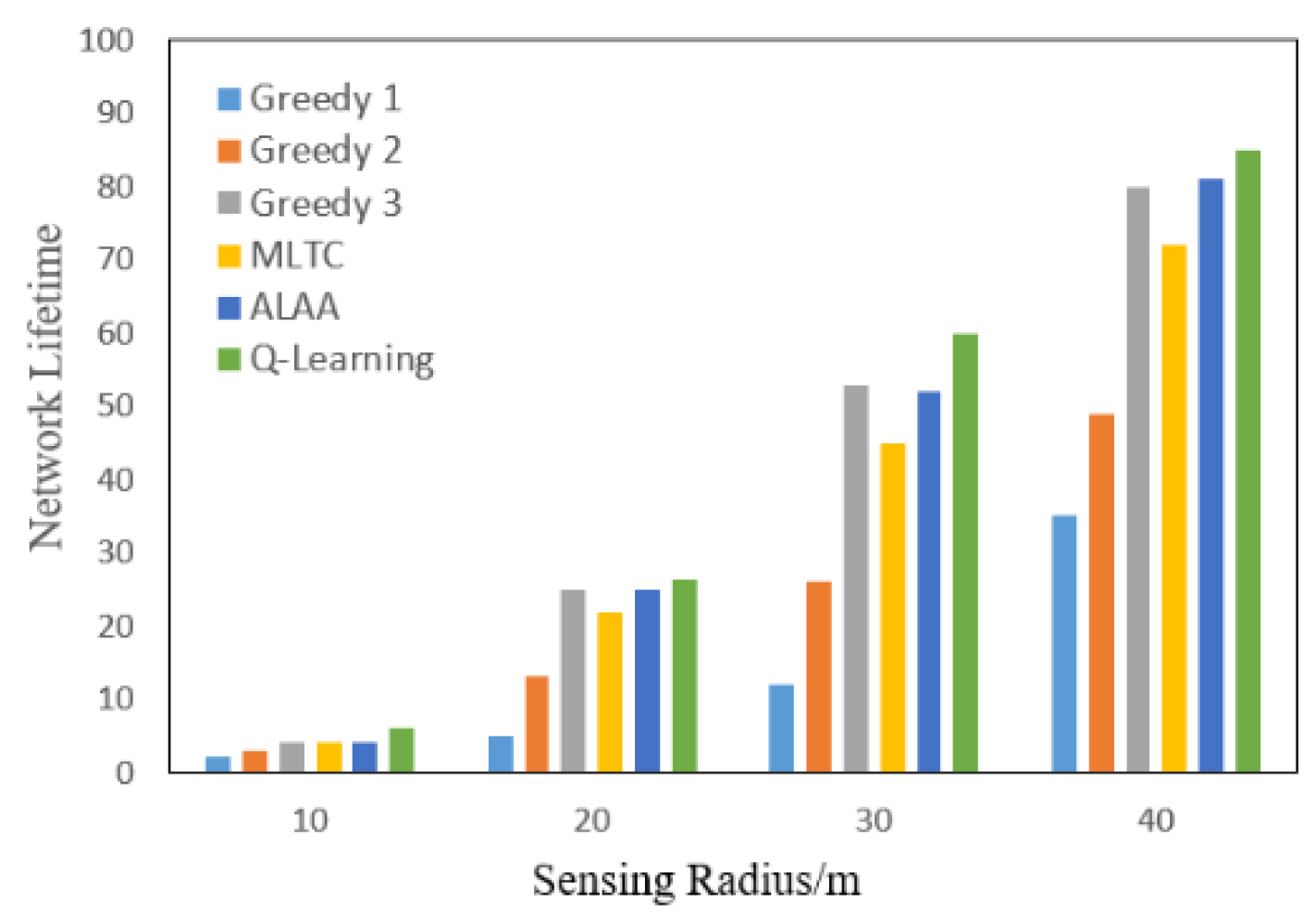

6.3. Impact of Sensing Radius on the Lifetime

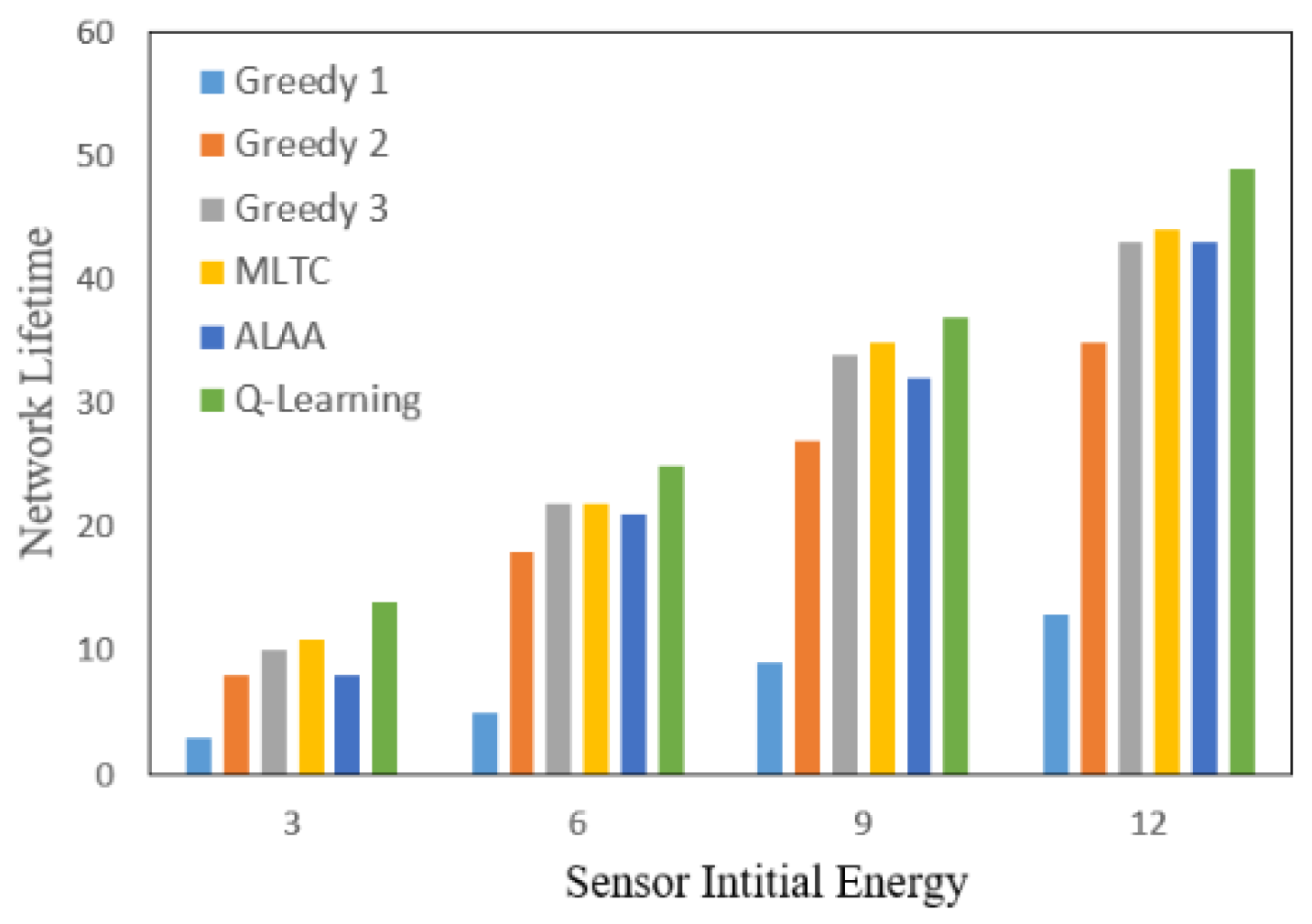

6.4. Impact of Initial Energy of Sensor on the Lifetime

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Variables | Meaning |
|---|---|
| $n$ | the number of sensors |
| $P$ | the set of sensor nodes |
| $p_i$ | sensor node $i$ |
| $m$ | the number of targets |
| $T$ | the set of targets |
| $t_j$ | target $j$ |
| $c_i$ | the set of targets that can be covered by sensor node $p_i$ |
| $CS$ | the set of feasible solutions |
| $cs_i$ | the $i$-th feasible solution |
| $act_x$ | the time at which the feasible solution is activated |
| $L$ | the total number of feasible solutions |
| $E$ | the initial energy carried by each sensor node |
| $e_{ix}$ | the energy consumed by node $p_i$ per unit time |
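The notation above fits the usual maximum-lifetime target coverage formulation, which this section does not restate and which is therefore an assumption here: maximize $\sum_{x=1}^{L} act_x$ subject to $\sum_{x=1}^{L} e_{ix}\, act_x \le E$ for every sensor $p_i$, where each $cs_x$ must cover all $m$ targets. The sketch below reads $act_x$ as the duration for which $cs_x$ stays active and checks a given schedule against these constraints. The function names and the uniform energy rate `rate` (so $e_{ix}$ is `rate` when $p_i \in cs_x$ and 0 otherwise) are hypothetical.

```python
from collections import defaultdict


def is_feasible(solution, cover, m):
    """True if the sensors in `solution` jointly cover every target 0..m-1."""
    covered = set()
    for i in solution:
        covered |= cover[i]
    return covered >= set(range(m))


def network_lifetime(schedule, cover, m, E, rate=1.0):
    """Return sum(act_x) for a schedule of (cs_x, act_x) pairs.

    Raises ValueError if some cs_x is not a feasible solution or some sensor
    would consume more than its initial energy E. Assumes e_ix = rate when p_i
    belongs to cs_x and 0 otherwise (a hypothetical uniform energy model).
    """
    spent = defaultdict(float)
    for cs, act in schedule:
        if not is_feasible(cs, cover, m):
            raise ValueError(f"{sorted(cs)} does not cover all targets")
        for i in cs:
            spent[i] += rate * act          # accumulate e_ix * act_x
    over = [i for i, used in spent.items() if used > E]
    if over:
        raise ValueError(f"sensors {sorted(over)} exceed energy E={E}")
    return sum(act for _, act in schedule)
```

With `cover = [{0}, {1}, {0, 1}]` and `E = 2`, the schedule `[({2}, 2.0), ({0, 1}, 2.0)]` yields a lifetime of 4.0, while activating `{2}` alone for 3.0 time units violates the energy constraint of $p_2$.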

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiong, P.; He, D.; Lu, T. A Q-Learning Based Target Coverage Algorithm for Wireless Sensor Networks. Mathematics 2025, 13, 532. https://doi.org/10.3390/math13030532
