Abstract

The aim of this paper is to solve a class of non-linear fractional variational problems (NLFVPs) using the Ritz method and to perform a comparative study on the choice of different polynomials in the method. In recent years, the Ritz method has allowed many researchers to solve different forms of fractional variational problems. Here, the NLFVP is solved by applying the Ritz method with different orthogonal polynomials, and the approximate solution is obtained by solving a system of non-linear algebraic equations. An error and convergence analysis of the method is also provided. Numerical simulations are performed on illustrative examples to test the accuracy and applicability of the method. For comparison purposes, five families of polynomials, namely 1) Shifted Legendre polynomials, 2) Shifted Chebyshev polynomials of the first kind, 3) Shifted Chebyshev polynomials of the third kind, 4) Shifted Chebyshev polynomials of the fourth kind, and 5) Gegenbauer polynomials, are used in the numerical investigations of the test examples. The results are presented in the form of tables and figures and are also compared with some known methods from the literature.

1. Introduction

Many problems in analysis, mechanics, and geometry require determining the maxima and minima of certain functionals. Such problems are known as variational problems and are the subject of the calculus of variations. Variational problems have many applications in fields such as physics [1], engineering [2], and other areas in which energy principles are applicable [3,4,5].

Fractional calculus has become a very active branch of mathematics, with many real applications in science and engineering, such as fluid dynamics [6], biology [7], chemistry [8], viscoelasticity [9,10], signal processing [11], bioengineering [12], control theory [13], and physics [14]. Owing to the importance of fractional derivatives in such real-life applications, several authors have considered problems in the calculus of variations in which the integer-order derivative in the objective functional is replaced by a fractional-order derivative; this field is known as the fractional calculus of variations. Examples include studies of a fractionally damped system [15], energy control for a fractional linear control system [16], a fractional model of a vibrating string [17], and an optimal control problem [18]. In this paper, our aim is to minimize non-linear fractional variational problems (NLFVPs) [19] of the following form:

under the constraints

where and are two functions of class with on , and are real numbers with , and is a constant.

The pioneering approach to fractional variational problems originates in reference [20], where Agrawal derived the Euler-Lagrange equation for fractional variational problems. Further, in reference [4], he gave a general finite element formulation for fractional variational problems. In reference [5], the authors used an analytical algorithm based on the Adomian decomposition method (ADM) to solve problems in the calculus of variations. In [21,22], shifted Jacobi orthonormal polynomials and Legendre orthonormal polynomials, respectively, were used to obtain approximate numerical solutions of fractional optimal control problems. In [23], the Haar wavelet method was used to obtain numerical solutions of fractional variational problems. Some other numerical methods for the approximate solution of fractional variational problems are given in [24,25,26,27,28,29,30,31,32,33,34]. Recently, in [19], the authors introduced a new class of fractional variational problems and solved it using a decomposition formula based on Jacobi polynomials. Operational matrix methods (see [35,36,37,38,39,40,41]) have been found to be useful for solving problems in fractional calculus.

In the present paper, we extend the Rayleigh-Ritz method, together with operational matrices of different orthogonal polynomials (Shifted Legendre polynomials, Shifted Chebyshev polynomials of the first, third, and fourth kinds, and Gegenbauer polynomials), to solve a special class of NLFVPs. The Rayleigh-Ritz method has been applied by many researchers to different kinds of variational problems, e.g., fractional optimal control problems and isoperimetric constraint problems [18,21,22,32,33]; here we cite only a few, and many more can be found in the literature. In this method, we first take a finite-dimensional approximation of the unknown function. Then, using an operational matrix of integration and the Rayleigh-Ritz method in the variational problem, we obtain a system of non-linear algebraic equations whose solution gives an approximate solution of the non-linear variational problem. An error analysis of the method for the different orthogonal polynomials is given, and convergence of the approximate numerical solution to the exact solution is shown. A comparative study based on tables of maximum absolute errors and root-mean-square errors for all five kinds of polynomials is presented. Numerical results are discussed for different values of the fractional order involved in the problem and are shown through tables and figures.

2. Basic Preliminaries

Fractional-order integration in the Riemann-Liouville sense is defined as follows.

Definition 1.

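The Riemann-Liouville fractional order integral operator of order $\alpha>0$ is given by

$$I^{\alpha}f(x)=\frac{1}{\Gamma(\alpha)}\int_{0}^{x}(x-t)^{\alpha-1}f(t)\,dt,\qquad x>0,$$

with $I^{0}f(x)=f(x)$.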
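As a quick numerical illustration of this definition, the Python sketch below evaluates $I^{\alpha}f(x)=\frac{1}{\Gamma(\alpha)}\int_0^x (x-t)^{\alpha-1}f(t)\,dt$ and compares the result with the well-known closed form $I^{\alpha}t^{k}=\frac{\Gamma(k+1)}{\Gamma(k+1+\alpha)}x^{k+\alpha}$. The substitution $s=(x-t)^{\alpha}$, which removes the endpoint singularity, and the function names `rl_integral` and `rl_integral_power` are our own illustrative choices. Neither is part of the paper's method.

```python
import math


def rl_integral(f, alpha, x, m=20000):
    """Numerically approximate the Riemann-Liouville integral (I^alpha f)(x), alpha > 0.

    With s = (x - t)^alpha the weakly singular kernel disappears:
        (I^alpha f)(x) = 1/Gamma(alpha + 1) * int_0^{x^alpha} f(x - s^(1/alpha)) ds,
    which is then evaluated with the composite midpoint rule on m sub-intervals.
    """
    if x <= 0.0:
        return 0.0
    upper = x ** alpha
    step = upper / m
    total = 0.0
    for i in range(m):
        s = (i + 0.5) * step                     # midpoint of the i-th sub-interval
        total += f(x - s ** (1.0 / alpha))
    return total * step / math.gamma(alpha + 1.0)


def rl_integral_power(k, alpha, x):
    """Closed form I^alpha t^k = Gamma(k+1) / Gamma(k+1+alpha) * x^(k+alpha)."""
    return math.gamma(k + 1) / math.gamma(k + 1 + alpha) * x ** (k + alpha)


if __name__ == "__main__":
    for alpha in (0.5, 0.8, 1.0):
        numeric = rl_integral(lambda t: t ** 2, alpha, 0.7)
        print(f"alpha={alpha}: numeric={numeric:.10f}, exact={rl_integral_power(2, alpha, 0.7):.10f}")
```

For $\alpha=1$ the operator reduces to ordinary integration, so the sketch then returns $\int_0^x f(t)\,dt$.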

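The analytical form of the shifted Jacobi polynomial of degree $i$ on [0, 1] is given as

$$P_i^{(a,b)}(x)=\sum_{k=0}^{i}(-1)^{i-k}\,\frac{\Gamma(i+b+1)\,\Gamma(i+k+a+b+1)}{\Gamma(k+b+1)\,\Gamma(i+a+b+1)\,(i-k)!\,k!}\,x^{k},\tag{3}$$

where $a>-1$ and $b>-1$ are certain constants. Jacobi polynomials are orthogonal in the interval [0, 1] with respect to the weight function $w^{(a,b)}(x)=x^{b}(1-x)^{a}$ and have the orthogonality property

$$\int_{0}^{1}P_i^{(a,b)}(x)\,P_j^{(a,b)}(x)\,w^{(a,b)}(x)\,dx=h_i\,\delta_{ij},$$

where $\delta_{ij}$ is the Kronecker delta function and

$$h_i=\frac{\Gamma(i+a+1)\,\Gamma(i+b+1)}{(2i+a+b+1)\,i!\,\Gamma(i+a+b+1)}.$$

When $a+b+1=0$ (as for S2 below), the $i=0$ Gamma quotients are read as their limits, which gives $P_0^{(a,b)}=1$ and $h_0=\Gamma(a+1)\Gamma(b+1)/\Gamma(a+b+2)$.

For certain values of the constants $a$ and $b$, the Jacobi polynomials take the form of some well-known polynomials (up to a constant normalization factor), defined as follows.

Case 1: Legendre polynomials (S1). For $a=b=0$ in Equation (3), we get Legendre polynomials.

Case 2: Chebyshev polynomials of the first kind (S2). For $a=b=-\tfrac{1}{2}$ in Equation (3), we get Chebyshev polynomials of the first kind.

Case 3: Chebyshev polynomials of the third kind (S3). For $a=-\tfrac{1}{2}$, $b=\tfrac{1}{2}$ in Equation (3), we get Chebyshev polynomials of the third kind.

Case 4: Chebyshev polynomials of the fourth kind (S4). For $a=\tfrac{1}{2}$, $b=-\tfrac{1}{2}$ in Equation (3), we get Chebyshev polynomials of the fourth kind.

Case 5: Gegenbauer polynomials (S5). For $a=b=\lambda-\tfrac{1}{2}$ with $\lambda>-\tfrac{1}{2}$, $\lambda\neq0$, in Equation (3), we get Gegenbauer polynomials.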
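The five parameter choices can be checked numerically. This minimal sketch assumes the weight $w(x)=x^{b}(1-x)^{a}$ and builds the monomial coefficients of the shifted Jacobi polynomial from the explicit sum $\sum_{k=0}^{i}(-1)^{i-k}\frac{\Gamma(i+b+1)\Gamma(i+k+a+b+1)}{\Gamma(k+b+1)\Gamma(i+a+b+1)(i-k)!\,k!}x^{k}$. It then verifies orthogonality exactly, because every weighted moment is a Beta function, $\int_0^1 x^{p}x^{b}(1-x)^{a}dx=B(p+b+1,a+1)$. The Gamma ratios are rewritten as rising factorials so that $a=b=-\tfrac12$ never evaluates $\Gamma(0)$. For S3 we assume $(a,b)=(-\tfrac12,\tfrac12)$ and for S4 $(a,b)=(\tfrac12,-\tfrac12)$. The value $\lambda=1.5$ for the Gegenbauer case is an arbitrary illustrative choice, and all function names are hypothetical.

```python
import math


def poch(x, m):
    """Rising factorial (x)_m = x (x + 1) ... (x + m - 1), with (x)_0 = 1."""
    out = 1.0
    for j in range(m):
        out *= x + j
    return out


def jacobi_coeffs(i, a, b):
    """Monomial coefficients [c_0, ..., c_i] of the shifted Jacobi polynomial P_i^{(a,b)}(x) on [0, 1].

    Gamma(i+b+1)/Gamma(k+b+1) = (k+b+1)_{i-k} and Gamma(i+k+a+b+1)/Gamma(i+a+b+1) = (i+a+b+1)_k.
    """
    return [(-1) ** (i - k) * poch(k + b + 1, i - k) * poch(i + a + b + 1, k)
            / (math.factorial(i - k) * math.factorial(k)) for k in range(i + 1)]


def moment(p, a, b):
    """Weighted moment int_0^1 x^p * x^b * (1 - x)^a dx = B(p + b + 1, a + 1), for a, b > -1."""
    return math.exp(math.lgamma(p + b + 1) + math.lgamma(a + 1) - math.lgamma(p + a + b + 2))


def inner(ci, cj, a, b):
    """Weighted inner product <p, q>_w of two polynomials given by monomial coefficients."""
    return sum(u * v * moment(k + l, a, b) for k, u in enumerate(ci) for l, v in enumerate(cj))


def h(i, a, b):
    """h_i = <P_i, P_i>_w; for i = 0 the closed form is replaced by its limit B(b + 1, a + 1)."""
    if i == 0:
        return moment(0, a, b)
    return (math.gamma(i + a + 1) * math.gamma(i + b + 1)
            / ((2 * i + a + b + 1) * math.factorial(i) * math.gamma(i + a + b + 1)))


# (a, b) for the five families; S5 uses lambda = 1.5, i.e. a = b = lambda - 1/2 = 1 (arbitrary).
CASES = {"S1": (0.0, 0.0), "S2": (-0.5, -0.5), "S3": (-0.5, 0.5), "S4": (0.5, -0.5), "S5": (1.0, 1.0)}

if __name__ == "__main__":
    for name, (a, b) in CASES.items():
        cs = [jacobi_coeffs(i, a, b) for i in range(6)]
        worst = max(abs(inner(cs[i], cs[j], a, b) - (h(i, a, b) if i == j else 0.0))
                    for i in range(6) for j in range(6))
        print(f"{name}: largest deviation from h_i * delta_ij = {worst:.2e}")
```

For example, `jacobi_coeffs(2, 0.0, 0.0)` gives the shifted Legendre polynomial $6x^{2}-6x+1$.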

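A function $y\in L^{2}_{w}[0,1]$ can be expanded as

$$y(x)=\sum_{i=0}^{\infty}c_i\,P_i^{(a,b)}(x),\tag{11}$$

where $c_i=\langle y,P_i^{(a,b)}\rangle_w/h_i$ and $\langle f,g\rangle_w=\int_0^1 f(x)\,g(x)\,w^{(a,b)}(x)\,dx$ is the usual (weighted) inner product.

Equation (11) for finite-dimensional approximation is written as

$$y(x)\simeq\sum_{i=0}^{n}c_i\,P_i^{(a,b)}(x)=C^{T}\Phi(x),$$

where $C$ and $\Phi(x)$ are $(n+1)\times1$ matrices given by $C=[c_0,c_1,\dots,c_n]^{T}$ and $\Phi(x)=[P_0^{(a,b)}(x),P_1^{(a,b)}(x),\dots,P_n^{(a,b)}(x)]^{T}$.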
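For the Legendre case $a=b=0$ (weight $w=1$, $h_i=1/(2i+1)$), the coefficients $c_i=\langle y,P_i\rangle/h_i$ and the truncated sum $C^{T}\Phi(x)$ can be computed as sketched below. This is a minimal illustration under stated assumptions. The inner products are approximated by composite Simpson quadrature. The basis comes from the standard recurrence $(i+1)P_{i+1}(x)=(2i+1)(2x-1)P_i(x)-iP_{i-1}(x)$. The test function $e^{x}$ is an arbitrary choice.

```python
import math


def shifted_legendre(n, x):
    """Return [P_0(x), ..., P_n(x)] for the shifted Legendre polynomials on [0, 1]."""
    vals = [1.0, 2.0 * x - 1.0]
    for i in range(1, n):
        vals.append(((2 * i + 1) * (2.0 * x - 1.0) * vals[i] - i * vals[i - 1]) / (i + 1))
    return vals[: n + 1]


def simpson(f, m=2000):
    """Composite Simpson rule for int_0^1 f(x) dx with an even number m of sub-intervals."""
    step = 1.0 / m
    total = f(0.0) + f(1.0)
    for k in range(1, m):
        total += (4.0 if k % 2 else 2.0) * f(k * step)
    return total * step / 3.0


def legendre_coeffs(y, n):
    """c_i = <y, P_i> / h_i with h_i = 1 / (2i + 1)."""
    return [(2 * i + 1) * simpson(lambda x, i=i: y(x) * shifted_legendre(n, x)[i]) for i in range(n + 1)]


def truncated(c, x):
    """Evaluate the finite expansion C^T Phi(x) = sum_i c_i P_i(x)."""
    return sum(ci * pi for ci, pi in zip(c, shifted_legendre(len(c) - 1, x)))


def max_error(y, n, samples=101):
    """Largest |y(x) - C^T Phi(x)| over a uniform grid of [0, 1]."""
    c = legendre_coeffs(y, n)
    grid = [k / (samples - 1) for k in range(samples)]
    return max(abs(y(x) - truncated(c, x)) for x in grid)


if __name__ == "__main__":
    for n in range(1, 7):
        print(n, max_error(math.exp, n))
```

For a smooth function such as $e^{x}$, the printed error drops rapidly as $n$ grows. This is the behaviour that the finite-dimensional approximation relies on.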

Theorem 1.

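Let $H$ be a Hilbert space and $Y$ be a closed subspace of $H$ with $\dim Y<\infty$; let $\{y_1,y_2,\dots,y_n\}$ be any basis for $Y$. Suppose that $x$ is an arbitrary element in $H$ and $y_0$ is the unique best approximation to $x$ out of $Y$. Then

$$\|x-y_0\|^{2}=\frac{G(x,y_1,y_2,\dots,y_n)}{G(y_1,y_2,\dots,y_n)},$$

where

$$G(x,y_1,\dots,y_n)=\begin{vmatrix}\langle x,x\rangle & \langle x,y_1\rangle & \cdots & \langle x,y_n\rangle\\ \langle y_1,x\rangle & \langle y_1,y_1\rangle & \cdots & \langle y_1,y_n\rangle\\ \vdots & \vdots & \ddots & \vdots\\ \langle y_n,x\rangle & \langle y_n,y_1\rangle & \cdots & \langle y_n,y_n\rangle\end{vmatrix}$$

is the Gram determinant, and $G(y_1,\dots,y_n)$ is defined analogously.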

Proof .

Please see references [42,43]. □
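Theorem 1 can be checked on a small case. The sketch below takes $H=L^{2}[0,1]$, the subspace spanned by $\{1,x,x^{2}\}$, and the element $e^{x}$; all three are arbitrary illustrative choices. It computes the squared distance to the best approximation in two ways: once from the normal equations, and once as the ratio of Gram determinants. The two values are then compared. All inner products are exact: $\langle x^{i},x^{j}\rangle=1/(i+j+1)$ and $\int_0^1 x^{k}e^{x}dx=e-k\int_0^1x^{k-1}e^{x}dx$.

```python
import math


def det(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    d = 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[piv][col] == 0.0:
            return 0.0
        if piv != col:
            a[col], a[piv] = a[piv], a[col]
            d = -d                                  # a row swap flips the sign
        d *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return d


def cramer(matrix, rhs):
    """Solve matrix * z = rhs by Cramer's rule (adequate for these tiny systems)."""
    d = det(matrix)
    return [det([row[:j] + [rhs[i]] + row[j + 1:] for i, row in enumerate(matrix)]) / d
            for j in range(len(matrix))]


def distance_two_ways(n_basis=3):
    """Squared distance from e^x to span{1, x, ..., x^(n_basis-1)} in L2[0, 1], computed two ways."""
    e = math.e
    gram = [[1.0 / (i + j + 1) for j in range(n_basis)] for i in range(n_basis)]  # <x^i, x^j>
    moments = [e - 1.0]                                                     # <e^x, 1>
    for k in range(1, n_basis):
        moments.append(e - k * moments[-1])                                 # <e^x, x^k>
    ff = (e * e - 1.0) / 2.0                                                # <e^x, e^x>
    coef = cramer(gram, moments)                    # normal equations for the best approximation y_0
    direct = ff - sum(c * m for c, m in zip(coef, moments))   # ||f - y_0||^2 = <f, f> - <f, y_0>
    bordered = [[ff] + moments] + [[moments[i]] + gram[i] for i in range(n_basis)]
    via_gram = det(bordered) / det(gram)            # G(f, y_1, ..., y_n) / G(y_1, ..., y_n)
    return direct, via_gram


if __name__ == "__main__":
    print(distance_two_ways())
```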

Theorem 2.

Suppose that is the Nth approximation of the function, and suppose

then we have

Proof .

Please see Appendix A. □

3. Operational Matrices

Theorem 3.

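Let $\Phi(x)$ be a Shifted Jacobi vector and suppose $\alpha>0$; then

$$I^{\alpha}\Phi(x)\simeq P^{(\alpha)}\Phi(x),$$

where $P^{(\alpha)}$ is an $(n+1)\times(n+1)$ operational matrix of the fractional integral of order $\alpha$, and its $(i,j)$th entry is given by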

Proof .

We refer to reference [44] for the proof. □

Now, in particular cases, the operational matrix of integration for various polynomials is given as follows.

For Shifted Legendre polynomials (S1), the $(i,j)$th entry of the operational matrix of integration is given as

For Shifted Chebyshev polynomials of the first kind (S2), the $(i,j)$th entry of the operational matrix of integration is given as

For Shifted Chebyshev polynomials of the third kind (S3), the $(i,j)$th entry of the operational matrix of integration is given as

For Shifted Chebyshev polynomials of the fourth kind (S4), the $(i,j)$th entry of the operational matrix of integration is given as

For Shifted Gegenbauer polynomials (S5), the $(i,j)$th entry of the operational matrix of integration is given as
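The operational matrices can be cross-checked numerically without evaluating the closed-form entries. This sketch assumes the weight $w(x)=x^{b}(1-x)^{a}$ and builds $P^{(\alpha)}$ by weighted $L^{2}$ projection, $P^{(\alpha)}_{ij}=\langle I^{\alpha}P_i,P_j\rangle_w/h_j$. It uses $I^{\alpha}x^{k}=\frac{\Gamma(k+1)}{\Gamma(k+1+\alpha)}x^{k+\alpha}$ and Beta-function moments, so no quadrature is needed. The projection route is an illustrative alternative, not the paper's own implementation of Theorem 3. For $\alpha=1$ and shifted Legendre polynomials, it reproduces the classical relation $\int_0^x P_i(t)\,dt=\frac{P_{i+1}(x)-P_{i-1}(x)}{2(2i+1)}$, truncated at degree $n$.

```python
import math


def poch(x, m):
    """Rising factorial (x)_m."""
    out = 1.0
    for j in range(m):
        out *= x + j
    return out


def jacobi_coeffs(i, a, b):
    """Monomial coefficients of the shifted Jacobi polynomial P_i^{(a,b)} on [0, 1]."""
    return [(-1) ** (i - k) * poch(k + b + 1, i - k) * poch(i + a + b + 1, k)
            / (math.factorial(i - k) * math.factorial(k)) for k in range(i + 1)]


def moment(p, a, b):
    """int_0^1 x^p x^b (1 - x)^a dx = B(p + b + 1, a + 1); p may be non-integer."""
    return math.exp(math.lgamma(p + b + 1) + math.lgamma(a + 1) - math.lgamma(p + a + b + 2))


def operational_matrix(alpha, n, a=0.0, b=0.0):
    """(n+1) x (n+1) matrix P with I^alpha P_i(x) ~= sum_j P[i][j] P_j(x), by weighted L2 projection."""
    cs = [jacobi_coeffs(i, a, b) for i in range(n + 1)]
    # h_j = <P_j, P_j>_w from the same moments (this also sidesteps the Gamma(0) limit at j = 0).
    h = [sum(u * v * moment(k + l, a, b) for k, u in enumerate(c) for l, v in enumerate(c)) for c in cs]
    mat = []
    for i in range(n + 1):
        row = []
        for j in range(n + 1):
            s = 0.0
            for k, cik in enumerate(cs[i]):
                g = math.exp(math.lgamma(k + 1) - math.lgamma(k + 1 + alpha))  # I^alpha x^k = g x^(k+alpha)
                for l, cjl in enumerate(cs[j]):
                    s += cik * g * cjl * moment(k + l + alpha, a, b)
            row.append(s / h[j])
        mat.append(row)
    return mat


if __name__ == "__main__":
    for row in operational_matrix(1.0, 3):
        print(["%.6f" % v for v in row])
```

Passing other values of `a` and `b` gives the projected matrices for the remaining families under the same weight convention.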

4. Method of Solution

Approximating the unknown function in terms of orthogonal polynomials has been practiced in several papers in recent years [18,21,22,32,33] for different types of problems. Here, for solving the problem in Equation (1), we approximate

We approximate the fractional derivative first so that the initial condition can be incorporated directly when the unknown function is recovered. Taking the integral of order $\alpha$ on both sides of Equation (19), we get

Using the operational matrix of integration, Equation (20) can be written as

where and is the operational matrix of integration of order .

Using Equation (19), we can write

Using Equations (19) and (22) in Equation (1), we obtain

Equation (23) can then be written as

We further take the following approximations:

where , , , and , , , , and is the usual inner product.

Using Equations (25) and (26) we can write

where

From Equations (24) and (27)–(29), we get

Let

From Equations (31) and (32), we get

where is a square matrix given by .

Using Equation (22), the boundary condition can be written as

Using the Lagrange multiplier method [18,20,21,22,32,33], the necessary extremal condition for the functional in Equation (33) becomes

From Equations (34) and (35), we obtain a system of non-linear algebraic equations. Solving this system yields the unknown parameters. Substituting these parameters into Equation (21) gives the approximate extremal function, and hence the extreme value, of the non-linear fractional functional.
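The steps of this section can be illustrated on a deliberately simple, hypothetical problem that is not one of the paper's examples. The steps are: expand the fractional derivative in the basis, recover the unknown function by fractional integration, impose the boundary condition with a Lagrange multiplier, and solve the stationarity equations. The toy problem is to minimise $J[y]=\int_0^1 (D^{\alpha}y(x))^2dx$ subject to $y(0)=0$ and $y(1)=1$, with $D^{\alpha}$ taken in the Caputo sense and $0<\alpha\le1$, so that $y=I^{\alpha}D^{\alpha}y$. Because this functional is quadratic, its stationarity equations are linear. The NLFVPs of the paper instead lead to a non-linear system that needs an iterative solver. The sketch uses shifted Legendre polynomials and evaluates $(I^{\alpha}P_i)(1)$ exactly from monomials rather than through the operational matrix. All names are hypothetical.

```python
import math


def legendre_monomial(i):
    """Monomial coefficients of the shifted Legendre polynomial P_i(x) on [0, 1]."""
    return [(-1) ** (i - k) * math.comb(i, k) * math.comb(i + k, k) for k in range(i + 1)]


def frac_int(coeffs, alpha, x):
    """(I^alpha p)(x) for p(x) = sum_k coeffs[k] x^k, using I^alpha x^k = k!/Gamma(k+1+alpha) x^(k+alpha)."""
    return sum(c * math.exp(math.lgamma(k + 1) - math.lgamma(k + 1 + alpha)) * x ** (k + alpha)
               for k, c in enumerate(coeffs))


def solve_linear(m, rhs):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(m)
    a = [row[:] + [r] for row, r in zip(m, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (a[r][n] - sum(a[r][c] * z[c] for c in range(r + 1, n))) / a[r][r]
    return z


def ritz_toy(alpha, n):
    """Hypothetical example: minimise int_0^1 (D^alpha y)^2 dx with y(0) = 0, y(1) = 1.

    D^alpha y ~= sum_i c_i P_i(x), so y = I^alpha(D^alpha y) = sum_i c_i (I^alpha P_i)(x) and
    J(C) = sum_i h_i c_i^2 with h_i = 1/(2i+1) by orthogonality.
    """
    basis = [legendre_monomial(i) for i in range(n + 1)]
    h = [1.0 / (2 * i + 1) for i in range(n + 1)]
    v = [frac_int(p, alpha, 1.0) for p in basis]            # boundary condition: sum_i c_i v_i = 1
    # Stationarity of J(C) + lam * (C^T v - 1):  2 h_i c_i + lam v_i = 0  and  C^T v = 1.
    size = n + 2
    m = [[0.0] * size for _ in range(size)]
    for i in range(n + 1):
        m[i][i] = 2.0 * h[i]
        m[i][n + 1] = v[i]
        m[n + 1][i] = v[i]
    sol = solve_linear(m, [0.0] * (n + 1) + [1.0])
    c = sol[: n + 1]
    J = sum(hi * ci * ci for hi, ci in zip(h, c))

    def y(x):
        return sum(ci * frac_int(p, alpha, x) for ci, p in zip(c, basis))

    return c, J, y


if __name__ == "__main__":
    c, J, y = ritz_toy(0.8, 5)
    print("J =", J, " y(0.5) =", y(0.5), " y(1) =", y(1.0))
```

For $\alpha=1$ the sketch returns $y(x)=x$ and $J=1$. This is the exact minimiser of $\int_0^1 y'(x)^2dx$ with these boundary values.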

5. Error Analysis

The upper bound of error for the operational matrix of fractional integration of a Jacobi polynomial of the ith degree is given as

From Equation (36), we can write

Taking the integral operator of order on both sides of Equation (3), we get

From the construction of the operational matrix we can write

Using Theorem 1 we can write

From Equations (37)–(39), we get

Using Equation (40) in Equation (41), we obtain the error bound for the operational matrix of integration of an ith-degree polynomial, which is given as

Now, in particular cases, the error bounds for different orthogonal polynomials are given as follows.

Case 1: For Legendre polynomials (S1) the error bound is given as

Case 2: For Chebyshev polynomials of the first kind (S2) the error bound is given as

Case 3: For Chebyshev polynomials of the third kind (S3) the error bound is given as

Case 4: For Chebyshev polynomials of the fourth kind (S4) the error bound is given as

Case 5: For Gegenbauer polynomials (S5) the error bound is given as

Let denote the error vector for the operational matrix of integration of order obtained by using orthogonal polynomials in ; then

From Theorems 1 and 2 and from Equations (43)–(47), it is clear that, as $n\to\infty$, the error vector in Equation (48) tends to zero.
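The decay of the error vector can also be observed numerically. Assume the operational matrix is the orthogonal projection of $I^{\alpha}P_i$ onto $\mathrm{span}\{P_0,\dots,P_n\}$, in the shifted Legendre case with weight 1. By Pythagoras, the residual of row $i$ is then $\|I^{\alpha}P_i-\sum_jP^{(\alpha)}_{ij}P_j\|^2=\|I^{\alpha}P_i\|^2-\sum_{j\le n}(2j+1)\langle I^{\alpha}P_i,P_j\rangle^2$. Every integral involved reduces to $\int_0^1x^{p}dx=1/(p+1)$. The sketch prints this residual for a fixed row as $n$ grows; the choice $\alpha=0.5$, $i=1$ is arbitrary. Because the approximation spaces are nested, the residual can only decrease. For non-integer $\alpha$ the decay is typically algebraic rather than exponential, because of the $x^{k+\alpha}$ behaviour at the origin.

```python
import math


def legendre_monomial(i):
    """Monomial coefficients of the shifted Legendre polynomial P_i on [0, 1]."""
    return [(-1) ** (i - k) * math.comb(i, k) * math.comb(i + k, k) for k in range(i + 1)]


def gain(k, alpha):
    """I^alpha x^k = gain(k, alpha) * x^(k + alpha)."""
    return math.exp(math.lgamma(k + 1) - math.lgamma(k + 1 + alpha))


def row_residuals(alpha, i, n_max):
    """L2 residual of row i of the projected operational matrix, for n = 0, ..., n_max."""
    ci = legendre_monomial(i)
    # ||I^alpha P_i||^2 = sum_{k,k'} c_k c_k' g_k g_k' / (k + k' + 2 alpha + 1)
    norm2 = sum(u * gain(k, alpha) * w * gain(kk, alpha) / (k + kk + 2 * alpha + 1)
                for k, u in enumerate(ci) for kk, w in enumerate(ci))
    out, captured = [], 0.0
    for j in range(n_max + 1):
        cj = legendre_monomial(j)
        proj = sum(u * gain(k, alpha) * w / (k + l + alpha + 1)
                   for k, u in enumerate(ci) for l, w in enumerate(cj))   # <I^alpha P_i, P_j>
        captured += (2 * j + 1) * proj * proj                             # proj^2 / h_j, h_j = 1/(2j+1)
        out.append(math.sqrt(max(norm2 - captured, 0.0)))
    return out


if __name__ == "__main__":
    for n, r in enumerate(row_residuals(0.5, 1, 8)):
        print(n, r)
```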

6. Convergence Analysis

A set of orthogonal polynomials on [0, 1] forms a basis for . Let be the n-dimensional subspace of generated by . Thus, every function in can be written as a linear combination of the orthogonal polynomials . The scalars in the linear combination can be chosen in such a way that the functional is minimized. Let the minimum value of the functional on the space be denoted by . From the construction of and , it is clear that and .
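The monotonicity of the minima over nested subspaces, and their convergence to the true minimum, can be seen on a hypothetical quadratic model problem that is not one of the paper's examples. The problem is to minimise $\int_0^1(D^{\alpha}y)^2dx$ with $y(0)=0$, $y(1)=1$, Caputo $D^{\alpha}$ and $\tfrac12<\alpha\le1$. Writing $u=D^{\alpha}y$, the constraint becomes $\langle u,k\rangle=1$ with $k(t)=(1-t)^{\alpha-1}/\Gamma(\alpha)$. The exact minimum is therefore $1/\|k\|^2=(2\alpha-1)\Gamma(\alpha)^2$. Over the span of the shifted Legendre polynomials $P_0,\dots,P_n$, the minimum is $J_n=1/\sum_{i\le n}(2i+1)v_i^2$ with $v_i=(I^{\alpha}P_i)(1)$. The sketch evaluates both quantities under these assumptions; the names are hypothetical.

```python
import math


def legendre_monomial(i):
    """Monomial coefficients of the shifted Legendre polynomial P_i on [0, 1]."""
    return [(-1) ** (i - k) * math.comb(i, k) * math.comb(i + k, k) for k in range(i + 1)]


def boundary_value(i, alpha):
    """v_i = (I^alpha P_i)(1) = sum_k c_k * k! / Gamma(k + 1 + alpha)."""
    return sum(c * math.exp(math.lgamma(k + 1) - math.lgamma(k + 1 + alpha))
               for k, c in enumerate(legendre_monomial(i)))


def ritz_minima(alpha, n_max):
    """J_n = 1 / sum_{i<=n} (2i+1) v_i^2 for n = 0, ..., n_max (minimum over span{P_0, ..., P_n})."""
    out, s = [], 0.0
    for i in range(n_max + 1):
        s += (2 * i + 1) * boundary_value(i, alpha) ** 2
        out.append(1.0 / s)
    return out


def exact_minimum(alpha):
    """1 / ||k||^2 = (2 alpha - 1) Gamma(alpha)^2, valid for 1/2 < alpha <= 1."""
    return (2 * alpha - 1) * math.gamma(alpha) ** 2


if __name__ == "__main__":
    alpha = 0.8
    for n, Jn in enumerate(ritz_minima(alpha, 8)):
        print(n, Jn, Jn - exact_minimum(alpha))
```

The printed gaps $J_n-J$ are non-negative and shrink as $n$ grows, mirroring the argument of this section.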

Theorem 4.

Consider the functional, then

Proof .

Using Equation (48) in Equation (23), we have

Taking and using Equations (25)–(27) and (48) in Equation (49), we get

where

and is the error term of the functional.

Using Equations (30) and (32) in Equation (50), we get

where

Solving Equation (51) similarly to the original functional, Equation (51) reduces to the following form:

Using Equation (48) in Equation (34), we get

Similar to above, by using the Rayleigh-Ritz method on Equation (53) with the boundary condition in Equation (54) we obtain the extreme value of the functional defined in Equation (53). Let this extreme value be denoted by .

Now, from Equation (48), it is obvious that as which implies that So, it is clear that as , the functional in Equation (53) comes close to the functional in Equation (23) and the boundary condition in Equation (54) comes close to Equation (34).

So, for large values of

From Theorem 4 and Equation (55), we conclude that

Proof completed. □

7. Numerical Results and Discussions

In this section, we test the accuracy of the method on numerical examples. We apply the numerical algorithm to two test problems, using each of the orthogonal polynomials in turn as the basis. The results for the test problems are presented in figures and tables.

Example 1.

Consider a non-linear fractional variational problem as in Equation (1) with; we then have the following non-linear fractional variational problem [19]:

under the constraints

The exact solution of the above equation is given as

We discuss this example for different values of or 1, , and

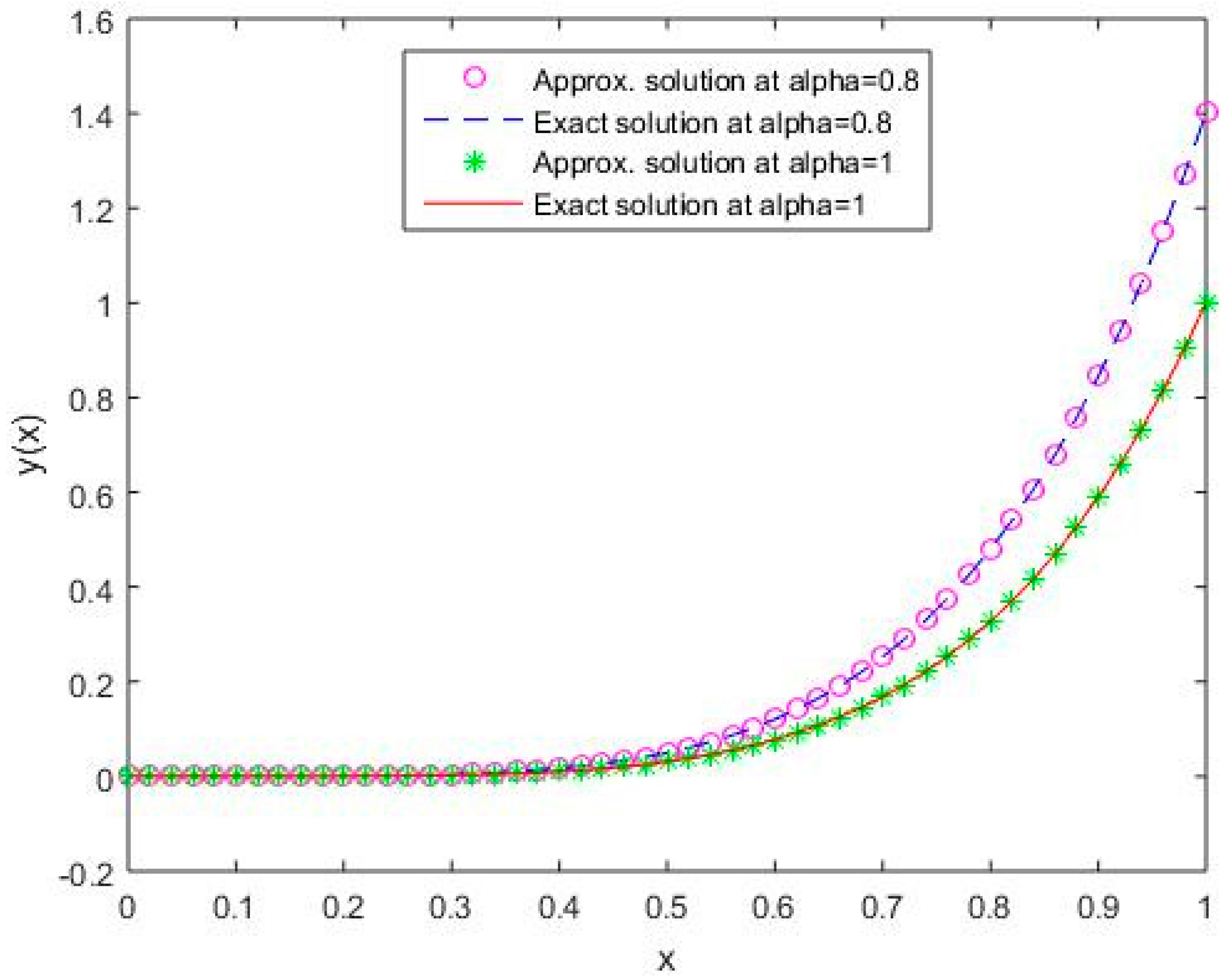

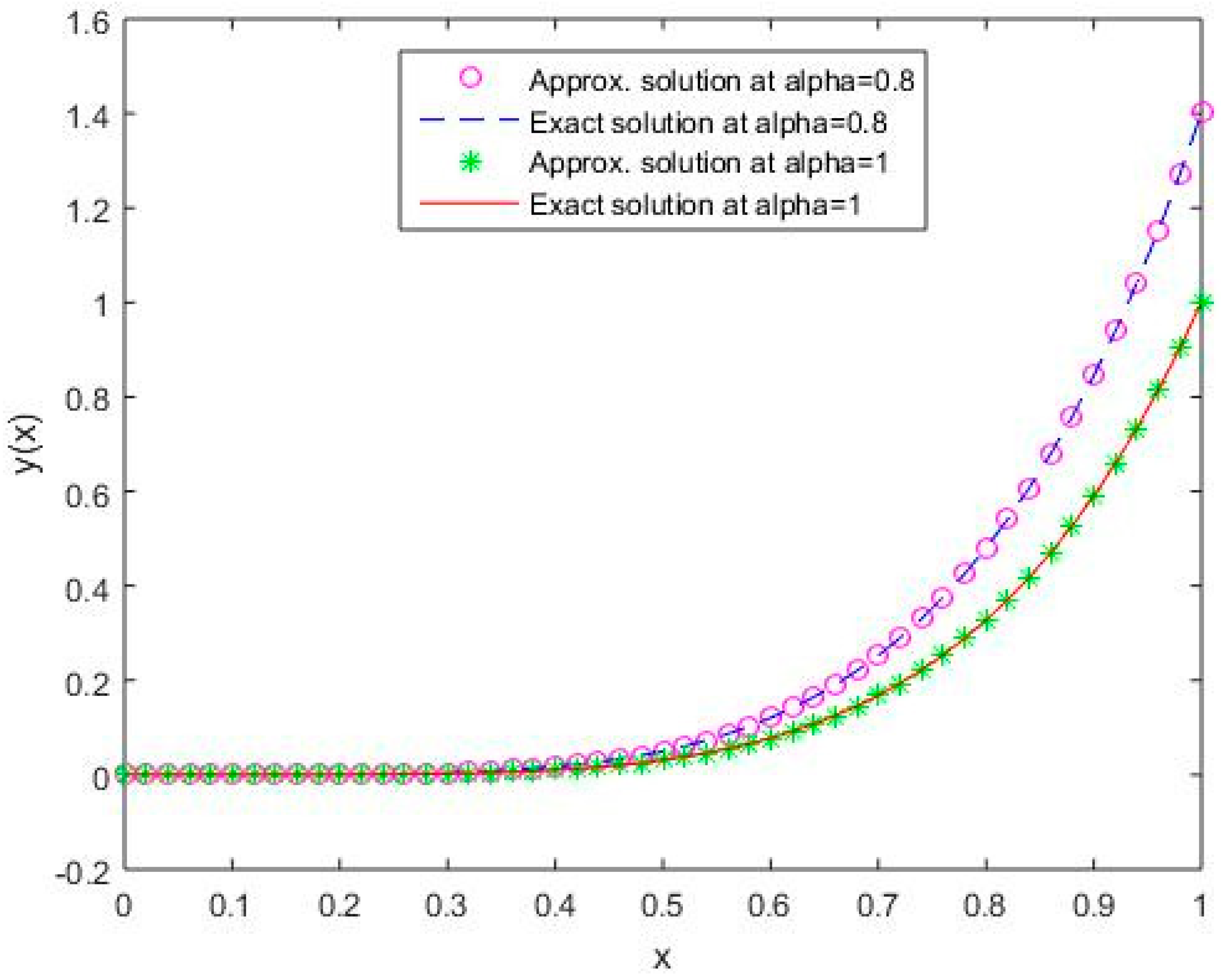

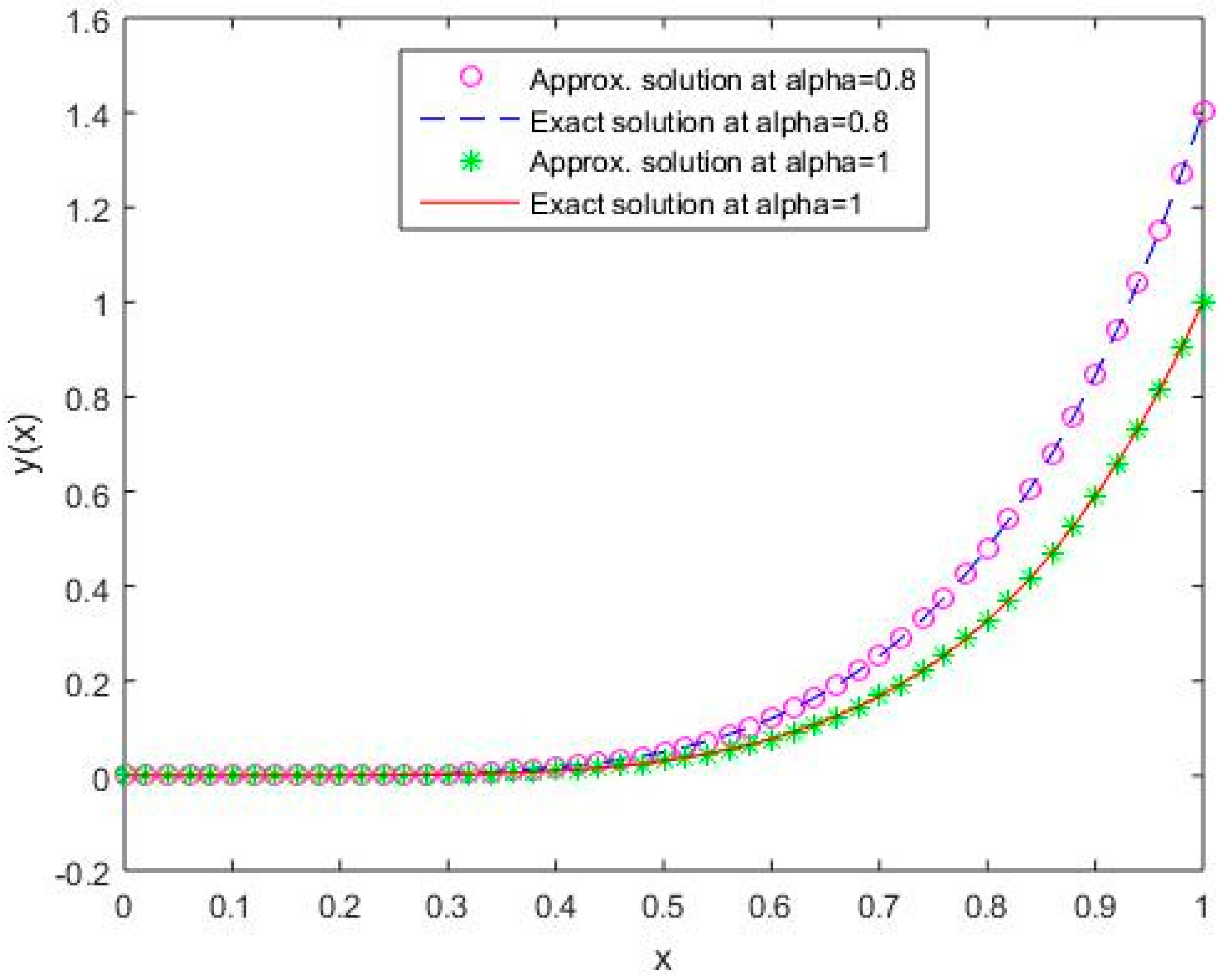

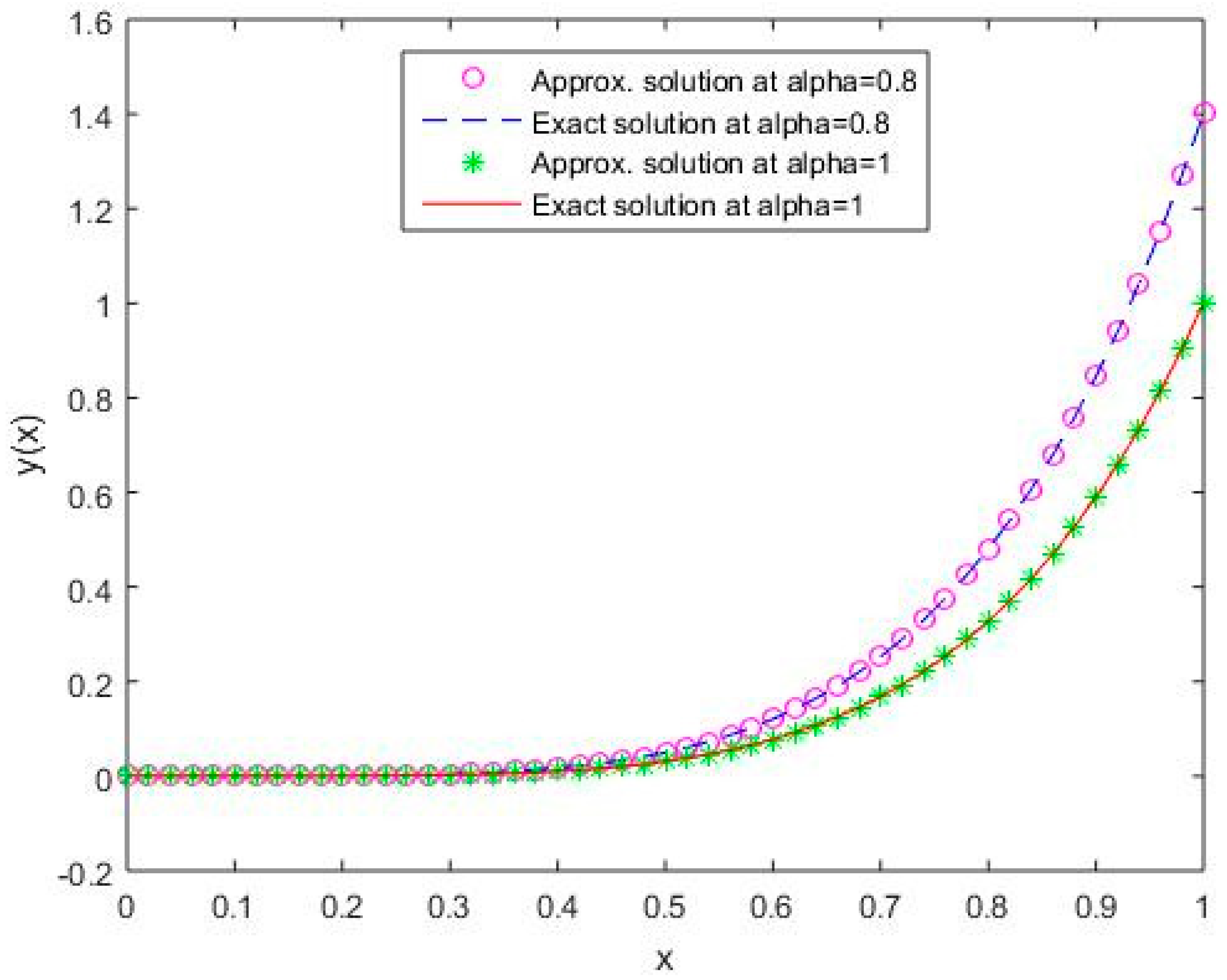

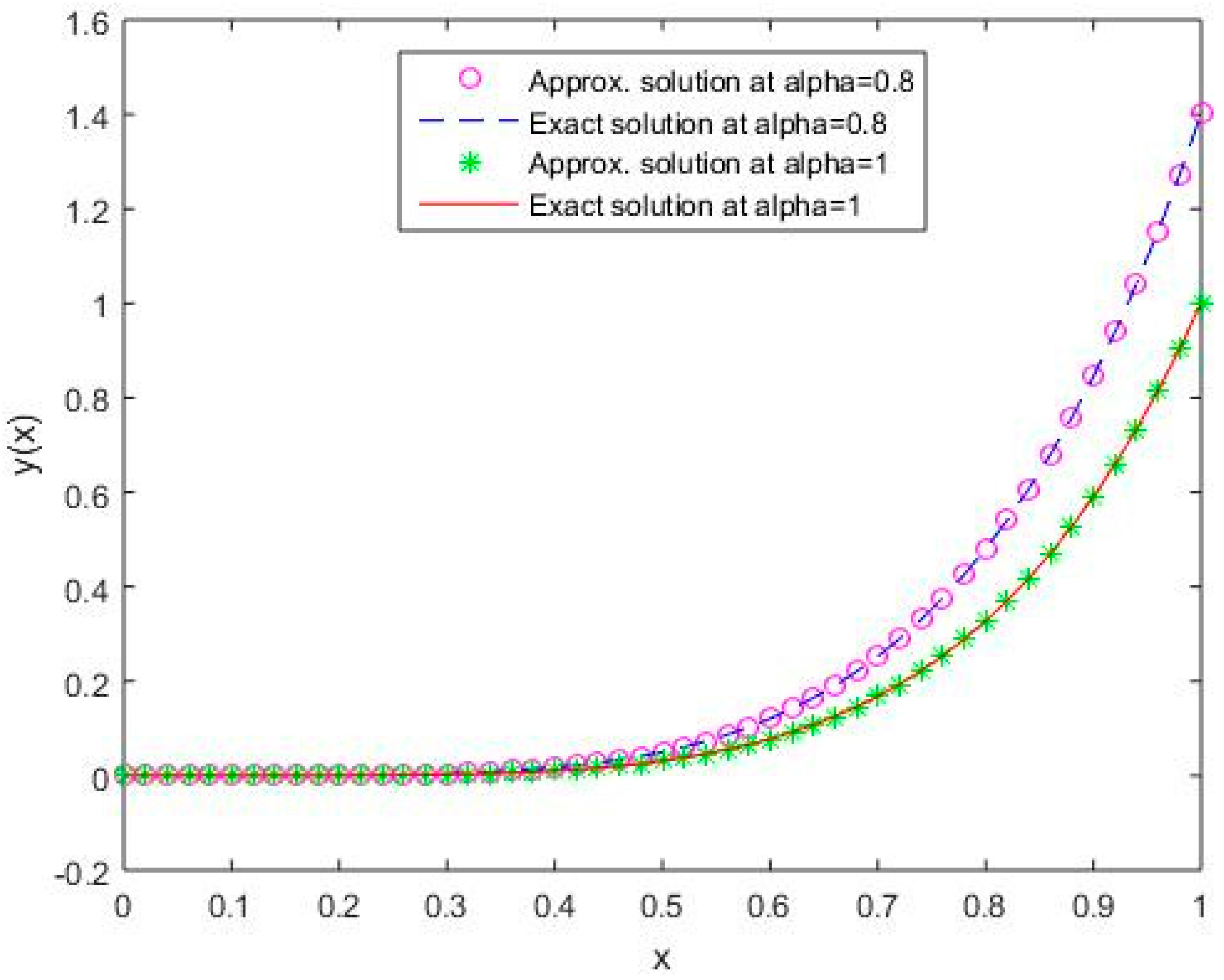

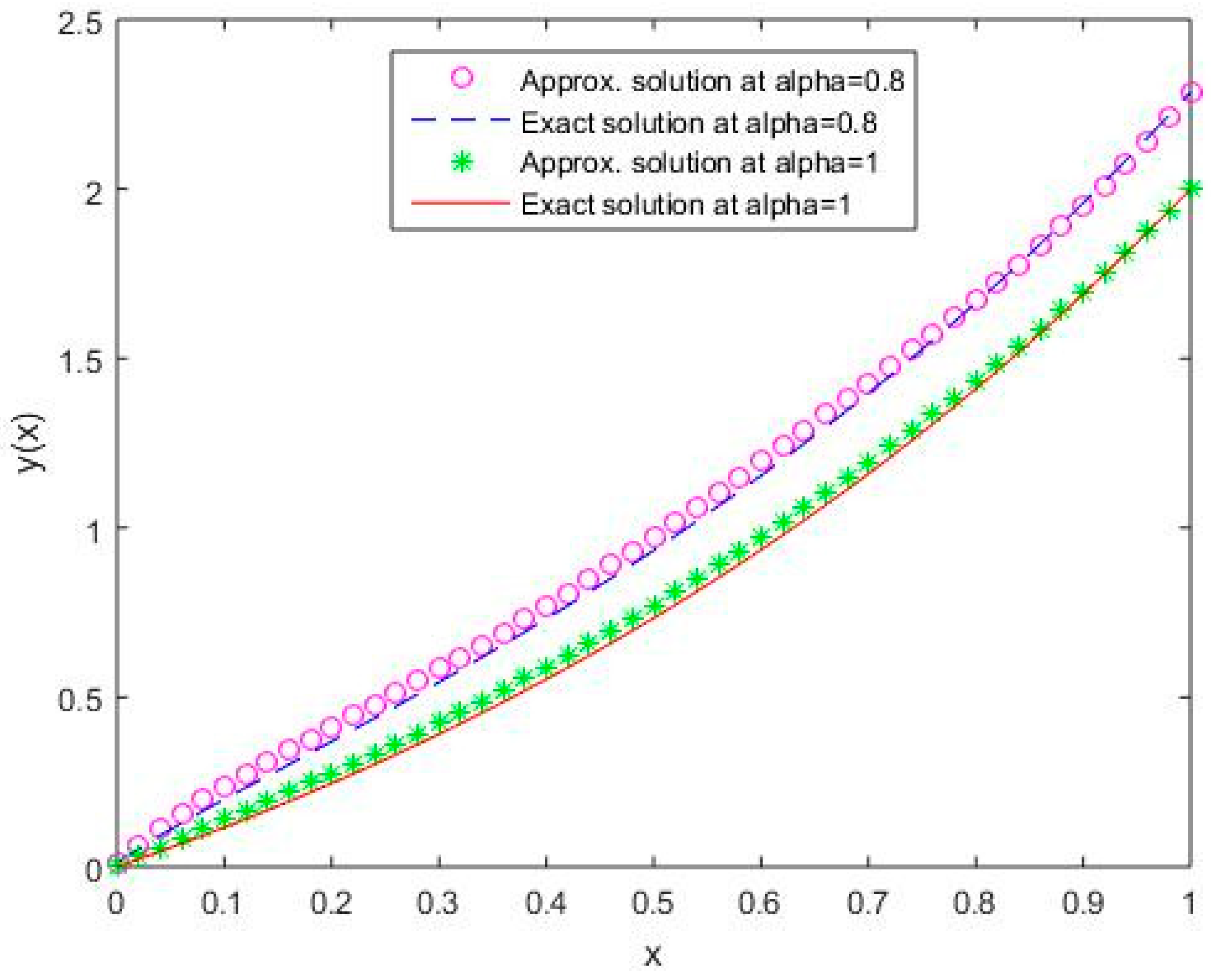

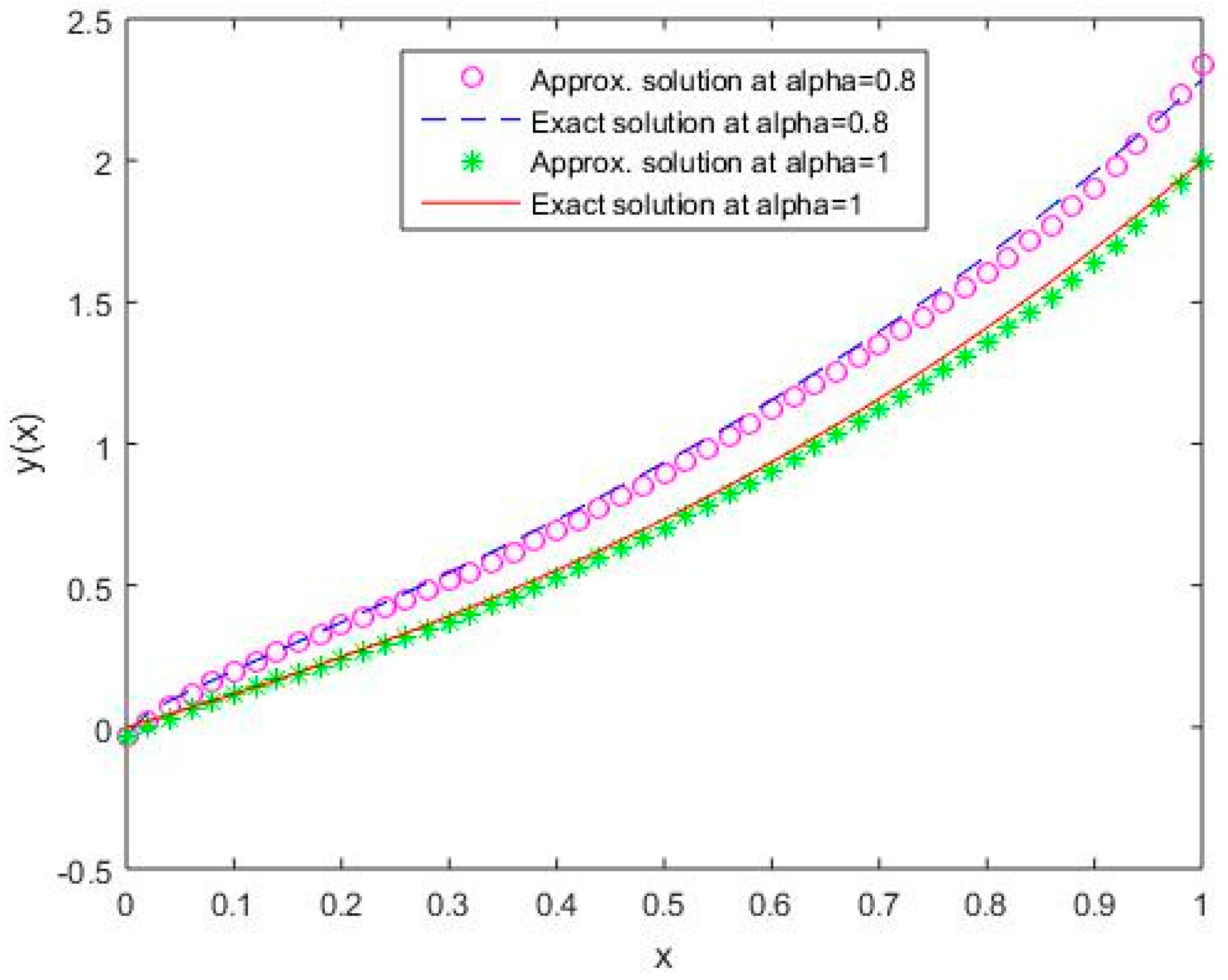

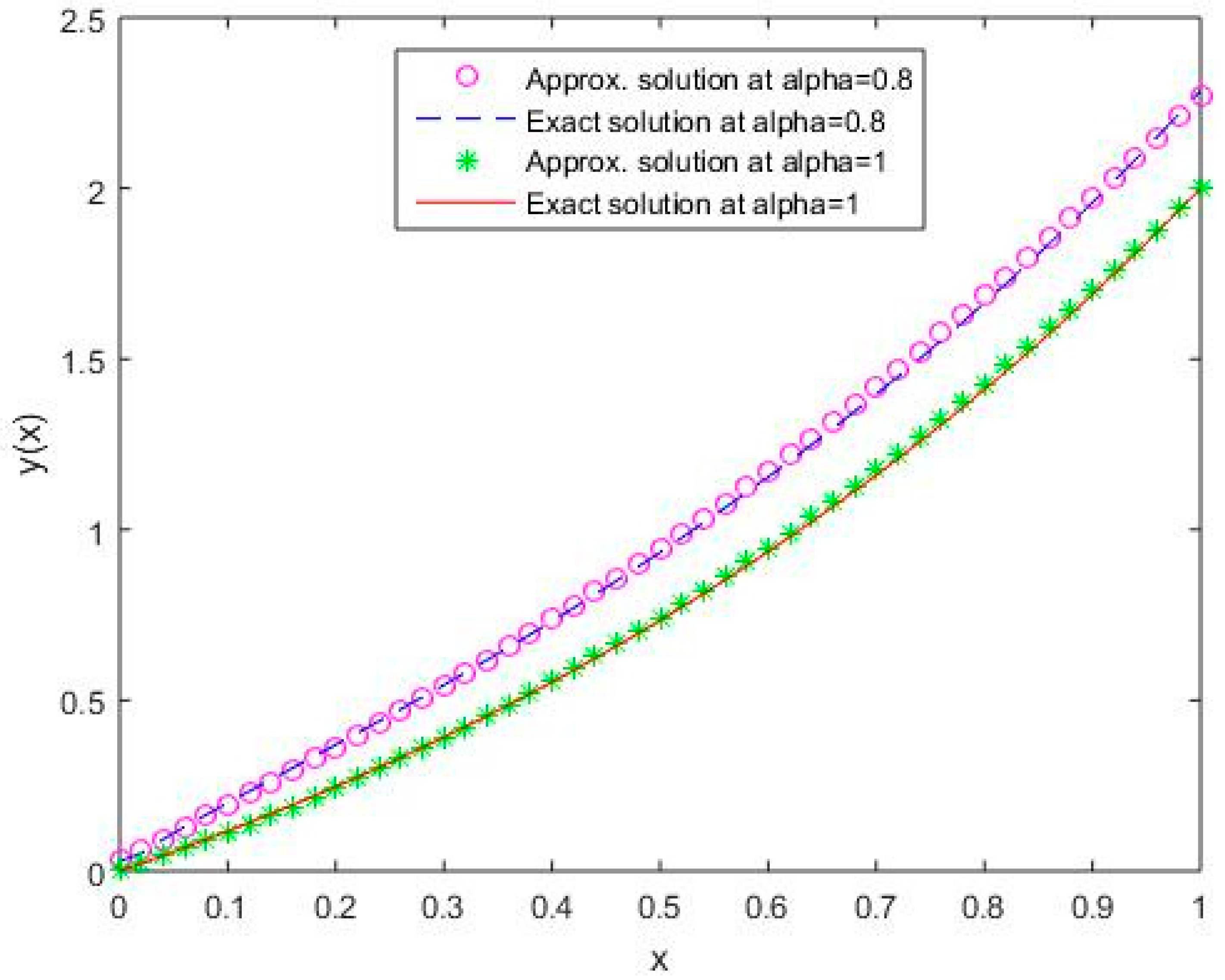

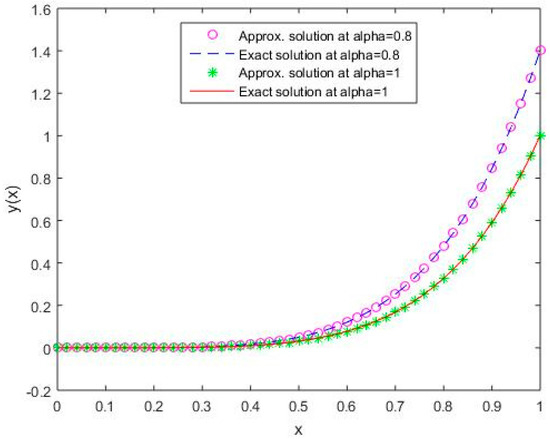

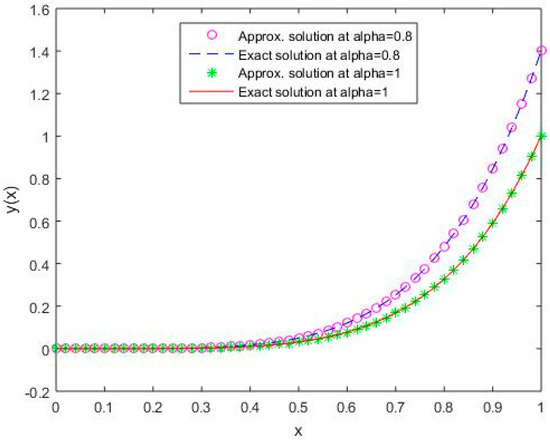

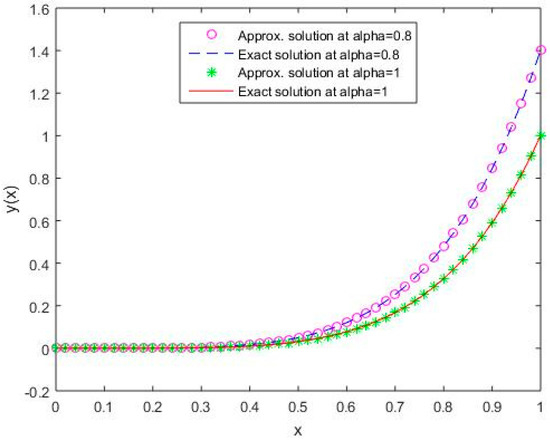

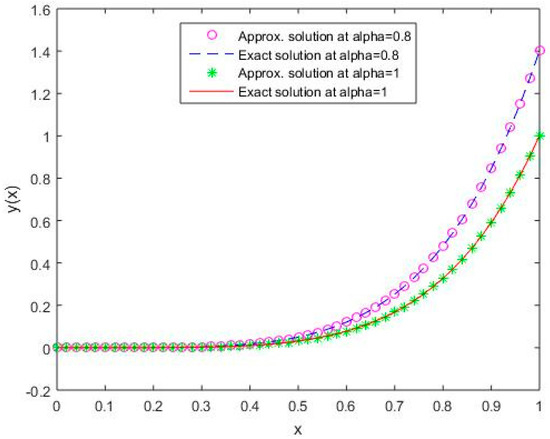

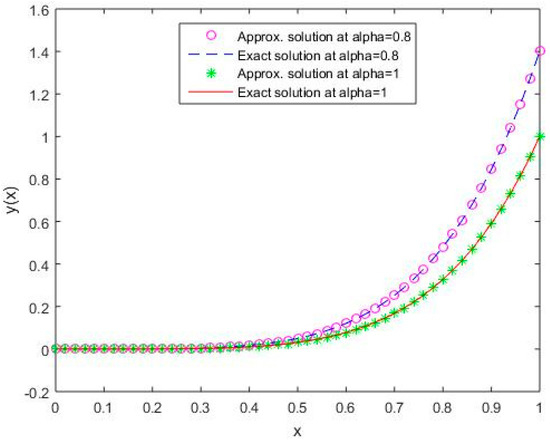

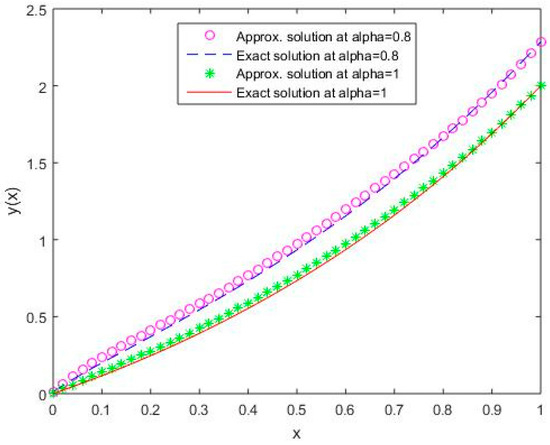

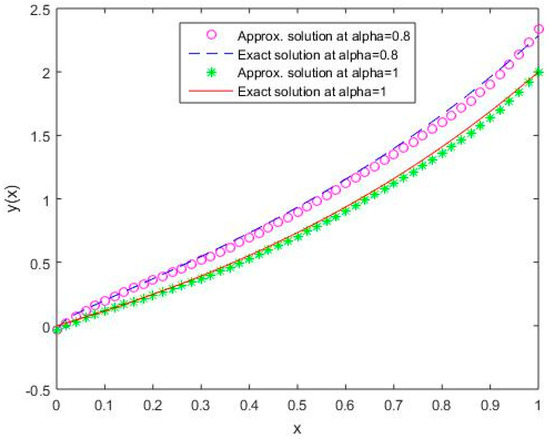

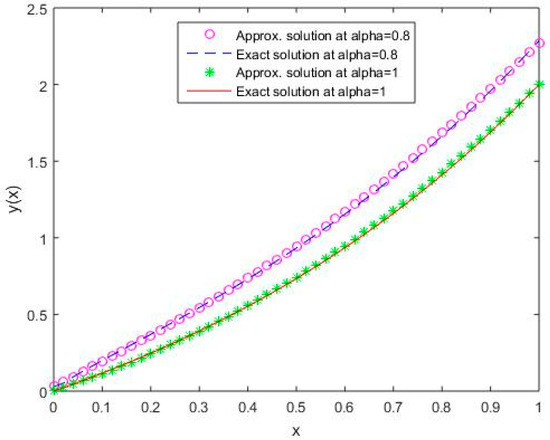

In Figure 1, Figure 2, Figure 3, Figure 4 and Figure 5, it is shown that the solutions for the two different values of coincide with the exact solutions for different orthogonal polynomials at n = 5.

Figure 1.

Comparison of exact and numerical solutions using S1 for , Example 1.

Figure 2.

Comparison of exact and numerical solutions using S2 for , Example 1.

Figure 3.

Comparison of exact and numerical solutions using S3 for , Example 1.

Figure 4.

Comparison of exact and numerical solutions using S4 for , Example 1.

Figure 5.

Comparison of exact and numerical solutions using S5 for , Example 1.

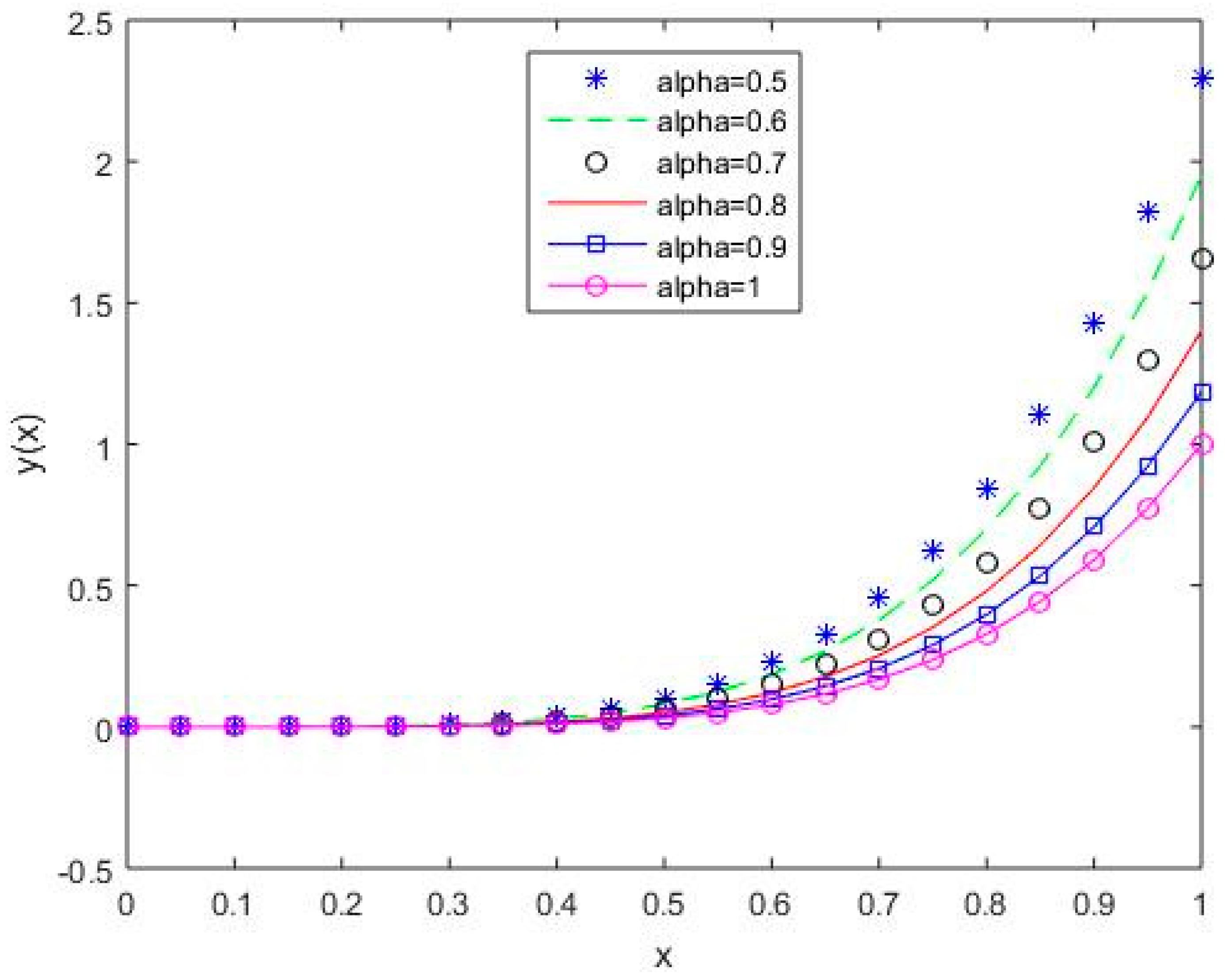

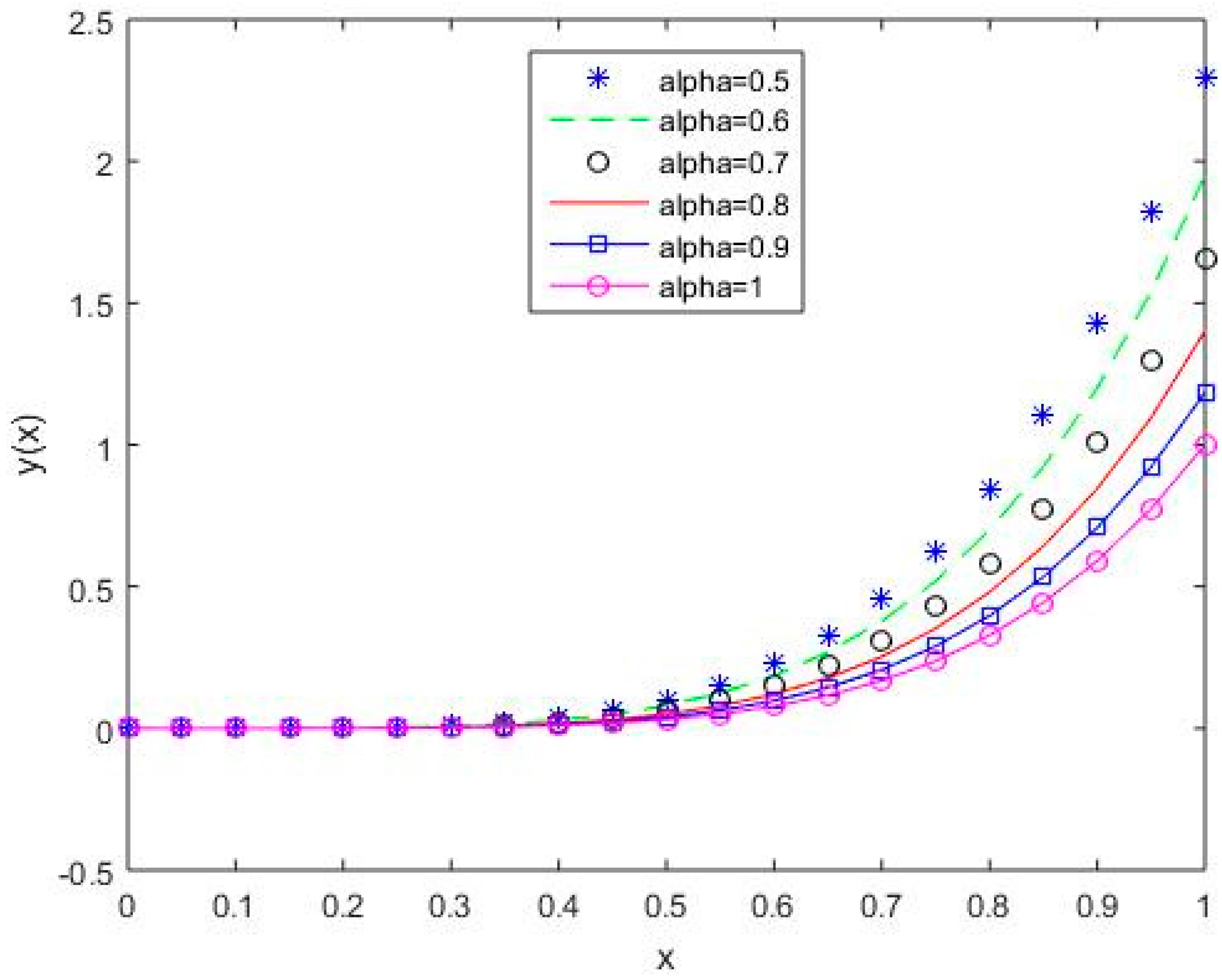

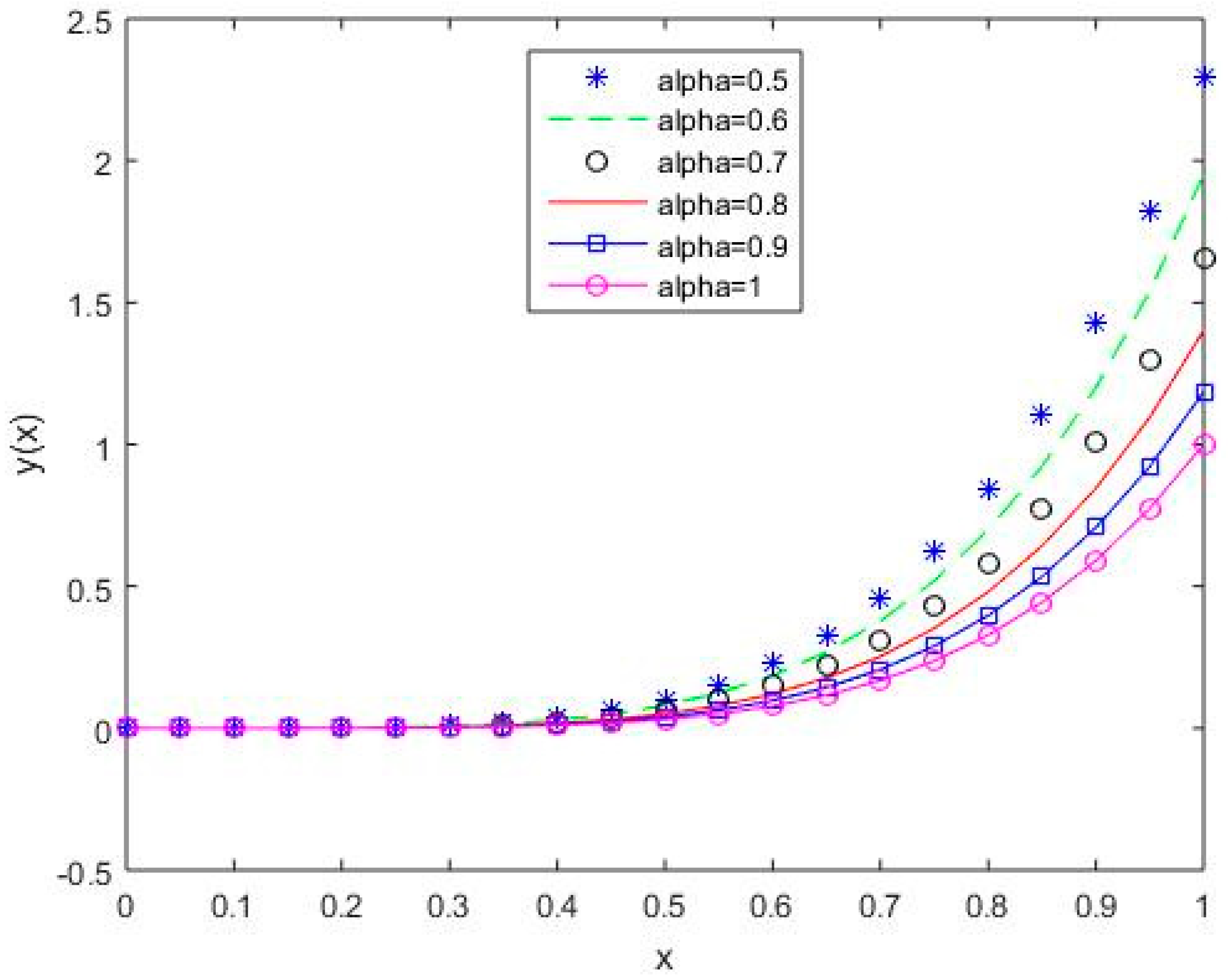

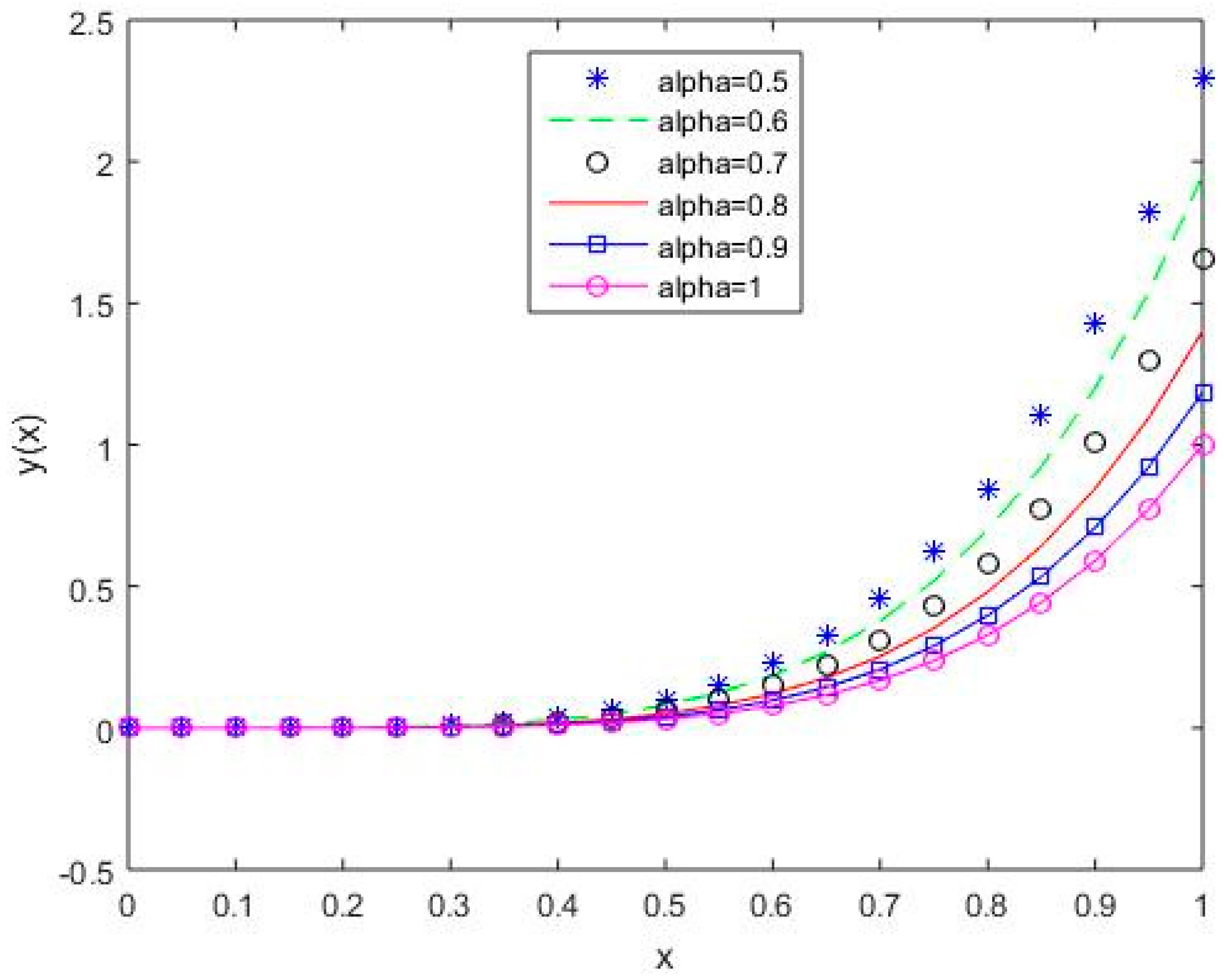

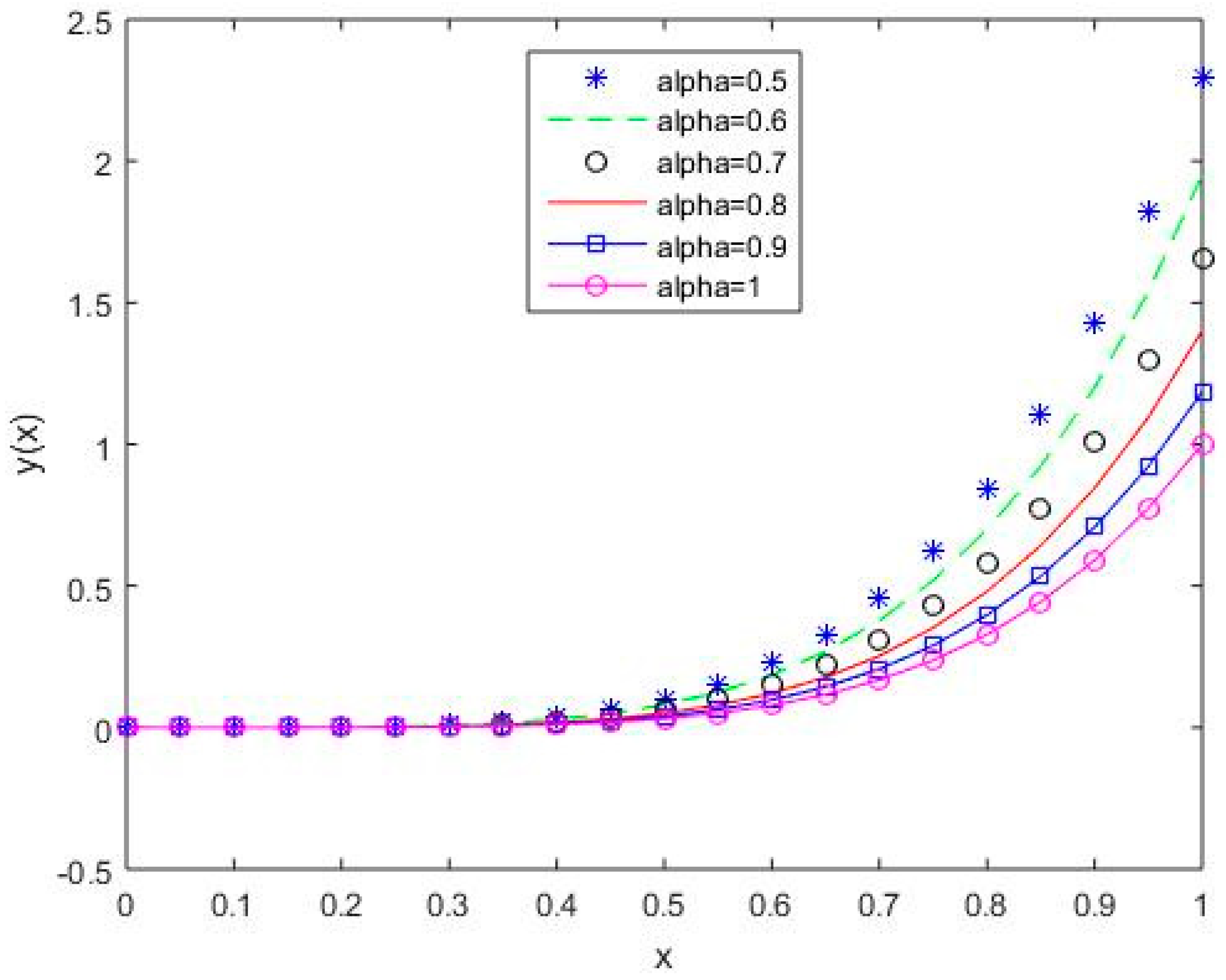

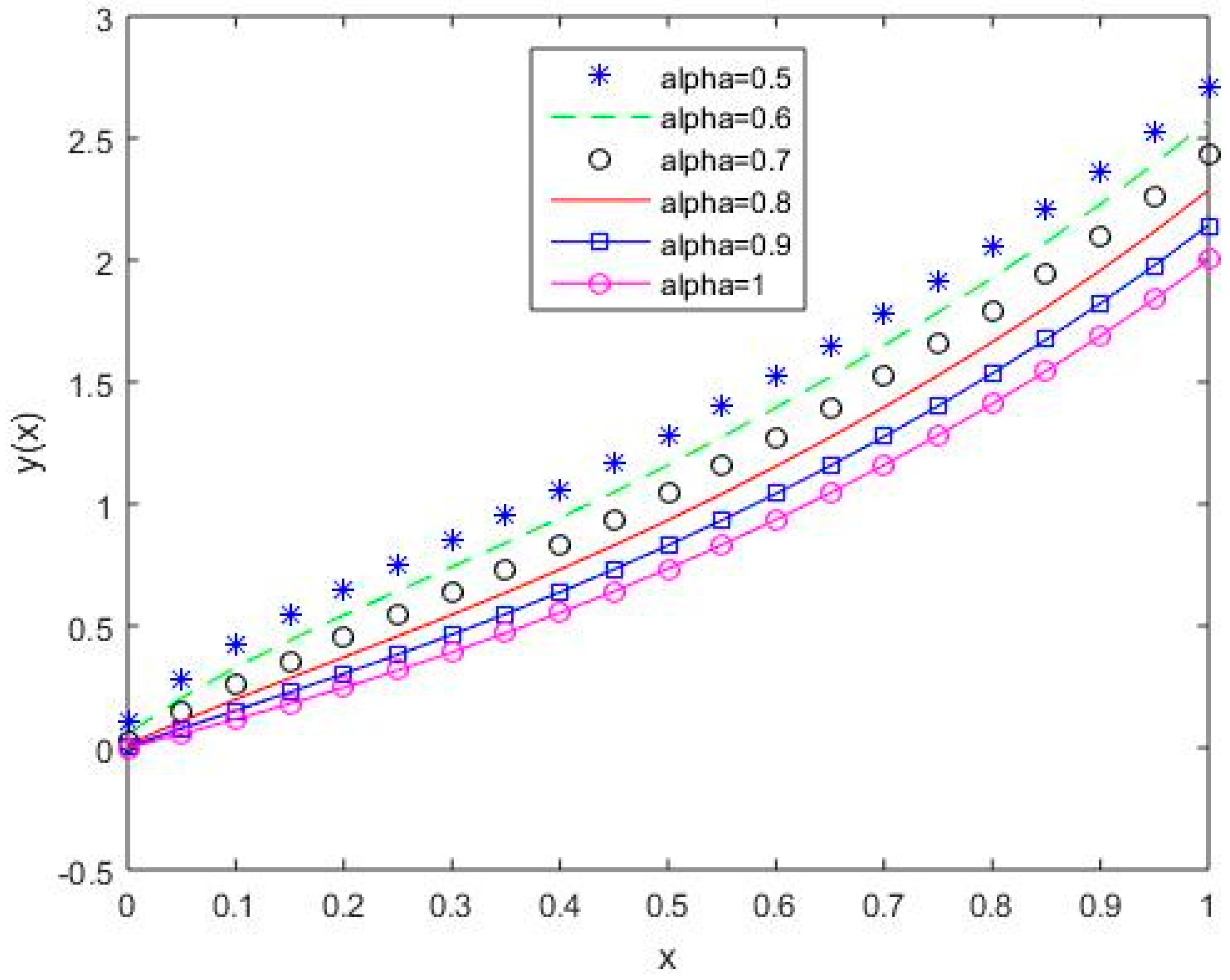

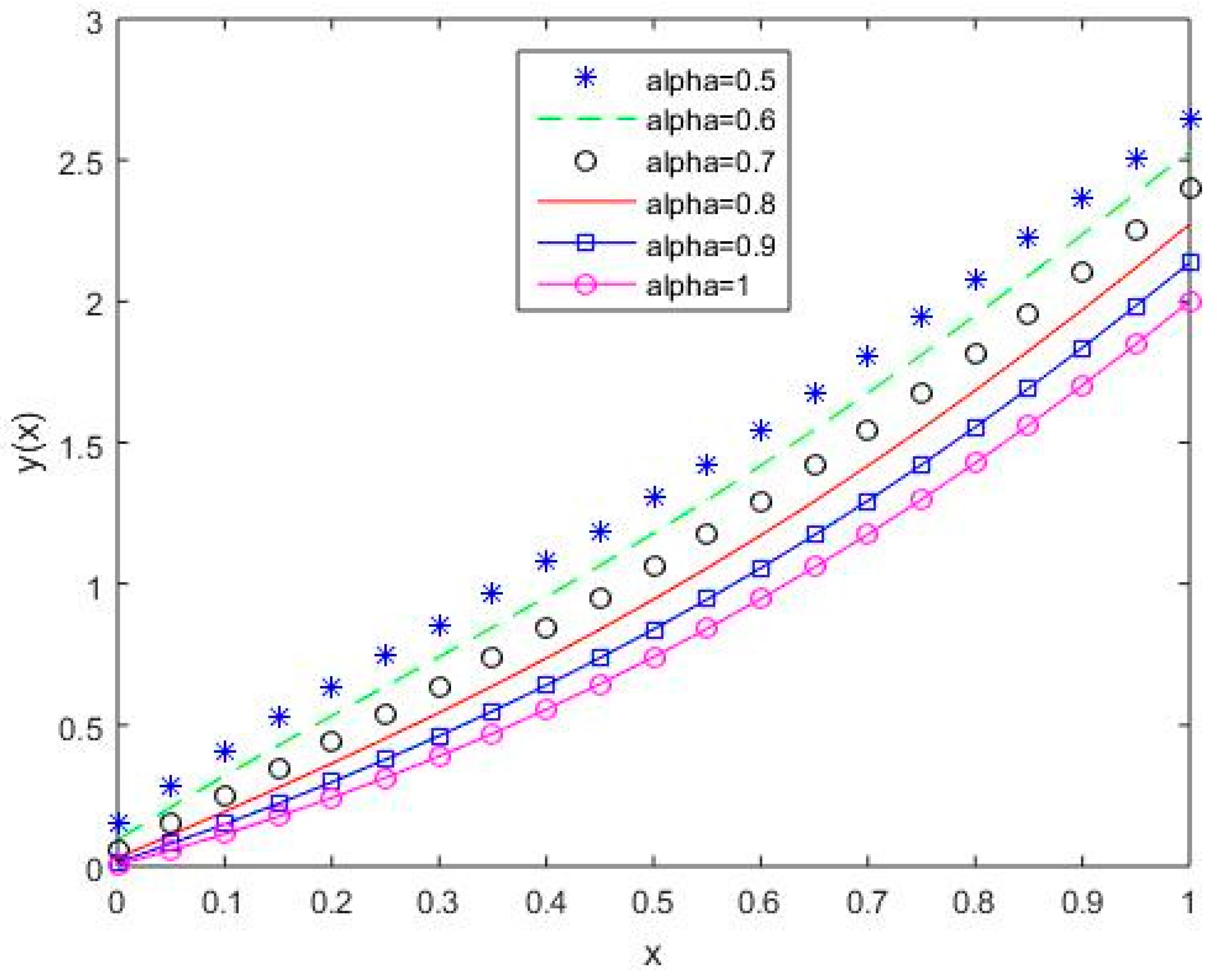

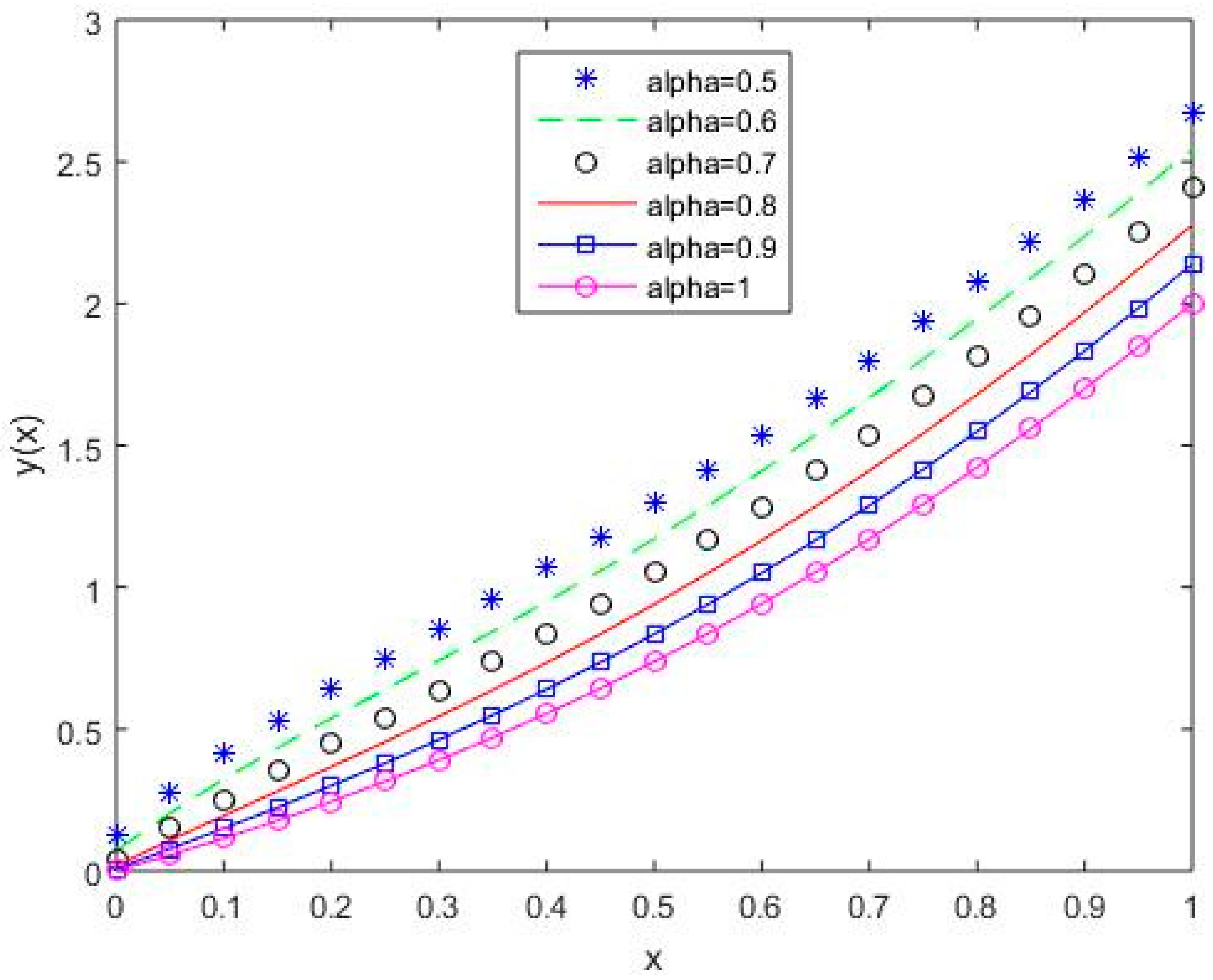

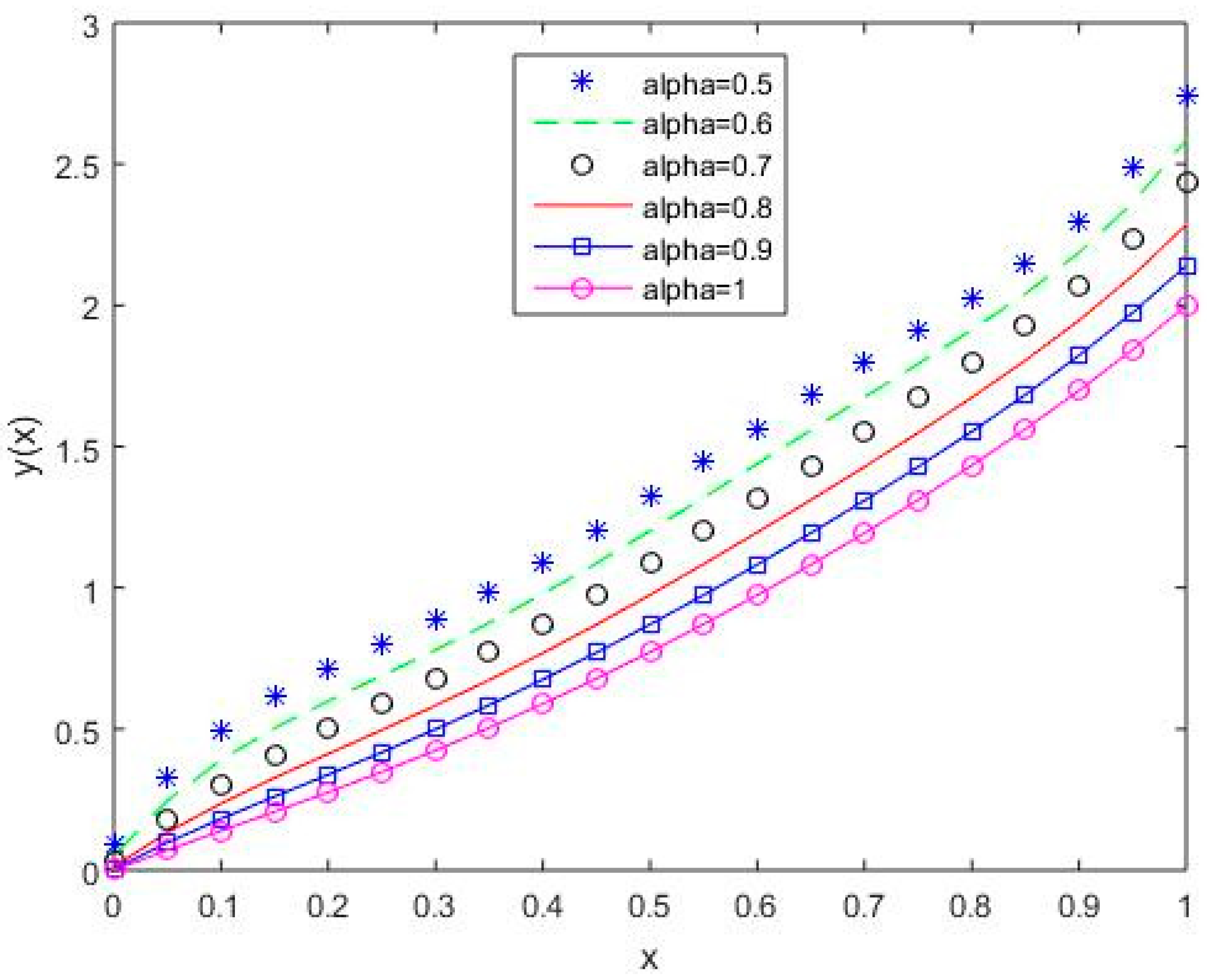

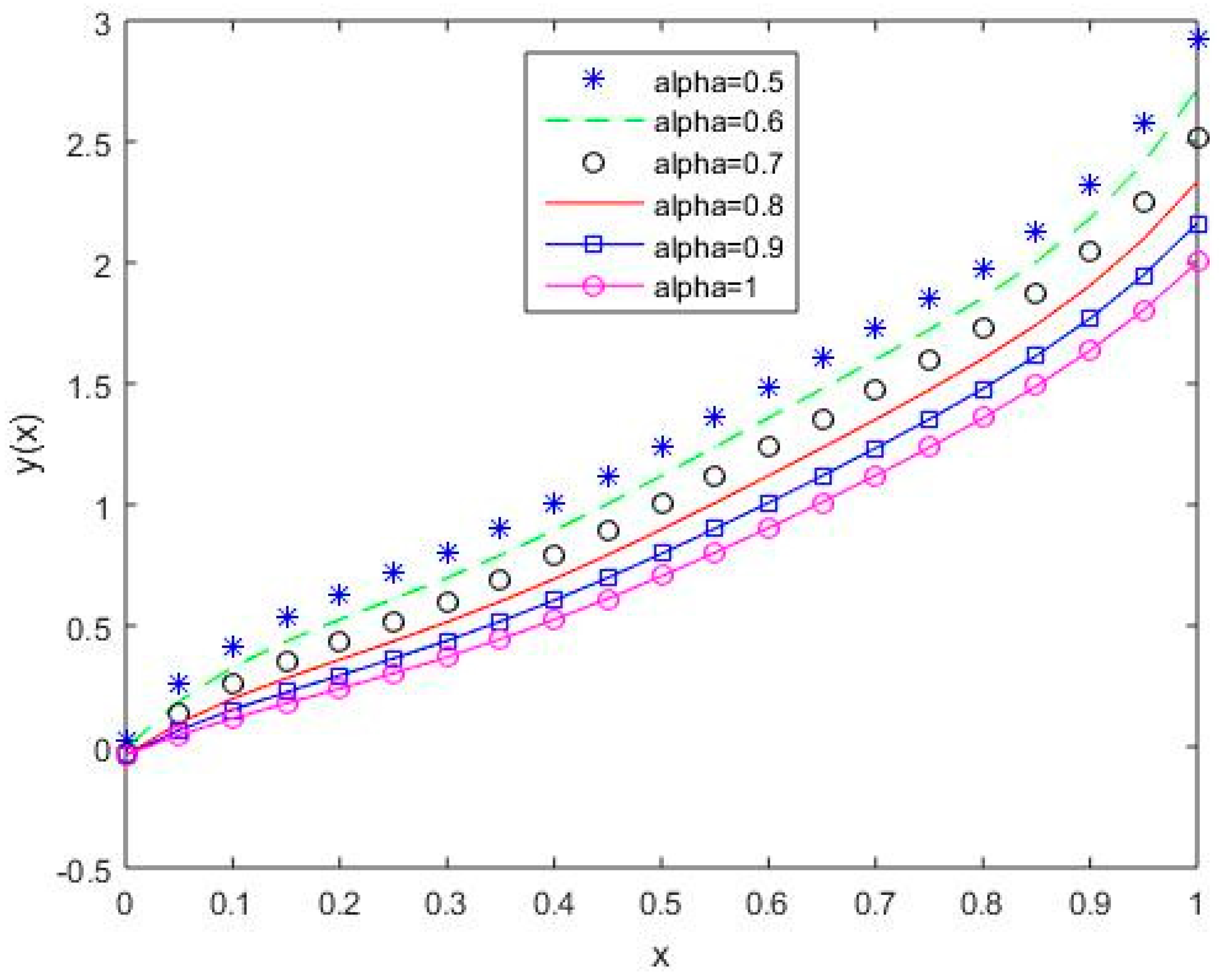

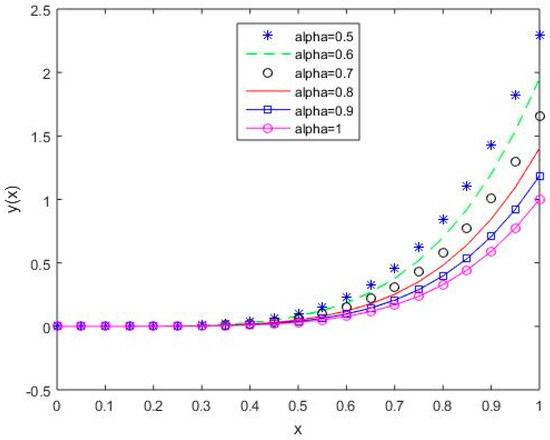

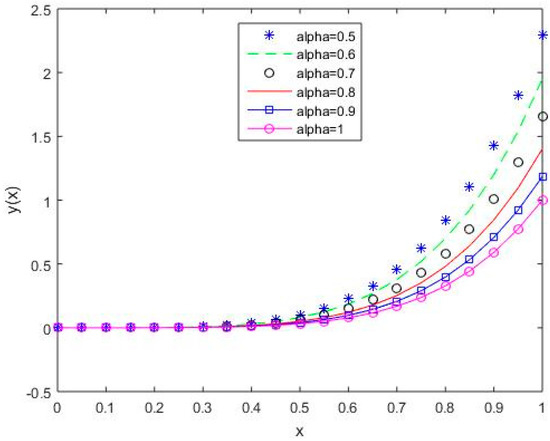

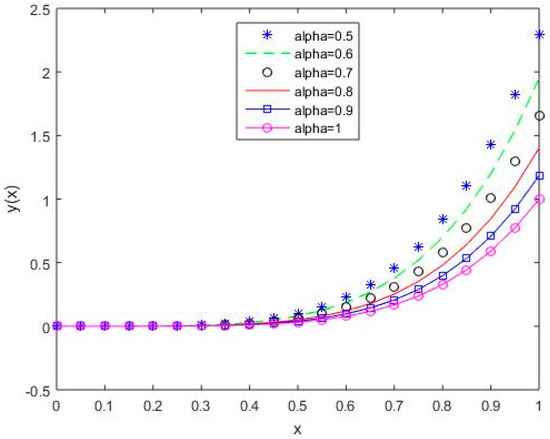

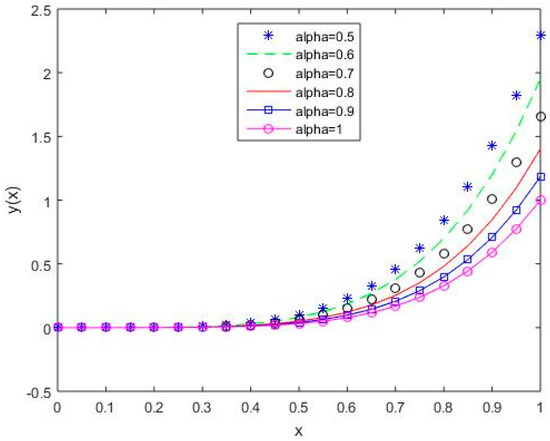

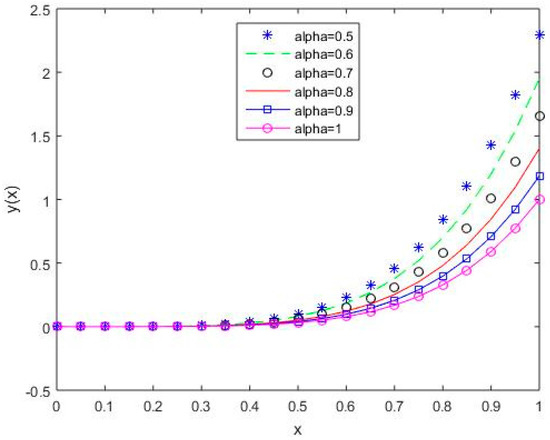

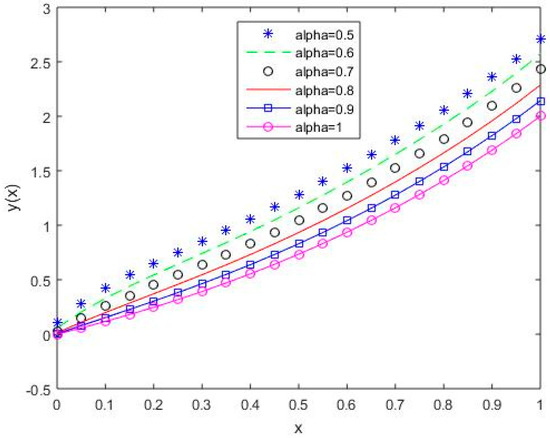

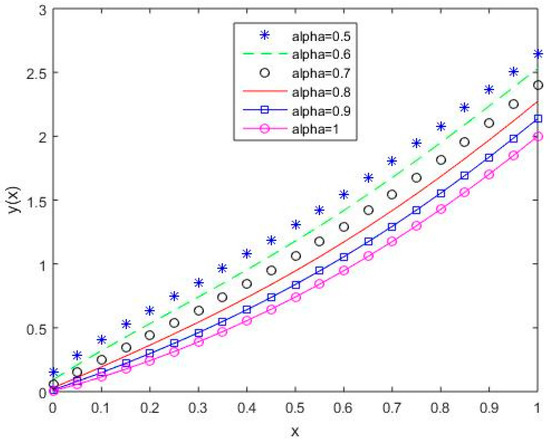

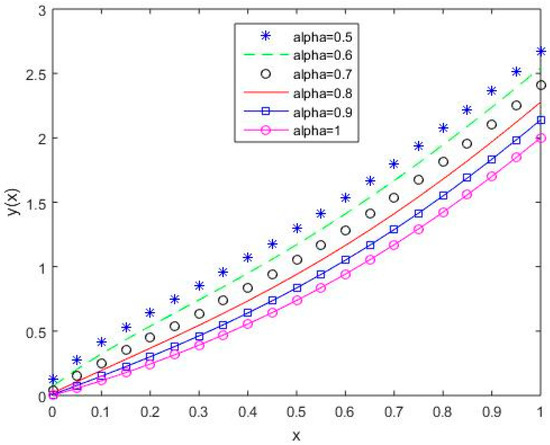

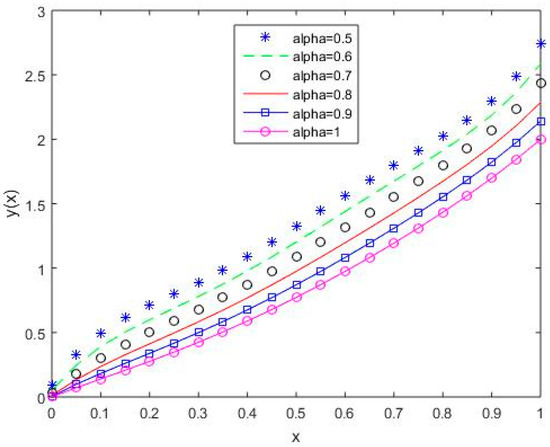

Figure 6, Figure 7, Figure 8, Figure 9 and Figure 10 show that the solution varies continuously with the fractional order for Shifted Legendre polynomials, Shifted Chebyshev polynomials of the first kind, Shifted Chebyshev polynomials of the third kind, Shifted Chebyshev polynomials of the fourth kind, and Gegenbauer polynomials, respectively.

Figure 6.

The behavior of solutions using S1 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 1.

Figure 7.

The behavior of solutions using S2 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 1.

Figure 8.

The behavior of solutions using S3 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 1.

Figure 9.

The behavior of solutions using S4 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 1.

Figure 10.

The behavior of solutions using S5 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 1.

In Table 1, we list the maximum absolute errors (MAE) and root-mean-square errors (RMSE) for Example 1 for n = 2 and n = 6.

Table 1.

Result comparison of Example 1 for different orthogonal polynomials at different values of n.

Table 1 compares the results for the different polynomials: the results for Shifted Legendre polynomials and Gegenbauer polynomials are better than those for the other polynomials. The MAE and RMSE also decrease as n increases.
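The two error measures reported in Tables 1 and 2 can be computed as sketched below. The paper does not state the evaluation points at this point, so the uniform grid and its size are assumptions. The approximate and exact solutions are passed in as plain Python callables, and the function names are hypothetical.

```python
import math


def uniform_grid(m):
    """m equally spaced points on [0, 1] (an assumed evaluation grid)."""
    return [k / (m - 1) for k in range(m)]


def mae_rmse(approx, exact, points):
    """Maximum absolute error and root-mean-square error of approx against exact on the given points."""
    errs = [abs(approx(x) - exact(x)) for x in points]
    mae = max(errs)
    rmse = math.sqrt(sum(e * e for e in errs) / len(errs))
    return mae, rmse


if __name__ == "__main__":
    print(mae_rmse(lambda x: x * x, lambda x: x, uniform_grid(11)))
```

The MAE is driven by the worst single point, whereas the RMSE averages over the grid. This is why both are worth reporting when comparing bases.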

Example 2.

Consider a non-linear fractional variational problem as in Equation (1) with; we then have the following non-linear fractional variational problem [19]:

under the constraints

The exact solution of the above equation is given as

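where $E_{\alpha,\beta}(z)$ is the Mittag-Leffler function of order $\alpha$ and $\beta$, defined as

$$E_{\alpha,\beta}(z)=\sum_{k=0}^{\infty}\frac{z^{k}}{\Gamma(\alpha k+\beta)},\qquad\alpha>0.$$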
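An exact solution involving $E_{\alpha,\beta}$ can be evaluated with a truncated power series. This sketch is adequate for the moderate arguments that arise on [0, 1]. For large $|z|$ the plain series loses accuracy (and may overflow), and specialised algorithms are preferable. It assumes $\alpha>0$ and $\beta>0$, and the function name is our own. The series is checked against the classical identities $E_{1,1}(z)=e^{z}$, $E_{2,1}(-z^{2})=\cos z$ and $E_{1/2,1}(z)=e^{z^{2}}\operatorname{erfc}(-z)$.

```python
import math


def mittag_leffler(z, alpha, beta=1.0, tol=1e-16, max_terms=1000):
    """Two-parameter Mittag-Leffler function E_{alpha,beta}(z) = sum_k z^k / Gamma(alpha k + beta).

    Plain truncated series; assumes alpha > 0, beta > 0 and moderate |z|.
    """
    total = 0.0
    for k in range(max_terms):
        term = z ** k / math.gamma(alpha * k + beta)
        total += term
        if k > 0 and abs(term) <= tol * max(1.0, abs(total)):
            break                                   # remaining terms are negligible
    return total


if __name__ == "__main__":
    print(mittag_leffler(1.0, 1.0), math.e)        # E_{1,1}(1) = e
    print(mittag_leffler(-0.49, 2.0), math.cos(0.7))  # E_{2,1}(-0.7^2) = cos(0.7)
```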

We discuss Example 2 for different values of 1 and

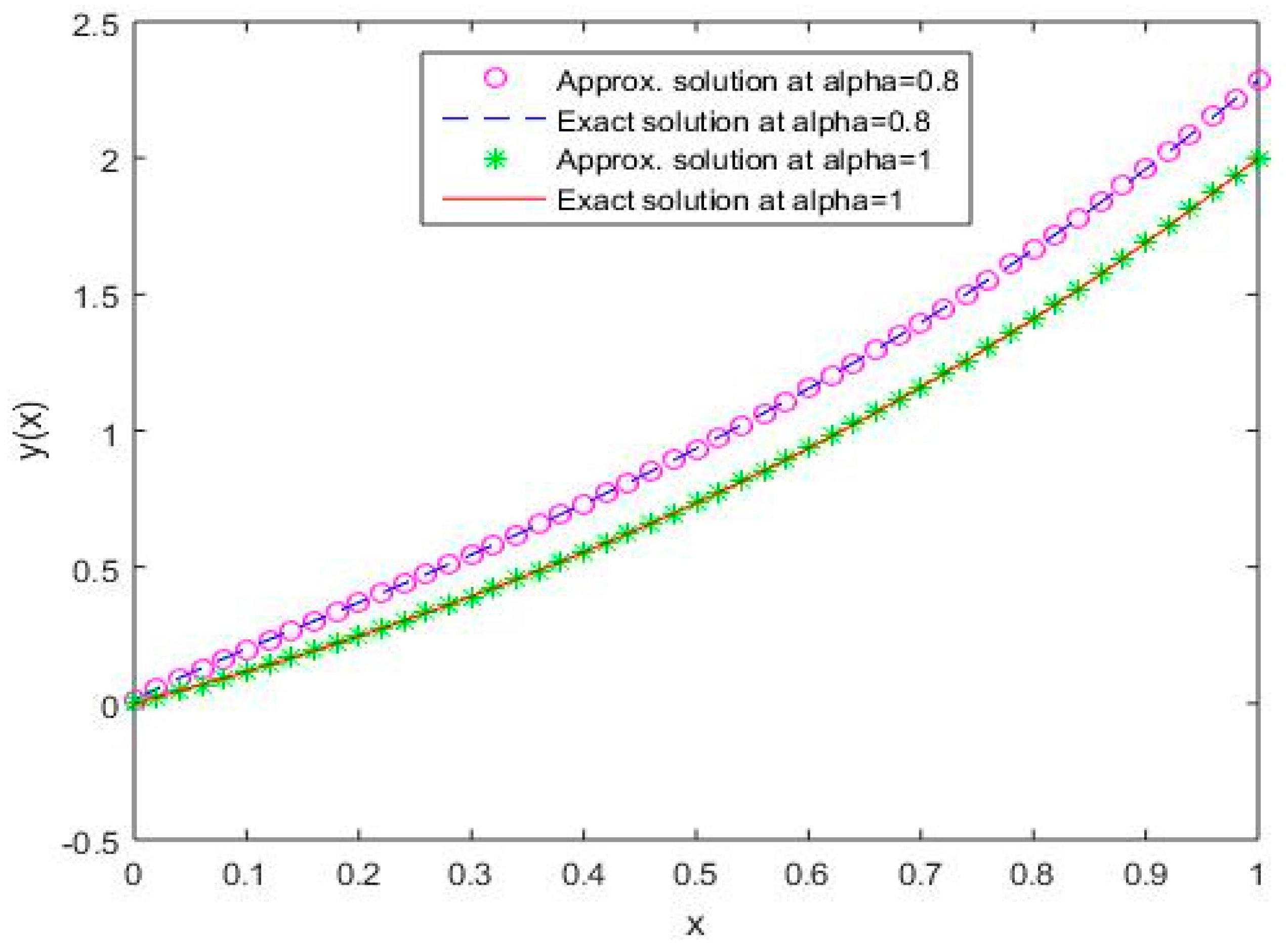

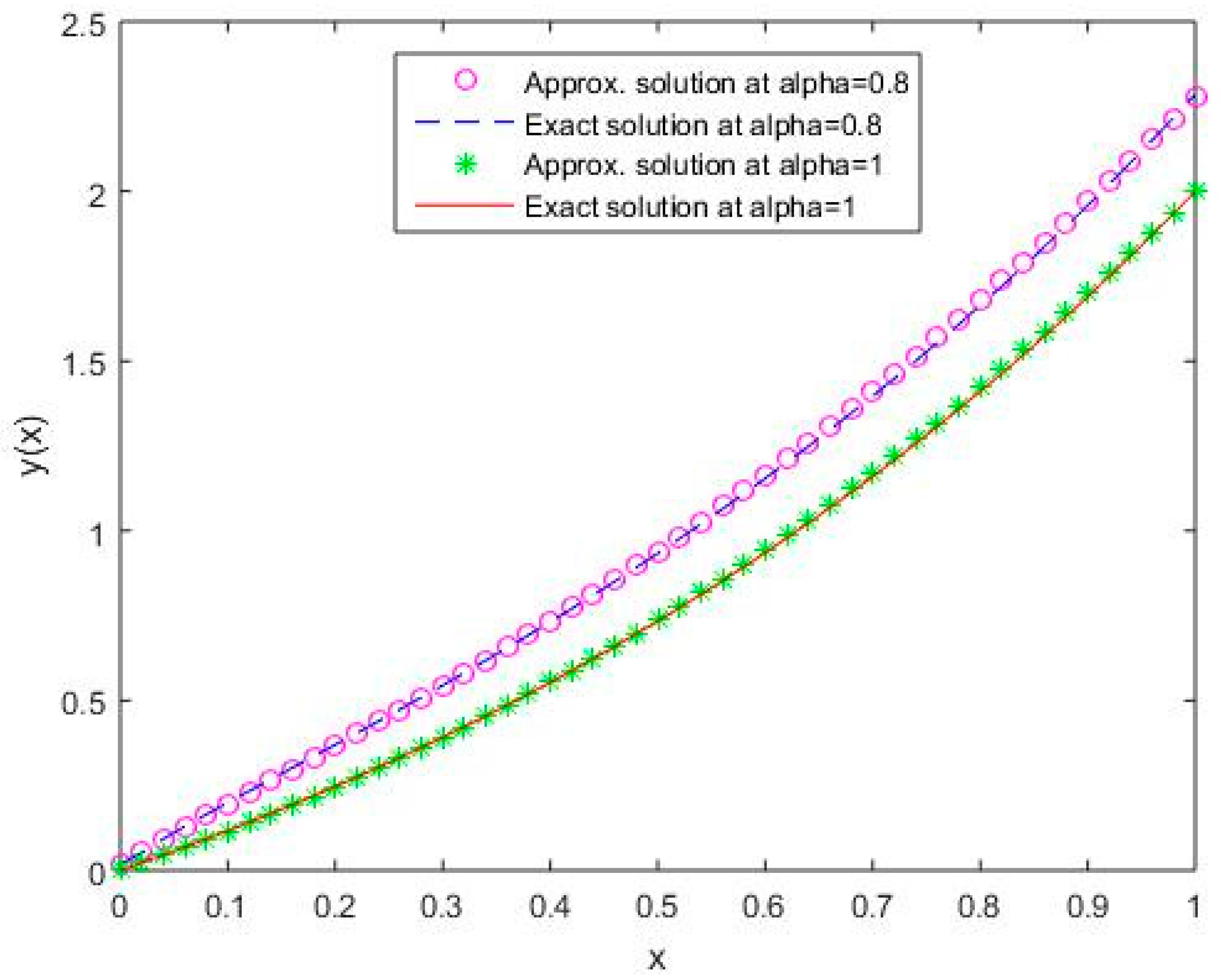

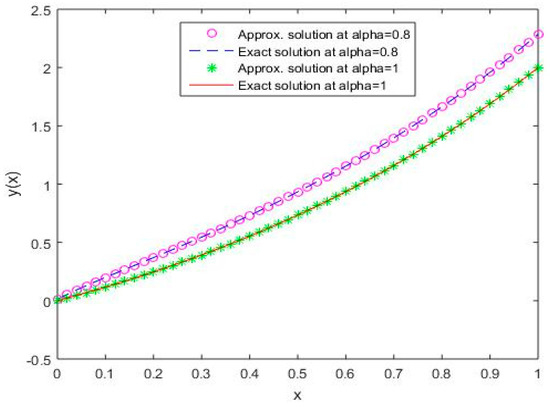

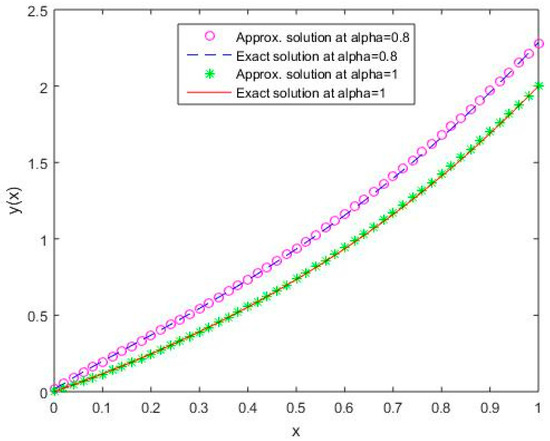

In Figure 11, Figure 12, Figure 13, Figure 14 and Figure 15, it is shown that the solutions for the two different values of coincide with the exact solutions for different orthogonal polynomials at n = 5.

Figure 11.

Comparison of exact and numerical solutions using S1 for , Example 2.

Figure 12.

Comparison of exact and numerical solutions using S2 for , Example 2.

Figure 13.

Comparison of exact and numerical solutions using S3 for , Example 2.

Figure 14.

Comparison of exact and numerical solutions using S4 for , Example 2.

Figure 15.

Comparison of exact and numerical solutions using S5 for , Example 2.

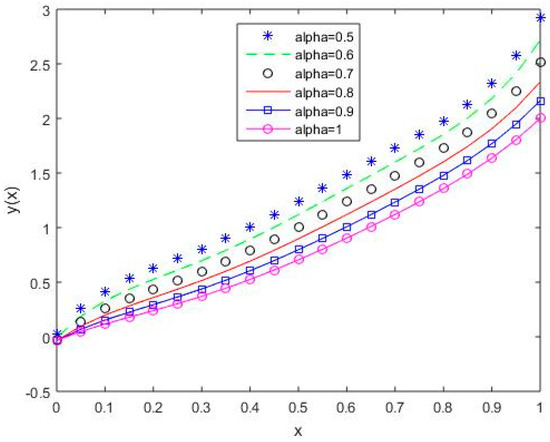

Figure 16, Figure 17, Figure 18, Figure 19 and Figure 20 show that the approximate solution varies continuously with the fractional order for Shifted Legendre polynomials, Shifted Chebyshev polynomials of the first kind, Shifted Chebyshev polynomials of the third kind, Shifted Chebyshev polynomials of the fourth kind, and Gegenbauer polynomials, respectively.

Figure 16.

The behavior of solutions using S1 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 2.

Figure 17.

The behavior of solutions using S2 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 2.

Figure 18.

The behavior of solutions using S3 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 2.

Figure 19.

The behavior of solutions using S4 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 2.

Figure 20.

The behavior of solutions using S5 for values of 0.5, 0.6, 0.7, 0.8, 0.9, and 1, Example 2.

In Table 2, we list the maximum absolute errors (MAE) and root-mean-square errors (RMSE) for Example 2 for n = 2 and n = 6.

Table 2.

Result comparison of Example 2 for different orthogonal polynomials at different values of n.

Table 2 compares the results for the different polynomials: the results for Shifted Legendre polynomials are better than those for the other polynomials. The MAE and RMSE also decrease as n increases.

8. Conclusions

We extended the Ritz method [18,20,21,22,32,33] to solve a class of NLFVPs using different orthogonal polynomials, namely shifted Legendre polynomials, shifted Chebyshev polynomials of the first, third, and fourth kinds, and Gegenbauer polynomials. These polynomials were used to approximate the unknown function in the NLFVP. The advantage of the method is that it converts the given NLFVP into a system of non-linear algebraic equations, which is then solved numerically. An error bound for the approximation method was established, and it was shown that the approximate numerical solution converges to the exact solution as the number of basis functions increases. Finally, the method was applied to two test examples, and the numerical results showed good agreement with the exact solutions. The results obtained with the different orthogonal polynomials were compared, and this comparative study showed that the shifted Legendre polynomials gave the most accurate approximations overall.

Author Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors are very grateful to the referees for their constructive comments and suggestions for the improvement of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Theorem A1.

Let be a function such that and let be the approximation of the function from ; then [45]

where.

Proof .

Since , the Taylor polynomial of at , is given as

The upper bound of the error of the Taylor polynomial is given as

where .

Since and , we have

which shows that . □

References

1. Dym, C.L.; Shames, I.H. Solid Mechanics: A Variational Approach; McGraw-Hill: New York, NY, USA, 1973.

2. Frederico, G.S.F.; Torres, D.F.M. Fractional conservation laws in optimal control theory. Nonlinear Dyn. 2008, 53, 215–222.

3. Pirvan, M.; Udriste, C. Optimal control of electromagnetic energy. Balk. J. Geom. Appl. 2010, 15, 131–141.

4. Agrawal, O.P. A general finite element formulation for fractional variational problems. J. Math. Anal. Appl. 2008, 337, 1–12.

5. Dehghan, M.; Tatari, M. The use of Adomian decomposition method for solving problems in calculus of variations. Math. Probl. Eng. 2006, 2006, 1–12.

6. Singh, H. A new stable algorithm for fractional Navier-Stokes equation in polar coordinate. Int. J. Appl. Comp. Math. 2017, 3, 3705–3722.

7. Robinson, A.D. The use of control systems analysis in neurophysiology of eye movements. Ann. Rev. Neurosci. 1981, 4, 462–503.

8. Singh, H. Operational matrix approach for approximate solution of fractional model of Bloch equation. J. King Saud Univ.-Sci. 2017, 29, 235–240.

9. Bagley, R.L.; Torvik, P.J. Fractional calculus: A different approach to the analysis of viscoelastically damped structures. AIAA J. 1983, 21, 741–748.

10. Bagley, R.L.; Torvik, P.J. Fractional calculus in the transient analysis of viscoelastically damped structures. AIAA J. 1985, 23, 918–925.

11. Panda, R.; Dash, M. Fractional generalized splines and signal processing. Signal Process. 2006, 86, 2340–2350.

12. Magin, R.L. Fractional calculus in bioengineering. Crit. Rev. Biomed. Eng. 2004, 32, 1–104.

13. Bohannan, G.W. Analog fractional order controller in temperature and motor control applications. J. Vib. Control 2008, 14, 1487–1498.

14. Novikov, V.V.; Wojciechowski, K.W.; Komkova, O.A.; Thiel, T. Anomalous relaxation in dielectrics. Equations with fractional derivatives. Mater. Sci. 2005, 23, 977–984.

15. Agrawal, O.P. A new Lagrangian and a new Lagrange equation of motion for fractionally damped systems. J. Appl. Mech. 2001, 68, 339–341.

16. Mozyrska, D.; Torres, D.F.M. Minimal modified energy control for fractional linear control systems with the Caputo derivative. Carpath. J. Math. 2010, 26, 210–221.

17. Almeida, R.; Malinowska, A.B.; Torres, D.F.M. A fractional calculus of variations for multiple integrals with application to vibrating string. J. Math. Phys. 2010, 51, 033503.

18. Lotfi, A.; Dehghan, M.; Yousefi, S.A. A numerical technique for solving fractional optimal control problems. Comput. Math. Appl. 2011, 62, 1055–1067.

19. Khosravian-Arab, H.; Almeida, R. Numerical solution for fractional variational problems using the Jacobi polynomials. Appl. Math. Modell. 2015.

20. Agrawal, O.P. Formulation of Euler-Lagrange equations for fractional variational problems. J. Math. Anal. Appl. 2002, 272, 368–379.

21. Doha, E.H.; Bhrawy, A.H.; Baleanu, D.; Ezz-Eldien, S.S.; Hafez, R.M. An efficient numerical scheme based on the shifted orthonormal Jacobi polynomials for solving fractional optimal control problems. Adv. Differ. Equ. 2015.

22. Ezz-Eldien, S.S.; Doha, E.H.; Baleanu, D.; Bhrawy, A.H. A numerical approach based on Legendre orthonormal polynomials for numerical solutions of fractional optimal control problems. J. Vib. Control 2015.

23. Osama, H.M.; Fadhel, S.F.; Zaid, A.M. Numerical solution of fractional variational problems using direct Haar wavelet method. Int. J. Innov. Res. Sci. Eng. Technol. 2014, 3, 12742–12750.

24. Ezz-Eldien, S.S. New quadrature approach based on operational matrix for solving a class of fractional variational problems. J. Comp. Phys. 2016, 317, 362–381.

25. Bastos, N.; Ferreira, R.; Torres, D.F.M. Discrete-time fractional variational problems. Signal Process. 2011, 91, 513–524.

26. Wang, D.; Xiao, A. Fractional variational integrators for fractional variational problems. Commun. Nonlinear Sci. Numer. Simul. 2012, 17, 602–610.

27. Odzijewicz, T.; Malinowska, A.B.; Torres, D.F.M. Fractional variational calculus with classical and combined Caputo derivatives. Nonlinear Anal. 2012, 75, 1507–1515.

28. Bhrawy, A.H.; Ezz-Eldien, S.S. A new Legendre operational technique for delay fractional optimal control problems. Calcolo 2015.

29. Tavares, D.; Almeida, R.; Torres, D.F.M. Optimality conditions for fractional variational problems with dependence on a combined Caputo derivative of variable order. Optimization 2015, 64, 1381–1391.

30. Ezz-Eldien, S.S.; Hafez, R.M.; Bhrawy, A.H.; Baleanu, D.; El-Kalaawy, A.A. New numerical approach for fractional variational problems using shifted Legendre orthonormal polynomials. J. Optim. Theory Appl. 2017, 174, 295–320.

31. Almeida, R. Variational problems involving a Caputo-type fractional derivative. J. Optim. Theory Appl. 2017, 174, 276–294.

32. Pandey, R.K.; Agrawal, O.P. Numerical Scheme for Generalized Isoperimetric Constraint Variational Problems with A-Operator. In Proceedings of the ASME International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Portland, OR, USA, 4–7 August 2013.

33. Pandey, R.K.; Agrawal, O.P. Numerical scheme for a quadratic type generalized isoperimetric constraint variational problems with A-operator. J. Comput. Nonlinear Dyn. 2015, 10, 021003.

34. Pandey, R.K.; Agrawal, O.P. Comparison of four numerical schemes for isoperimetric constraint fractional variational problems with A-operator. In Proceedings of the ASME 2015 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Boston, MA, USA, 2–5 August 2015.

35. Singh, H.; Srivastava, H.M.; Kumar, D. A reliable numerical algorithm for the fractional vibration equation. Chaos Solitons Fractals 2017, 103, 131–138.

36. Singh, O.P.; Singh, V.K.; Pandey, R.K. A stable numerical inversion of Abel’s integral equation using almost Bernstein operational matrix. J. Quant. Spec. Rad. Trans. 2012, 111, 567–579.

37. Zhou, F.; Xu, X. Numerical solution of convection diffusion equations by the second kind Chebyshev wavelets. Appl. Math. Comput. 2014, 247, 353–367.

38. Yousefi, S.A.; Behroozifar, M.; Dehghan, M. The operational matrices of Bernstein polynomials for solving the parabolic equation subject to the specification of the mass. J. Comput. Appl. Math. 2011, 235, 5272–5283.

39. Singh, H. A New Numerical Algorithm for Fractional Model of Bloch Equation in Nuclear Magnetic Resonance. Alex. Eng. J. 2016, 55, 2863–2869.

40. Khalil, H.; Khan, R.A. A new method based on Legendre polynomials for solutions of the fractional two dimensional heat conduction equations. Comput. Math. Appl. 2014, 67, 1938–1953.

41. Singh, C.S.; Singh, H.; Singh, V.K.; Singh, O.P. Fractional order operational matrix methods for fractional singular integro-differential equation. Appl. Math. Modell. 2016, 40, 10705–10718.

42. Rivlin, T.J. An Introduction to the Approximation of Functions; Dover Publications: New York, NY, USA, 1981.

43. Kreyszig, E. Introductory Functional Analysis with Applications; John Wiley and Sons, Inc.: Hoboken, NJ, USA, 1978.

44. Bhrawy, A.H.; Tharwat, M.M.; Alghamdi, M.A. A new operational matrix of fractional integration for shifted Jacobi polynomials. Bull. Malays. Math. Sci. Soc. 2014, 37, 983–995.

45. Behroozifar, M.; Sazmand, A. An approximate solution based on Jacobi polynomials for time-fractional convection–diffusion equation. Appl. Math. Comput. 2017, 296, 1–17.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).