Sparse Recovery Algorithm for Compressed Sensing Using Smoothed l0 Norm and Randomized Coordinate Descent

Abstract

:1. Introduction

2. Sparse Recovery Algorithm

2.1. Cost Function

2.2. Solution

2.3. Algorithm Description

2.4. Computational Cost Analysis

3. Comparative Experiment

3.1. Signal Recovery Experiment

3.1.1. Comparison of RCD and the Modified Newton Method

3.1.2. Comparison of L0RCD and the Other Sparse Recovery Algorithms

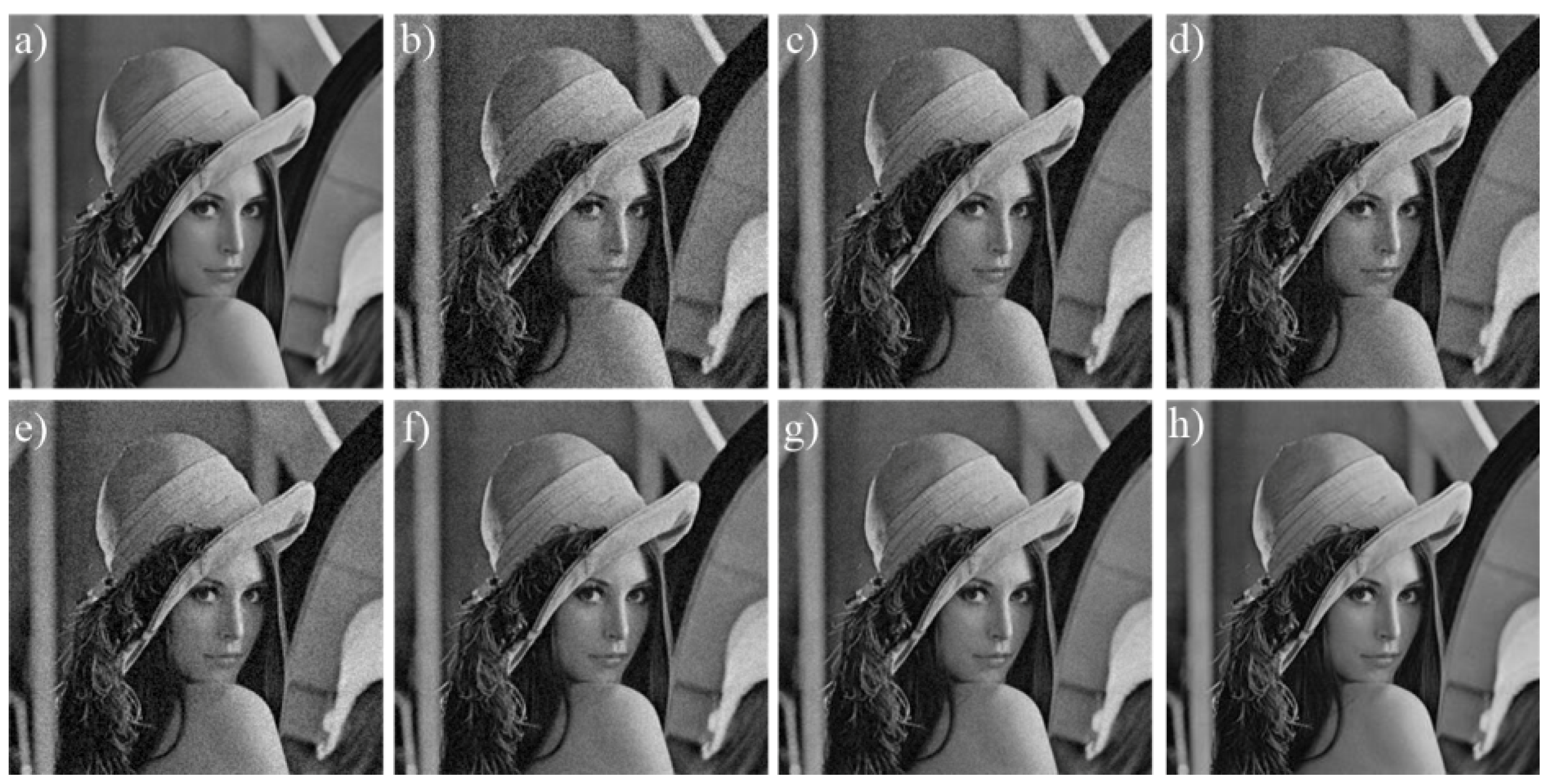

3.2. Image Denoising Experiment

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306. [Google Scholar] [CrossRef]

- Candes, E.J.; Wakin, M.B. An Introduction to Compressive Sampling. IEEE Signal Process. Mag. 2008, 25, 21–30. [Google Scholar] [CrossRef]

- Yuan, H.; Wang, X.; Sun, X.; Ju, Z. Compressive sensing-based feature extraction for bearing fault diagnosis using a heuristic neural network. Meas. Sci. Technol. 2017, 28, 065018. [Google Scholar] [CrossRef]

- Quan, T.M.; Nguyen-Duc, T.; Jeong, W.K. Compressed Sensing MRI Recovery Using a Generative Adversarial Network with a Cyclic Loss. IEEE Trans. Med. Imaging 2018, 37, 1488–1497. [Google Scholar] [CrossRef] [PubMed]

- Yi, S.; Cui, C.; Lu, J.; Wang, Q. Data compression and recovery of smart grid customers based on compressed sensing theory. Int. J. Electr. Power Energy Syst. 2016, 83, 21–25. [Google Scholar]

- Jin, D.; Yang, Y.; Luo, Y.; Liu, S. Lens distortion correction method based on cross-ratio invariability and compressed sensing. Opt. Eng. 2018, 57, 054103. [Google Scholar] [CrossRef]

- Ding, Y.; Selesnick, I.W. Artifact-Free Wavelet Denoising: Non-convex Sparse Regularization, Convex Optimization. IEEE Signal Process. Lett. 2015, 22, 1364–1368. [Google Scholar] [CrossRef]

- Lv, X.; Bi, G.; Wan, C. The Group Lasso for Stable Recovery of Block-Sparse Signal Representations. IEEE Trans. Signal Process. 2011, 59, 1371–1382. [Google Scholar] [CrossRef]

- Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic Decomposition by Basis Pursuit. Siam Rev. 2001, 43, 129–159. [Google Scholar] [CrossRef] [Green Version]

- Elad, M. Sparse and Redundant Representations: From Theory to Applications in Signal and Image Processing; Springer: New York, NY, USA, 2010. [Google Scholar]

- Mcclure, M.R.; Carin, L. Matching pursuits with a wave-based dictionary. IEEE Trans. Signal Process. 1997, 45, 2912–2927. [Google Scholar] [CrossRef]

- Tropp, J.A.; Gilbert, A.C. Signal Recovery from Random Measurements Via Orthogonal Matching Pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666. [Google Scholar] [CrossRef]

- Donoho, D.L.; Tsaig, Y.; Drori, I.; Starck, J.L. Sparse Solution of Underdetermined Systems of Linear Equations by Stagewise Orthogonal Matching Pursuit. IEEE Trans. Inf. Theory 2012, 58, 1094–1121. [Google Scholar] [CrossRef]

- Karahanoglu, N.B.; Erdogan, H. A Orthogonal Matching Pursuit: Best-First Search for Compressed Sensing Signal Recovery. Digit. Signal Process. 2012, 22, 555–568. [Google Scholar] [CrossRef]

- Wei, D.; Milenkovic, O. Subspace Pursuit for Compressive Sensing Signal Recovery. IEEE Trans. Inf. Theory 2009, 55, 2230–2249. [Google Scholar]

- Vlachos, E.; Lalos, A.S.; Berberidis, K. Stochastic Gradient Pursuit for Adaptive Equalization of Sparse Multipath Channels. IEEE J. Emerg. Sel. Top. Circuits Syst. 2012, 2, 413–423. [Google Scholar] [CrossRef]

- Wang, J.H.; Huang, Z.T.; Zhou, Y.Y.; Wang, F.H. Robust sparse recovery based on approximate l0 norm. Acta Electron. Sin. 2012, 40, 1185–1189. [Google Scholar]

- Zhao, R.; Lin, W.; Li, H.; Hu, S. Recovery algorithm for compressive sensing based on smoothed l0 norm and revised Newton method. J. Comput.-Aided Des. Graph. 2014, 24, 478–484. [Google Scholar]

- Jin, D.; Yang, Y.; Ge, T.; Wu, D. A Fast Sparse Recovery Algorithm for Compressed Sensing Using Approximate l0 Norm and Modified Newton Method. Materials 2019, 12, 1227. [Google Scholar] [CrossRef]

- Wei, Z.; Zhang, J.; Xu, Z.; Huang, Y.; Liu, Y.; Fan, X. Gradient Projection with Approximate L 0 Norm Minimization for Sparse Recovery in Compressed Sensing. Sensors 2018, 18, 3373. [Google Scholar] [CrossRef]

- Bertsimas, D.; Tsitsiklis, J. Simulated Annealing. Stat. Sci. 1993, 8, 10–15. [Google Scholar] [CrossRef]

- Bender, M.A.; Muthukrishnan, S.; Rajaraman, R. Genetic algorithms: A survey. Computer 2002, 27, 17–26. [Google Scholar]

- Patrascu, A.; Necoara, I. Efficient random coordinate descent algorithms for large-scale structured nonconvex optimization. J. Glob. Optim. 2015, 61, 19–46. [Google Scholar] [CrossRef]

- Gardner, W.A. Learning characteristics of stochastic-gradient-descent algorithms: A general study, analysis, and critique. Signal Process. 1984, 6, 113–133. [Google Scholar] [CrossRef]

- Nocedal, J.; Wright, S.J. Numerical Optimization; Springer: Berlin, Germany, 2006. [Google Scholar]

- Lukas, M.A. Asymptotic optimality of generalized cross-validation for choosing the regularization parameter. Numer. Math. 1993, 66, 41–66. [Google Scholar] [CrossRef]

- Metzler, C.A.; Maleki, A.; Baraniuk, R.G. From Denoising to Compressed Sensing. IEEE Trans. Inf. Theory 2016, 62, 5117–5144. [Google Scholar] [CrossRef]

| Algorithm: L0RCD |

|---|

| Input:A, y, λ, h, K, and the termination condition: ; |

| Output: The recovery signal s*; |

| Initialization:s0 = AT(AAT)−1y, = 1; |

| Step 1: Calculate gradient according to Equation 17; |

| Step 2: Iterate, ; |

| Step 3: If , update s and , where , or else go to Step 1 and continue iterating. |

| Step 4: Take the updated s and as initial value, and repeat step 1 to step 3, stop iterating until and output to s*. |

| Algorithm | Lena | Cameraman | Boat |

|---|---|---|---|

| OMP | 26.27/43.02 | 25.98/44.57 | 25.11/47.54 |

| StOMP | 27.01/39.07 | 27.33/42.16 | 25.76/45.55 |

| BP | 28.34/76.37 | 28.97/78.39 | 29.05/84.29 |

| L0GP | 32.13/25.14 | 33.14/27.33 | 31.06/28.77 |

| L0MN | 33.08/18.04 | 34.59/20.25 | 33.27/23.05 |

| L0RCD | 34.95/17.12 | 35.65/20.01 | 34.05/21.86 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jin, D.; Yang, G.; Li, Z.; Liu, H. Sparse Recovery Algorithm for Compressed Sensing Using Smoothed l0 Norm and Randomized Coordinate Descent. Mathematics 2019, 7, 834. https://doi.org/10.3390/math7090834

Jin D, Yang G, Li Z, Liu H. Sparse Recovery Algorithm for Compressed Sensing Using Smoothed l0 Norm and Randomized Coordinate Descent. Mathematics. 2019; 7(9):834. https://doi.org/10.3390/math7090834

Chicago/Turabian StyleJin, Dingfei, Guang Yang, Zhenghui Li, and Haode Liu. 2019. "Sparse Recovery Algorithm for Compressed Sensing Using Smoothed l0 Norm and Randomized Coordinate Descent" Mathematics 7, no. 9: 834. https://doi.org/10.3390/math7090834