Spurious OLS Estimators of Detrending Method by Adding a Linear Trend in Difference-Stationary Processes—A Mathematical Proof and Its Verification by Simulation

Abstract

:1. Introducing the Problematic

2. Literature Review

3. A Mathematical Proof

4. Verification by Simulation

- Step 1: We generate a white noise, , with a sample size of T = 1,000,000. Here, we set the white noise as Gaussian. The seed values (see Table A1) employed for the simulations at this step are provided by the Rand Corporation (2001) [12] with a hardware random number generator to make sure that the simulations effectively use true random numbers, because the random number generated by software is in fact a “pseudorandom.”

- Step 2: We generate a random walk, , in our original equation by setting :also having a million observations.

- Step 3: We then regress the DS, , to a linear trend with an intercept.

- Step 4: We repeat this experiment 100 times successively, and each time we use a different true random number as a seed value.

- (1)

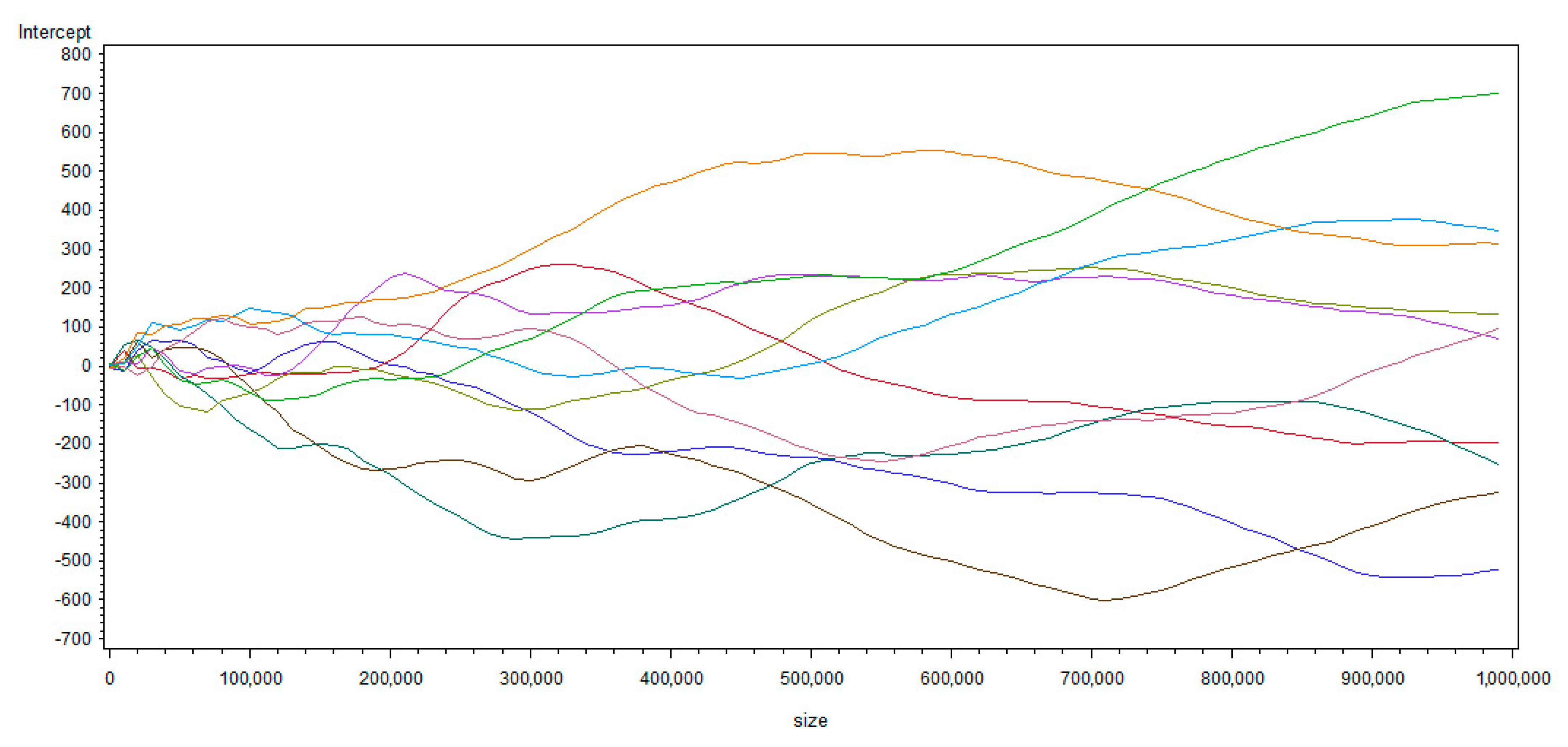

- From Figure 1 and Figure 2, we can observe that is divergent, with its variance increasing when the sample size grows, while converges to zero. The simulation results therefore confirm the mathematical proof previously provided. In addition, from Figure 2, we see that the sample size should be greater than at least 1000 to get a conclusion of convergence becoming clear. That is, the size of the samples simulated by Granger and Newbold (1974) [3] or Nelson and Kang (1981) [11] seem to not be big enough to support their conclusions; even if the latter are right, and can be confirmed and re-obtained by our own simulations mobilizing 1,000,000 observations as an approximation of infinity (the sample size was 50 for Granger and Newbold (1974) [3] and 101 (in order to calculate a sample autocorrelation function of 100 lags) for Nelson and Kang (1981). This is probably because computers’ calculation capacities were much less powerful in the 1970s than today. Thanks to the progress in computing science, we can reinforce the statistical credibility of their findings).

- (2)

- From Figure 3, we observe that, as expected, when , converges to zero (the magnitude level of is 10−5 considering that the decimal precision of the 32-bit computer used is 10−7, which is almost not-different from zero) and is divergent even if the seed values are modified. For 100 different simulations, the conclusions still hold, which indicates that there is no problem of pseudo-randomness in our simulations (even if their conclusions are correct, the simulations by Granger and Newbold (1974) [3] as well as by Nelson and Kang (1981) [11] did not pay attention to the pseudo-randomness, nor specify how the random numbers are obtained). By performing them, as we set all equal to zero, if is convergent, then it must converge to , in other words, to zero. However, seriously deviates from its mathematical expectation zero for different simulations. Thus, the regressions are spurious because the OLS estimator of the trend converges to zero and the other OLS estimator diverges when the sample size tends to infinity.

- (3)

- (4)

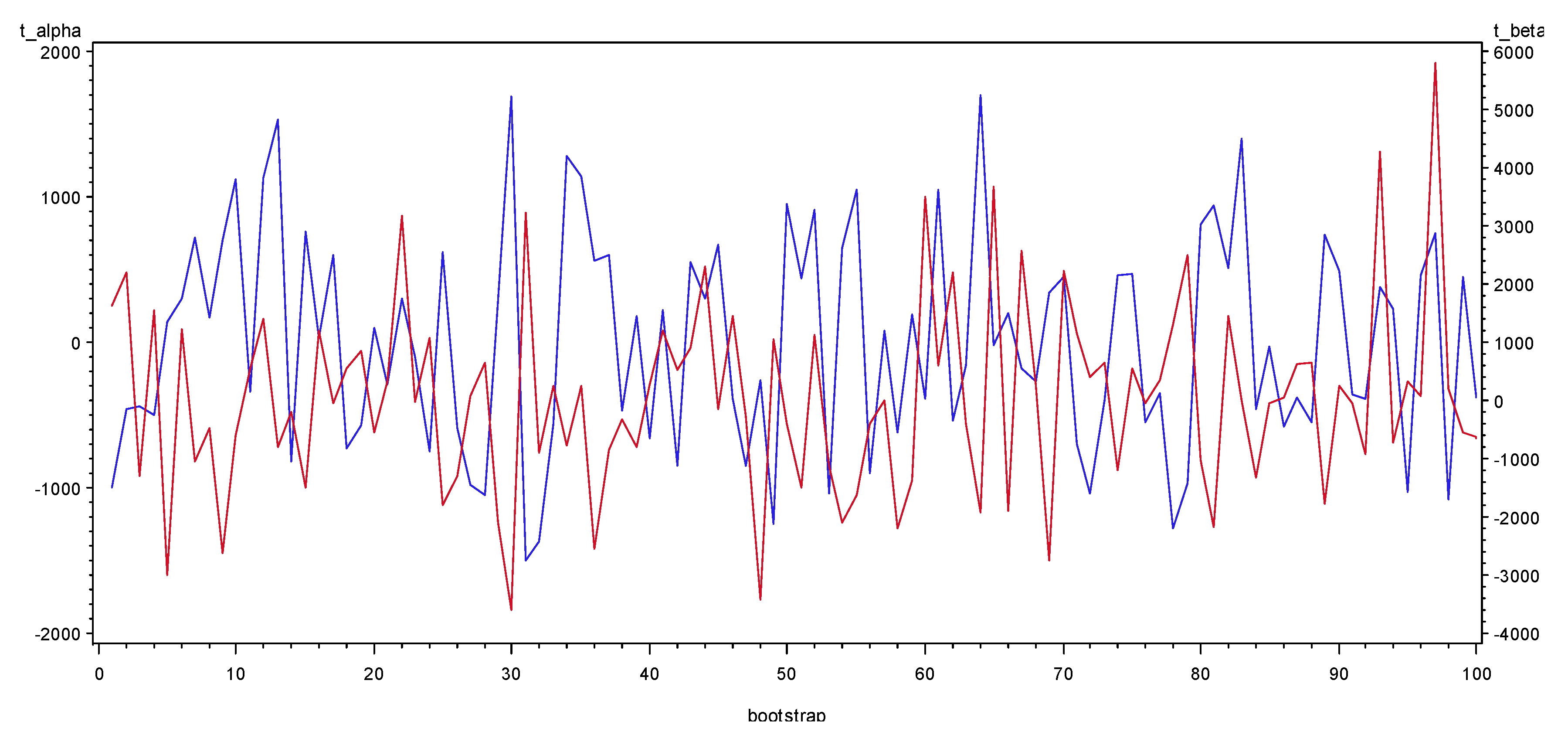

- From Table 1 and Figure 4, we see that the t-statistics of the OLS estimators are very high, and that all the p-values of and are zero. Thus, the OLS estimators are definitely significant when the sample size tends to infinity. This is also a well-known result associated to spurious regressions, since the residuals are not white noises (as indicated above, and studied by Nelson and Kang (1981) [11], we did not test the correlation of the residuals here). In these conditions, we understand that the usual and fundamental Fisher or Student tests of the OLS estimators are no longer valid, precisely because they are based on the assumption of residuals as white noises. If we use such a detrending method in DS processes, we will indeed get wrong conclusions of significance of the explicative variables.

5. Concluding Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Simulation Program by SAS, with Explanation Annotations

Appendix B

| 18200 | 77381 | 59443 | 87430 | 77462 | 41440 | 75496 | 49906 | 09823 | 81293 | 89793 |

| 18201 | 79729 | 86526 | 22633 | 99540 | 23354 | 55930 | 37734 | 97861 | 68270 | 33174 |

| 18202 | 82377 | 53502 | 13615 | 21230 | 25741 | 59935 | 60282 | 90430 | 66251 | 75758 |

| 18203 | 31592 | 30957 | 14458 | 77037 | 10777 | 45252 | 69494 | 74509 | 16031 | 80045 |

| 18204 | 33553 | 07210 | 29127 | 18634 | 71052 | 35182 | 89048 | 04978 | 00451 | 46072 |

| 18205 | 59326 | 45916 | 55698 | 08330 | 92541 | 10196 | 37699 | 81162 | 65562 | 24792 |

| 18206 | 61082 | 83586 | 98989 | 78927 | 68800 | 44882 | 96851 | 79167 | 92786 | 82529 |

| 18207 | 14373 | 76009 | 65876 | 29319 | 63212 | 22002 | 57795 | 28772 | 74823 | 95093 |

| 18208 | 90754 | 76767 | 81309 | 32874 | 61792 | 63659 | 10851 | 29106 | 84988 | 63128 |

| 18209 | 33936 | 11659 | 56754 | 48332 | 08687 | 41299 | 31220 | 37709 | 28335 | 91985 |

Appendix C

| Bootstrap | Alpha | T_Alpha | Beta | T_Beta | _RSQ_ |

|---|---|---|---|---|---|

| 1 | −516.428 | −994.213 | 0.001478 | 1642.975 | 0.729684 |

| 2 | −197.89 | −454.435 | 0.001673 | 2218.098 | 0.83108 |

| 3 | −270.459 | −441.39 | −0.00137 | −1293.6 | 0.625945 |

| 4 | −319.191 | −499.053 | 0.00172 | 1552.42 | 0.706746 |

| 5 | 59.65598 | 147.5 | −0.0021 | −3001.91 | 0.900115 |

| 6 | 128.2268 | 303.2709 | 0.000895 | 1222.664 | 0.599184 |

| 7 | 340.0485 | 723.4509 | −0.00085 | −1049.39 | 0.524084 |

| 8 | 106.5433 | 173.9056 | −0.0005 | −473.332 | 0.183036 |

| 9 | 312.9737 | 707.3468 | −0.00201 | −2623.79 | 0.873166 |

| 10 | 706.1975 | 1119.959 | −0.00064 | −587.675 | 0.256706 |

| 11 | −127.036 | −339.147 | 0.000358 | 552.4023 | 0.233804 |

| 12 | 543.8749 | 1136.501 | 0.001163 | 1402.911 | 0.663091 |

| 13 | 588.8941 | 1529.704 | −0.00052 | −783.353 | 0.380284 |

| 14 | −648.32 | −813.506 | −0.00027 | −192.58 | 0.035761 |

| 15 | 656.4477 | 765.2626 | −0.00222 | −1496.84 | 0.69141 |

| 16 | 11.84133 | 27.68874 | 0.000893 | 1205.204 | 0.592256 |

| 17 | 488.6455 | 598.7078 | −4.9 × 10−5 | −34.3688 | 0.00118 |

| 18 | −465.545 | −725.822 | 0.000631 | 568.3724 | 0.244169 |

| 19 | −248.422 | −564.73 | 0.000643 | 844.1401 | 0.416084 |

| 20 | 48.52915 | 105.5969 | −0.00044 | −549.449 | 0.231889 |

| 21 | −101.445 | −287.474 | 0.000207 | 338.1793 | 0.102628 |

| 22 | 127.0612 | 299.2239 | 0.002335 | 3175.413 | 0.909774 |

| 23 | −33.161 | −99.9226 | −1.3 × 10−5 | −22.9001 | 0.000524 |

| 24 | −453.84 | −745.754 | 0.001144 | 1085.507 | 0.540932 |

| 25 | 235.6821 | 619.602 | −0.00118 | −1797.21 | 0.763592 |

| 26 | −218.546 | −584.063 | −0.00084 | −1290.12 | 0.624682 |

| 27 | −440.194 | −973.892 | 6.51 × 10−5 | 83.11338 | 0.00686 |

| 28 | −449.678 | −1043.2 | 0.000493 | 660.5926 | 0.303807 |

| 29 | 112.1348 | 289.5755 | −0.00141 | −2105.15 | 0.815894 |

| 30 | 581.1902 | 1689.905 | −0.00215 | −3604.9 | 0.928548 |

| 31 | −587.032 | −1501.88 | 0.002193 | 3239.642 | 0.913008 |

| 32 | −369.762 | −1367.25 | −0.00042 | −889.29 | 0.441602 |

| 33 | −389.217 | −555.687 | 0.000312 | 256.8726 | 0.061899 |

| 34 | 867.6485 | 1281.799 | −0.00091 | −772.52 | 0.373743 |

| 35 | 436.7767 | 1145.472 | 0.000166 | 251.6912 | 0.059575 |

| 36 | 270.2492 | 565.8382 | −0.0021 | −2537.89 | 0.865607 |

| 37 | 216.3166 | 600.8799 | −0.00052 | −837.362 | 0.412172 |

| 38 | −231.201 | −467.153 | −0.00027 | −319.307 | 0.092524 |

| 39 | 91.05972 | 183.4367 | −0.00069 | −798.691 | 0.389465 |

| 40 | −311.184 | −661.038 | 0.000234 | 287.2567 | 0.076227 |

| 41 | 88.70737 | 226.1573 | 0.000826 | 1216.426 | 0.596725 |

| 42 | −418.93 | −845.305 | 0.00045 | 523.9499 | 0.215393 |

| 43 | 139.4184 | 556.7029 | 0.000388 | 895.4936 | 0.445033 |

| 44 | 131.9699 | 299.7307 | 0.001769 | 2319.397 | 0.843251 |

| 45 | 235.8081 | 671.6881 | −8.7 × 10−5 | −143.432 | 0.020158 |

| 46 | −183.356 | −385.223 | 0.001201 | 1457.079 | 0.679804 |

| 47 | −450.714 | −849.624 | −0.00025 | −270.624 | 0.06824 |

| 48 | −102.254 | −255.019 | −0.00238 | −3433.09 | 0.92179 |

| 49 | −639.041 | −1252.14 | 0.000926 | 1047.677 | 0.523272 |

| 50 | 362.1756 | 950.0575 | −0.00026 | −398.336 | 0.136943 |

| 51 | 210.3742 | 444.9447 | −0.00122 | −1484.72 | 0.687928 |

| 52 | 421.0867 | 909.9346 | 0.000898 | 1120.044 | 0.556443 |

| 53 | −455.699 | −1037.49 | −0.00086 | −1129.1 | 0.560416 |

| 54 | 421.7283 | 649.61 | −0.00237 | −2104.8 | 0.815844 |

| 55 | 521.1628 | 1052.502 | −0.0014 | −1628.74 | 0.726237 |

| 56 | −470.648 | −899.902 | −0.00035 | −387.837 | 0.130751 |

| 57 | 30.66679 | 83.32206 | 6.79 × 10−6 | 10.65426 | 0.000114 |

| 58 | −299.44 | −621.459 | −0.00184 | −2199.16 | 0.828659 |

| 59 | 83.65093 | 193.0325 | −0.00104 | −1381.63 | 0.656228 |

| 60 | −144.012 | −384.873 | 0.002268 | 3500.093 | 0.924532 |

| 61 | 831.9241 | 1056.377 | 0.000812 | 595.4674 | 0.261765 |

| 62 | −261.051 | −540.941 | 0.001855 | 2219.531 | 0.831261 |

| 63 | −98.1186 | −153.397 | −0.00042 | −382.35 | 0.127545 |

| 64 | 858.1653 | 1707.158 | −0.00167 | −1919.83 | 0.786587 |

| 65 | −4.99706 | −16.7538 | 0.001905 | 3686.877 | 0.931474 |

| 66 | 82.03943 | 203.1585 | −0.00132 | −1889.56 | 0.781203 |

| 67 | −79.6881 | −179.748 | 0.001974 | 2570.223 | 0.868526 |

| 68 | −144.75 | −270.425 | 0.000239 | 257.2805 | 0.062084 |

| 69 | 106.8698 | 344.2261 | −0.00147 | −2741.72 | 0.882588 |

| 70 | 224.7479 | 457.0921 | 0.001897 | 2227.121 | 0.832217 |

| 71 | −531.303 | −700.132 | 0.001526 | 1160.838 | 0.574023 |

| 72 | −431.824 | −1038.13 | 0.000284 | 393.6544 | 0.134172 |

| 73 | −209.465 | −398.03 | 0.000603 | 661.8163 | 0.304591 |

| 74 | 304.19 | 458.4119 | −0.00137 | −1195.68 | 0.588419 |

| 75 | 406.7961 | 471.6883 | 0.000832 | 556.7575 | 0.236629 |

| 76 | −275.16 | −545.632 | −4.3 × 10−5 | −49.7171 | 0.002466 |

| 77 | −149.003 | −351.097 | 0.000263 | 357.8227 | 0.113505 |

| 78 | −743.237 | −1276.95 | 0.001326 | 1315.394 | 0.633735 |

| 79 | −562.356 | −967.819 | 0.002533 | 2516.59 | 0.863635 |

| 80 | 426.6258 | 808.3939 | −0.00093 | −1012.77 | 0.506345 |

| 81 | 620.8599 | 946.9761 | −0.00246 | −2161.95 | 0.823759 |

| 82 | 206.2948 | 509.1332 | 0.001018 | 1449.979 | 0.677673 |

| 83 | 658.6789 | 1399.053 | 2.77 × 10−6 | 3.396448 | 1.15 × 10−5 |

| 84 | −183.309 | −454.236 | −0.00093 | −1326.52 | 0.637638 |

| 85 | −12.3161 | −31.8307 | −2.7 × 10−5 | −40.1805 | 0.001612 |

| 86 | −218.468 | −581.44 | 3.37 × 10−5 | 51.82376 | 0.002679 |

| 87 | −183.216 | −381.86 | 0.000529 | 636.5787 | 0.288374 |

| 88 | −286.844 | −549.021 | 0.000599 | 662.0088 | 0.304714 |

| 89 | 374.423 | 746.4409 | −0.00154 | −1775.53 | 0.759183 |

| 90 | 418.4504 | 492.2846 | 0.00037 | 251.4381 | 0.059462 |

| 91 | −159.337 | −361.947 | −2.9 × 10−5 | −37.6401 | 0.001415 |

| 92 | −229.543 | −385.27 | −0.00095 | −915.922 | 0.456201 |

| 93 | 149.2659 | 379.5512 | 0.002918 | 4283.706 | 0.948321 |

| 94 | 124.2723 | 235.9754 | −0.00066 | −726.347 | 0.34537 |

| 95 | −542.8 | −1029.7 | 0.000313 | 342.646 | 0.105071 |

| 96 | 335.8 | 459.1723 | 9.31 × 10−5 | 73.50074 | 0.005373 |

| 97 | 230.6743 | 753.4047 | 0.003076 | 5799.956 | 0.971131 |

| 98 | −450.053 | −1081.94 | 0.000144 | 199.1844 | 0.038161 |

| 99 | 180.6602 | 451.0257 | −0.00037 | −535.181 | 0.222649 |

| 100 | −206.238 | −370.228 | −0.00059 | −609.264 | 0.270714 |

References

- Wold Herman, O.A. A Study in the Analysis of Stationary Time Series, 2nd ed.; Almqvist and Wiksell: Uppsala, Sweden, 1954. [Google Scholar]

- Box, G.E.P.; Jenkins, G.M. Time Series Analysis: Forecasting and Control; Holden-Day: San Francisco, CA, USA, 1970. [Google Scholar]

- Granger, C.W.J.; Newbold, P. Spurious Regressions in Econometrics. J. Econom. 1974, 2, 111–120. [Google Scholar] [CrossRef] [Green Version]

- Phillips, P.C.B. Understanding Spurious Regressions in Econometrics. J. Econom. 1986, 33, 311–340. [Google Scholar] [CrossRef] [Green Version]

- Davidson, R.; MacKinnon, J.G. Estimation and Inference in Econometrics; Oxford University Press: New York, NY, USA, 1993. [Google Scholar]

- Nelson, C.R.; Plosser, C.R. Trends and Random Walks in Macroeconmic Time Series: Some Evidence and Implications. J. Monet. Econ. 1982, 10, 139–162. [Google Scholar] [CrossRef]

- Engle, R.F.; Granger, C.W.J. Co-integration and Error Correction: Representation, Estimation, and Testing. Econometrica 1987, 55, 251–276. [Google Scholar] [CrossRef]

- Johansen, S. Estimation and Hypothesis Testing of Cointegration Vectors in Gaussian Vector Autoregressive Models. Econometrica 1991, 59, 1551–1580. [Google Scholar] [CrossRef]

- Stock, J.H. Asymptotic Properties of Least Squares Estimators of Cointegrating Vectors. Econometrica 1987, 55, 1035–1056. [Google Scholar] [CrossRef]

- Chan, H.K.; Hayya, J.C.; Ord, J.K. A Note on Trend Removal Methods: The Case of Polynomial Regression Versus Variate Differencing. Econometrica 1977, 45, 737–744. [Google Scholar] [CrossRef]

- Nelson, C.R.; Kang, H. Spurious Periodicity in Inappropriately Detrended Time Series. Econometrica 1981, 49, 741–751. [Google Scholar] [CrossRef] [Green Version]

- Rand Corporation. Million Random Digits with 100,000 Normal Deviates; Rand: Santa Monica, CA, USA, 2001. [Google Scholar]

- Fischer, H. A History of the Central Limit Problem: From Classical to Modern Probability Theory; Sources and Studies in the History of Mathematics and Physical Sciences, Science & Business Media; Springer: Eichstätt, Germany, 2010. [Google Scholar]

- Knuth, D.E. The Art of Computer Programming: Fundamental Algorithms, 3rd ed.; Addison-Wesley: Boston, CA, USA, 1997. [Google Scholar]

- Tchebichef, P.L. Des Valeurs moyennes. J. Mathématiques Pures Appliquées 1867, 12, 177–184, (Originally published in 1867 by Mathematicheskii Sbornik, 2, pp. 1–9). [Google Scholar]

- Box, G.E.P.; Draper, N.R. Empirical Models Building and Response Surfaces; Wiley Series in Probability and Statistics; John Wiley & Sons: New York, NY, USA, 1987. [Google Scholar]

- Rubinstein, R.Y.; Kroese, D.P. Simulation and the Monte Carlo Method (Vol. 10); John Wiley & Sons: Hoboken, NJ, USA, 2016. [Google Scholar]

- Creal, D. A survey of sequential Monte Carlo methods for economics and finance. Econom. Rev. 2012, 31, 245–296. [Google Scholar] [CrossRef] [Green Version]

- Kourtellos, A.; Thanasis, S.; Chih, M.T. Structural threshold regression. Econom. Theory 2016, 32, 827. [Google Scholar] [CrossRef] [Green Version]

- Lux, T. Estimation of agent-based models using sequential Monte Carlo methods. J. Econ. Dyn. Control 2018, 91, 391–408. [Google Scholar] [CrossRef] [Green Version]

- Romer, P. The trouble with macroeconomics. Am. Econ. 2016, 20, 1–20. [Google Scholar]

- Reed, W.R.; Zhu, M. On estimating long-run effects in models with lagged dependent variables. Econ. Model. 2017, 64, 302–311. [Google Scholar] [CrossRef]

- Mankiw, N.G.; Romer, D.; Weil, D.N. A Contribution to the Empirics of Economic Growth. Q. J. Econ. 1992, 107, 407–427. [Google Scholar] [CrossRef]

- Chow, G.C.; Li, K.W. China’s Economic Growth: 1952–2010. Econ. Dev. Cult. Chang. 2002, 51, 247–256. [Google Scholar] [CrossRef]

- Darné, O. The Uncertain Unit Root in Real GNP: A Re-Examination. J. Macroecon. 2009, 31, 153–166. [Google Scholar] [CrossRef]

- Long, Z.; Herrera, R. A Contribution to Explaining Economic Growth in China: New Time Series and Econometric Tests of Various Models; Mimeo, CNRS UMR 8174—Centre d’Économie de la Sorbonne: Paris, France, 2015. [Google Scholar]

- Long, Z.; Herrera, R. Building Original Series of Physical Capital Stocks for China’s Economy: Methodological Problems, Proposals of Solutions and a New Database. China Econ. Rev. 2016, 40, 33–53. [Google Scholar] [CrossRef]

- Cleveland, W.S. The Inverse Autocorrelations of a Time Series and Their Applications. Technometrics 1972, 14, 277–298. [Google Scholar] [CrossRef]

- Chatfield, C. Inverse Autocorrelations. J. R. Stat. Soc. 1979, 142, 363–377. [Google Scholar] [CrossRef]

- Priestley, M.B. Spectral Analysis and Time Series, 1: Univariate Series; Probability and Mathematical Statistics; Academic Press: London, UK, 1981. [Google Scholar]

- Hosking, J.R.M. Fractional differencing. Biometrika 1981, 68, 165–176. [Google Scholar] [CrossRef]

| Mean | 6.11764361 | 6.14650186 | 0.00002174 | 60.0860629 | 0.45983 |

| Variance | 143,633.942 | 554,191.079 | 1.57763 × 10−6 | 2,744,822.52 | 0.10287 |

| Standard Deviation | 378.990689 | 744.440111 | 0.00125604 | 1656.75059 | 0.32073 |

| Max | 867.64848 | 1707.15789 | 0.00307578 | 5799.95595 | 0.97113 |

| Min | −743.23667 | −1501.88113 | −0.00245505 | −3604.89913 | 1.15357 × 10−5 |

| P-value of null test | 0 | - | 0 | - | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

LONG, Z.; HERRERA, R. Spurious OLS Estimators of Detrending Method by Adding a Linear Trend in Difference-Stationary Processes—A Mathematical Proof and Its Verification by Simulation. Mathematics 2020, 8, 1931. https://doi.org/10.3390/math8111931

LONG Z, HERRERA R. Spurious OLS Estimators of Detrending Method by Adding a Linear Trend in Difference-Stationary Processes—A Mathematical Proof and Its Verification by Simulation. Mathematics. 2020; 8(11):1931. https://doi.org/10.3390/math8111931

Chicago/Turabian StyleLONG, Zhiming, and Rémy HERRERA. 2020. "Spurious OLS Estimators of Detrending Method by Adding a Linear Trend in Difference-Stationary Processes—A Mathematical Proof and Its Verification by Simulation" Mathematics 8, no. 11: 1931. https://doi.org/10.3390/math8111931