An Extended Theory of Planned Behavior for the Modelling of Chinese Secondary School Students’ Intention to Learn Artificial Intelligence

Abstract

1. Introduction

2. Literature Review

2.1. Background Factors

2.2. Attitude Towards Behavior

2.3. Perceived Behavioral Control

3. Method

3.1. Contexts and Participants

3.2. Instruments

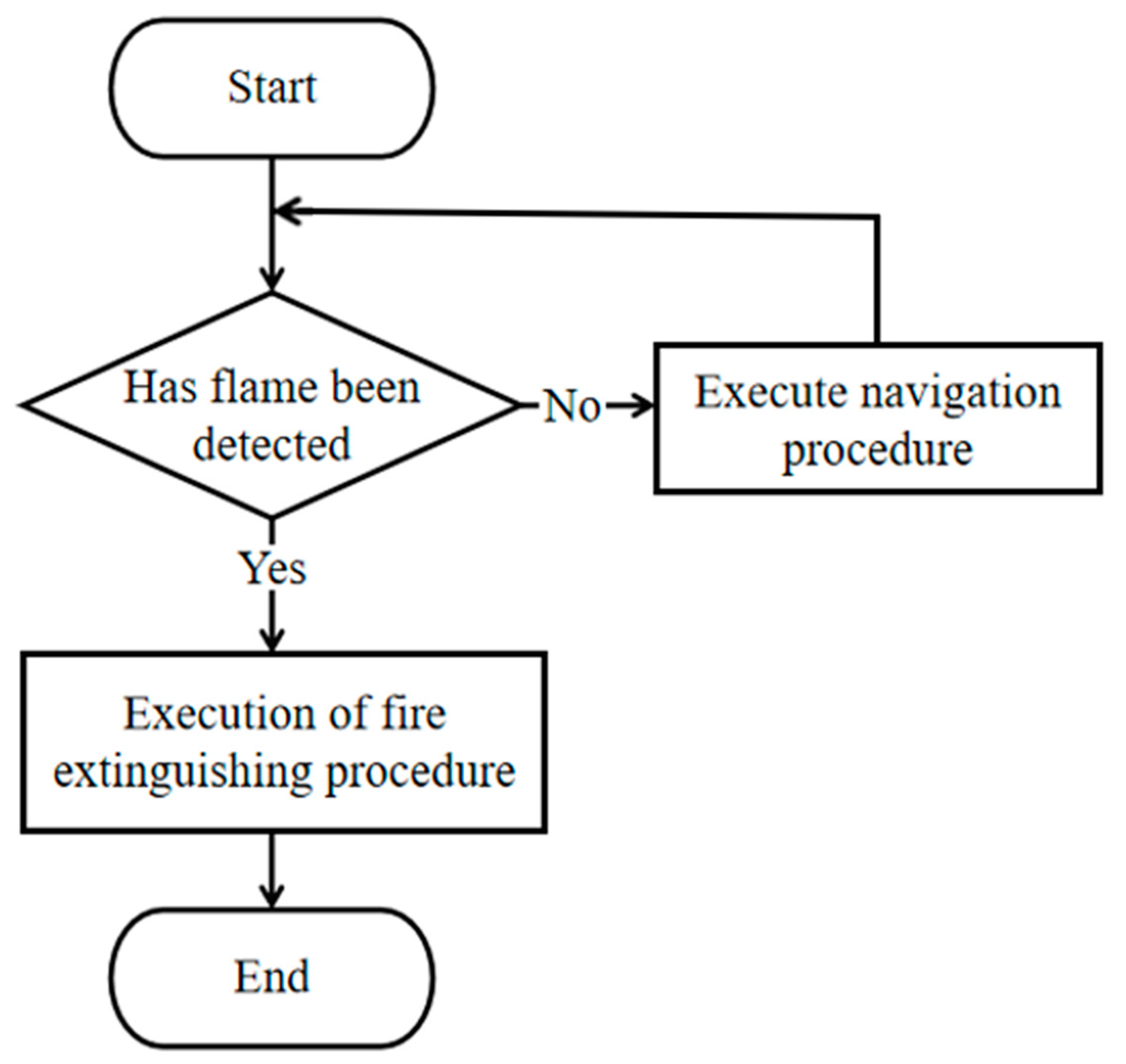

3.3. Data Analysis

4. Results

4.1. Analysis of the Measurement Model

4.2. Analysis of the Structural Model

5. Discussion and Conclusions

6. Limitations

Author Contributions

Funding

Conflicts of Interest

Appendix A


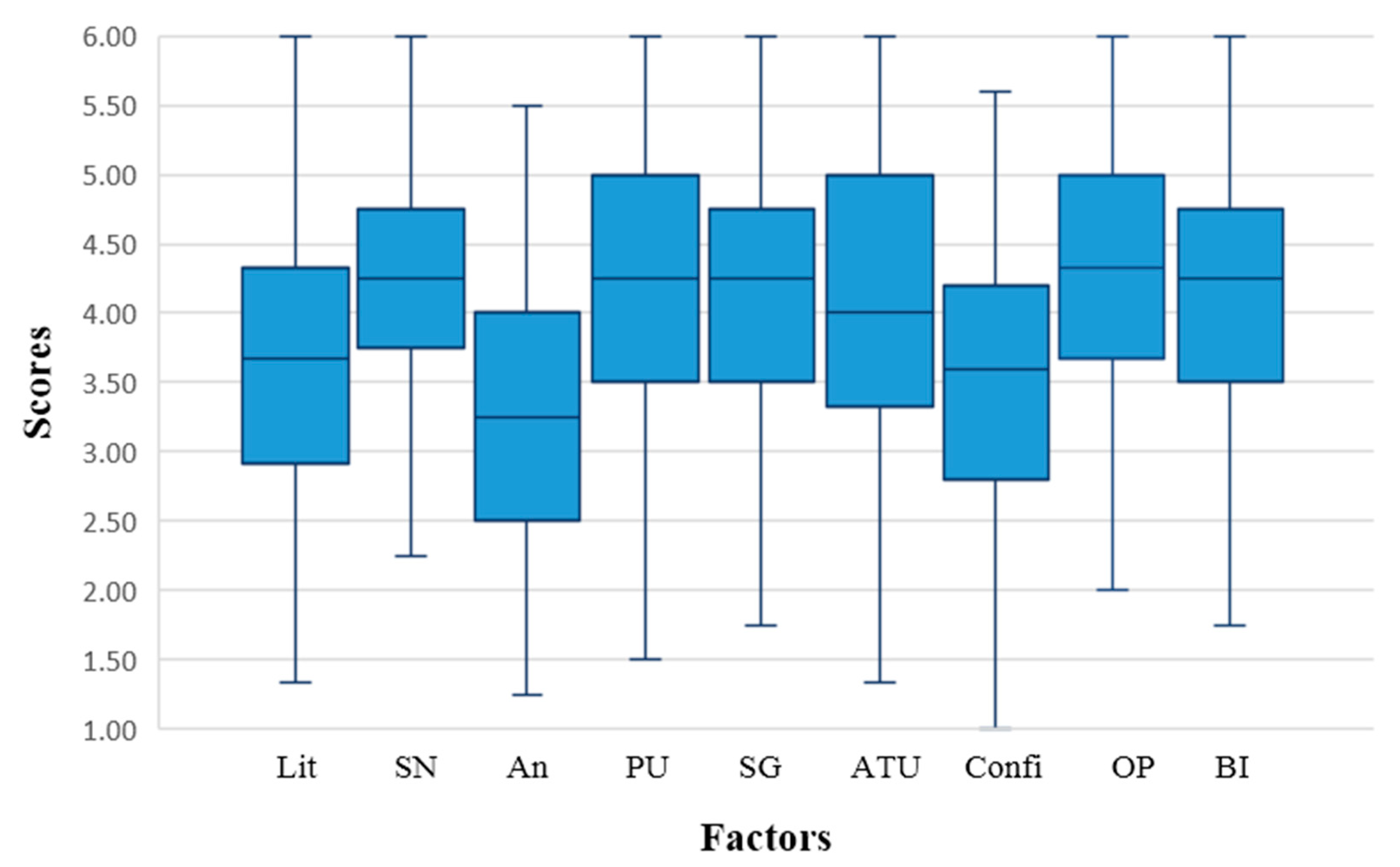

| Items | Standardized Loadings | CR | AVE |
|---|---|---|---|
| AI Literacy (Lit): M = 3.62, SD = 1.01, Cronbach’s Alpha = 0.897 | | | |
| Lit2 I know the processes through which deep learning enables AI to perform voice recognition tasks. | 0.803 | 0.899 | 0.596 |
| Lit1 I understand why AI technology needs big data. | 0.793 | | |
| Lit6 I understand how computers process images to produce visual recognition. | 0.786 | | |
| Lit3 I understand how AI technology optimizes the translation output for online translation. | 0.779 | | |
| Lit5 I know how AI can be used to predict possible outcomes through statistics. | 0.750 | | |
| Lit4 I understand how an AI assistant such as Siri or Hello Google handles human-computer interaction. | 0.719 | | |
| Subjective Norms (SN): M = 4.22, SD = 0.89, Cronbach’s Alpha = 0.806 | | | |
| SN2 My parents support me to learn about AI technology. | 0.759 | 0.808 | 0.513 |
| SN4 Most people I know think that I should learn about AI technology. | 0.713 | | |
| SN4 Most people I know think that I should learn about AI technology. | 0.701 | | |
| SN3 My classmates feel that it is necessary to learn about AI technology. | 0.691 | | |
| AI Anxiety (Anx): M = 3.26, SD = 0.95, Cronbach’s Alpha = 0.840 | | | |
| Anx5 I feel my heart sinking when I hear about AI advancement. | 0.815 | 0.844 | 0.577 |
| Anx1 When I think about AI, I cannot answer many questions about my future. | 0.811 | | |
| Anx2 When I consider the capabilities of AI, I think about how difficult my future will be. | 0.704 | | |
| Anx4 I have an uneasy, upset feeling when I think about AI. | 0.700 | | |
| Perceived Usefulness of AI (PU): M = 4.21, SD = 1.00, Cronbach’s Alpha = 0.829 | | | |
| PU1 Using AI technology enables me to accomplish tasks more quickly. | 0.812 | 0.833 | 0.555 |
| PU4 Using AI technology enhances my effectiveness. | 0.757 | | |
| PU2 Using AI technology improves my performance. | 0.731 | | |
| PU3 Using AI technology increases my productivity. | 0.674 | | |
| AI for Social Good (SG): M = 4.11, SD = 0.88, Cronbach’s Alpha = 0.816 | | | |
| SG2 AI can be used to help disadvantaged people. | 0.805 | 0.819 | 0.532 |
| SG3 AI can promote human well-being. | 0.742 | | |
| SG1 I wish to use my AI knowledge to serve others. | 0.713 | | |
| SG5 The use of AI should aim to achieve common good. | 0.650 | | |
| Attitude toward using AI (ATU): M = 4.05, SD = 1.14, Cronbach’s Alpha = 0.844 | | | |
| ATU2 Using AI technology is pleasant. | 0.846 | 0.847 | 0.648 |
| ATU4 I find using AI technology to be enjoyable. | 0.790 | | |
| ATU3 I have fun using AI technology. | 0.778 | | |
| Confidence in learning AI (Confi): M = 3.52, SD = 1.03, Cronbach’s Alpha = 0.890 | | | |
| Confi3 I am certain I can understand the most difficult material presented in the AI classes. | 0.826 | 0.892 | 0.623 |
| Confi5 I am confident I can understand the most complex material presented by the instructor in the AI classes. | 0.798 | | |
| Confi2 As I am taking the AI classes, I believe that I can succeed if I try hard enough. | 0.793 | | |
| Confi1 I feel confident that I will do well in the AI classes. | 0.772 | | |
| Confi4 I am confident I can learn the basic concepts about AI taught in the lessons. | 0.754 | | |
| AI Optimism (OP): M = 4.26, SD = 1.11, Cronbach’s Alpha = 0.859 | | | |
| OP1 I am hopeful about my future in a world where AI is commonly used. | 0.818 | 0.859 | 0.670 |
| OP2 I always look on the positive side of things in the emerging AI world. | 0.817 | | |
| OP4 Overall, I expect more good things than bad things to happen to me in the AI-enabled world. | 0.821 | | |
| Behavioral Intention (BI): M = 4.09, SD = 1.06, Cronbach’s Alpha = 0.913 | | | |
| BI1 I will continue to learn AI technology in the future. | 0.873 | 0.915 | 0.730 |
| BI3 I will keep myself updated with the latest AI applications. | 0.861 | | |
| BI4 I plan to spend time in learning AI technology in the future. | 0.848 | | |
| BI2 I will pay attention to emerging AI applications. | 0.831 | | |
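The CR and AVE columns above can be recomputed from the standardized loadings. The sketch below assumes the conventional formulas, CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ²/k for k items, with uncorrelated measurement errors; the article does not state which formula or software produced its values, and the loadings are themselves rounded, so recomputed figures may differ from the reported ones in the third decimal. The names `LOADINGS`, `composite_reliability`, and `average_variance_extracted` are illustrative, not taken from the article.

```python
from math import fsum

# Standardized loadings copied from the measurement-model table above.
LOADINGS = {
    "Lit": [0.803, 0.793, 0.786, 0.779, 0.750, 0.719],
    "SN": [0.759, 0.713, 0.701, 0.691],
    "Anx": [0.815, 0.811, 0.704, 0.700],
    "PU": [0.812, 0.757, 0.731, 0.674],
    "SG": [0.805, 0.742, 0.713, 0.650],
    "ATU": [0.846, 0.790, 0.778],
    "Confi": [0.826, 0.798, 0.793, 0.772, 0.754],
    "OP": [0.818, 0.817, 0.821],
    "BI": [0.873, 0.861, 0.848, 0.831],
}


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each item's error variance is 1 - loading^2.
    """
    total = fsum(loadings)
    error_var = fsum(1.0 - lam * lam for lam in loadings)
    return total ** 2 / (total ** 2 + error_var)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return fsum(lam * lam for lam in loadings) / len(loadings)


if __name__ == "__main__":
    for name, lams in LOADINGS.items():
        print(f"{name:6s} CR={composite_reliability(lams):.3f} "
              f"AVE={average_variance_extracted(lams):.3f}")
```

Running it prints one line per construct; comparing those lines with the CR and AVE columns is a quick consistency check on a transcribed table.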

| Construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Lit | (0.772) | | | | | | | | |
| 2. SN | 0.251 *** | (0.716) | | | | | | | |
| 3. Anx | −0.074 | −0.124 ** | (0.760) | | | | | | |
| 4. PU | 0.173 *** | 0.141 ** | −0.064 | (0.745) | | | | | |
| 5. SG | 0.249 *** | 0.254 *** | −0.170 *** | 0.150 *** | (0.729) | | | | |
| 6. ATU | 0.542 *** | 0.302 *** | −0.388 *** | 0.221 *** | 0.376 *** | (0.805) | | | |
| 7. Confi | 0.514 *** | 0.361 *** | −0.096 * | 0.415 *** | 0.320 *** | 0.425 *** | (0.795) | | |
| 8. OP | 0.418 *** | 0.452 *** | −0.383 *** | 0.268 *** | 0.527 *** | 0.507 *** | 0.458 *** | (0.819) | |
| 9. BI | 0.511 *** | 0.417 *** | −0.276 *** | 0.318 *** | 0.533 *** | 0.649 *** | 0.602 *** | 0.692 *** | (0.854) |
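The parenthesized diagonal entries in the correlation table appear to be the square roots of each construct's AVE, which is the layout used for the Fornell–Larcker discriminant-validity check: a construct passes when √AVE exceeds the absolute value of its correlation with every other construct. The sketch below applies that check, assuming the AVE column of the measurement-model table and the lower-triangle correlations above (significance stars dropped) as input; `fornell_larcker` and the other names are hypothetical.

```python
import math

CONSTRUCTS = ["Lit", "SN", "Anx", "PU", "SG", "ATU", "Confi", "OP", "BI"]

# AVE values from the measurement-model table.
AVE = {"Lit": 0.596, "SN": 0.513, "Anx": 0.577, "PU": 0.555, "SG": 0.532,
       "ATU": 0.648, "Confi": 0.623, "OP": 0.670, "BI": 0.730}

# Lower triangle of the correlation table: row i holds r(i, 0) .. r(i, i - 1).
LOWER = [
    [],
    [0.251],
    [-0.074, -0.124],
    [0.173, 0.141, -0.064],
    [0.249, 0.254, -0.170, 0.150],
    [0.542, 0.302, -0.388, 0.221, 0.376],
    [0.514, 0.361, -0.096, 0.415, 0.320, 0.425],
    [0.418, 0.452, -0.383, 0.268, 0.527, 0.507, 0.458],
    [0.511, 0.417, -0.276, 0.318, 0.533, 0.649, 0.602, 0.692],
]


def correlation(i, j):
    """Look up r_ij for i != j; the matrix is symmetric, so swap into the lower triangle."""
    if i < j:
        i, j = j, i
    return LOWER[i][j]


def fornell_larcker():
    """Return {construct: (sqrt_ave, max_abs_r, passes)} for every construct."""
    results = {}
    n = len(CONSTRUCTS)
    for i, name in enumerate(CONSTRUCTS):
        root = math.sqrt(AVE[name])
        max_r = max(abs(correlation(i, j)) for j in range(n) if j != i)
        results[name] = (root, max_r, root > max_r)
    return results


if __name__ == "__main__":
    for name, (root, max_r, ok) in fornell_larcker().items():
        print(f"{name:6s} sqrt(AVE)={root:.3f} max|r|={max_r:.3f} pass={ok}")
```

Taking the absolute value matters here because several correlations involving AI anxiety are negative; a strongly negative correlation threatens discriminant validity as much as a strongly positive one.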

| Hypothesis | Unstandardized Estimate (B) | t-Value | Standardized Estimate | Status |
|---|---|---|---|---|
| H1: Lit → PU | 0.139 ** | 3.125 | 0.162 | Accepted |
| H2: Lit → SG | 0.156 *** | 3.854 | 0.199 | Accepted |
| H3: Lit → ATU | 0.569 *** | 11.157 | 0.510 | Accepted |
| H4: Lit → Confi | 0.406 *** | 6.829 | 0.395 | Accepted |
| H5: Lit → OP | 0.213 *** | 3.577 | 0.206 | Accepted |
| H6: Lit → BI | −0.030 | −0.527 | −0.026 | Not Accepted |
| H7: SN → PU | 0.113 * | 1.963 | 0.108 | Accepted |
| H8: SN → SG | 0.204 *** | 3.876 | 0.213 | Accepted |
| H9: SN → ATU | 0.127 * | 2.189 | 0.093 | Accepted |
| H10: SN → Confi | 0.266 *** | 4.751 | 0.211 | Accepted |
| H11: SN → OP | 0.343 *** | 6.070 | 0.272 | Accepted |
| H12: SN → BI | 0.027 | 0.475 | 0.018 | Not Accepted |
| H13: Anx → PU | −0.027 | −0.743 | −0.037 | Not Accepted |
| H14: Anx → SG | −0.102 ** | −3.073 | −0.152 | Accepted |
| H15: Anx → ATU | −0.352 *** | −8.890 | −0.370 | Accepted |
| H16: Anx → Confi | 0.014 | 0.333 | 0.016 | Not Accepted |
| H17: Anx → OP | −0.270 *** | −6.562 | −0.306 | Accepted |
| H18: Anx → BI | 0.089 * | 2.081 | 0.087 | Accepted |
| H19: PU → SG | 0.083 | 1.813 | 0.091 | Not Accepted |
| H20: PU → ATU | 0.119 * | 2.341 | 0.091 | Accepted |
| H21: PU → OP | 0.132 ** | 2.562 | 0.110 | Accepted |
| H22: PU → BI | 0.143 ** | 2.951 | 0.103 | Accepted |
| H23: PU → Confi | 0.404 *** | 7.850 | 0.336 | Accepted |
| H24: SG → ATU | 0.275 *** | 4.410 | 0.193 | Accepted |
| H25: SG → Confi | 0.161 ** | 2.698 | 0.122 | Accepted |
| H26: SG → OP | 0.510 *** | 7.979 | 0.386 | Accepted |
| H27: SG → BI | 0.205 ** | 3.081 | 0.134 | Accepted |
| H28: ATU → OP | −0.008 | −0.124 | −0.008 | Not Accepted |
| H29: ATU → BI | 0.405 *** | 6.860 | 0.379 | Accepted |
| H30: ATU → Confi | 0.027 | 0.422 | 0.030 | Not Accepted |
| H31: Confi → OP | 0.075 | 1.358 | 0.075 | Not Accepted |
| H32: Confi → BI | 0.237 *** | 4.598 | 0.205 | Accepted |
| H33: OP → BI | 0.429 *** | 6.007 | 0.372 | Accepted |
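In the structural-model table, every path marked "Accepted" has |t| ≥ 1.96 and every "Not Accepted" path has |t| < 1.96, so the decisions correspond to a two-tailed test at α = 0.05. The sketch below reproduces that decision rule using a standard-normal (large-sample z) approximation for the p-value; this reference distribution is an assumption, since the article's exact p-value procedure is not shown here. The names `HYPOTHESES`, `two_tailed_p`, and `status` are hypothetical.

```python
import math

# (path, t-value) for each hypothesis, copied from the structural-model table.
HYPOTHESES = {
    "H1": ("Lit->PU", 3.125), "H2": ("Lit->SG", 3.854),
    "H3": ("Lit->ATU", 11.157), "H4": ("Lit->Confi", 6.829),
    "H5": ("Lit->OP", 3.577), "H6": ("Lit->BI", -0.527),
    "H7": ("SN->PU", 1.963), "H8": ("SN->SG", 3.876),
    "H9": ("SN->ATU", 2.189), "H10": ("SN->Confi", 4.751),
    "H11": ("SN->OP", 6.070), "H12": ("SN->BI", 0.475),
    "H13": ("Anx->PU", -0.743), "H14": ("Anx->SG", -3.073),
    "H15": ("Anx->ATU", -8.890), "H16": ("Anx->Confi", 0.333),
    "H17": ("Anx->OP", -6.562), "H18": ("Anx->BI", 2.081),
    "H19": ("PU->SG", 1.813), "H20": ("PU->ATU", 2.341),
    "H21": ("PU->OP", 2.562), "H22": ("PU->BI", 2.951),
    "H23": ("PU->Confi", 7.850), "H24": ("SG->ATU", 4.410),
    "H25": ("SG->Confi", 2.698), "H26": ("SG->OP", 7.979),
    "H27": ("SG->BI", 3.081), "H28": ("ATU->OP", -0.124),
    "H29": ("ATU->BI", 6.860), "H30": ("ATU->Confi", 0.422),
    "H31": ("Confi->OP", 1.358), "H32": ("Confi->BI", 4.598),
    "H33": ("OP->BI", 6.007),
}


def two_tailed_p(t):
    """Two-tailed p-value of t under a standard-normal approximation."""
    return math.erfc(abs(t) / math.sqrt(2.0))


def status(t, alpha=0.05):
    """Label a path 'Accepted' when its two-tailed p-value falls below alpha."""
    return "Accepted" if two_tailed_p(t) < alpha else "Not Accepted"


if __name__ == "__main__":
    for label, (path, t) in HYPOTHESES.items():
        print(f"{label:4s} {path:11s} t={t:7.3f} p={two_tailed_p(t):.4f} {status(t)}")
```

Paths near the cutoff, such as H7 (t = 1.963), are the ones whose status is most sensitive to the choice of reference distribution; a bootstrap or small-sample t reference could move them to the other side.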

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chai, C.S.; Wang, X.; Xu, C. An Extended Theory of Planned Behavior for the Modelling of Chinese Secondary School Students’ Intention to Learn Artificial Intelligence. Mathematics 2020, 8, 2089. https://doi.org/10.3390/math8112089

Chai CS, Wang X, Xu C. An Extended Theory of Planned Behavior for the Modelling of Chinese Secondary School Students’ Intention to Learn Artificial Intelligence. Mathematics. 2020; 8(11):2089. https://doi.org/10.3390/math8112089

Chicago/Turabian Style: Chai, Ching Sing, Xingwei Wang, and Chang Xu. 2020. "An Extended Theory of Planned Behavior for the Modelling of Chinese Secondary School Students’ Intention to Learn Artificial Intelligence." Mathematics 8, no. 11: 2089. https://doi.org/10.3390/math8112089

APA Style: Chai, C. S., Wang, X., & Xu, C. (2020). An Extended Theory of Planned Behavior for the Modelling of Chinese Secondary School Students’ Intention to Learn Artificial Intelligence. Mathematics, 8(11), 2089. https://doi.org/10.3390/math8112089