Abstract

We propose a simple multiple outlier identification method for parametric location-scale and shape-scale models when the number of possible outliers is not specified. The method is based on a result giving asymptotic properties of extreme z-scores. Robust estimators of model parameters are used to define the z-scores. An extensive simulation study was conducted to compare the proposed method with existing methods. For the normal family, the method is compared with the well-known Davies-Gather, Rosner’s, Hawking’s and Bolshev’s multiple outlier identification methods. The choice of an upper limit for the number of possible outliers when applying Rosner’s test is discussed. For other families, the proposed method is compared with a method generalizing the Davies-Gather method. In most situations, the new method has the highest outlier identification power in terms of masking and swamping values. We also created the R package outliersTests, which implements the proposed test.

1. Introduction

The problem of multiple outlier identification has received the attention of many authors. The majority of outlier identification methods define rules for the rejection of the most extreme observations. The bulk of publications concentrate on the normal distribution (see [1,2,3,4,5,6]; see also the surveys in [7,8]). For the non-normal case, most of the literature pertains to the exponential and gamma distributions, see [9,10,11,12,13,14,15,16,17]. Outlier identification is important when analyzing data collected in a wide range of areas: pollution [18], IoT [19], medicine [20], fraud [21], smart city applications [22], and many more.

When constructing outlier identification methods, most authors suppose that the number s of observations suspected to be outliers is specified. These methods have a serious drawback: only two conclusions are possible, namely that exactly s observations are declared outliers or that outliers are absent. It is more natural to consider methods which do not specify the number of suspected observations, or at least specify only an upper limit s for it. Such methods are not very numerous and they concern normal or exponential samples. These are the methods of [1,5,23] for normal samples and of [15,16,24] for exponential samples. The only method which does not specify the upper limit s is the method of [2] for normal samples.

We give a competitive and simple method for outlier identification in samples from location-scale and shape-scale families of probability distributions. The upper limit s is not specified, as in the case of the Davies-Gather method. The method is based on a theorem giving asymptotic properties of extreme z-scores. Robust estimators of model parameters are used to define the z-scores.

The investigation presented below showed that the proposed outlier identification method has superior performance as compared to existing methods. The proposed method considerably widens the scope of models applied in statistical analysis of real data. In addition to the normal family, many two-parameter families such as the Weibull, logistic, loglogistic, extreme value, Cauchy, Laplace and other families can be used for outlier identification. So the method may be useful for models which cannot be symmetrized by simple transformations such as the log-transform.

An advantage of the new method is that complicated computing is not needed, because the critical values of the test statistic do not have to be found by simulation for each sample size. This allowed us to create the R package outliersTests, which can be used for outlier search in real datasets. Another advantage is a very good potential for generalizing the proposed method to regression, time series and other models. To have a competitor, we present not only the new method but also a generalization of the Davies-Gather method to non-normal data.

In Section 2 we present a short overview of the notion of the outlier region given by [2]. In Section 3 we give asymptotic properties of extreme z-scores based on equivariant estimators of model parameters, and introduce a new outlier identification method for parametric models based on the asymptotic result and robust estimators. In Section 4 we consider rather evident generalizations of Davies-Gather tests for normal data to location-scale families. In Section 5 we give a short overview of known multiple outlier identification methods for normal samples which do not specify an exact number of suspected outliers. In Section 6 we compare performance of the new and existing methods.

2. Outliers and Outlier Regions

Suppose that the data are independent random variables $X_1, \dots, X_n$. Denote by $F_i$ the c.d.f. of $X_i$.

Let $\{F_\theta,\ \theta \in \Theta\}$ be a parametric family of absolutely continuous cumulative distribution functions with continuous unimodal densities f on the support of the c.d.f. F.

Suppose that if the data are not contaminated with unusual observations, then the following null hypothesis $H_0$ is true: there exists $\theta \in \Theta$ such that

$$F_1 = \dots = F_n = F_\theta.$$

There are two different definitions of an outlier. In the first case, an outlier is an observation which falls into some outlier region. The outlier region is a set such that the probability for at least one observation from a sample to fall into it is small if the hypothesis $H_0$ is true. In such a case the probability that a specified observation $X_i$ falls into the outlier region is very small. If an observation $X_i$ has a distribution different from that under $H_0$, then this probability may be considerably higher.

In the second case, the value $x_i$ of $X_i$ is an outlier if the probability distribution of $X_i$ is different from that under $H_0$, formally $F_i \neq F_\theta$. In this case, outliers are often called contaminants.

Therefore, in the first case, there is a very small probability of having an outlier under $H_0$. If the hypothesis $H_0$ holds, then contaminants are absent and, with very small probability, some outliers (in the first sense) are possible. If contaminants are present, then the hypothesis $H_0$ is not true. Nevertheless, contaminants are not necessarily outliers (in the first sense) because it is possible that they do not fall into the outlier region. So the two notions are different. Both definitions give approximately the same outliers if the alternative distribution is concentrated in the outlier region. Precisely such contaminants can be called outliers in the sense that outliers are anomalous extreme observations. In such a case it is possible to compare outlier and contaminant search methods.

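In this paper, we consider location-scale and shape-scale families. Location-scale families have the form $F(x) = F_0((x-\mu)/\sigma)$, $\mu \in \mathbb{R}$, $\sigma > 0$, with completely specified baseline c.d.f. $F_0$ and p.d.f. $f_0$. Shape-scale families have the form $F(x) = G_0((x/\theta)^{\nu})$, $\theta, \nu > 0$, with completely specified baseline c.d.f. $G_0$ and p.d.f. $g_0$. By a logarithmic transformation, shape-scale families are transformed to location-scale families, so we concentrate on location-scale families. Methods for such families are easily modified to methods for shape-scale families.

The right-sided $\alpha$-outlier region for a location-scale family is

$$\mathrm{out}_r(\alpha, F) = \{x : x > \mu + \sigma F_0^{-1}(1-\alpha)\},$$

and the left-sided $\alpha$-outlier region is

$$\mathrm{out}_l(\alpha, F) = \{x : x < \mu + \sigma F_0^{-1}(\alpha)\}.$$

The two-sided $\alpha$-outlier region has the form

$$\mathrm{out}(\alpha, F) = \{x : x \notin [\mu + \sigma F_0^{-1}(\alpha/2),\ \mu + \sigma F_0^{-1}(1-\alpha/2)]\}.$$

If $f_0$ is symmetric, then the two-sided outlier region is simpler:

$$\mathrm{out}(\alpha, F) = \{x : |x - \mu|/\sigma > F_0^{-1}(1-\alpha/2)\}.$$

The value of $\alpha$ is chosen depending on the size n of a sample: $\alpha = \alpha_n$. The choice is based on the assumption that under $H_0$, for some $\tilde\alpha$ close to zero,

$$P\{X_i \notin \mathrm{out}(\alpha_n, F),\ i = 1, \dots, n\} = 1 - \tilde\alpha. \tag{3}$$

The equality (3) means that under $H_0$ the probability that none of $X_1, \dots, X_n$ falls into the $\alpha_n$-outlier region is $1-\tilde\alpha$. It implies that

$$\alpha_n = 1 - (1-\tilde\alpha)^{1/n}.$$

The sequence $\alpha_n$ decreases from $\tilde\alpha$ to 0 as n goes from 1 to ∞.

The first definition of an outlier is as follows: for a sample of size n, a realization $x_i$ of $X_i$ is called an outlier if $x_i \in \mathrm{out}(\alpha_n, F)$; $x_i$ is called a right outlier if $x_i \in \mathrm{out}_r(\alpha_n, F)$.

The number of outliers under $H_0$ has the binomial distribution $B(n, \alpha_n)$, and the expected number of outliers in the sample under $H_0$ is $n\alpha_n$. Please note that $n\alpha_n \to -\ln(1-\tilde\alpha)$ as $n \to \infty$. For small $\tilde\alpha$ the expected number of outliers is therefore approximately $-\ln(1-\tilde\alpha) \approx \tilde\alpha$, i.e., it practically does not depend on n. So under $H_0$ the expected number of outliers is negligible with respect to the sample size n.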
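The dependence of the outlier-region level on the sample size can be checked numerically. The following Python sketch is an illustration only: the function names are hypothetical, the relation $(1-\alpha_n)^n = 1-\tilde\alpha$ is the one implied by (3) for independent observations, and the standard normal baseline is chosen purely as an example.

```python
import math
from statistics import NormalDist


def alpha_n(n: int, alpha_tilde: float) -> float:
    """Per-observation level solving (1 - alpha_n)**n = 1 - alpha_tilde."""
    return 1.0 - (1.0 - alpha_tilde) ** (1.0 / n)


def expected_outliers(n: int, alpha_tilde: float) -> float:
    """Mean of the binomial number of outliers under the null hypothesis."""
    return n * alpha_n(n, alpha_tilde)


def normal_two_sided_bound(n: int, alpha_tilde: float) -> float:
    """Bound c such that |x - mu| / sigma > c defines the two-sided
    outlier region for a normal sample (symmetric baseline density)."""
    return NormalDist().inv_cdf(1.0 - alpha_n(n, alpha_tilde) / 2.0)


# Example: the expected number of outliers stabilises quickly with n.
for size in (1, 20, 100, 1000):
    print(size, alpha_n(size, 0.05), expected_outliers(size, 0.05))
print("limit:", -math.log(1.0 - 0.05))
```

The printed values show the level shrinking with n while the expected number of outliers stays close to its limit.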

3. New Method

3.1. Preliminary Results

Suppose that a c.d.f. belongs also to the domain of attraction , (see [25]).

If , then there exist normalizing constants and such that Similarly, if , , then ,

One of possible choices of the sequences and is

In the particular case of the normal distribution equivalent form can be used. Expressions of and for some most used distributions are given in Table 1.

Table 1.

Expressions of and .

Condition A.

- (a)

- and are consistent estimators of μ and σ;

- (b)

- the limit distribution of ( is non-degenerate;

- (c)

Condition A (c) is satisfied for many location-scale models including the normal, type I extreme value, type II extreme value, logistic, Laplace (), Cauchy ().

Set , . The random variables are called z-scores. Denote by and the respective order statistics

The following theorem is useful for right outliers detection test construction.

Theorem 1.

If and Conditions A hold, then for fixed s

as , where are i.i.d. standard exponential random variables.

If , and Conditions A hold, then the limit random vector is

Proof of Theorem 1.

Please note that

The s-dimensional random vector such that its ith component is the first term of the right side converges in distribution to the random vector given in the formulation of the theorem. It follows from Theorem 2.1.1 of [25] and Condition A (a). So it is sufficient to show that the second and the third terms converge to zero in probability. The second term is

the third term is

By Condition A (c)

It also implies because Proof completed. □

Remark 1.

Please note that . It implies that if , then for fixed i, ,

Similarly, if , , then for fixed i, ,

The following theorem is useful for construction of outlier detection tests in two-sided case when is symmetric. For any sequence denote by the ordered absolute values .

Theorem 2.

Suppose that the function is symmetric. If , and Conditions A hold, then for fixed s

as .

Proof of Theorem 2.

For any the following equality holds:

The c.d.f. of the random variables is , so if , then , and for the sequence the normalizing sequences are . So the s-dimensional random vector such that its ith component is the second term of the right side converges in distribution to the random vector given in the formulation of the theorem. It follows from Theorem 2.1.1 of [25]. So it is sufficient to show that the first term converges in probability to zero.

Please note that , and

So Analogously, the inequality implies that

Theorem 2.1.1 in [25] applied to the random variables implies that there exist a random variable with the c.d.f. () or , , (), such that

The convergence and Condition A (c) imply:

These results and Conditions A (a), (b) imply that the first term at the right of (8) converges in probability to zero. Proof completed. □

Remark 2.

Theorem 2 implies that if , , then for fixed i, ,

and if , , then

Suppose now that the function is not symmetric. Set . The c.d.f. and p.d.f. of are and , respectively. Set

For example, if type I extreme value distribution is considered, then

For the type II extreme value distribution have the same expressions as for the Type I extreme value distribution, respectively.

Remark 3.

Similarly as in Theorem 1 we have that if s is fixed and , then for fixed i, ,

and if , , then for fixed i, ,

3.2. Robust Estimators for Location-Scale Distributions

The choice of the estimators and is important when outlier detection problem is considered. The ML estimators from the complete sample are not stable when outliers exist.

In the case of location-scale families highly efficient robust estimators of the location and scale parameters and are (see [26])

where is the empirical median, are absolute values of the differences and is the lth order statistic from .

The constant d has the form , where is the inverse of the c.d.f. of , .

Expressions of and values d for some well-known location-scale families are given in Table 2.

Table 2.

Values of d for various probability distributions.

The above considered estimators are equivariant under , i.e. for any , the following equalities hold:

Equivariant estimators have the following property: the distribution of , and does not depend on the values of the parameters and .
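A minimal Python sketch of such estimators is given below, under explicit assumptions. The scale estimator is taken to be of the Rousseeuw-Croux $Q_n$ type: the index l is assumed to be $\binom{h}{2}$ with $h = [n/2] + 1$, and the constant d is assumed to be the reciprocal of the 5/8 quantile of the difference of two independent baseline variables. Neither formula is legible in the text above, and all function names are hypothetical.

```python
from math import comb
from statistics import NormalDist, median


def qn_constant_normal() -> float:
    """d = 1 / K^{-1}(5/8), with K the c.d.f. of the difference of two
    independent standard normal variables, i.e. of N(0, 2) (assumption)."""
    return 1.0 / (2.0 ** 0.5 * NormalDist().inv_cdf(5.0 / 8.0))


def robust_location_scale(x, d):
    """Return (empirical median, d * l-th smallest |x_i - x_j|, i < j).

    Assumption: l = C(h, 2) with h = n // 2 + 1; requires n >= 2.
    """
    n = len(x)
    h = n // 2 + 1
    l = comb(h, 2)
    diffs = sorted(abs(x[i] - x[j]) for i in range(n) for j in range(i + 1, n))
    return median(x), d * diffs[l - 1]


def z_scores(x, d):
    """z-scores based on the robust estimators above."""
    mu_hat, sigma_hat = robust_location_scale(x, d)
    return [(v - mu_hat) / sigma_hat for v in x]
```

Because both estimators are equivariant, applying `z_scores` to `a * x + b` with `a > 0` returns the same values as for `x`, which is the property used in the next subsection.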

3.3. Right Outliers Identification Method for Location-Scale Families

Theorem 3.

The distribution of the statistic is parameter-free for any fixed n.

Proof of Theorem 3.

The result follows from the equality

equivariance of the estimators , and the fact that the distribution of the random vector does not depend on the values of the parameters and . □

Denote by the critical value of the statistic . Please note that it is exact, not asymptotic critical value: under .

Theorem 1 implies that the limit distribution (as ) of the random variable coincides with the distribution of the random variable where , are i.i.d. standard exponential random variables. The random variables are dependent identically distributed and the distribution of each is uniform: .

Denote by the critical values of the random variable . They are easily found by simulation: generate s i.i.d. standard exponential random variables many times and compute the values of the random variables .
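The simulation just described can be sketched in Python as follows. This is a hedged illustration, not the authors' code: the explicit form of the limiting variables is not legible in the text above, so the sketch assumes that the i-th variable is the survival function of the partial sum $E_1 + \dots + E_i$ evaluated at that sum (which is uniform on (0, 1), as stated), and that the limiting statistic is the maximum over $i = 1, \dots, s$. All names are hypothetical.

```python
import math
import random


def erlang_sf(x: float, k: int) -> float:
    """P(E_1 + ... + E_k > x) for i.i.d. standard exponential E_j."""
    term, total = 1.0, 1.0
    for j in range(1, k):
        term *= x / j
        total += term
    return math.exp(-x) * total


def simulate_v_max(s: int, rng: random.Random) -> float:
    """One draw of max_i V_i, where V_i is assumed to be the survival
    function of the i-th partial sum evaluated at that sum."""
    partial, best = 0.0, 0.0
    for i in range(1, s + 1):
        partial += rng.expovariate(1.0)
        best = max(best, erlang_sf(partial, i))
    return best


def critical_value(s: int, alpha: float, reps: int = 100_000, seed: int = 1) -> float:
    """Empirical (1 - alpha)-quantile of the simulated maximum."""
    rng = random.Random(seed)
    draws = sorted(simulate_v_max(s, rng) for _ in range(reps))
    return draws[min(reps - 1, math.ceil((1.0 - alpha) * reps) - 1)]
```

For s = 1 the simulated quantile should be close to 1 − α, since a single such variable is uniform; for larger s it moves towards 1.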

Our simulations showed that the below proposed outlier identification methods based on exact and approximate critical values of the statistic give practically the same results, so for samples of size we recommend to approximate the -critical level of the statistic by the critical values which depend only on s. We shall see that for the purpose of outlier identification only the critical values are needed. We found that the critical values are: , , .

Our simulations showed that the performance of the below proposed outlier identification method based on exact and approximate critical values of the statistic is similar for samples of size .

We write shortly -method for the below considered method.

method for right outliers. Begin outlier search using observations corresponding to the largest values of . We recommend beginning with the five largest. So take and compute the values of the statistics

If , then it is concluded that outliers are absent and no further investigation is done. Under the probability of such event is approximately .

If , then it is concluded that outliers exist.

Please note (see the classification scheme below) that if , then at least one observation is declared an outlier. So the probability of declaring the absence of outliers does not depend on the following classification scheme.

If it is concluded that outliers exist then search of outliers is done using the following steps.

Step 1. Set . Please note that the maximum exists because .

If , then classification is finished at this step: observations are declared as right outliers because if the value of is declared as an outlier, then it is natural to declare values of as outliers, too.

If , then it is possible that the number of outliers is higher than 5. Then the observation corresponding to (i.e., corresponding to ) is declared as an outlier and we proceed to Step 2.

Step 2. The above written procedure is repeated taking instead of ; here

Set . If , the classification is finished and observations are declared as outliers.

If , then it is possible that the number of outliers is higher than 6. Then the observation corresponding to the largest is declared as an outlier, so that in total 2 observations (i.e., the observations corresponding to , that is, to and ) are declared as outliers, and we proceed to Step 3, and so on. Classification finishes at the lth step when . So we declare $l - 1$ outliers in the previous steps and outliers in the last one. The total number of observations declared as outliers is . These observations are the values of .
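The stepwise scheme above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' implementation: the test statistic is passed in as a hypothetical callable `u_stat(i, removed)`, which must return the statistic for the i-th largest of the observations left after dropping the `removed` largest ones. The treatment of a step in which no statistic exceeds the critical value (zero additional outliers) and the loop guard are also assumptions.

```python
from typing import Callable


def classify_right_outliers(
    u_stat: Callable[[int, int], float],
    n: int,
    crit: float,
    s: int = 5,
) -> int:
    """Return how many of the largest observations are declared outliers.

    At each step the window of the s largest remaining observations is
    inspected. If the last exceedance is at position d < s, the search
    stops and d more observations are declared outliers; if d == s, only
    the largest remaining observation is declared an outlier and the
    window is shifted by one.
    """
    removed = 0
    while removed + s <= n:  # guard: keep the window inside the sample
        exceed = [i for i in range(1, s + 1) if u_stat(i, removed) > crit]
        d = max(exceed) if exceed else 0
        if d < s:
            return removed + d
        removed += 1
    return removed
```

With a window of five and seven clear outliers, the function shifts the window three times and then declares four more observations, returning 7, which mirrors the course of the illustrative example in Section 3.8.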

3.4. Left Outliers Identification Method for Location-Scale Families

Let be the normalizing constants defined by (12). If , , then set

If , , then replace by . Denote by the critical value of the statistic .

Theorem 1 and Remark 3 imply that the limit distribution (as ) of the random variable coincides with the distribution of the random variable . So the critical values are approximated by the critical values .

The left outliers search method coincides with the right outliers search method after replacing + by − in all formulas.

3.5. Outlier Detection Tests for Location-Scale Families: Two-Sided Alternative, Symmetric Distributions

Let be defined by (5). If , , then set

If , , then replace by . Denote by the critical value of the statistic .

Theorem 2 and Remark 2 imply that the limit distribution (as ) of the random variable coincides with the distribution of the random variable . So the critical values are approximated by the critical values .

The outliers search method coincides with the right outliers search method after omitting the upper index + in all formulas.

3.6. Outlier Detection Tests for Location-Scale Families: Two-Sided Alternative, Non-Symmetric Distributions

Suppose now that the function is not symmetric. Let be defined by (12).

Begin outlier search using observations corresponding to the largest and the smallest values of . We recommend beginning with the five smallest and the five largest. So compute the values of the statistics and . If and , then it is concluded that outliers are absent and no further investigation is done.

If or , then it is concluded that outliers exist. If , then left outliers are searched as in Section 3.4. If , then right outliers are searched as in Section 3.3. The only difference is that is replaced by in all formulas.

3.7. Outlier Identification Method for Shape-Scale Families

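If shape-scale families of the form $F(x) = G_0((x/\theta)^{\nu})$ with specified $G_0$ are considered, then the tests given above for location-scale families can be used, because if $X_1, \dots, X_n$ is a sample from a shape-scale family, then $Y_i = \ln X_i$, $i = 1, \dots, n$, is a sample from a location-scale family with $\mu = \ln\theta$, $\sigma = 1/\nu$, $F_0(y) = G_0(e^{y})$.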
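The reduction to the location-scale case amounts to taking logarithms. The short Python sketch below illustrates it under the assumption that the shape-scale family is written as $G_0((x/\theta)^{\nu})$ with scale $\theta$ and shape $\nu$; the function names and parameter symbols are hypothetical.

```python
import math


def to_location_scale(sample):
    """Map a positive shape-scale sample to the log scale."""
    if any(v <= 0 for v in sample):
        raise ValueError("shape-scale data must be positive")
    return [math.log(v) for v in sample]


def location_scale_parameters(theta: float, nu: float):
    """(mu, sigma) of the log-sample for scale theta and shape nu,
    assuming the family G0((x / theta) ** nu)."""
    return math.log(theta), 1.0 / nu
```

For example, a Weibull sample becomes, after the transformation, a sample from a type I extreme value (minimum) location-scale family, so the location-scale methods apply to the logarithms.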

3.8. Illustrative Example

To illustrate the simplicity of the -method, let us consider an example of its application (sample size $n = 20$, $r = 7$ outliers). A sample of size $n = 20$ from the standard normal distribution was generated. The 1st-3rd and 17th-20th observations were replaced by outliers. The observations , the absolute values of the z-scores , and the ranks of are presented in Table 3.

Table 3.

Illustrative sample ().

In Table 4 we present steps of the classification procedure by the method. First, we compute (see line 1 of Table 4) value of the statistic . Since , we reject the null hypothesis, conclude that outliers exist and begin the search of outliers.

Table 4.

Illustrative example of test observations classification.

Step 1. The inequality (note that corresponds to the fifth largest observation in absolute value) implies that . So it is possible that the number of outliers might be greater than 5. We reject the largest in absolute value 20th observation as an outlier and continue the search of outliers.

Step 2. The inequality (note that corresponds to the fifth largest observation in absolute value from the remaining 19 observations) implies that . So it is possible that the number of outliers might be greater than 6. We declare the second largest in absolute value observation as an outlier. So two observations (19th and 20th) are declared as outliers. We continue the search of outliers.

Step 3. The inequality implies that . We declare the third largest in absolute value observation as an outlier. So three observations (2nd, 19th and 20th) are declared as outliers. We continue the search of outliers.

Step 4. The inequalities and imply that . So four additional observations (the fourth, fifth, sixth and seventh largest in absolute value), namely the 3rd, 1st, 17th, and 18th, are declared as outliers. The outlier search is finished. In all, 7 observations were declared as outliers: 1–3, 17–20, as was expected. Please note that since the outlier search procedure was done after rejection of the null hypothesis, the significance level did not change.

3.9. Practical Example

Let us consider the stent fatigue testing dataset from reliability control [27]. The dataset contains 100 observations. We consider the Weibull, loglogistic and lognormal models, which are the most frequently applied models for the analysis of reliability data. For a preliminary choice of a suitable model we compare the values of various goodness-of-fit statistics and information criteria (see Table 5). The Weibull model is clearly the most suitable because the values of all five statistics are smallest for this model.

Table 5.

Values of goodness-of-fit statistics and information criteria (initial sample).

Using the function WEDF.test from the R package EWGoF we applied the following goodness-of-fit tests for the Weibull distribution: Anderson-Darling (p-value = 0.86), Kolmogorov-Smirnov (p-value = 0.82), Cramer-von-Mises (p-value = 0.795), Watson (p-value = 0.795). So none of the tests contradicts the Weibull model.

The logarithms of observations have type I extreme value distribution. Minimal and maximal values are and . Let us consider the situation, where fatigue data contain two outliers and . All goodness-of-fit tests applied to the data with outliers reject the Weibull model: Anderson-Darling (p-value ), Kolmogorov-Smirnov (p-value ), Cramer-von-Mises (p-value ), Watson (p-value ).

Let us apply the method for outlier identification. Values of the statistics are: . Since , we reject the null hypothesis.

Step 1. Since , the search procedure is finished and the observations and , namely and , are declared as outliers. We see that our method did not allow masking of the other equal observations . This is a very important advantage of the method.

After outliers removal, we repeated goodness-of-fit procedure. All tests did not reject the Weibull model: Anderson-Darling (p-value = 0.88), Kolmogorov-Smirnov (p-value = 0.8), Cramer-von-Mises (p-value = 0.93), Watson (p-value = 0.895). Once more, we compared values of goodness-of-fit statistics and information criteria for above considered models using data without removed outliers, see Table 6.

Table 6.

Values of goodness-of-fit statistics and information criteria (sample without removed outliers).

The Weibull distribution gives clearly the best fit.

Values of the ML estimators from the initial non-contaminated data and from the final data cleared of outliers are similar: the shape practically did not change: , and the scale changed slightly: .

We created the R package outliersTests (https://github.com/linas-p/outliersTests) so that the proposed test can be used in practice within R.

4. Generalization of Davies-Gather Outlier Identification Method

Let us consider location-scale families. Following the idea of Davies and Gather [2], define an empirical analogue of the right outlier region as a random region

where is found using the condition

and are robust equivariant estimators of the parameters .

Set

The distribution of is parameter-free under .

Equation (18) is equivalent to the equation

So is the upper critical value of the random variable . It is easily computed by simulation.

Generalized Davies-Gather method for right outliers identification: if , then it is concluded that right outliers are absent. The probability of such event is . If , then it is concluded that right outliers exist. The value of the random variable is admitted as an outlier if , i.e., if . Otherwise it is admitted as a non-outlier.
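A simulation-based version of this rule can be sketched in Python for a normal baseline. The sketch rests on assumptions that are not confirmed by the text above: the statistic is taken to be the largest robust z-score, and the median and the scaled MAD are swapped in as the robust equivariant estimators for brevity. All function names are hypothetical.

```python
import math
import random
from statistics import median


def robust_z(x):
    """z-scores from the median and the MAD scaled for the normal law.
    Assumes the MAD is positive (no heavy ties in the data)."""
    m = median(x)
    mad = 1.4826 * median([abs(v - m) for v in x])
    return [(v - m) / mad for v in x]


def dg_critical_value(n: int, alpha: float, reps: int = 5000, seed: int = 1) -> float:
    """Simulated upper alpha critical value of the largest robust z-score
    for standard normal samples of size n (parameter-free by equivariance)."""
    rng = random.Random(seed)
    maxima = sorted(
        max(robust_z([rng.gauss(0.0, 1.0) for _ in range(n)]))
        for _ in range(reps)
    )
    return maxima[min(reps - 1, math.ceil((1.0 - alpha) * reps) - 1)]


def dg_right_outliers(x, crit: float):
    """Indices of observations whose robust z-score exceeds crit."""
    return [i for i, z in enumerate(robust_z(x)) if z > crit]
```

Unlike the stepwise scheme of Section 3.3, every observation is compared with a single threshold, so the critical value has to be simulated separately for each sample size n.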

Define an empirical analogue of the left outlier region as a random region

where is found using the condition

Set

The distribution of is parameter-free under .

Equation (20) is equivalent to the equation

So is the upper critical value of the random variable . It is easily computed by simulation.

Generalized Davies-Gather method for left outliers identification: if , then it is concluded that left outliers are absent. The probability of such event is . If , then it is concluded that left outliers exist. The value of the random variable is admitted as an outlier if , i.e., if . Otherwise it is admitted as a non-outlier.

Let us consider two-sided case.

If the distribution of is symmetric, then the empirical analogue of the outlier region is the random region

In this case

Generalized Davies-Gather method for left and right outliers identification (symmetric distributions): if , then it is concluded that outliers are absent. The probability of such event is . If , then it is concluded that outliers exist. The value of the random variable is admitted as a left outlier if , it is admitted as a right outlier if . Otherwise it is admitted as a non-outlier.

If distribution of is non-symmetric, then the empirical analogue of the outlier region is defined as follows:

In this case

Generalized Davies-Gather method for left and right outliers identification (non-symmetric distributions): if and , then it is concluded that outliers are absent. The probability of such event is . If or , then it is concluded that outliers exist. The value of the random variable is admitted as a left outlier if , it is admitted as a right outlier if . Otherwise it is admitted as a non-outlier.

5. Short Survey of Multiple Outlier Identification Methods for Normal Data

5.1. Rosner’s Method

Let us formulate Rosner’s method in the form mostly used in practice. Suppose that the number of outliers does not exceed s and the two-sided alternative is considered. Set (see [5,28])

may be interpreted as a distance between and . Remove the observation which is most distant from . This maximal distance is . The value of is a possible candidate for contaminant.

Recompute the statistic using remaining observations and denote by the obtained statistic. Remove the observation which is most distant from the new empirical mean. The value of is also possible candidate for contaminant. Repeat the procedure until the statistics are computed. So we obtain all possible candidates for contaminants. They are values of

Fix and find such that

If , then the approximations

are recommended (see [5]); here is the p critical value of the Student distribution with degrees of freedom.

Rosner’s method for left and right outliers identification: if for all , then it is concluded that outliers are absent. If there exists such that , i.e., the event occurs, then it is concluded that outliers exist. In this case, classification of observations to outliers and non-outliers is done in the following way: if , then it is concluded that there are s outliers and they are values of . If for , and , then it is concluded that there are i outliers and they are values of .
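The sequential removal and the classification rule can be sketched in Python as follows. This is an illustration under assumptions: the statistic at each stage is taken to be the largest absolute deviation from the current mean divided by the current sample standard deviation, and the critical values are supplied by the user, since the approximations referred to above are not legible in this text. The function names are hypothetical.

```python
from statistics import mean, stdev


def rosner_statistics(x, s: int):
    """Return [(R_i, index of the removed observation)] for i = 1..s.

    Requires len(x) - s >= 1 so that at least two observations remain
    when the last statistic is computed.
    """
    remaining = list(range(len(x)))
    out = []
    for _ in range(s):
        vals = [x[i] for i in remaining]
        m, sd = mean(vals), stdev(vals)
        far = max(remaining, key=lambda i: abs(x[i] - m))
        out.append((abs(x[far] - m) / sd, far))
        remaining.remove(far)
    return out


def rosner_classify(x, lambdas):
    """Indices declared outliers: the first k removed observations,
    where k is the largest i with R_i > lambda_i (k = 0 if none)."""
    stats = rosner_statistics(x, len(lambdas))
    exceed = [i for i, ((r, _), lam) in enumerate(zip(stats, lambdas), start=1)
              if r > lam]
    k = max(exceed) if exceed else 0
    return [idx for _, idx in stats[:k]]
```

Taking the largest index with an exceedance is what protects the rule against masking: two opposite outliers can inflate the standard deviation so that the first statistic stays below its critical value while the second does not.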

If right outliers are searched, then define and repeat the above procedure taking approximations

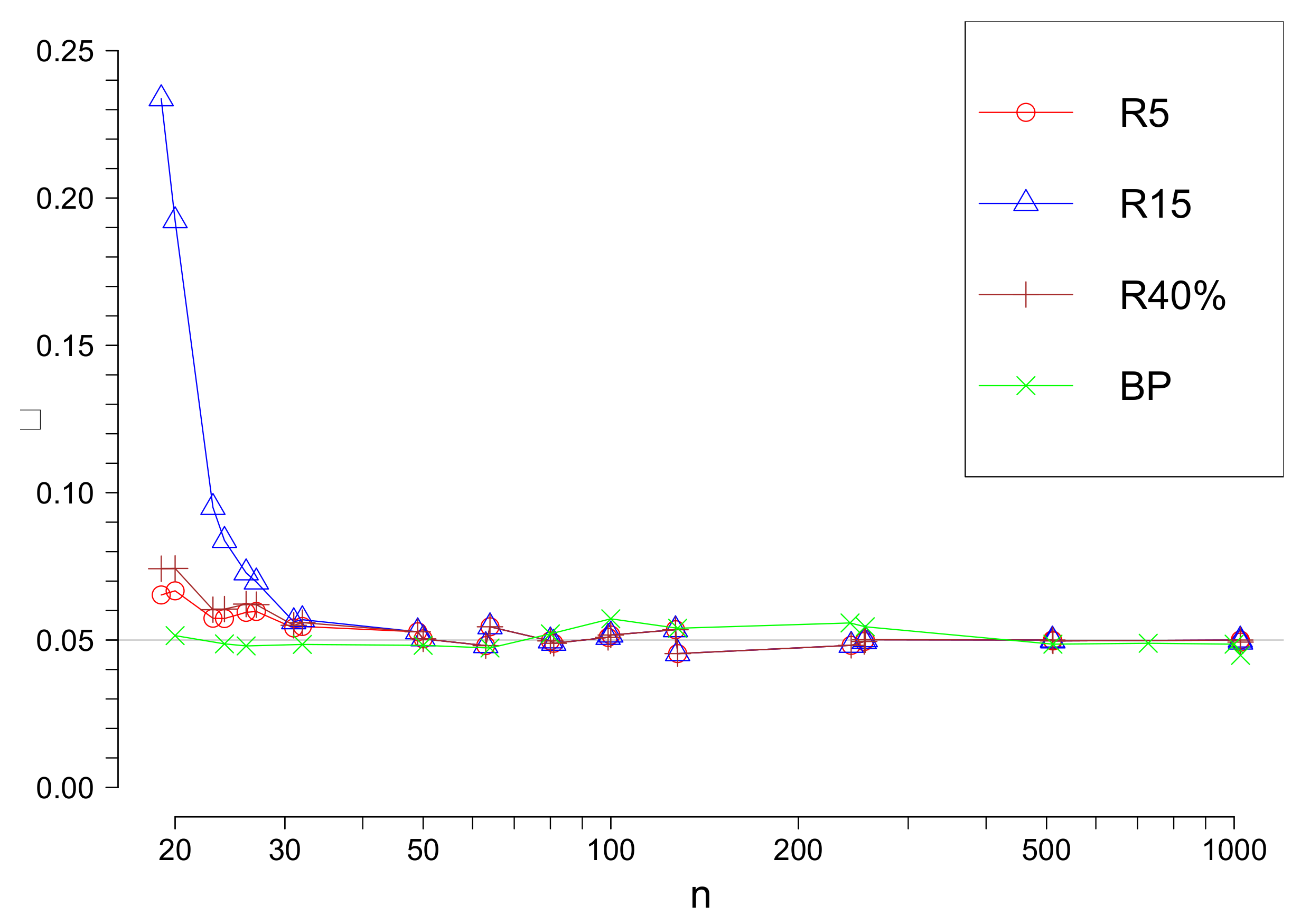

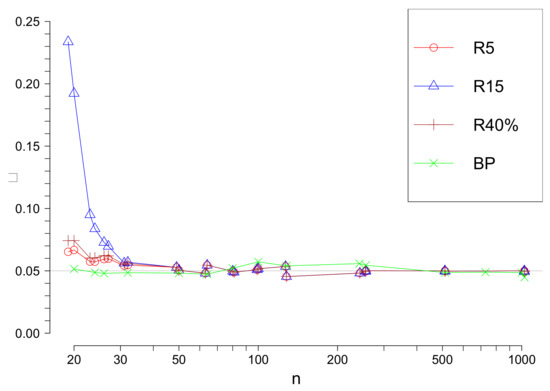

Denote by the Rosner’s test with a fixed upper limit s. Our simulation results confirm that the true significance level is different from the level suggested by the approximation when n is not large. Nevertheless, it is approaching as n increases, see Figure 1. The true significance level of the test, which uses asymptotic values of the test statistic, is also presented in Figure 1.

Figure 1.

The true values of the significance level of Rosner’s and tests in function of n for different values of s ( is used in approximations).

5.2. Bolshev’s Method

Suppose that the number of contaminants does not exceed s. For set

where and s are the empirical mean and standard deviation, is the c.d.f. of Thompson’s distribution with degrees of freedom.

Let us consider search for right outliers. Please note that the largest s observations define the smallest s order statistics . Possible candidates for outliers are namely the values of .

Set

Bolshev’s method for right outliers search. If , then it is concluded that outliers are absent; here is the critical value of the test statistic under . If , then it is concluded that outliers exist. In such a case outliers are selected in the following way: if then the value of the order statistic is admitted as an outlier, .

In the case of left and right outliers search Bolshev’s method uses instead of , defining the statistic

Bolshev’s method for left and right outliers search. If , then it is concluded that outliers are absent; here is the critical value of the statistic under . If , then it is concluded that outliers exist. In such a case they are selected in the following way: if then the observation corresponding to is admitted as an outlier, .

5.3. Hawking’s Method

Suppose that the number of contaminants does not exceed s. Let us consider the search for right outliers. For set

proportional to the sum of k largest . Set

Hawking’s method. If then it is concluded that outliers are absent; here is the critical value of the statistic under . If , then it is concluded that outliers exist. In such a case outliers are selected in the following way: if , then the value of the order statistic is admitted as an outlier, .

6. Comparative Analysis of Outlier Identification Methods by Simulation

In the case of location-scale classes probability distribution of all considered test statistics does not depend on and , so we generated samples of various sizes n with observations with the c.d.f. and r observations with various alternative distributions concentrated in the outlier region. We shall call such observations “contaminant outliers”, shortly c-outliers. As was mentioned, outliers which are not c-outliers, i.e., outliers from regular observations with the c.d.f. , are very rare.

We repeated simulations 100,000 times and using various methods we classified observations to outliers and non-outliers and computed the mean number of correctly identified c-outliers, the mean number of c-outliers which were not identified, the mean number of non c-outliers admitted as outliers, and the mean number of non c-outliers admitted as non-outliers.

An outlier identification method is ideal if each outlier is detected and each non-outlier is declared a non-outlier. In practice this cannot be achieved with probability one. Two errors are possible: (a) an outlier is not declared as such (masking effect); (b) a non-outlier is declared an outlier (swamping effect). We shall write shortly “masking value” for the mean number of non-detected c-outliers and “swamping value” for the mean number of “normal” observations declared as outliers in the simulated samples.

If swamping is small for two tests, then the test with the smaller masking effect should be preferred, because in this case the distribution of the data remaining after excluding suspected outliers should be closer to the distribution of non-outlier data.

On the other hand, if swamping for Method 1 is considerably bigger than swamping for Method 2 and masking is smaller for Method 1, this does not mean that Method 1 is better, because this method rejects many extreme non-outliers from the tails of the regular distribution, and the sample remaining after classification cannot be treated as a sample from this regular distribution even if all c-outliers are eliminated.
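The masking and swamping values defined above are simple averages over the simulated samples. A minimal Python sketch, with hypothetical names and with each sample represented by the set of indices of true c-outliers and the set of indices declared as outliers, is:

```python
def masking_swamping(true_sets, declared_sets):
    """Return (masking value, swamping value) over simulated samples.

    true_sets[k]     : indices of the c-outliers in the k-th sample
    declared_sets[k] : indices declared as outliers in the k-th sample
    """
    reps = len(true_sets)
    masked = sum(len(t - d) for t, d in zip(true_sets, declared_sets))
    swamped = sum(len(d - t) for t, d in zip(true_sets, declared_sets))
    return masked / reps, swamped / reps
```

For two samples with c-outliers `{0, 1}` each and declared sets `{0}` and `{0, 1, 5}`, one c-outlier is missed in the first sample and one regular observation is rejected in the second, so both values equal 0.5.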

For various families of distributions, sample sizes n, and alternatives we compared Davies-Gather () and new () methods performance. In the case of normal distribution we also compared them with Rosner’s, Bolshev’s and Hawking’s methods.

We used two different classes of alternatives: in the first case c-outliers are spread widely in the outlier region around the mean, in the second case c-outliers are concentrated in a very short interval lying in the outlier region. More precisely, if right outliers were searched, then we simulated r observations concentrated in the right outlier region using the following alternative families of distributions:

- (1)

- Two-parameter exponential distribution with the scale parameter . If is small, then outliers are concentrated near the border of the outlier region. If is large, then outliers are widely spread in the outlier region. If increases, then the mean of the outlier distribution increases. Please note that even if is very near 0 and the true number of outliers r is large, these outliers may strongly corrupt the data, making the tails of the histogram too heavy.

- (2)

- Truncated normal distribution with the location and scale parameters (. If is small then this distribution is concentrated in a small interval around . If increases, then the mean of outlier distribution increases.
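The two alternative families above can be sketched in Python as follows. This is only an illustration under stated assumptions: the regular observations are taken to be standard normal, the right border of the outlier region is a hypothetical constant `BORDER` (the paper derives the outlier region from the model), the truncation is assumed to keep only values beyond that border, and all function and parameter names are ours.

```python
import random


def exponential_c_outliers(r, border, theta, rng):
    """r c-outliers from a two-parameter exponential law: border + Exp(mean theta)."""
    return [border + rng.expovariate(1.0 / theta) for _ in range(r)]


def truncated_normal_c_outliers(r, border, mu, sigma, rng, max_tries=1_000_000):
    """r c-outliers from N(mu, sigma^2) conditioned on exceeding border.

    Plain rejection sampling: simple, but slow when mu lies far below border.
    """
    outliers = []
    for _ in range(max_tries):
        if len(outliers) == r:
            break
        x = rng.gauss(mu, sigma)
        if x > border:
            outliers.append(x)
    if len(outliers) < r:
        raise RuntimeError("rejection sampling did not produce enough outliers")
    return outliers


def contaminated_sample(n, c_outliers, rng):
    """n - r standard normal observations plus the given c-outliers, with labels."""
    regular = [rng.gauss(0.0, 1.0) for _ in range(n - len(c_outliers))]
    values = regular + list(c_outliers)
    is_c_outlier = [False] * len(regular) + [True] * len(c_outliers)
    return values, is_c_outlier


rng = random.Random(2020)
BORDER = 3.5  # hypothetical right border of the outlier region (assumption)
spread = exponential_c_outliers(5, BORDER, theta=2.0, rng=rng)  # widely spread
tight = truncated_normal_c_outliers(5, BORDER, mu=4.0, sigma=0.05, rng=rng)  # concentrated
values, labels = contaminated_sample(100, spread, rng)
```

A small exponential scale places the c-outliers close to the border, while a small normal scale concentrates them in a short interval around the chosen location, which mirrors the two classes of alternatives described above.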

For lack of space, we present only a small part of our investigations. Note that the results are very similar for all sample sizes . The multiple outlier problem is not very relevant for smaller sample sizes.

6.1. Investigation of Outlier Identification Methods for Normal Data

We use the notation , and for Bolshev’s, Hawkins’, Rosner’s, Davies-Gather’s, and the new methods, respectively. If method is based on maximum likelihood estimators, then we write method; if it is based on robust estimators, we write method.

To compare the methods considered above, we fixed the significance level . Recall that the significance level is the probability of rejecting at least one observation as an outlier under the hypothesis which means that all observations are realizations of i.i.d. random variables with the same normal distribution. Only one test, the R method, uses approximate critical values of the test statistic, so the significance level of this test is only approximately and depends on s and n. Figure 1 gives the true significance level values for and as a function of n.
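The definition of the significance level used here (the probability of rejecting at least one observation when no outliers are present) can be checked by simulation for any identification rule. The sketch below is a generic Monte Carlo estimate, not the procedure used to produce Figure 1; `toy_rule` is a hypothetical median/MAD cutoff rule introduced only so that the example runs, and it is neither the proposed method nor any of the methods compared in this section.

```python
import random
from statistics import median


def estimate_significance_level(identify, n, replications, rng):
    """Fraction of outlier-free normal samples in which `identify` flags anything."""
    rejections = 0
    for _ in range(replications):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if identify(sample):  # non-empty result means at least one rejection
            rejections += 1
    return rejections / replications


def toy_rule(sample, cutoff=4.0):
    """Hypothetical rule (not from the paper): flag |x - median| > cutoff * 1.4826 * MAD."""
    center = median(sample)
    mad = median([abs(x - center) for x in sample])
    scale = 1.4826 * mad  # MAD rescaled to be consistent for the normal scale
    return [i for i, x in enumerate(sample) if abs(x - center) > cutoff * scale]


rng = random.Random(7)
alpha_hat = estimate_significance_level(toy_rule, n=50, replications=2000, rng=rng)
print(f"estimated significance level: {alpha_hat:.3f}")
```

Replacing `toy_rule` with an implementation of a specific method, and repeating the estimate over a grid of n, gives the kind of curve needed to see how far an approximate test departs from its nominal level.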

The , and R methods have the drawback that the upper bound s for the possible number of outliers must be fixed. The and tests have the advantage that they do not require it.

Our investigations showed that the H, B, and methods have other serious drawbacks, so let us first look more closely at these methods.

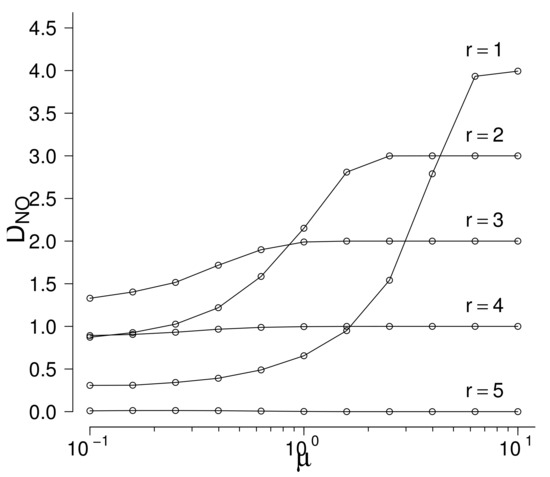

If the true number of c-outliers r exceeds s, then the B and H methods cannot find them even if they are very far from the limits of the outlier region. Nevertheless, suppose that r does not exceed s and look at the performance of the H method. Set , , and suppose that c-outliers are generated by a right-truncated normal distribution with fixed and increasing . Note that the true number of c-outliers is supposed to be unknown but does not exceed . Figure 2 shows the mean numbers of rejected non-c-outliers as a function of the parameter (the value of the parameter is fixed) for fixed values of r. Table 7 gives the values of plus the mean numbers of correctly rejected c-outliers. Table 7 shows that if , then for sufficiently large the c-outlier is found, but the number of rejected non-c-outliers increases to 4, so swamping is very large. Similarly, if , then increases to 3, so swamping is large. Beginning from not all c-outliers are found even for large . Swamping is smallest if the true value r coincides with s, but even in this case one c-outlier is not found even for large . Taking into account that the true number r of c-outliers is not known in real data, the performance of the H method is very poor. Results are similar for other values of n, s, and distributions of c-outliers. As a rule, the H method finds the c-outliers rather well, but swamping is very large because this method tends to reject a number of observations near s for remote alternatives, which is good if but bad if r is different from s.

Figure 2.

Hawkins’ method: the values of as a function of and r (, ).

Table 7.

Hawkins’ method: the values of as a function of and r (, ).

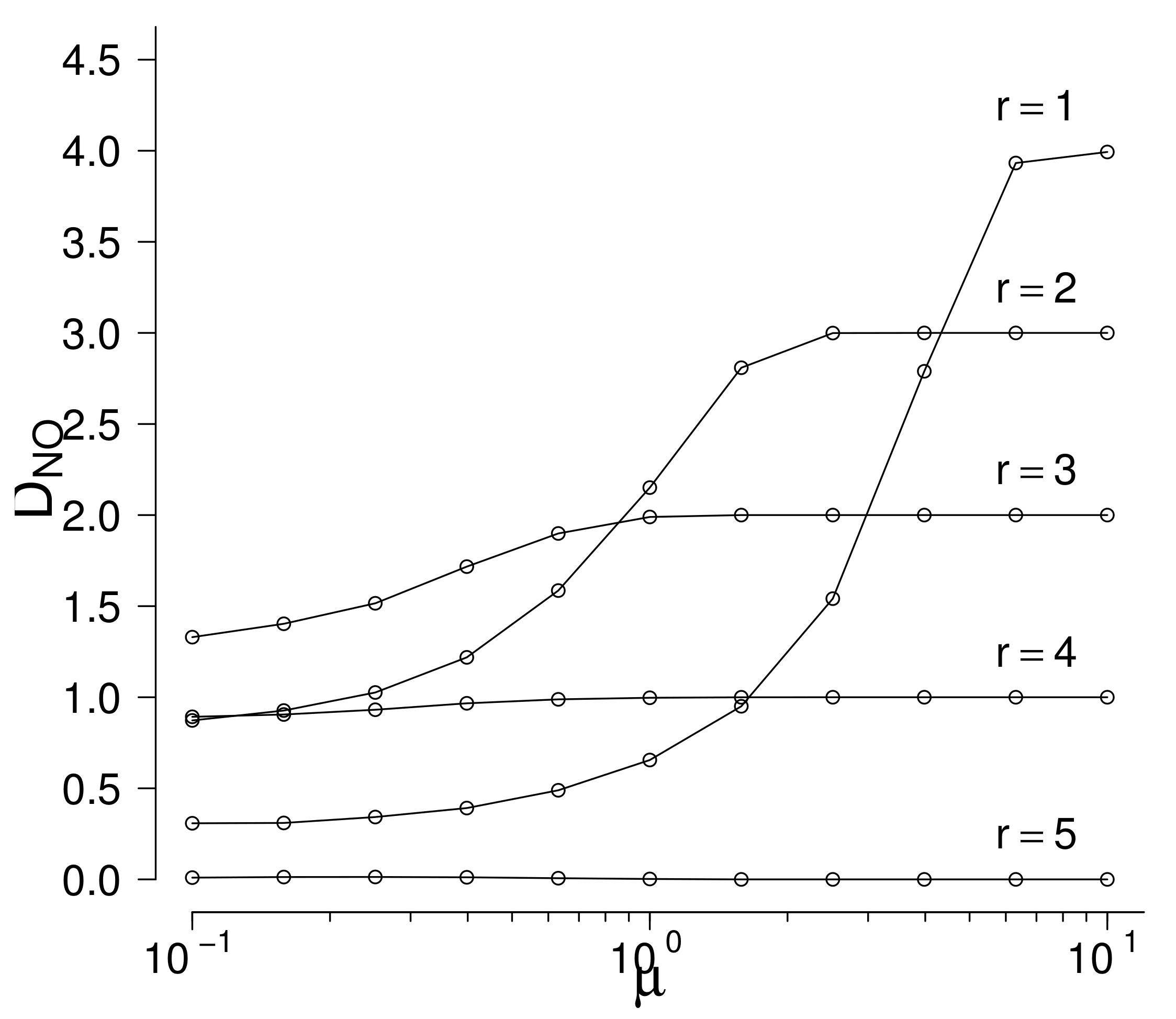

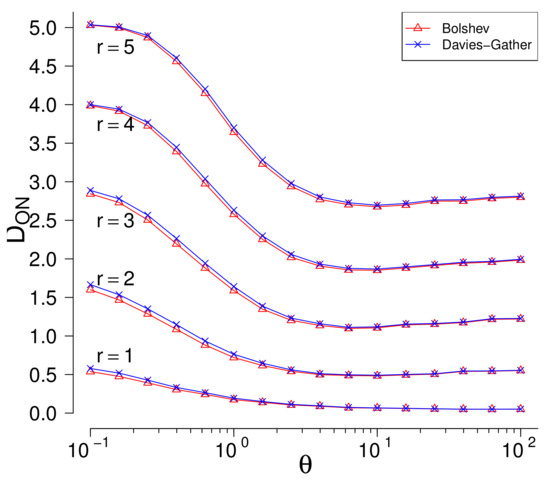

The B and tests have the drawback that they use maximum likelihood estimators, which are not robust and estimate parameters badly in the presence of outliers. Once more, set , , and suppose that c-outliers are generated by a two-parameter exponential distribution with increasing . Swamping values are negligible, so only masking values (mean numbers of non-rejected c-outliers) are important. Figure 3 shows the masking values as a function of the parameter for fixed values of r.

Figure 3.

The number of outliers declared as non-outliers (). The alternative: two-sided, with outliers generated by a two-parameter exponential distribution on both sides.

Both methods perform very similarly. The masking values are large for every value of . If r increases, then the masking values increase, too. For example, if , then almost 3 c-outliers out of 5 are not rejected on average even for large values of .

Similar results hold for other values of n, s, and various distributions of c-outliers.

The above analysis shows that the B, H, and methods have serious drawbacks, so we exclude these methods from further consideration.

Let us consider the remaining three methods: R, , and . For small n, the true significance level of Rosner’s test differs considerably from the nominal one, so we present comparisons of the tests’ performance for (see Table 8 and Table 9). A truncated exponential distribution was used to simulate outliers. The remoteness of the mean of the outliers from the border of the outlier region is characterized by the parameter .

Table 8.

The masking values ( and ).

Table 9.

The masking values ().

Swamping values (the mean numbers of non-c-outliers declared as outliers) are very small for all tests. For example, even if , the R and methods reject as outliers, on average, only 0.05 from non-c-outliers. For the method this number is from , and 900 non-c-outliers, respectively. Thus, only masking values (the mean numbers of c-outliers declared as non-outliers) matter when comparing outlier identification methods.

The need to guess the upper limit s for the possible number of outliers is considered a drawback of Rosner’s method. Indeed, if the true number of outliers r is greater than the chosen upper limit s, then, with probability one, not all outliers are identified. In addition, even if , it is not clear how important the closeness of r to s is. We therefore first investigated the choice of the upper limit.

Here we present masking values of Rosner’s tests for and . Similar results are obtained for other values of s.

Our investigations show that it is sufficient to fix , which is clearly larger than can be expected in real data. Indeed, Table 8 and Table 9 show that for and do not find outliers even if they are very remote, as expected. Nevertheless, we see that even if the true number of outliers r is much smaller than , for any considered n, the masking values of the test are approximately the same as (even a little smaller than) the masking values of the tests and , for they are clearly smaller.

Hence, should be recommended when applying Rosner’s test, and the performance of , the robust Davies-Gather ( and the proposed methods should be compared.

All three methods find all c-outliers if they are sufficiently remote. For the method gives uniformly smallest masking values and the method gives uniformly largest masking values for any considered r over the whole range of alternatives. For and the result is the same. For and (which means that even for very small the data are seriously corrupted) the BP method is also the best, except that for the most remote alternatives the method slightly outperforms the BP method. For and most alternatives the BP method strongly outperforms the other methods, except for the most remote alternatives.

The and Rosner’s methods have very large masking if many outliers are concentrated near the border of the outlier region. In this case the data are seriously corrupted, yet these methods do not detect the outliers.

Conclusion: in most of the situations considered, the method is the best outlier identification method. The second best is Rosner’s method with , and the third is the Davies-Gather method based on robust estimation. The other methods perform poorly.

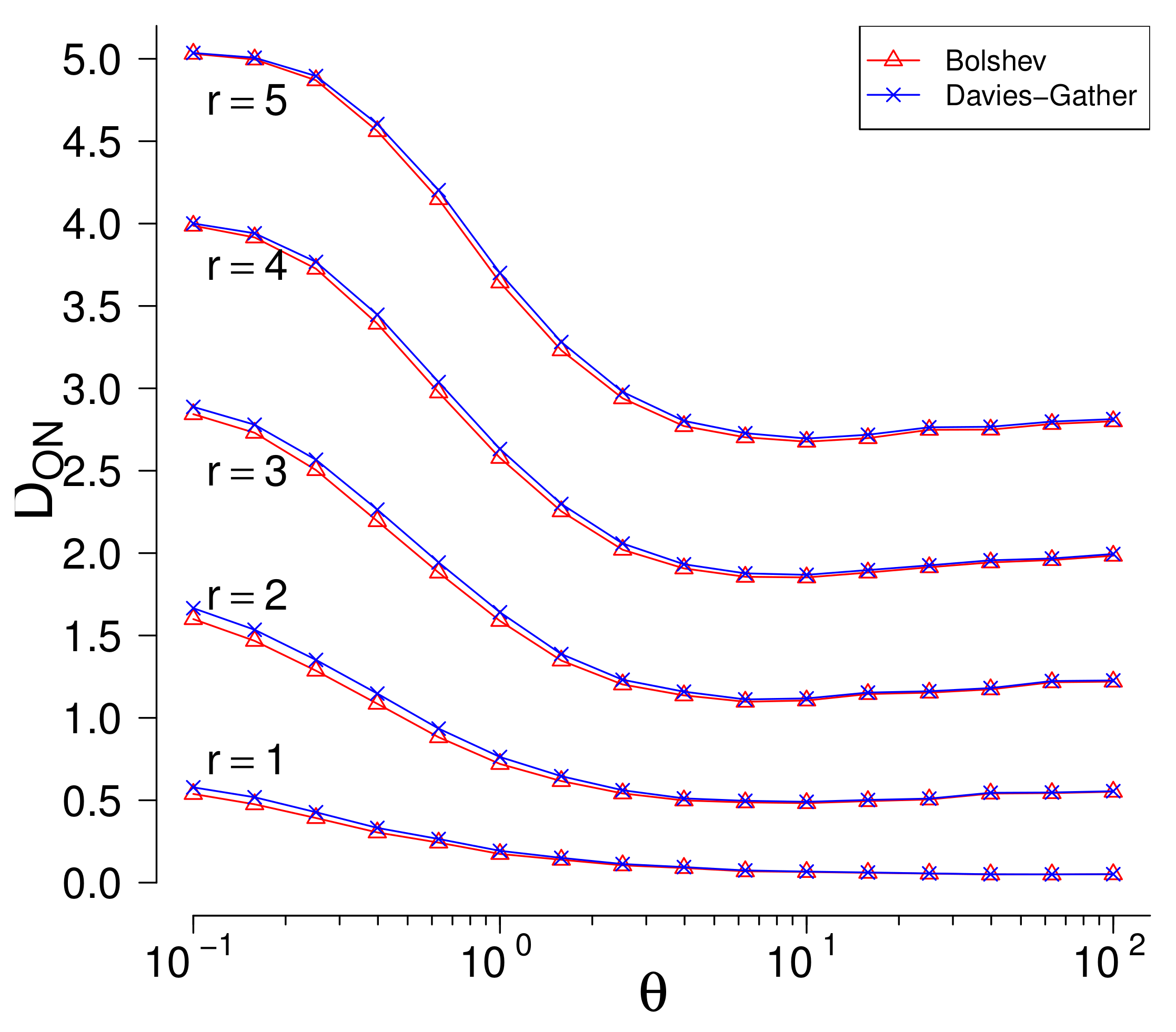

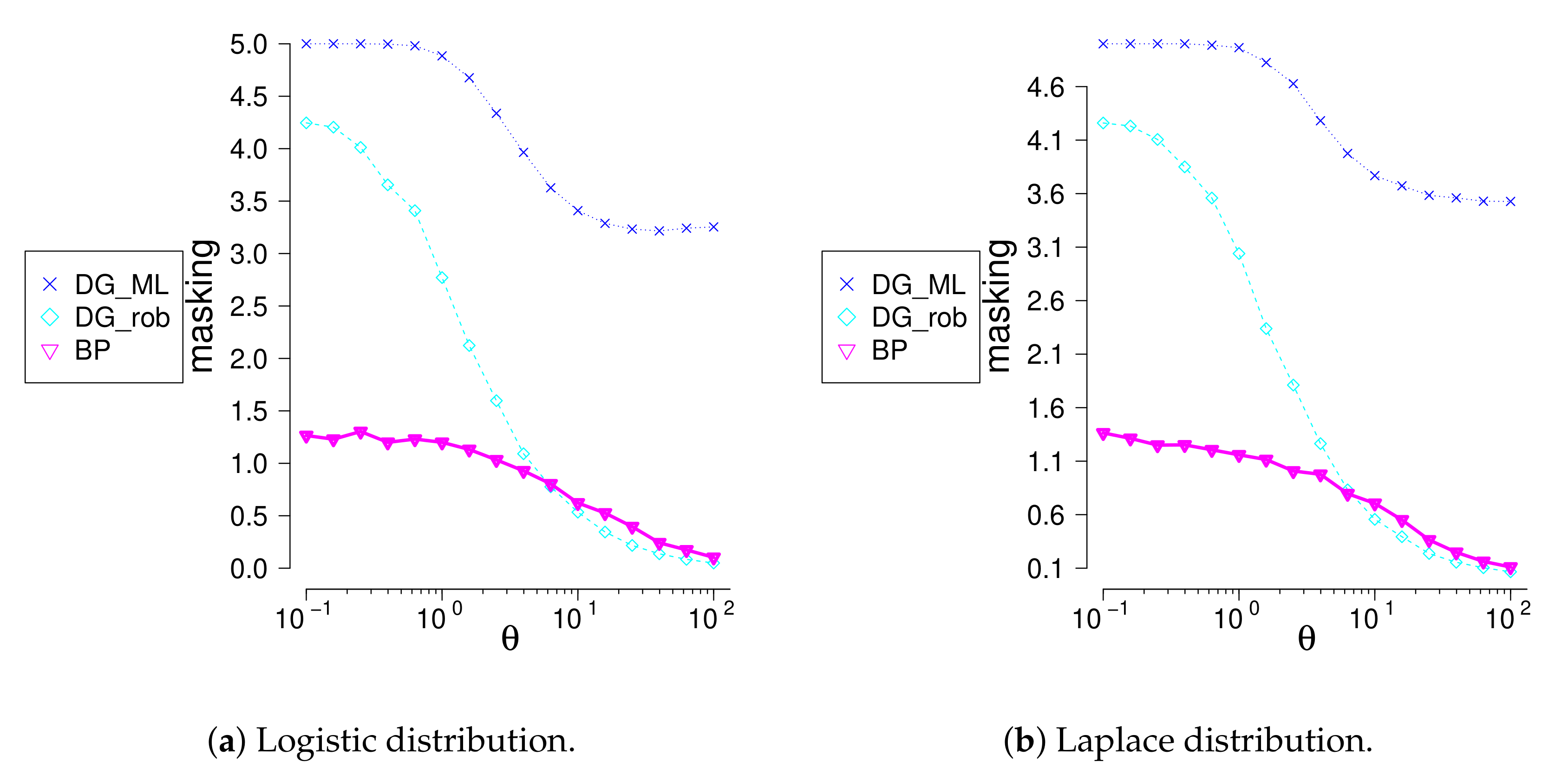

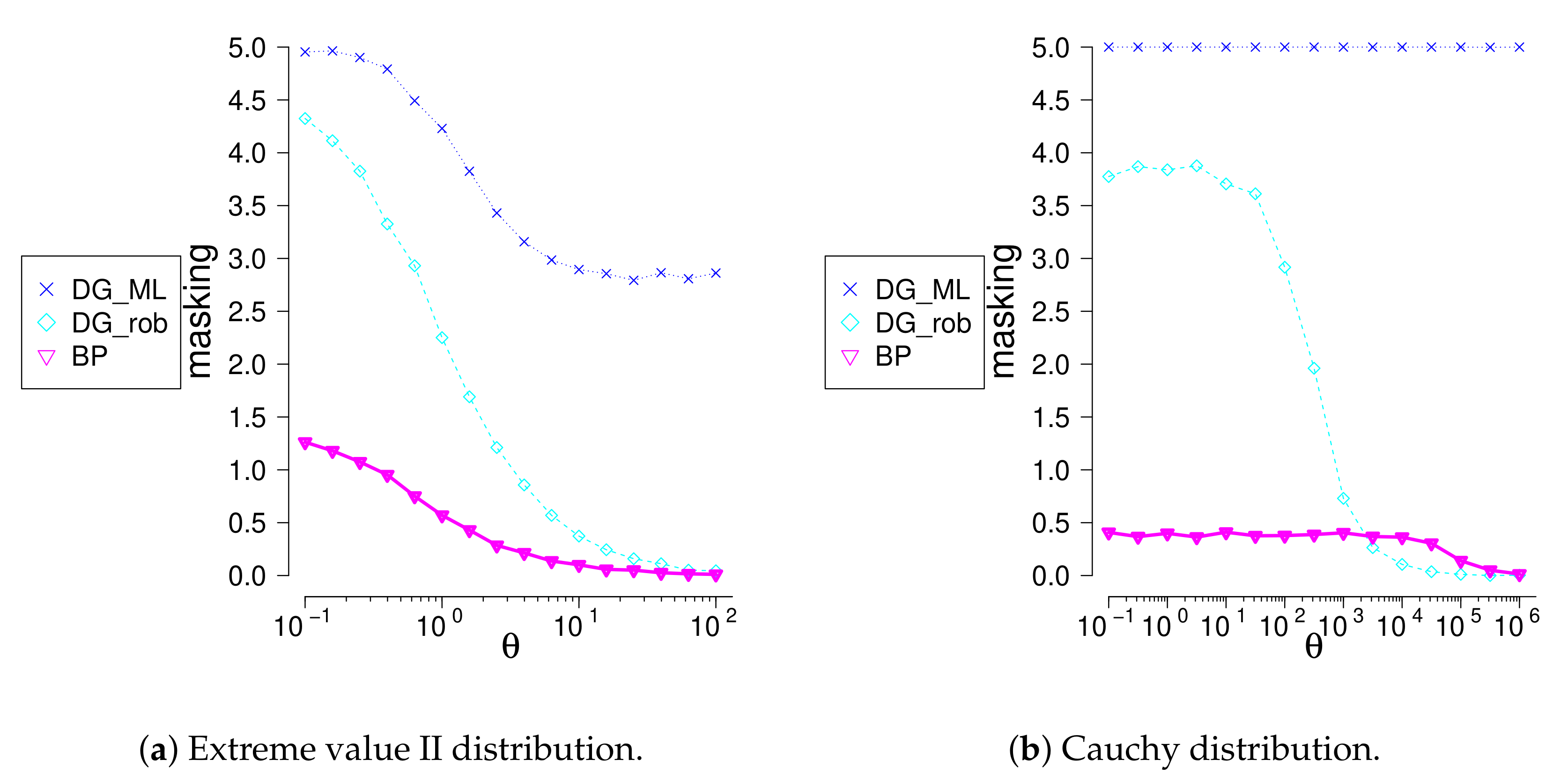

6.2. Investigation of Outlier Identification Methods for Other Location-Scale Models

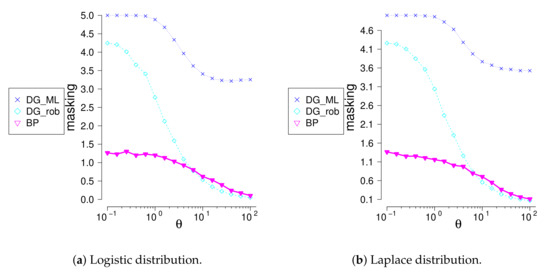

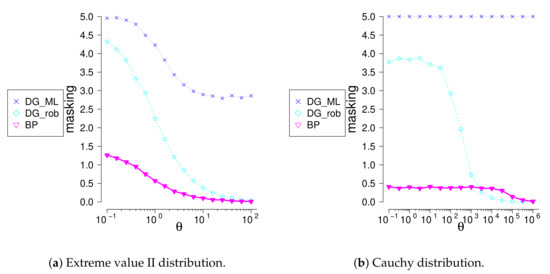

We investigated the performance of the new method for location-scale families other than the normal. We compared the method with the generalized Davies-Gather method for the logistic, Laplace (symmetric, ), extreme value (non-symmetric ), and Cauchy (symmetric, ) families. C-outliers were generated using a truncated exponential distribution concentrated in the two-sided outlier region. Since swamping values are small, we compared masking values (see Table 10) and the differences between the true number of c-outliers and the number of rejected observations (see Figure 4 and Figure 5). The and methods find the most remote outliers very well; meanwhile, the method identifies closer outliers much better. The method identifies multiple outliers concentrated near the border of the outlier region badly, whereas the method does well. The is not appropriate for multiple outlier search.

Table 10.

Masking values for the logistic, Laplace, extreme value II, and Cauchy distributions, when , .

Figure 4.

The difference between the number of outliers and the number of rejected observations, given sample size and outliers.

Figure 5.

The difference between the number of outliers and the number of rejected observations, given sample size and outliers.

7. Conclusions

Using simulation, we compared the outlier identification results of the new method with those of methods given in previous studies. Even in the case of the normal model, which has been investigated by many authors, the new method shows excellent identification power. In many situations, it outperforms existing methods.

The results obtained considerably widen the spectrum of commonly used non-regression models for which outlier identification methods are available. Many two-parameter models such as Weibull, logistic and loglogistic, extreme value, Cauchy, Laplace, and others can be investigated by applying the new method.

An advantage of the proposed outlier identification method is that it has very good potential for generalization. The authors are completing research on outlier identification methods for accelerated failure time regression models and generalized linear models, the gamma regression model in particular. Outlier identification methods for time series are another direction of future work. Another possible direction is the investigation of Gaussian mixture regression models (see [29]).

A limitation of the new method is that it cannot be applied to the analysis of discrete models. Since the method is based on asymptotic results, we recommend not applying it to samples of very small size .

The R package outliersTests was created for practical use of the proposed test.

Author Contributions

Investigation, V.B. and L.P.; Methodology, V.B. and L.P.; Supervision, V.B.; Writing—original draft, V.B. and L.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Bol’shev, L.; Ubaidullaeva, M. Chauvenet’s Test in the Classical Theory of Errors. Theory Probab. Appl. 1975, 19, 683–692.

2. Davies, L.; Gather, U. The Identification of Multiple Outliers. J. Am. Stat. Assoc. 1993, 88, 782–792.

3. Dixon, W.J. Analysis of Extreme Values. Ann. Math. Stat. 1950, 21, 488–506.

4. Grubbs, F.E. Sample Criteria for Testing Outlying Observations. Ann. Math. Stat. 1950, 21, 27–58.

5. Rosner, B. On the Detection of Many Outliers. Technometrics 1975, 17, 221–227.

6. Tietjen, G.L.; Moore, R.H. Some Grubbs-Type Statistics for the Detection of Several Outliers. Technometrics 1972, 14, 583–597.

7. Barnett, V.; Lewis, T. Outliers in Statistical Data; John Wiley & Sons: Hoboken, NJ, USA, 1974.

8. Zerbet, A. Statistical Tests for Normal Family in Presence of Outlying Observations. In Goodness-of-Fit Tests and Model Validity; Huber-Carol, C., Balakrishnan, N., Nikulin, M.S., Mesbah, M., Eds.; Birkhäuser Boston: Basel, Switzerland, 2002; pp. 57–64.

9. Chikkagoudar, M.; Kunchur, S.H. Distributions of test statistics for multiple outliers in exponential samples. Commun. Stat. Theory Methods 1983, 12, 2127–2142.

10. Kabe, D.G. Testing outliers from an exponential population. Metrika 1970, 15, 15–18.

11. Kimber, A. Testing upper and lower outlier pairs in gamma samples. Commun. Stat. Simul. Comput. 1988, 17, 1055–1072.

12. Lalitha, S.; Kumar, N. Multiple outlier test for upper outliers in an exponential sample. J. Appl. Stat. 2012, 39, 1323–1330.

13. Lewis, T.; Fieller, N.R.J. A Recursive Algorithm for Null Distributions for Outliers: I. Gamma Samples. Technometrics 1979, 21, 371–376.

14. Likeš, I.J. Distribution of Dixon’s statistics in the case of an exponential population. Metrika 1967, 11, 46–54.

15. Lin, C.T.; Balakrishnan, N. Exact computation of the null distribution of a test for multiple outliers in an exponential sample. Comput. Stat. Data Anal. 2009, 53, 3281–3290.

16. Lin, C.T.; Balakrishnan, N. Tests for Multiple Outliers in an Exponential Sample. Commun. Stat. Simul. Comput. 2014, 43, 706–722.

17. Zerbet, A.; Nikulin, M. A new statistic for detecting outliers in exponential case. Commun. Stat. Theory Methods 2003, 32, 573–583.

18. Torres, J.M.; Pastor Pérez, J.; Sancho Val, J.; McNabola, A.; Martínez Comesaña, M.; Gallagher, J. A functional data analysis approach for the detection of air pollution episodes and outliers: A case study in Dublin, Ireland. Mathematics 2020, 8, 225.

19. Gaddam, A.; Wilkin, T.; Angelova, M.; Gaddam, J. Detecting Sensor Faults, Anomalies and Outliers in the Internet of Things: A Survey on the Challenges and Solutions. Electronics 2020, 9, 511.

20. Ferrari, E.; Bosco, P.; Calderoni, S.; Oliva, P.; Palumbo, L.; Spera, G.; Fantacci, M.E.; Retico, A. Dealing with confounders and outliers in classification medical studies: The Autism Spectrum Disorders case study. Artif. Intell. Med. 2020, 108, 101926.

21. Zhang, C.; Xiao, X.; Wu, C. Medical Fraud and Abuse Detection System Based on Machine Learning. Int. J. Environ. Res. Public Health 2020, 17, 7265.

22. Souza, T.I.; Aquino, A.L.; Gomes, D.G. A method to detect data outliers from smart urban spaces via tensor analysis. Future Gener. Comput. Syst. 2019, 92, 290–301.

23. Hawkins, D.M. Identification of Outliers; Springer: Dordrecht, The Netherlands, 1980; Volume 11.

24. Kimber, A.C. Tests for Many Outliers in an Exponential Sample. J. R. Stat. Soc. 1982, 31, 263–271.

25. De Haan, L.; Ferreira, A. Extreme Value Theory: An Introduction; Springer: New York, NY, USA, 2007.

26. Rousseeuw, P.J.; Croux, C. Alternatives to the median absolute deviation. J. Am. Stat. Assoc. 1993, 88, 1273–1283.

27. Liu, Y.; Abeyratne, A.I. Practical Applications of Bayesian Reliability; John Wiley & Sons: Hoboken, NJ, USA, 2019.

28. Rosner, B. Percentage points for the RST many outlier procedure. Technometrics 1977, 19, 307–312.

29. Su, H.; Hu, Y.; Karimi, H.R.; Knoll, A.; Ferrigno, G.; De Momi, E. Improved recurrent neural network-based manipulator control with remote center of motion constraints: Experimental results. Neural Netw. 2020, 131, 291–299.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).