Ideal and Predictable Hit Ratio for Matrix Transposition in Data Caches

Abstract

:1. Introduction

- Theoretical expressions to estimate the ideal data cache behaviour (compulsory misses) of matrix transposition, independently of the cache configuration and the matrix transposition algorithm.

- Theoretical expressions for an optimal LRU data cache configuration of the tiling version of matrix transposition.

- Validation of previous expressions and comparison to cache oblivious by means of simulations.

- Experimental results on real hardware and comparison to pseudo-LRU (PLRU).

- No more than two ways of data caching and a few sets are required in general for matrix transposition, so the remaining ways in a set-associative data cache could be powered off, with its corresponding energy savings and no negative impact.

- Contemporary partitionable last-level caches could offer just two ways to the matrix transposition process, avoiding unnecessary pollution to other computations with no negative impact.

- Hit-miss results can be easily predicted for the tiling version of matrix transposition (mandatory for real-time applications) in an LRU cache and, under specific cache configurations, in a PLRU cache.

2. Related Work

3. Ideal Data Cache Hit Ratios in Matrix Transposition

| Algorithm 1 TransposeMatrix(, N): Transposes a matrix. |

| 1: for to N do # N iterations |

| 2: for to N do # N iterations |

| 3: # Memory load |

| 4: # Memory load and store |

| 5: # Memory store |

| 6: end for |

| 7: end for |

3.1. Bound of the Ideal Hit Ratio with a Cache Line Holding L Elements

3.2. Ideal Hit Ratio in a Matrix with Row Size Multiple of Cache Line Size ()

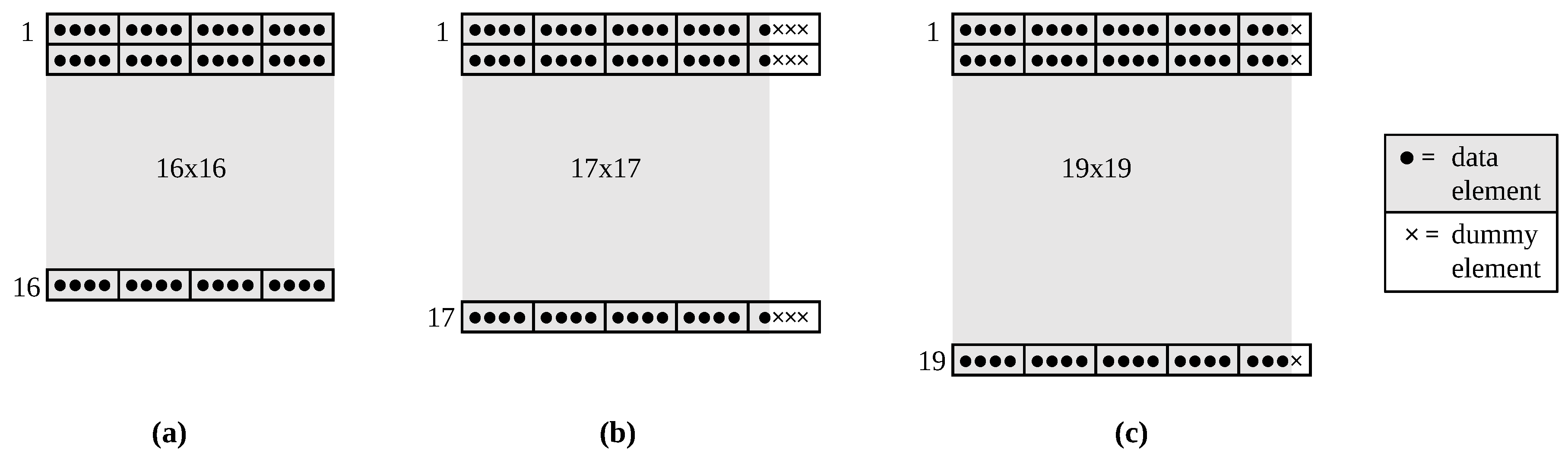

3.3. Ideal Hit Ratio in a Matrix with () Padding

3.4. Ideal Hit Ratio in a Matrix with () Padding

4. Required LRU Cache Configuration to Achieve Ideal Hit Ratios

| Algorithm 2 Tiling(, N, T): Transposes a padded matrix, stored in row major order, through tiles [4]. |

| 1: for to N step T do # triangular matrix traversal, by tiles |

| 2: for to i step T do |

| 3: for to step 1 do # transpose non-diagonal tiles |

| 4: for to step 1 do |

| 5: |

| 6: |

| 7: |

| 8: end for |

| 9: end for |

| 10: end for |

| 11: for to step 1 do # transpose diagonal tiles |

| 12: for to step 1 do |

| 13: |

| 14: |

| 15: |

| 16: end for |

| 17: end for |

| 18: end for |

5. Reducing the Requirements of Set-Associative LRU Caches with Further Padding

5.1. Bounds on W to Achieve Ideal Hit Ratios with

5.1.1. Case

5.1.2. Case

5.1.3. Case

5.2. Bound on W to Achieve Ideal Hit Ratios with

5.3. Bound on W to Achieve Ideal Hit Ratios with

6. Experiments

6.1. Data Cache Hit Ratio in Tiled Matrix Transposition

6.2. Tiling vs. Oblivious Data Hit Ratio

| Algorithm 3 Transpose(, N, , ): transposes a phantom-padded matrix, stored in row major order, recursively. |

| 1: if then |

| 2: |

| 3: else |

| 4: ; |

| 5: ; |

| 6: if then |

| 7: ; |

| 8: ; |

| 9: end if |

| 10: end if |

| Algorithm 4 TransposeSwap(, N, , , , ). |

| 1: if then |

| 2: for to do |

| 3: for to do |

| 4: |

| 5: end for |

| 6: end for |

| 7: else |

| 8: if then |

| 9: ; |

| 10: ; |

| 11: ; |

| 12: ; |

| 13: ; |

| 14: ; |

| 15: end if |

| 16: end if |

6.3. PLRU and Execution Time on Real Platforms

6.4. Modelling and Reducing the Energy Consumption of the Memory Subsystem for the tiled Matrix Transposition

6.4.1. Analytical Modeling of Energy Consumption

6.4.2. Energy Consumption Estimation and Possible Improvements

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Rearden, B.T.; Jessee, M.A. SCALE Code System; Technical Report; Oak Ridge National Laboratory (ORNL): Oak Ridge, TN, USA, 2016.

- Ballard, S.; Hipp, J.; Kraus, B.; Encarnacao, A.; Young, C. GeoTess: A Generalized Earth Model Software Utility. Seismol. Res. Lett. 2016, 87, 719–725. [Google Scholar] [CrossRef] [Green Version]

- Chatterjee, S.; Sen, S. Cache-efficient matrix transposition. In Proceedings of the Sixth International Symposium on High-Performance Computer Architecture HPCA-6 (Cat. No.PR00550), Toulouse, France, 8–12 January 2000; pp. 195–205. [Google Scholar] [CrossRef] [Green Version]

- Lam, M.D.; Rothberg, E.E.; Wolf, M.E. The Cache Performance and Optimizations of Blocked Algorithms. SIGPLAN Not. 1991, 26, 63–74. [Google Scholar] [CrossRef]

- Bao, W.; Krishnamoorthy, S.; Pouchet, L.N.; Sadayappan, P. Analytical Modeling of Cache Behavior for Affine Programs. Proc. ACM Program. Lang. 2017, 2, 32:1–32:26. [Google Scholar] [CrossRef] [Green Version]

- Reineke, J.; Grund, D.; Berg, C.; Wilhelm, R. Timing Predictability of Cache Replacement Policies. Real-Time Syst. 2007, 37, 99–122. [Google Scholar] [CrossRef] [Green Version]

- Zhong, Y.; Dropsho, S.G.; Shen, X.; Studer, A.; Ding, C. Miss Rate Prediction Across Program Inputs and Cache Configurations. IEEE Trans. Comput. 2007, 56, 328–343. [Google Scholar] [CrossRef] [Green Version]

- Tsifakis, D.; Rendell, A.P.; Strazdins, P.E. Cache Oblivious Matrix Transposition: Simulation and Experiment. Computational Science - ICCS 2004; Bubak, M., van Albada, G.D., Sloot, P.M.A., Dongarra, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 17–25. [Google Scholar]

- Frigo, M.; Leiserson, C.E.; Prokop, H.; Ramachandran, S. Cache-Oblivious Algorithms. In Proceedings of the 40th Annual Symposium on Foundations of Computer Science FOCS ’99, New York, NY USA, 17–18 October 1999; IEEE Computer Society: Washington, DC, USA, 1999; p. 285. [Google Scholar]

- Yotov, K.; Roeder, T.; Pingali, K.; Gunnels, J.; Gustavson, F. An Experimental Comparison of Cache-oblivious and Cache-conscious Programs. In Proceedings of the Nineteenth Annual ACM Symposium on Parallel Algorithms and Architectures SPAA ’07, New York, NY, USA, 9–11 June 2007; ACM: New York, NY, USA, 2007; pp. 93–104. [Google Scholar] [CrossRef] [Green Version]

- Leiserson, C.E. Cache-Oblivious Algorithms. In Algorithms and Complexity; Petreschi, R., Persiano, G., Silvestri, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; p. 5. [Google Scholar]

- Frigo, M.; Leiserson, C.E.; Prokop, H.; Ramachandran, S. Cache-Oblivious Algorithms. ACM Trans. Algorithms 2012, 8. [Google Scholar] [CrossRef]

- Hong, C.; Bao, W.; Cohen, A.; Krishnamoorthy, S.; Pouchet, L.N.; Rastello, F.; Ramanujam, J.; Sadayappan, P. Effective Padding of Multidimensional Arrays to Avoid Cache Conflict Misses. In Proceedings of the 37th ACM SIGPLAN Conference on Programming Language Design and Implementation PLDI ’16, Santa Barbara, CA, USA, 13–17 June 2016; ACM: New York, NY, USA, 2016; pp. 129–144. [Google Scholar] [CrossRef] [Green Version]

- Panda, P.R.; Nakamura, H.; Dutt, N.D.; Nicolau, A. Augmenting loop tiling with data alignment for improved cache performance. IEEE Trans. Comput. 1999, 48, 142–149. [Google Scholar] [CrossRef]

- Bacon, D.F.; Chow, J.H.; Ju, D.C.R.; Muthukumar, K.; Sarkar, V. A Compiler Framework for Restructuring Data Declarations to Enhance Cache and TLB Effectiveness. In Proceedings of the 1994 Conference of the Centre for Advanced Studies on Collaborative Research CASCON ’94, Toronto, ON, Canada, 31 October–3 November 1994; IBM Press: Indianapolis, IN, USA, 1994; p. 3. [Google Scholar]

- Morton, G.M. A Computer Oriented Geodetic Data Base and a New Technique in File Sequencing; Technical Report; IBM Ltd.: Ottawa, ON, Canada, 1966. [Google Scholar]

- Abel, A.; Reineke, J. Measurement-based Modeling of the Cache Replacement Policy. In Proceedings of the 2013 IEEE 19th Real-Time and Embedded Technology and Applications Symposium (RTAS), Philadelphia, PA, USA, 9–11 April 2013. [Google Scholar]

- Berg, C. PLRU Cache Domino Effects. In Proceedings of the Workshop On Worst-Case Execution Time (WCET) Analysis, Dresden, Germany, 4 July 2006. [Google Scholar]

- Abel, A.; Reineke, J. Reverse engineering of cache replacement policies in Intel microprocessors and their evaluation. In Proceedings of the 2014 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS 2014), Monterey, CA, USA, 23–25 March 2014; IEEE Computer Society: Washington, DC, USA, 2014; pp. 141–142. [Google Scholar] [CrossRef]

- Balasubramonian, R.; Kahng, A.B.; Muralimanohar, N.; Shafiee, A.; Srinivas, V. CACTI 7: New Tools for Interconnect Exploration in Innovative Off-Chip Memories. ACM Trans. Archit. Code Optim. 2017, 14, 14:1–14:25. [Google Scholar] [CrossRef]

- Fitzgerald, B.; Lopez, S.; Sahuquillo, J. Drowsy cache partitioning for reduced static and dynamic energy in the cache hierarchy. In Proceedings of the 2013 International Green Computing Conference Proceedings, Arlington, VA, USA, 27–29 June 2013; pp. 1–6. [Google Scholar] [CrossRef] [Green Version]

- Flautner, K.; Kim, N.S.; Martin, S.; Blaauw, D.; Mudge, T. Drowsy caches: Simple techniques for reducing leakage power. In Proceedings of the 29th Annual International Symposium on Computer Architecture, Anchorage, AK, USA, 25–29 May 2002; pp. 148–157. [Google Scholar] [CrossRef]

| Sequence of Memory Access Load (ld) and Store (st) Instructions | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | |

| ld | ld | st | st | ld | ld | st | st | ld | |

| 1 | |||||||||

| 2 | |||||||||

| 3 | |||||||||

| 4 | |||||||||

| 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | |

| ld | st | st | ld | ld | st | st | ld | ld | |

| 1 | |||||||||

| 2 | |||||||||

| 3 | |||||||||

| 4 | |||||||||

| 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | |

| st | st | ld | ld | st | st | ld | ld | st | |

| 1 | |||||||||

| 2 | |||||||||

| 3 | |||||||||

| 4 | |||||||||

| 28 | 29 | 30 | 31 | 32 | 33 | ||||

| st | ld | ld | st | st | ld | ||||

| 1 | |||||||||

| 2 | |||||||||

| 3 | |||||||||

| 4 | |||||||||

| 2QuadQ9550 | i7-2640M | i7-4810MQ | XeonL5410 | |

|---|---|---|---|---|

| Number of Cache Levels | 2 | 3 | 3 | 2 |

| Line Size | 64 B | 64 B | 64 B | 64 B |

| Technological node | 45 nm | 32 nm | 22 nm | 45 nm |

| L1 Size | 32 KiB | 32 KiB | 32 KiB | 32 KiB |

| L1 Associativity | 8 way | 8 way | 8 way | 8 way |

| L1 Read energy (pJ) 8 B | 19.3 | 13.3 | 6.9 | 19.3 |

| L1 Read energy (pJ) 64 B | 665.3 | 464.8 | 327.5 | 66.5 |

| L1 Write energy (pJ) 8 B | 24.2 | 16.3 | 8.2 | 24.2 |

| L1 Write energy (pJ) 64 B | 670.3 | 468.2 | 333.0 | 67.0 |

| L1 Leakage Power (mW) | 65.2 | 45.2 | 20.4 | 65.2 |

| L2 Size | 6 MiB | 256 KiB | 256 KiB | 12 MiB |

| L2 Associativity | 24 way | 8 way | 8 way | 24 way |

| L2 Read energy (nJ) 64 B | 3.7 | 0.8 | 0.4 | 23.0 |

| L2 Write energy (nJ) 64 B | 4.0 | 0.8 | 0.5 | 25.1 |

| L2 Leakage Power (W) | 10.6 | 0.3 | 0.1 | 16.2 |

| L2 Inclusion Policy | Inclusive | Inclusive | Inclusive | Inclusive |

| simulated (actual) | (non-inclusive) | (non-inclusive) | ||

| L3 Size | N/A | 4 MiB | 6 MiB | N/A |

| L3 Associativity | N/A | 16 way | 32 way | N/A |

| L3 Read energy (nJ) 64 B | N/A | 2.3 | 1.5 | N/A |

| L3 Write energy (nJ) 64 B | N/A | 2.4 | 1.6 | N/A |

| L3 Leakage Power (W) | N/A | 2.4 | 1.7 | N/A |

| L3 Inclusion Policy | N/A | Inclusive | Inclusive | N/A |

| Main Memory Size (DDR3) | 1 GiB | 1 GiB | 1 GiB | 1 GiB |

| MM Read energy (nJ) 64 B | 17.7 | 17.7 | 17.7 | 17.7 |

| MM Write energy (nJ) 64 B | 17.7 | 17.7 | 17.7 | 17.7 |

| MM Leakage Power (W) | 2.2 | 2.2 | 2.2 | 2.2 |

| 2QuadQ9550 | i7-2640M | |||||||||||

| 8 | 16 | 32 | 64 | 128 | 256 | 8 | 16 | 32 | 64 | 128 | 256 | |

| Scenario 1 | 4.2 | 4.3 | 4.2 | 3.5 | 3.3 | 3.1 | 10.7 | 10.9 | 10.2 | 10.3 | 11.1 | 11.5 |

| Scenario 2 | 34.2 | 34.1 | 34.2 | 34.5 | 34.6 | 34.8 | 21.7 | 21.6 | 21.8 | 21.8 | 21.6 | 21.5 |

| i7-4810MQ | XeonL5410 | |||||||||||

| 8 | 16 | 32 | 64 | 128 | 256 | 8 | 16 | 32 | 64 | 128 | 256 | |

| Scenario 1 | 6.5 | 6.1 | 5.8 | 6.0 | 6.3 | 6.4 | 19.1 | 19.1 | 18.6 | 18.6 | 18.8 | 18.7 |

| Scenario 2 | 16.8 | 17.0 | 17.1 | 17.1 | 16.9 | 16.9 | 46.6 | 46.6 | 46.5 | 46.5 | 46.5 | 46.5 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pedro-Zapater, A.; Rodríguez, C.; Segarra, J.; Gran Tejero, R.; Viñals-Yúfera, V. Ideal and Predictable Hit Ratio for Matrix Transposition in Data Caches. Mathematics 2020, 8, 184. https://doi.org/10.3390/math8020184

Pedro-Zapater A, Rodríguez C, Segarra J, Gran Tejero R, Viñals-Yúfera V. Ideal and Predictable Hit Ratio for Matrix Transposition in Data Caches. Mathematics. 2020; 8(2):184. https://doi.org/10.3390/math8020184

Chicago/Turabian StylePedro-Zapater, Alba, Clemente Rodríguez, Juan Segarra, Rubén Gran Tejero, and Víctor Viñals-Yúfera. 2020. "Ideal and Predictable Hit Ratio for Matrix Transposition in Data Caches" Mathematics 8, no. 2: 184. https://doi.org/10.3390/math8020184

APA StylePedro-Zapater, A., Rodríguez, C., Segarra, J., Gran Tejero, R., & Viñals-Yúfera, V. (2020). Ideal and Predictable Hit Ratio for Matrix Transposition in Data Caches. Mathematics, 8(2), 184. https://doi.org/10.3390/math8020184