A Generic Approach to Covariance Function Estimation Using ARMA-Models

Abstract

1. Introduction and Motivation

2. Least Squares Collocation

3. The Second-Order Gauss–Markov Process

3.1. The Covariance Function of the SOGM-Process

3.2. Positive Definiteness of the SOGM-Process

4. Discrete AR-Processes

4.1. Definition of the Process

4.2. The Covariance Function of the AR(2)-Process

4.3. AR(p)-Process

4.3.1. AR(2)-Model

4.3.2. AR(1)-Model

4.4. Summary

5. Generalization to ARMA-Models

5.1. Covariance Representation of ARMA-Processes

5.2. The Numerical Solution for ARMA-Models

6. Estimation and Interpolation of the Covariance Series

Modeling Guidelines

- Determine the empirical autocorrelation function and use its values as estimates for the covariances. Either the biased or the unbiased estimate can be used.

- Optional step: Reduce the empirical variance (lag zero) by an arbitrary additive white noise component (nugget) such that the reduced value is a plausible y-intercept for the covariance at lag one and the higher lags.

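- Define a target order p and compute the autoregressive coefficients by solving the Yule–Walker (YW) equations or, in the overdetermined case, the modified Yule–Walker (MYW) equations.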

- Compute the poles of the process, which follow from the coefficients, see Equation (11). Check if the process is stationary, which requires all poles to lie inside the unit circle. If this is not the case, it can be helpful to make the estimation more overdetermined by increasing n. Otherwise, the target order of the estimation needs to be reduced. A third possibility is to choose only selected process roots and continue the next steps with this subset of poles. An analysis of the process properties, such as the system frequencies, can be useful, for instance in the pole-zero plot.

- Define the number of empirical covariances m to be used for the estimation. Set up the linear system, cf. Equation (28), either with or without the empirical variance (lag zero). Solve the system of equations either

  - uniquely, using exactly as many empirical covariances as there are unknowns. This results in a pure AR(p)-process.

  - or in an overdetermined manner in the least squares sense, i.e., using more empirical covariances than unknowns. This results in an underlying ARMA-process.

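- The variance of the estimated model (lag zero) follows from the solution, and the nugget can be determined from its difference to the empirical variance. If the model variance exceeds the empirical variance, it is possible to constrain the solution to pass exactly through or below the empirical variance. This can be done using a constrained least squares adjustment with a linear equality condition (cf. e.g., [69] (Ch. 3.2.7)) or by demanding a linear inequality [70] (Ch. 3.3–3.5).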

- Check for positive definiteness (Equation (8)) of each second-order section (SOGM component). In addition, the phases need to be in the range . If the solution does not fulfill these requirements, process diagnostics are necessary to determine whether the affected component might be ill-shaped. If the component is entirely negative definite, i.e., with negative , it needs to be eliminated. Here, it also needs to be examined whether the empirical covariances decrease sufficiently towards the high lags. If not, the stationarity of the residuals can be questioned and an enhanced trend reduction might be necessary.
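The first steps of these guidelines (empirical covariances, autoregressive coefficients, poles, and the stationarity check) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `empirical_autocovariance`, `ar_coefficients`, `poles`, and `is_stationary` are hypothetical, and the overdetermined variant is assumed here to mean a least squares solution of the Yule–Walker system extended to n > p rows. The later steps (Equation (28), nugget handling, positive definiteness) are not covered.

```python
import numpy as np


def empirical_autocovariance(x, n_lags, biased=True):
    """Empirical autocovariances for lags 0..n_lags of a 1-D series."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                      # remove the mean before estimating
    N = x.size
    g = np.empty(n_lags + 1)
    for k in range(n_lags + 1):
        s = np.dot(x[:N - k], x[k:])      # sum of lagged products
        g[k] = s / N if biased else s / (N - k)
    return g


def ar_coefficients(g, p, n=None):
    """AR(p) coefficients from covariances g[0..n].

    n == p gives the square Yule-Walker system; n > p gives an
    overdetermined system solved in the least squares sense
    (assumed reading of the modified Yule-Walker variant).
    """
    g = np.asarray(g, dtype=float)
    n = p if n is None else n
    if n < p or g.size < n + 1:
        raise ValueError("need n >= p and covariances up to lag n")
    # Row j (j = 1..n): g[j] = sum_{k=1..p} alpha_k * g[|j - k|]
    G = np.array([[g[abs(j - k)] for k in range(1, p + 1)]
                  for j in range(1, n + 1)])
    alpha, *_ = np.linalg.lstsq(G, g[1:n + 1], rcond=None)
    return alpha


def poles(alpha):
    """Roots of z^p - alpha_1 z^(p-1) - ... - alpha_p."""
    return np.roots(np.concatenate(([1.0], -np.asarray(alpha, dtype=float))))


def is_stationary(alpha):
    """True if all poles lie strictly inside the unit circle."""
    return bool(np.all(np.abs(poles(alpha)) < 1.0))


# Usage on a simulated AR(2) series (synthetic data, for illustration only)
rng = np.random.default_rng(0)
e = rng.standard_normal(5000)
x = np.zeros_like(e)
for t in range(2, x.size):
    x[t] = 0.5 * x[t - 1] + 0.3 * x[t - 2] + e[t]

g = empirical_autocovariance(x, n_lags=10)
alpha_yw = ar_coefficients(g, p=2)           # square system, n = p
alpha_myw = ar_coefficients(g, p=2, n=10)    # overdetermined, n > p
print(alpha_yw, alpha_myw, is_stationary(alpha_yw))
```

If the stationarity check fails, the guidelines suggest increasing n, reducing the target order p, or continuing with a subset of the poles; in this sketch the first two options correspond to calling `ar_coefficients` again with different arguments.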

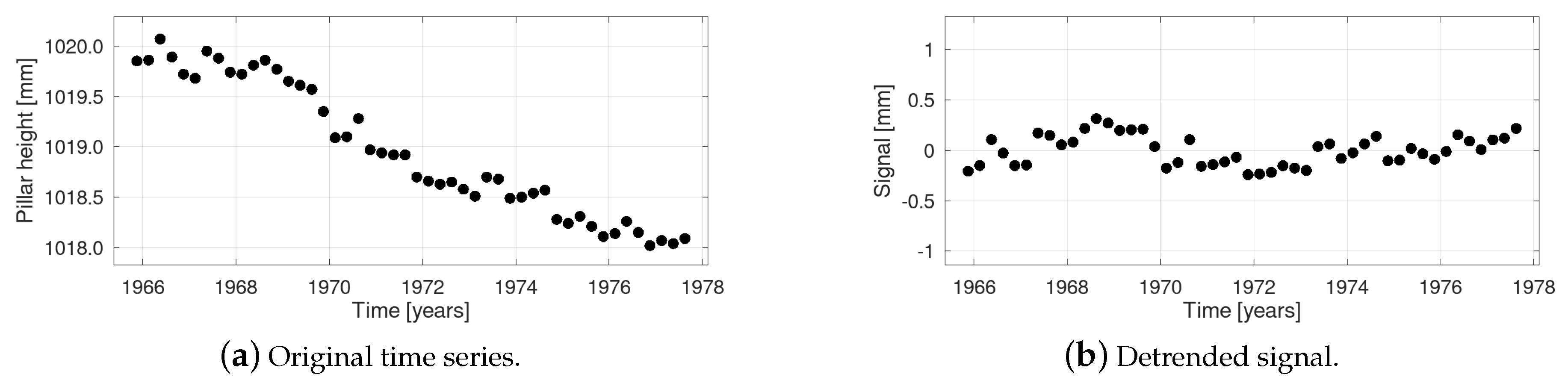

7. An Example: Milan Cathedral Deformation Time Series

8. Summary and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AR | autoregressive |
| ARMA | autoregressive moving average |
| LSC | least squares collocation |
| MA | moving average |
| MYW | modified Yule–Walker |
| SOGM | second-order Gauss–Markov |
| SOS | second-order sections |
| YW | Yule–Walker |

References

1. Kolmogorov, A.N. Grundbegriffe der Wahrscheinlichkeitsrechnung; Springer: Berlin/Heidelberg, Germany, 1933.

2. Wiener, N. Extrapolation, Interpolation, and Smoothing of Stationary Time Series; MIT Press: Cambridge, MA, USA, 1949.

3. Moritz, H. Advanced Least-Squares Methods; Number 175 in Reports of the Department of Geodetic Science; Ohio State University Research Foundation: Columbus, OH, USA, 1972.

4. Moritz, H. Advanced Physical Geodesy; Wichmann: Karlsruhe, Germany, 1980.

5. Schuh, W.D. Signalverarbeitung in der Physikalischen Geodäsie. In Handbuch der Geodäsie, Erdmessung und Satellitengeodäsie; Freeden, W., Rummel, R., Eds.; Springer Reference Naturwissenschaften; Springer: Berlin/Heidelberg, Germany, 2016; pp. 73–121.

6. Moritz, H. Least-Squares Collocation; Number 75 in Reihe A; Deutsche Geodätische Kommission: München, Germany, 1973.

7. Reguzzoni, M.; Sansò, F.; Venuti, G. The Theory of General Kriging, with Applications to the Determination of a Local Geoid. Geophys. J. Int. 2005, 162, 303–314.

8. Moritz, H. Covariance Functions in Least-Squares Collocation; Number 240 in Reports of the Department of Geodetic Science; Ohio State University: Columbus, OH, USA, 1976.

9. Cressie, N.A.C. Statistics for Spatial Data; Wiley Series in Probability and Statistics; Wiley: New York, NY, USA, 1991.

10. Chilès, J.P.; Delfiner, P. Geostatistics: Modeling Spatial Uncertainty; Wiley Series in Probability and Statistics; John Wiley & Sons: Hoboken, NJ, USA, 1999.

11. Thiébaux, H.J. Anisotropic Correlation Functions for Objective Analysis. Mon. Weather Rev. 1976, 104, 994–1002.

12. Franke, R.H. Covariance Functions for Statistical Interpolation; Technical Report NPS-53-86-007; Naval Postgraduate School: Monterey, CA, USA, 1986.

13. Gneiting, T. Correlation Functions for Atmospheric Data Analysis. Q. J. R. Meteorol. Soc. 1999, 125, 2449–2464.

14. Gneiting, T.; Kleiber, W.; Schlather, M. Matérn Cross-Covariance Functions for Multivariate Random Fields. J. Am. Stat. Assoc. 2010, 105, 1167–1177.

15. Meissl, P. A Study of Covariance Functions Related to the Earth’s Disturbing Potential; Number 151 in Reports of the Department of Geodetic Science; Ohio State University: Columbus, OH, USA, 1971.

16. Tscherning, C.C.; Rapp, R.H. Closed Covariance Expressions for Gravity Anomalies, Geoid Undulations, and Deflections of the Vertical Implied by Anomaly Degree Variance Models; Technical Report DGS-208; Ohio State University, Department of Geodetic Science: Columbus, OH, USA, 1974.

17. Mussio, L. Il metodo della collocazione minimi quadrati e le sue applicazioni per l’analisi statistica dei risultati delle compensazioni. In Ricerche Di Geodesia, Topografia e Fotogrammetria; CLUP: Milano, Italy, 1984; Volume 4, pp. 305–338.

18. Koch, K.R.; Kuhlmann, H.; Schuh, W.D. Approximating Covariance Matrices Estimated in Multivariate Models by Estimated Auto- and Cross-Covariances. J. Geod. 2010, 84, 383–397.

19. Sansò, F.; Schuh, W.D. Finite Covariance Functions. Bull. Géodésique 1987, 61, 331–347.

20. Gaspari, G.; Cohn, S.E. Construction of Correlation Functions in Two and Three Dimensions. Q. J. R. Meteorol. Soc. 1999, 125, 723–757.

21. Gneiting, T. Compactly Supported Correlation Functions. J. Multivar. Anal. 2002, 83, 493–508.

22. Kraiger, G. Untersuchungen zur Prädiktion nach kleinsten Quadraten mittels empirischer Kovarianzfunktionen unter besonderer Beachtung des Krümmungsparameters; Number 53 in Mitteilungen der Geodätischen Institute der Technischen Universität Graz; Geodätische Institute der Technischen Universität Graz: Graz, Austria, 1987.

23. Jenkins, G.M.; Watts, D.G. Spectral Analysis and Its Applications; Holden-Day: San Francisco, CA, USA, 1968.

24. Box, G.; Jenkins, G. Time Series Analysis: Forecasting and Control; Series in Time Series Analysis; Holden-Day: San Francisco, CA, USA, 1970.

25. Maybeck, P.S. Stochastic Models, Estimation, and Control; Vol. 141-1, Mathematics in Science and Engineering; Academic Press: New York, NY, USA, 1979.

26. Priestley, M.B. Spectral Analysis and Time Series; Academic Press: London, UK; New York, NY, USA, 1981.

27. Yaglom, A.M. Correlation Theory of Stationary and Related Random Functions: Volume I: Basic Results; Springer Series in Statistics; Springer: New York, NY, USA, 1987.

28. Brockwell, P.J.; Davis, R.A. Time Series Theory and Methods, 2nd ed.; Springer Series in Statistics; Springer: New York, NY, USA, 1991.

29. Hamilton, J.D. Time Series Analysis; Princeton University Press: Princeton, NJ, USA, 1994.

30. Buttkus, B. Spectral Analysis and Filter Theory in Applied Geophysics; Springer: Berlin/Heidelberg, Germany, 2000.

31. Jones, R.H. Fitting a Continuous Time Autoregression to Discrete Data. In Applied Time Series Analysis II; Findley, D.F., Ed.; Academic Press: New York, NY, USA, 1981; pp. 651–682.

32. Jones, R.H.; Vecchia, A.V. Fitting Continuous ARMA Models to Unequally Spaced Spatial Data. J. Am. Stat. Assoc. 1993, 88, 947–954.

33. Brockwell, P.J. Continuous-Time ARMA Processes. In Stochastic Processes: Theory and Methods; Shanbhag, D., Rao, C., Eds.; Volume 19, Handbook of Statistics; North-Holland: Amsterdam, The Netherlands, 2001; pp. 249–276.

34. Kelly, B.C.; Becker, A.C.; Sobolewska, M.; Siemiginowska, A.; Uttley, P. Flexible and Scalable Methods for Quantifying Stochastic Variability in the Era of Massive Time-Domain Astronomical Data Sets. Astrophys. J. 2014, 788, 33.

35. Tómasson, H. Some Computational Aspects of Gaussian CARMA Modelling. Stat. Comput. 2015, 25, 375–387.

36. Schuh, W.D. Tailored Numerical Solution Strategies for the Global Determination of the Earth’s Gravity Field; Volume 81, Mitteilungen der Geodätischen Institute; Technische Universität Graz (TUG): Graz, Austria, 1996.

37. Schuh, W.D. The Processing of Band-Limited Measurements; Filtering Techniques in the Least Squares Context and in the Presence of Data Gaps. Space Sci. Rev. 2003, 108, 67–78.

38. Klees, R.; Ditmar, P.; Broersen, P. How to Handle Colored Observation Noise in Large Least-Squares Problems. J. Geod. 2003, 76, 629–640.

39. Siemes, C. Digital Filtering Algorithms for Decorrelation within Large Least Squares Problems. Ph.D. Thesis, Landwirtschaftliche Fakultät der Universität Bonn, Bonn, Germany, 2008.

40. Krasbutter, I.; Brockmann, J.M.; Kargoll, B.; Schuh, W.D. Adjustment of Digital Filters for Decorrelation of GOCE SGG Data. In Observation of the System Earth from Space—CHAMP, GRACE, GOCE and Future Missions; Flechtner, F., Sneeuw, N., Schuh, W.D., Eds.; Vol. 20, Advanced Technologies in Earth Sciences, Geotechnologien Science Report; Springer: Berlin/Heidelberg, Germany, 2014; pp. 109–114.

41. Farahani, H.H.; Slobbe, D.C.; Klees, R.; Seitz, K. Impact of Accounting for Coloured Noise in Radar Altimetry Data on a Regional Quasi-Geoid Model. J. Geod. 2017, 91, 97–112.

42. Schuh, W.D.; Brockmann, J.M. The Numerical Treatment of Covariance Stationary Processes in Least Squares Collocation. In Handbuch der Geodäsie: 6 Bände; Freeden, W., Rummel, R., Eds.; Springer Reference Naturwissenschaften; Springer: Berlin/Heidelberg, Germany, 2018; pp. 1–36.

43. Pail, R.; Bruinsma, S.; Migliaccio, F.; Förste, C.; Goiginger, H.; Schuh, W.D.; Höck, E.; Reguzzoni, M.; Brockmann, J.M.; Abrikosov, O.; et al. First GOCE Gravity Field Models Derived by Three Different Approaches. J. Geod. 2011, 85, 819.

44. Brockmann, J.M.; Zehentner, N.; Höck, E.; Pail, R.; Loth, I.; Mayer-Gürr, T.; Schuh, W.D. EGM_TIM_RL05: An Independent Geoid with Centimeter Accuracy Purely Based on the GOCE Mission. Geophys. Res. Lett. 2014, 41, 8089–8099.

45. Schubert, T.; Brockmann, J.M.; Schuh, W.D. Identification of Suspicious Data for Robust Estimation of Stochastic Processes. In IX Hotine-Marussi Symposium on Mathematical Geodesy; Sneeuw, N., Novák, P., Crespi, M., Sansò, F., Eds.; International Association of Geodesy Symposia; Springer: Berlin/Heidelberg, Germany, 2019.

46. Krarup, T. A Contribution to the Mathematical Foundation of Physical Geodesy; Number 44 in Meddelelse; Danish Geodetic Institute: Copenhagen, Denmark, 1969.

47. Amiri-Simkooei, A.; Tiberius, C.; Teunissen, P. Noise Characteristics in High Precision GPS Positioning. In VI Hotine-Marussi Symposium on Theoretical and Computational Geodesy; Xu, P., Liu, J., Dermanis, A., Eds.; International Association of Geodesy Symposia; Springer: Berlin/Heidelberg, Germany, 2008; pp. 280–286.

48. Kermarrec, G.; Schön, S. On the Matérn Covariance Family: A Proposal for Modeling Temporal Correlations Based on Turbulence Theory. J. Geod. 2014, 88, 1061–1079.

49. Tscherning, C.; Knudsen, P.; Forsberg, R. Description of the GRAVSOFT Package; Technical Report; Geophysical Institute, University of Copenhagen: Copenhagen, Denmark, 1994.

50. Arabelos, D.; Tscherning, C.C. Globally Covering A-Priori Regional Gravity Covariance Models. Adv. Geosci. 2003, 1, 143–147.

51. Arabelos, D.N.; Forsberg, R.; Tscherning, C.C. On the a Priori Estimation of Collocation Error Covariance Functions: A Feasibility Study. Geophys. J. Int. 2007, 170, 527–533.

52. Darbeheshti, N.; Featherstone, W.E. Non-Stationary Covariance Function Modelling in 2D Least-Squares Collocation. J. Geod. 2009, 83, 495–508.

53. Barzaghi, R.; Borghi, A.; Sona, G. New Covariance Models for Local Applications of Collocation. In IV Hotine-Marussi Symposium on Mathematical Geodesy; Benciolini, B., Ed.; International Association of Geodesy Symposia; Springer: Berlin/Heidelberg, Germany, 2001; pp. 91–101.

54. Kvas, A.; Behzadpour, S.; Ellmer, M.; Klinger, B.; Strasser, S.; Zehentner, N.; Mayer-Gürr, T. ITSG-Grace2018: Overview and Evaluation of a New GRACE-Only Gravity Field Time Series. J. Geophys. Res. Solid Earth 2019, 124, 9332–9344.

55. Rasmussen, C.; Williams, C. Gaussian Processes for Machine Learning; Adaptive Computation and Machine Learning; MIT Press: Cambridge, MA, USA, 2006.

56. Jarmołowski, W.; Bakuła, M. Precise Estimation of Covariance Parameters in Least-Squares Collocation by Restricted Maximum Likelihood. Studia Geophysica et Geodaetica 2014, 58, 171–189.

57. Fitzgerald, R.J. Filtering Horizon-Sensor Measurements for Orbital Navigation. J. Spacecr. Rocket. 1967, 4, 428–435.

58. Jackson, L.B. Digital Filters and Signal Processing, 3rd ed.; Springer: New York, NY, USA, 1996.

59. Titov, O.A. Estimation of the Subdiurnal UT1-UTC Variations by the Least Squares Collocation Method. Astron. Astrophys. Trans. 2000, 18, 779–792.

60. Halsig, S. Atmospheric Refraction and Turbulence in VLBI Data Analysis. Ph.D. Thesis, Landwirtschaftliche Fakultät der Universität Bonn, Bonn, Germany, 2018.

61. Bochner, S. Lectures on Fourier Integrals; Number 42 in Annals of Mathematics Studies; Princeton University Press: Princeton, NJ, USA, 1959.

62. Sansò, F. The Analysis of Time Series with Applications to Geodetic Control Problems. In Optimization and Design of Geodetic Networks; Grafarend, E.W., Sansò, F., Eds.; Springer: Berlin/Heidelberg, Germany, 1985; pp. 436–525.

63. Kay, S.; Marple, S. Spectrum Analysis—A Modern Perspective. Proc. IEEE 1981, 69, 1380–1419.

64. Friedlander, B.; Porat, B. The Modified Yule-Walker Method of ARMA Spectral Estimation. IEEE Trans. Aerosp. Electron. Syst. 1984, AES-20, 158–173.

65. Gelb, A. Applied Optimal Estimation; The MIT Press: Cambridge, MA, USA, 1974.

66. Phadke, M.S.; Wu, S.M. Modeling of Continuous Stochastic Processes from Discrete Observations with Application to Sunspots Data. J. Am. Stat. Assoc. 1974, 69, 325–329.

67. Tunnicliffe Wilson, G. Some Efficient Computational Procedures for High Order ARMA Models. J. Stat. Comput. Simul. 1979, 8, 301–309.

68. Woodward, W.A.; Gray, H.L.; Haney, J.R.; Elliott, A.C. Examining Factors to Better Understand Autoregressive Models. Am. Stat. 2009, 63, 335–342.

69. Koch, K.R. Parameter Estimation and Hypothesis Testing in Linear Models, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 1999.

70. Roese-Koerner, L. Convex Optimization for Inequality Constrained Adjustment Problems. Ph.D. Thesis, Landwirtschaftliche Fakultät der Universität Bonn, Bonn, Germany, 2015.

| | Equation (28), | Equation (28) with , | Equation (28), |
|---|---|---|---|
| YW-Equations | AR-model, interpolation of the first covariances | AR-model, approximation | ARMA-model, approximation |
| MYW-Equations, | AR-model, approximation | AR-model, approximation | ARMA-model, approximation |

| Roots | Frequency | Frequency | Damping | Phase | | |
|---|---|---|---|---|---|---|
| A | [1/year] | | | | | |
| B | [1/year] | | | | | |

| Roots | Frequency | Frequency | Damping | Phase | | |
|---|---|---|---|---|---|---|
| A | [1/year] | | | | | |
| B | [1/year] | | | | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Schubert, T.; Korte, J.; Brockmann, J.M.; Schuh, W.-D. A Generic Approach to Covariance Function Estimation Using ARMA-Models. Mathematics 2020, 8, 591. https://doi.org/10.3390/math8040591
