Abstract

In multiple attribute decision-making in an intuitionistic fuzzy environment, the decision information is sometimes given by intuitionistic fuzzy soft sets. In order to address intuitionistic fuzzy decision-making problems in a more efficient way, many scholars have produced increasingly better procedures for ranking intuitionistic fuzzy values. In this study, we further investigate the problem of ranking intuitionistic fuzzy values from a geometric point of view, and we produce related applications to decision-making. We present Minkowski score functions of intuitionistic fuzzy values, which are natural generalizations of the expectation score function and other useful score functions in the literature. The rationale for Minkowski score functions lies in the geometric intuition that a better score should be assigned to an intuitionistic fuzzy value farther from the negative ideal intuitionistic fuzzy value. To capture the subjective attitude of decision makers, we further propose the Minkowski weighted score function that incorporates an attitudinal parameter. The Minkowski score function is a special case corresponding to a neutral attitude. Some fundamental properties of Minkowski (weighted) score functions are examined in detail. With the aid of the Minkowski weighted score function and the maximizing deviation method, we design a new algorithm for solving decision-making problems based on intuitionistic fuzzy soft sets. Moreover, two numerical examples regarding risk investment and supplier selection are employed to conduct comparative analyses and to demonstrate the feasibility of the approach proposed in this article.

1. Introduction

Multiple attribute decision making (MADM) refers to the general process of ranking a collection of alternatives or choosing the best alternative(s) among them, taking into account the evaluations of all the alternatives against several attributes. The assessment information carried by these attributes characterizes the performance of the alternatives from several standpoints. In recent years, researchers from different countries and diverse fields have worked to develop various methods, models, and algorithms to support MADM [1]. Many in-depth studies have been devoted to the exploration of MADM and its applications. Together, they have provided effective solutions to MADM problems arising in a wide range of fields [2,3].

Fuzzy set (FS) theory is a powerful mathematical model proposed by Zadeh [4] to deal with uncertainty from the perspective of gradual belongingness. Since then, fuzzy sets have played an important role in the broad area of soft computing, where they provide tractability, robustness, and low cost in the treatment of approximate knowledge, uncertainty, inaccuracy, and partial truth [5]. To further improve the applicability of fuzzy sets, Atanassov [6] put forward the idea of intuitionistic fuzzy sets (IFSs) in 1983. They are characterized by separate membership and non-membership degrees of the alternatives. Xu and Yager [7] called the ordered pairs composed of the membership and non-membership grades in an intuitionistic fuzzy set intuitionistic fuzzy values (IFVs). Despite their simplicity as an extension of Zadeh’s fuzzy sets, Atanassov’s intuitionistic fuzzy sets are of great significance for describing the uncertainty caused by human cognitive limitations or indecision [8].

Later, in 1999, soft set (SS) theory [9] provided a general mathematical framework for approaching uncertain, vaguely outlined objects that nevertheless possess well-defined characteristics. Soft sets rest on the idea of parameterization, which frees them from the limits of membership functions. Soft set theory and its extensions show that real-life concepts can be understood from their characteristic aspects, with each separate feature providing an approximate portrayal of the entire entity [10]. Ali et al. [11,12,13] explored algebraic and logical aspects of soft set theory in depth in order to formulate its theoretical basis. Feng and Li [14] established relationships among five distinct kinds of soft subsets. They also investigated free soft algebras associated with soft product operations. Jun et al. studied the use of soft sets in the investigation of various algebraic structures such as ordered semigroups [15] and BCK/BCI-algebras [16,17]. Inspired by the notion of soft sets, Maji et al. [18] also presented the concept of a fuzzy soft set, which combines fuzzy membership with soft set theory. Both soft sets and fuzzy soft sets were further generalized to hesitant fuzzy soft sets [19,20], N-soft sets [21,22], hesitant N-soft sets [23], and fuzzy N-soft sets [24]. From a different angle, Maji et al. [25] introduced the concept of intuitionistic fuzzy soft sets (IFSSs). This hybrid structure yields an effective framework to describe and analyze more general MADM problems [26,27]. This model and its applications in decision-making were further expanded by Agarwal et al. [28], who devised generalized intuitionistic fuzzy soft sets. Feng et al. [29] clarified and improved the structure of this model. To be precise, they formulated it as a combination of an intuitionistic fuzzy soft set over the set of alternatives and an intuitionistic fuzzy set on the set of attributes. Peng et al. [30] combined soft sets with Pythagorean fuzzy sets and presented the notion of Pythagorean fuzzy soft sets. Both fuzzy soft sets and intuitionistic fuzzy soft sets are special cases of Pythagorean fuzzy soft sets. Athira et al. [31] defined some entropy measures for Pythagorean fuzzy soft sets to compute the degree of fuzziness of the sets, which means that the larger the entropy, the lesser the vagueness. In addition, other extensions of soft sets were proposed and used to solve decision problems. For example, Dey et al. [32] presented the idea of a hesitant multi-fuzzy set. They combined the characteristics of hesitant multi-fuzzy sets with the parametrization of soft sets to construct hesitant multi-fuzzy soft sets. Likewise, intuitionistic multi-fuzzy sets [33] take advantage of both intuitionistic and multi-fuzzy features. Wei [34] proposed a scheme based on intuitionistic fuzzy soft sets to determine the weight of each attribute in two cases: incomplete knowledge of attribute information and complete ignorance of attribute information. Liu et al. [35] recently produced centroid transformations of intuitionistic fuzzy values that were built on intuitionistic fuzzy aggregation operators.

As the most elementary components in intuitionistic fuzzy MADM, IFVs quantify the performance of alternatives in an intuitionistic fuzzy setting. From a more general point of view, IFVs can be regarded as the intuitionistic fuzzy counterparts of real numbers in the unit interval [0, 1], as both play comparable roles in intuitionistic fuzzy and classical mathematical modeling, respectively. It is well known that the usual order of the real numbers endows the unit interval [0, 1] with the structure of a chain (i.e., a totally ordered set). As a result, such real numbers can be compared or ranked unequivocally. Nonetheless, the ranking problem becomes much more complicated for IFVs. The main reason is that the set of all IFVs forms only a complete lattice rather than a chain under the usual order of IFVs (see Equation (1) below). In response, various ways to compare IFVs have been proposed. They fall into two broad categories. The first ranks IFVs by the score function [36], the accuracy function [37], Xu and Yager’s rule [7], or the dominance degree [38]; the common feature of these methods is that they all rely on the membership function or the non-membership function. Recently, Garg and Arora [39] presented an approach to decision-making problems that uses the TOPSIS method based on correlation measures in an intuitionistic fuzzy environment, and their approach also belongs to this category. The second ranks IFVs using geometric representations, such as Szmidt and Kacprzyk’s measure R [40], Guo’s measure Z [41], and Zhang and Xu’s measure l [42]. Distance measures of intuitionistic fuzzy sets have been well explored in the literature [43]; they can be used to quantify the difference between the information carried by intuitionistic fuzzy sets. Xing et al. [44] proposed a method in which IFVs are represented by points in a Euclidean space and ranked by their Euclidean distances to the most favorable point. This ranking method made a significant advance in satisfying desirable properties such as weak admissibility, robustness, and determinacy, which had been largely neglected by previous geometric representation methods. With the increasing complexity of socio-economic systems and related decision-making problems in recent years, this high-performance ranking method for IFVs has become very practical.

However, it should be pointed out that some existing score functions based on the Hamming or Euclidean distance fail to differentiate distinct IFVs that have the same expectation score or the same Euclidean score (called the ideal positive degree in [44]). To overcome this difficulty, the current study introduces the Minkowski score function, which enables us to rank all IFVs by adjusting its parameter if necessary. We further extend the Minkowski score function by including the attitudinal parameter of the decision makers, which produces a more general concept called the Minkowski weighted score function of IFVs. We prove that this new score function reduces to the Minkowski score function when the attitudinal parameter equals 0.5 (i.e., a decision maker’s neutral attitude). Note also that the Minkowski (weighted) score function builds on the Minkowski distance between the negative ideal IFV $\langle 0, 1 \rangle$ and a given IFV. Thus, our score functions simplify the formulas and calculations involved in the ideal positive degree of [44].

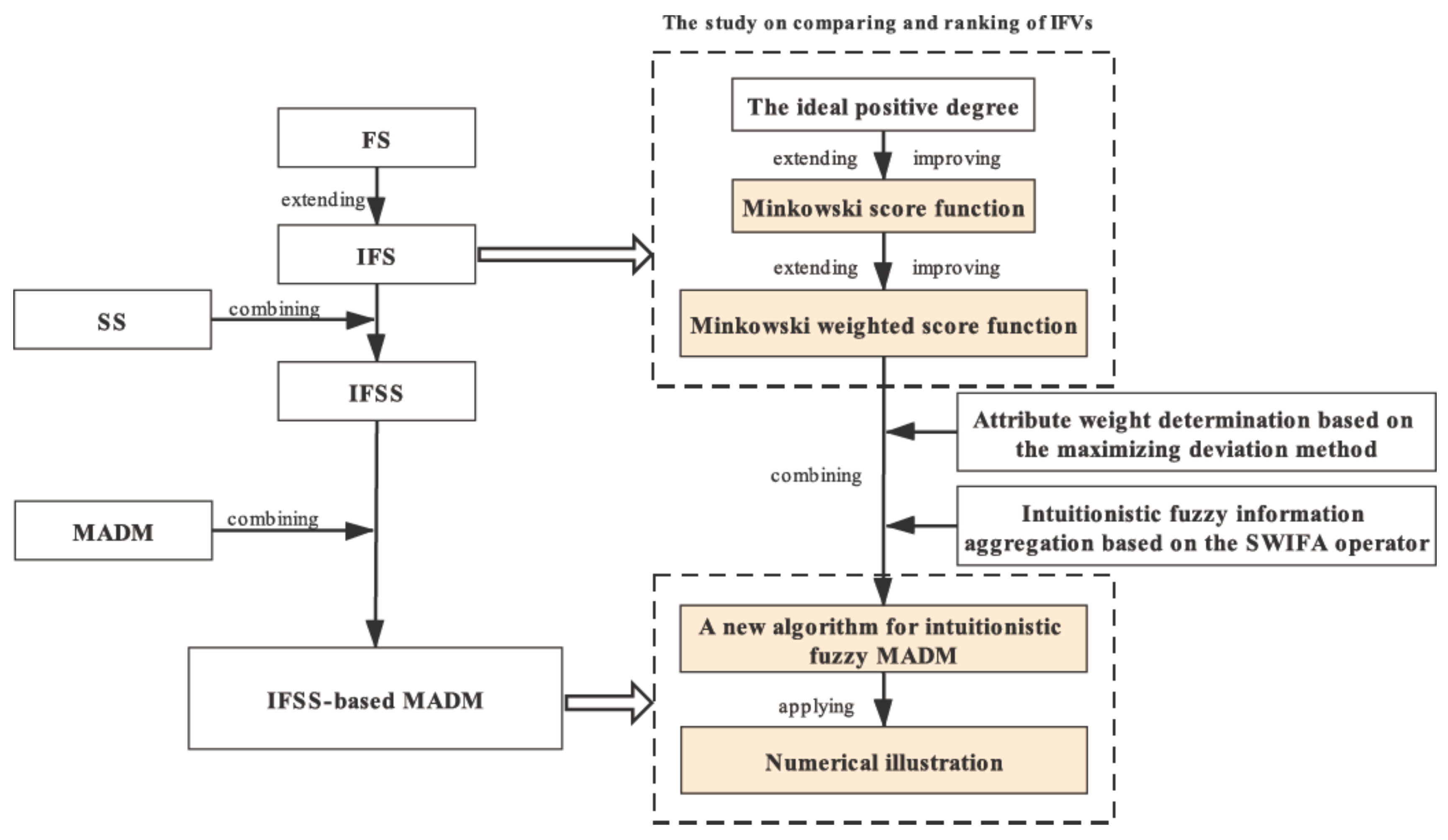

Figure 1 illustrates the workflow and framework of this study. Its first aim is to extend and simplify the ideal positive degree proposed by Xing et al. [44], which results in the concept of the Minkowski score function. Next, this function is further generalized to the Minkowski weighted score function thanks to the introduction of the attitudinal parameter of decision makers. Finally, an algorithm is designed for solving MADM problems based on intuitionistic fuzzy soft sets. This algorithm mainly builds on the Minkowski weighted score function, the maximizing deviation method for the determination of attribute weights, and the simply weighted intuitionistic fuzzy averaging (SWIFA) operator.

Figure 1.

Workflow and framework of this study. IFSS, intuitionistic fuzzy soft set; MADM, multiple attribute decision making; SWIFA, simply weighted intuitionistic fuzzy averaging.

The rest of this study is organized as follows. Section 2 recaps some fundamental concepts concerning (intuitionistic) fuzzy sets and intuitionistic fuzzy soft sets. Section 3 presents some known order relations for ranking IFVs. In Section 4, we define a new score function based on the Minkowski distance between IFVs and the negative ideal IFV, and we establish and discuss some relevant properties. Section 5 extends this methodology to take into account decision makers’ attitudes. Section 6 introduces the maximizing deviation method for determining the weight vector and proposes an algorithm based on this method to solve intuitionistic fuzzy MADM problems. In Section 7, the decisions in two situations (namely, venture capital and supplier selection problems) are compared with those of lexicographic orders, Wei’s method [34], Xia’s method [45], Song’s method [46], and TOPSIS (Technique for Order Preference by Similarity to Ideal Solution) [47]. This comparative analysis validates the feasibility of the new approach. Finally, Section 8 gives the conclusion and future research directions.

2. Preliminaries

Throughout this paper, $U$ denotes a non-empty set of alternatives. This set is often known as the universe of discourse.

We proceed to recall fundamental concepts from the theories of (intuitionistic) fuzzy sets and intuitionistic fuzzy soft sets. These notions and related tools will be needed in subsequent analyses.

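A fuzzy set $\mu$ in $U$ (sometimes also called a fuzzy subset of $U$) is characterized by its membership function, namely $\mu: U \to [0, 1]$. Each $x \in U$ is thus associated with its membership degree $\mu(x)$ to this fuzzy set, which captures the degree of belongingness of $x$ to $\mu$. Henceforth, $\mathcal{F}(U)$ denotes the collection of all fuzzy subsets of $U$.

Fuzzy subsethood and equality are quite natural. We declare $\mu_1 \subseteq \mu_2$ when $\mu_1(x) \le \mu_2(x)$ for each $x \in U$. As expected, $\mu_1 = \mu_2$ holds when both $\mu_1 \subseteq \mu_2$ and $\mu_2 \subseteq \mu_1$ are true. Following the original formulation by Zadeh [4], fuzzy versions of set union and intersection, as well as a fuzzy complement operation, are respectively given by the following expressions: for each $\mu, \mu_1, \mu_2 \in \mathcal{F}(U)$ and $x \in U$,

- $(\mu_1 \cup \mu_2)(x) = \max\{\mu_1(x), \mu_2(x)\}$,

- $(\mu_1 \cap \mu_2)(x) = \min\{\mu_1(x), \mu_2(x)\}$, and

- $\mu^c(x) = 1 - \mu(x)$.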

Intuitionistic fuzzy sets extend fuzzy sets in the following manner:

Definition 1

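([6]). An intuitionistic fuzzy set $\eta$ on $U$ is defined by:

$$\eta = \{\langle x, \mu_\eta(x), \nu_\eta(x) \rangle : x \in U\}.$$

The mappings $\mu_\eta: U \to [0, 1]$ and $\nu_\eta: U \to [0, 1]$ respectively provide a membership grade and a non-membership grade of $x \in U$ to $\eta$. Moreover, it is required that $0 \le \mu_\eta(x) + \nu_\eta(x) \le 1$ holds true for all $x \in U$.

Unlike the case of fuzzy sets, a degree of hesitancy (or indeterminacy) of $x$ to $\eta$ can be defined. It is given by the difference $\pi_\eta(x) = 1 - \mu_\eta(x) - \nu_\eta(x)$, and when it is identically zero, $\eta$ can be identified with a fuzzy set. In what follows, the collection of all intuitionistic fuzzy sets on $U$ will be denoted by $\mathrm{IF}(U)$.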

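The set-theoretical operations defined for fuzzy sets can be extended to the setting of Definition 1. Thus, for $\eta_1, \eta_2 \in \mathrm{IF}(U)$, we define union, intersection, and subsethood as follows:

- $\eta_1 \cup \eta_2 = \{\langle x, \max\{\mu_{\eta_1}(x), \mu_{\eta_2}(x)\}, \min\{\nu_{\eta_1}(x), \nu_{\eta_2}(x)\} \rangle : x \in U\}$;

- $\eta_1 \cap \eta_2 = \{\langle x, \min\{\mu_{\eta_1}(x), \mu_{\eta_2}(x)\}, \max\{\nu_{\eta_1}(x), \nu_{\eta_2}(x)\} \rangle : x \in U\}$;

- $\eta_1 \subseteq \eta_2$ when, for all $x \in U$, both $\mu_{\eta_1}(x) \le \mu_{\eta_2}(x)$ and $\nu_{\eta_1}(x) \ge \nu_{\eta_2}(x)$ are true.

It is only natural to declare $\eta_1 = \eta_2$ exactly when both $\eta_1 \subseteq \eta_2$ and $\eta_2 \subseteq \eta_1$ hold true. The complement of $\eta \in \mathrm{IF}(U)$ is defined as:

$$\eta^c = \{\langle x, \nu_\eta(x), \mu_\eta(x) \rangle : x \in U\}.$$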

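In addition to their relationship with fuzzy sets, intuitionistic fuzzy sets can be regarded as L-fuzzy sets with respect to $(L^*, \le_{L^*})$, the complete lattice with:

$$L^* = \{\langle x_1, x_2 \rangle \in [0, 1]^2 : x_1 + x_2 \le 1\}$$

and lattice order given by:

$$\langle x_1, x_2 \rangle \le_{L^*} \langle y_1, y_2 \rangle \iff x_1 \le y_1 \ \text{and} \ x_2 \ge y_2 \qquad (1)$$

for all $\langle x_1, x_2 \rangle, \langle y_1, y_2 \rangle \in L^*$ [48,49]. Each ordered pair $\langle x_1, x_2 \rangle \in L^*$ is an intuitionistic fuzzy value (IFV) [7]. The complement of the IFV $A = \langle x_1, x_2 \rangle$ is defined as $A^c = \langle x_2, x_1 \rangle$. From this viewpoint, the intuitionistic fuzzy set:

$$\eta = \{\langle x, \mu_\eta(x), \nu_\eta(x) \rangle : x \in U\}$$

can be viewed as the L-fuzzy set $\eta: U \to L^*$ with $\eta(x) = \langle \mu_\eta(x), \nu_\eta(x) \rangle$ for all $x \in U$.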

Soft set theory builds on different grounds. Now, we need a parameter space $E_U$, often denoted simply by $E$ when the set of alternatives is common knowledge. It comprises all the parameters that approximately represent the objects in $U$. The pair $(U, E)$ is also known as a soft universe. Put differently, $E$ comprises the collection of parameters that produce an appropriate joint model of the problem under inspection.

Definition 2

([9]). A soft set over $U$ is a pair $(F, A)$. It is assumed that $A \subseteq E$ and $F: A \to \mathcal{P}(U)$, where $\mathcal{P}(U)$ is the power set of $U$. $F$ is a mapping known as the approximate function of $(F, A)$.

The natural combination of fuzzy sets with soft sets produces the following notion:

Definition 3

([18]). A fuzzy soft set over $U$ is a pair $(F, A)$. It is assumed that $A \subseteq E$ and $F: A \to \mathcal{F}(U)$. Now, $F$ is a mapping called the approximate function of $(F, A)$.

Henceforth, the collection of all fuzzy soft sets over $U$ whose sets of attributes are from $E$ will be represented by $\mathcal{FS}(U, E)$.

Refinements of Definition 3 with different characteristics exist. For instance, valuation fuzzy soft sets, introduced in [50], refine fuzzy soft sets with the purpose of supporting decisions such as the valuation of assets. In addition, a very natural blend of intuitionistic fuzzy sets with soft sets generalizes Definition 3 as follows:

Definition 4

([25]). An intuitionistic fuzzy soft set (IFSS) over $U$ is a pair $(F, A)$. It is assumed that $A \subseteq E$ and $F: A \to \mathrm{IF}(U)$. Now, $F$ is a mapping called the approximate function of $(F, A)$.

Hereinafter, $\mathcal{IFS}(U, E)$ represents the collection of all IFSSs over $U$ whose attributes belong to $E$.

Two notions of complement for IFSSs, the second more general than the first, are applicable:

Definition 5.

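The complement of $(F, A) \in \mathcal{IFS}(U, E)$ is the IFSS $(F, A)^c = (F^c, A)$ such that $F^c: A \to \mathrm{IF}(U)$ satisfies $F^c(a) = (F(a))^c$ when $a \in A$.

Definition 6.

The generalized B-complement of $(F, A) \in \mathcal{IFS}(U, E)$, where $B \subseteq A$, is the IFSS $(F, A)^{c_B} = (F^{c_B}, A)$ such that for all $a \in A$,

$$F^{c_B}(a) = \begin{cases} (F(a))^c, & \text{if } a \in B, \\ F(a), & \text{otherwise.} \end{cases}$$

Observe that $(F, A)^{c_\emptyset} = (F, A)$ and $(F, A)^{c_A} = (F, A)^c$. In other words, the generalized B-complement coincides with $(F, A)$ itself when B is the empty set, and it reduces to the complement if $B = A$.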

Finally, the scalar product of a fuzzy set and an intuitionistic fuzzy soft set is defined as follows:

Definition 7.

Let be an intuitionistic fuzzy soft set over and μ be a fuzzy set in A. The scalar product of μ and is the IFSS , with its approximate function given by:

where , , and .

3. Order Relations for Ranking IFVs

In the following, let us recall some well-known concepts about binary relations and orders.

Definition 8.

When A and B are sets, any $R \subseteq A \times B$ is a binary relation between A and B.

The fact $(a, b) \in R$ is usually denoted by $aRb$, and we say that a is R-related to b.

The domain of R is formed by the elements $a \in A$ that satisfy $aRb$ for at least one $b \in B$. Its range is formed by the elements $b \in B$ that satisfy $aRb$ for at least one $a \in A$.

If $A = B$, then R is called a binary relation on A. In this context, several properties may apply to R.

Definition 9.

When R is a binary relation on X, we say that R is:

- reflexive when $xRx$ for each $x \in X$.

- irreflexive when $xRx$ is false for each $x \in X$.

- symmetric when $xRy$ implies $yRx$, for each $x, y \in X$.

- asymmetric when $xRy$ implies that $yRx$ is false, for each $x, y \in X$.

- antisymmetric when $xRy$ and $yRx$ imply $x = y$, for each $x, y \in X$.

- transitive when $xRy$ and $yRz$ imply $xRz$, for each $x, y, z \in X$.

- complete when $x \ne y$ implies $xRy$ or $yRx$, for each $x, y \in X$.

- total or strongly complete, when either $xRy$ or $yRx$ holds true, for each $x, y \in X$.

Preorders are reflexive and transitive binary relations. Complete preorders are called weak orders. Antisymmetric weak orders are called linear or total orders, and antisymmetric preorders are called partial orders. A set A endowed with a partial order ⪯ is usually called a poset, and we denote it by $(A, \preceq)$. A poset $(A, \preceq)$ is called a chain if ⪯ is a total order.

Definition 10.

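Let $(A, \preceq_A)$, $(B, \preceq_B)$ be posets. A mapping $f: A \to B$ is called an order homomorphism when for all $x, y \in A$,

$$x \preceq_A y \implies f(x) \preceq_B f(y).$$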

An order homomorphism is an order isomorphism when f is a bijection, and its inverse is also an order homomorphism.

Definition 11.

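Let $(A, \preceq_A)$ and $(B, \preceq_B)$ be two posets. The lexicographic composition of $\preceq_A$ and $\preceq_B$ is the lexicographic order $\preceq$ on $A \times B$ defined by:

$$(a_1, b_1) \preceq (a_2, b_2) \iff a_1 \prec_A a_2 \ \text{or} \ (a_1 = a_2 \text{ and } b_1 \preceq_B b_2)$$

for all $(a_1, b_1), (a_2, b_2) \in A \times B$.

In the above definition, the notation $a_1 \prec_A a_2$ means $a_1 \preceq_A a_2$ and $a_1 \ne a_2$. The lexicographic order $\preceq$ is a partial order on $A \times B$.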

Xu and Yager [7] put forward a remarkable method for ranking IFVs. It uses some concepts that we proceed to recall:

Definition 12

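([36]). The mapping $s: L^* \to [-1, 1]$ defined by $s(A) = \mu_A - \nu_A$ for all $A = \langle \mu_A, \nu_A \rangle \in L^*$ is called the score function of IFVs.

Definition 13

([37]). The mapping $h: L^* \to [0, 1]$ defined by $h(A) = \mu_A + \nu_A$ for all $A = \langle \mu_A, \nu_A \rangle \in L^*$ is called the accuracy function of IFVs.

Moreover, we refer to the mapping $\pi: L^* \to [0, 1]$ defined by $\pi(A) = 1 - \mu_A - \nu_A$ for all $A = \langle \mu_A, \nu_A \rangle \in L^*$ as the hesitancy function of IFVs.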

IFVs can be compared according to the following rules:

Definition 14

([7]). When $A$ and $B$ are two IFVs:

- if $s(A) < s(B)$, we say that A is smaller than B, and we express this fact as $A <_{xy} B$;

- if $s(A) = s(B)$, then three options appear:

  - (1) when $h(A) = h(B)$, we say that A is equivalent to B, and we express this fact as $A \sim_{xy} B$;

  - (2) when $h(A) < h(B)$, we say that A is smaller than B, and we express this fact as $A <_{xy} B$;

  - (3) when $h(A) > h(B)$, we say that A is greater than B, and we express this fact as $A >_{xy} B$.
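To make the Xu–Yager rule concrete, here is a minimal Python sketch. It assumes IFVs are stored as plain `(mu, nu)` tuples; the function names are illustrative rather than taken from the paper, and a small tolerance (an assumption of this sketch) replaces exact equality of scores to absorb floating-point rounding.

```python
import math
from typing import Tuple

IFV = Tuple[float, float]  # (membership mu, non-membership nu), mu + nu <= 1
TOL = 1e-12  # tolerance for comparing floating-point scores and accuracies


def score(a: IFV) -> float:
    """Score function s(A) = mu_A - nu_A, valued in [-1, 1]."""
    mu, nu = a
    return mu - nu


def accuracy(a: IFV) -> float:
    """Accuracy function h(A) = mu_A + nu_A, valued in [0, 1]."""
    mu, nu = a
    return mu + nu


def xu_yager_leq(a: IFV, b: IFV) -> bool:
    """True if A <=_xy B: compare scores first, then break ties by accuracy."""
    sa, sb = score(a), score(b)
    if not math.isclose(sa, sb, rel_tol=0.0, abs_tol=TOL):
        return sa < sb
    return accuracy(a) <= accuracy(b) + TOL


A, B = (0.5, 0.3), (0.4, 0.2)  # hypothetical IFVs: equal scores, different accuracies
print(xu_yager_leq(B, A), xu_yager_leq(A, B))  # True False
```

Since the score and the accuracy together determine $\mu_A$ and $\nu_A$, two IFVs are tied under this comparison only when they coincide.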

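Definition 14 can be simply stated as a binary relation $\le_{xy}$ on the set $L^*$ of IFVs, namely:

$$A \le_{xy} B \iff s(A) < s(B) \ \text{or} \ \big(s(A) = s(B) \text{ and } h(A) \le h(B)\big).$$

Henceforth, $\le_{xy}$ is referred to as the Xu–Yager lexicographic order of IFVs. It is a linear order on $L^*$. Feng et al. [51] presented several alternative lexicographic orders: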

Definition 15

([51]). The binary relation on is given by:

for each .

It is easy to see that is equivalent to the Xu–Yager lexicographic order:

Proposition 1

([51]). When , it must be the case that:

Definition 16

([51]). The binary relation on is given by:

for each .

Definition 17

([51]). The binary relation on is given by:

for each .

Definition 18

([51]). The binary relation on is given by:

for each .

4. Minkowski Score Functions

This section introduces and investigates a novel technical construction. We need some preliminaries.

Definition 19

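([29]). The expectation score function of IFVs is the mapping $E: L^* \to [0, 1]$ defined by the expression:

$$E(A) = \frac{\mu_A - \nu_A + 1}{2}$$

for all $A = \langle \mu_A, \nu_A \rangle \in L^*$.

The next proposition recalls some of the basic properties of $E$: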

Proposition 2

([29]). The expectation score function satisfies:

- (1)

- ;

- (2)

- ;

- (3)

- $E(A)$ is increasing with respect to $\mu_A$; and

- (4)

- $E(A)$ is decreasing with respect to $\nu_A$.

Definition 20

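([35]). Let $A_j = \langle \mu_j, \nu_j \rangle \in L^*$ ($j = 1, 2, \ldots, n$). The simply weighted intuitionistic fuzzy averaging (SWIFA) operator of dimension n is the mapping $\mathrm{SWIFA}_w: (L^*)^n \to L^*$ defined by the expression:

$$\mathrm{SWIFA}_w(A_1, A_2, \ldots, A_n) = \left\langle \sum_{j=1}^{n} w_j \mu_j, \ \sum_{j=1}^{n} w_j \nu_j \right\rangle,$$

where $w = (w_1, w_2, \ldots, w_n)^T$ is a weight vector with the usual restrictions $w_j \ge 0$ ($j = 1, 2, \ldots, n$) and $\sum_{j=1}^{n} w_j = 1$.

In particular, the SWIFA operator with $w = (1/n, 1/n, \ldots, 1/n)^T$ is denoted by $\mathrm{SIFA}$, and it is referred to as the simple intuitionistic fuzzy averaging (SIFA) operator, i.e.,

$$\mathrm{SIFA}(A_1, A_2, \ldots, A_n) = \left\langle \frac{1}{n} \sum_{j=1}^{n} \mu_j, \ \frac{1}{n} \sum_{j=1}^{n} \nu_j \right\rangle.$$

This simple but useful aggregation operator was introduced by Xu and Yager [52] in their analysis of intuitionistic fuzzy preference relations.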
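A minimal sketch of the SWIFA and SIFA operators follows, assuming that SWIFA takes the componentwise weighted arithmetic mean of the membership and non-membership degrees. The function names are hypothetical and inputs are `(mu, nu)` tuples; the weight check uses `math.isclose` so that weights such as `1/3` are accepted despite rounding.

```python
import math
from typing import Sequence, Tuple

IFV = Tuple[float, float]  # (mu, nu)


def swifa(values: Sequence[IFV], weights: Sequence[float]) -> IFV:
    """Componentwise weighted arithmetic mean of membership and non-membership degrees."""
    if not values or len(values) != len(weights):
        raise ValueError("need at least one IFV and exactly one weight per IFV")
    if any(w < 0 for w in weights) or not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must be non-negative and sum to 1")
    mu = sum(w * m for w, (m, _) in zip(weights, values))
    nu = sum(w * n for w, (_, n) in zip(weights, values))
    # mu + nu = sum_j w_j (mu_j + nu_j) <= 1, so the result is again an IFV.
    return (mu, nu)


def sifa(values: Sequence[IFV]) -> IFV:
    """SIFA: the SWIFA operator with equal weights 1/n."""
    n = len(values)
    if n == 0:
        raise ValueError("need at least one IFV")
    return swifa(values, [1.0 / n] * n)
```

For instance, `swifa([(0.6, 0.2), (0.3, 0.5)], [0.25, 0.75])` returns approximately `(0.375, 0.425)`.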

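Let $A = \langle \mu_A, \nu_A \rangle$ and $B = \langle \mu_B, \nu_B \rangle$ be two IFVs. Then, the normalized Minkowski distance between them is defined as follows:

$$d_p(A, B) = \left(\frac{|\mu_A - \mu_B|^p + |\nu_A - \nu_B|^p}{2}\right)^{1/p},$$

where $p \ge 1$.

If $p = 1$, the Minkowski distance reduces to the normalized Hamming distance between two IFVs given by:

$$d_1(A, B) = \frac{|\mu_A - \mu_B| + |\nu_A - \nu_B|}{2}.$$

If $p = 2$, the Minkowski distance reduces to the normalized Euclidean distance between two IFVs given by:

$$d_2(A, B) = \sqrt{\frac{(\mu_A - \mu_B)^2 + (\nu_A - \nu_B)^2}{2}}.$$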
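These distances translate directly into code. The sketch below assumes the two-component normalized form $d_p(A, B) = \big((|\mu_A - \mu_B|^p + |\nu_A - \nu_B|^p)/2\big)^{1/p}$ with $p \ge 1$, which is the form under which $d_1(A, \langle 0, 1 \rangle)$ equals the expectation score; the function names are illustrative.

```python
from typing import Tuple

IFV = Tuple[float, float]  # (mu, nu)


def minkowski_distance(a: IFV, b: IFV, p: float = 2.0) -> float:
    """Normalized Minkowski distance d_p(A, B) between two IFVs, for p >= 1."""
    if p < 1:
        raise ValueError("p must be at least 1")
    (mu_a, nu_a), (mu_b, nu_b) = a, b
    total = abs(mu_a - mu_b) ** p + abs(nu_a - nu_b) ** p
    return (total / 2.0) ** (1.0 / p)


def hamming_distance(a: IFV, b: IFV) -> float:
    """d_1: the normalized Hamming distance."""
    return minkowski_distance(a, b, p=1.0)


def euclidean_distance(a: IFV, b: IFV) -> float:
    """d_2: the normalized Euclidean distance."""
    return minkowski_distance(a, b, p=2.0)
```

The division by 2 is what makes the distance normalized: the two extreme IFVs $\langle 1, 0 \rangle$ and $\langle 0, 1 \rangle$ are at distance exactly 1 for every p.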

Definition 21

([44]). Let $A = \langle \mu_A, \nu_A \rangle \in L^*$. The ideal positive degree of A is defined as:

The ideal positive degree is a useful measure for evaluating the preference grade of a given IFV. From a geometric point of view, it is easy to observe that the closer an IFV A is to the positive ideal IFV $\langle 1, 0 \rangle$, the better A is among all IFVs in $L^*$, and so the greater the ideal positive degree that should be assigned to it. As pointed out by Xing et al. [44],

This shows that the positive ideal IFV $\langle 1, 0 \rangle$ is the most preferable IFV in $L^*$, and its ideal positive degree reaches the maximum value.

It is worth noting that the ideal positive degree based on Euclidean distances might fail to differentiate IFVs, since different IFVs may have the same ideal positive degree. The next example illustrates this possibility:

Example 1.

Consider the IFVs:

Although A and B are different, we cannot tell A apart from B if we only rely on their ideal positive degrees.

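Dually, a simpler and more general measure of IFVs arises if we directly consider the Minkowski distance between the negative ideal IFV $\langle 0, 1 \rangle$ and a given IFV in $L^*$.

Definition 22.

Let $p \ge 1$. The Minkowski score function of IFVs is the mapping $M_p: L^* \to [0, 1]$ defined as:

$$M_p(A) = d_p(A, \langle 0, 1 \rangle) = \left(\frac{\mu_A^p + (1 - \nu_A)^p}{2}\right)^{1/p} \qquad (10)$$

for all $A = \langle \mu_A, \nu_A \rangle \in L^*$.

If $p = 1$, we have:

$$M_1(A) = \frac{\mu_A + 1 - \nu_A}{2} = E(A).$$

Thus, the Minkowski score function reduces to the expectation score function when $p = 1$. In other words, the expectation score of an IFV A coincides with the normalized Hamming distance between A and $\langle 0, 1 \rangle$.

If $p = 2$, then Equation (10) reduces to the following form:

$$M_2(A) = \sqrt{\frac{\mu_A^2 + (1 - \nu_A)^2}{2}}, \qquad (11)$$

which is called the Euclidean score function of IFVs.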
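Equations (10) and (11) can be sketched as follows, assuming $M_p(A) = \big((\mu_A^p + (1 - \nu_A)^p)/2\big)^{1/p}$, the normalized two-component Minkowski distance from A to $\langle 0, 1 \rangle$. A helper `expectation_score` is included so that the reduction $M_1 = E$ can be checked numerically; all names are hypothetical.

```python
from typing import Tuple

IFV = Tuple[float, float]  # (mu, nu)


def expectation_score(a: IFV) -> float:
    """E(A) = (mu_A - nu_A + 1) / 2."""
    mu, nu = a
    return (mu - nu + 1.0) / 2.0


def minkowski_score(a: IFV, p: float = 2.0) -> float:
    """M_p(A): normalized Minkowski distance from A to the negative ideal IFV <0, 1>."""
    if p < 1:
        raise ValueError("p must be at least 1")
    mu, nu = a
    return ((mu ** p + (1.0 - nu) ** p) / 2.0) ** (1.0 / p)


def euclidean_score(a: IFV) -> float:
    """M_2(A), the Euclidean score function of Equation (11)."""
    return minkowski_score(a, p=2.0)
```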

As shown below, the Minkowski score function overcomes the limitation of the ideal positive degree exposed by Example 1.

Example 2.

In continuation of Example 1, the application of Equation (11) produces:

and:

therefore . Thus, we conclude that is superior to if we compare them by the Euclidean score function.

It may also happen that two IFVs cannot be distinguished because they have the same Minkowski score. However, we can easily address this issue by adjusting the parameter p in the Minkowski score function, as the following example illustrates.

Example 3.

Let us consider two IFVs:

and:

Since:

we cannot distinguish A from B with the expectation score function of IFVs. Nevertheless, we can tell them apart if we resort to a Minkowski score function with . For instance, by Equation (11), we have:

and:

Since , we can deduce that is superior to according to the Euclidean score function of IFVs. Similarly, by setting in Equation (10), we have:

and:

Since , we also deduce that A is superior to B based on the Minkowski score function with .
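The tie-breaking effect of the parameter p can be reproduced with any pair of IFVs that share an expectation score. The pair below is hypothetical (it is not the pair used in Example 3): both IFVs have $E = 0.5$, yet $M_2$ and $M_3$ separate them.

```python
from typing import Tuple

IFV = Tuple[float, float]  # (mu, nu)


def minkowski_score(a: IFV, p: float) -> float:
    """M_p(A) = ((mu_A^p + (1 - nu_A)^p) / 2)^(1/p), with p >= 1."""
    mu, nu = a
    return ((mu ** p + (1.0 - nu) ** p) / 2.0) ** (1.0 / p)


# Hypothetical IFVs with equal expectation scores E(A) = E(B) = 0.5.
A = (0.4, 0.4)
B = (0.2, 0.2)

for p in (1, 2, 3):
    # p = 1 gives a tie; p = 2 and p = 3 rank B above A.
    print(p, round(minkowski_score(A, p), 4), round(minkowski_score(B, p), 4))
```

Here $\mu + (1 - \nu) = 1$ for both IFVs. For $p > 1$, the power mean of $\mu$ and $1 - \nu$ with a fixed sum grows with the gap $(1 - \nu) - \mu = \pi$, so the IFV with the larger hesitancy degree (B, with $\pi = 0.6$ against $0.2$ for A) receives the higher score.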

The following result indicates that the Euclidean score function and the ideal positive degree can be seen as a pair of dual concepts:

Proposition 3.

Let be the Minkowski score function and . Then:

where is the complement of A.

Proof.

By Equation (11), we have

and we can also get the following result according to Definition 21 that:

where is the complement of A. Then, it is easy to deduce that:

which completes the proof. □

It is interesting to observe that the expectation and Euclidean score functions are not equivalent for the purpose of comparing IFVs: they may rank the same pair of IFVs in opposite orders. This can be illustrated by an example as follows.

Example 4.

We consider the IFVs:

and:

It is clear that . Thus, we deduce that is superior to if we compare them according to the expectation score function.

On the other hand, by Equation (11), we have:

and:

Obviously, . Hence, we deduce that is inferior to if we compare them according to the Euclidean score function.
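The same reversal is easy to reproduce numerically. The pair below is hypothetical (it is not the pair of Example 4); the score functions are written out directly from their definitions as $E(A) = (\mu_A - \nu_A + 1)/2$ and $M_2(A) = \sqrt{(\mu_A^2 + (1 - \nu_A)^2)/2}$.

```python
from typing import Tuple

IFV = Tuple[float, float]  # (mu, nu)


def expectation_score(a: IFV) -> float:
    """E(A) = (mu_A - nu_A + 1) / 2."""
    mu, nu = a
    return (mu - nu + 1.0) / 2.0


def euclidean_score(a: IFV) -> float:
    """M_2(A) = sqrt((mu_A^2 + (1 - nu_A)^2) / 2)."""
    mu, nu = a
    return ((mu ** 2 + (1.0 - nu) ** 2) / 2.0) ** 0.5


# Hypothetical IFVs on which the two score functions disagree.
A = (0.5, 0.4)   # E(A) = 0.55,  M_2(A) = sqrt(0.305)   ~ 0.552
B = (0.1, 0.05)  # E(B) = 0.525, M_2(B) = sqrt(0.45625) ~ 0.675

print(expectation_score(A) > expectation_score(B))  # True: E prefers A
print(euclidean_score(A) < euclidean_score(B))      # True: M_2 prefers B
```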

It is worth noting that the Minkowski score function satisfies some reasonable properties as shown below:

Proposition 4.

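Let $M_p$ be the Minkowski score function. Then:

$$M_p(A) = 0 \iff A = \langle 0, 1 \rangle$$

for all $A = \langle \mu_A, \nu_A \rangle \in L^*$.

Proof.

By Definition 22 and Equation (10), we have $p \ge 1$ and:

$$M_p(A) = \left(\frac{\mu_A^p + (1 - \nu_A)^p}{2}\right)^{1/p}.$$

If $M_p(A) = 0$, then $\mu_A^p + (1 - \nu_A)^p = 0$. Note also that $\mu_A^p \ge 0$ and $(1 - \nu_A)^p \ge 0$. Thus, $\mu_A = 0$ and $1 - \nu_A = 0$. That is, $A = \langle 0, 1 \rangle$. Conversely, assume that $A = \langle 0, 1 \rangle$. Then, it is easy to see that $M_p(A) = 0$. □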

Proposition 5.

Let $M_p$ be the Minkowski score function. Then:

$$M_p(A) = 1 \iff A = \langle 1, 0 \rangle$$

for all $A = \langle \mu_A, \nu_A \rangle \in L^*$.

Proof.

The proof is similar to that of Proposition 4 and thus omitted. □

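The following result shows that the Minkowski score function is an order homomorphism from the lattice $(L^*, \le_{L^*})$ to the lattice $([0, 1], \le)$.

Proposition 6.

Let $M_p$ be the Minkowski score function. Then:

$$A \le_{L^*} B \implies M_p(A) \le M_p(B)$$

for all $A = \langle \mu_A, \nu_A \rangle$ and $B = \langle \mu_B, \nu_B \rangle$ in $L^*$.

Proof.

Let $A$ and $B$ be two IFVs with $A \le_{L^*} B$. By Equation (1), we have $\mu_A \le \mu_B$ and $\nu_A \ge \nu_B$. Thus, for every $p \ge 1$, it follows that $\mu_A^p \le \mu_B^p$ and $(1 - \nu_A)^p \le (1 - \nu_B)^p$. This implies that:

$$M_p(A) = \left(\frac{\mu_A^p + (1 - \nu_A)^p}{2}\right)^{1/p} \le \left(\frac{\mu_B^p + (1 - \nu_B)^p}{2}\right)^{1/p} = M_p(B),$$

which completes the proof. □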

It is worth noting that the converse of the implication in Proposition 6 does not hold as shown below:

Example 5.

We consider the IFVs:

and:

Thus, it is clear that . Nevertheless, it should be noted that does not hold since .

Proposition 7.

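Let $M_p$ be the Minkowski score function. Then, $M_p(A) \in [0, 1]$ for all $A \in L^*$.

Proof.

Let $A \in L^*$. By the definition of $\le_{L^*}$, it is easy to see that:

$$\langle 0, 1 \rangle \le_{L^*} A \le_{L^*} \langle 1, 0 \rangle.$$

From Proposition 6, it follows that:

$$M_p(\langle 0, 1 \rangle) \le M_p(A) \le M_p(\langle 1, 0 \rangle).$$

Note also that $M_p(\langle 0, 1 \rangle) = 0$ and $M_p(\langle 1, 0 \rangle) = 1$. Thus, we have $M_p(A) \in [0, 1]$. □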

Proposition 8.

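Let $M_p$ be the Minkowski score function and $A = \langle a, 1 - a \rangle$ with $a \in [0, 1]$. Then, $M_p(A) = a$.

Proof.

Let $A = \langle a, 1 - a \rangle$ with $a \in [0, 1]$. Thus, we have $1 - \nu_A = a$. From Definition 22 and Equation (10), it follows that:

$$M_p(A) = \left(\frac{a^p + a^p}{2}\right)^{1/p} = (a^p)^{1/p} = a,$$

completing the proof. □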

Proposition 9.

The Minkowski score function $M_p: L^* \to [0, 1]$ is surjective.

Proof.

For each $a \in [0, 1]$, let us take the IFV $A = \langle a, 1 - a \rangle$ in $L^*$. From Proposition 8, it follows that $M_p(A) = a$. This shows that the Minkowski score function is a surjection. □

Proposition 10.

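Let $M_p$ be the Minkowski score function and $A = \langle \mu_A, \nu_A \rangle \in L^*$. Then, $M_p(A)$ is non-decreasing with respect to $\mu_A$.

Proof.

By Definition 22 and Equation (10), we have $p \ge 1$ and:

$$M_p(A) = \left(\frac{\mu_A^p + (1 - \nu_A)^p}{2}\right)^{1/p}.$$

By calculation, the partial derivative of $M_p(A)$ with respect to $\mu_A$ (wherever $\mu_A^p + (1 - \nu_A)^p > 0$) is as follows:

$$\frac{\partial M_p(A)}{\partial \mu_A} = \frac{1}{2}\,\mu_A^{p-1}\left(\frac{\mu_A^p + (1 - \nu_A)^p}{2}\right)^{\frac{1}{p} - 1} \ge 0.$$

Hence, we conclude that $M_p(A)$ is non-decreasing with respect to $\mu_A$. □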

Proposition 11.

Let $M_p$ be the Minkowski score function and $A = \langle \mu_A, \nu_A \rangle \in L^*$. Then, $M_p(A)$ is non-increasing with respect to $\nu_A$.

Proof.

The proof is similar to that of Proposition 10 and thus omitted. □
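Propositions 4–11 can be spot-checked numerically on a grid of IFVs. The sketch below assumes $M_p(A) = \big((\mu_A^p + (1 - \nu_A)^p)/2\big)^{1/p}$; the grid resolution, tolerances, and function names are arbitrary choices made for illustration. It checks the range, the values at $\langle 0, 1 \rangle$ and $\langle 1, 0 \rangle$, Proposition 8, and monotonicity with respect to the order of Equation (1), which implies Propositions 10 and 11 on the grid. A passing check is evidence, not a proof.

```python
import itertools
from typing import List, Tuple

IFV = Tuple[float, float]  # (mu, nu)


def minkowski_score(a: IFV, p: float) -> float:
    """M_p(A) = ((mu_A^p + (1 - nu_A)^p) / 2)^(1/p), with p >= 1."""
    mu, nu = a
    return ((mu ** p + (1.0 - nu) ** p) / 2.0) ** (1.0 / p)


def ifv_grid(steps: int) -> List[IFV]:
    """All IFVs <i/steps, j/steps> with i + j <= steps."""
    return [(i / steps, j / steps)
            for i in range(steps + 1) for j in range(steps + 1 - i)]


def lattice_leq(a: IFV, b: IFV) -> bool:
    """Order of Equation (1): mu_A <= mu_B and nu_A >= nu_B."""
    return a[0] <= b[0] and a[1] >= b[1]


def check_minkowski_properties(p: float, steps: int = 20, tol: float = 1e-12) -> bool:
    grid = ifv_grid(steps)
    # Proposition 7: every score lies in [0, 1].
    in_range = all(-tol <= minkowski_score(a, p) <= 1.0 + tol for a in grid)
    # Propositions 4 and 5 ("if" direction): scores 0 and 1 at <0, 1> and <1, 0>.
    extremes = (minkowski_score((0.0, 1.0), p) == 0.0
                and abs(minkowski_score((1.0, 0.0), p) - 1.0) <= tol)
    # Proposition 8: M_p(<a, 1 - a>) = a.
    diagonal = all(abs(minkowski_score((k / steps, 1.0 - k / steps), p) - k / steps) <= 1e-9
                   for k in range(steps + 1))
    # Proposition 6 on the grid; it also yields Propositions 10 and 11 there.
    monotone = all(minkowski_score(a, p) <= minkowski_score(b, p) + tol
                   for a, b in itertools.product(grid, repeat=2) if lattice_leq(a, b))
    return in_range and extremes and diagonal and monotone
```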

5. Minkowski Weighted Score Functions

This section studies a generalization of the Minkowski score functions. We show that it preserves a good deal of their attractive properties while gaining generality.

Definition 23.

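Let $p \ge 1$ and $\alpha \in [0, 1]$ be the attitudinal parameter. The Minkowski weighted score function of IFVs is the mapping $M_p^{\alpha}: L^* \to [0, 1]$ defined as:

$$M_p^{\alpha}(A) = \left((1 - \alpha)\,\mu_A^p + \alpha\,(1 - \nu_A)^p\right)^{1/p} \qquad (12)$$

for all $A = \langle \mu_A, \nu_A \rangle \in L^*$.

If $p = 1$, then Equation (12) reduces to the following form:

$$M_1^{\alpha}(A) = (1 - \alpha)\,\mu_A + \alpha\,(1 - \nu_A), \qquad (13)$$

which is called the Hamming weighted score function of IFVs.

If $p = 2$, then Equation (12) reduces to the following form:

$$M_2^{\alpha}(A) = \sqrt{(1 - \alpha)\,\mu_A^2 + \alpha\,(1 - \nu_A)^2}, \qquad (14)$$

which is called the Euclidean weighted score function of IFVs.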
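A sketch of the Minkowski weighted score follows, assuming Equation (12) has the form $M_p^{\alpha}(A) = \big((1 - \alpha)\mu_A^p + \alpha(1 - \nu_A)^p\big)^{1/p}$. This is the form consistent with the results of this section: $\alpha = 0.5$ recovers $M_p$, and the score does not decrease as $\alpha$ grows. The function name is hypothetical.

```python
from typing import Tuple

IFV = Tuple[float, float]  # (mu, nu)


def minkowski_weighted_score(a: IFV, p: float = 2.0, alpha: float = 0.5) -> float:
    """M_p^alpha(A) = ((1 - alpha) * mu^p + alpha * (1 - nu)^p)^(1/p).

    alpha = 0 is the most pessimistic attitude (score mu_A), alpha = 1 the most
    optimistic (score 1 - nu_A), and alpha = 0.5 recovers the Minkowski score M_p.
    """
    if p < 1 or not 0.0 <= alpha <= 1.0:
        raise ValueError("need p >= 1 and alpha in [0, 1]")
    mu, nu = a
    return ((1.0 - alpha) * mu ** p + alpha * (1.0 - nu) ** p) ** (1.0 / p)
```

For example, with the IFV `(0.3, 0.5)` and `p=1`, the weighted score moves linearly from 0.3 at `alpha=0` to 0.5 at `alpha=1`.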

Proposition 12.

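Let $M_p^{\alpha}$ be the Minkowski weighted score function and $A = \langle \mu_A, \nu_A \rangle \in L^*$. Then, we have:

- (1) $M_p^{0}(A) = \mu_A$;

- (2) $M_p^{0.5}(A) = M_p(A)$;

- (3) $M_p^{1}(A) = 1 - \nu_A$.

Proof.

By Definition 23 and Equation (12), we have:

$$M_p^{\alpha}(A) = \left(w_1\,\mu_A^p + w_2\,(1 - \nu_A)^p\right)^{1/p},$$

where $w_1 = 1 - \alpha$ and $w_2 = \alpha$.

If $\alpha = 0$, then $w_1 = 1$ and $w_2 = 0$. It follows that:

$$M_p^{0}(A) = \left(\mu_A^p\right)^{1/p} = \mu_A.$$

If $\alpha = 0.5$, then $w_1 = 0.5$ and $w_2 = 0.5$. Thus, according to Definition 22 and Equation (10), we have:

$$M_p^{0.5}(A) = \left(\frac{\mu_A^p + (1 - \nu_A)^p}{2}\right)^{1/p} = M_p(A).$$

Similarly, if $\alpha = 1$, then $w_1 = 0$ and $w_2 = 1$. Thus, it is easy to deduce that

$$M_p^{1}(A) = \left((1 - \nu_A)^p\right)^{1/p} = 1 - \nu_A.$$

This completes the proof. □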

Next, let us examine how the Minkowski weighted score function is bounded as the attitudinal parameter α varies.

Proposition 13.

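Let $M_p^{\alpha}$ be the Minkowski weighted score function. Then:

$$\mu_A \le M_p^{\alpha}(A) \le 1 - \nu_A$$

for all $A = \langle \mu_A, \nu_A \rangle \in L^*$ and $\alpha \in [0, 1]$.

Proof.

By Definition 23 and Equation (12), we have $p \ge 1$, $\alpha \in [0, 1]$, and:

$$M_p^{\alpha}(A) = \left((1 - \alpha)\,\mu_A^p + \alpha\,(1 - \nu_A)^p\right)^{1/p}.$$

By calculation, the partial derivative of $M_p^{\alpha}(A)$ with respect to $\alpha$ (wherever the base of the outer power is positive) is as follows:

$$\frac{\partial M_p^{\alpha}(A)}{\partial \alpha} = \frac{1}{p}\left((1 - \alpha)\,\mu_A^p + \alpha\,(1 - \nu_A)^p\right)^{\frac{1}{p} - 1}\left((1 - \nu_A)^p - \mu_A^p\right).$$

Note also that $(1 - \nu_A)^p - \mu_A^p \ge 0$ since $\mu_A \le 1 - \nu_A$. Thus, it follows that $\partial M_p^{\alpha}(A) / \partial \alpha \ge 0$. Hence, we conclude that $M_p^{\alpha}(A)$ is non-decreasing with respect to $\alpha$, so $M_p^{\alpha}(A)$ attains its least value when $\alpha = 0$ and its largest value when $\alpha = 1$. According to Proposition 12, we can get that:

$$\mu_A = M_p^{0}(A) \le M_p^{\alpha}(A) \le M_p^{1}(A) = 1 - \nu_A,$$

which completes the proof. □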

The above proof also establishes the following proposition.

Proposition 14.

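Let $M_p^{\alpha_1}$ and $M_p^{\alpha_2}$ be two Minkowski weighted score functions with $\alpha_1 \le \alpha_2$. Then, $M_p^{\alpha_1}(A) \le M_p^{\alpha_2}(A)$ for all $A \in L^*$.

Proof.

Note that $M_p^{\alpha}(A)$ is non-decreasing with respect to $\alpha$, as shown in the proof of Proposition 13. This directly implies that $M_p^{\alpha_1}(A) \le M_p^{\alpha_2}(A)$ if $\alpha_1 \le \alpha_2$, which completes the proof. □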

Remark 1.

In view of Propositions 12–14, it is worth noting that the attitudinal parameter α can be used to capture a decision maker’s general attitude toward the information expressed in terms of IFVs. Given an IFV $A$, its Minkowski weighted score $M_p^{\alpha}(A)$ does not decrease when the attitudinal parameter α increases. The least Minkowski weighted score function is obtained when $\alpha = 0$, which corresponds to the most pessimistic attitude. The largest Minkowski weighted score function is obtained when $\alpha = 1$, which corresponds to the most optimistic attitude. In the middle lies the usual Minkowski score function $M_p$, obtained by taking $\alpha = 0.5$, which corresponds to a neutral attitude.

It is interesting to see that we might be able to express the newly defined Minkowski weighted score function in terms of the previously known functions.

Proposition 15.

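Let $A = \langle \mu_A, \nu_A \rangle \in L^*$. Then, $M_1^{\alpha}(A) = \mu_A + \alpha\,\pi(A)$.

Proof.

Let $A = \langle \mu_A, \nu_A \rangle \in L^*$. By definition, $\pi(A) = 1 - \mu_A - \nu_A$. According to Equation (13), we have:

$$M_1^{\alpha}(A) = (1 - \alpha)\,\mu_A + \alpha\,(1 - \nu_A) = \mu_A + \alpha\,(1 - \mu_A - \nu_A) = \mu_A + \alpha\,\pi(A).$$

This completes the proof. □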
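The results on the attitudinal parameter can likewise be spot-checked for a given IFV by sweeping α over a grid. The sketch below assumes Equation (12) reads $M_p^{\alpha}(A) = \big((1 - \alpha)\mu_A^p + \alpha(1 - \nu_A)^p\big)^{1/p}$; the grid size, tolerances, and names are illustrative. The identity checked for $p = 1$, namely $M_1^{\alpha}(A) = \mu_A + \alpha\,\pi(A)$, follows from expanding $(1 - \alpha)\mu_A + \alpha(1 - \nu_A)$.

```python
from typing import Tuple

IFV = Tuple[float, float]  # (mu, nu)


def weighted_score(a: IFV, p: float, alpha: float) -> float:
    """Assumed form of M_p^alpha(A) = ((1 - alpha) mu^p + alpha (1 - nu)^p)^(1/p)."""
    mu, nu = a
    return ((1.0 - alpha) * mu ** p + alpha * (1.0 - nu) ** p) ** (1.0 / p)


def check_attitude_properties(a: IFV, p: float, n_alpha: int = 50, tol: float = 1e-12) -> bool:
    mu, nu = a
    alphas = [k / n_alpha for k in range(n_alpha + 1)]
    scores = [weighted_score(a, p, al) for al in alphas]
    # Endpoints alpha = 0 and alpha = 1 give mu_A and 1 - nu_A.
    ends_ok = abs(scores[0] - mu) < 1e-9 and abs(scores[-1] - (1.0 - nu)) < 1e-9
    # Non-decreasing in alpha and bounded by mu_A and 1 - nu_A.
    monotone = all(s1 <= s2 + tol for s1, s2 in zip(scores, scores[1:]))
    bounded = all(mu - tol <= s <= 1.0 - nu + tol for s in scores)
    # For p = 1 the score equals mu_A + alpha * pi(A).
    pi = 1.0 - mu - nu
    hamming_ok = all(abs(weighted_score(a, 1.0, al) - (mu + al * pi)) < 1e-12 for al in alphas)
    return ends_ok and monotone and bounded and hamming_ok
```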

Proposition 16.

Let . Then, .

Proof.

By the definition of and , we have and . Then, according to Equation (14), we have:

which completes the proof. □

It can be seen that the Minkowski weighted score function satisfies some reasonable properties as shown below.

Proposition 17.

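Let $M_p^{\alpha}$ be the Minkowski weighted score function. Then, $M_p^{\alpha}(A) \in [0, 1]$ for all $A \in L^*$.

Proof.

Let $A = \langle \mu_A, \nu_A \rangle \in L^*$. By Proposition 13, we have:

$$0 \le \mu_A \le M_p^{\alpha}(A) \le 1 - \nu_A \le 1$$

according to the definition of IFVs. That is, the least possible value of $\mu_A$ is zero, and the largest possible value of $1 - \nu_A$ is one. Hence, we conclude that $M_p^{\alpha}(A) \in [0, 1]$ for all $A \in L^*$. □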

Proposition 18.

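Let $M_p^{\alpha}$ be the Minkowski weighted score function and $A = \langle a, 1 - a \rangle$ with $a \in [0, 1]$. Then, $M_p^{\alpha}(A) = a$.

Proof.

Let $A = \langle a, 1 - a \rangle$ with $a \in [0, 1]$. Thus, we have $1 - \nu_A = a$. From Definition 23 and Equation (12), it follows that:

$$M_p^{\alpha}(A) = \left((1 - \alpha)\,a^p + \alpha\,a^p\right)^{1/p} = (a^p)^{1/p} = a,$$

completing the proof. □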

Proposition 19.

The Minkowski weighted score function $M_p^{\alpha}: L^* \to [0, 1]$ is surjective.

Proof.

For each $a \in [0, 1]$, let us take the IFV $A = \langle a, 1 - a \rangle$ in $L^*$. From Proposition 18, it follows that $M_p^{\alpha}(A) = a$. This shows that the Minkowski weighted score function is a surjection. □

Proposition 20.

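Let $A = \langle \mu_A, \nu_A \rangle \in L^*$. The Minkowski weighted score $M_p^{\alpha}(A)$ is non-decreasing with respect to $\mu_A$.

Proof.

By Definition 23 and Equation (12), we have $p \ge 1$, $\alpha \in [0, 1]$, and:

$$M_p^{\alpha}(A) = \left((1 - \alpha)\,\mu_A^p + \alpha\,(1 - \nu_A)^p\right)^{1/p}.$$

By calculation, the partial derivative of $M_p^{\alpha}(A)$ with respect to $\mu_A$ (wherever the base of the outer power is positive) is as follows:

$$\frac{\partial M_p^{\alpha}(A)}{\partial \mu_A} = (1 - \alpha)\,\mu_A^{p-1}\left((1 - \alpha)\,\mu_A^p + \alpha\,(1 - \nu_A)^p\right)^{\frac{1}{p} - 1} \ge 0.$$

Hence, we conclude that $M_p^{\alpha}(A)$ is non-decreasing with respect to $\mu_A$. □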

Proposition 21.

Let $A = \langle \mu_A, \nu_A \rangle \in L^*$. The Minkowski weighted score $M_p^{\alpha}(A)$ is non-increasing with respect to $\nu_A$.

Proof.

The proof is similar to that of Proposition 20 and thus omitted. □

6. A New Intuitionistic Fuzzy MADM Method

In this section, we propose a new approach to intuitionistic fuzzy MADM based on the SWIFA operator and the Minkowski weighted score function.

The components of our model are as follows.

We denote a general set of options by . The collection of relevant characteristics is captured by , and we split it into the disjoint subsets and that respectively comprise benefit and cost attributes. A committee of experts evaluates each alternative with respect to the attributes in A. Their evaluations produce an IFSS , and the evaluation of option with respect to the attribute is the following IFV:

for every possible and .

6.1. Wei’s Method to Determine Weight Vector of Attributes

In [34], Wei put forward a procedure for solving intuitionistic fuzzy MADM problems with partially known or completely unknown information about attribute weights. We proceed to describe the fundamental constituents of this approach.

Definition 24 ([53]).

The normalized Hamming distance between is:

Wei [34] proposed a maximizing deviation method that determines the weight vector . The method selects the weight vector w that maximizes the total weighted deviation among all alternatives with respect to all attributes. This total deviation is defined by:

To achieve this goal, the following single-objective programming model is generated:

where:

and H captures the weight information that is known.

The solution of problem (M1) gives a vector w that can act as the weight vector for solving the MADM.
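When the known weight information H consists only of interval bounds l_j ≤ w_j ≤ u_j, model (M1) is a linear program over a box intersected with the constraint Σ w_j = 1, and a greedy fill solves it exactly, as in the fractional knapsack problem. The sketch below assumes that restricted form of H and takes as input the per-attribute totals D_j of the objective (the deviation of attribute j summed over all pairs of alternatives). General linear constraints in H, such as w_1 ≥ w_2, would instead require an LP solver. The function name is hypothetical.

```python
from typing import List, Sequence


def bounded_max_deviation_weights(
    dev: Sequence[float], lower: Sequence[float], upper: Sequence[float]
) -> List[float]:
    """Maximize sum_j dev[j] * w[j] subject to sum_j w[j] = 1 and lower[j] <= w[j] <= upper[j].

    Assumption: H contains only interval bounds, so filling the attributes with the
    largest deviations first (fractional knapsack) is optimal.
    """
    if any(lo > hi for lo, hi in zip(lower, upper)):
        raise ValueError("each lower bound must not exceed its upper bound")
    if sum(lower) > 1.0 + 1e-12 or sum(upper) < 1.0 - 1e-12:
        raise ValueError("the bounds admit no normalized weight vector")
    w = list(lower)
    remaining = 1.0 - sum(lower)
    # Give the leftover mass to the most discriminating attributes first.
    for j in sorted(range(len(dev)), key=lambda k: dev[k], reverse=True):
        if remaining <= 0.0:
            break
        step = min(upper[j] - w[j], remaining)
        w[j] += step
        remaining -= step
    return w


if __name__ == "__main__":
    # Hypothetical deviations D_j and bounds (not the constraints used in Section 7.2).
    print(bounded_max_deviation_weights([3.0, 5.0, 1.0], [0.2, 0.1, 0.1], [0.5, 0.4, 0.6]))
```

For the hypothetical input, the attribute with the largest deviation is raised to its upper bound 0.4, the next one to its upper bound 0.5, and the third stays at its lower bound 0.1 (up to floating-point rounding).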

Further, when information about the attribute weights is completely lacking, the following alternative programming model can be used:

The following Lagrange function is helpful to solve this model:

Normalizing the resulting attribute weights yields:

for .
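In the completely unknown case, the Lagrange conditions give w_j proportional to D_j, the total distance between all pairs of alternatives under attribute j, so the normalized weights are w_j = D_j / Σ_k D_k. A minimal sketch follows. It assumes the three-term normalized Hamming distance ½(|μ_1 − μ_2| + |ν_1 − ν_2| + |π_1 − π_2|) with π = 1 − μ − ν for Definition 24, and the function names are ours.

```python
from typing import List, Tuple

IFV = Tuple[float, float]  # (membership mu, non-membership nu)


def hamming_distance(a: IFV, b: IFV) -> float:
    """Normalized Hamming distance including the hesitancy degree pi = 1 - mu - nu.

    Assumption: the three-term form is used; some authors drop the pi term.
    """
    pi_a = 1.0 - a[0] - a[1]
    pi_b = 1.0 - b[0] - b[1]
    return 0.5 * (abs(a[0] - b[0]) + abs(a[1] - b[1]) + abs(pi_a - pi_b))


def attribute_deviations(matrix: List[List[IFV]]) -> List[float]:
    """D_j: sum of distances over all ordered pairs of alternatives under attribute j."""
    m, n = len(matrix), len(matrix[0])
    return [
        sum(hamming_distance(matrix[i][j], matrix[k][j]) for i in range(m) for k in range(m))
        for j in range(n)
    ]


def max_deviation_weights(matrix: List[List[IFV]]) -> List[float]:
    """Normalized closed-form weights w_j = D_j / sum_k D_k (weights completely unknown)."""
    dev = attribute_deviations(matrix)
    total = sum(dev)
    if total == 0.0:
        # All alternatives coincide on every attribute: fall back to equal weights (our choice).
        return [1.0 / len(dev)] * len(dev)
    return [d / total for d in dev]


if __name__ == "__main__":
    # Hypothetical 3 x 2 decision matrix (not taken from Table 1).
    example = [
        [(0.5, 0.3), (0.2, 0.7)],
        [(0.5, 0.3), (0.6, 0.1)],
        [(0.5, 0.3), (0.4, 0.4)],
    ]
    print(max_deviation_weights(example))  # prints [0.0, 1.0]: the first attribute cannot discriminate
```

Summing over ordered rather than unordered pairs doubles every D_j, which leaves the normalized weights unchanged.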

6.2. A New Algorithm

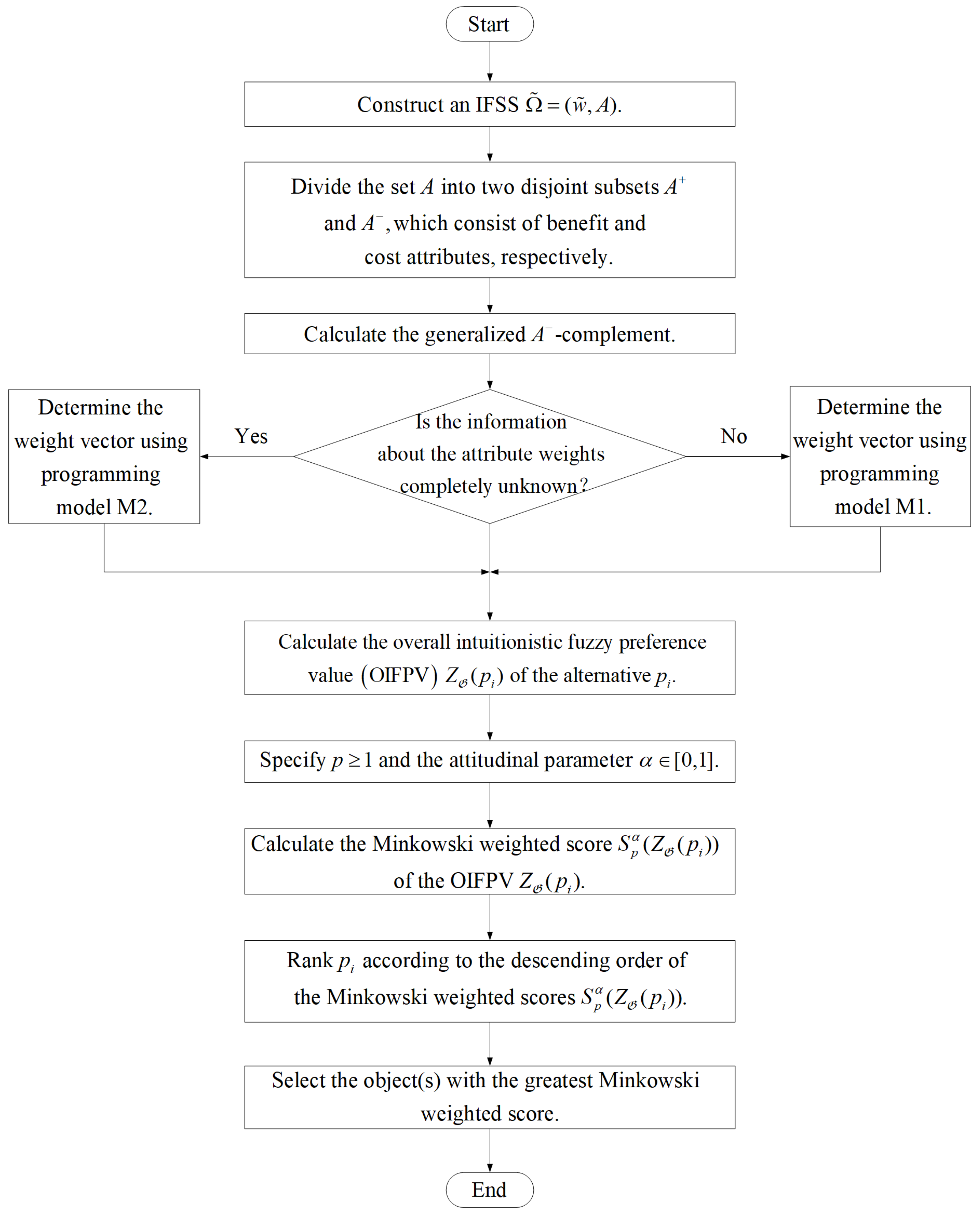

By combining the maximizing deviation method, the SWIFA operator, and the Minkowski weighted score function, we develop a new algorithm for intuitionistic fuzzy MADM (Algorithm 1).

Algorithm 1. The algorithm for intuitionistic fuzzy MADM.

For clarity, the decision-making process of this method is demonstrated in Figure 2.

Figure 2.

Flowchart of the proposed algorithm for intuitionistic fuzzy MADM.
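The body of Algorithm 1 is not reproduced in this section. Based only on the steps carried out in Section 7, here is a hypothetical sketch of Steps 3, 5, 7 and 8 for a given weight vector. It assumes that the generalized complement of Definition 6 swaps μ and ν on cost attributes, that the SWIFA operator is the componentwise weighted mean ⟨Σ w_j μ_j, Σ w_j ν_j⟩, and that the Minkowski weighted score is the weighted power mean of μ and 1 − ν; all function names are ours.

```python
from typing import Dict, FrozenSet, List, Sequence, Tuple

IFV = Tuple[float, float]  # (membership mu, non-membership nu)


def complement_costs(row: Sequence[IFV], cost: FrozenSet[int]) -> List[IFV]:
    """Assumed generalized complement: swap mu and nu on cost attributes only."""
    return [(nu, mu) if j in cost else (mu, nu) for j, (mu, nu) in enumerate(row)]


def swifa(values: Sequence[IFV], weights: Sequence[float]) -> IFV:
    """Assumed SWIFA: componentwise weighted mean of memberships and non-memberships."""
    mu = sum(w * v[0] for v, w in zip(values, weights))
    nu = sum(w * v[1] for v, w in zip(values, weights))
    return (mu, nu)


def weighted_score(ifv: IFV, p: float, lam: float) -> float:
    """Assumed Minkowski weighted score: weighted power mean of mu and 1 - nu."""
    mu, nu = ifv
    return (lam * mu ** p + (1.0 - lam) * (1.0 - nu) ** p) ** (1.0 / p)


def rank_alternatives(
    table: Dict[str, List[IFV]],
    weights: Sequence[float],
    cost: FrozenSet[int] = frozenset(),
    p: float = 2.0,
    lam: float = 0.5,
) -> List[Tuple[str, float]]:
    """Complement cost attributes, aggregate each row, score it, and sort by descending score."""
    scored = []
    for name, row in table.items():
        oifpv = swifa(complement_costs(row, cost), weights)  # overall IF preference value
        scored.append((name, weighted_score(oifpv, p, lam)))
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical two-attribute table; the second attribute is a cost attribute.
    table = {"x1": [(0.7, 0.2), (0.3, 0.6)], "x2": [(0.5, 0.4), (0.6, 0.3)]}
    for name, s in rank_alternatives(table, weights=[0.5, 0.5], cost=frozenset({1})):
        print(name, round(s, 4))
```

Because the assumed SWIFA is a convex combination, each aggregated value still satisfies μ + ν ≤ 1 whenever the weights are non-negative and sum to one.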

7. Numerical Illustration

The purpose of this section is twofold. First, we illustrate the application of our methodology in two case studies. Second, we compare the results that we obtain with those of other benchmark methodologies in the same context.

7.1. An Investment Problem

In this subsection, we revisit a benchmark problem regarding venture capital investment, originally raised by Herrera and Herrera-Viedma [54]. Wei first investigated this problem under an intuitionistic fuzzy setting in [34]. Chen and Tu [55] further examined this problem to demonstrate the discriminative capability of some dual bipolar measures of IFVs. Feng et al. [51] also used the same problem to illustrate several lexicographic orders of IFVs. In what follows, we use this problem to illustrate Algorithm 1 in the case where the information about the attribute weights is completely unknown.

Suppose that an investment bank plans to make a venture capital investment in the most suitable company among five alternatives:

- is a car company;

- is a food company;

- is a computer company;

- is an arms company;

- is a television company.

The set consisting of these companies is denoted by U, and the decision is made based on four criteria in the set , where:

- represents the risk analysis;

- represents the growth analysis;

- represents the social-political impact analysis;

- represents the environmental impact analysis.

Now, let us solve the above risk investment problem using Algorithm 1 proposed in Section 6. The step-wise description is presented below:

Step 1. Based on the evaluation results adopted from [34], we construct an IFSS over as shown in Table 1.

Table 1.

Intuitionistic fuzzy soft set .

Step 2. It is easy to see that and , since , , , and are all benefit attributes.

Step 3. By Definition 6, the generalized -complement of is:

and its approximate function is denoted by:

where and .

Step 4. In this case, the information about the attribute weights is completely unknown. Accordingly, a single-objective programming model can be established as follows:

By solving this model, we can get the following optimal solution:

After normalization, the obtained weight vector is:

Step 5. Using the weight vector , the OIFPV is calculated as follows:

The OIFPVs of all alternatives () can be found in Table 2.

Table 2.

OIFPVs and their corresponding scores.

Step 6. Assume that the decision maker specifies and the attitudinal parameter , respectively.

Step 7. Based on the selected values, we calculate the Euclidean weighted scores of the OIFPVs according to Equation (14):

for . For instance, the Euclidean weighted score can be obtained as:

The other results can be found in Table 2.

Step 8. According to the descending order of the Euclidean weighted score , the ranking of () can be obtained as follows:

Step 9. The optimal decision is to invest in the company since it has the greatest Euclidean weighted score.

To verify the effectiveness of the proposed method and its consistency with the existing literature, we now compare it with several lexicographic orders introduced in [51] and three other representative methods [34,45,46], applied to the same illustrative example studied above.

According to Definition 15 and the classical scores () shown in Table 2, we can obtain the following ranking:

Table 3 summarizes all the ranking results obtained by Algorithm 1, Wei’s method [34], Xia and Xu’s method [45], Song et al.’s method [46], and the lexicographic orders , , , and . By comparison, the ranking obtained by Algorithm 1 differs only slightly from that of the lexicographic order . Although the proposed method, Wei’s method [34], Xia and Xu’s method [45], Song et al.’s method [46], and the lexicographic orders , , and produce the same ranking of alternatives, the rationales behind these approaches are quite different.

Table 3.

Ranking results given by different methods.
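Definition 15 and the four lexicographic orders of [51] are not reproduced in this section. As a hedged illustration of how a lexicographic order ranks OIFPVs, the sketch below implements one well-known rule, the score-accuracy comparison of Xu and Yager [7], assuming the classical score s(α) = μ − ν and the accuracy h(α) = μ + ν. We do not claim it coincides with any particular order in Table 3; the function names and data are hypothetical.

```python
from typing import Dict, List, Tuple

IFV = Tuple[float, float]  # (membership mu, non-membership nu)


def classical_score(a: IFV) -> float:
    """Assumed classical score s = mu - nu."""
    return a[0] - a[1]


def accuracy(a: IFV) -> float:
    """Accuracy degree h = mu + nu."""
    return a[0] + a[1]


def xu_yager_ranking(oifpvs: Dict[str, IFV], digits: int = 12) -> List[str]:
    """Rank by score first and break ties by accuracy (both descending).

    Rounding to `digits` decimals keeps floating-point noise from splitting genuine ties.
    """

    def key(name: str) -> Tuple[float, float]:
        v = oifpvs[name]
        return (round(classical_score(v), digits), round(accuracy(v), digits))

    return sorted(oifpvs, key=key, reverse=True)


if __name__ == "__main__":
    # Hypothetical OIFPVs: "a" and "b" tie on the score and are separated by accuracy.
    print(xu_yager_ranking({"a": (0.5, 0.3), "b": (0.4, 0.2), "c": (0.6, 0.1)}))  # prints ['c', 'a', 'b']
```

Comparing tuples in this way is exactly a lexicographic order: the second component matters only when the first components are equal.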

Remark 2.

Let us briefly recall some essential facts concerning the approaches that we use for contrast. Wei’s method [34] selects the weight vector w that maximizes the total weighted deviation among all alternatives with respect to all attributes. Xia and Xu’s method [45] determines the optimal attribute weights based on entropy and cross entropy; according to Xia and Xu’s idea, an attribute with smaller entropy and larger cross entropy should be assigned a larger weight. The design of Song et al.’s similarity measure [46] for IFSs involves Jousselme’s distance measure and the cosine similarity measure between basic probability assignments (BPAs). By combining these two measures, their similarity measure avoids counter-intuitive outputs that a single similarity measure may produce, which makes the ranking results more reasonable.

Feng et al. [51] pointed out that the lexicographic orders , , , and are not logically equivalent, so they may produce different outputs. In our case, the ranking obtained under differs from the other three rankings. It is also worth noting that the rankings derived from and are identical, even though the scores are all different.

This comparative analysis shows that our new approach is an effective tool, consistent with existing methods, for solving multiple attribute decision-making problems in an intuitionistic fuzzy environment.

7.2. A Supplier Selection Problem

In this subsection, we illustrate Algorithm 1 in the case where the information about the attribute weights is partly known. We revisit a multi-attribute intuitionistic fuzzy group decision-making problem studied by Boran et al. [47], who analyzed a supplier selection problem using the TOPSIS method with intuitionistic fuzzy sets. We use a modified version of their case study in which the number of alternatives is artificially increased to ten, so as to illustrate Algorithm 1 more thoroughly.

Following [47], an automotive company intends to select the most appropriate supplier for one of the key components in its manufacturing process. The selection will be made among ten alternatives in , which are evaluated based on four criteria. The set consisting of these criteria is denoted by , where:

- stands for product quality;

- stands for relationship closeness;

- stands for delivery performance;

- stands for product price.

Now, let us solve the above supplier selection problem using Algorithm 1. The step-wise description is given below:

Step 1. Based on the evaluation results adopted from [47], we construct an IFSS over as shown in Table 4. Note that the evaluations of the alternatives are inherited from [47], while the evaluations of the new alternatives are the simple intuitionistic fuzzy average (SIFA) values of the original values for , taken in pairs.

Table 4.

Intuitionistic fuzzy soft set .

For instance, the SIFA of the evaluations of the alternatives and is used as the evaluation result of the artificial alternative . More specifically, by Equation (5), we get:

All other evaluation results can be obtained in a similar fashion. For convenience, we simply write , , , , and .
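The construction of the artificial alternatives can be sketched as follows, assuming that SIFA (Equation (5)) is the arithmetic mean of the memberships and of the non-memberships. The pairing of alternatives is passed in explicitly, because the specific pairs are given in Table 4; the names and data below are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

IFV = Tuple[float, float]  # (membership mu, non-membership nu)


def sifa(values: Sequence[IFV]) -> IFV:
    """Assumed SIFA: arithmetic mean of the memberships and of the non-memberships."""
    n = len(values)
    return (sum(v[0] for v in values) / n, sum(v[1] for v in values) / n)


def add_artificial_alternatives(
    table: Dict[str, List[IFV]], pairs: Sequence[Tuple[str, str]]
) -> Dict[str, List[IFV]]:
    """Return a copy of `table` with one averaged alternative per (a, b) pair."""
    extended = dict(table)
    for a, b in pairs:
        # Average the two evaluations attribute by attribute.
        extended[f"avg({a},{b})"] = [sifa([x, y]) for x, y in zip(table[a], table[b])]
    return extended


if __name__ == "__main__":
    # Hypothetical evaluations under two attributes (not the values of Table 4).
    table = {"s1": [(0.6, 0.3), (0.5, 0.4)], "s2": [(0.8, 0.1), (0.3, 0.5)]}
    extended = add_artificial_alternatives(table, [("s1", "s2")])
    print(extended["avg(s1,s2)"])
```

Under these assumptions, SIFA, a componentwise weighted-mean SWIFA and a μ/ν swap on cost attributes are all linear, so the OIFPV of an artificial alternative is the average of its parents' OIFPVs. With p = 1 the assumed weighted score is affine in (μ, ν), so the artificial alternative's score is exactly the mean of its parents' scores. For p > 1 this is not guaranteed: with p = 2 and λ = 0.5, ⟨0, 0⟩ and ⟨0.7, 0.3⟩ score about 0.707 and 0.700, whereas their average ⟨0.35, 0.15⟩ scores 0.65.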

Step 2. Divide the set C into two disjoint subsets and . It is easy to see that and , since , and are benefit attributes, while is a cost attribute.

Step 3. Calculate the generalized -complement of the IFSS according to Definition 6, and the results are shown in Table 5. For convenience, the approximate function of is denoted by:

where and .

Table 5.

Intuitionistic fuzzy soft set .

Step 4. In this case, the information about the attribute weights is partly known. Accordingly, a single-objective programming model can be established as follows:

By solving this model, we can get the following normalized optimal weight vector:

Step 5. Using the weight vector , the OIFPVs of all alternatives can be calculated, and the results are presented in Table 6.

Table 6.

OIFPVs and their scores with and .

Step 6. Assume that the decision maker specifies and the attitudinal parameter , respectively. As mentioned in Remark 1, the Minkowski weighted score function is reduced to the Minkowski score function when the attitudinal parameter , representing the decision maker’s neutral attitude.

Step 7. Based on the selected values, we calculate the Minkowski weighted scores of the OIFPVs according to Equation (10):

for . For instance, the Minkowski weighted score is:

To make a thorough comparison, we also consider two other cases in which and , representing the decision maker’s negative and positive attitudes, respectively. Table 6 gives all the OIFPVs and their Minkowski weighted scores with and various choices of the attitudinal parameter .

Step 8. The ranking of () based on the descending order of the Minkowski weighted scores can be obtained as follows:

Step 9. The optimal decision is to select the supplier since it has the greatest Minkowski weighted score.

The analysis above presumes a neutral attitude, i.e., . To examine the influence of the attitudinal parameter on the final ranking, we now keep fixed and rank the alternatives under two other choices of the attitudinal parameter . Table 6 gives the Minkowski weighted scores and of the OIFPVs , which correspond to a negative and a positive attitude, respectively. The resulting rankings are almost identical to the ranking under a neutral attitude, which indicates that Algorithm 1 is, to some extent, robust with respect to the choice of the attitudinal parameter.
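A sensitivity scan of this kind can be sketched by recomputing the ranking for several values of the attitudinal parameter and counting how many positions change relative to the neutral ranking. The sketch assumes the weighted power-mean form (λμ^p + (1 − λ)(1 − ν)^p)^{1/p} of the Minkowski weighted score, in which a larger λ places more weight on μ; the OIFPVs are hypothetical and were chosen so that the attitude does matter.

```python
from typing import Dict, List, Sequence, Tuple

IFV = Tuple[float, float]  # (membership mu, non-membership nu)


def weighted_score(v: IFV, p: float, lam: float) -> float:
    """Assumed Minkowski weighted score: weighted power mean of mu and 1 - nu."""
    return (lam * v[0] ** p + (1.0 - lam) * (1.0 - v[1]) ** p) ** (1.0 / p)


def ranking(oifpvs: Dict[str, IFV], p: float, lam: float) -> List[str]:
    """Alternatives sorted by descending weighted score."""
    return sorted(oifpvs, key=lambda k: weighted_score(oifpvs[k], p, lam), reverse=True)


def attitude_scan(
    oifpvs: Dict[str, IFV], p: float, lams: Sequence[float]
) -> List[Tuple[float, List[str], int]]:
    """For each lam, return the ranking and how many positions differ from the lam = 0.5 ranking."""
    neutral = ranking(oifpvs, p, 0.5)
    rows = []
    for lam in lams:
        r = ranking(oifpvs, p, lam)
        rows.append((lam, r, sum(x != y for x, y in zip(neutral, r))))
    return rows


if __name__ == "__main__":
    # Hypothetical OIFPVs (not the values in Table 6).
    oifpvs = {"a": (0.6, 0.3), "b": (0.3, 0.1), "c": (0.5, 0.4)}
    for lam, r, moved in attitude_scan(oifpvs, p=2.0, lams=[0.1, 0.5, 0.9]):
        print(lam, r, moved)
```

On these made-up values the rankings for λ = 0.1 and λ = 0.5 agree, while λ = 0.9 reorders all three alternatives, so robustness depends on the data rather than being automatic.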

In addition, Boran et al. [47] introduced a TOPSIS method based on intuitionistic fuzzy sets, which can also be used to rank IFVs. To further verify the rationality of the proposed method, we compare the rankings produced by Algorithm 1, by the intuitionistic fuzzy TOPSIS method (using the weight vector obtained by Algorithm 1), and by various lexicographic orders. As before, the rankings given by the lexicographic orders are obtained from the classical scores listed in Table 6.

The attribute weight vector in [47] is an IFV representation based on the opinions of experts, and it is different from the expression of . Therefore, the construction of the aggregate weighted intuitionistic fuzzy decision matrix in Step 4 of the intuitionistic fuzzy TOPSIS method in [47] must be changed. Assume that is the fuzzy set in C such that (). Then, the weighted IFSS can easily be obtained by calculating the scalar product of the IFSS and the fuzzy set . For instance, by Definition 7 and Equation (2), we have:

The weighted IFSS that arises is shown in Table 7.

Table 7.

Weighted IFSS .

By performing the rest of the steps of the intuitionistic fuzzy TOPSIS method in [47], we get Table 8, which consists of the Euclidean distance between each object and the positive ideal solution, the Euclidean distance between each object and the negative ideal solution, and the relative closeness coefficient . According to the intuitionistic fuzzy TOPSIS method, we can obtain the ranking results based on the descending order of the relative closeness coefficient as shown in Table 9.
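The separation measures and closeness coefficients in Table 8 can be sketched as follows. This is a minimal sketch under stated assumptions: the positive ideal takes the largest membership and smallest non-membership in each column (reversed for cost attributes), distances are normalized Euclidean distances over μ, ν and π = 1 − μ − ν, and the relative closeness is S⁻/(S⁺ + S⁻). The weighting step based on Definition 7 is not reproduced, so the function expects an already weighted matrix; its name and the data are hypothetical.

```python
import math
from typing import FrozenSet, List, Sequence, Tuple

IFV = Tuple[float, float]  # (membership mu, non-membership nu)


def if_topsis(
    matrix: Sequence[Sequence[IFV]], cost: FrozenSet[int] = frozenset()
) -> List[Tuple[float, float, float]]:
    """Return (S_plus, S_minus, closeness) for each row of a weighted IF decision matrix."""
    n = len(matrix[0])
    positive, negative = [], []
    for j in range(n):
        column = [row[j] for row in matrix]
        best = (max(v[0] for v in column), min(v[1] for v in column))
        worst = (min(v[0] for v in column), max(v[1] for v in column))
        if j in cost:  # for a cost attribute the roles of the ideals are swapped
            best, worst = worst, best
        positive.append(best)
        negative.append(worst)

    def distance(row: Sequence[IFV], ideal: Sequence[IFV]) -> float:
        # Normalized Euclidean distance over mu, nu and pi = 1 - mu - nu.
        total = 0.0
        for (mu, nu), (mu_i, nu_i) in zip(row, ideal):
            pi, pi_i = 1.0 - mu - nu, 1.0 - mu_i - nu_i
            total += (mu - mu_i) ** 2 + (nu - nu_i) ** 2 + (pi - pi_i) ** 2
        return math.sqrt(total / (2 * n))

    results = []
    for row in matrix:
        s_plus, s_minus = distance(row, positive), distance(row, negative)
        denom = s_plus + s_minus
        closeness = s_minus / denom if denom > 0 else 0.5  # 0.5 when all rows coincide (our choice)
        results.append((s_plus, s_minus, closeness))
    return results


if __name__ == "__main__":
    # Hypothetical weighted matrix: the third row dominates, the second is dominated.
    weighted = [
        [(0.6, 0.3), (0.5, 0.4)],
        [(0.4, 0.5), (0.3, 0.6)],
        [(0.7, 0.2), (0.6, 0.3)],
    ]
    for s_plus, s_minus, cc in if_topsis(weighted):
        print(round(s_plus, 4), round(s_minus, 4), round(cc, 4))
```

Because the closeness coefficient is a ratio, rescaling all distances by a common constant (for example, dropping the 1/(2n) normalization) leaves it and the resulting ranking unchanged.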

Table 8.

Separation measures and the relative closeness coefficients.

Table 9.

Ranking results based on different methods.

Table 9 summarizes all the ranking results obtained by Algorithm 1 (with three different attitudinal parameters ), the intuitionistic fuzzy TOPSIS method [47], and the lexicographic orders , , , and . By comparison, it can be seen that the ranking obtained by Algorithm 1 with the pessimistic attitude is only slightly different from those obtained with either the neutral or optimistic attitude. Algorithm 1 with either the neutral or optimistic attitude and the lexicographic orders , produce the same results. The ranking given by Algorithm 1 with the pessimistic attitude coincides with the results of the lexicographic orders and the intuitionistic fuzzy TOPSIS method. Whatever the choice, the best supplier is , and the worst supplier is . Thus, this numerical example concerning supplier selection further illustrates that the method proposed in this study is feasible for solving multiple attribute decision-making problems in real-life environments.

Remark 3.

Let us briefly highlight several key points with regard to the above discussion. Note first that the set of alternatives is expanded from the original set [47] consisting of five alternatives to a new set containing ten alternatives. All the evaluation results of these alternatives are shown in Table 4. It should be noted that the evaluation results of the alternatives () are inherited directly from [47]. In contrast, the alternatives () in Table 4 are artificial, and their evaluation results are obtained by computing the SIFA values of the IFVs associated with in a pairwise manner. The artificial alternatives also provide an additional way to verify the rationality of Algorithm 1. Indeed, Table 9 shows that each artificial alternative lies between the two original alternatives used to produce it. For instance, lies between and in all the ranking results. This is because the evaluation results of are obtained by simply averaging the corresponding results of and . Finally, the ranking results in Table 9 with , , and show that different choices of the attitudinal parameter may affect the final ranking. Nevertheless, Algorithm 1 is, to a certain extent, robust with respect to the choice of the attitudinal parameter.

8. Conclusions

Based on the geometric intuition that a better score should be assigned to an intuitionistic fuzzy value farther from the negative ideal intuitionistic fuzzy value , we proposed Minkowski score functions for comparing intuitionistic fuzzy values in the complete lattice . By incorporating an attitudinal parameter, we also introduced Minkowski weighted score functions, which capture the subjective attitudes of decision makers. We investigated some basic properties of Minkowski (weighted) score functions and developed a new algorithm for solving multiple attribute decision-making problems based on intuitionistic fuzzy soft sets. Using two numerical examples concerning risk investment and supplier selection, we compared the newly proposed algorithm with existing methods in the literature. The results show that our method is feasible for solving intuitionistic fuzzy soft set based decision-making problems in real-world scenarios.

In the future, our fundamental tool, the Minkowski weighted score function, can be used for the efficient aggregation of infinite chains of intuitionistic fuzzy sets [56]. This line of research concerns decisions in a temporal setting, possibly with an infinite horizon. The breadth of the theory of aggregating infinite streams of numbers is discussed in [57] and the references therein.

Author Contributions

Conceptualization, F.F. and J.C.R.A.; formal analysis, F.F., Y.Z., and J.C.R.A.; writing, original draft, F.F. and Y.Z.; writing, review and editing, F.F., Y.Z., J.C.R.A., and Q.W. All authors read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the National Natural Science Foundation of China (Grant Nos. 51875457 and 11301415), the Natural Science Basic Research Plan in Shaanxi Province of China (Grant No. 2018JM1054), and the Special Funds Project for Key Disciplines Construction of Shaanxi Universities.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ishizaka, A.; Nemery, P. Multi-Criteria Decision Analysis: Methods and Software; Wiley: Chichester, UK, 2013.

- Pamučar, D.S.; Savin, L.M. Multiple-criteria model for optimal off-road vehicle selection for passenger transportation: BWM-COPRAS model. Vojnoteh. Glas. 2020, 68, 28–64.

- Xu, Z.S. Uncertain Multi-Attribute Decision Making; Tsinghua University Press: Beijing, China, 2004.

- Zadeh, L.A. Fuzzy sets. Inf. Control 1965, 8, 338–353.

- Rahman, S. On cuts of Atanassov’s intuitionistic fuzzy sets with respect to fuzzy connectives. Inf. Sci. 2016, 340, 262–278.

- Atanassov, K.T. Intuitionistic fuzzy sets. In Intuitionistic Fuzzy Sets; Physica: Heidelberg, Germany, 1986; Volume 20, pp. 87–96.

- Xu, Z.S.; Yager, R.R. Some geometric aggregation operators based on intuitionistic fuzzy sets. Int. J. Gen. Syst. 2006, 35, 417–433.

- Yager, R.R. Multicriteria decision making with ordinal/linguistic intuitionistic fuzzy sets for mobile apps. IEEE Trans. Fuzzy Syst. 2016, 24, 590–599.

- Molodtsov, D.A. Soft set theory-first results. Comput. Math. Appl. 1999, 37, 19–31.

- Feng, F.; Cho, J.; Pedrycz, W.; Fujita, H.; Herawan, T. Soft set based association rule mining. Knowl. Based Syst. 2016, 111, 268–282.

- Ali, M.I.; Feng, F.; Liu, X.Y.; Min, W.K.; Shabir, M. On some new operations in soft set theory. Comput. Math. Appl. 2009, 57, 1547–1553.

- Ali, M.I.; Shabir, M.; Naz, M. Algebraic structures of soft sets associated with new operations. Comput. Math. Appl. 2011, 61, 2647–2654.

- Ali, M.I.; Shabir, M. Logic connectives for soft sets and fuzzy soft sets. IEEE Trans. Fuzzy Syst. 2014, 22, 1431–1442.

- Feng, F.; Li, Y.M. Soft subsets and soft product operations. Inf. Sci. 2013, 232, 44–57.

- Jun, Y.B.; Lee, K.J.; Khan, A. Soft ordered semigroups. Math. Log. Q. 2010, 56, 42–50.

- Jun, Y.B.; Park, C.H. Applications of soft sets in ideal theory of BCK/BCI-algebras. Inf. Sci. 2008, 178, 2466–2475.

- Jun, Y.B. Soft BCK/BCI-algebras. Comput. Math. Appl. 2008, 56, 1408–1413.

- Maji, P.K.; Biswas, R.; Roy, A.R. Fuzzy soft sets. J. Fuzzy Math. 2001, 9, 589–602.

- Das, S.; Kar, S. The hesitant fuzzy soft set and its application in decision-making. In Facets of Uncertainties and Applications. Springer Proceedings in Mathematics & Statistics; Chakraborty, M.K., Skowron, A., Maiti, M., Kar, S., Eds.; Springer: New Delhi, India, 2015; Volume 125.

- Das, S.; Malakar, D.; Kar, S.; Pal, T. Correlation measure of hesitant fuzzy soft sets and their application in decision making. Neural Comput. Appl. 2019, 31, 1023–1039.

- Fatimah, F.; Rosadi, D.; Hakim, R.B.F.; Alcantud, J.C.R. N-soft sets and their decision making algorithms. Soft Comput. 2018, 22, 3829–3842.

- Alcantud, J.C.R.; Feng, F.; Yager, R.R. An N-soft set approach to rough sets. IEEE Trans. Fuzzy Syst. 2019.

- Akram, M.; Adeel, A.; Alcantud, J.C.R. Group decision-making methods based on hesitant N-soft sets. Expert Syst. Appl. 2019, 115, 95–105.

- Akram, M.; Adeel, A.; Alcantud, J.C.R. Fuzzy N-soft sets: A novel model with applications. J. Intell. Fuzzy Syst. 2018, 35, 4757–4771.

- Maji, P.K.; Biswas, R.; Roy, A.R. Intuitionistic fuzzy soft sets. J. Fuzzy Math. 2001, 9, 677–692.

- Ali, M.I.; Feng, F.; Mahmood, T.; Mahmood, I.; Faizan, H. A graphical method for ranking Atanassov’s intuitionistic fuzzy values using the uncertainty index and entropy. Int. J. Intell. Syst. 2019, 34, 2692–2712.

- Feng, F.; Xu, Z.; Fujita, H.; Liang, M. Enhancing PROMETHEE method with intuitionistic fuzzy soft sets. Int. J. Intell. Syst. 2020, 35, 1071–1104.

- Agarwal, M.; Biswas, K.K.; Hanmandlu, M. Generalized intuitionistic fuzzy soft sets with applications in decision-making. Appl. Soft Comput. 2013, 13, 3552–3566.

- Feng, F.; Fujita, H.; Ali, M.I.; Yager, R.R.; Liu, X. Another view on generalized intuitionistic fuzzy soft sets and related multiattribute decision making methods. IEEE Trans. Fuzzy Syst. 2019, 27, 474–488.

- Peng, X.D.; Yang, Y.; Song, J.P. Pythagoren fuzzy soft set and its application. Comput. Eng. 2015, 41, 224–229.

- Athira, T.M.; John, S.J.; Garg, H. A novel entropy measure of Pythagorean fuzzy soft sets. AIMS Math. 2019, 5, 1050–1061.

- Dey, A.; Senapati, T.; Pal, M.; Chen, G.Y. A novel approach to hesitant multi-fuzzy soft set based decision-making. AIMS Math. 2020, 5, 1985–2008.

- Das, S.; Kar, M.B.; Kar, S. Group multi-criteria decision making using intuitionistic multi-fuzzy sets. J. Uncertain. Anal. Appl. 2013, 1, 10.

- Wei, G.W. Maximizing deviation method for multiple attribute decision making in intuitionistic fuzzy setting. Knowl. Based Syst. 2008, 21, 833–836.

- Liu, X.; Kim, H.S.; Feng, F.; Alcantud, J.C.R. Centroid transformations of intuitionistic fuzzy values based on aggregation operators. Mathematics 2018, 6, 215.

- Chen, S.M.; Tan, J.M. Handling multicriteria fuzzy decision-making problems based on vague set theory. Fuzzy Sets Syst. 1994, 67, 163–172.

- Hong, D.H.; Choi, C.H. Multi-criteria fuzzy decision-making problems based on vague set theory. Fuzzy Sets Syst. 2000, 114, 103–113.

- Yu, X.; Xu, Z.; Liu, S.; Chen, Q. On ranking of intuitionistic fuzzy values based on dominance relations. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2014, 22, 315–335.

- Garg, H.; Arora, R. TOPSIS method based on correlation coefficient for solving decision-making problems with intuitionistic fuzzy soft set information. AIMS Math. 2020, 5, 2944–2966.

- Szmidt, E.; Kacprzyk, J. Amount of information and its reliability in the ranking of Atanassov’s intuitionistic fuzzy alternatives. In Recent Advances in Decision Making; Series Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2009; pp. 7–19.

- Guo, K. Amount of information and attitudinal-based method for ranking Atanassov’s intuitionistic fuzzy values. IEEE Trans. Fuzzy Syst. 2014, 22, 177–188.

- Zhang, X.; Xu, Z. A new method for ranking intuitionistic fuzzy values and its application in multi-attribute decision making. Fuzzy Optim. Decis. Mak. 2012, 11, 135–146.

- Wang, W.; Xin, X. Distance measure between intuitionistic fuzzy sets. Pattern Recognit. Lett. 2005, 26, 2063–2069.

- Xing, Z.; Xiong, W.; Liu, H. A Euclidean approach for ranking intuitionistic fuzzy values. IEEE Trans. Fuzzy Syst. 2018, 26, 353–365.

- Xia, M.M.; Xu, Z.S. Entropy/cross entropy-based group decision making under intuitionistic fuzzy environment. Inf. Fusion 2012, 13, 31–47.

- Song, Y.F.; Wang, X.D.; Lei, L.; Quan, W.; Huang, W.L. An evidential view of similarity measure for Atanassov’s intuitionistic fuzzy sets. J. Intell. Fuzzy Syst. 2016, 31, 1653–1668.

- Boran, F.E.; Genc, S.; Kurt, M.; Akay, D. A multi-criteria intuitionistic fuzzy group decision making for supplier selection with TOPSIS method. Expert Syst. Appl. 2009, 36, 11363–11368.

- Wang, G.J.; He, Y.Y. Intuitionistic fuzzy sets and L-fuzzy sets. Fuzzy Sets Syst. 2000, 10, 271–274.

- Deschrijver, G.; Kerre, E.E. On the relationship between some extensions of fuzzy set theory. Fuzzy Sets Syst. 2003, 133, 227–235.

- Alcantud, J.C.R.; Cruz-Rambaud, S.; Muñoz Torrecillas, M.J. Valuation fuzzy soft sets: A flexible fuzzy soft set based decision making procedure for the valuation of assets. Symmetry 2017, 9, 253.

- Feng, F.; Liang, M.; Fujita, H.; Yager, R.R.; Liu, X. Lexicographic orders of intuitionistic fuzzy values and their relationships. Mathematics 2019, 7, 166.

- Xu, Z.S.; Yager, R.R. Intuitionistic and interval-valued intutionistic fuzzy preference relations and their measures of similarity for the evaluation of agreement within a group. Fuzzy Optim. Decis. Mak. 2009, 8, 123–139.

- Xu, Z.S. Models for multiple attribute decision-making with intuitionistic fuzzy information. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2007, 15, 285–297.

- Herrera, F.; Herrera-Viedma, E. Linguistic decision analysis: Steps for solving decision problems under linguistic information. Fuzzy Sets Syst. 2000, 115, 67–82.

- Chen, L.H.; Tu, C.C. Dual bipolar measures of Atanassov’s intuitionistic fuzzy sets. IEEE Trans. Fuzzy Syst. 2014, 22, 966–982.

- Alcantud, J.C.R.; Khameneh, A.Z.; Kilicman, A. Aggregation of infinite chains of intuitionistic fuzzy sets and their application to choices with temporal intuitionistic fuzzy information. Inf. Sci. 2020, 514, 106–117.

- Alcantud, J.C.R.; García-Sanz, M.D. Evaluations of infinite utility streams: Pareto efficient and egalitarian axiomatics. Metroeconomica 2013, 64, 432–447.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).