A Metamorphic Testing Approach for Assessing Question Answering Systems

Abstract

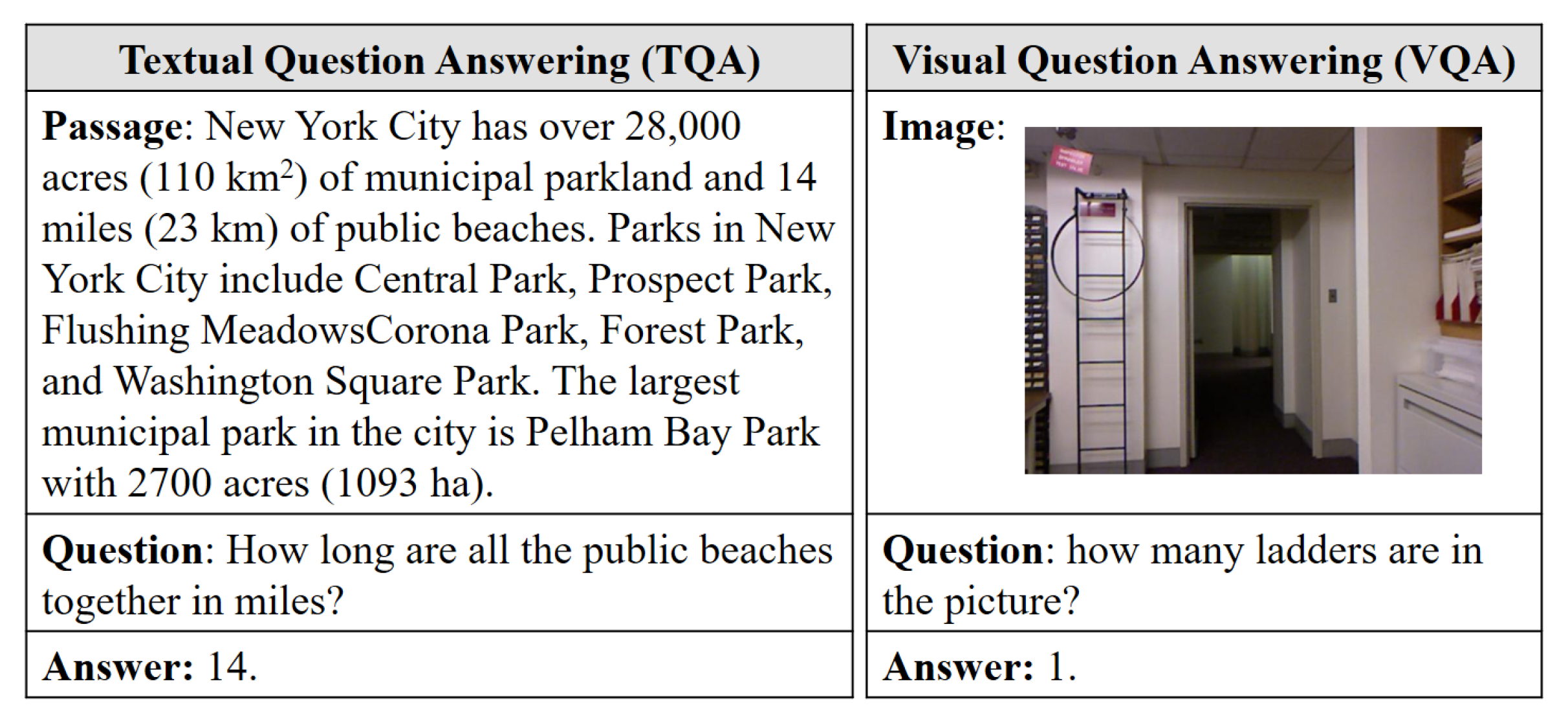

1. Introduction

- We proposed applying metamorphic testing (MT) to assess QA systems from the users’ perspective, and presented 17 metamorphic relations (MRs) that consider different aspects of QA systems.

- We conducted experiments on four common QA systems (two textual QA (TQA) systems and two visual QA (VQA) systems), demonstrating the feasibility and effectiveness of MT in assessing QA systems.

- We conducted a comparative analysis of the subject QA systems to reveal their capabilities of understanding and processing the input data, and demonstrated how the analysis results can help users select an appropriate QA system for their specific needs.

2. Metamorphic Testing

3. Methodology

4. Metamorphic Relations of Question Answering Systems

4.1. Output Relationships

- Equivalent: the source output and the follow-up output are regarded as equivalent if they have similar semantics.

- Different: the source output and the follow-up output are regarded as different if they have distinct semantics.
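
The two output relationships can be illustrated with a small sketch. It assumes a token-overlap (Jaccard) score as a stand-in for semantic similarity and a hypothetical threshold of 0.5; neither is taken from the paper, and a sentence-embedding model could replace `similarity` without changing the rest.

```python
from __future__ import annotations

import re


def token_set(text: str) -> set[str]:
    """Lower-case the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of the token sets (a crude proxy for semantic similarity)."""
    ta, tb = token_set(a), token_set(b)
    if not ta and not tb:
        return 1.0  # two empty answers are treated as identical
    return len(ta & tb) / len(ta | tb)


def relation(source_output: str, follow_up_output: str, threshold: float = 0.5) -> str:
    """Classify a pair of outputs as 'equivalent' or 'different'.

    The threshold is a hypothetical value chosen for illustration only.
    """
    if similarity(source_output, follow_up_output) >= threshold:
        return "equivalent"
    return "different"
```

For instance, `relation("Paris", "paris")` yields `"equivalent"`, while `relation("a red car", "two dogs")` yields `"different"`.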

4.2. MRs for QA Systems

- MR1.x: the source input and the follow-up input share the same passage P (or image I) but contain different questions. MRs in this category operate on the source question to construct the follow-up question. Hence, they focus on the QA’s capability of understanding and answering questions.

- MR2.x: the source input and the follow-up input share the same question Q but contain different passages. MRs in this category operate on the source passage to construct the follow-up passage, and they focus on the TQA’s capability of processing and understanding the input passage.

- MR3.x: the source input and the follow-up input share the same question Q but contain different images. MRs in this category operate on the source image to construct the follow-up image, and they concentrate on the VQA’s capability of processing and understanding the input image.
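
The three categories share one testing loop: run the QA system on a source input, transform one component to obtain the follow-up input, run it again, and compare the observed output relationship with the expected one. The sketch below shows that loop under stated assumptions: `MR`, `violates`, `violation_rate`, `toy_qa`, and the exact-match comparison are hypothetical names and choices made for illustration, not the paper's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple

# A QA system is modelled as a function (context, question) -> answer.
QA = Callable[[str, str], str]
Input = Tuple[str, str]


@dataclass
class MR:
    name: str
    transform: Callable[[str, str], Input]  # source input -> follow-up input
    expected: str  # "equivalent" or "different"


def violates(qa: QA, mr: MR, source: Input, same: Callable[[str, str], bool]) -> bool:
    """Return True if the observed output relationship contradicts the MR."""
    source_output = qa(*source)
    follow_up_output = qa(*mr.transform(*source))
    observed = "equivalent" if same(source_output, follow_up_output) else "different"
    return observed != mr.expected


def violation_rate(qa: QA, mr: MR, sources: Iterable[Input],
                   same: Callable[[str, str], bool]) -> float:
    """Fraction of source inputs for which the MR is violated."""
    sources = list(sources)
    if not sources:
        raise ValueError("at least one source input is required")
    return sum(violates(qa, mr, s, same) for s in sources) / len(sources)


def toy_qa(context: str, question: str) -> str:
    """Deliberately case-sensitive toy system, used only to exercise the loop."""
    return context.split()[0] if question.startswith("Who") else "unknown"


# An MR of the first category: same context, upper-cased question.
upper_question = MR("upper-case question", lambda c, q: (c, q.upper()), "equivalent")
sources = [
    ("Alice wrote the report.", "Who wrote the report?"),
    ("Bob left early.", "When did Bob leave?"),
]
exact = lambda a, b: a.strip().lower() == b.strip().lower()
rate = violation_rate(toy_qa, upper_question, sources, exact)
```

The comparison function is passed in rather than hard-coded so that an exact-match check can be swapped for a semantic-similarity check without touching the loop.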

4.2.1. MR1.x

4.2.2. MR2.x

4.2.3. MR3.x

5. Experimental Setup

5.1. MRs Implementation

5.2. Subject QA Systems

- AllenNLP-TQA (https://demo.allennlp.org/reading-comprehension, accessed on 10 November 2020), which is a TQA API at the AllenNLP platform [24]. AllenNLP-TQA is an implementation of the BiDAF model [8] with ELMo embeddings.

- Transformers-TQA (https://github.com/huggingface/transformers, accessed on 10 November 2020), which is a TQA API at the Transformers platform [25]. This API is built upon the DistilBERT model [32].

- AllenNLP-VQA (https://demo.allennlp.org/visual-question-answering, accessed on 10 November 2020), which is a VQA API at the AllenNLP platform [24]. This API is built upon the ViLBERT model [11].

- CloudCV-VQA (http://vqa.cloudcv.org/, accessed on 10 November 2020), which is an API provided by CloudCV. This API utilizes the Pythia model [33].

5.3. Datasets and Source Inputs of MRs

6. Results and Analysis

6.1. MT Results for QA Systems

6.2. Further Analysis

6.2.1. QA’s Capability of Understanding and Answering Questions

6.2.2. TQA’s Capability of Understanding and Processing Passages

6.2.3. VQA’s Capability of Understanding and Processing Images

6.2.4. Further Analysis and Discussion

7. Related Work

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bouziane, A.; Bouchiha, D.; Doumi, N.; Malki, M. Question Answering Systems: Survey and Trends. Procedia Comput. Sci. 2015, 73, 366–375.

2. Zeng, C.; Li, S.; Li, Q.; Hu, J.; Hu, J. A Survey on Machine Reading Comprehension: Tasks, Evaluation Metrics, and Benchmark Datasets. Appl. Sci. 2020, 10, 7640.

3. Liu, S.; Zhang, X.; Zhang, S.; Wang, H.; Zhang, W. Neural Machine Reading Comprehension: Methods and Trends. Appl. Sci. 2019, 9, 3698.

4. Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Parikh, D. VQA: Visual Question Answering. Int. J. Comput. Vis. 2015, 123, 4–31.

5. Reddy, S.; Chen, D.; Manning, C.D. CoQA: A Conversational Question Answering Challenge. Trans. Assoc. Comput. Linguist. 2019, 7, 249–266.

6. Cui, L.; Huang, S.; Wei, F.; Tan, C.; Zhou, M. SuperAgent: A Customer Service Chatbot for E-commerce Websites. In Proceedings of the ACL 2017, System Demonstrations, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 97–102.

7. Li, H.; Wang, P.; Shen, C.; Hengel, A.V.D. Visual Question Answering as Reading Comprehension. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6319–6328.

8. Seo, M.; Kembhavi, A.; Farhadi, A.; Hajishirzi, H. Bidirectional Attention Flow for Machine Comprehension. arXiv 2018, arXiv:1611.01603.

9. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

10. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

11. Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks. arXiv 2019, arXiv:1908.02265.

12. Jia, R.; Liang, P. Adversarial Examples for Evaluating Reading Comprehension Systems. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; pp. 2021–2031.

13. Kaushik, D.; Lipton, Z.C. How Much Reading Does Reading Comprehension Require? A Critical Investigation of Popular Benchmarks. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 5010–5015.

14. Mudrakarta, P.K.; Taly, A.; Sundararajan, M.; Dhamdhere, K. Did the Model Understand the Question? In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 1896–1906.

15. Ribeiro, M.T.; Wu, T.; Guestrin, C.; Singh, S. Beyond Accuracy: Behavioral Testing of NLP Models with CheckList. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 4902–4912.

16. Patro, B.N.; Patel, S.; Namboodiri, V.P. Robust Explanations for Visual Question Answering. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 1566–1575.

17. Chen, J.; Durrett, G. Robust Question Answering Through Sub-part Alignment. arXiv 2020, arXiv:2004.14648.

18. Zhou, Z.Q.; Sun, L. Metamorphic testing of driverless cars. Commun. ACM 2019, 62, 61–67.

19. Xie, X.; Wong, W.E.; Chen, T.Y.; Xu, B.W. Metamorphic slice: An application in spectrum-based fault localization. Inf. Softw. Technol. 2013, 55, 866–879.

20. Jiang, M.; Chen, T.Y.; Kuo, F.C.; Towey, D.; Ding, Z. A metamorphic testing approach for supporting program repair without the need for a test oracle. J. Syst. Softw. 2017, 126, 127–140.

21. Jiang, M.; Chen, T.Y.; Zhou, Z.Q.; Ding, Z. Input Test Suites for Program Repair: A Novel Construction Method Based on Metamorphic Relations. IEEE Trans. Reliab. 2020.

22. Zhou, Z.Q.; Xiang, S.; Chen, T.Y. Metamorphic testing for software quality assessment: A study of search engines. IEEE Trans. Softw. Eng. 2016, 42, 264–284.

23. Zhou, Z.Q.; Sun, L.; Chen, T.Y.; Towey, D. Metamorphic Relations for Enhancing System Understanding and Use. IEEE Trans. Softw. Eng. 2020, 46, 1120–1154.

24. Gardner, M.; Grus, J.; Neumann, M.; Tafjord, O.; Dasigi, P.; Liu, N.F.; Peters, M.; Schmitz, M.; Zettlemoyer, L. AllenNLP: A Deep Semantic Natural Language Processing Platform. In Proceedings of the Workshop for NLP Open Source Software (NLP-OSS), Melbourne, Australia, 20 July 2018; pp. 1–6.

25. Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Virtual Conference, 16–20 November 2020; pp. 38–45.

26. Segura, S.; Fraser, G.; Sanchez, A.B.; Ruiz-Cortés, A. A survey on metamorphic testing. IEEE Trans. Softw. Eng. 2016, 42, 805–824.

27. Chen, T.Y.; Kuo, F.C.; Liu, H.; Poon, P.L.; Towey, D.; Tse, T.H.; Zhou, Z.Q. Metamorphic Testing: A Review of Challenges and Opportunities. ACM Comput. Surv. 2018, 51, 4:1–4:27.

28. Xiao, H. bert-As-Service. 2018. Available online: https://github.com/hanxiao/bert-as-service (accessed on 8 November 2020).

29. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

30. Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019.

31. Tian, Y.; Pei, K.; Jana, S.; Ray, B. DeepTest: Automated Testing of Deep-Neural-Network-Driven Autonomous Cars. In Proceedings of the 40th International Conference on Software Engineering, Gothenburg, Sweden, 27 May–3 June 2018; pp. 303–314.

32. Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2020, arXiv:1910.01108.

33. Singh, A.; Goswami, V.; Natarajan, V.; Jiang, Y.; Chen, X.; Shah, M.; Rohrbach, M.; Batra, D.; Parikh, D. MMF: A Multimodal Framework for Vision and Language Research. 2020. Available online: https://github.com/facebookresearch/mmf (accessed on 12 September 2020).

34. Rajpurkar, P.; Jia, R.; Liang, P. Know What You Don’t Know: Unanswerable Questions for SQuAD. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Melbourne, Australia, 15–20 July 2018; pp. 784–789.

35. Malinowski, M.; Fritz, M. A Multi-World Approach to Question Answering about Real-World Scenes based on Uncertain Input. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 1682–1690.

36. Shah, M.; Chen, X.; Rohrbach, M.; Parikh, D. Cycle-Consistency for Robust Visual Question Answering. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6642–6651.

37. Huang, J.H.; Dao, C.D.; Alfadly, M.; Ghanem, B. A Novel Framework for Robustness Analysis of Visual QA Models. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2019; Volume 33, pp. 8449–8456.

38. Wallace, E.; Feng, S.; Kandpal, N.; Gardner, M.; Singh, S. Universal Adversarial Triggers for Attacking and Analyzing NLP. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 2153–2162.

39. Ribeiro, M.T.; Guestrin, C.; Singh, S. Are Red Roses Red? Evaluating Consistency of Question-Answering Models. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 6174–6184.

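| MRs | Source and Follow-Up Inputs | Number of MRs |
|---|---|---|
| MR1.x | (P, source question) and (P, follow-up question), or (I, source question) and (I, follow-up question) | 4 (MR1.1–MR1.4) |
| MR2.x | (source passage, Q) and (follow-up passage, Q) | 5 (MR2.1–MR2.5) |
| MR3.x | (source image, Q) and (follow-up image, Q) | 8 (MR3.1–MR3.8) |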

| MRs | Interpretation of MR Violation | Examples of Question Pairs (Source / Follow-Up) |
|---|---|---|
| MR1.1 | QA is sensitive to the letter case of a question. | What song won Best R&B Performance? / WHAT SONG WON BEST R&B PERFORMANCE? |
| MR1.2 | QA is sensitive to questions using different comparative descriptions. | In how many years will A remain higher than B in population? / In how many years will B remain lower than A in population? |
| MR1.3 | QA cannot properly understand the questions expressed via different comparative words. | What type of residents tend to be more fluent than rural ones? / What type of residents tend to be less fluent than rural ones? |
| MR1.4 | QA cannot properly understand the questions involving different subjects. | What is the name of the final studio album from Destiny’s Child? / What is the name of the final studio album from Bob’s Child? |
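
Two of the example pairs in this table can be reproduced with simple string operations. The sketch below is illustrative only: the function names are hypothetical, and the paper's actual construction procedure for follow-up questions (in particular how the subject is located for MR1.4) is not shown in this text.

```python
def mr1_1_follow_up(question: str) -> str:
    """MR1.1-style follow-up: change the letter case of the whole question."""
    return question.upper()


def mr1_4_follow_up(question: str, subject: str, replacement: str) -> str:
    """MR1.4-style follow-up: swap the subject for a different one.

    The caller supplies the subject; locating it automatically is out of scope here.
    """
    if subject not in question:
        raise ValueError(f"subject {subject!r} not found in question")
    return question.replace(subject, replacement, 1)
```

Calling `mr1_1_follow_up("What song won Best R&B Performance?")` gives the upper-cased question from the MR1.1 row, and `mr1_4_follow_up(..., "Destiny", "Bob")` applied to the MR1.4 source question gives the "Bob’s Child" variant.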

| MRs | Interpretation of MR Violations | Operation Used for Constructing the Follow-Up Passage |
|---|---|---|
| MR2.1 | TQA is sensitive to the letter case of a passage. | Capitalization |
| MR2.2 | TQA is sensitive to the order of sentences in a passage. | Order reversing |
| MR2.3 | TQA is sensitive to the added sentences that are irrelevant to the question. | Addition |
| MR2.4 | TQA is sensitive to the deleted sentences that are irrelevant to the question. | Removal |
| MR2.5 | TQA is incapable of properly understanding and processing the question-related text. | Replacement |
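
The first four passage operations in this table can be sketched as plain text transformations. This is a rough illustration under several assumptions: sentences are split with a naive punctuation-based regular expression, "Capitalization" is read as upper-casing the whole passage (by analogy with the MR1.1 question example), and deciding which sentences are irrelevant to the question is left to the caller. All function names are hypothetical.

```python
import re
from typing import List


def split_sentences(passage: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", passage.strip()) if s]


def capitalize_passage(passage: str) -> str:
    """MR2.1-style operation (assumed to mean upper-casing the passage)."""
    return passage.upper()


def reverse_sentence_order(passage: str) -> str:
    """MR2.2-style operation: reverse the order of the sentences."""
    return " ".join(reversed(split_sentences(passage)))


def add_sentence(passage: str, sentence: str) -> str:
    """MR2.3-style operation: append a sentence the caller deems irrelevant."""
    return passage.rstrip() + " " + sentence


def remove_sentence(passage: str, index: int) -> str:
    """MR2.4-style operation: drop the sentence at `index` (chosen by the caller)."""
    sentences = split_sentences(passage)
    del sentences[index]
    return " ".join(sentences)
```

Each function returns a follow-up passage that can be paired with the unchanged question Q to form the follow-up input.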


| MRs | AllenNLP-TQA | Transformers-TQA | AllenNLP-VQA | CloudCV-VQA |
|---|---|---|---|---|
| MR1.1 | 65.10% | 91.11% | 10.34% | 20.14% |
| MR1.2 | 42.86% | 7.14% | * | * |
| MR1.3 | 92.98% | 3.51% | * | * |
| MR1.4 | 86.97% | 68.45% | * | * |
| MR2.1 | 67.37% | 86.86% | - | - |
| MR2.2 | 8.12% | 6.14% | - | - |
| MR2.3 | 2.05% | 0.61% | - | - |
| MR2.4 | 3.73% | 1.18% | - | - |
| MR2.5 | 33.18% | 23.99% | - | - |
| MR3.1 | - | - | 81.10% | 66.14% |
| MR3.2 | - | - | 80.79% | 62.71% |
| MR3.3 | - | - | 33.58% | 66.42% |
| MR3.4 | - | - | 55.25% | 47.68% |
| MR3.5 | - | - | 48.10% | 20.86% |
| MR3.6 | - | - | 79.54% | 62.74% |
| MR3.7 | - | - | 32.06% | 29.73% |
| MR3.8 | - | - | 32.68% | 31.51% |
| Average | 44.71% | 32.11% | 56.68% | 48.47% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tu, K.; Jiang, M.; Ding, Z. A Metamorphic Testing Approach for Assessing Question Answering Systems. Mathematics 2021, 9, 726. https://doi.org/10.3390/math9070726
