1. Introduction

Gamification is defined as a process that applies gaming elements to non-game contexts [1,2]. Among the most commonly included game elements are levels, points, memes, quests, leader boards, combat, badges, gifting, boss fights, avatars, social graphs, certificates, and content unlocking [3,4].

Many benefits of the application of gamification to teaching have been described, including by Torres-Toukoumidis et al. [

5] and Carnero [

6]: it encourages autonomous, rigorous, and methodical working; leads to healthy competition; increases the intrinsic involvement and motivation of the participants, and motivates trying again, as feedback is immediate; improves group dynamics; maintains continuous intellectual activity by interacting constantly with the computer; incorporates fun into learning; uses a high level of interdisciplinarity; combines theory and practice, facilitating knowledge acquisition; increases the use of creativity; promotes interaction with other students; develops search and information selection skills; helps in problem solving, visualising simulations; increases interest in class participation and the number of communication channels between teacher and students; drives connectivity and interoperability in mixed distance-classroom learning; combines the application with other teaching methods; improves academic performance; and modernises the educational landscape in the new digital era [

7], given the great importance of digital literacy in the modern world [

8]. It also allows students to be surveyed on any aspect related to teaching, or to determine their previous level of knowledge of a subject easily. It facilitates analysis of the results obtained during an academic year and comparison of the results with those of previous years, as well as with other subjects. It facilitates the teachers’ self-assessment of their own teaching and control of attendance of the students [

9]. Furthermore, some applications include social media, which allows students to create, share, and exchange content with classmates, and thereby create a sense of community [

10]; this goes with the fact that student assessment is undertaken in an innovative way, since the level of knowledge acquisition is measured during the learning process, and not simply given as a final mark. Moreover, gamification provides a source of data on student learning, guaranteeing more effective, accurate, and useful information for teachers, parents, administrators, and public education policy managers [

11].

In the literature review carried out on the databases Emerald, MDPI, Hindawi, Proquest, Science Direct, and Scopus using the terms “select app multicriteria”, “education game multicriteria”, and “gamification multicriteria”, the only precedents found were those of Kim [

12] and Rajak and Shaw [

13]. Kim [

12] sets out a model built via AHP to assess three gamification platforms applied to a project in a company located in South Korea using the criteria typical in the selection of business software, such as credibility of supplier, competitiveness of product, continuity of service, etc. in a model designed for business managers. Rajak and Shaw [

13] assess 10 mHealth applications on the market via AHP and fuzzy-TOPSIS, recognising that a suitable framework for assessing the efficacy of mobile applications is not to be found in the literature. Therefore, there is no study in the literature analysing the choice of gamification apps for degree-level university courses with fuzzy multicriteria techniques. However, as recognised in Boneu [

14], the selection of the e-learning platform is very important, as it identifies and defines the pedagogical methodologies that can be designed based on the tools and services they offer.

This study describes a method that combines the fuzzy Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS) with the Measuring Attractiveness by a Categorical Based Evaluation Technique (MACBETH) approach and fuzzy Shannon entropy to choose the most suitable gamification app for the Manufacturing Systems and Industrial Organisation course, taught in the second year of the degree programmes in Electrical Engineering and Industrial and Automatic Electronic Engineering at the Higher Technical School of Industrial Engineering at the Ciudad Real campus of the University of Castilla-La Mancha. The objective weights calculated with fuzzy Shannon entropy are compared with those calculated from fuzzy De Luca and Termini entropy and exponential entropy. The feasibility and validity of the proposed method are tested through the Preference Ranking Organisation METHod for Enrichment of Evaluations (PROMETHEE) II, ELimination and Choice Expressing REality (ELECTRE) III, and the fuzzy VIKOR (VIsekriterijumska optimizacija i KOmpromisno Resenje) method (some of the methods proposed by the application that recommends the most suitable Multi-Criteria Decision Analysis (MCDA) methods, developed by Wątróbski et al. [

15]).

The inclusion of fuzzy logic allows the uncertainties, ambiguities, or indecisions typical of real decision-making processes to be taken into account. The choice of fuzzy TOPSIS rather than other fuzzy multicriteria decision analysis techniques is because it has been shown to be a robust technique for handling complex real-life problems [

16] and is widely used in many areas [

17]. This is the first study in the literature to integrate the subjective weights from the judgements given by the lecturer who teaches the course, processed using the MACBETH approach, with objective weights based on objective information (fuzzy decision matrix), calculated using fuzzy Shannon entropy. In this way, the deficiencies that occur both in a subjective and an objective approach are overcome. The subjective weighted methods are indeed highly subjective and it is difficult to fully express the effectiveness of the weights in evaluating the criteria. The objective weighted methods can easily lose key information due to the limited sample of measurement data [

18]. Subsequently, fuzzy TOPSIS is used to obtain the classification of alternatives. The MACBETH approach was chosen because it provides additional tools for handling ambiguous, imprecise, or inadequate information, or the impossibility of giving precise values. In the literature, fuzzy TOPSIS is usually combined only with the subjective weighting methods AHP or fuzzy AHP to calculate the relative weights of the criteria [

19], while fuzzy TOPSIS is used to rank the alternatives; examples of these combinations can be seen in Torfi et al. [

20], Amiri [

21], Sun [

22], Kutlu and Ekmekçioğlu [

23], Senthil et al. [

24], Beikkhakhian et al. [

25], Shaverdi et al. [

26], Samanlioglu et al. [

27], and Nojavan et al. [

28]. This is because the strengths of the two methods are complementary: TOPSIS uses two reference points (the positive and negative ideal solutions) for comparison and better visualisation, while AHP gives the weightings of the criteria based on consistency ratio analysis [

29]. However, this study chose MACBETH instead of AHP because, although both methods use pairwise comparisons, the scales used by the decision maker to give judgements are different; AHP uses a 9-point ratio scale whereas MACBETH uses an ordinal scale with six semantic values. AHP uses an eigenvalue method for determining the weights, while MACBETH uses linear programming. AHP allows up to 10% inconsistency in the judgements given in each matrix, while MACBETH does not allow any inconsistency [

30]. The main advantage of MACBETH is that it provides a complete methodology for ensuring accuracy in the weightings of the criteria, such as the reference levels and the definition of a descriptor associated with each criterion; it also gives the aforementioned tools for including doubts or incomplete knowledge of the decision maker. Furthermore, MACBETH has the advantage of creating quantitative measurement scales based on qualitative judgements by linear programming.
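The AHP side of this comparison (eigenvalue-based weights and the 10% inconsistency tolerance) can be illustrated with a minimal sketch. It is not the MACBETH procedure used in this study; the function name `ahp_weights`, the power-iteration approach, and the example matrix are illustrative assumptions, and the random index values are the commonly tabulated ones for small matrices.

```python
from typing import List, Tuple

# Commonly tabulated random consistency index values for matrix orders 1 to 5.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12}


def ahp_weights(matrix: List[List[float]], iterations: int = 100) -> Tuple[List[float], float]:
    """Return (weights, consistency ratio) for a pairwise comparison matrix.

    The principal eigenvector is approximated by power iteration
    (an illustrative choice, not taken from the source).
    """
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        weights = [value / total for value in product]

    # Estimate the principal eigenvalue from A w = lambda_max w.
    product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(product[i] / weights[i] for i in range(n)) / n

    consistency_index = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    random_index = RANDOM_INDEX[n]
    consistency_ratio = consistency_index / random_index if random_index > 0 else 0.0
    return weights, consistency_ratio


# Hypothetical, perfectly consistent judgements for three criteria.
example = [[1.0, 2.0, 4.0],
           [0.5, 1.0, 2.0],
           [0.25, 0.5, 1.0]]
weights, cr = ahp_weights(example)
print(weights, cr)  # judgements are usually accepted in AHP when cr <= 0.10
```

In this sketch a consistency ratio above 0.10 would signal that the judgements should be revised, whereas MACBETH, as described above, does not accept any inconsistency.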

It is difficult, but essential, to determine the most suitable MCDA method for any given problem [

31], as none of the methods are perfect, nor can any one method be used for all decision problems [

32]. This is an important question that is still being widely discussed in the literature, but to which no answer has yet been found. The reasons may be related to the large number of MCDA methods available, both those specific to certain areas and general-purpose methods [

33]. Furthermore, different methods can give different results for the same problem [

34], even when the same weights are applied to the criteria. One reason for this is that, at times, the alternatives are very similar and are close to each other. However, it may also come about because each MCDA technique can use weights in the calculations in a different way, because the algorithms are different, because the algorithms try to scale the objectives, affecting the already-chosen weights, or because the algorithms introduce extra parameters which affect the classification [

15]. Each method may, therefore, assign a different rating, depending on its exact working, and thus the final ranking can vary from one method to another [

35]. Since the correct ranking is not known, and so cannot be compared to the results obtained, it is not possible to determine which method to choose [

36]. The decision maker thus faces a paradox by which the choice of an MCDA method becomes a decision problem in itself [

37,

38]. It should also not be forgotten that MCDA methods include subjective information provided by the decision maker, such that a change of decision maker can lead to a change in the solution [

32]. The literature agrees that a number of methods should be applied to the same problem, as in the literature review undertaken by Zavadskas et al. [

39], which states that there is a significant number of publications that apply comparative analysis of separate MCDA methods (see for example [

40,

41,

42]). If all or most of the methods agree on the first-placed alternative, it may be concluded that this alternative is the most suitable; this, however, does not lead to conclusions about how the behaviour of the methods might be generalised. As a result of this, the choice of methodology, and the framework for assessment of decisions, is a current and future line of research [

15,

38]. Initially, Guitouni and Martel [

43] produced guidelines for the selection of the most suitable MCDA, and Zanakis et al. [

34] used 12 measures of similarity of performance to compare the performance of eight MCDA methods. Subsequently, Ishizaka and Nemery [

32] showed how analysing the required input information (data and parameters), the outcomes (choice, sorting, or partial or complete ranking), and their granularity can be an approach to choosing the appropriate MCDA method. Saaty and Ergu [

38] proposed 16 criteria for evaluating a number of MCDA methods. More recently, Wątróbski et al. [

15] set out a guideline for MCDA method selection, independently of the problem domain, taking into account the lack of knowledge about the description of the situation; an online application is also proposed to assist in making this choice at

http://www.mcda.it/, accessed on 4 April 2021 [

44]. Including a set of properties of the case study described in this paper, this application proposes, from a total of 56 MCDA methods, a group of suitable methods: fuzzy TOPSIS, fuzzy VIKOR, fuzzy AHP, fuzzy Analytic Network Process (ANP), fuzzy AHP + fuzzy TOPSIS, fuzzy ANP + fuzzy TOPSIS, PROMETHEE I, PROMETHEE II, ELECTRE III, ELECTRE TRI, ELECTRE IS, Organization, Rangement Et Synthese De Donnes Relationnelles (ORESTE), etc. The proposed model, combining MACBETH with fuzzy TOPSIS, will be compared with the ranking obtained from applying PROMETHEE II, ELECTRE III, and fuzzy VIKOR.

Shannon entropy was chosen as an objective weighting method because it is a method applied widely and with success in the literature and is a data-based weight-determination technique that computes optimal criteria weights based on the initial decision matrix. Therefore, its use is recognised as enhancing the reliability of results [

45]. A comparison was made of the results obtained with objective fuzzy Shannon weights with those computed from fuzzy De Luca and Termini entropy and exponential Pal and Pal entropy.
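As a rough illustration of how entropy-based objective weights are derived from a decision matrix, the sketch below applies the classical (crisp) Shannon entropy weighting. This is a simplification: the study uses a fuzzy variant, and the function name `shannon_entropy_weights` and the example data are hypothetical.

```python
import math
from typing import List


def shannon_entropy_weights(matrix: List[List[float]]) -> List[float]:
    """Crisp Shannon entropy weights for a matrix of positive ratings.

    Rows are alternatives and columns are criteria. Criteria whose ratings
    differ more across alternatives receive larger weights.
    """
    n_alternatives = len(matrix)
    n_criteria = len(matrix[0])
    scale = 1.0 / math.log(n_alternatives)

    divergences = []
    for j in range(n_criteria):
        column = [matrix[i][j] for i in range(n_alternatives)]
        total = sum(column)
        shares = [value / total for value in column]
        entropy = -scale * sum(p * math.log(p) for p in shares if p > 0)
        # Clamp to zero to absorb floating-point noise for uniform columns.
        divergences.append(max(0.0, 1.0 - entropy))

    total_divergence = sum(divergences)
    if total_divergence == 0:
        # Every criterion is uniform: fall back to equal weights.
        return [1.0 / n_criteria] * n_criteria
    return [d / total_divergence for d in divergences]


# Hypothetical ratings: criterion 0 does not discriminate, criterion 1 does.
print(shannon_entropy_weights([[1.0, 1.0], [1.0, 9.0]]))
```

The weights depend only on the data in the decision matrix, which is why such methods are described as objective.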

TurningPoint, Socrative, Quizizz, Mentimeter, and Kahoot! are the apps assessed in this study because they are the ones most commonly used in teaching, they have a free version for the number of students signed up to the course analysed, and they do not place constraints on the number of questions that can be included in questionnaires [

46].

The article is laid out as follows. Section 2 contains a review of the literature. Next, the fuzzy TOPSIS methodology is introduced. The model built is then described, with the structuring results, the subjective weights obtained via the MACBETH approach, and the objective weights computed by fuzzy Shannon entropy, fuzzy De Luca and Termini entropy, and exponential Pal and Pal entropy, with a prior introduction to all methods. The intermediate decision matrices resulting from applying fuzzy TOPSIS are then shown. Finally, the results, the validity of the proposed method, the sensitivity analysis, the conclusions, and future lines of work are set out.

2. Literature Review

There is increasingly powerful evidence of the favourable acceptance of gamification and its effectiveness in promoting highly engaging learning experiences [

7,

47,

48]. For example, Hamari et al. [

49], in their literature review, analysed 24 empirical studies in which gamification of education or learning was the most common field of application, and all the studies considered the learning results to be mostly positive, in terms of increased motivation and participation in learning activities and enjoyment. However, some studies bring out the negative effects of greater competition, difficulties in assessing tasks, and the importance of the design characteristics of the application on the results. In their literature review of 93 studies using Kahoot!, Wang and Tahir [

50] show that gamification can have a beneficial effect on learning in K-12 and higher education, reducing student anxiety and giving favourable results for attention, confidence, concentration, engagement, enjoyment, motivation, perceived learning, and satisfaction. It also has positive effects from the point of view of teachers, such as increasing their own motivation, ease of use, support for training, assessment of the knowledge of students in real time, stimulating students to express their opinions in class, increasing class participation, or reducing the teacher’s workload. Nevertheless, they also state that there are studies that show little or no effect, and that unreliable internet connections, questions and answers that are difficult to read on projector screens, the impossibility of changing answers once they have been given, the time pressure to respond, not having enough time, and fear of losing are some of the problems mentioned by students. Licorish et al. [

10] note as results of the experiment with Kahoot! that the use of educational games probably minimises distractions and therefore improves the quality of teaching and learning beyond that which comes from the traditional teaching methods. Zainuddin et al. [

48], in their experiment with 94 students using Socrative, Quizizz, and iSpring Learn LMS, showed that its application was effective in assessing students’ learning performance, especially with the formative assessments after finishing each unit. Dell et al. [

51] describe how the performance of students during the game shows a significant correlation with the marks for the course, and also see games as fun tools to review course content, which can serve as an effective method of determining students’ understanding, progress, and knowledge. Other authors, such as Knutas et al. [

52], Iosup and Epema [

53], Laskowski [

54], and Dicheva et al. [

55] also found improvements in the marks of the participants at all levels of the education system, especially university education [

5]. Huang and Hew [

56] suggest that university students in Hong Kong were more motivated to do activities using gamification outside class, while Huang et al. [

57] worked with pre-degree students and concluded that the group with gamification-enhanced flipped learning was more likely to do pre-class and post-class activities on time, and achieved significantly better marks on the post-course test than those who did not use gamification.

Since the publication of the Gartner [

58] and IEEE [

59] reports predicting that most companies and organisations would be using gamification in the near future [

60], gamification is one of the fastest-growing industries worldwide, with multi-billion-dollar profits, and interest in gamification from the market and from users is still growing [

61]. This increasing interest in gamification has led to the production of ever more applications aimed at different fields, such as advertising, commerce, education, environmental behaviour, enterprise resource planning, exercise, intra-organisational communication and activity, government services and public engagement, science, health, marketing, etc. [

60]. Gamification applications for the education sector include: Padlet, Blinkist, BookWidgets, Brainscape, Breakout EDU, Cerebriti, Classcraft, Mentimeter, ClassDojo, Arcademics, Coursera, Minecraft: Education Edition, Duolingo, Toovari, Edmodo Gamification, Maven, Quizlet, Goose Chase, Knowre, Tinycards, Kahoot!, keySkillset, Khan Academy, Gimkit, Memrise, Pear Deck, Google Forms and Flubaroo, Play Brighter, Udemy, Quizizz, TEDEd, CodeCombat, The World Peace Game, Trivinet, SoloLearn, Class Realm, Yousician, Edpuzzle, Virtonomics, etc. [

62,

63,

64,

65,

66]; additionally, a number of applications produced for a less commercial environment or more related to research include StudyAid [

67], GamiCAD [

68], WeBWorK [

69], the online quiz system designed by Snyder and Hartig [

70] aimed at medical residents, or the gamification plugin of Domínguez et al. [

1] designed as an e-learning platform, and the number of teaching apps is expected to rise considerably in the future [

71].

However, the many apps available on the market make it difficult to choose the most suitable one for a particular degree or course. Some studies, like that of Zainuddin et al. [7], provide a literature review of gamification in the educational domain and state that the platforms and apps most commonly used in research are: ClassDojo and ClassBadges, Ribbonhero of Microsoft, Rain classroom, Quizbot, Duolingo, Kahoot! and Quizizz, Math Widgets, Google + Communities, and iSpring Learn LMS. Acuña [

72] says that FlipQuiz, Quizizz, Socrative, Kahoot, and uLearn Play are the five best applications for university students. Roger et al. [

73] state that Kahoot! and Socrative are the two applications most commonly used in teaching, while Plump and LaRosa [

74] say that Kahoot! is the most used gamification app, with more than 70 million users [

50]. In the statistical study carried out by Göksün and Gürsoy [

65], the activities gamified with Kahoot! had a more positive impact on academic performance and student engagement when compared with a control group and another group that did activities with Quizizz. It was seen that the impact of the activities carried out with Quizizz was lower than that of the instruction method used with the control group based both on academic performance and student engagement. The study in [

9] points out that the use of TurningPoint in the university subject pharmacology improves the performance of students by increasing their participation in class and fixing the knowledge provided by the teacher, as well as allowing the teacher to know what aspects of the class should be better explained, before taking the concepts as known. TurningPoint has also been used in the Faculty of Economics of the University of Valencia in different subjects and teaching sessions, with the result that 82.8% of students consider that its use in class is useful for the development and understanding of the subject; in addition, the participation of the attendees increased, since more than 90% of them participated in using the tool, and the interaction between the audience and the speakers increased notably [

75]. Gokbulut [

76] observes that Mentimeter actively engages students in classroom activities and that they enjoy learning, as with Kahoot!. However, in Mentimeter, the personal information of the student is not collected or displayed on the teacher’s screen, so participation in class increases and students feel more comfortable, especially those who are less likely to participate due to the influence of cultural factors, gender, shyness, anxiety, or other factors such as speech impediments [

77]. In the study carried out in [

77], 68% of the students who answered indicated that Mentimeter did not increase learning, but other students, across disciplinary areas, stated that Mentimeter improved content retention and that most of the students increased their learning. A model is thus required that uses multiple criteria, objective and based on the perceptions of teachers and students, to facilitate decision-making in this field.

Contributions in the literature related to the selection of apps in different fields are very few; for example, Basilico et al. [

78] analyse mobile apps for diabetes self-care, because the large number makes it difficult for patients who have no tools for judgement to assess them properly. A pictorial identification scheme was developed for diabetes self-care tools, which identifies the strengths and weaknesses of a diabetes self-care app. Similarly in the area of diabetes treatment, Krishnan and Selvam [

79] use multiple regression analysis to identify success factors in diabetes smartphone apps. Mao et al. [

80] propose a behavioural change technique based on an mHealth App recommendation method to choose the most suitable mHealth apps for users. They do this by codifying information on behavioural change techniques included in each mHealth app and in a similar way for each user. They next developed a prediction model which, together with the AdaBoost algorithm, related behavioural change techniques with a possible user; this then recommends the app with the highest behavioural change technique, matching levels to a possible user. Păsărelu et al. [

81] identify 109 apps that analyse assessment, treatment, or both in attention-deficit/hyperactivity disorder. The following information was collected for each app: target population, confidentiality, available language besides English, cost, number of downloads, category, ratings, main purpose, and empirical support and type of developer. Descriptive statistics were produced for each of these categories. Robillard et al. [

82] assess mental health apps in terms of availability, readability, and privacy-related content of the privacy policies and terms of agreement. In a field other than medicine, Beck et al. [

83] identify 57 apps out of a total 2400 that target direct energy use and include an element of gamification; the apps are then assessed statistically in the categories: gamification components, game elements, and behavioural constructs.

3. Fuzzy TOPSIS

TOPSIS was developed by Hwang and Yoon [84] as a method of choosing the alternatives with the shortest distance to a positive ideal solution (PIS) and the longest distance to a negative ideal solution (NIS). While PIS is the solution preferred by the decision maker, maximising the criteria of the benefit type and minimising the criteria of the cost type, NIS acts in the opposite way. TOPSIS provides a cardinal ranking of the alternatives according to the shortest distance to PIS and the greatest distance to NIS. It also does not require the attributes to be independent [85,86]. A broad literature review including 266 studies up to the year 2012 can be seen in Behzadian et al. [87].

Subsequently, Chen [

88] adapted TOPSIS to the fuzzy environment. Fuzzy TOPSIS has been widely and successfully used in real-world decision problems [

19]. Some examples of these applications can be seen in the literature reviews carried out by Salih et al. [

89] and Palczewski and Sałabun [

19].

Bottani and Rizzi [

90] and Asuquo [

17] explain the advantages of choosing fuzzy TOPSIS as a multicriteria technique:

It is easy to understand;

It is a realistic compensatory method that can include or exclude alternatives based on hard cut-offs;

It is easy to add more criteria without the need to start again;

The mathematical notions behind fuzzy TOPSIS are simple.

However, TOPSIS and fuzzy TOPSIS have some disadvantages, such as rank reversal [

91]. That is, the ranking of the alternatives changes when an alternative is added to or removed from the hierarchy, and so the validity of the method could be in question. Furthermore, in fuzzy TOPSIS, there are no consistency and reliability checks; these aspects are highly relevant in decision-making, and their absence may lead to misleading results [

92]. The assessment of alternatives is also carried out through linguistic expressions, in which the linguistic terms must be quantified within a previously established value scale. The quantifying of qualitative values generally involves translating the standard linguistic terms into values on a previously agreed scale. Therefore, to address problems defined in this way, the uncertain information given by the linguistic terms must be taken into account [

93].

Zadeh [

94] proposed fuzzy set theory to formulate real decision problems in which alternative ratings and criteria weights cannot be precisely defined, due to the existence of: unquantifiable information, incomplete information, unobtainable information, and partial ignorance [

95].

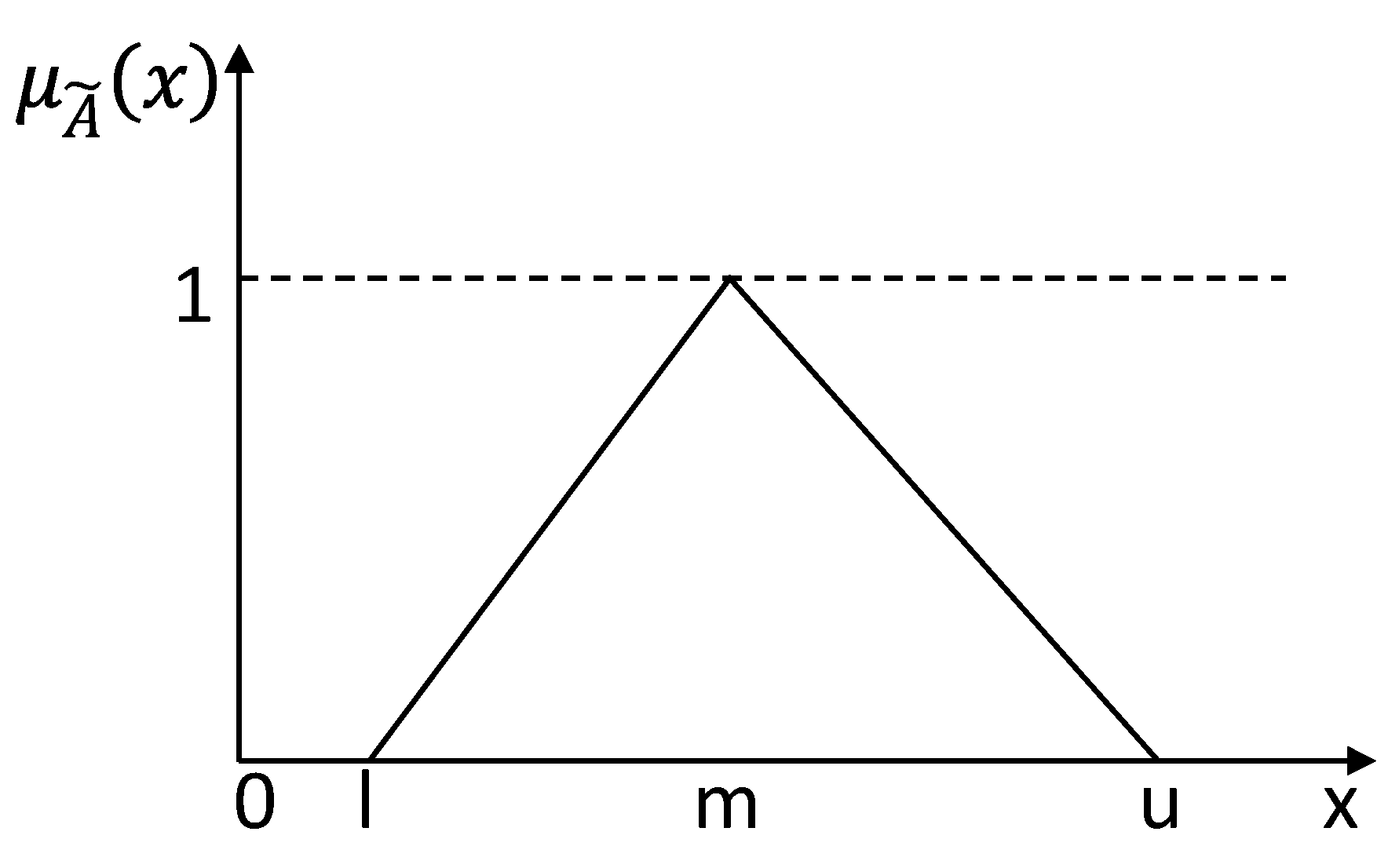

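A Triangular Fuzzy Number (TFN) $\tilde{a}$ can be defined as a triplet $(l, m, u)$ with a membership function $\mu_{\tilde{a}}(x)$, as shown in Equation (1) [88]:

$$\mu_{\tilde{a}}(x) = \begin{cases} \dfrac{x - l}{m - l}, & l \le x \le m \\ \dfrac{u - x}{u - m}, & m \le x \le u \\ 0, & \text{otherwise} \end{cases} \qquad (1)$$

where $l \le m \le u$, $l$ and $u$ are the lower and upper values of the fuzzy number $\tilde{a}$, and $m$ is the modal value (see Figure 1).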

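Let $\tilde{a} = (l_1, m_1, u_1)$ and $\tilde{b} = (l_2, m_2, u_2)$ be two TFNs. The operational laws of these triangular fuzzy numbers are given in Equations (2) to (7) [96], and the distance between the two TFNs $\tilde{a}$ and $\tilde{b}$, according to the vertex method established in Chen [88], is calculated by Equation (8):

$$d(\tilde{a}, \tilde{b}) = \sqrt{\frac{1}{3}\left[(l_1 - l_2)^2 + (m_1 - m_2)^2 + (u_1 - u_2)^2\right]} \qquad (8)$$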
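A minimal sketch of a TFN with its membership function, a few commonly stated operational laws, and the vertex distance is given below. The class name `TFN` and its methods are hypothetical, and the choice of laws shown (addition, approximate multiplication, and scaling by a non-negative constant) is an assumption that may not match every law in the original equations.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TFN:
    """Triangular fuzzy number (l, m, u) with l <= m <= u."""
    l: float
    m: float
    u: float

    def membership(self, x: float) -> float:
        """Degree of membership of x: 1 at the modal value, 0 outside (l, u)."""
        if x == self.m:
            return 1.0
        if self.l < x < self.m:
            return (x - self.l) / (self.m - self.l)
        if self.m < x < self.u:
            return (self.u - x) / (self.u - self.m)
        return 0.0

    def __add__(self, other: "TFN") -> "TFN":
        return TFN(self.l + other.l, self.m + other.m, self.u + other.u)

    def __mul__(self, other: "TFN") -> "TFN":
        # Usual approximation, valid when both numbers are non-negative.
        return TFN(self.l * other.l, self.m * other.m, self.u * other.u)

    def scale(self, k: float) -> "TFN":
        # Multiplication by a crisp constant k >= 0.
        return TFN(k * self.l, k * self.m, k * self.u)


def vertex_distance(a: TFN, b: TFN) -> float:
    """Vertex-method distance between two TFNs."""
    return math.sqrt(((a.l - b.l) ** 2 + (a.m - b.m) ** 2 + (a.u - b.u) ** 2) / 3.0)


a, b = TFN(1, 2, 3), TFN(2, 3, 4)
print(a + b, a * b, vertex_distance(a, b))
```

The vertex distance is zero only when the two TFNs coincide, which is what allows it to be used as the separation measure from the ideal solutions in fuzzy TOPSIS.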

In a decision problem with multiple criteria and alternatives, the best alternative in fuzzy TOPSIS should have the shortest distance to a fuzzy positive ideal solution (FPIS) and the farthest distance from a fuzzy negative ideal solution (FNIS). The FPIS is computed using the best performance values for each criterion and the FNIS is generated from the worst performance values.

In fuzzy TOPSIS, the criteria should satisfy one of the following conditions to ensure that they are monotonic [

17]:

As the value of the variable increases, the other variables will also increase;

As the value of the variable increases, the other variables decrease.

Monotonic criteria can be classified into benefit or cost type. A criterion can be classified as of benefit type if, the more desirable the alternative, the higher the score of the criterion. On the other hand, cost type criteria will classify the alternative as less desirable the higher its value in that criterion.

In fuzzy TOPSIS, the decision makers use linguistic variables to obtain the weightings of the criteria and the ratings of the alternatives. If there is a decision group made up of $K$ individuals, the fuzzy weight $\tilde{w}_j^k$ of the $j$th criterion and the fuzzy rating $\tilde{x}_{ij}^k$ of the $i$th alternative in the $j$th criterion given by the $k$th decision maker are defined by Equations (9) and (10), respectively, where $i$ indexes the alternatives and $j$ the criteria.

The aggregate fuzzy weights $\tilde{w}_j$ of each criterion given by the decision makers are calculated using Equation (11). Equation (12) is used to calculate the aggregate ratings of the alternatives [97].

A fuzzy multicriteria decision-making problem can be expressed in matrix form, as shown in Equation (13) [88], with the ratings $\tilde{x}_{ij}$ and the weights $\tilde{w}_j$ being linguistic variables described by triangular fuzzy numbers.

The weightings of the criteria can be calculated by assigning directly the following linguistic variables:

The ratings of the alternatives are found using the linguistic variables of Table 1 [88].

The linear scale transformation is used to transform the various criteria scales into a comparable scale, which ensures compatibility between the assessments of the criteria and the linguistic ratings of the subjective criteria [98]. Thus, we obtain the normalised fuzzy decision matrix $\tilde{R} = [\tilde{r}_{ij}]$, whose elements are computed with one expression in the case of benefit type criteria and another in the case of cost type criteria.
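The normalisation step can be sketched as follows, assuming the linear scale transformation in the form commonly attributed to Chen: benefit criteria are divided by the largest upper value in the column, and cost criteria divide the smallest lower value in the column by each component in reverse order. The function name `normalise_fuzzy_matrix` and the example ratings are hypothetical, and cost criteria are assumed to have strictly positive values.

```python
from typing import List, Tuple

Triangular = Tuple[float, float, float]  # (l, m, u)


def normalise_fuzzy_matrix(matrix: List[List[Triangular]],
                           is_benefit: List[bool]) -> List[List[Triangular]]:
    """Linear scale transformation of a fuzzy decision matrix.

    Rows are alternatives and columns are criteria.
    """
    n_alternatives = len(matrix)
    n_criteria = len(matrix[0])
    result: List[List[Triangular]] = [[(0.0, 0.0, 0.0)] * n_criteria
                                      for _ in range(n_alternatives)]
    for j in range(n_criteria):
        if is_benefit[j]:
            # Benefit type: divide by the largest upper value of the column.
            u_max = max(matrix[i][j][2] for i in range(n_alternatives))
            for i in range(n_alternatives):
                l, m, u = matrix[i][j]
                result[i][j] = (l / u_max, m / u_max, u / u_max)
        else:
            # Cost type: smallest lower value divided by (u, m, l).
            l_min = min(matrix[i][j][0] for i in range(n_alternatives))
            for i in range(n_alternatives):
                l, m, u = matrix[i][j]
                result[i][j] = (l_min / u, l_min / m, l_min / l)
    return result


# Hypothetical ratings: criterion 0 is benefit type, criterion 1 is cost type.
ratings = [[(5.0, 7.0, 9.0), (2.0, 4.0, 8.0)],
           [(3.0, 5.0, 7.0), (1.0, 2.0, 4.0)]]
print(normalise_fuzzy_matrix(ratings, [True, False]))
```

After this step every normalised TFN lies in the interval [0, 1], so ratings on different criteria become comparable.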

Next, the weighted normalised decision matrix $\tilde{V} = [\tilde{v}_{ij}]$ is calculated by multiplying the weightings of the criteria, $\tilde{w}_j$, by the elements $\tilde{r}_{ij}$ of the normalised fuzzy decision matrix.

A positive ideal point $A^+$ and a negative ideal point $A^-$ should be defined using Equations (16) and (17) [99].

The Euclidean distances $d_i^+$ and $d_i^-$ of each weighted alternative from the FPIS ($A^+$) and the FNIS ($A^-$) are computed using Equations (18) and (19) [100].

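Finally, the closeness coefficient, $CC_i$, of each alternative $i$ is calculated using Equation (20) [88]:

$$CC_i = \frac{d_i^-}{d_i^+ + d_i^-} \qquad (20)$$

where $d_i^+$ and $d_i^-$ are the distances of alternative $i$ from the FPIS and the FNIS, respectively. The ranking of alternatives is calculated considering that an alternative is closer to the FPIS and further from the FNIS as $CC_i$ approaches 1. $CC_i$ is the fuzzy satisfaction degree in the $i$th alternative, and $1 - CC_i$ is considered to be the fuzzy gap degree in the $i$th alternative.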
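The final steps (distances to the ideal points and the closeness coefficient) can be sketched as below. The sketch assumes that the distance of an alternative to each ideal point is the sum over criteria of vertex distances between TFNs, and the ideal points are passed in as arguments because their exact definition is given by the equations in the text. The function names and example data are hypothetical.

```python
import math
from typing import List, Tuple

Triangular = Tuple[float, float, float]  # (l, m, u)


def vertex_distance(a: Triangular, b: Triangular) -> float:
    """Vertex-method distance between two TFNs."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / 3.0)


def closeness_coefficients(weighted: List[List[Triangular]],
                           fpis: List[Triangular],
                           fnis: List[Triangular]) -> List[float]:
    """Closeness coefficient of each alternative (row) of the weighted matrix."""
    coefficients = []
    for row in weighted:
        d_plus = sum(vertex_distance(v, ideal) for v, ideal in zip(row, fpis))
        d_minus = sum(vertex_distance(v, anti) for v, anti in zip(row, fnis))
        coefficients.append(d_minus / (d_plus + d_minus))
    return coefficients


# Hypothetical weighted normalised matrix with three alternatives and two criteria.
weighted = [[(1.0, 1.0, 1.0), (1.0, 1.0, 1.0)],
            [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)],
            [(0.5, 0.5, 0.5), (0.5, 0.5, 0.5)]]
fpis = [(1.0, 1.0, 1.0)] * 2  # assumed ideal points for this example only
fnis = [(0.0, 0.0, 0.0)] * 2

cc = closeness_coefficients(weighted, fpis, fnis)
ranking = sorted(range(len(cc)), key=lambda i: cc[i], reverse=True)
print(cc, ranking)
```

Alternatives are ranked in decreasing order of the closeness coefficient, so the alternative that coincides with the positive ideal point in this example is ranked first.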

5. Results and Discussion

The detailed characteristics of each app included in the research are described on the official website of each application (

https://account.turningtechnologies.com/account/, accessed on 4 April 2021 [

129];

https://www.socrative.com/, accessed on 4 April 2021 [

130];

https://quizizz.com/, accessed on 4 April 2021 [

131];

https://www.mentimeter.com/, accessed on 4 April 2021 [

132] and

https://kahoot.com/, accessed on 4 April 2021 [

133]). TurningPoint and Quizizz are free applications, while Socrative has a number of versions (free, Socrative PRO for K–12 teachers, and Socrative PRO for Higher Ed & Corporate), which is also the case with Kahoot! (free, Standard, Pro, Kahoot! 360, and Kahoot! 360Pro) and Mentimeter (free, Basic, Pro, and Enterprise). This analysis used the free version of each app.

Once the weightings and their means of integration are obtained, fuzzy TOPSIS is applied to obtain the ranking of the apps. The fuzzy weighted normalised decision matrix that results from combining the objective and subjective weights of Table 4 and Table 7, respectively, using the chosen values of the two combination coefficients, is shown in Table 8. These are the values considered most appropriate and which give similar importance to objective and subjective weightings.

Since all the criteria are of the benefit type, the positive ideal point $A^+$ and the negative ideal point $A^-$ are defined from Equations (16) and (17). The Euclidean distances $d_i^+$ and $d_i^-$ of each alternative from $A^+$ and $A^-$, and the closeness coefficient $CC_i$, for the same coefficient values as in Table 8, are shown in Table 9. It can be seen that Quizizz, Socrative, and Kahoot! are ranked first, second, and third, respectively.

Table 10 shows the distances, normalised closeness coefficient, and ranking of alternatives for the same coefficient values, using objective weights from fuzzy De Luca and Termini entropy, and Table 11 includes the same parameters but applying the objective weights from exponential entropy. The same ranking in the first three positions as for Shannon entropy is obtained from exponential entropy but, in the case of De Luca and Termini entropy, Socrative is in first place in the ranking, followed by TurningPoint and Quizizz. This is quite surprising, as TurningPoint is in a lower position in the ranking with the other objective weights and MCDA techniques used to validate the method (see Section 5.1, Validity of the Proposed Method).

5.1. Validity of the Proposed Method

The feasibility and validity of the proposed method are tested through PROMETHEE II, ELECTRE III, and fuzzy VIKOR (some of the methods suggested by the application developed by Wątróbski et al. to recommend the most suitable MCDA method [

15]). Objective weights from fuzzy Shannon entropy and subjective weights from MACBETH and

and

were used in all the MCDA methods applied.

In PROMETHEE II, the type I (usual) preference function, which expresses strict immediate preference, was used for all criteria. This yields the positive

and negative

outranking flows and the net outranking flow

of alternative A, as shown in

Table 12.
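As an illustration of how the outranking flows are obtained with the type I preference function, the following Python sketch computes the positive, negative, and net flows. It assumes crisp scores, benefit-type criteria, and weights that sum to 1; the function name, alternatives, and values are hypothetical and do not reproduce Table 12.

```python
def promethee_ii_usual(scores, weights):
    """PROMETHEE II flows with the usual (type I) preference function.

    scores maps an alternative to a list of crisp values, one per criterion.
    Assumption: all criteria are benefit criteria and weights sum to 1.
    Returns a dict: alternative -> (phi_plus, phi_minus, phi_net).
    """
    alts = list(scores)
    n = len(alts)

    def preference(a, b):
        # Type I: full preference on a criterion as soon as a strictly beats b.
        return sum(w for w, x, y in zip(weights, scores[a], scores[b]) if x > y)

    flows = {}
    for a in alts:
        others = [b for b in alts if b != a]
        phi_plus = sum(preference(a, b) for b in others) / (n - 1)
        phi_minus = sum(preference(b, a) for b in others) / (n - 1)
        flows[a] = (phi_plus, phi_minus, phi_plus - phi_minus)
    return flows

# Hypothetical data: three apps, two criteria with weights 0.6 and 0.4.
example_scores = {"App A": [3, 2], "App B": [2, 3], "App C": [1, 1]}
example_flows = promethee_ii_usual(example_scores, [0.6, 0.4])
promethee_ranking = sorted(example_flows, key=lambda a: example_flows[a][2], reverse=True)
```

The complete ranking follows the net flow in decreasing order, and the net flows of all alternatives sum to zero.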

ELECTRE III builds the ranking from two antagonistic classifications (ascending and descending distillation), ordering the alternatives from best to least good and from worst to least bad. This is done using fuzzy outranking logic. The data for the alternatives were normalised to a scale of 0 to 10. Therefore, for each criterion

, the indifference

and preference thresholds

must be the same for all criteria [

134]; the veto threshold is not considered because, once the values of the alternatives with respect to the criteria have been normalised, the differences between these values are very small, so introducing high values for this parameter makes no sense in this case; in some criteria the preference threshold

plays this role.

Table 13 shows the ascending ranking (from the worst alternative to the best), the descending ranking (from the best alternative to the worst), and the average ranking (to obtain a complete ranking, the final ranking is taken to be the average of the ascending and descending rankings).

Table 14 shows the dominance matrix.

In the fuzzy VIKOR method,

, where

is the number of alternatives. The

and

are respectively the fuzzy separation of alternative

from the fuzzy best value

and the separation of alternative

from the fuzzy worst value

.

gives the fuzzy separation measure of an alternative from the best alternative.

,

, and

are defuzzified using the Centre of Area (COA) method.

The resulting crisp

,

, and

and the corresponding rankings are shown in

Table 15. The lower

, the better the alternative.
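The defuzzification and ranking step can be sketched as follows. The sketch assumes that the fuzzy indices are triangular fuzzy numbers (l, m, u), for which the Centre of Area reduces to the mean of the three vertices; the function names and values are hypothetical and do not reproduce Table 15.

```python
def centre_of_area(tfn):
    """Centre of Area (centroid) of a triangular fuzzy number (l, m, u)."""
    l, m, u = tfn
    return (l + m + u) / 3.0

def rank_by_crisp_index(fuzzy_index):
    """Defuzzify each alternative's fuzzy index and rank in ascending order.

    In VIKOR a lower index means a better alternative, so the best
    alternative comes first in the returned list.
    """
    crisp = {alt: centre_of_area(q) for alt, q in fuzzy_index.items()}
    return sorted(crisp, key=crisp.get), crisp

# Hypothetical fuzzy indices for three apps.
fuzzy_q = {
    "App A": (0.10, 0.20, 0.30),
    "App B": (0.00, 0.05, 0.25),
    "App C": (0.40, 0.60, 0.80),
}
vikor_order, crisp_q = rank_by_crisp_index(fuzzy_q)
```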

Table 16 summarises the rankings obtained with MACBETH+TOPSIS, fuzzy VIKOR, PROMETHEE II, and ELECTRE III. It can be seen that all the techniques give Quizizz as the best solution, followed by Socrative.

In order to validate the proposed method, the similarity of the rankings obtained with all the MCDA techniques used was assessed using the Value of Similarity (WS) coefficient developed by Sałabun and Urbaniak [135]. This coefficient is strongly correlated with the difference between two rankings at particular positions, and the top of the ranking has a more significant influence on similarity than the bottom. The WS coefficient is calculated using Equation (30):

$$WS = 1 - \sum_{i=1}^{N} \left( 2^{-R_{xi}} \, \frac{\left| R_{xi} - R_{yi} \right|}{\max\left\{ \left| 1 - R_{xi} \right|,\ \left| N - R_{xi} \right| \right\}} \right) \qquad (30)$$

where $N$ is the length of ranking and $R_{xi}$ and $R_{yi}$ are defined as the place in the ranking for the ith element in ranking x and ranking y, respectively. If the WS coefficient is less than 0.234, then the similarity is low, and if it is higher than 0.808, then the similarity is high [135].

Table 17 shows the WS coefficients of the ranking obtained with the method described in this research with respect to the rankings of the methods used to validate it. In all cases the similarity is high, but it is slightly higher for the ranking obtained from ELECTRE III.
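A minimal Python sketch of the WS rank similarity coefficient is given below. It follows the definition published by Sałabun and Urbaniak, in which the difference at each position is weighted by 2 raised to minus the position in the reference ranking x; the function name and the example rankings are hypothetical and are not the rankings of Table 16.

```python
def ws_coefficient(rank_x, rank_y):
    """WS similarity between two rankings given as lists of positions.

    rank_x[i] and rank_y[i] are the places (1 = best) of the same
    alternative i in the reference ranking x and in ranking y.
    Requires at least two alternatives.
    """
    n = len(rank_x)
    if n != len(rank_y) or n < 2:
        raise ValueError("rankings must have the same length (at least 2)")
    total = 0.0
    for rx, ry in zip(rank_x, rank_y):
        # Differences near the top of ranking x receive a larger weight.
        weight = 2.0 ** (-rx)
        total += weight * abs(rx - ry) / max(abs(1 - rx), abs(n - rx))
    return 1.0 - total

# Hypothetical rankings of five alternatives.
reference = [1, 2, 3, 4, 5]
ws_same = ws_coefficient(reference, [1, 2, 3, 4, 5])
ws_bottom_swap = ws_coefficient(reference, [1, 2, 3, 5, 4])
ws_top_swap = ws_coefficient(reference, [2, 1, 3, 4, 5])
```

Swapping the two top positions lowers the coefficient more than swapping the two bottom positions, which reflects the greater influence of the top of the ranking.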

5.2. Sensitivity Analysis

The sensitivity analysis performed by modifying the values of

and

can be seen in

Table 18,

Table 19 and

Table 20 with the objective weights obtained from fuzzy Shannon entropy, fuzzy De Luca and Termini entropy, and exponential entropy, respectively.

Table 18 shows that as the influence of the subjective weights increases, especially for very high values

, a permutation in the ranking of the fourth and fifth positions takes place. If only the subjective weights are considered (

), Socrative is the alternative ranked first, but in all other cases, when the objective weights are taken into account, Quizizz is the alternative chosen. Therefore, it can be seen that the subjective weights have no influence on the ranking when

and that Quizizz, Socrative, and Kahoot! are ranked in first, second, and third places, respectively, in these cases. The results demonstrate the need to combine subjective and objective weights to obtain more accurate results in the decision models, since, if only the subjective weights had been used, the results could have been misleading.

The sensitivity analysis performed by modifying the values of

and

using objective weights from fuzzy De Luca and Termini entropy is shown in

Table 19. It can be seen that, as

, Socrative is the first-placed alternative, followed by Quizizz. When only the objective weights are included, TurningPoint is the highest-valued alternative, followed by Socrative. The other alternatives also undergo changes in the ranking; for example, Kahoot! goes from last place, when only objective weights are considered, to fourth place when objective and subjective weights are given equal weight, and finally to third place when

. TurningPoint goes from being the best alternative, when only objective weights are considered, to being in second place when

, third place when

, and finally fourth place when

. It therefore seems that in this case, the results are unstable in the classification of alternatives, and the classification of an alternative may vary as the contributions of the objective or subjective weights are altered.

The sensitivity analysis performed by modifying the values of

and

using objective weights from exponential (Pal and Pal) entropy is shown in

Table 20. Quizizz and Socrative are the first and second alternatives, respectively, in all cases except when only subjective weights are considered. Kahoot!, TurningPoint, and Mentimeter are in third, fourth, and fifth place, respectively, in all cases; there is no change in the classification as the contribution of the objective and subjective weights is altered. These results show that, with these weights, the model is very stable and robust. Furthermore, these results are more in agreement with those obtained with fuzzy Shannon entropy.

A further sensitivity analysis was carried out by increasing and decreasing the weight of each criterion by 10% and 20% with respect to the values obtained from MACBETH+fuzzy Shannon entropy, while keeping the weights assigned to the other criteria unchanged, to see whether this leads to any changes in the ranking of alternatives.

Figure 7 shows the results of these variations. There is only one permutation in the ranking, between Mentimeter and TurningPoint, which move to the fifth and fourth places, respectively, when the weighting of the Control of learning criterion is decreased by 20%. The model is therefore seen to be stable.
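The one-at-a-time weight perturbation can be sketched in Python as follows. For brevity the sketch ranks alternatives with a plain weighted sum, which is a stand-in and not the fuzzy TOPSIS model of this study; any ranking function with the same signature could be passed instead. All names and values are hypothetical.

```python
def weighted_sum_ranking(scores, weights):
    """Stand-in ranking function: simple weighted sum, best alternative first."""
    totals = {a: sum(w * v for w, v in zip(weights, row)) for a, row in scores.items()}
    return sorted(totals, key=totals.get, reverse=True)

def one_at_a_time(scores, weights, rank_fn, changes=(-0.20, -0.10, 0.10, 0.20)):
    """Perturb each weight in turn and report the cases that alter the ranking.

    Only the weight of criterion j is changed; the other weights keep
    their original values (no renormalisation is applied).
    Returns the baseline ranking and a list of
    (criterion index, relative change, new ranking) tuples.
    """
    baseline = rank_fn(scores, weights)
    changed = []
    for j in range(len(weights)):
        for delta in changes:
            perturbed = list(weights)
            perturbed[j] = weights[j] * (1.0 + delta)
            new_ranking = rank_fn(scores, perturbed)
            if new_ranking != baseline:
                changed.append((j, delta, new_ranking))
    return baseline, changed

# Hypothetical data: three apps, two criteria.
demo_scores = {"App A": [0.9, 0.2], "App B": [0.5, 0.8], "App C": [0.1, 0.1]}
demo_weights = [0.58, 0.42]
baseline_ranking, permutations = one_at_a_time(demo_scores, demo_weights, weighted_sum_ranking)
```

An empty `permutations` list would indicate that no perturbation changes the ranking; the fewer entries it contains, the more stable the model is with respect to the weights.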

The results were shown to the teacher, who was asked for his opinion. He remarked that, given the characteristics of the course, subject, and student body, he too considered Quizizz the most suitable alternative.

Quizizz was therefore chosen as the gamification application for the Manufacturing Systems and Industrial Organisation course. Specifically, Quizizz was applied in the practical and problem classes as a way of recalling concepts and increasing student motivation. Students were divided into two groups (Group 1 and Group 2) to do these practical exercises and problems. The Quizizz questionnaires included an extra question asking students to assess what can be learnt with the tool.

In the first year that Quizizz was used, 59.7% of students in Group 1 considered the app good or very good for learning, with 39% of answers correct; in Group 2, 90% of students rated learning with Quizizz as good or very good, with 44% of answers correct. The following year, 73.17% of students in Group 1 rated it positively, with 52% of answers correct; 72% of the students in Group 2 rated it positively, with 61% of answers correct. Group 2 thus had better academic results than Group 1 in both years. The gamification activities were undertaken first in Group 1 and a week later in Group 2, so it seems that the students in Group 2 performed better academically once they knew what Group 1 had done. The academic results also improved from one academic year to the next, and so it is likely that they will improve by 10% in the following year.

6. Conclusions

There is ever stronger evidence of the favourable acceptance of gamification and of its effectiveness in fostering highly engaging learning experiences. The many benefits described have led to a considerable increase in the number of applications aimed at gamification in teaching. Choosing the best one for a programme or year has thus become a complex decision. Nevertheless, the literature review carried out on different databases has shown that there are no studies using fuzzy multicriteria techniques to analyse the selection of gamification apps in university courses.

This study describes a model combining fuzzy TOPSIS with the MACBETH approach and fuzzy Shannon entropy, in order to choose the most suitable gamification application in the second-year degree programmes in Electrical Engineering and Industrial and Automatic Electronic Engineering at the Higher Technical School of Industrial Engineering at the Ciudad Real campus of the University of Castilla-La Mancha (Spain).

In the literature, fuzzy TOPSIS is usually combined with AHP or fuzzy AHP, despite the many criticisms directed at AHP. However, this study is the first in the literature to combine subjective weights obtained via MACBETH with objective weights computed using fuzzy Shannon entropy, and with the fuzzy TOPSIS methodology to obtain the ranking of alternatives. MACBETH provides a complete methodology for ensuring the accuracy of the weightings of the criteria, including the reference levels and the definitions of the descriptors associated with each criterion; it also supplies a variety of tools to handle doubts or incomplete knowledge on the part of the decision maker, and to validate the results as they are obtained; furthermore, it avoids the many criticisms aimed at AHP. Additionally, weights derived from the data, computed via fuzzy Shannon entropy, are included in the study, giving greater reliability to the results. Objective weights from fuzzy De Luca and Termini entropy and from exponential entropy computed with the Pal and Pal definition are compared with those obtained from fuzzy Shannon entropy, as are the rankings obtained using these objective weights combined with the subjective weights produced by MACBETH. Exponential entropy gives the same ranking in the top three places as Shannon entropy but, in the case of De Luca and Termini entropy, Socrative is in first place in the ranking, followed by TurningPoint and Quizizz.

Objective and subjective weights are combined by assuming they are of equal importance. The results show that Quizizz, Socrative, and Kahoot! are ranked first, second, and third, respectively. The results of the proposed method are validated with PROMETHEE II, ELECTRE III, and fuzzy VIKOR. All the MCDA techniques used return Quizizz as the best solution, followed by Socrative. The similarity between the rankings of the various techniques was computed using the WS coefficient; values greater than 0.808 were obtained in all cases, indicating high similarity, although it was slightly greater for ELECTRE III.

The results obtained by the model were shown to the teacher of the subject, who also considered that Quizizz was the most suitable gamification tool. The solution was also compared with the real experience of using Quizizz over a number of academic years in a course. In the first year that it was used, an average of 74.85% of the students considered the learning experience to be very good or good. In the second year, an average of 72.59% of students considered the learning experience to be very good or good. With respect to the learning results, the average percentage of correct answers to the questionnaire was 41.5% in the first year and 56.5% in the second year.

The models, criteria, and weightings of the criteria can be used as described in this study in other courses and programmes, or, indeed, adapted to the specifics of each course.

As future lines of development, the aim is to assess further applications alongside those considered here, to see whether Quizizz is still the first choice; the alternatives assessed in this study also continuously introduce new utilities, and so their valuation with respect to some of the criteria may change. Students’ achievements with different apps will also be tested over a number of years in the course or degree programme, as an increase in learning has been detected as they are used over successive academic years. It is also intended to carry out a study of the most suitable apps for master’s degrees. New methods to obtain the objective weights could be developed and compared. Additionally, group decision-making involving all the teachers in the field the course belongs to could be incorporated into the proposed method. This could be applied to the course analysed in this study, or to other courses, and might allow the most suitable gamification apps to be identified for each subject taught. Modern MCDA methods could also be used to validate the method proposed, provided they were adapted to the characteristics of the problem described in this research. One such proposal, for example, is the Characteristic Objects METhod (COMET), which is completely free from the rank reversal phenomenon.