A Convergent Collocation Approach for Generalized Fractional Integro-Differential Equations Using Jacobi Poly-Fractonomials

Abstract

1. Introduction

1.1. Generalized Fractional Integro-Differential Equations

1.2. Preliminaries. Jacobi Poly-Fractonomials and Function Approximation

1.2.1. Definition and Properties of Shifted Jacobi Poly-Fractonomials

1.2.2. Function Approximation Using Jacobi Poly-Fractonomials

2. Collocation Method for GFIDEs

3. Convergence Analysis

4. Error Analysis

Error Estimate

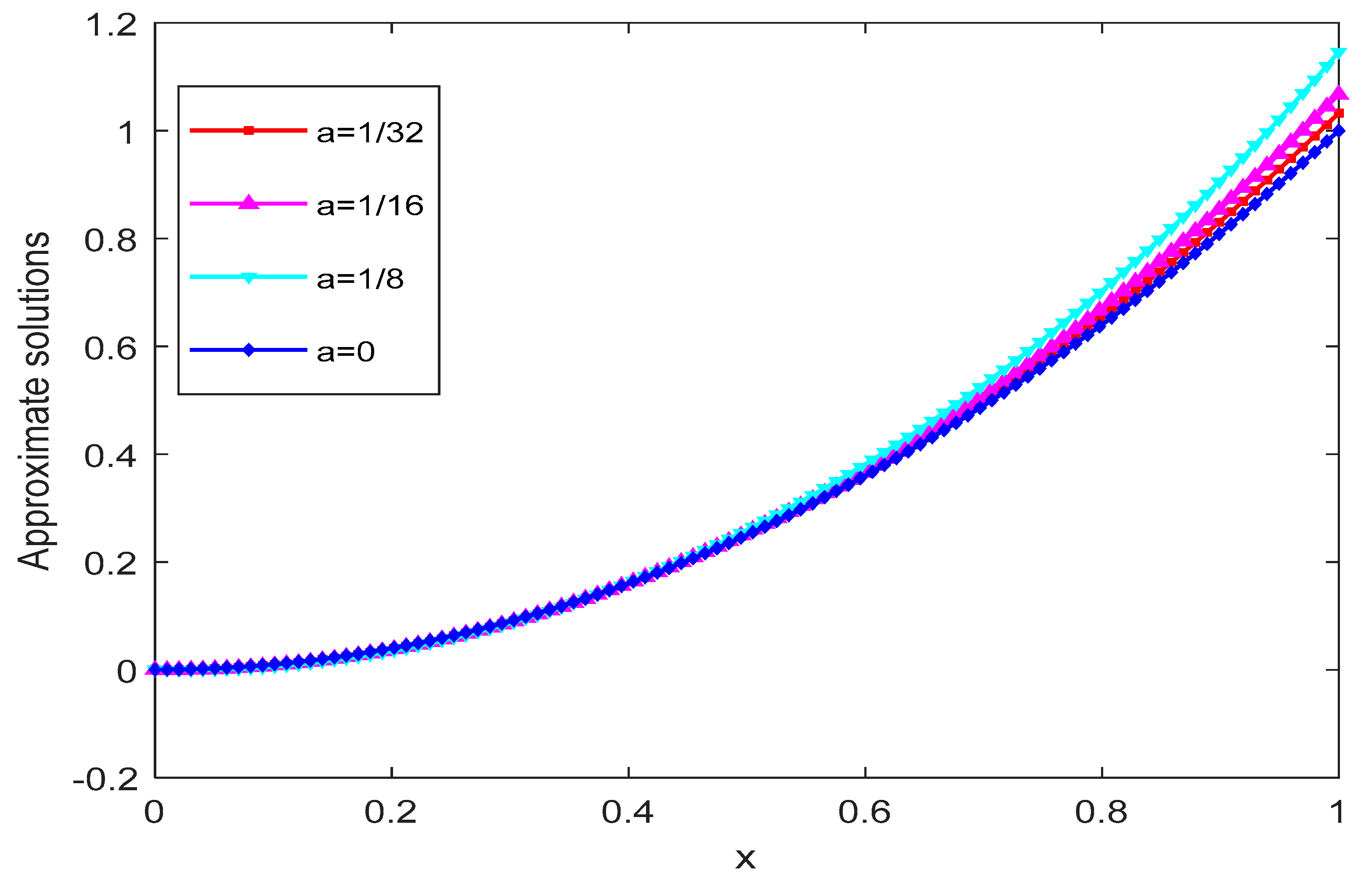

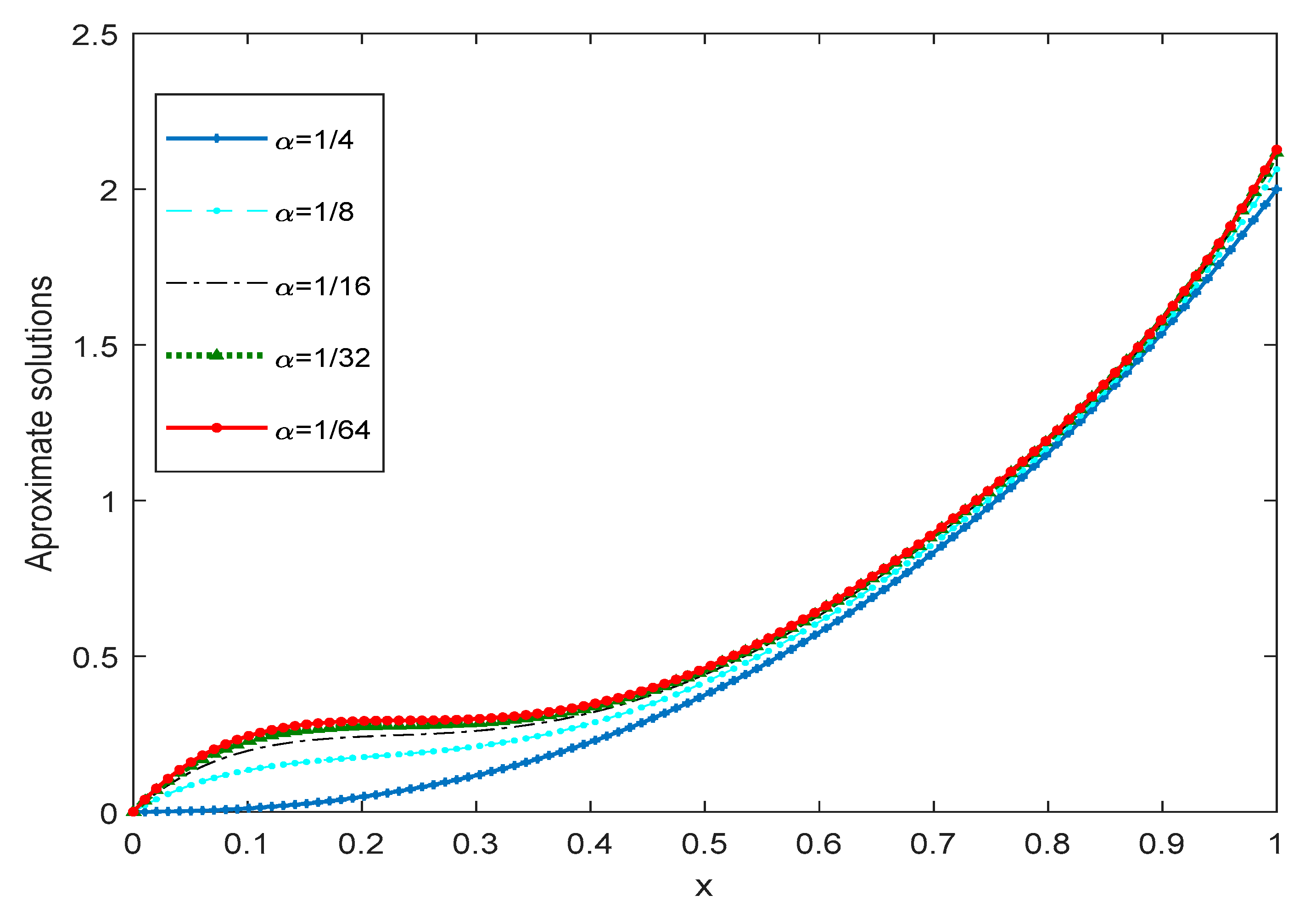

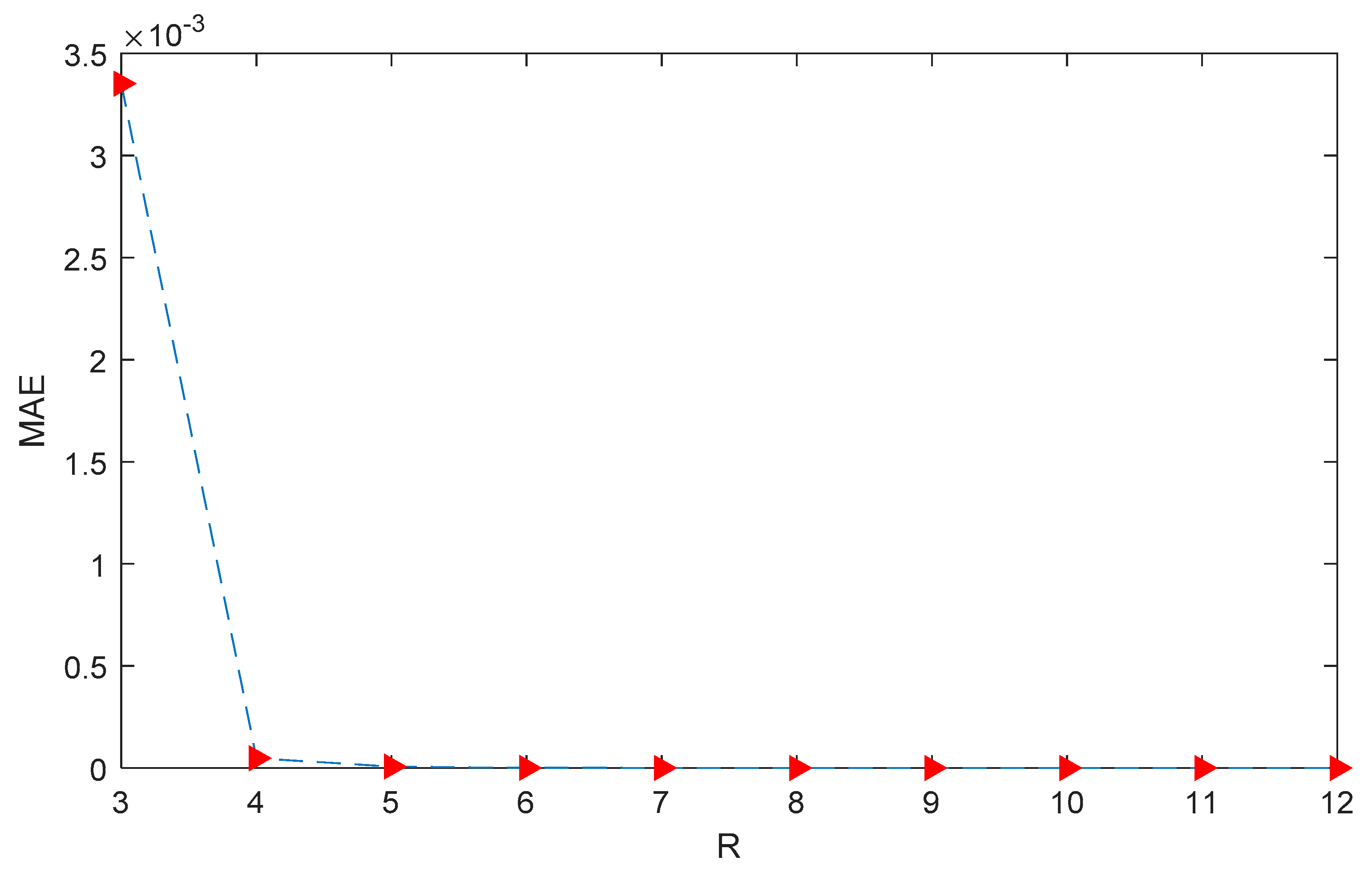

5. Numerical Examples

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Srivastava, H.M. Fractional-order derivatives and integrals: Introductory overview and recent developments. Kyungpook Math. J. 2020, 60, 73–116.

- Kreyszig, E. Introductory Functional Analysis with Applications; Wiley: New York, NY, USA, 1978.

- Podlubny, I. Fractional Differential Equations; Mathematics in Science and Engineering; Academic Press: San Diego, CA, USA, 1999; Volume 198.

- Sabatier, J.; Agrawal, O.P.; Machado, J.T. Advances in Fractional Calculus; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4.

- Oldham, K.; Spanier, J. The Fractional Calculus: Theory and Applications of Differentiation and Integration to Arbitrary Order; Elsevier: Amsterdam, The Netherlands, 1974; Volume 111.

- Oldham, K.B.; Spanier, J. The Fractional Calculus; Mathematics in Science and Engineering; Academic Press: New York, NY, USA; London, UK, 1974; Volume 111.

- Miller, K.S.; Ross, B. An Introduction to the Fractional Calculus and Fractional Differential Equations; Wiley: New York, NY, USA, 1993.

- Magin, R.L. Fractional calculus in bioengineering, part 2. Crit. Rev. Biomed. Eng. 2004, 32, 195–377.

- Goodrich, C.S. Existence of a positive solution to a system of discrete fractional boundary value problems. Appl. Math. Comput. 2011, 217, 4740–4753.

- Oldham, K.B. Fractional differential equations in electrochemistry. Adv. Eng. Softw. 2010, 41, 9–12.

- Engheta, N. On fractional calculus and fractional multipoles in electromagnetism. IEEE Trans. Antennas Propag. 1996, 44, 554–566.

- Saadatmandi, A.; Dehghan, M. A Legendre collocation method for fractional integro-differential equations. J. Vib. Control 2011, 17, 2050–2058.

- Bagley, R.L.; Torvik, P.J. Fractional calculus in the transient analysis of viscoelastically damped structures. AIAA J. 1985, 23, 918–925.

- Tarasov, V.E. Fractional integro-differential equations for electromagnetic waves in dielectric media. Theor. Math. Phys. 2009, 158, 355–359.

- Angell, J.; Olmstead, W.E. Singular perturbation analysis of an integro-differential equation modelling filament stretching. Z. Angew. Math. Phys. 1985, 36, 487–490.

- Khosro, S.; Machado, J.T.; Masti, I. On dual Bernstein polynomials and stochastic fractional integro-differential equations. Math. Methods Appl. Sci. 2020, 43, 9928–9947.

- Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations; Elsevier: Amsterdam, The Netherlands, 2006; Volume 204.

- Yanxin, W.; Zhu, L.; Wang, Z. Fractional-order Euler functions for solving fractional integro-differential equations with weakly singular kernel. Adv. Differ. Equ. 2018, 2018, 254.

- Saadatmandi, A.; Dehghan, M. A new operational matrix for solving fractional-order differential equations. Comput. Math. Appl. 2010, 59, 1326–1336.

- Kumar, K.; Pandey, R.K.; Sharma, S. Numerical Schemes for the Generalized Abel’s Integral Equations. Int. J. Appl. Comput. Math. 2018, 4, 68.

- Bonilla, B.; Rivero, M.; Trujillo, J.J. On systems of linear fractional differential equations with constant coefficients. Appl. Math. Comput. 2007, 187, 68–78.

- Momani, S.; Aslam Noor, M. Numerical methods for fourth-order fractional integro-differential equations. Appl. Math. Comput. 2006, 182, 754–760.

- Momani, S.; Qaralleh, R. An efficient method for solving systems of fractional integro-differential equations. Comput. Math. Appl. 2006, 52, 459–470.

- Bhrawy, A.; Zaky, M. Shifted fractional-order Jacobi orthogonal functions: Application to a system of fractional differential equations. Appl. Math. Model. 2016, 40, 832–845.

- Kojabad, E.A.; Rezapour, S. Approximate solutions of a sum-type fractional integro-differential equation by using Chebyshev and Legendre polynomials. Adv. Differ. Equ. 2017, 2017, 1–18.

- Rawashdeh, E.A. Numerical solution of fractional integro-differential equations by collocation method. Appl. Math. Comput. 2006, 176, 1–6.

- Syam, M.; Al-Refai, M. Solving fractional diffusion equation via the collocation method based on fractional Legendre functions. J. Comput. Methods Phys. 2014, 2014.

- Eslahchi, M.R.; Dehghan, M.; Parvizi, M. Application of the collocation method for solving nonlinear fractional integro-differential equations. J. Comput. Appl. Math. 2014, 257, 105–128.

- Sharma, S.; Pandey, R.K.; Kumar, K. Collocation method with convergence for generalized fractional integro-differential equations. J. Comput. Appl. Math. 2018, 342, 419–430.

- Odibat, Z.M. Analytic study on linear systems of fractional differential equations. Comput. Math. Appl. 2010, 59, 1171–1183.

- Ma, J.; Liu, J.; Zhou, Z. Convergence analysis of moving finite element methods for space fractional differential equations. J. Comput. Appl. Math. 2014, 255, 661–670.

- Raslan, K.R.; Ali, K.K.; Mohamed, E.M. Spectral Tau method for solving general fractional order differential equations with linear functional argument. J. Egypt. Math. Soc. 2019, 27, 33.

- Zurigat, M.; Momani, S.; Odibat, Z.; Ahmad, A. The homotopy analysis method for handling systems of fractional differential equations. Appl. Math. Model. 2010, 34, 24–35.

- Hassani, H.; Machado, J.T.; Naraghirad, E.; Sadeghi, B. Solving nonlinear systems of fractional-order partial differential equations using an optimization technique based on generalized polynomials. Comput. Appl. Math. 2020, 39, 1–19.

- Agrawal, O.P. Generalized variational problems and Euler–Lagrange equations. Comput. Math. Appl. 2010, 59, 1852–1864.

- Zayernouri, M.; Karniadakis, G.E. Fractional Sturm–Liouville eigen-problems: Theory and numerical approximation. J. Comput. Phys. 2013, 252, 495–517.

| R | Example 1 | Example 2 |
|---|---|---|
| 2 | | |
| 3 | | |
| 4 | | |

| R | Present Method | Method [29] |
|---|---|---|
| 2 | | |
| 3 | | |

| R | Present Method | Method [29] |
|---|---|---|
| 3 | | |
| 4 | | |
| 5 | | |
| 6 | | |

| R | Example 3 | Example 5 |
|---|---|---|
| 3 | | |
| 4 | | |
| 5 | | |
| 6 | | |
| 7 | | |
| 8 | | |
| 9 | | |
| 10 | | |
| 11 | | |
| 12 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kumar, S.; Pandey, R.K.; Srivastava, H.M.; Singh, G.N. A Convergent Collocation Approach for Generalized Fractional Integro-Differential Equations Using Jacobi Poly-Fractonomials. Mathematics 2021, 9, 979. https://doi.org/10.3390/math9090979
