1. Introduction

Since December 2019, COVID-19 has been featured in the media as a severe health problem. The disease is caused by Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2), a member of the coronavirus family that is transmitted through direct contact or via fomites. Symptoms of infection include fever, cough, fatigue, and a loss of taste. In some cases, the virus can cause severe problems such as pneumonia, lung disorders, and kidney malfunction. A serial interval of five to seven days and a reproduction number of two to three make the virus very dangerous [1]. Some people are healthy carriers of coronaviruses, which cause between 5% and 10% of acute respiratory infections [2]. Timely quarantine, diagnosis, and treatment of infected people are essential to stop the spread of COVID-19.

Reverse Transcription Polymerase Chain Reaction (RT-PCR) [3] and Enzyme-linked Immunosorbent Assay (ELISA) [4] are the most widely used methods for identifying the novel coronavirus. RT-PCR is the primary screening procedure for COVID-19 because it detects viral RNA in respiratory tract samples, which are collected in various ways, including nasopharyngeal and oropharyngeal swabs. However, many countries are experiencing a shortage of testing kits due to the rapid increase in the number of infected people. It is therefore prudent to consider other methods of identifying COVID-19 patients so that they can be isolated and the impact of the pandemic can be mitigated.

Computed Tomography (CT) complements RT-PCR in the diagnosis of infected people. Because most hospitals have CT scanners, CT-based COVID-19 detection can be applied efficiently to test patients, but reading the scans requires expert diagnosis and additional time. Computer-aided Diagnosis (CAD) systems can therefore be used to classify COVID-19 patients based on their chest CT images [5]. CT images can be employed for COVID-19 screening for the following reasons:

Ability to detect the disease quickly and enable rapid diagnosis.

Utilization of readily available and accessible radiological images.

Utilization of these systems in isolation rooms, which reduces the risk of transmission.

Deep Learning-based techniques have made significant progress in recent years in terms of efficiency and prediction accuracy. They have demonstrated strong generalization in complex computer-vision problems, especially in the medical and biological fields, such as organ recognition [6], bacterial colony classification [7,8], and disease identification [9]. Convolutional Neural Networks (CNNs), in particular, have shown exceptional performance in medical imaging compared to other network types [10].

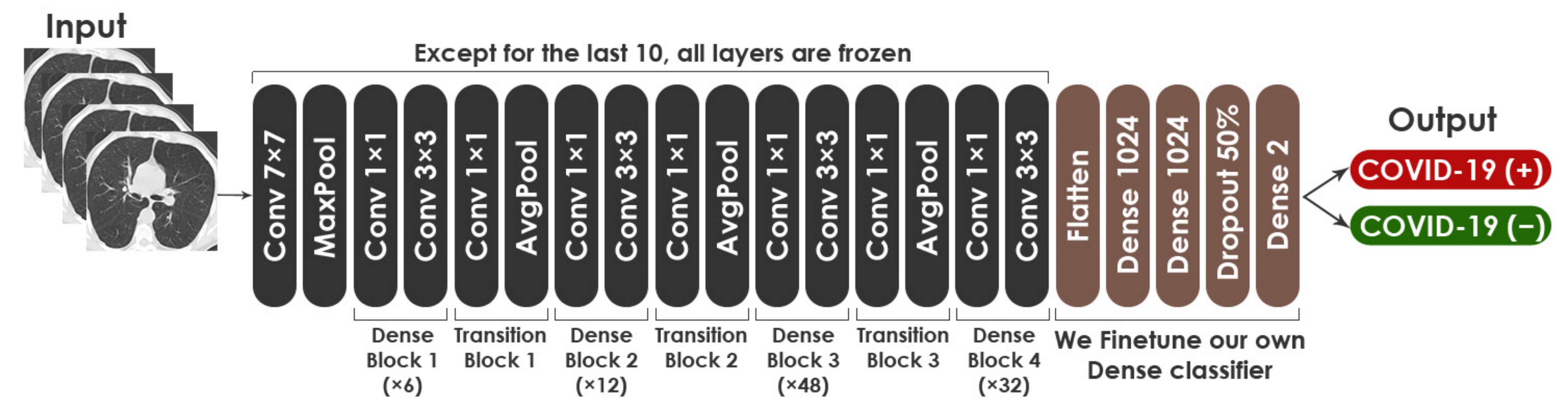

This study presents an efficient Deep Learning-based CAD system for detecting COVID-19. We combined three well-known Deep Learning models, the Visual Geometry Group (VGG)-19 [11], the Residual Network (ResNet)-50 [12], and the Densely Connected Convolutional Network (DenseNet)-201 [13], using Stacking and Weighted Average Ensemble (WAE), following the principle that a combination of various classifiers performs better than the individual classifiers. We further mitigated the shortage of training data with data augmentation [14], which enlarges the training set by adding transformed copies of the original instances. The performance of the proposed system suggests that CT images can be employed to detect COVID-19 in real-world scenarios. The contributions of this paper are as follows:

A set of Ensemble Learning-based models is proposed to detect COVID-19-infected patients, extending the standard architectures of three well-recognized CNNs by modifying their topology and selecting an optimal set of hyper-parameters for network training.

The proposed Ensemble Learning-based models were tested using two different chest CT-scan datasets.

Various strategies were used to deal with the small datasets, including fine-tuning, regularization, checkpoint callback, and data augmentation.

To the best of our knowledge, this is the first application of WAE to the specific problem of COVID-19 detection, and it achieves a high level of performance compared to existing methods.
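Data augmentation, as described above, enlarges the training set with transformed copies of the original images. The transformations actually used are not listed in this section, so the minimal, framework-free sketch below assumes horizontal flips, vertical flips, and 90° clockwise rotations; the helper names (`hflip`, `vflip`, `rot90`, `augment`) are hypothetical. Each CT slice is represented as a nested list of pixel intensities, and every transformed copy keeps the label of its original.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # a grayscale CT slice as rows of pixel intensities


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def vflip(img: Image) -> Image:
    """Mirror the image top to bottom."""
    return [list(row) for row in reversed(img)]


def rot90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*reversed(img))]


# Assumed set of label-preserving transforms (not taken from the paper).
TRANSFORMS: List[Callable[[Image], Image]] = [hflip, vflip, rot90]


def augment(dataset: List[Tuple[Image, int]]) -> List[Tuple[Image, int]]:
    """Return the original samples plus one transformed copy per transform.

    Labels are unchanged: flipping or rotating a slice does not change
    whether it shows COVID-19.
    """
    augmented = list(dataset)
    for img, label in dataset:
        for transform in TRANSFORMS:
            augmented.append((transform(img), label))
    return augmented


if __name__ == "__main__":
    data = [([[1, 2], [3, 4]], 1), ([[0, 0], [5, 6]], 0)]
    out = augment(data)
    print(len(out))  # 8: 2 originals + 2 x 3 transformed copies
    print(out[2])    # ([[2, 1], [4, 3]], 1), the horizontal flip of the first image
```

In a Keras pipeline the same idea is more commonly expressed with preprocessing layers such as `tf.keras.layers.RandomFlip` and `tf.keras.layers.RandomRotation`, which apply random transforms on the fly instead of storing fixed copies.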

The paper is organized as follows.

Section 2 discusses the related work.

Section 3 describes the three proposed Ensemble Learning-based models for detecting COVID-19 from chest CT images.

Section 4 presents the experimental results.

Section 5 discusses the results.

Finally, Section 6 concludes the paper.

2. Related Work

With the evolution of medical image processing techniques, intelligent diagnosis and prediction tools have developed at a rapid pace [15]. Machine Learning methods are widely accepted as useful tools for improving the diagnosis and prediction of many diseases [16,17]. However, conventional Machine Learning models depend on feature extraction techniques to perform well. Deep Learning models have therefore been broadly adopted in medical imaging systems because they can extract features automatically, either directly or through pre-trained models such as ResNet [18].

When COVID-19 first emerged, the main challenge was the lack of datasets for building and testing Deep Learning models [19,20]. Xu et al. [21] used a private dataset to demonstrate how chest X-rays and chest CT scans can be used to detect COVID-19; they collected a total of 618 CT samples and achieved an overall accuracy of 86.7%. Yang et al. [22] published a public dataset that includes 349 COVID-19-positive scans from 216 patients and 463 COVID-19-negative scans from 55 patients. A prominent radiologist who had been diagnosing and treating infected patients since the beginning of the epidemic confirmed the value of their dataset. Their diagnosis techniques relied on self-supervised learning and multi-task learning, and they reported an accuracy of 89% and an F1-score of 90%. Wang et al. [23] introduced an open-access benchmark dataset (COVID-x) consisting of 13,975 Chest X-ray (CXR) images across 13,870 patient cases from five open-access data repositories. Their model obtained an accuracy of 93%, which Farooq et al. [24] later improved to 96%. He et al. [25] provided another publicly available dataset comprising 349 COVID-19-positive CT images. To avoid overfitting, they proposed a self-supervised Transfer Learning technique that learns unbiased and powerful feature representations. Their method achieved an Area Under the Curve (AUC) of 94% and an F1-score of 85%.

Following the release of public chest X-ray and CT datasets, researchers focused on developing Deep Learning models with a low average classification time and high accuracy [26,27]. Loey et al. [28] combined Conditional Generative Adversarial Nets (CGAN) with classic Data Augmentation techniques in a deep Transfer Learning approach. Classical Data Augmentation and CGAN helped enlarge the CT dataset and reduce overfitting. They investigated five deep Transfer Learning models (VGGNet16, VGGNet19, ResNet50, AlexNet, and GoogleNet), and their experimental results showed that ResNet50 outperformed the other four models in detecting COVID-19 from a chest CT dataset. Polsinelli et al. [29] presented a light CNN design based on the SqueezeNet architecture to discriminate between COVID-19 and other CT scans (community-acquired pneumonia and healthy images). Their model outperformed the original SqueezeNet on both dataset arrangements, obtaining an accuracy of 83%, a precision of 81%, an F1-score of 83%, and a recall of 85%. Lokwani et al. [30] identified the site of infection using a two-dimensional segmentation model based on the U-Net architecture. Their model was trained on full CT scans from a private Indian hospital and a set of open-source images available as individual CT slices. They reported a specificity of 0.88 (95% Confidence Interval: 0.82–0.94) and a sensitivity of 0.96 (95% Confidence Interval: 0.88–1).

Another challenge is extracting features from chest CT images for COVID-19 detection [31]. Wang et al. [32] presented a joint learning strategy for COVID-19 CT identification that learns efficiently from heterogeneous datasets collected from various sources. They built a stronger backbone by redesigning the recently proposed COVID-Net in terms of both architecture and learning strategy. On top of this improved backbone, they performed separate feature normalization in latent space to reduce cross-site data heterogeneity. Their method outperformed the original COVID-Net on two large-scale public datasets. Shaban et al. [33] proposed a new hybrid feature selection method that combines wrapper and filter feature selection methods. Almost all of these models used Deep Learning to extract features [34,35,36].

Transfer Learning has been employed to achieve high accuracy and low computation time in COVID-19 detection [37]; among VGG16, VGG19, ResNet50, GoogleNet, and AlexNet, ResNet50 achieved the highest accuracy. Taresh et al. [38] evaluated the ability of different state-of-the-art pre-trained CNNs to accurately predict COVID-19-positive cases from chest X-ray scans. Their dataset includes 1200 CXR scans from COVID-19 patients, 1345 CXR scans from viral pneumonia patients, and 1341 CXR scans from healthy people. Their experimental findings showed that VGG16, MobileNet, InceptionV3, and DenseNet169 detect COVID-19 CXR images with excellent accuracy and sensitivity. Rahimzadeh et al. [39] proposed a robust method for increasing the accuracy of CNNs by adopting the ResNet50V2 network with a modified feature pyramid network. They presented a new dataset of 48,260 CT scans from 282 healthy people and 15,589 images from 95 COVID-19 patients. Their technique was evaluated in two ways: on over 7796 scans, where it reached an accuracy of 98%, and on about 245 patients and 41,892 scans of varying thicknesses, where it correctly identified 234 of the 245 patients (about 95.5%). Azemin et al. [40] used a Deep Learning approach based on the ResNet101 model. They employed thousands of readily available chest radiograph scans for training, validation, and testing and achieved an accuracy of 71%, an AUC of 82%, a specificity of 71%, and a recall of 77%.

As can be observed, most recent studies on COVID-19 detection have relied on individual Deep Learning models, e.g., AlexNet, VGG16, VGG19, ResNet50, and ResNet101 [28,38,40]. Apart from the work of Ebenezer et al. [41], none of these studies attempted to combine models to increase their detection capabilities. Ebenezer et al. proposed a stacked ensemble of four pre-trained CNNs (VGG19, ResNet101, DenseNet169, and WideResNet50-2) to detect COVID-19, built using a similarity measure and a systematic approach. On three different chest CT datasets, their system achieved high recall and accuracy, outperforming the baseline models.

Another point to note is that most of the studies mentioned above evaluated performance on a single dataset, which is not sufficient in a medical scenario such as this one [21,22,23,24,25,28,33,34,35,38,39,40].

Table 1 summarizes the aforementioned state-of-the-art methods.

In this study, we analyzed and discussed the benefits of employing ensemble techniques. By comparing the performance of Stacking and WAE, we demonstrated the superior performance of WAE. Additionally, we obtained valuable findings while modifying pre-trained VGG19, ResNet50, and DenseNet201 models and fine-tuning our own dense classifier. Moreover, we conducted experiments on two different chest CT-scan datasets and compared the performance of the individual models, the ensemble models, and existing models using the most widely used evaluation metrics in Machine Learning.

We built on Transfer Learning and ensemble techniques to achieve three major goals:

Develop a medical recognition system by applying Transfer Learning to state-of-the-art CNN models and combining them into an ensemble with two Ensemble Learning techniques, in a form that Deep Learning practitioners and researchers can readily reproduce and build on to combat COVID-19.

Achieve competitive performance by attaining high levels of accuracy, precision, recall, and F1-score on both datasets.

Present and elaborate on the limitations of dealing with small datasets in important and sensitive tasks such as diagnosing COVID-19, as well as how fine-tuning, regularization, checkpoint callback, and data augmentation techniques can be used to overcome them.
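A checkpoint callback keeps the weights from the epoch with the best validation score, which limits the damage when a model trained on a small dataset starts to overfit in later epochs. In Keras this behavior is provided by `tf.keras.callbacks.ModelCheckpoint` with `save_best_only=True`; the sketch below reproduces only the idea in plain Python. The class name `BestCheckpoint`, the monitored metric (validation accuracy), and the toy numbers are assumptions for illustration.

```python
import copy
from typing import Dict, List, Optional


class BestCheckpoint:
    """Keep a copy of the weights from the epoch with the best validation score.

    After every epoch the monitored metric is compared with the best value
    seen so far, and the weights are snapshotted only when it improves.
    """

    def __init__(self, mode: str = "max") -> None:
        if mode not in ("max", "min"):
            raise ValueError("mode must be 'max' or 'min'")
        self.mode = mode
        self.best_score: Optional[float] = None
        self.best_epoch: Optional[int] = None
        self.best_weights: Optional[Dict[str, List[float]]] = None

    def _improved(self, score: float) -> bool:
        if self.best_score is None:
            return True
        return score > self.best_score if self.mode == "max" else score < self.best_score

    def on_epoch_end(self, epoch: int, score: float, weights: Dict[str, List[float]]) -> bool:
        """Snapshot `weights` if `score` improved; return True when saved."""
        if self._improved(score):
            self.best_score = score
            self.best_epoch = epoch
            # Deep copy so that later training steps cannot alter the snapshot.
            self.best_weights = copy.deepcopy(weights)
            return True
        return False


if __name__ == "__main__":
    ckpt = BestCheckpoint(mode="max")
    # Hypothetical validation accuracies that peak at epoch 2, then overfit.
    val_acc = [0.81, 0.88, 0.93, 0.90, 0.89]
    weights = {"dense": [0.0]}
    for epoch, acc in enumerate(val_acc):
        weights["dense"][0] += 1.0  # stand-in for one epoch of training
        ckpt.on_epoch_end(epoch, acc, weights)
    print(ckpt.best_epoch, ckpt.best_score, ckpt.best_weights)  # 2 0.93 {'dense': [3.0]}
```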

5. Discussion

In this paper, we investigated two Ensemble Learning methods (Stacking and WAE) for detecting COVID-19-positive cases in chest CT images, experimenting with both two-level and three-level Stacking. Each Ensemble Learning-based model was derived from a fusion of three fine-tuned CNNs: VGG19, ResNet50, and DenseNet201. Two chest CT datasets were used to train and validate these networks. In 2-level Stacking, a Random Forest regressor served as the Meta-Learner that generates the final model. In 3-level Stacking, Random Forest and Extra Trees classifiers served as Meta-Learners at the second level, and Logistic Regression at the third level. All three methods used the same Base-Learners. The main difference between Stacking and WAE is that Stacking learns how to combine the Base-Learners through a Meta-Learner, whereas WAE has no Meta-Learner: it optimizes the weights applied to the outputs of the Base-Learners and computes their weighted average.
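The contrast between the two ensemble strategies can be sketched on toy data. This is a minimal illustration under stated assumptions, not the authors' implementation: the base-learner probabilities are invented, the WAE weights are found by an assumed grid search over non-negative weights summing to 1, and the Stacking meta-learner is a logistic regression fitted by gradient descent, standing in for the Random Forest regressor that the paper uses as the Meta-Learner of 2-level Stacking (the paper uses Logistic Regression only at the third level of 3-level Stacking). All function names are hypothetical.

```python
import itertools
import math
from typing import List, Sequence, Tuple

# probs[m][i] = probability of COVID-19 assigned to image i by base-learner m
Probs = List[List[float]]


def accuracy(scores: Sequence[float], labels: Sequence[int], threshold: float = 0.5) -> float:
    """Fraction of images whose thresholded score matches the 0/1 label."""
    return sum((s >= threshold) == bool(y) for s, y in zip(scores, labels)) / len(labels)


# ---- Weighted Average Ensemble: no meta-learner, only the weights are tuned ----
def weighted_average(probs: Probs, weights: Sequence[float]) -> List[float]:
    return [sum(w * p[i] for w, p in zip(weights, probs)) for i in range(len(probs[0]))]


def search_wae_weights(probs: Probs, labels: Sequence[int], steps: int = 10) -> Tuple[float, ...]:
    """Grid-search non-negative weights (multiples of 1/steps) summing to 1 that
    maximise validation accuracy; ties keep the first combination found."""
    best, best_acc = None, -1.0
    for combo in itertools.product(range(steps + 1), repeat=len(probs)):
        if sum(combo) != steps:
            continue
        weights = tuple(c / steps for c in combo)
        acc = accuracy(weighted_average(probs, weights), labels)
        if acc > best_acc:
            best, best_acc = weights, acc
    return best


# ---- Stacking: a meta-learner is trained on the base-learners' outputs ----
def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic_meta(probs: Probs, labels: Sequence[int], lr: float = 1.0, epochs: int = 5000) -> List[float]:
    """Logistic-regression meta-learner (bias + one coefficient per base-learner),
    fitted by full-batch gradient descent on the mean log-loss."""
    m, n = len(probs), len(labels)
    theta = [0.0] * (m + 1)
    for _ in range(epochs):
        grad = [0.0] * (m + 1)
        for i in range(n):
            x = [1.0] + [p[i] for p in probs]  # meta-features: base-learner outputs
            err = sigmoid(sum(t * xj for t, xj in zip(theta, x))) - labels[i]
            for j in range(m + 1):
                grad[j] += err * x[j] / n
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


def predict_meta(theta: Sequence[float], probs: Probs) -> List[float]:
    return [sigmoid(theta[0] + sum(t * p[i] for t, p in zip(theta[1:], probs)))
            for i in range(len(probs[0]))]


if __name__ == "__main__":
    # Hypothetical validation outputs of three base-learners on six images.
    labels = [1, 1, 1, 0, 0, 0]
    probs = [
        [0.9, 0.6, 0.4, 0.3, 0.2, 0.1],   # e.g. VGG19
        [0.7, 0.4, 0.8, 0.6, 0.1, 0.2],   # e.g. ResNet50
        [0.8, 0.7, 0.6, 0.2, 0.55, 0.3],  # e.g. DenseNet201
    ]
    print([round(accuracy(p, labels), 3) for p in probs])  # [0.833, 0.667, 0.833]
    w = search_wae_weights(probs, labels)
    print(w, accuracy(weighted_average(probs, w), labels))  # (0.0, 0.2, 0.8) 1.0
    theta = fit_logistic_meta(probs, labels)
    print(accuracy(predict_meta(theta, probs), labels))     # 1.0
```

Two design points matter in practice. The WAE weights and the meta-learner should be fitted on held-out validation predictions (out-of-fold predictions in the case of Stacking), never on the test set; the toy demo reuses the same six images only for brevity. Also, on a small validation set many weight vectors tie, so a search that keeps the first best combination can return weights, such as a zero for one model, that would not survive a larger validation split.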

The small size of the available datasets was one of the major limitations of the current study. Despite this limitation, by employing strategies such as fine-tuning, drop-out, checkpoint callback, and data augmentation, our proposed Ensemble Learning-based models kept false positives and false negatives low and detected true positives and true negatives with a high level of performance on both datasets. To the best of our knowledge, this is the first paper to use WAE to detect COVID-19 from chest CT scans. WAE was the most effective method in our experiments, with an accuracy above 98.5% on the SARS-CoV-2 CT-scan dataset [42] and above 95% on the COVID-CT dataset [22]. These values are regarded as “extremely good” in the field of medical diagnosis and may be further improved with a larger dataset.
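The reported metrics follow directly from the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN): accuracy is (TP + TN) / (TP + TN + FP + FN), precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is the harmonic mean of precision and recall. A minimal sketch, assuming binary labels with COVID-19 as the positive class (1); `binary_metrics` is a hypothetical helper, and `accuracy_score`, `precision_score`, `recall_score`, and `f1_score` in scikit-learn's `sklearn.metrics` compute the same quantities.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with COVID-19 (label 1) as positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Guard against empty denominators (e.g. a model that never predicts positive).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # also called sensitivity
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


if __name__ == "__main__":
    # Hypothetical predictions on 10 scans: TP=5, FN=1, FP=2, TN=2.
    y_true = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 1, 1, 0, 1, 1, 0, 0]
    print({k: round(v, 3) for k, v in binary_metrics(y_true, y_pred).items()})
    # {'accuracy': 0.7, 'precision': 0.714, 'recall': 0.833, 'f1': 0.769}
```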

We fine-tuned all three pre-trained CNN architectures on chest CT scans so that the networks converge quickly and learn features relevant to our study’s domain. Fine-tuning improved the performance of these networks; VGG19, in particular, achieved a high level of performance on both datasets.

Figure 20 summarizes all experimental results described in this paper.

A considerable advantage of the Ensemble Learning strategies used in this work is that they automate the randomization process, allowing researchers to investigate multiple databases and capture useful insights. Rather than being restricted to a single classifier, they build many classifiers iteratively while randomly varying the inputs, and combining several single classifiers into one yields a more adaptive prediction scheme. In addition, these strategies can help address the shortage of RT-PCR kits, since they require only a CT scanner, which is already present in the majority of hospitals around the world. As a result, countries would depend less on large shipments of RT-PCR kits.

A limitation of this work is that the models have not yet been validated in real clinical routines, so the work remains at the research stage. We therefore intend to evaluate the proposed models in clinical routine and to consult with doctors about how such a medical recognition system might fit into it.

6. Conclusions

The focus of this paper is to demonstrate how Ensemble Learning can be used for important and sensitive tasks such as diagnosing COVID-19. We proposed three Ensemble Learning-based models for COVID-19 detection from chest CT images, each combining pre-trained VGG19, ResNet50, and DenseNet201 networks. We first prepared the two datasets, then fine-tuned the pre-trained networks by unfreezing part of each model, and finally combined the modified models through Stacking and WAE. We compared performance using accuracy, precision, recall, and F1-score. The results were very encouraging, especially for the WAE method, which performed best on both publicly available chest CT-scan datasets. Consequently, we strongly recommend Ensemble Learning, and the WAE method in particular, for developing reliable models for diagnosing COVID-19 and for a variety of other medical applications.

We identify several directions for future work. First, we plan to use chest X-ray datasets to determine whether the ensemble models are more successful on chest X-rays than on chest CT scans. Second, we will explore other Ensemble methods to uncover new findings. Third, we will apply pre-processing techniques, such as a gain gradient filter or an integrated means filter, to improve the visibility of chest CT images. Lastly, we will test the proposed models in clinical practice and consult with doctors about their views on these models.