1. Introduction

Surgical navigation systems. Surgical work today routinely involves interpreting images on a computer [1,2,3]. Current surgical navigation systems integrate imaging functions to help surgeons conduct preoperative simulations [4,5,6]. For example, Finke et al. [7] combined a fully electric surgical microscope with a robotic navigation system to position the microscope accurately and repeatedly without interrupting the clinical workflow. Kockro [8] combined a handheld navigation probe, tracked by the tracking system, with a miniature camera so that real-time images from the camera could be observed and used for navigation during surgery. Birth et al. [9] combined an intraoperative ultrasound probe navigation system with an online navigation waterjet dissector for clinical liver resection. However, such computer-based navigation requires surgeons to continuously shift their attention back and forth between the screen and the patient, leading to distraction and loss of focus.

AR-based surgical navigation systems. Integrating augmented reality (AR) into surgical navigation systems can make them more intuitive to use [10]. Konishi et al. [11] developed an intraoperative ultrasound (IOUS) AR navigation system to improve surgical accuracy. Pokhrel et al. [12] incorporated AR technology to reduce cutting errors during knee replacement surgery by approximately 1 mm. Fotouhi et al. [13] proposed a head-mounted display (HMD)-based AR system designed to guide optimal surgical robotic arm setup.

AR-based surgical navigation system for pre-surgical simulation. Many AR applications in medical treatment are used for pre-surgical simulation and practice [14,15,16]. Chiou et al. [17] proposed an augmented reality system based on image target positioning, which can superimpose digital imaging and communications in medicine (DICOM) images over the patient’s head to provide more intuitive surgical assistance. Konishi et al. [11] performed pre-surgical computed tomography (CT) and magnetic resonance imaging (MRI) examinations on patients with body surface markers, using optical tracking to create 3D reconstruction images that were then superimposed on the patient during subsequent surgery. Konishi et al. [18] later combined their augmented reality navigation system with a magneto-optic hybrid 3D sensor configuration to assist surgeons in performing endoscopic surgery. Okamoto et al. [19] developed a short rigid scope for use in pancreatic surgery, allowing surgeons to obtain 3D images of organs that could then be superimposed on 3D images obtained by physicians during surgery. Tang et al. [20] applied AR technology in hepatobiliary surgery, reconstructing 3D images of liver and biliary tract structures from preoperative CT and MRI data and superimposing them on the organs during surgery, thus enhancing the surgeon’s perception of intrahepatic structures and increasing surgical precision. Volonté et al. [21] created 3D reconstructions from CT slices, which they projected onto the patient’s body to enhance spatial perception during surgery, and used image overlay navigation for laparoscopic operations such as cholecystectomy, abdominal exploration, distal pancreas resection, and robotic liver resection. Bourdel et al. [22] used AR to help physicians judge the location of organs, locating adenomyomas during laparoscopic examination by giving the virtual uterus a translucent appearance that allowed surgeons to better locate adenomyomas and determine uterine position.

AR-based surgical navigation system for brain neurosurgery. However, most AR surgical navigation methods are not applicable to brain surgery. In 2017, Zeng et al. [23] proposed a prototype system that uses SEEG to realize see-through video augmented reality (VAR) and spatial augmented reality (SAR). This system can help surgeons quickly and intuitively confirm registration accuracy, locate entry points, and visualize the internal anatomy in both the virtual image space and the actual patient space. Léger et al. [24] studied the impact of two types of AR-based image-guided surgery (mobile AR and desktop AR) and of traditional surgical navigation on attention shifts for the specific task of craniotomy planning. In 2015, Besharati Tabrizi and Mehran [25] proposed a method that uses an image projector to project an image of the patient’s skull onto the patient’s head for surgical navigation. Hou et al. [26] proposed a method to achieve augmented reality surgical navigation using a low-cost iPhone. Zhang et al. [27] developed a surgical navigation system that projects near-infrared fluorescence and ultrasound images onto Google Glass, clearly showing tumor boundaries that would otherwise be invisible to the naked eye, thus facilitating surgery. Müller et al. [28] proposed fiducial marker image navigation, using a lens to identify fiducial points and generate virtual images for percutaneous nephrolithotomy (PCNL). Prakosa et al. [29] designed AR guidance in a virtual heart to increase catheter navigation accuracy and assessed its effect on ventricular tachycardia (VT) termination. Tu et al. [30] proposed a HoloLens-to-world registration method using an external EM tracker and a customized registration cube, improving depth perception and reducing registration error.

Research Target. However, these methods do not provide a comprehensive solution for presenting the surgical target, scalpel entry point, and scalpel orientation in neurosurgery. Therefore, we propose an AR-based optical surgical navigation system for brain neurosurgery.

2. Research Purpose

Neurosurgeons currently rely on DICOM-format digital images to find the operation target, to determine the entry point, scalpel position, and depth, and to confirm the scalpel position and depth during the operation. Augmented reality surgical systems can help surgeons improve the accuracy of scalpel position and depth while enhancing the intuitiveness and efficiency of surgery. To improve the intuitiveness, efficiency, and accuracy of external ventricular drain (EVD) surgery, this paper proposes an AR-based surgical navigation system for EVD that achieves the following targets.

- (1) An AR-based virtual image is superimposed on the patient’s body, relieving the surgeon of the need to switch views.
- (2) The system directly locks onto the surgical target selected by the surgeon in the DICOM-formatted image.
- (3) The surgeon can see the surgical target image superimposed directly on the patient’s head on a tablet computer or HMD.
- (4) Once the surgeon selects the surgical entry point, it is displayed on the screen and superimposed on the patient’s head.
- (5) The scalpel orientation image is displayed on the screen and superimposed on the patient’s head.
- (6) The surgeon can change the surgical entry point at will, and the system reflects such changes in real time.
- (7) The scalpel entry point and direction are displayed in real time along with the surgeon’s navigation stick, providing accurate, real-time guidance.
- (8) On-screen color-coded prompts ensure that the surgeon uses the correct entry point and scalpel direction.
- (9) The superimposed scalpel images can be displayed on the screen before and during surgery, providing surgeons with more intuitive and accurate guidance.
- (10) Highly accurate image superimposition.
- (11) Short pre-surgical preparation time for DICOM file processing and program importing.
- (12) Short preoperative preparation time in the operating room.
- (13) System use does not extend the duration of traditional surgery.
- (14) Clinically demonstrated feasibility and efficacy, with the system ready for clinical surgical trials.

3. Materials, Methods and Implementations

The proposed AR-based neurosurgery navigation system uses a laptop computer (Windows 10 operating system, Intel Core i7-8750H CPU @ 2.20 GHz, 32 GB RAM) as a server, a tablet computer (Samsung Galaxy Tab S5e, Android 9.0 operating system, eight-core processor) as an operating panel, and an optical measurement system (NDI Polaris Vicra) for positioning, connected by a WiFi router (ASUS RT-N13U).
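The paper does not specify how the server forwards the NDI Polaris Vicra tracking data to the tablet over the WiFi router. Purely as an illustration, the sketch below assumes a hypothetical fixed-size binary message per rigid body (an integer ID, a position in millimetres, and a rotation quaternion in (w, x, y, z) order) and shows how such a message could be packed and parsed with Python's standard `struct` module. The wire layout and the names `Pose`, `pack_pose`, and `unpack_pose` are assumptions, not the authors' protocol.

```python
import struct
from typing import NamedTuple, Tuple

# Hypothetical wire layout (an assumption, not the authors' protocol):
# little-endian int32 rigid-body ID, position (x, y, z) in mm,
# and rotation quaternion (w, x, y, z), all as 64-bit floats.
POSE_FORMAT = struct.Struct("<i3d4d")


class Pose(NamedTuple):
    body_id: int
    position: Tuple[float, float, float]
    quaternion: Tuple[float, float, float, float]


def pack_pose(pose: Pose) -> bytes:
    """Serialize one rigid-body pose into a fixed-size message."""
    return POSE_FORMAT.pack(pose.body_id, *pose.position, *pose.quaternion)


def unpack_pose(data: bytes) -> Pose:
    """Parse a message produced by pack_pose back into a Pose."""
    body_id, x, y, z, qw, qx, qy, qz = POSE_FORMAT.unpack(data)
    return Pose(body_id, (x, y, z), (qw, qx, qy, qz))


if __name__ == "__main__":
    probe = Pose(1, (12.5, -40.0, 310.2), (1.0, 0.0, 0.0, 0.0))
    message = pack_pose(probe)
    print(POSE_FORMAT.size)               # 60 bytes per pose
    print(unpack_pose(message) == probe)  # True: the round trip is lossless
```

A fixed-size layout keeps parsing trivial on the tablet side; a real implementation might also carry a timestamp or frame counter so that stale poses can be discarded.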

We first verified the accuracy of the optical measurement system (NDI Polaris Vicra) and then designed functions that allow surgeons to quickly select the desired surgical target and entry point positions, with the tablet displaying superimposed images of the surgical target, entry point, and scalpel to validate the scalpel orientation.

The system can compute the corresponding 3D images for mobile devices such as AR glasses, tablet computers, and smartphones. While these three types of devices have different characteristics, this paper focuses mainly on tablet computer applications.

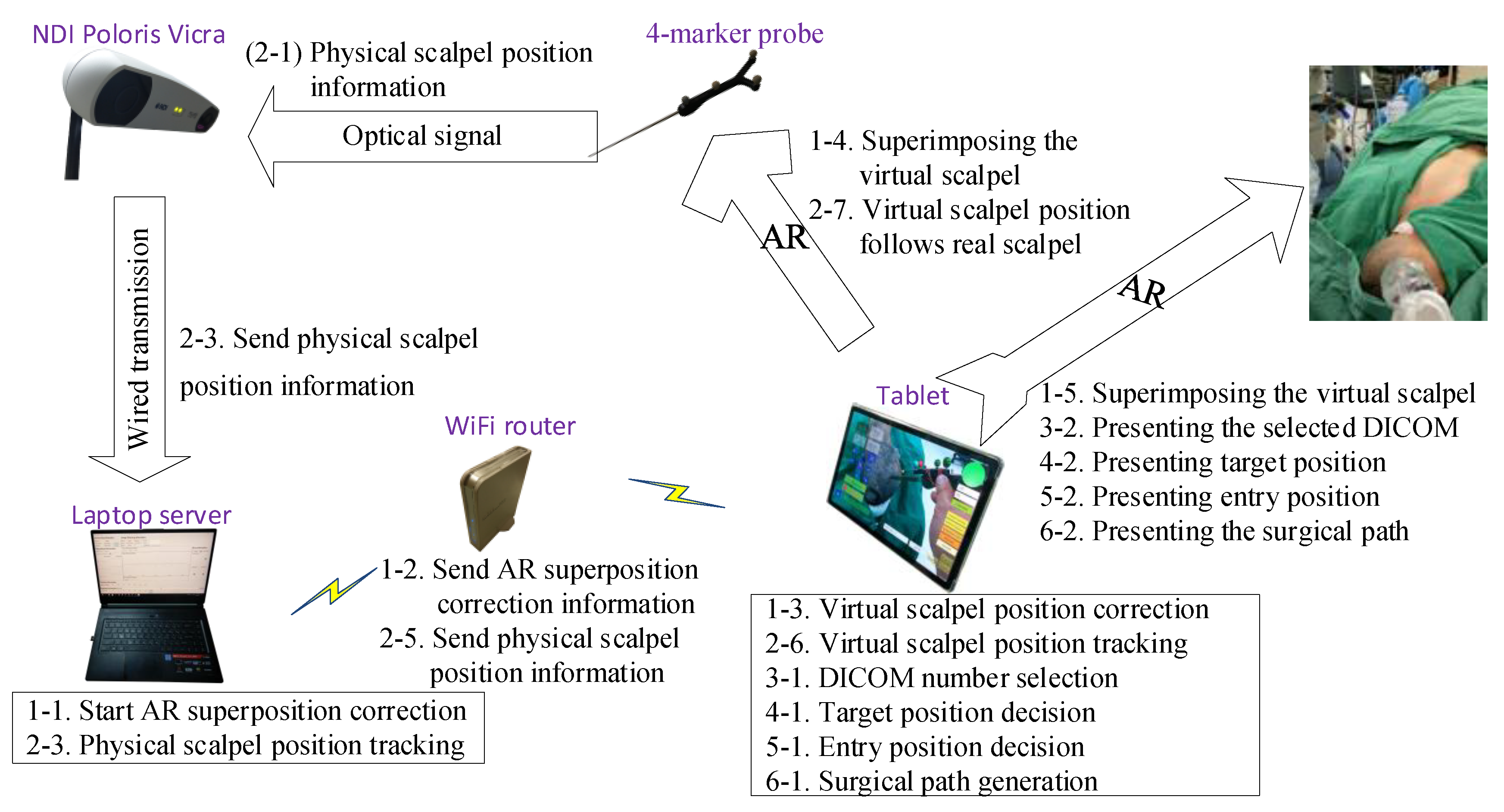

Table 1 defines the symbols and parameters used in the proposed method, and Figure 1 shows the overall architecture diagram. The detailed implementation and operation are described in the following steps.

3.1. AR Model Creation

A 4-marker scalpel model (Figure 2A) was created in Unity (a cross-platform game engine developed by Unity Technologies). An AR scalp model (Figure 2B) was created from the patient’s CT or MRI DICOM data using the Avizo image analysis software, and markers were placed on the ears and the top of the AR scalp model (Figure 2B).

3.2. AR Superimposition Accuracy

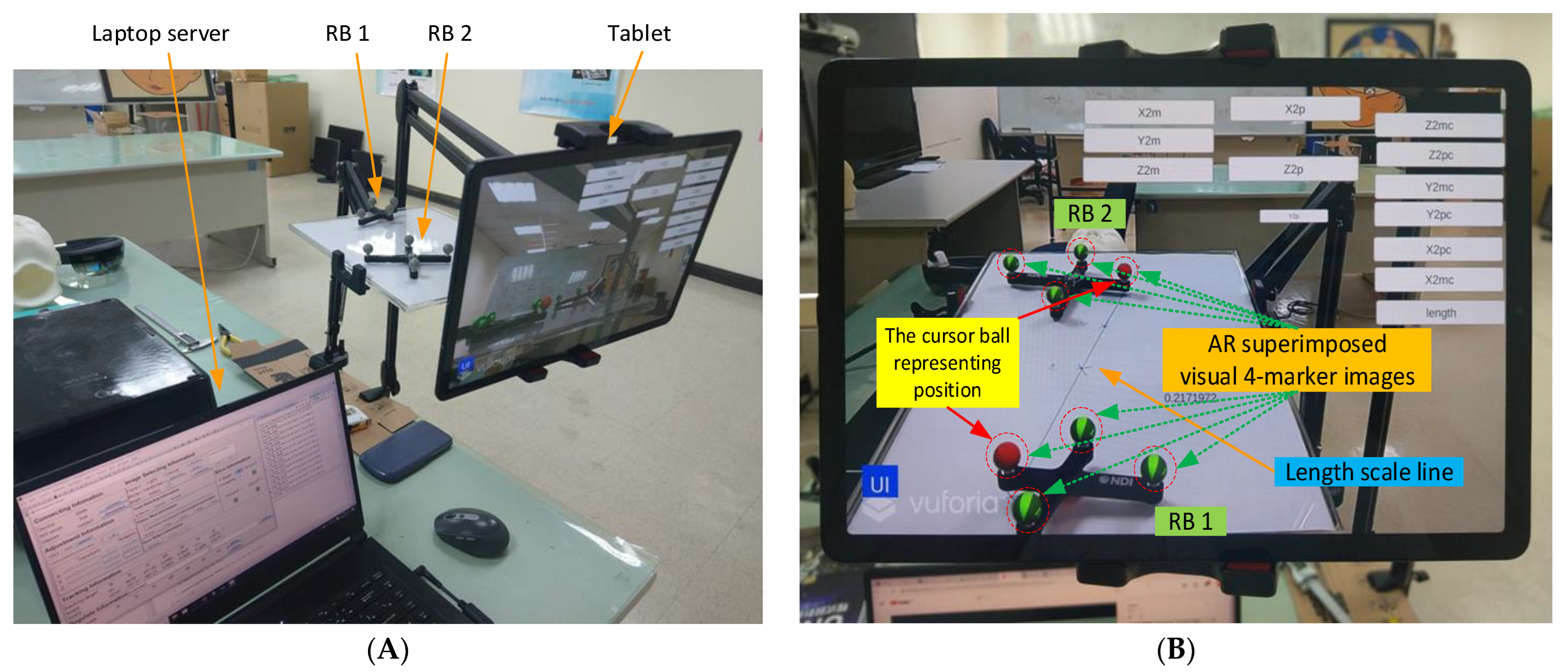

Manual AR superimposition in the preoperative phase may produce errors. To understand and minimize such errors, we evaluated the impact of each component on AR accuracy, including the laptop server, the NDI Polaris Vicra, the tablet, the wireless router, and two rigid bodies (Figure 3A).

First, two sets of AR 4-marker images were superimposed on two sets of 4-marker rigid bodies (Figure 3B). The distance between the two 4-marker rigid bodies was calculated from the coordinate data reported by the NDI Polaris Vicra (Figure 3C), and the distance between the two AR 4-marker images was calculated from the coordinate data in Unity. The two distances were then compared, and the same procedure was repeated with modified distances and angles (Figure 3D) for error testing.

Each rigid body has four marker balls, one of which indicates the body’s position; it is identified here by a red virtual AR ball (see Figure 3B). The position of this ball in the optical positioning world is the rigid-body position (coordinate data) sent by the NDI Polaris Vicra. In the virtual world, the same position corresponds to the red AR virtual ball displayed in Unity. The two rigid bodies were positioned along a graduated line at separations of 1, 2, 3, 4, and 5 cm to measure (1) their actual distance, (2) the distance calculated from the NDI Polaris Vicra data, and (3) the distance calculated from the positions displayed by Unity. These three distance values were then used to calculate the accuracy and error values. The impact of viewing angle on accuracy was assessed at angles of 0, 45, and 90 degrees (Figure 3D).
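The distance comparison described above can be sketched as follows. This is a minimal illustration under stated assumptions: positions are 3D coordinates in millimetres (the NDI or Unity coordinates of the red reference balls), the error is the absolute difference between the computed distance and the ruler distance, and the summary uses the sample standard deviation. The paper does not state whether signed or absolute errors were used, and the function names and example positions are hypothetical.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def distance_errors(actual_mm: Sequence[float],
                    reported_pairs: Sequence[Tuple[Point, Point]]) -> List[float]:
    """Absolute error between each ruler distance and the distance computed
    from the two reported reference-ball positions (NDI or Unity data)."""
    return [abs(math.dist(a, b) - d)
            for d, (a, b) in zip(actual_mm, reported_pairs)]


def mean_and_sd(errors: Sequence[float]) -> Tuple[float, float]:
    """Mean error and sample standard deviation, both in mm."""
    return statistics.mean(errors), statistics.stdev(errors)


if __name__ == "__main__":
    # Made-up positions for illustration only (not measured data).
    actual = [10.0, 20.0, 30.0]
    pairs = [((0.0, 0.0, 0.0), (11.0, 0.0, 0.0)),
             ((0.0, 0.0, 0.0), (0.0, 19.0, 0.0)),
             ((0.0, 0.0, 0.0), (0.0, 0.0, 32.0))]
    errs = distance_errors(actual, pairs)
    print(errs)  # [1.0, 1.0, 2.0]
    print(mean_and_sd(errs))
```

Applying the same function once to NDI-derived positions and once to Unity-derived positions would give the two kinds of error that the text compares.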

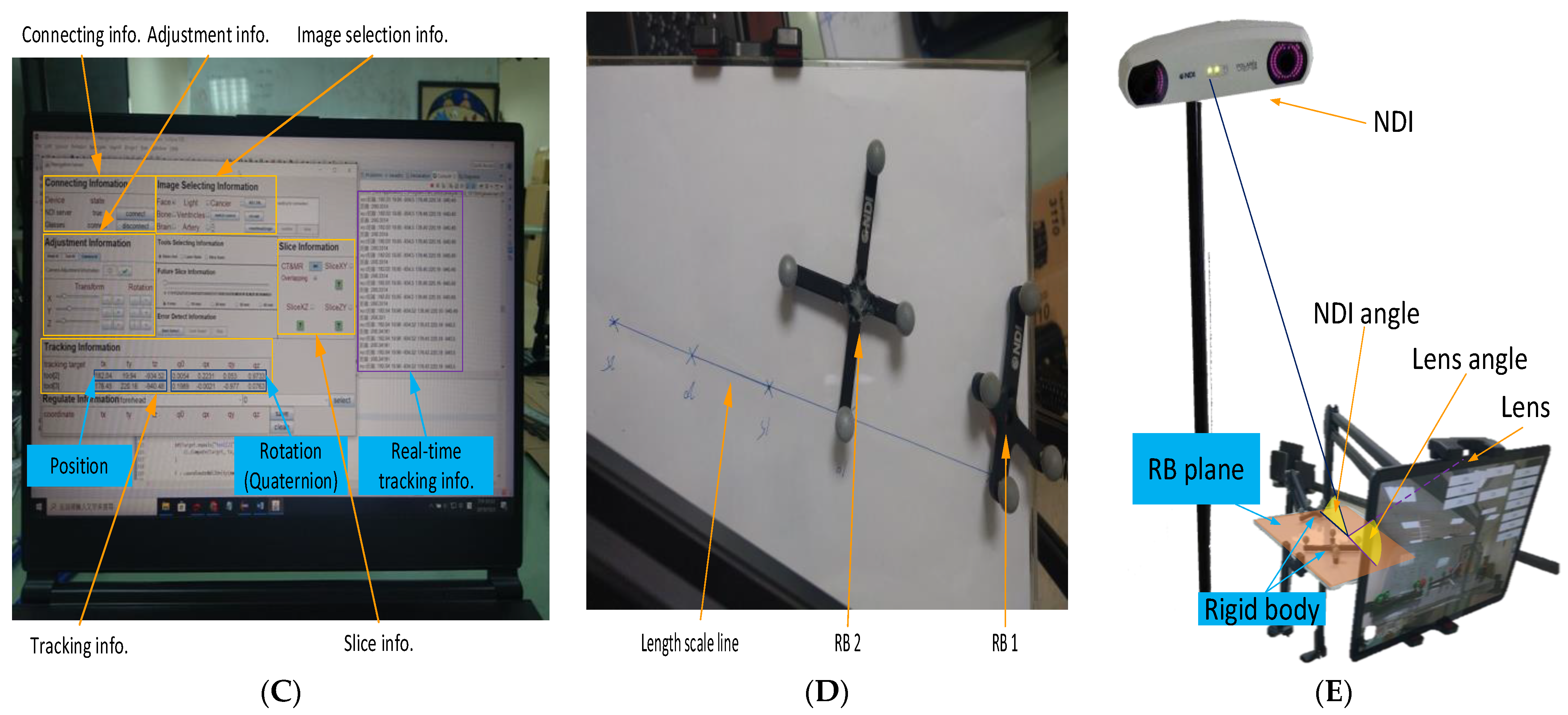

Figure 3C shows the user interface, which has eight information blocks. The connection information block is used to connect to the NDI Polaris Vicra via a wired USB cable and to the tablet via the WiFi router. The adjustment information block is used to adjust the position and rotation of the AR virtual image. The image selection information block is used to select specific parts of the head in the AR virtual image, such as the face, specific bones, the brain, or tumors. The tracking information block is used to obtain the rigid-body coordinate data sent by the NDI Polaris Vicra; it contains the three-dimensional position coordinates and the rotation as a quaternion. In addition to the relative position of the two rigid bodies, the rotation of each rigid body must also be used to calculate the relative angle between them. The slice information block is used to select slices in the various DICOM orientations (the XY, YZ, and XZ planes) for display in the AR virtual images.
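Because the tracking block reports each rigid body's rotation as a quaternion, the relative angle between two rigid bodies can be obtained directly from their two quaternions. The sketch below uses the standard identity that, for unit quaternions qa and qb, the angle of the rotation taking one orientation to the other is 2·acos(|qa · qb|). It assumes (w, x, y, z) component order; `relative_angle_deg` is a hypothetical helper, not part of the authors' program.

```python
import math
from typing import Tuple

Quaternion = Tuple[float, float, float, float]  # assumed (w, x, y, z) order


def normalize(q: Quaternion) -> Quaternion:
    """Scale a quaternion to unit length."""
    n = math.sqrt(sum(c * c for c in q))
    return (q[0] / n, q[1] / n, q[2] / n, q[3] / n)


def relative_angle_deg(qa: Quaternion, qb: Quaternion) -> float:
    """Angle (degrees) of the rotation that takes orientation qa to qb.

    The absolute value of the dot product makes q and -q, which encode the
    same rotation, give the same answer."""
    qa, qb = normalize(qa), normalize(qb)
    d = abs(sum(a * b for a, b in zip(qa, qb)))
    d = min(1.0, d)  # guard against rounding slightly above 1
    return math.degrees(2.0 * math.acos(d))


if __name__ == "__main__":
    identity = (1.0, 0.0, 0.0, 0.0)
    half = math.radians(90.0) / 2.0
    rot_z_90 = (math.cos(half), 0.0, 0.0, math.sin(half))  # 90 deg about z
    print(relative_angle_deg(identity, rot_z_90))  # approximately 90.0
```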

The three remaining blocks (tools selection, future slice, and error detection) are rarely used. They are used, respectively, to select various tools (such as a scalpel or an ultrasound probe), to display the extension of the scalpel image in the AR virtual image, and to facilitate error detection within the program. Apart from the user interface, the programming interface continuously collects tracking information (including current and past tracking information) in real time.

As shown in Figure 3E, the lens angle (or NDI angle) is the angle between the RB plane (i.e., the XZ plane) and the extension line of the tablet lens (or the NDI positioning device). Test results showed that the distance error of the NDI positioning device is smallest when the NDI angle is 90 degrees. Similarly, after adding the AR function, the Unity distance error is smallest when the lens angle is 90 degrees. However, the NDI angle and the lens angle cannot both be 90 degrees at the same time. We also found that the NDI distance error is less sensitive to changes in the NDI angle than the Unity distance error is to changes in the lens angle. Therefore, we tested the combined error of NDI and Unity by changing the orientation of the RB plane (Figure 3E). The closer the lens angle is to 90 degrees, the farther the NDI angle is from 90 degrees. Nevertheless, a lens angle of 90 degrees yields the smallest distance error and the highest stability, giving the best overall performance because the influence of the NDI angle change is reduced.
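The lens angle and NDI angle are both angles between a line (the viewing direction of the tablet lens or of the NDI device) and a plane (the RB plane). A minimal sketch of that geometry follows, assuming the plane is given by three non-collinear marker positions and the viewing direction by a 3D vector; the angle is 0 degrees when the line lies in the plane and 90 degrees when it is perpendicular to it. The range check encodes the reliable lens-angle range of 90 ± 10 degrees reported in this section. All helper names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_normal(p0: Vec3, p1: Vec3, p2: Vec3) -> Vec3:
    """Normal of the plane through three non-collinear marker positions."""
    return cross(sub(p1, p0), sub(p2, p0))


def line_plane_angle_deg(direction: Vec3, normal: Vec3) -> float:
    """Angle between a viewing line and a plane: 0 when the line lies in the
    plane, 90 when it is perpendicular to the plane."""
    s = abs(dot(direction, normal)) / math.sqrt(dot(direction, direction) * dot(normal, normal))
    return math.degrees(math.asin(min(1.0, s)))


def within_reliable_range(angle_deg: float, center: float = 90.0, tol: float = 10.0) -> bool:
    """True if the lens angle lies in the reliable 90 +/- 10 degree range."""
    return abs(angle_deg - center) <= tol


if __name__ == "__main__":
    # The RB plane is taken as the XZ plane for illustration.
    n = plane_normal((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
    for d in [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (1.0, 0.0, 0.0)]:
        a = line_plane_angle_deg(d, n)
        print(round(a, 6), within_reliable_range(a))
```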

The mean and standard deviation of the distance errors are shown in Figure 4 and Table 2, where the lens angle is the angle between the plane of the 4-marker rigid bodies and the photography direction of the tablet PC camera lens (Figure 3E). NDI distance and Unity distance denote the error (mean or standard deviation) between the “actual distance” and the “calculated distance”, where the calculated distance is obtained from the two rigid bodies as reported by the NDI Polaris Vicra and from the two AR 4-marker images as displayed by Unity, respectively. Difference is the difference between each NDI distance and the corresponding Unity distance. NDI distance works best at a lens angle of 0 degrees, while Unity distance works best at a lens angle of 90 degrees. All things considered, this AR system works best with a lens angle of 90 degrees, and the results indicate a reliable lens angle range of 90 ± 10 degrees. A lens angle of approximately 90 degrees minimizes the AR superimposition error and maximizes data stability, with a mean error of 2.01 ± 1.12 mm. (Note: Abdoh’s review article [31] found considerable variation in intracranial catheter length, from 5 to 7 cm.)

3.3. Laboratory Simulated Clinical Trials

Simulated experiments were used to revise various parameters. DICOM data from selected patients were used to create a high-accuracy head phantom (Figure 5).

The proposed system was developed in two versions, with three and two rigid bodies, respectively. In the three-rigid-body version, the three rigid bodies were mounted on the navigation stick, the bed, and the top of the tablet, and were used to position the scalpel, the head, and the tablet (i.e., the camera), respectively. The two-rigid-body version omits the tablet rigid body. The three-rigid-body version tracks the real-time movement of the tablet, but the position and rotation of all three rigid bodies, along with their relative positions and angles, must be calculated in real time, which occasionally causes screen lag. The two-rigid-body version is therefore more stable, but the tablet must remain in a static position; otherwise, the AR superimposition must be repeated to recalibrate the relative positioning information.
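In both versions, the scalpel tip has to be expressed relative to the head (bed) rigid body rather than in raw tracker coordinates, so that the overlay stays consistent when either body moves. A minimal sketch of that computation follows, assuming unit quaternions in (w, x, y, z) order and a hypothetical, pre-calibrated tip offset in the navigation stick's own frame; the paper does not describe how the tip offset is obtained, and the function names are illustrative only.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quaternion = Tuple[float, float, float, float]  # assumed (w, x, y, z), unit length


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quaternion, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q (equivalent to q * v * conj(q))."""
    w, u = q[0], (q[1], q[2], q[3])
    t = tuple(2.0 * k for k in cross(u, v))
    c = cross(u, t)
    return (v[0] + w * t[0] + c[0],
            v[1] + w * t[1] + c[1],
            v[2] + w * t[2] + c[2])


def conjugate(q: Quaternion) -> Quaternion:
    """Inverse rotation for a unit quaternion."""
    return (q[0], -q[1], -q[2], -q[3])


def tip_in_head_frame(stick_pos: Vec3, stick_rot: Quaternion, tip_offset: Vec3,
                      head_pos: Vec3, head_rot: Quaternion) -> Vec3:
    """Scalpel tip expressed in the head rigid body's coordinate frame."""
    r = rotate(stick_rot, tip_offset)
    tip_world = (stick_pos[0] + r[0], stick_pos[1] + r[1], stick_pos[2] + r[2])
    rel = (tip_world[0] - head_pos[0],
           tip_world[1] - head_pos[1],
           tip_world[2] - head_pos[2])
    return rotate(conjugate(head_rot), rel)


if __name__ == "__main__":
    half = math.radians(90.0) / 2.0
    rot_z_90 = (math.cos(half), 0.0, 0.0, math.sin(half))
    identity = (1.0, 0.0, 0.0, 0.0)
    # Stick at (100, 0, 0) turned 90 deg about z, tip 10 mm along its local x.
    print(tip_in_head_frame((100.0, 0.0, 0.0), rot_z_90, (10.0, 0.0, 0.0),
                            (0.0, 0.0, 0.0), identity))  # approximately (100, 10, 0)
```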

Laboratory tests were conducted to assess hardware and software pre-processing performance, followed by AR overlay experiments (see Figure 6). In the hardware tests, the patient’s DICOM data (147 slices with a slice thickness of 2 mm and a total file size of 74.0 MB) were converted into a 3D printer format to create a patient-specific head phantom model, including the patient’s ventricle and a head cover. The model was then printed in white nylon (see Figure 7) and placed in the experimental environment. In the software test, the surgeon was asked to identify the target position in the DICOM images, after which Avizo was used to make an AR scalp model from the DICOM data.

This target information can optionally be manually set at the target position found by the surgeon. The AR scalp model is then loaded into the tablet APP.

Software and hardware pre-processing was followed by AR-based virtual image overlay experiments. The steps in Section 3 were followed to determine the patient’s target position, which was then displayed on the tablet so that it overlapped the patient’s head. Once the entry point position has been determined, the APP automatically generates a scalpel stick azimuth auxiliary circle in the upper right of the tablet screen. The surgeon then corrects the scalpel direction until the scalpel image turns green, indicating the correct direction.

3.4. Experimental Setup

The system hardware, including the laptop server, the optical measurement system (i.e., NDI Polaris Vicra), the tablet computer, the wireless router, and the 4-marker probe (i.e., scalpel stick), was arranged around an operating bed (Figure 8).

3.5. AR-Based Virtual Image Superimposition

In the preoperative phase, a technician took 5–7 min to superimpose the virtual head image and the scalpel. First, the 4-marker scalpel model was superimposed on the 4-marker probe (Figure 9A); then the AR head model was superimposed on the patient’s head based on the three markers (right ear, left ear, and top of the head) (Figure 9B).
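In the workflow above, the head model is aligned manually by a technician using the three markers. To illustrate how the same three markers could also be used to compute the alignment automatically, the sketch below swaps in a simple closed-form three-point (triad) method: it builds an orthonormal frame from each marker triplet, derives the rigid rotation and translation between the two frames, and reports the root-mean-square marker residual as a quick check. This is not the authors' method; the marker coordinates and all function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]  # row-major 3x3 matrix


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a: Vec3) -> Vec3:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / n, a[1] / n, a[2] / n)


def frame(p0: Vec3, p1: Vec3, p2: Vec3) -> Mat3:
    """Orthonormal frame from three non-collinear points (axes as columns)."""
    x = unit(sub(p1, p0))
    z = unit(cross(x, sub(p2, p0)))
    y = cross(z, x)
    return tuple(zip(x, y, z))


def matmul(a: Mat3, b: Mat3) -> Mat3:
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3))
                 for i in range(3))


def transpose(m: Mat3) -> Mat3:
    return tuple(zip(*m))


def apply(rot: Mat3, trans: Vec3, p: Vec3) -> Vec3:
    """Map a model point into patient space: rot * p + trans."""
    return tuple(sum(rot[i][k] * p[k] for k in range(3)) + trans[i] for i in range(3))


def register_three_markers(model: Sequence[Vec3], patient: Sequence[Vec3]):
    """Rigid transform taking the three model markers onto the patient markers."""
    rot = matmul(frame(*patient), transpose(frame(*model)))
    trans = sub(patient[0], apply(rot, (0.0, 0.0, 0.0), model[0]))
    return rot, trans


def rms_residual(rot: Mat3, trans: Vec3, model, patient) -> float:
    """Root-mean-square distance between mapped model and patient markers."""
    sq = [math.dist(apply(rot, trans, m), p) ** 2 for m, p in zip(model, patient)]
    return math.sqrt(sum(sq) / len(sq))


if __name__ == "__main__":
    # Hypothetical marker coordinates (mm): left ear, right ear, top of head.
    model = [(-70.0, 0.0, 0.0), (70.0, 0.0, 0.0), (0.0, 0.0, 100.0)]
    patient = [(10.0, -50.0, 30.0), (10.0, 90.0, 30.0), (10.0, 20.0, 130.0)]
    rot, trans = register_three_markers(model, patient)
    print(rms_residual(rot, trans, model, patient))  # close to zero
```

The triad method anchors the first marker exactly and uses the other two only to fix the orientation, so with noisy markers the residual falls on those two; a least-squares fit such as the SVD-based Kabsch algorithm or Horn's quaternion method would spread the error across all markers.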

3.6. Target Position Determination

In this step, the surgeon needed about one minute to establish the target position, observing the DICOM-formatted images on a computer or directly on the tablet PC to identify the surgical target and obtain its DICOM page number (Figure 10). The surgeon then entered the DICOM page number, marked the target position by tapping the tablet screen (which displays a ball at that location), and clicked the “Set Target” button to complete target positioning (Figure 11).
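Converting the surgeon's selection (a DICOM page number plus a tap on the displayed slice) into a 3D target position can be sketched as below. This assumes axial slices stacked along z with the 2 mm slice thickness reported for the test data, a hypothetical in-plane pixel spacing of 0.5 mm, a 512 × 512 image, 1-based page numbers, and a volume origin at (0, 0, 0). In practice these values would be read from the DICOM header (for example, the Pixel Spacing and Image Position (Patient) attributes). The function names are hypothetical.

```python
from typing import Tuple


def tap_to_pixel(tap_x: float, tap_y: float, view_w: float, view_h: float,
                 image_cols: int = 512, image_rows: int = 512) -> Tuple[float, float]:
    """Map a tap on the displayed slice (screen units) to (row, col) pixel indices."""
    col = tap_x / view_w * image_cols
    row = tap_y / view_h * image_rows
    return row, col


def slice_pixel_to_mm(page: int, row: float, col: float,
                      pixel_spacing_mm: Tuple[float, float] = (0.5, 0.5),
                      slice_thickness_mm: float = 2.0,
                      origin_mm: Tuple[float, float, float] = (0.0, 0.0, 0.0)
                      ) -> Tuple[float, float, float]:
    """3D position (mm) of pixel (row, col) on 1-based DICOM page `page`."""
    if page < 1:
        raise ValueError("DICOM page numbers are assumed to start at 1")
    row_spacing, col_spacing = pixel_spacing_mm
    x = origin_mm[0] + col * col_spacing
    y = origin_mm[1] + row * row_spacing
    z = origin_mm[2] + (page - 1) * slice_thickness_mm
    return (x, y, z)


if __name__ == "__main__":
    # Surgeon taps the centre of a 1024 x 1024 view of page 74 (illustrative values).
    row, col = tap_to_pixel(512.0, 512.0, 1024.0, 1024.0)
    print(slice_pixel_to_mm(74, row, col))  # (128.0, 128.0, 146.0)
```

With 147 slices at 2 mm, the last page lies 292 mm above the first, which bounds the z range that a tapped target can take.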

3.7. Entry Point Position Setting

In this step, the surgeon selected an entry point position and set it on the tablet, an operation that took approximately one minute. The surgeon clicked the “Entry Position” button to superimpose the AR entry point image over the scalpel tip (Figure 12). After moving the scalpel tip to the desired entry point, the surgeon pressed the “Entry Position” button again to complete the setting.

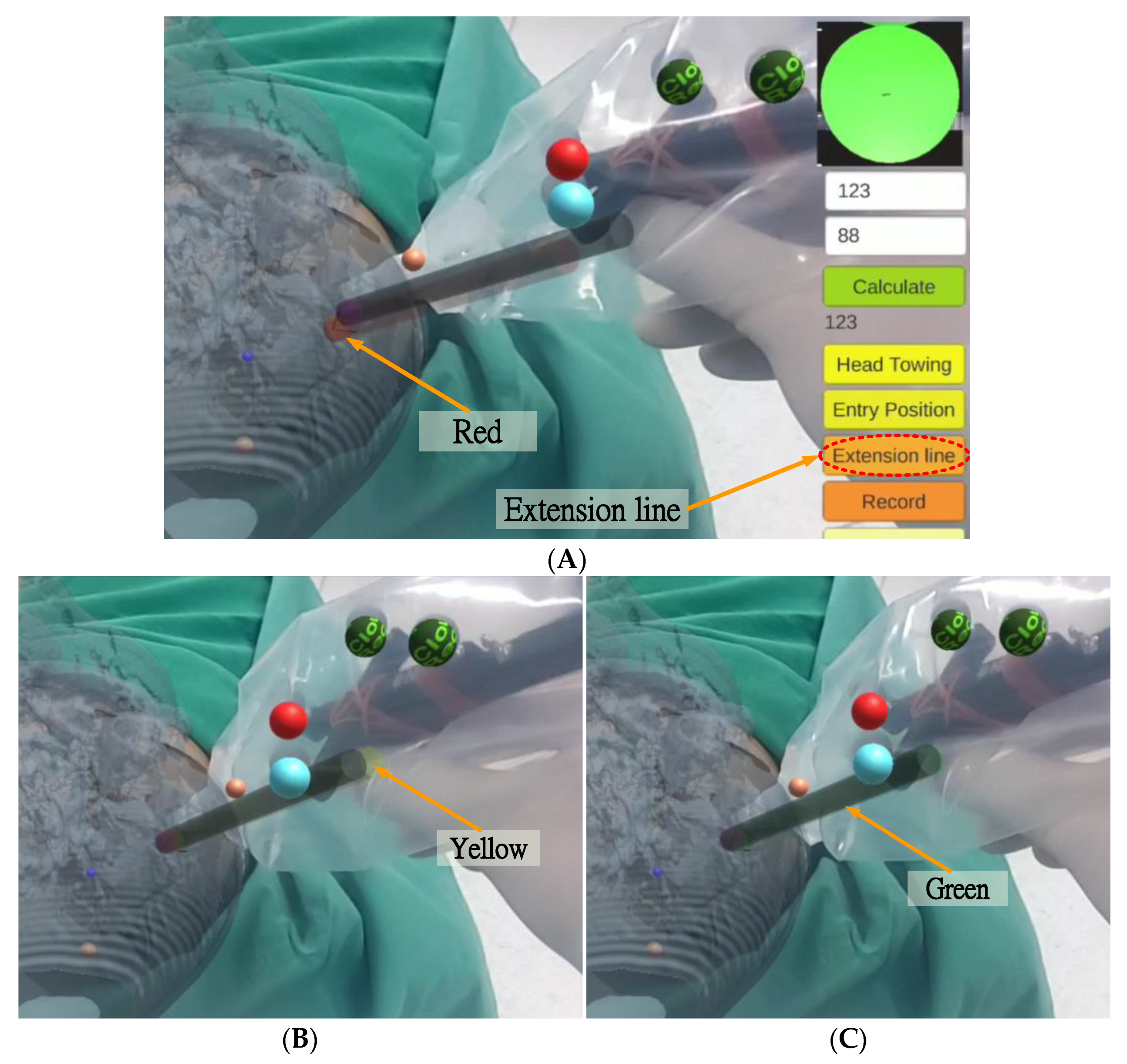

3.8. Scalpel Orientation Guidance and Correction

In this step, the tablet dynamically displays the superimposed image of the scalp, target position, entry point position, and scalpel (including the scalpel tip and scalpel spatial direction calibrator).

After setting the entry point position, the surgeon pressed the “Extension line” button, and a red scalpel stick virtual image (the virtual guide scalpel stick) appeared superimposed over the scalp as a scalpel path extension bar, indicating the intended scalpel position and angle (Figure 13A). The surgeon can fine-tune the scalpel position and angle based on the color of the virtual scalpel stick and the orientation calibrator (a green circle in the upper right corner).
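The paper does not give the formula for the virtual guide scalpel stick. A natural reading, stated here only as an assumption, is that the guide lies along the straight path from the selected entry point to the surgical target. Under that assumption, the guide direction and the insertion depth follow directly from the two points, as in this sketch (hypothetical helper name; both points in millimetres in a common frame).

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def guide_trajectory(entry: Vec3, target: Vec3) -> Tuple[Vec3, float]:
    """Unit direction from entry point to target, and the insertion depth in mm."""
    delta = (target[0] - entry[0], target[1] - entry[1], target[2] - entry[2])
    depth = math.dist(entry, target)
    if depth == 0.0:
        raise ValueError("entry point and target coincide")
    return (delta[0] / depth, delta[1] / depth, delta[2] / depth), depth


if __name__ == "__main__":
    direction, depth = guide_trajectory((0.0, 0.0, 0.0), (0.0, 30.0, 40.0))
    print(direction, depth)
```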

The color change rule of the scalpel stick virtual image is defined in terms of the virtual entry point, the virtual auxiliary line, and the virtual stick of the 4-marker scalpel model (Figure 2A), together with the associated position and direction vector, the tip point, and the tip point’s coordinates.

If the distance between the tip position of the scalpel image and the actual entry point is less than 2 mm and the angle between the scalpel and the scalpel stick virtual image is greater than 1.5 degrees, the scalpel image turns yellow (Figure 13B), indicating that the position of the scalpel tip is correct but the angle is incorrect. If the distance between the tip position of the scalpel image and the actual entry point is less than 2 mm and the angle between the scalpel and the scalpel stick virtual image is less than 1.5 degrees, the scalpel image turns green (Figure 13C), indicating that both the position of the scalpel tip and the angle are correct.
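The color rule above can be expressed as a small function. This sketch takes the tracked tip position, the set entry point, and the direction vectors of the real scalpel and the virtual guide stick, and applies the 2 mm and 1.5 degree thresholds stated in the text. Two details are assumptions because the text leaves them open: the stick is taken to stay red whenever the tip is 2 mm or more from the entry point, and an angle of exactly 1.5 degrees is treated as yellow. The function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

DIST_TOL_MM = 2.0     # tip-to-entry-point threshold from the text
ANGLE_TOL_DEG = 1.5   # scalpel-to-guide-stick angle threshold from the text


def angle_between_deg(u: Vec3, v: Vec3) -> float:
    """Angle between two direction vectors, in degrees."""
    d = u[0] * v[0] + u[1] * v[1] + u[2] * v[2]
    n = math.sqrt((u[0] ** 2 + u[1] ** 2 + u[2] ** 2) * (v[0] ** 2 + v[1] ** 2 + v[2] ** 2))
    return math.degrees(math.acos(max(-1.0, min(1.0, d / n))))


def stick_color(tip: Vec3, entry: Vec3, stick_dir: Vec3, guide_dir: Vec3) -> str:
    """Color of the virtual scalpel stick under the 2 mm / 1.5 degree rule."""
    if math.dist(tip, entry) >= DIST_TOL_MM:
        return "red"      # assumed: tip position not yet correct
    if angle_between_deg(stick_dir, guide_dir) < ANGLE_TOL_DEG:
        return "green"    # position and angle both correct
    return "yellow"       # position correct, angle incorrect


if __name__ == "__main__":
    guide = (0.0, 0.0, 1.0)
    tilted = (0.0, math.tan(math.radians(3.0)), 1.0)  # 3 degrees off the guide
    print(stick_color((0.0, 0.0, 1.0), (0.0, 0.0, 0.0), guide, guide))   # green
    print(stick_color((0.0, 0.0, 1.0), (0.0, 0.0, 0.0), tilted, guide))  # yellow
    print(stick_color((0.0, 0.0, 5.0), (0.0, 0.0, 0.0), guide, guide))   # red
```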

3.9. Scalpel Stick Azimuth Auxiliary Circle

In the upper right corner of the UI, a scalpel stick azimuth auxiliary circle assists surgeons in correcting the orientation of the surgical scalpel stick (Figure 9). From the length and angle of the line in the circle, the surgeon can infer whether the angle of the real scalpel stick is consistent with that of the virtual guide scalpel stick and adjust the orientation accordingly.

The shorter this line (i.e., the closer it is to a point), the smaller the angle difference, and the direction of the line indicates the direction in which the real scalpel stick deviates. The construction proceeds as follows.

The system first creates a virtual auxiliary circle in Unity and sets its position to the position of the virtual entry point. It then sets the rotation of the circle by applying an orthogonal rotation, so that the circle is orthogonal to the virtual guide scalpel stick.

Next, the system creates a virtual auxiliary line whose start position is the position of the auxiliary circle, whose rotation is equal to that of the circle, and whose length is the radius of the circle; the construction also uses the virtual stick of the 4-marker scalpel model (Figure 2A). Finally, the system creates a virtual scalpel stick azimuth auxiliary line and produces the scalpel stick azimuth auxiliary circle and the scalpel stick azimuth auxiliary line, which are displayed in the upper right of the UI. In this construction, the azimuth auxiliary line is obtained by vector projection onto the auxiliary circle, a normal line is taken within the circle, and the displayed circle and line correspond to the auxiliary circle and line constructed above.
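One way to obtain the behavior described for the azimuth auxiliary circle (a line that shrinks to a point as the angle difference vanishes and points in the direction of deviation) is to project the real stick's unit direction onto the plane orthogonal to the virtual guide stick and scale it by the circle radius, so that the line length is r·sin θ, where θ is the angle between the two sticks. The sketch below follows that interpretation. It is an assumption, not a reproduction of the authors' Unity construction; the in-plane basis choice, the 50-unit radius, and the function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a: Vec3) -> Vec3:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def circle_basis(guide: Vec3) -> Tuple[Vec3, Vec3]:
    """Two orthonormal axes spanning the circle plane (orthogonal to the guide)."""
    g = unit(guide)
    helper = (1.0, 0.0, 0.0) if abs(g[0]) < 0.9 else (0.0, 1.0, 0.0)
    e1 = unit(cross(g, helper))
    e2 = cross(g, e1)
    return e1, e2


def azimuth_line_end(stick: Vec3, guide: Vec3, radius: float = 50.0) -> Tuple[float, float]:
    """2D end point of the azimuth line, drawn from the circle centre.

    The real stick direction is projected onto the plane orthogonal to the
    guide stick, so the line length is radius * sin(angle between sticks)."""
    d, g = unit(stick), unit(guide)
    along = dot(d, g)
    proj = (d[0] - along * g[0], d[1] - along * g[1], d[2] - along * g[2])
    e1, e2 = circle_basis(g)
    return radius * dot(proj, e1), radius * dot(proj, e2)


if __name__ == "__main__":
    guide = (0.0, 0.0, 1.0)
    tilted = (math.sin(math.radians(30.0)), 0.0, math.cos(math.radians(30.0)))
    print(azimuth_line_end(guide, guide))                # aligned: a point at the centre
    print(math.hypot(*azimuth_line_end(tilted, guide)))  # about 25 = 50 * sin(30 deg)
```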

4. Results

This study proposes an AR-based optical surgical navigation system for use in the preoperative stage of EVD surgery that accurately superimposes virtual images of the surgical target position, scalpel entry point, and scalpel direction over the patient’s head. The superimposition is displayed on a tablet, where the color of the virtual scalpel stick and an azimuth auxiliary circle increase the accuracy, intuitiveness, and efficiency of EVD surgery. The resulting mean error is 2.01 ± 1.12 mm, an improvement over the optical positioning navigation (18.8 ± 8.56 mm) proposed by Ieiri et al. [32], the mobile AR for percutaneous nephrolithotomy (7.9 mm) proposed by Müller et al. [28], the phantom and porcine model AR evaluations (2.8 ± 2.7 and 3.52 ± 3.00 mm) proposed by Kenngott et al. [33], the AR imaging for endonasal skull base surgery (2.8 ± 2.7 mm) proposed by Lai et al. [34], and the image positioning navigation (2.5 mm) proposed by Deng et al. [35].

Table 3 shows that the proposed optical positioning method provided the best results in terms of average accuracy and standard deviation.

In addition, four clinical trials of external ventricular drain (EVD) surgery were performed using the following procedure:

- (1) DICOM acquisition. Patient CT DICOM data were obtained from Keelung Chang Gung Memorial Hospital.
- (2) AR scalp image production. Avizo was used to create a 3D virtual scalp image from the patient DICOM data.
- (3) Data import. The 3D virtual scalp images and patient DICOM data were imported into Unity and used to produce and update the tablet PC APP.
- (4) APP test and surgery simulation. After the APP update, simulated surgery was conducted to verify the correctness of the APP.
- (5) Clinical trials. Clinical trials were conducted at Keelung Chang Gung Memorial Hospital.

Steps (1) to (4) of the preparation stage required an average of 2 ± 0.5 h to complete. Step (5), the preoperative stage (including virtual image superimposition, target position determination, entry point position setting, and scalpel orientation guidance and correction), required 10 ± 2 min on average.

5. Discussion

A good surgical navigation system needs the following four properties: convenient instruments, rapid DICOM data processing, instant operation, and high precision.

The method proposed by Chiou et al. [17] enables the instant display of DICOM-formatted images superimposed on the patient’s head. However, their method is only a concept and can only place the identification map under the patient’s head. They do not report the superimposition accuracy or describe the method used to superimpose the virtual image.

Kenngott et al. [33] performed three AR evaluations: a phantom model evaluation (with a mean reprojection error of 2.8 ± 2.7 mm), a porcine model evaluation (with a mean reprojection error of 3.52 ± 3.00 mm), and a human feasibility test. Although their system outperforms other AR-like systems in terms of accuracy, they provided no accuracy data for the human test.

Besharati Tabrizi and Mehran [25] proposed a method that uses an image projector to create augmented reality by projecting an image of the patient’s skull onto the patient’s actual head, thereby enhancing surgical navigation. However, their method cannot display the scalpel’s relative position in the augmented reality view, nor can it display detailed brain tissue structures or the corresponding position and angle of the CT image.

Hou et al. [26] implemented augmented reality surgical navigation using a low-cost iPhone. However, this approach only provides AR images from specific angles, making it poorly suited to actual surgical conditions.

Zhang et al. [27] developed a surgical navigation system using near-infrared fluorescence and ultrasound images that can be automatically displayed on Google Glass, clearly showing tumor boundaries that are invisible to the naked eye. However, their current implementation still has significant shortcomings and limitations, particularly Google Glass’s short battery life, its tendency to overheat, its limited field of view, and its limited focal length. Another limitation is that the indocyanine green (ICG) used in fluorescence imaging is not a tumor-specific contrast agent, so it cannot be used in tumor-specific clinical applications.

The fiducial marker image navigation proposed by Müller et al. [28] uses lenses to identify fiducials and generate virtual images for percutaneous nephrolithotomy (PCNL). However, in addition to the time-consuming pre-surgical placement of reference points (99 s), its average error of 2.5 mm is not conducive to neurosurgical procedures such as EVD.

In summary, previously proposed methods do not present a comprehensive solution for accurate guidance on the surgical target, scalpel entry point, and scalpel orientation in brain surgery, and the proposed approach seeks to address these shortcomings.

To maximize user convenience, the proposed system uses a tablet PC as the primary AR device, ensuring ease of portability. DICOM data processing and the corresponding system update take about two hours. Surgeons can use the proposed system before and during surgery for real-time guidance on the surgical target, entry point, and scalpel path. Finally, in terms of precision, the proposed system has an average spatial error of 2.01 ± 1.12 mm, a considerable improvement on many previous methods.

6. Conclusions

This paper proposes an AR-based surgical navigation system that superimposes a virtual image over the patient, enabling surgeons to operate more intuitively and accurately without needing to shift views. The system directly locks onto the surgical target selected by the surgeon, and images of the surgical target, the scalpel entry point, and the scalpel orientation are all displayed on the screen and superimposed on the patient’s head. This gives the surgeon a highly accurate and intuitive view that changes in real time in response to the surgeon’s actions, with visual prompts that help the surgeon optimize the scalpel entry point and direction.

In addition, an on-screen image of the scalpel entry point and orientation guides the surgeon in real time, supported by color-coded on-screen prompts and an azimuth auxiliary circle. In the DICOM-formatted image display mode, the DICOM-formatted image of the operation target position can be displayed and accurately superimposed at the correct position on the virtual head. When the physician switches or moves the DICOM position, the on-screen DICOM-formatted image adjusts in real time, shifting to the new relative position.

The proposed method outperforms other existing approaches in terms of mean error (2.01 ± 1.12 mm) and standard deviation. The preparation time required before each procedure is within acceptable limits: an average of 120 ± 30 min was needed for DICOM file processing and program importing, preoperative preparation required only six minutes on average during the hospital clinical trials, and the surgeon needed only 3.5 min on average to set the target and entry point locations and accurately identify the direction with the surgical stick.

Future work will seek to further reduce the superimposition error and the time required for the preparation and preoperative stages, thus improving the clinical utility of the proposed system.

Author Contributions

H.-L.L. designed the study. Z.-Y.Z. collected the clinical data, helped design the study, and helped write the manuscript. S.-Y.C. performed the scheduling and statistical analysis, wrote the manuscript, and built the figures and tables. J.-L.Y. supervised and arranged the clinical trials. K.-C.W. provided equipment, manpower, and funds, and arranged the clinical trials. P.-Y.C. validated the clinical approaches and supervised the clinical trials. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Science and Technology under Grant MOST 111-2221-E-182-020 and by the CGMH project under Grant CMRPD2M0021.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of Chang Gung Medical Foundation (with IRB No.: 201900856B0A3, and Date of Approval: 16 July 2019).

Informed Consent Statement

Not applicable for studies not involving humans.

Data Availability Statement

The statistical data presented in this study are available in Table 2. The datasets used and/or analyzed during the current study are available from the corresponding author upon request. These data are not publicly available due to privacy concerns and ethical reasons.

Acknowledgments

This work was supported in part by the Ministry of Science and Technology under Grant MOST 111-2221-E-182-020 and in part by the CGMH Project under Grant CMRPD2M0021 and CMRPG2M0161.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zhu, T.; Ong, Y.; Kim, M.; Liang, X.; Finlay, J.; Dimofte, A. Evaluation of Light Fluence Distribution Using an IR Navigation System for HPPH-mediated Pleural Photodynamic Therapy (pPDT). Photochem. Photobiol. 2019, 96, 310–319.

2. Razzaque, S.; Heaney, B. Medical Device Guidance. US Patent 10,314,559, 11 June 2019.

3. Bucholz, D.R.; Foley, T.K.; Smith, K.R.; Bass, D.; Wiedenmaier, T.; Pope, T.; Wiedenmaier, U. Surgical Navigation Systems Including Reference and Localization Frames. US Patent 6,236,875, 22 May 2001.

4. Mezger, U.; Jendrewski, C.; Bartels, M. Navigation in surgery. Langenbecks Arch. Surg. 2013, 398, 501–514.

5. Casas, C.Q. Image-Guided Surgery with Surface Reconstruction and Augmented Reality Visualization. US Patent 10,154,239, 11 December 2018.

6. Li, R.; Yang, T.; Si, W.; Liao, X.; Wang, Q. Augmented Reality Guided Respiratory Liver Tumors Punctures: A Preliminary Feasibility Study. In Proceedings of the SIGGRAPH Asia 2019 Technical Briefs, Brisbane, Australia, 17–20 November 2019; pp. 114–117.

7. Finke, M.; Schweikard, A. Motorization of a surgical microscope for intra-operative navigation and intuitive control. Int. J. Med. Robot. Comput. Assist. Surg. 2010, 6, 269–280.

8. Kockro, R.A. Computer Enhanced Surgical Navigation Imaging System (Camera Probe). US Patent 7,491,198, 17 February 2009.

9. Birth, M.; Kleemann, M.; Hildebrand, P.; Bruch, P.H. Intraoperative online navigation of dissection of the hepatical tissue—A new dimension in liver surgery? Int. Congr. Ser. 2004, 1268, 770–774.

10. Nakajima, S.; Orita, S.; Masamune, K.; Sakuma, I.; Dohi, T.; Nakamura, K. Surgical navigation system with intuitive three-dimensional display. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; pp. 403–411.

11. Konishi, K.; Nakamoto, M.; Kakeji, Y.; Tanoue, K.; Kawanaka, H.; Yamaguchi, S.; Ieiri, S. A real-time navigation system for laparoscopic surgery based on three-dimensional ultrasound using magneto-optic hybrid tracking configuration. Int. J. Comput. Assist. Radiol. Surg. 2007, 2, 1–10.

12. Pokhrel, S.; Alsadoon, A.; Prasad, P.; Paul, M. A novel augmented reality (AR) scheme for knee replacement surgery by considering cutting error accuracy. Int. J. Med. Robot. Comput. Assist. Surg. 2019, 15, e1985.

13. Fotouhi, J.; Song, T.; Mehrfard, A.; Taylor, G.; Martin-Gomez, A. Reflective-AR Display: An Interaction Methodology for Virtual-Real Alignment in Medical Robotics. arXiv 2019, arXiv:1907.10138.

14. Lee, J.H.; Shvetsova, O.A. The Impact of VR Application on Student’s Competency Development: A Comparative Study of Regular and VR Engineering Classes with Similar Competency Scopes. Sustainability 2019, 11, 2221.

15. Chang, S.C.; Hsu, T.C.; Kuo, W.C.; Jong, M.S.-Y. Effects of applying a VR-based two-tier test strategy to promote elementary students’ learning performance in a Geology class. Brit. J. Educ. Technol. 2019, 51, 148–165.

16. Daniela, L.; Lytras, M.D. Editorial: Themed issue on enhanced educational experience in virtual and augmented reality. Virtual Real. 2019, 23, 325–327.

17. Chiou, S.-Y.; Liu, H.-L.; Lee, C.-W.; Lee, M.-Y.; Tsai, C.-Y.; Fu, C.-L.; Chen, P.-Y.; Wei, K.-C. A Novel Surgery Navigation System Combined with Augmented Reality based on Image Target Positioning System. In Proceedings of the IEEE International Conference on Computing, Electronics & Communications Engineering 2019 (IEEE iCCECE ’19), London, UK, 22–23 August 2019; pp. 173–176.

18. Konishi, K.; Hashizume, M.; Nakamoto, M.; Kakeji, Y.; Yoshino, I.; Taketomi, A.; Sato, Y. Augmented reality navigation system for endoscopic surgery based on three-dimensional ultrasound and computed tomography: Application to 20 clinical cases. Int. Congr. Ser. 2005, 1281, 537–542.

19. Okamoto, T.; Onda, S.; Yanaga, K.; Suzuki, N.; Hattori, A. Clinical application of navigation surgery using augmented reality in the abdominal field. Surg. Today 2015, 45, 397–406.

20. Tang, R.; Ma, F.L.; Rong, X.Z.; Li, D.M.; Zeng, P.J.; Wang, D.X.; Liao, E.H.; Dong, H.J. Augmented reality technology for preoperative planning and intraoperative navigation during hepatobiliary surgery: A review of current methods. Hepatobiliary Pancreat. Dis. Int. 2018, 17, 101–112.

21. Volonté, F.; Pugin, F.; Bucher, P.; Sugimoto, M.; Ratib, O.; Morel, P. Augmented reality and image overlay navigation with OsiriX in laparoscopic and robotic surgery: Not only a matter of fashion. J. Hepato-Biliary-Pancreat. Sci. 2011, 18, 506–509.

22. Bourdel, N.; Chauvet, P.; Calvet, L.; Magnin, B.; Bartoli, A. Use of augmented reality in gynecologic surgery to visualize adenomyomas. J. Minim. Invasive Gynecol. 2019, 26, 1177–1180.

23. Zeng, B.; Meng, F.; Ding, H.; Wang, G. A surgical robot with augmented reality visualization for stereoelectroencephalography electrode implantation. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1355–1368.

24. Léger, É.; Drouin, S.; Collins, D.L.; Popa, T.; Marta, K.O. Quantifying attention shifts in augmented reality image-guided neurosurgery. Healthcare Technol. Lett. 2017, 4, 188–192.

25. Tabrizi, L.B.; Mehran, M. Augmented reality-guided neurosurgery: Accuracy and intraoperative application of an image projection technique. J. Neurosurg. 2015, 123, 206–211.

26. Hou, Y.Z.; Ma, L.C.; Chen, R.; Zhang, J. A low-cost iPhone-assisted augmented reality solution for the localization of intracranial lesions. PLoS ONE 2016, 11, e0159185.

27. Zhang, Z.; Pei, J.; Wang, D.; Hu, C.; Ye, J.; Gan, Q.; Liu, P.; Yue, J.; Wang, B.; Shao, P.; et al. A Google Glass navigation system for ultrasound and fluorescence dual-mode image-guided surgery. In Proceedings of the Advanced Biomedical and Clinical Diagnostic and Surgical Guidance Systems XIV, San Francisco, CA, USA, 7 March 2016; p. 96980I.

28. Müller, M.; Rassweiler, M.C.; Klein, J.; Seitel, A.; Gondan, M.; Baumhauer, M.; Teber, D.; Rassweiler, J.J.; Meinzer, H.P.; Maier-Hein, L. Mobile augmented reality for computer-assisted percutaneous nephrolithotomy. Int. J. Comput. Assist. Radiol. Surg. 2013, 8, 663–675.

29. Prakosa, A.; Southworth, M.K.; Silva, J.N.A.; Silva, J.R.; Trayanova, N.A. Impact of augmented-reality improvement in ablation catheter navigation as assessed by virtual-heart simulations of ventricular tachycardia ablation. Comput. Biol. Med. 2021, 133, 104366.

30. Tu, P.; Gao, Y.; Lungu, A.J.; Li, D.; Wang, H.; Chen, X. Augmented reality based navigation for distal interlocking of intramedullary nails utilizing Microsoft HoloLens 2. Comput. Biol. Med. 2021, 133, 104402.

31. Abdoh, M.G.; Bekaert, O.; Hodel, L.; Diarra, S.M.; le Guerinel, C.; Nseir, R.; Bastuji-Garin, S.; Decq, P. Accuracy of external ventricular drainage catheter placement. Acta Neurochir. 2012, 154, 153–159.

32. Ieiri, S.; Uemura, M.; Konishi, K.; Souzaki, R.; Nagao, Y.; Tsutsumi, N.; Akahoshi, T.; Ohuchida, K.; Ohdaira, T.; Tomikawa, M.; et al. Augmented reality navigation system for laparoscopic splenectomy in children based on preoperative CT image using optical tracking device. Pediatr. Surg. Int. 2012, 28, 341–346.

33. Kenngott, H.G.; Preukschas, A.A.; Wagner, M.; Nickel, F.; Müller, M.; Bellemann, N.; Stock, C.; Fangerau, M.; Radeleff, B.; Kauczor, H.-U.; et al. Mobile, real-time, and point-of-care augmented reality is robust, accurate, and feasible: A prospective pilot study. Surg. Endosc. 2018, 32, 2958–2967.

34. Lai, M.; Skyrman, S.; Shan, C.; Babic, D.; Homan, R.; Edström, E.; Persson, O.; Urström, G.; Elmi-Terander, A.; Hendriks, B.H.; et al. Fusion of augmented reality imaging with the endoscopic view for endonasal skull base surgery; a novel application for surgical navigation based on intraoperative cone beam computed tomography and optical tracking. PLoS ONE 2020, 15, e0227312.

35. Deng, W.; Deng, F.; Wang, M.; Song, Z. Easy-to-use augmented reality neuronavigation using a wireless tablet PC. Stereotact. Funct. Neurosurg. 2014, 92, 17–24.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).