Eye-Tracking-Based Analysis of Situational Awareness of Nurses

Abstract

1. Introduction

2. Materials and Methods

2.1. Eye-Tracking

2.2. Study Design

2.3. Data Collection

Ethical Considerations

2.4. Data Analysis

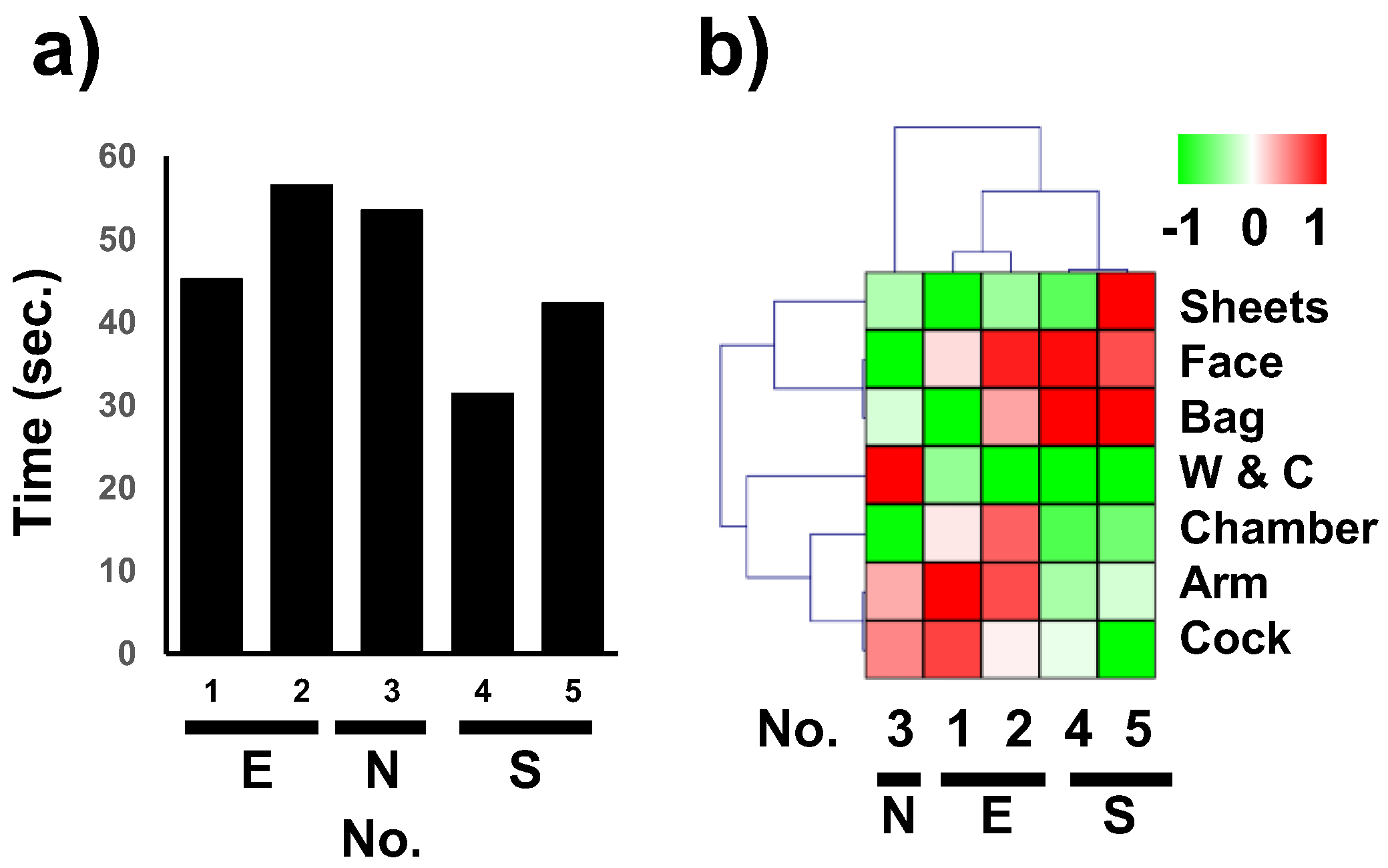

3. Results

3.1. Determination of AOIs and Customization of STD

3.2. Differences between Gaze Durations Corresponding to Different AOIs

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Age | Sex | Experience | Group |
|---|---|---|---|---|
| 1 | 52 | F | 26 years | Experienced |
| 2 | 30 | M | 10 years | Experienced |
| 3 | 23 | F | 8 months | Novice |
| 4 | 20 | F | 3rd year | Student |
| 5 | 22 | M | 4th year | Student |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sugimoto, M.; Tomita, A.; Oyamada, M.; Sato, M. Eye-Tracking-Based Analysis of Situational Awareness of Nurses. Healthcare 2022, 10, 2131. https://doi.org/10.3390/healthcare10112131
