Yoga Pose Estimation Using Angle-Based Feature Extraction

Abstract

1. Introduction

2. Related Works

2.1. Importance of Yoga Apps

2.2. Pose Estimation Methods

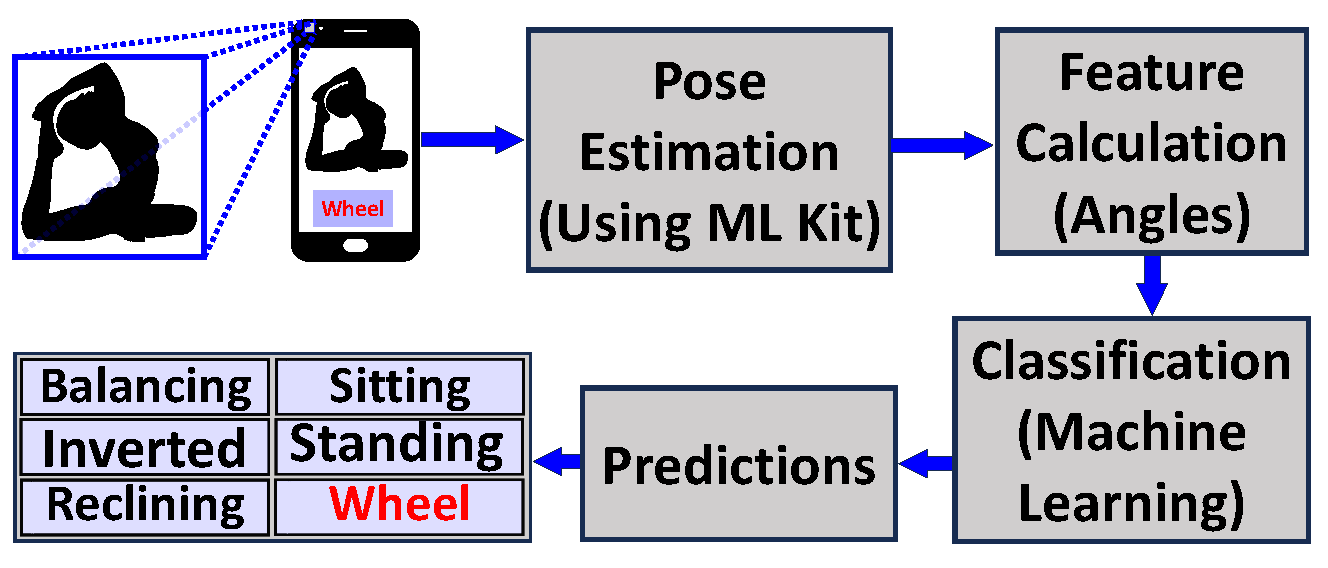

3. Proposed Approach

3.1. Dataset and Preprocessing

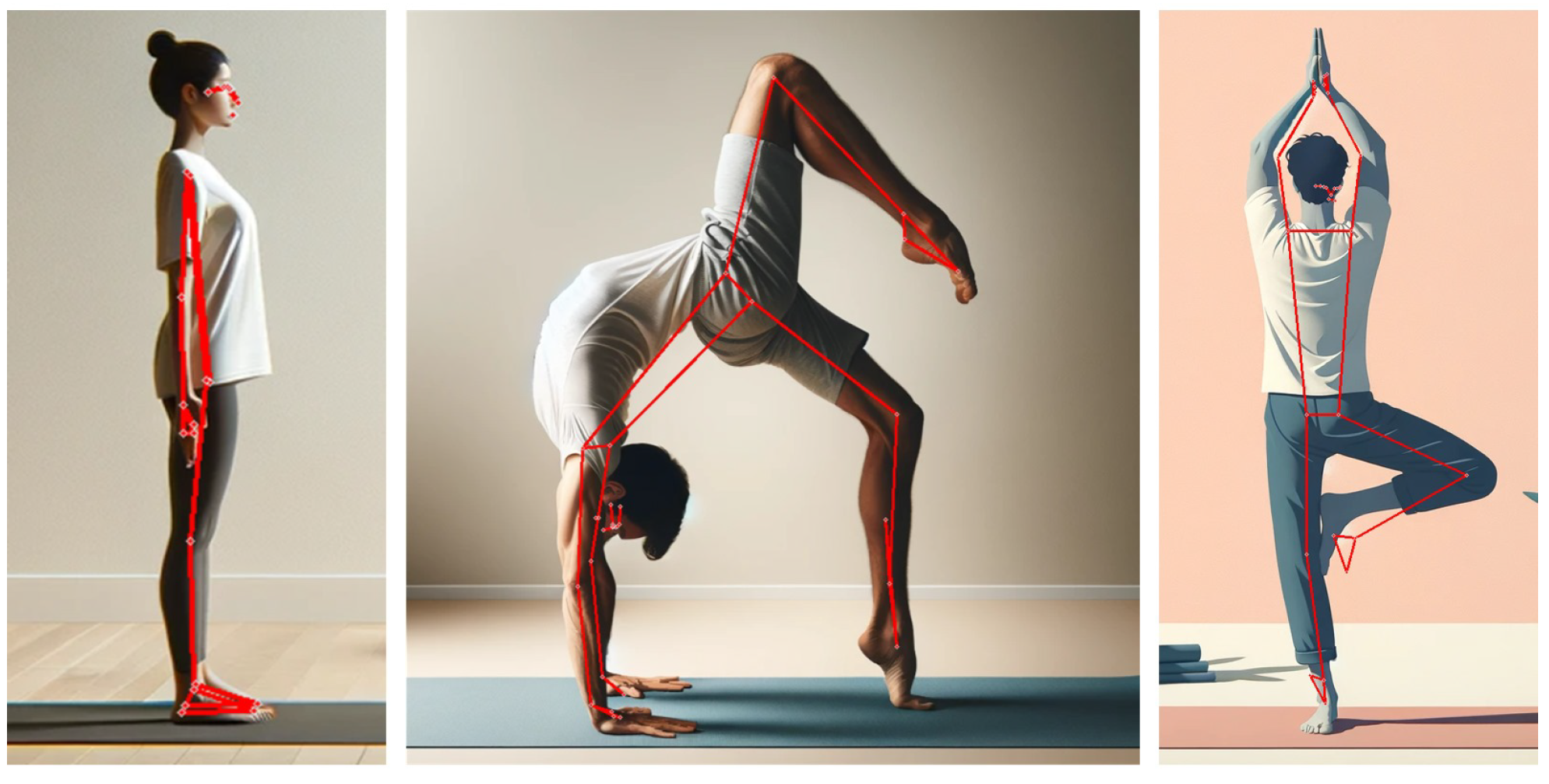

3.2. MLKit Pose Estimation

3.3. Calculating the Angles

- The pseudocode is as follows (Figure 2):

- Input: a 2D vector representing the position of the hip;

- Input: a 2D vector representing the position of the knee;

- Input: a 2D vector representing the position of the ankle;

- Output: the angle in degrees between the hip-to-knee vector and the knee-to-ankle vector.

- The steps are as follows:

- Compute the vector differences between adjacent joints and their magnitudes;

- Compute the dot product of the two vectors and, from it, the angle;

- Return the angle as the output.
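The steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function name `joint_angle`, the representation of each point as an `(x, y)` pair, and the choice to measure both vectors from the knee (which gives the interior knee angle) are assumptions made for this example.

```python
import math


def joint_angle(hip, knee, ankle):
    """Angle in degrees at the knee between the knee->hip and knee->ankle vectors.

    Each argument is an (x, y) pair. Measuring both vectors from the knee
    is an assumption of this sketch; it yields the interior joint angle.
    """
    # Vector differences, both taken relative to the knee.
    v1 = (hip[0] - knee[0], hip[1] - knee[1])
    v2 = (ankle[0] - knee[0], ankle[1] - knee[1])

    # Magnitudes of the two vectors.
    n1 = math.hypot(v1[0], v1[1])
    n2 = math.hypot(v2[0], v2[1])
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("two landmarks coincide; the angle is undefined")

    # Dot product, normalised to a cosine and clamped against rounding error.
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_theta = max(-1.0, min(1.0, cos_theta))

    # Convert from radians to degrees before returning.
    return math.degrees(math.acos(cos_theta))


if __name__ == "__main__":
    # Hypothetical coordinates: a right-angle bend at the knee.
    print(joint_angle((0.0, 0.0), (0.0, 1.0), (1.0, 1.0)))
```

Under this convention a fully extended leg (hip, knee, and ankle collinear) gives 180 degrees and a right-angle bend gives 90 degrees. Clamping the cosine to [-1, 1] guards against `math.acos` raising a domain error when rounding pushes the ratio slightly out of range.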

3.4. Supervised Modeling

4. Experiments and Results

4.1. Experiments

4.2. Results

4.3. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| App Name | Origin | Features |

|---|---|---|

| FitYoga | India | Offers guided yoga and meditation with AI-driven real-time movement tracking for posture improvement. Features include chakra balancing and wellness practices. https://fityoga.app/, accessed on 1 November 2023 |

| Sofia | India | AI-based yoga training with Google sign-in. Features voice-assisted asanas, real-time pose recognition, and rewards for pose accuracy. https://soumi7.github.io/project-sofia.html, accessed on 1 November 2023 |

| YogAI | UK | Features a learning and practice module with TensorFlow PoseNet and ml5js for pose detection and classification. Requires camera access for pose matching. https://cris-maillo.github.io/yogAI/index.html, accessed on 1 November 2023. |

| YogaIntelliJ | India | A web-based yoga application that predicts body coordinates for yoga poses using a webcam and provides feedback on pose accuracy. https://eager-bardeen-e9f94f.netlify.app/, accessed on 1 December 2023 |

| Yoga Studio by Gaiam | USA | Offers over 180 classes and custom flows. Includes meditations but requires a subscription for some features. Layout can be complex, and updates may cause glitches. https://yogastudioapp.com/gaiam, accessed on 1 December 2023 |

| Down Dog | USA | Customizable practices with various styles and instructor voices. Focuses on beginner to intermediate levels but requires a membership for full access and offers limited music in the free version. https://www.downdogapp.com/, accessed on 1 November 2023 |

| Daily Yoga | China | Features the largest global yoga community with new classes weekly. Offers extensive yoga and meditation classes but is more expensive and advanced, requiring a subscription for full access. https://www.dailyyoga.com/, accessed on 1 November 2023 |

| Feature | Description | Feature | Description |

|---|---|---|---|

| LSA | Left Shoulder Angle | RSA | Right Shoulder Angle |

| LEA | Left Elbow Angle | REA | Right Elbow Angle |

| LHA | Left Hip Angle | RHA | Right Hip Angle |

| LKA | Left Knee Angle | RKA | Right Knee Angle |
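As an illustration of how the eight angle features in the table above might be assembled into a single feature set, the sketch below computes each one from named 2D landmarks. The landmark names and the triple of points that defines each angle (for example, elbow, shoulder, hip for a shoulder angle) are assumptions made for this example; the table gives only the feature names.

```python
import math

# Hypothetical mapping: feature -> (first point, vertex, second point).
# These triples are an assumption of this sketch, not taken from the source.
ANGLE_TRIPLES = {
    "LSA": ("left_elbow", "left_shoulder", "left_hip"),
    "RSA": ("right_elbow", "right_shoulder", "right_hip"),
    "LEA": ("left_shoulder", "left_elbow", "left_wrist"),
    "REA": ("right_shoulder", "right_elbow", "right_wrist"),
    "LHA": ("left_shoulder", "left_hip", "left_knee"),
    "RHA": ("right_shoulder", "right_hip", "right_knee"),
    "LKA": ("left_hip", "left_knee", "left_ankle"),
    "RKA": ("right_hip", "right_knee", "right_ankle"),
}


def angle_at(a, vertex, b):
    """Angle in degrees at `vertex` between the vectors vertex->a and vertex->b."""
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    norm = math.hypot(v1[0], v1[1]) * math.hypot(v2[0], v2[1])
    if norm == 0.0:
        # Coincident landmarks: the angle is undefined.
        return float("nan")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp against floating-point rounding before taking the arccosine.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def angle_features(landmarks):
    """Map a dict of landmark name -> (x, y) to a dict of the eight angle features."""
    return {
        name: angle_at(landmarks[a], landmarks[v], landmarks[b])
        for name, (a, v, b) in ANGLE_TRIPLES.items()
    }


if __name__ == "__main__":
    # Hypothetical landmark coordinates, mirrored on both sides of the body.
    side = {"shoulder": (0, 0), "elbow": (1, 0), "wrist": (1, 1),
            "hip": (0, 2), "knee": (0, 3), "ankle": (0, 4)}
    pose = {f"{s}_{k}": p for s in ("left", "right") for k, p in side.items()}
    print(angle_features(pose))
```

Keeping the triples in a single dictionary makes it straightforward to swap in a different definition of any angle without touching the geometry code.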

| Algorithm | Precision (Overall) | Precision (Weighted) | Recall (Overall) | Recall (Weighted) | F1 (Overall) | F1 (Weighted) | Accuracy |

|---|---|---|---|---|---|---|---|

| Traditional Logistic Regression | 0.27 | 0.56 | 0.26 | 0.69 | 0.24 | 0.61 | 0.69 |

| Polynomial Logistic Regression | 0.78 | 0.84 | 0.67 | 0.85 | 0.72 | 0.84 | 0.85 |

| Support Vector Machines | 0.84 | 0.84 | 0.60 | 0.84 | 0.67 | 0.82 | 0.84 |

| Random Forest | 0.90 | 0.89 | 0.73 | 0.89 | 0.79 | 0.88 | 0.89 |

| Extremely Randomized Trees (Extra Trees) | 0.91 | 0.91 | 0.78 | 0.91 | 0.83 | 0.91 | 0.91 |

| Gradient Boosting | 0.86 | 0.88 | 0.72 | 0.88 | 0.77 | 0.87 | 0.88 |

| Extreme Gradient Boosting (XGBoost) | 0.86 | 0.89 | 0.75 | 0.89 | 0.80 | 0.89 | 0.89 |

| Deep Neural Networks | 0.83 | 0.87 | 0.73 | 0.88 | 0.77 | 0.87 | 0.88 |

| Architecture | Precision (Overall) | Precision (Weighted) | Recall (Overall) | Recall (Weighted) | F1 (Overall) | F1 (Weighted) | Accuracy |

|---|---|---|---|---|---|---|---|

| Traditional Logistic Regression | 0.27 | 0.56 | 0.24 | 0.67 | 0.21 | 0.59 | 0.67 |

| Polynomial Logistic Regression | 0.76 | 0.82 | 0.65 | 0.83 | 0.69 | 0.82 | 0.83 |

| Support Vector Machines | 0.82 | 0.83 | 0.60 | 0.83 | 0.66 | 0.81 | 0.83 |

| Random Forest | 0.91 | 0.90 | 0.74 | 0.90 | 0.79 | 0.89 | 0.90 |

| Extremely Randomized Trees (Extra Trees) | 0.92 | 0.92 | 0.79 | 0.92 | 0.84 | 0.91 | 0.92 |

| Gradient Boosting | 0.89 | 0.89 | 0.74 | 0.89 | 0.80 | 0.89 | 0.89 |

| Extreme Gradient Boosting (XGBoost) | 0.88 | 0.89 | 0.74 | 0.90 | 0.80 | 0.89 | 0.90 |

| Deep Neural Networks | 0.84 | 0.85 | 0.65 | 0.85 | 0.71 | 0.83 | 0.85 |

| Architecture | Top-1 % Accuracy |

|---|---|

| ResNet-50 | 63.44 |

| ResNet-101 | 65.84 |

| ResNet-50-V2 | 62.56 |

| ResNet-101-V2 | 61.81 |

| DenseNet-121 | 73.48 |

| DenseNet-169 | 74.73 |

| DenseNet-201 | 74.91 |

| MobileNet | 67.55 |

| MobileNet-V2 | 71.11 |

| ResNext-50 | 68.45 |

| ResNext-101 | 65.24 |

| Extremely Randomized Trees (Extra Trees) | 92 |

| Algorithm | Prediction Latency (Mean) | Prediction Latency (Median) |

|---|---|---|

| Logistic Regression | 2.587 | 2.390 |

| Polynomial Regression | 2.129 | 1.490 |

| Support Vector Machines | 24.327 | 24.115 |

| Random Forest | 33.698 | 32.593 |

| Extremely Randomized Trees (Extra Trees) | 33.827 | 31.371 |

| Gradient Boosting | 13.189 | 12.290 |

| Extreme Gradient Boosting (XGBoost) | 17.060 | 16.724 |

| Deep Neural Networks | 64.200 | 59.813 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Borthakur, D.; Paul, A.; Kapil, D.; Saikia, M.J. Yoga Pose Estimation Using Angle-Based Feature Extraction. Healthcare 2023, 11, 3133. https://doi.org/10.3390/healthcare11243133
