Sociodemographic Variables in Offender and Non-Offender Patients Diagnosed with Schizophrenia Spectrum Disorders—An Explorative Analysis Using Machine Learning

Abstract

:1. Introduction

2. Materials and Methods

2.1. Study Population

2.1.1. Forensic Psychiatric Subpopulation (OP)

2.1.2. General Psychiatric Subpopulation (NOP)

2.2. Data Source and Extraction

2.3. Selection of Predictor Variables

2.4. Data Analysis Using Machine Learning

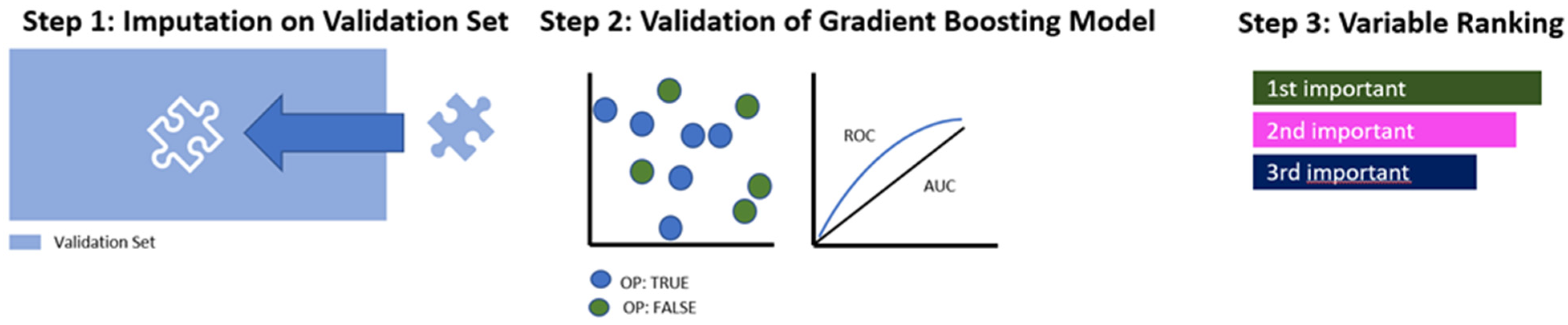

2.4.1. Preprocessing

2.4.2. Training of the Algorithm

2.4.3. Validation of the Algorithm

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Variable Code | Variable Label |

| y (Outcome variable) | Is the patient forensic? |

| SD1 | Age at admission? |

| SD2 | Sex according to patient files |

| SD3a | Country of birth: Switzerland? |

| SD3b | Country of birth: Balkan region country? |

| SD3c | Country of birth: other European country? |

| SD3d | Country of birth: Middle east? |

| SD3e | Country of birth: Africa? |

| SD3f | Country of birth: other country? |

| SD3g | If 3b–3f do not apply, legal residence in Switzerland? |

| SD4a | Christian faith? |

| SD4b | Islamic faith? |

| SD5a | Marital Status (at time of the investigated offence)—married? |

| SD5b | Marital Status (at time of the investigated offence)—single? |

| SD6a | Living situation (at time of the investigated offence)—mental health care institution |

| SD6b | Living situation (at time of the investigated offence)—assisted living |

| SD6c | Living situation (at time of the investigated offence)—home alone |

| SD6d | Living situation (at time of the investigated offence)—home with others |

| SD6e | Living situation (at time of the investigated offence)—at parents’ home |

| SD6f | Living situation (at time of the investigated offence)—with relatives |

| SD6g | Living situation (at time of the investigated offence)—homeless |

| SD6h | Living situation (at time of the investigated offence)—prison |

| SD6i | Living situation (at time of the investigated offence)—other |

| SD7a | Highest graduation (at time of the investigated offence)—no compulsory schooling |

| SD7b | Highest graduation: (at time of the investigated offence)—compulsory schooling |

| SD7c | Highest graduation (at time of the investigated offence)—graduation |

| SD7d | Highest graduation (at time of the investigated offence)—college/university |

| SD8a | Learned profession: no apprenticeship |

| SD8b | Learned profession: college/university degree |

| SD8c | Learned profession: official/civil servant |

| SD8d | Learned profession: mercantile job |

| SD8e | Learned profession: non-mercantile job |

| SD8f | Learned profession: crafting job |

| SD8g | Learned profession: other job |

| SD9 | Is the patient a nonworker (at time of the investigated offence)? |

| SD11 | Is the patient a nonworker (majority of occupational time)? |

| SD12a | Rank at job: basal (majority of occupational time)? |

| SD12b | Rank at job: complex (majority of occupational time)? |

| SD14 | Own children? |

| SD15 | Any siblings? |

| SD17 | Was the legal guardian married? |

| SD18a | Who was/is the legal guardian—birth parents? |

| SD18b | Who was/is the legal guardian—single parents? |

| SD18c | Who was/is the legal guardian—step-parents? |

| SD18d | Who was/is the legal guardian—one step-parent? |

| SD18f | Who was/is the legal guardian—grandparents? |

| SD18g | Who was/is the legal guardian—foster parents? |

| SD18h | Who was/is the legal guardian—child home? |

| SD19 | Member in a (leisure) club? |

References

- El Naqa, I.; Murphy, M.J. What Is Machine Learning? In Machine Learning in Radiation Oncology: Theory and Applications; El Naqa, I., Li, R., Murphy, M.J., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 3–11. [Google Scholar]

- Rebala, G.; Ravi, A.; Churiwala, S. Machine Learning Definition and Basics. In An Introduction to Machine Learning; Rebala, G., Ravi, A., Churiwala, S., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 1–17. [Google Scholar]

- Nasteski, V. An overview of the supervised machine learning methods. Horizons B 2017, 4, 51–62. [Google Scholar] [CrossRef]

- Kirchebner, J. A collection of medical findings using machine learning and their relevance to psychiatry. Swiss Arch. Neurol. Psychiatry Psychother. 2022, 173, w03251. [Google Scholar] [CrossRef]

- Gutierrez, D.D. Machine Learning and Data Science: An Introduction to Statistical Learning Methods with R; Technics Publications: Sedona, AZ, USA, 2015. [Google Scholar]

- Oquendo, M.A.; Baca-Garcia, E.; Artés-Rodríguez, A.; Perez-Cruz, F.; Galfalvy, H.C.; Blasco-Fontecilla, H.; Madigan, D.; Duan, N. Machine learning and data mining: Strategies for hypothesis generation. Mol. Psychiatry 2012, 17, 956–959. [Google Scholar] [CrossRef] [PubMed]

- Silva-Ayçaguer, L.C.; Suárez-Gil, P.; Fernández-Somoano, A. The null hypothesis significance test in health sciences research (1995–2006): Statistical analysis and interpretation. BMC Med. Res. Methodol. 2010, 10, 44. [Google Scholar] [CrossRef]

- Ryffel, F.A. Alpha and beta error (accumulation). In The International Encyclopedia of Communication Research Methods; John Wiley & Sons: Hoboken, NJ, USA, 2017; pp. 1–5. [Google Scholar]

- Popper, K.R. Logik der Forschung: Zur Erkenntnistheorie der Moderner Naturwissenschaft; Verlag von Julius Springer: Berlin/Heidelberg, Germany, 1935. [Google Scholar]

- Szucs, D.; Ioannidis, J.P.A. When Null Hypothesis Significance Testing Is Unsuitable for Research: A Reassessment. Front. Hum. Neurosci. 2017, 11, 390. [Google Scholar] [CrossRef]

- Krueger, J. Null hypothesis significance testing: On the survival of a flawed method. Am. Psychol. 2001, 56, 16. [Google Scholar] [CrossRef]

- Balluerka, N.; Gómez, J.; Hidalgo, D. The Controversy over Null Hypothesis Significance Testing Revisited. Methodology 2005, 1, 55–70. [Google Scholar] [CrossRef]

- Krantz, D.H. The Null Hypothesis Testing Controversy in Psychology. J. Am. Stat. Assoc. 1999, 94, 1372–1381. [Google Scholar] [CrossRef]

- Sedgwick, P.M.; Hammer, A.; Kesmodel, U.S.; Pedersen, L.H. Current controversies: Null hypothesis significance testing. Acta Obs. Gynecol Scand 2022, 101, 624–627. [Google Scholar] [CrossRef]

- Ingram, R.E.; Luxton, D.D. Vulnerability-stress models. Dev. Psychopathol. A Vulnerability-Stress Perspect. 2005, 46, 32–46. [Google Scholar]

- Cheng, S.C.; Walsh, E.; Schepp, K.G. Vulnerability, Stress, and Support in the Disease Trajectory from Prodrome to Diagnosed Schizophrenia: Diathesis–Stress–Support Model. Arch. Psychiatr. Nurs. 2016, 30, 810–817. [Google Scholar] [CrossRef] [PubMed]

- Darrell-Berry, H.; Berry, K.; Bucci, S. The relationship between paranoia and aggression in psychosis: A systematic review. Schizophr. Res. 2016, 172, 169–176. [Google Scholar] [CrossRef]

- Fava, G.A. The Intellectual Crisis of Psychiatric Research. Psychother. Psychosom. 2006, 75, 202–208. [Google Scholar] [CrossRef] [PubMed]

- Zhou, J.; Gandomi, A.H.; Chen, F.; Holzinger, A. Evaluating the quality of machine learning explanations: A survey on methods and metrics. Electronics 2021, 10, 593. [Google Scholar] [CrossRef]

- Parmigiani, G.; Barchielli, B.; Casale, S.; Mancini, T.; Ferracuti, S. The impact of machine learning in predicting risk of violence: A systematic review. Front. Psychiatry 2022, 13, 1015914. [Google Scholar] [CrossRef] [PubMed]

- Watts, D.; Moulden, H.; Mamak, M.; Upfold, C.; Chaimowitz, G.; Kapczinski, F. Predicting offenses among individuals with psychiatric disorders—A machine learning approach. J. Psychiatr. Res. 2021, 138, 146–154. [Google Scholar] [CrossRef]

- Trinhammer, M.L.; Merrild, A.C.H.; Lotz, J.F.; Makransky, G. Predicting crime during or after psychiatric care: Evaluating machine learning for risk assessment using the Danish patient registries. J. Psychiatr. Res. 2022, 152, 194–200. [Google Scholar] [CrossRef]

- Kirchebner, J.; Lau, S.; Machetanz, L. Offenders and non-offenders with schizophrenia spectrum disorders: Do they really differ in known risk factors for aggression? Front. Psychiatry 2023, 14, 1145644. [Google Scholar] [CrossRef]

- Machetanz, L.; Lau, S.; Habermeyer, E.; Kirchebner, J. Suicidal Offenders and Non-Offenders with Schizophrenia Spectrum Disorders: A Retrospective Evaluation of Distinguishing Factors Using Machine Learning. Brain Sci 2023, 13, 97. [Google Scholar] [CrossRef]

- Farrington, D.P.; Loeber, R.; Ttofi, M.M. Risk and protective factors for offending. In The Oxford Handbook of Crime Prevention; Oxford University Press: Oxford, UK, 2012; pp. 46–69. [Google Scholar]

- Jolliffe, D.; Farrington, D.P.; Piquero, A.R.; Loeber, R.; Hill, K.G. Systematic review of early risk factors for life-course-persistent, adolescence-limited, and late-onset offenders in prospective longitudinal studies. Aggress. Violent Behav. 2017, 33, 15–23. [Google Scholar] [CrossRef]

- Takahashi, A. The role of social isolation stress in escalated aggression in rodent models. Neurosci. Res. 2022. [Google Scholar] [CrossRef] [PubMed]

- Fazel, S.; Gulati, G.; Linsell, L.; Geddes, J.R.; Grann, M. Schizophrenia and violence: Systematic review and meta-analysis. PLoS Med 2009, 6, e1000120. [Google Scholar] [CrossRef] [PubMed]

- Fazel, S.; Långström, N.; Hjern, A.; Grann, M.; Lichtenstein, P. Schizophrenia, Substance Abuse, and Violent Crime. JAMA 2009, 301, 2016–2023. [Google Scholar] [CrossRef]

- Fazel, S.; Sariaslan, A. Victimization in people with severe mental health problems: The need to improve research quality, risk stratification and preventive measures. World Psychiatry 2021, 20, 437. [Google Scholar] [CrossRef] [PubMed]

- Latalova, K.; Kamaradova, D.; Prasko, J. Violent victimization of adult patients with severe mental illness: A systematic review. Neuropsychiatr. Dis. Treatment. 2014, 10, 1925–1939. [Google Scholar] [CrossRef]

- Rajula, H.S.R.; Verlato, G.; Manchia, M.; Antonucci, N.; Fanos, V. Comparison of Conventional Statistical Methods with Machine Learning in Medicine: Diagnosis, Drug Development, and Treatment. Medicina 2020, 56, 455. [Google Scholar] [CrossRef]

- World Health Organization. ICD-10: International Statistical Classification of Diseases and Related Health Problems: Tenth Revision; World Health Organization: Geneva, Switzerland, 2004. [Google Scholar]

- Slee, V.N. The International classification of diseases: Ninth revision (ICD-9). Ann. Intern. Med. 1978, 88, 424–426. [Google Scholar] [CrossRef]

- Seifert, D. Die Entwicklung des psychiatrischen Massregelvollzugs (§ 63StGB) in Nordrhein-Wesfalen. Psychiat Prax 1997, 24, 237–244. [Google Scholar]

- Brennan, P.F.; Hays, B.J. Focus on psychometrics the kappa statistic for establishing interrater reliability in the secondary analysis of qualitative clinical data. Res. Nurs. Health 1992, 15, 153–158. [Google Scholar] [CrossRef]

- Osisanwo, F.; Akinsola, J.; Awodele, O.; Hinmikaiye, J.; Olakanmi, O.; Akinjobi, J. Supervised machine learning algorithms: Classification and comparison. Int. J. Comput. Trends Technol. (IJCTT) 2017, 48, 128–138. [Google Scholar]

- Bischl, B.; Lang, M.; Kotthoff, L.; Schiffner, J.; Richter, J.; Studerus, E.; Casalicchio, G.; Jones, Z. Machine learning in RJ Mach. Learn. Res 2016, 17, 5938–5942. [Google Scholar]

- Joseph, V.R. Optimal ratio for data splitting. Stat. Anal. Data Min. ASA Data Sci. J. 2022, 15, 531–538. [Google Scholar] [CrossRef]

- Thomas, T.; Rajabi, E. A systematic review of machine learning-based missing value imputation techniques. Data Technol. Appl. 2021, 55, 558–585. [Google Scholar] [CrossRef]

- Ying, X. An overview of overfitting and its solutions. J. Phys. Conf. Ser. 2019, 1168, 022022. [Google Scholar] [CrossRef]

- James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning: With Applications in R; Springer New York: New York, NY, USA, 2013. [Google Scholar]

- Hastie, T.; Tibshirani, R.; Friedman, J. Model Assessment and Selection. In The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer New York: New York, NY, 2009; pp. 219–259. [Google Scholar]

- Varoquaux, G.; Colliot, O. Evaluating Machine Learning Models and Their Diagnostic Value. In Machine Learning for Brain Disorders; Colliot, O., Ed.; Springer US: New York, NY, USA, 2023; pp. 601–630. [Google Scholar]

- King, R.D.; Orhobor, O.I.; Taylor, C.C. Cross-validation is safe to use. Nat. Mach. Intell. 2021, 3, 276. [Google Scholar] [CrossRef]

- Dykxhoorn, J.; Hollander, A.C.; Lewis, G.; Magnusson, C.; Dalman, C.; Kirkbride, J.B. Risk of schizophrenia, schizoaffective, and bipolar disorders by migrant status, region of origin, and age-at-migration: A national cohort study of 1.8 million people. Psychol. Med. 2019, 49, 2354–2363. [Google Scholar] [CrossRef]

- Henssler, J.; Brandt, L.; Muller, M.; Liu, S.; Montag, C.; Sterzer, P.; Heinz, A. Migration and schizophrenia: Meta-analysis and explanatory framework. Eur. Arch. Psychiatry Clin. Neurosci. 2020, 270, 325–335. [Google Scholar] [CrossRef]

- Selten, J.P.; van der Ven, E.; Termorshuizen, F. Migration and psychosis: A meta-analysis of incidence studies. Psychol. Med. 2020, 50, 303–313. [Google Scholar] [CrossRef]

- Gabrielsen, G.; Kramp, P. Forensic psychiatric patients among immigrants in Denmark–diagnoses and criminality. Nord. J. Psychiatry 2009, 63, 140–147. [Google Scholar] [CrossRef]

- Fazel, S.; Wolf, A.; Palm, C.; Lichtenstein, P. Violent crime, suicide, and premature mortality in patients with schizophrenia and related disorders: A 38-year total population study in Sweden. Lancet Psychiatry 2014, 1, 44–54. [Google Scholar] [CrossRef]

- Bianchi, M.; Buonanno, P.; Pinotti, P. Do Immigrants Cause Crime? J. Eur. Econ. Assoc. 2012, 10, 1318–1347. [Google Scholar] [CrossRef]

- Adelman, R.; Reid, L.W.; Markle, G.; Weiss, S.; Jaret, C. Urban crime rates and the changing face of immigration: Evidence across four decades. J. Ethn. Crim. Justice 2017, 15, 52–77. [Google Scholar] [CrossRef]

- Ousey, G.C.; Kubrin, C.E. Immigration and Crime: Assessing a Contentious Issue. Annu. Rev. Criminol. 2018, 1, 63–84. [Google Scholar] [CrossRef]

- Woodward, A.; Howard, N.; Wolffers, I. Health and access to care for undocumented migrants living in the European Union: A scoping review. Health Policy Plan. 2014, 29, 818–830. [Google Scholar] [CrossRef]

- Hacker, K.; Anies, M.; Folb, B.L.; Zallman, L. Barriers to health care for undocumented immigrants: A literature review. Risk Manag Health Policy 2015, 8, 175–183. [Google Scholar] [CrossRef]

- Bilger, V.; Hollomey, C.; Wyssmüller, C.; Efionayi-Mäder, D. Health Care for Undocumented Migrants in Switzerland; Federal Office of Public Health: Bern, Switzerland, 2011. [Google Scholar]

- Rüefli, C.; Huegli, E. Krankenversicherung und Gesundheitsversorgung von Sans Papiers, Bericht zur Beantwortung des Postulats Heim (09.3484). 2011. Available online: https://www.beobachtungsstelle.ch/fileadmin/user_upload/pdf_divers/Berichte/2012/Bericht_Postulat_Heim_komplett_d.pdf (accessed on 22 August 2024).

- Dickson, H.; Hedges, E.P.; Ma, S.Y.; Cullen, A.E.; MacCabe, J.H.; Kempton, M.J.; Downs, J.; Laurens, K.R. Academic achievement and schizophrenia: A systematic meta-analysis. Psychol. Med. 2020, 50, 1949–1965. [Google Scholar] [CrossRef]

- Gubbels, J.; van der Put, C.E.; Assink, M. Risk Factors for School Absenteeism and Dropout: A Meta-Analytic Review. J. Youth Adolesc. 2019, 48, 1637–1667. [Google Scholar] [CrossRef] [PubMed]

- Rosenthal, L.; Moro, M.R.; Benoit, L. Migrant Parents of Adolescents With School Refusal: A Qualitative Study of Parental Distress and Cultural Barriers in Access to Care. Front. Psychiatry 2019, 10, 942. [Google Scholar] [CrossRef] [PubMed]

- Nahm, F.S. Receiver operating characteristic curve: Overview and practical use for clinicians. Korean J. Anesth. 2022, 75, 25–36. [Google Scholar] [CrossRef]

- Trevethan, R. Sensitivity, Specificity, and Predictive Values: Foundations, Pliabilities, and Pitfalls in Research and Practice. Front. Public Health 2017, 5, 307. [Google Scholar] [CrossRef]

- Carbone, M.R. When not to use machine learning: A perspective on potential and limitations. MRS Bull. 2022, 47, 968–974. [Google Scholar] [CrossRef]

- Sambasivan, N.; Kapania, S.; Highfill, H.; Akrong, D.; Paritosh, P.; Aroyo, L.M. “Everyone wants to do the model work, not the data work”: Data Cascades in High-Stakes AI. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–15. [Google Scholar]

- Talari, K.; Goyal, M. Retrospective Studies—Utility and Caveats. J. R. Coll. Physicians Edinb. 2020, 50, 398–402. [Google Scholar] [CrossRef] [PubMed]

- Vabalas, A.; Gowen, E.; Poliakoff, E.; Casson, A.J. Machine learning algorithm validation with a limited sample size. PLoS ONE 2019, 14, e0224365. [Google Scholar] [CrossRef]

- Dietterich, T. Overfitting and undercomputing in machine learning. ACM Comput. Surv. (CSUR) 1995, 27, 326–327. [Google Scholar] [CrossRef]

- Heymans, M.W.; Twisk, J.W.R. Handling missing data in clinical research. J. Clin. Epidemiol. 2022, 151, 185–188. [Google Scholar] [CrossRef]

- Schafer, J.L.; Graham, J.W. Missing data: Our view of the state of the art. Psychol. Methods 2002, 7, 147–177. [Google Scholar] [CrossRef]

- Tortora, L. Beyond Discrimination: Generative AI Applications and Ethical Challenges in Forensic Psychiatry. Front. Psychiatry 2024, 15, 1346059. [Google Scholar] [CrossRef]

- Joyce, D.W.; Kormilitzin, A.; Smith, K.A.; Cipriani, A. Explainable artificial intelligence for mental health through transparency and interpretability for understandability. NPJ Digit. Med. 2023, 6, 6. [Google Scholar] [CrossRef]

- Xu, F.; Uszkoreit, H.; Du, Y.; Fan, W.; Zhao, D.; Zhu, J. Explainable AI: A Brief Survey on History, Research Areas, Approaches and Challenges. In Natural Language Processing and Chinese Computing; Springer: Cham, Switzerland, 2019; pp. 563–574. [Google Scholar]

| Performance Measures | Explanation |

|---|---|

| Receiver operating characteristics, area under the curve (AUC) | Overall ability of a model to discriminate between two groups, as indicated by graph plotting sensitivity and 1-specificity. The higher the AUC, the better the model distinguishes between positive and negative classes. |

| Balanced Accuracy | The average of sensitivity and specificity, providing a single measure that allows for interpreting both false positives and false negatives. |

| Sensitivity | The ability of a model to correctly identify true positives. Sensitivity is also called recall or the true positive rate. |

| Specificity | The ability of a model to correctly identify true negatives, also called the true negative rate. |

| Positive predictive value (PPV) | The proportion of positive test results that are true positives. Used to interpret an individual’s actual probability of being a true positive in case of a positive test result. |

| Negative predictive value (NPV) | The proportion of negative test results that are true negatives. Used to interpret an individual’s actual probability of being a true negative in case of a negative test result. |

| Variable Description | OP n/N (%) | Mean (SD) | NOP n/N (%) | Mean (SD) |

|---|---|---|---|---|

| Age at admission | 34.2 (10.2) | 36.2 (12.2) | ||

| Sex *: male | 339/370 (91.6) | 339/370 (91.6) | ||

| Country of birth: Switzerland | 167/370 (45.1) | 245/367 (66.8) | ||

| Marital status: Single | 297/364 (81.6) | 282/364 (77.5) | ||

| Diagnosis: Schizophrenia | 294/370 (79.5) | 287/370 (77.6) | ||

| Co-Diagnosis: Addiction Disorder | 269/200 (72.9) | 183/327 (56) | ||

| Co-Diagnosis: Personality Disorder | 47/370 (12.7) | 26/370 (7) |

| Statistical Procedure | Balanced Accuracy (%) | AUC | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) |

|---|---|---|---|---|---|---|

| Logistic Regression | 62.20 | 0.68 | 74.90 | 49.50 | 58.20 | 69.60 |

| Tree | 63.00 | 0.64 | 82.30 | 43.80 | 57.70 | 74.00 |

| Random Forest | 62.4 | 0.68 | 78.3 | 46.5 | 57.9 | 73.4 |

| Gradient Boosting | 63.3 | 0.69 | 76.7 | 49.9 | 59.3 | 72.1 |

| KNN | 56.9 | 0.59 | 78.9 | 35 | 44.6 | 85.9 |

| SVM | 61.9 | 0.68 | 73.8 | 49.9 | 58.1 | 68.9 |

| Naive Bayes | 62.6 | 0.68 | 62.3 | 62.9 | 61.2 | 65.3 |

| Variable Description | OP n/N (%) | NOP n/N (%) |

|---|---|---|

| Country of birth: Switzerland | 167/370 (45.1) | 245/367 (66.8) |

| Illegal residence in Switzerland | 95/370 (25.7) | 34/367 (9.3) |

| Graduation: did not complete compulsory schooling | 89/342 (26) | 18/321 (5.6) |

| Performance Measures | % (95% CI) |

|---|---|

| AUC | 0.65 (0.58–0.72) |

| Balanced Accuracy | 63.1 (56.5–69.1) |

| Sensitivity | 63 (53.6–71.6) |

| Specificity | 63.1 (53–72.2) |

| PPV | 66.4 (56.8–74.8) |

| NPV | 59.6 (49.8–68.8) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hofmann, A.B.; Dörner, M.; Machetanz, L.; Kirchebner, J. Sociodemographic Variables in Offender and Non-Offender Patients Diagnosed with Schizophrenia Spectrum Disorders—An Explorative Analysis Using Machine Learning. Healthcare 2024, 12, 1699. https://doi.org/10.3390/healthcare12171699

Hofmann AB, Dörner M, Machetanz L, Kirchebner J. Sociodemographic Variables in Offender and Non-Offender Patients Diagnosed with Schizophrenia Spectrum Disorders—An Explorative Analysis Using Machine Learning. Healthcare. 2024; 12(17):1699. https://doi.org/10.3390/healthcare12171699

Chicago/Turabian StyleHofmann, Andreas B., Marc Dörner, Lena Machetanz, and Johannes Kirchebner. 2024. "Sociodemographic Variables in Offender and Non-Offender Patients Diagnosed with Schizophrenia Spectrum Disorders—An Explorative Analysis Using Machine Learning" Healthcare 12, no. 17: 1699. https://doi.org/10.3390/healthcare12171699