Abstract

Background/Objectives: Artificial intelligence-based software as a medical device (AI-SaMD) refers to AI-powered software used for medical purposes without being embedded in physical devices. Despite increasing approvals over the past decade, research in this domain, which spans technology, healthcare, and national security, remains limited. This study addresses that gap by systematically reviewing the literature from the past decade in order to classify key findings, identify critical challenges, and synthesize insights on the technological, clinical, and regulatory aspects of AI-SaMD. Methods: A systematic literature review based on the PRISMA framework was performed to select relevant AI-SaMD studies published between 2015 and 2024 and to uncover key themes such as publication venues, geographical trends, key challenges, and research gaps. Results: Most studies focus on specialized clinical settings such as radiology and ophthalmology rather than general clinical practice. Key challenges in implementing AI-SaMD include regulatory issues (e.g., regulatory frameworks), AI malpractice (e.g., explainability and expert oversight), and data governance (e.g., privacy and data sharing). Existing research emphasizes the importance of (1) addressing regulatory problems through the specific duties of regulatory authorities, (2) interdisciplinary collaboration, (3) clinician training, (4) the seamless integration of AI-SaMD with healthcare software systems (e.g., electronic health records), and (5) the rigorous validation of AI-SaMD models to ensure effective implementation. Conclusions: This study offers valuable insights for diverse stakeholders, emphasizing the need to move beyond theoretical analyses and prioritize practical, experimental research to advance the real-world application of AI-SaMDs. 
This study concludes by outlining future research directions and emphasizing the limitations of the predominantly theoretical approaches currently available.

1. Introduction

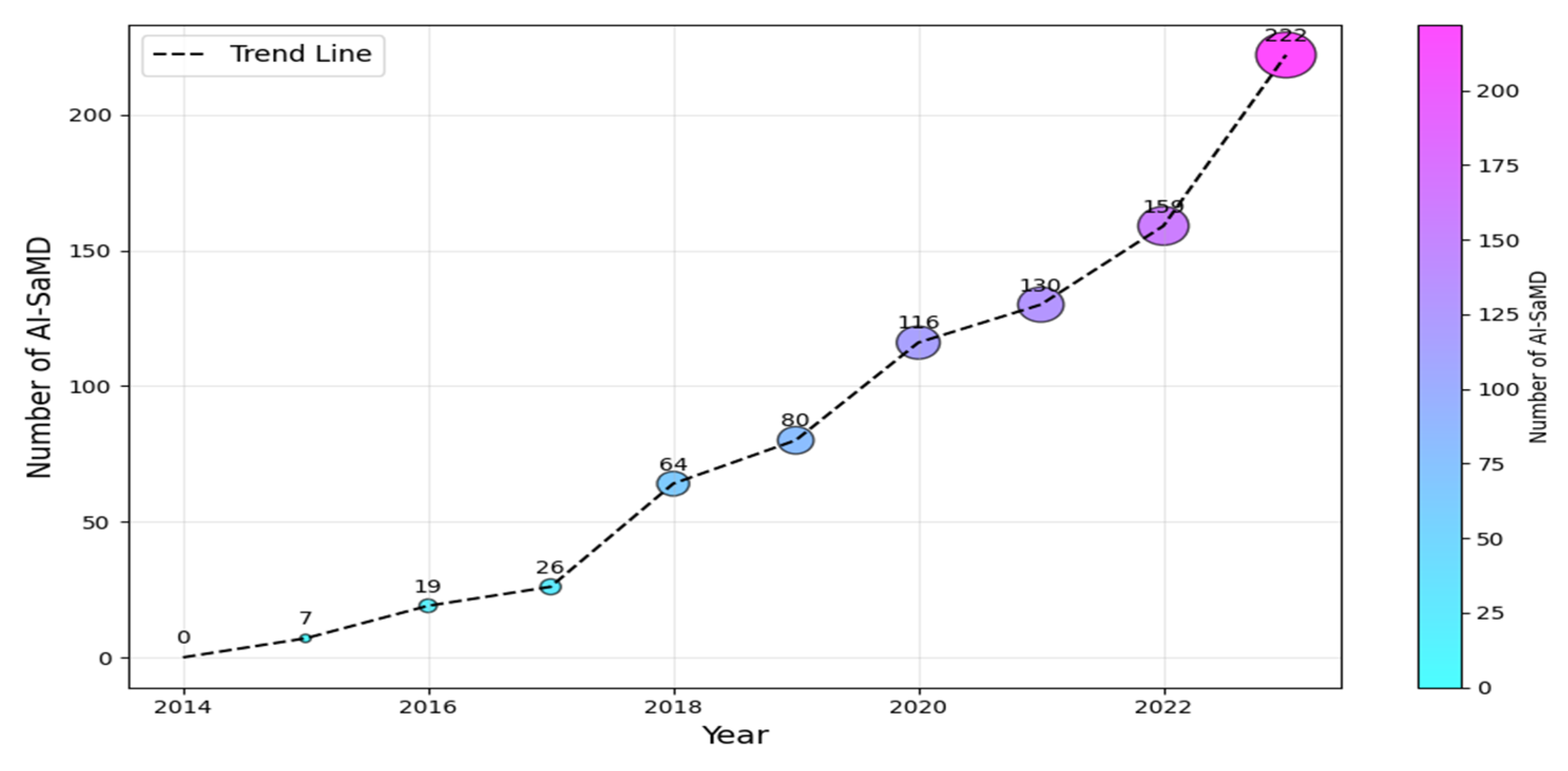

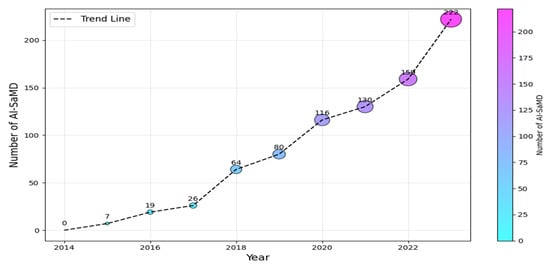

According to the International Medical Device Regulators Forum (IMDRF (https://www.imdrf.org/)), software as a medical device (SaMD) is software intended for medical purposes independent of physical devices [1]. It operates independently of any dedicated medical hardware and can run on general-purpose platforms such as smartphones or computers. The US Food and Drug Administration (FDA (https://www.fda.gov/)) adopts a similar interpretation but emphasizes that SaMD must adhere to its classification rules based on risk to patients (Class I, II, or III). The importance of SaMD is evident in its growing adoption and transformative impact on healthcare. In 2023, the global SaMD market was valued at USD 1.1 billion and was projected to grow at a compound annual growth rate of over 16%, reaching USD 5.4 billion by 2032 [2,3]. When SaMD incorporates artificial intelligence (AI) algorithms for medical purposes, it is called AI-based SaMD (hereafter, AI-SaMD). These purposes can include diagnosing diseases, predicting patient outcomes, recommending treatments, or detecting anomalies in medical data (such as radiographs or ECG signals). AI tools bring several benefits: for example, they can analyze radiology images (X-rays and CT scans) to identify tumors or other abnormalities and assess a patient’s risk of heart disease using prediction models based on historical records and genetic information [3,4]. In addition, AI algorithms can automatically adjust insulin doses for diabetic patients based on real-time glucose readings. Figure 1 shows the increasing number of approved AI-SaMDs between 2015 and 2023. More than 50% (472 out of 942) of AI-SaMDs were approved in the last three years, and the number of approved devices is clearly increasing every year [5]. Table 1 summarizes the key differences between SaMD and AI-SaMD.
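As a rough consistency check on the market and approval figures quoted above, the growth rate implied by two endpoint values can be computed directly. The sketch below assumes a nine-year horizon (2023 to 2032), which is our reading of the dates in the text rather than something stated by the cited sources; the function name `implied_cagr` is hypothetical.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate linking two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the text: USD 1.1 billion (2023) and USD 5.4 billion (2032).
# The nine-year horizon is an assumption made for this illustration.
market_cagr = implied_cagr(1.1, 5.4, years=9)

# Share of AI-SaMD approvals granted in the last three years (472 of 942).
recent_share = 472 / 942

print(f"Implied CAGR: {market_cagr:.1%}")
print(f"Recent approvals share: {recent_share:.1%}")
```

Under these assumptions the implied rate is roughly 19%, which is consistent with the "over 16%" projection, and the recent-approval share is just above one half.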

Figure 1.

Number of AI-SaMD versus years (2015–2023).

Table 1.

Differences between SaMD and AI-SaMD.

In general, this field is still emerging, and it faces obstacles to implementation and deployment in real-world settings. These complexities span all aspects of human health research and stem not only from technological advancements but also from other sources such as healthcare providers and governmental agencies. Research in this domain spans various fields, including social care, welfare, bioengineering, AI, machine learning, software development, and citizen science [6]. However, our literature survey revealed critical areas of AI-SaMD that have received little attention from researchers. This paper focuses on these areas, aligning with the concerns and innovations driving research in this field, and examines the theory and practice of AI-SaMD in international contexts. The paper is structured as follows. Section 2 briefly describes the architecture of AI-SaMD. Section 3 explains the research methodology. Section 4 presents and discusses the results. Limitations of the study and future work are presented in Section 5. Finally, Section 6 concludes the paper.

2. AI-SaMD Architecture

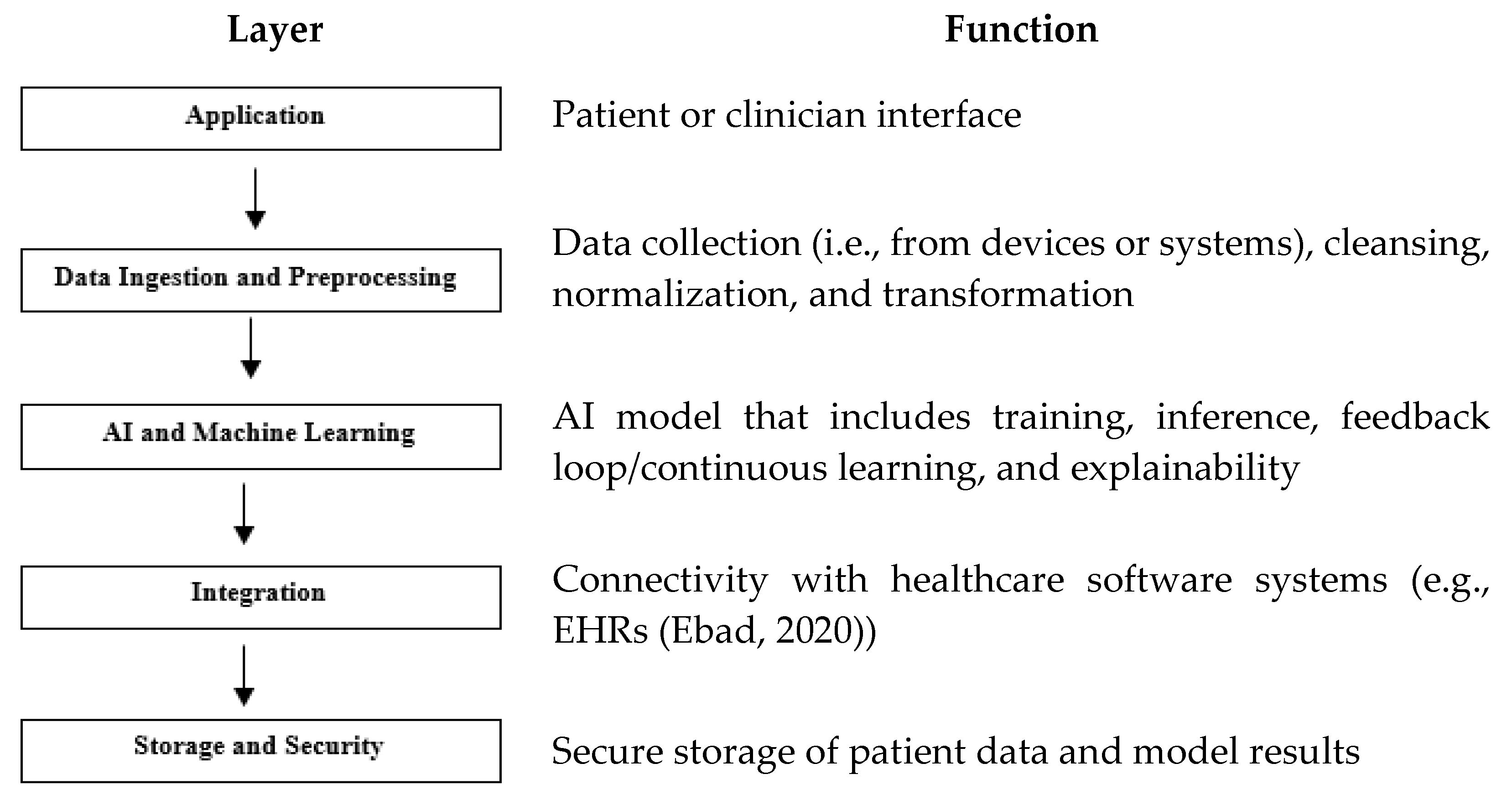

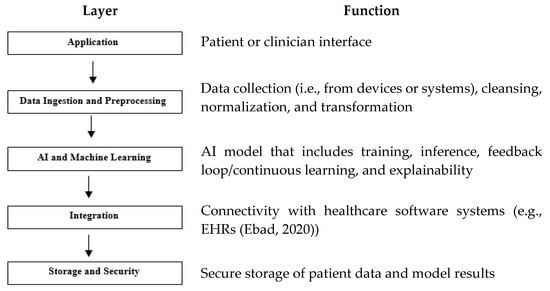

To meet quality standards, AI-SaMD’s architecture follows a multi-layered structure.

This structure, from a software-intensive systems perspective, facilitates incremental development. As layers are developed, their services can be gradually made available to users. This architecture also exhibits high changeability and portability [7]. Fundamentally, this approach organizes the system into hierarchical layers, each encapsulating related functionality. Layers provide services to those above them, with lower-level layers offering core services utilized throughout the system [7].

Figure 2 describes its layers with their functionalities. While AI-SaMD shares some architectural principles with traditional SaMD, it introduces additional layers for model training, inference, continuous learning, and explainability. These layers support the advanced capabilities of AI, making the architecture more complex but powerful in handling dynamic, data-intensive tasks.
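The layering principle described above, in which each layer uses only the services of the layer directly beneath it, can be illustrated with a minimal sketch. The layer names (`DataLayer`, `InferenceLayer`, `ExplainabilityLayer`, `IntegrationLayer`), the averaging rule, and the threshold are illustrative assumptions and do not reproduce the layers of Figure 2; a toy rule stands in for a trained model.

```python
from dataclasses import dataclass
from typing import Dict, List


class DataLayer:
    """Lowest layer: stores and serves patient measurements."""

    def __init__(self) -> None:
        self._records: Dict[str, List[float]] = {}

    def store(self, patient_id: str, values: List[float]) -> None:
        self._records[patient_id] = list(values)

    def fetch(self, patient_id: str) -> List[float]:
        return list(self._records[patient_id])


class InferenceLayer:
    """Uses only the data layer; a mean score stands in for a trained model."""

    def __init__(self, data: DataLayer, threshold: float = 0.5) -> None:
        self._data = data
        self.threshold = threshold

    def score(self, patient_id: str) -> float:
        values = self._data.fetch(patient_id)
        return sum(values) / len(values)


@dataclass
class Explanation:
    score: float
    threshold: float
    flagged: bool


class ExplainabilityLayer:
    """Uses only the inference layer; exposes why a case was flagged."""

    def __init__(self, inference: InferenceLayer) -> None:
        self._inference = inference

    def explain(self, patient_id: str) -> Explanation:
        score = self._inference.score(patient_id)
        threshold = self._inference.threshold
        return Explanation(score, threshold, score >= threshold)


class IntegrationLayer:
    """Top layer: packages results for an external system such as an EHR."""

    def __init__(self, explainer: ExplainabilityLayer) -> None:
        self._explainer = explainer

    def report(self, patient_id: str) -> Dict[str, object]:
        e = self._explainer.explain(patient_id)
        return {"patient_id": patient_id, "score": e.score,
                "threshold": e.threshold, "flagged": e.flagged}


data = DataLayer()
data.store("p1", [0.2, 0.9, 0.7])  # hypothetical normalized readings
integration = IntegrationLayer(ExplainabilityLayer(InferenceLayer(data)))
report = integration.report("p1")
print(report)
```

Because each class depends only on the one below it, a layer can be replaced (for example, swapping the toy rule for a trained model) without changing the layers above, which is the changeability property noted earlier.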

Figure 2.

AI-SaMD layers and their functions. Note that one example [8] is shown in the function of the Integration layer.

3. Research Approach

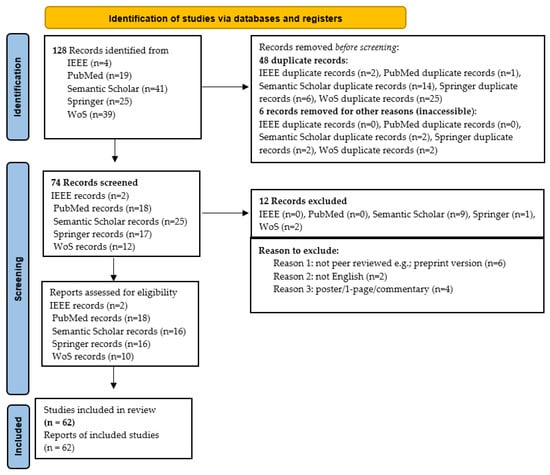

A systematic literature review (SLR) is a well-defined way to evaluate, identify, synthesize, and interpret high-quality studies regarding a particular research question or a specific subject [9]. Therefore, it helps identify research gaps and suggests future research directions. This SLR was conducted based on the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist [10]. It progressed through identification, screening, eligibility, and inclusion. The identification phase retrieved relevant literature from e-libraries on 1 November 2024. During screening, titles, abstracts, and keywords were reviewed to filter initial results. The eligibility phase then assessed the full texts of potentially relevant articles. Ultimately, publications meeting the inclusion criteria were selected to characterize the AI-SaMD elements. This SLR study was performed by a team of five academic faculty members. One author developed the study protocol, which was then critically reviewed by all other team members. All researchers participated in the phases of the study.

3.1. Question Formulation

To refine the focus of our research, this study explores five key research questions (RQs), outlined in Table 2 along with their corresponding motivations.

Table 2.

RQs with their motivations.

3.2. Search Strategy

The keywords utilized to address the RQs were AI, artificial intelligence, SaMD, and software as medical device. By combining these keywords with the logical operators “AND” and “OR”, the resulting search command was constructed as follows:

(AI OR artificial intelligence) AND (SaMD OR software as medical device).
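The construction of this search command can be sketched as follows: synonyms are joined with OR inside each group, and the groups are joined with AND. The helper name `build_query` is hypothetical, and individual databases may require their own quoting or field syntax, which is not modeled here.

```python
from typing import Sequence


def build_query(keyword_groups: Sequence[Sequence[str]]) -> str:
    """Join synonyms with OR inside each group and join the groups with AND."""
    clauses = ["(" + " OR ".join(group) + ")" for group in keyword_groups]
    return " AND ".join(clauses)


keyword_groups = [
    ["AI", "artificial intelligence"],
    ["SaMD", "software as medical device"],
]
search_command = build_query(keyword_groups)
print(search_command)
```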

3.3. Source Selection

The above search command was applied to each of the search engines of the sources. By using these strings, we were able to find studies about AI-SaMD. Prior to executing the review protocol, we conducted exploratory database searches to identify optimal repositories. The selected repositories returned high-quality publications on technology and/or health from reputable, internationally recognized journals. The following five sources were used to carry out our SLR:

- PubMed;

- Semantic Scholar;

- SpringerLink;

- Web of Science (WoS);

- IEEE Xplore.

3.4. Study Selection

Our SLR followed an iterative and incremental process. It is iterative because each search source was analyzed sequentially, completing one source before moving to the next. It is incremental in that the SLR document evolved with each iteration, expanding from an initial subset of sources to a comprehensive review. For inclusion, studies were selected based on the analysis of titles, abstracts, and keywords from the articles retrieved. Only studies written in English and published between 2015 and November 2024 were included, as this period reflects significant advancements in AI [4]. The following exclusion criteria were applied to the selected abstracts and titles:

- Exclude papers unrelated to AI-SaMD, as it is the primary focus.

- Exclude MS/PhD theses, posters, technical reports, and commentary articles.

- Exclude duplicate studies.

- Exclude non-peer-reviewed studies, such as those from preprint repositories.
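The inclusion and exclusion criteria above can be sketched as a simple filter over bibliographic records. This is a minimal illustration, not the tooling used in this review: the `Record` fields, the title-based duplicate check, and the example records are all hypothetical, relevance to AI-SaMD is assumed to be a reviewer's judgment recorded as a flag, and the November 2024 cut-off is simplified to the publication year.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set

EXCLUDED_TYPES = {"thesis", "poster", "technical report", "commentary"}


@dataclass(frozen=True)
class Record:
    title: str
    year: int
    language: str
    doc_type: str
    peer_reviewed: bool
    about_ai_samd: bool  # reviewer's judgment from title, abstract, and keywords


def screen(records: Iterable[Record]) -> List[Record]:
    """Return the records that pass every inclusion and exclusion criterion."""
    seen_titles: Set[str] = set()
    kept: List[Record] = []
    for record in records:
        key = record.title.strip().lower()
        if key in seen_titles:  # duplicate study
            continue
        seen_titles.add(key)
        if record.language != "English":
            continue
        if not 2015 <= record.year <= 2024:
            continue
        if not record.about_ai_samd:
            continue
        if record.doc_type in EXCLUDED_TYPES:
            continue
        if not record.peer_reviewed:
            continue
        kept.append(record)
    return kept


# Hypothetical records used only to exercise the filter.
candidates = [
    Record("Study A", 2021, "English", "journal", True, True),
    Record("study a", 2021, "English", "journal", True, True),   # duplicate
    Record("Study B", 2013, "English", "journal", True, True),   # outside period
    Record("Study C", 2022, "English", "thesis", True, True),    # excluded type
    Record("Study D", 2023, "English", "journal", False, True),  # preprint
]
selected = screen(candidates)
print([record.title for record in selected])
```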

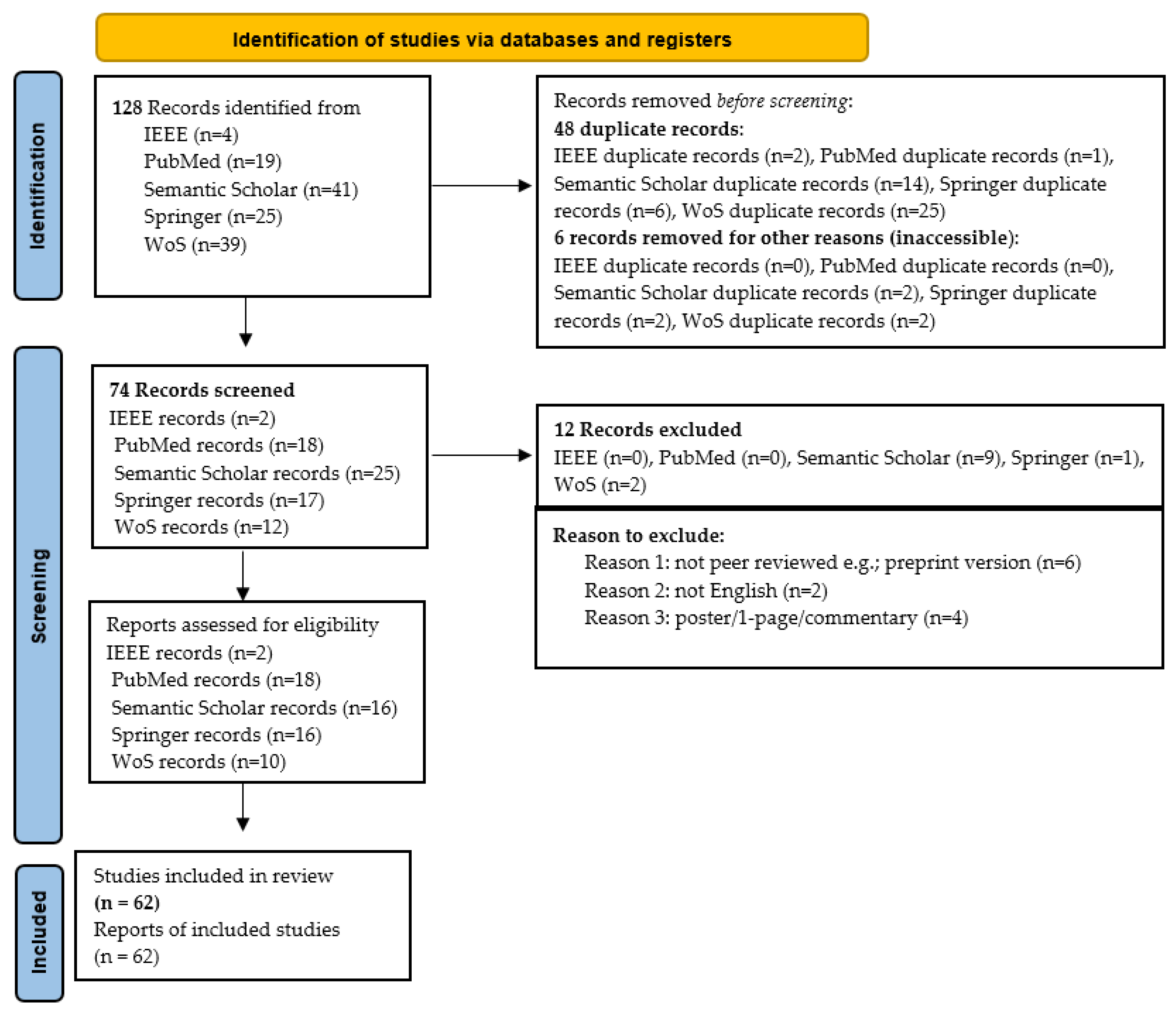

When ambiguity remained after reviewing the title and abstract, the full paper was examined. Figure 3 illustrates the details of how this SLR was carried out, highlighting the number of articles included and excluded at each stage. A total of 128 studies were initially identified; after applying inclusion and exclusion criteria, 62 were classified as primary studies. Table 3 provides a comprehensive breakdown of each iteration, cross-referenced with study numbers. Note that references cited within primary studies were not included in this SLR.

Figure 3.

Procedure for conducting the SLR based on the PRISMA statement.

Table 3.

Distribution of studies following inclusion and exclusion criteria (2015–2024).

3.5. Information Extraction

As depicted in Figure 3, data extraction from the primary studies was conducted using a standardized information extraction form (Appendix A). This form, based on the search string and identified AI-SaMD studies, captured key details from each publication. The data extraction format included the following columns: study title, journal/magazine name, publication date, author(s), methodology used, study results, study objectives, country where the study was conducted, challenges associated with AI-SaMD, clinical applications or disease types where AI-SaMD was applied, data analysis details, key factors for future work, and recommendations. This structured approach ensured comprehensive information capture, facilitating an in-depth review of AI-SaMD research.
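One way to picture the extraction form is as a typed record with one field per column listed above. The sketch below is an assumption about how such a form could be represented in code; the class name `ExtractionRecord`, the field names, and the placeholder values are hypothetical and do not reproduce Appendix A verbatim.

```python
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ExtractionRecord:
    """One row of the extraction form; one field per column in the text."""
    study_title: str
    venue_name: str
    publication_date: str
    authors: List[str]
    methodology: str
    objectives: str
    results: str
    country: str
    challenges: List[str] = field(default_factory=list)
    clinical_applications: List[str] = field(default_factory=list)
    data_analysis: str = ""
    future_work_factors: List[str] = field(default_factory=list)
    recommendations: List[str] = field(default_factory=list)


# Placeholder values only; they do not describe any primary study.
example = ExtractionRecord(
    study_title="<title>",
    venue_name="<journal or proceedings>",
    publication_date="<year>",
    authors=["<author>"],
    methodology="non-practical",
    objectives="<objectives>",
    results="<results>",
    country="<country>",
    challenges=["regulatory approval"],
)
row = asdict(example)
print(sorted(row))
```

Keeping list-valued columns (challenges, applications, recommendations) as lists makes later frequency counts straightforward.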

4. Results and Discussion

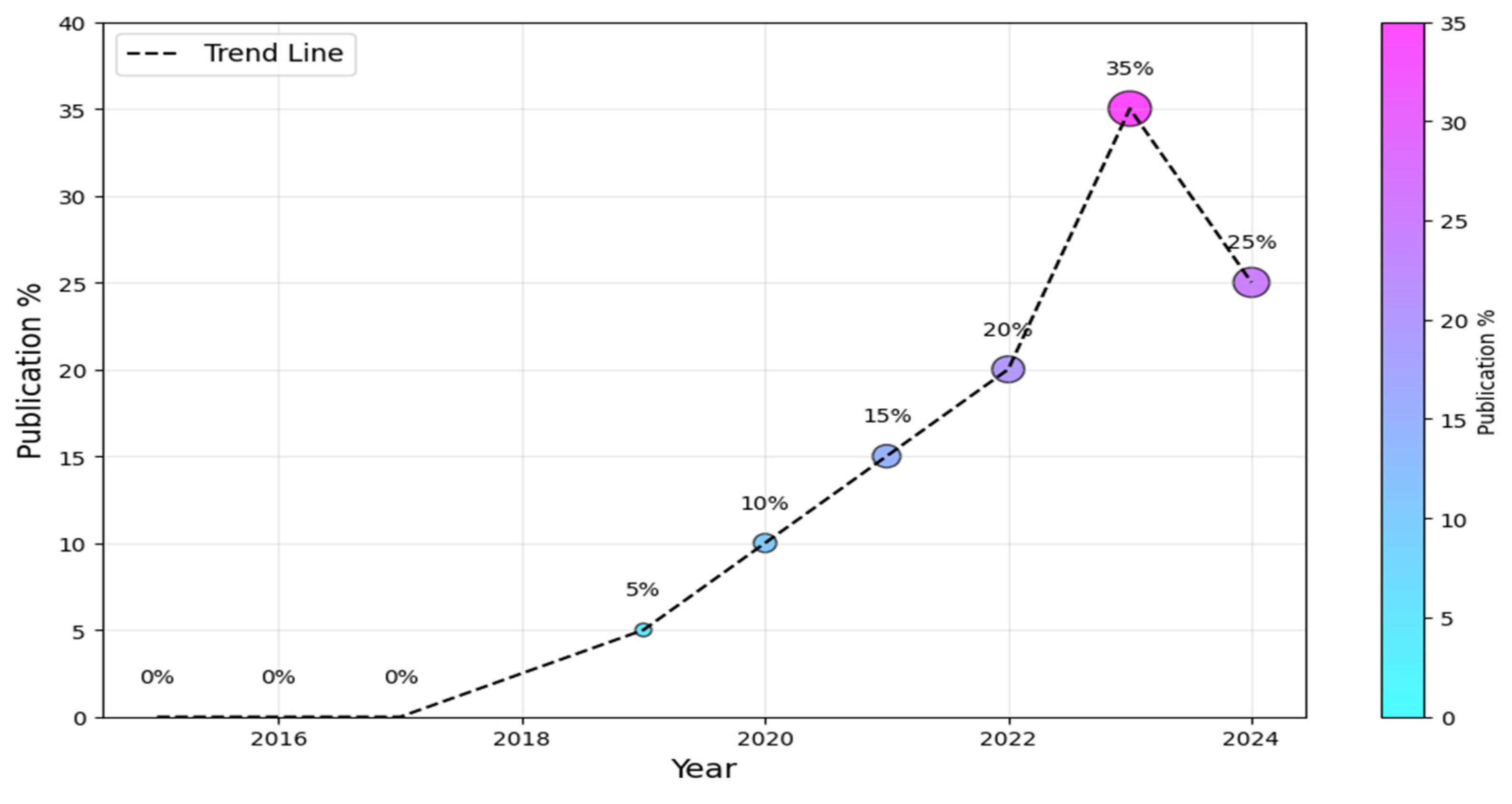

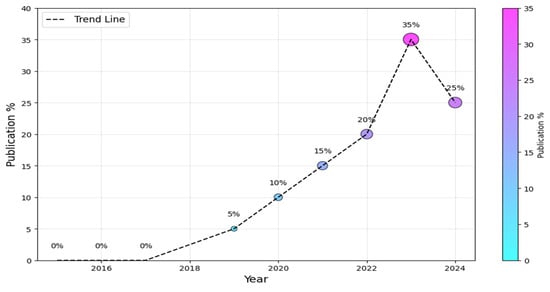

We first examine the prevailing research trends within the AI-SaMD domain, which spans both the information technology and healthcare sectors. Figure 4 depicts the annual distribution of studies. Because the field of AI-SaMD is still emerging, there was a substantial increase in publications in the second half of the period (i.e., 2019–2024) compared with the first half, in which there were no publications at all. The dotted trend line suggests a positive overall trend in the percentage of publications over time, despite the slight drop in 2024. This drop arises because not all empirical studies conducted in 2024 had been published when this study was performed, although the 2024 figure is still higher than in the earlier years of the period. This trend indicates the growing importance of AI-SaMD in recent years.

Figure 4.

Publication trends of AI-SaMD.

4.1. Analysis of Publication Venues and Source Types (RQ1)

To address RQ1, this study investigated the publication venues and source types within the field of AI-SaMD. We limited our analysis to studies published in the five major libraries listed in Table 3. These studies appeared in four publication types: journal articles, conference proceedings, workshop papers, and book chapters. Table 4 presents the distribution of selected studies by publication type. Most studies were published in journals (c. 57, 92%), while conferences, workshops, and book chapters accounted for (c. 3, 4.8%), (c. 1, 1.6%), and (c. 1, 1.6%), respectively. A likely rationale is that research on AI-SaMD often demands a higher degree of rigor and validation, as well as more space for reporting, which makes journals more appropriate venues for this type of research. However, as the field grows, more specialized conferences, workshops, and symposia dedicated to the intersection of AI and healthcare, including AI-SaMD, may emerge. Table 4 also shows that (c. 50, 81%) of the primary papers were retrieved from the PubMed, Semantic Scholar, and Springer libraries. The IEEE library contributed the fewest studies, because its overall scope is technological innovation rather than healthcare, which is the focus of PubMed.
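The percentages reported above follow directly from the study counts. The short sketch below recomputes them from the counts given in the text (57, 3, 1, and 1 out of 62 primary studies); the helper name `shares` is hypothetical.

```python
from typing import Dict


def shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Convert raw counts into percentages of the overall total."""
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


# Counts by publication type as reported in the text.
venue_counts = {"journal": 57, "conference": 3, "workshop": 1, "book chapter": 1}
venue_shares = shares(venue_counts)

for name, pct in venue_shares.items():
    print(f"{name}: {venue_counts[name]} studies ({pct:.1f}%)")
```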

Table 4.

Study distribution by publication venue.

Table 4 shows that PubMed is the primary source of publications in the AI-SaMD area, followed by Semantic Scholar and Springer. Table 5 identifies the key journals that publish papers on AI-SaMD, listing the venues in which two or more of the primary studies appeared. The Korean Journal of Radiology published the highest number of studies in the current domain, with a frequency of three. It is followed by npj Digital Medicine, International Journal of Computer Assisted Radiology and Surgery, Healthcare (MDPI), Australian Journal of Dermatology, and Emergency Radiology, with two papers each.

Table 5.

Publication venues with multiple selected studies.

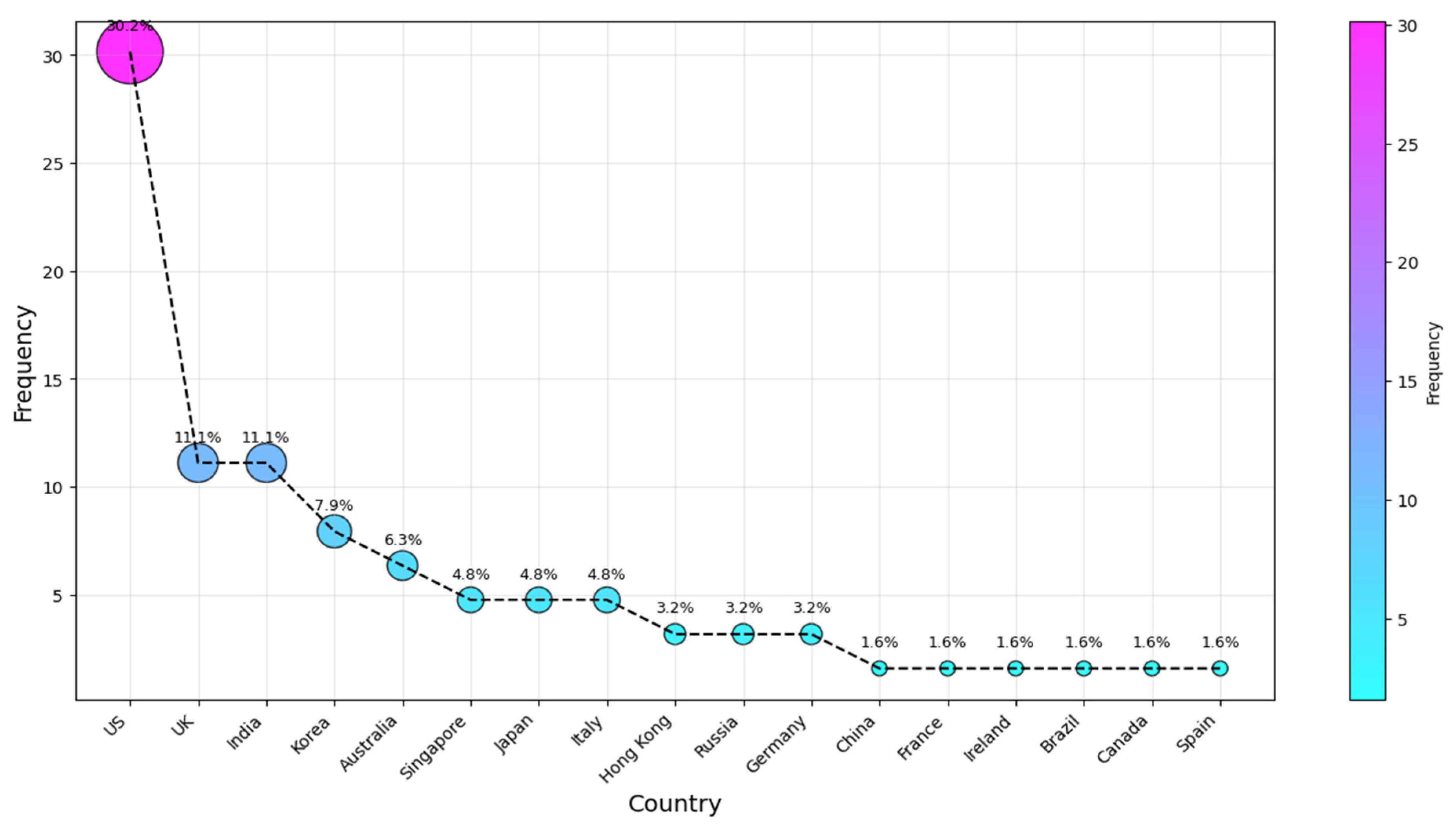

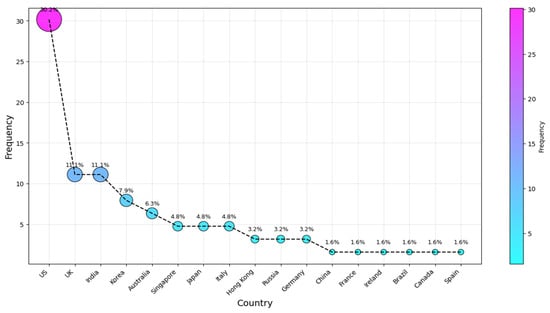

4.2. Demographic Trends (RQ2)

To identify and rank the most active countries in AI-SaMD research, the authors’ affiliations were analyzed. This ranking addresses RQ2 by determining the countries where researchers publishing in this field are based. We analyzed the affiliation information provided in each paper, using the country of the first author, even if the author had moved since the publication. Several review articles (e.g., [9,73]) have used this method to assess national research activity, making it a well-established bibliometric approach. Typically, the first author is the primary contributor, and their affiliation at publication reflects where the research was conducted. While alternative methods exist, this approach remains widely accepted, as it aligns with prior studies and minimizes biases from multi-author collaborations. Potential researcher mobility does not significantly affect its validity, as national funding, institutional resources, and local expertise play a key role. Figure 5 shows the top ten countries of affiliation for the studies we considered. The results for RQ2 indicate that the five countries most frequently associated with the studies are the US (c. 19, 31%), the UK and India (tied at c. 7, 11% each), Korea (c. 5, 8%), and Australia (c. 4, 6.5%). They were followed by authors from Singapore, Japan, and Italy, who contributed approximately 5% each. Researchers from Russia and Hong Kong came next, each with a 3.2% share. The remaining articles were published across various countries, each with a frequency of one publication, as illustrated in Figure 5.

Figure 5.

Country of publication.

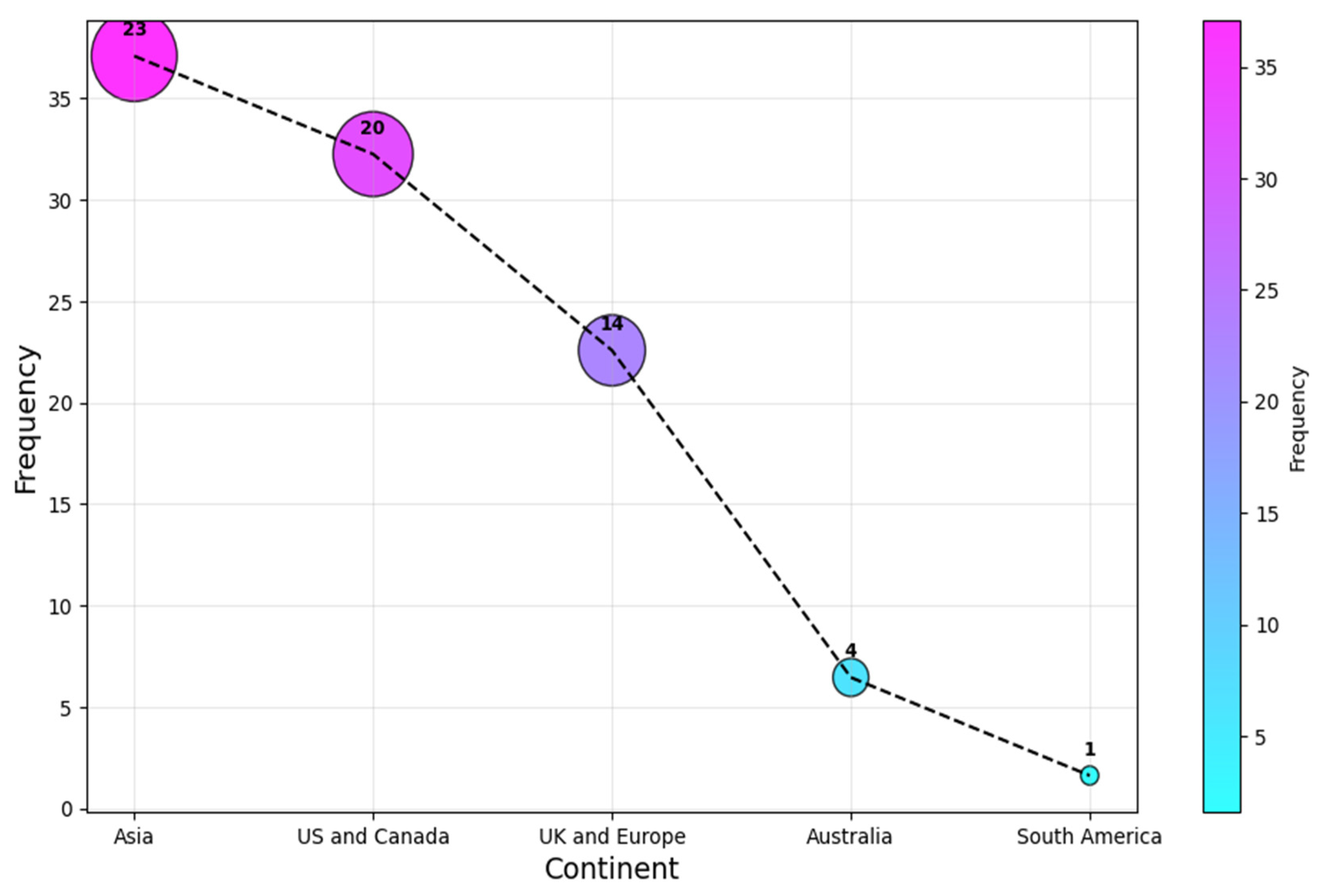

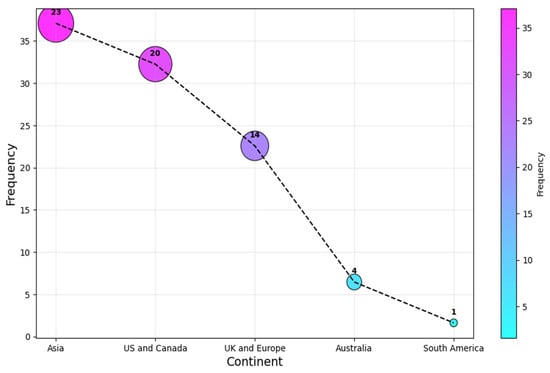

At the continental level, Asia leads with a (c. 23, 37%) share, followed by the US and Canada with (c. 20, 32%) and the UK and Europe with (c. 14, 23%). Australia and South America were the least represented, with shares of (c. 4, 6%) and (c. 1, 2%), respectively. This result is shown in Figure 6. The research is concentrated in a small number of regions, as the four most affiliated countries account for over 60% of all the affiliations. This highlights the need for more research on AI-SaMD across different countries to better understand the impact of sociocultural differences, particularly in Africa, a continent not represented among the selected studies.
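The concentration claim can be checked from the counts reported in this subsection. The sketch below uses the first-author country counts given in the text for the five leading countries and the regional counts behind Figure 6; the variable names are hypothetical, and countries with fewer studies are omitted from the country tally.

```python
from collections import Counter

TOTAL_STUDIES = 62

# First-author country counts for the leading countries, as reported in the text.
country_counts = Counter({"US": 19, "UK": 7, "India": 7, "Korea": 5, "Australia": 4})

# Regional counts as reported in the text.
region_counts = Counter({
    "Asia": 23,
    "US and Canada": 20,
    "UK and Europe": 14,
    "Australia": 4,
    "South America": 1,
})

top_four_total = sum(count for _, count in country_counts.most_common(4))
top_four_share = top_four_total / TOTAL_STUDIES

print(f"Top four countries: {top_four_total} studies ({top_four_share:.1%})")
for region, count in region_counts.most_common():
    print(f"{region}: {count} ({count / TOTAL_STUDIES:.0%})")
```

The regional counts sum to the 62 primary studies, and the four leading countries together account for 38 of them, which is the "over 60%" figure stated above.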

Figure 6.

Continent of publication.

4.3. Analysis Based on Research Strategy and Clinical Environment (RQ3.1 and 3.2)

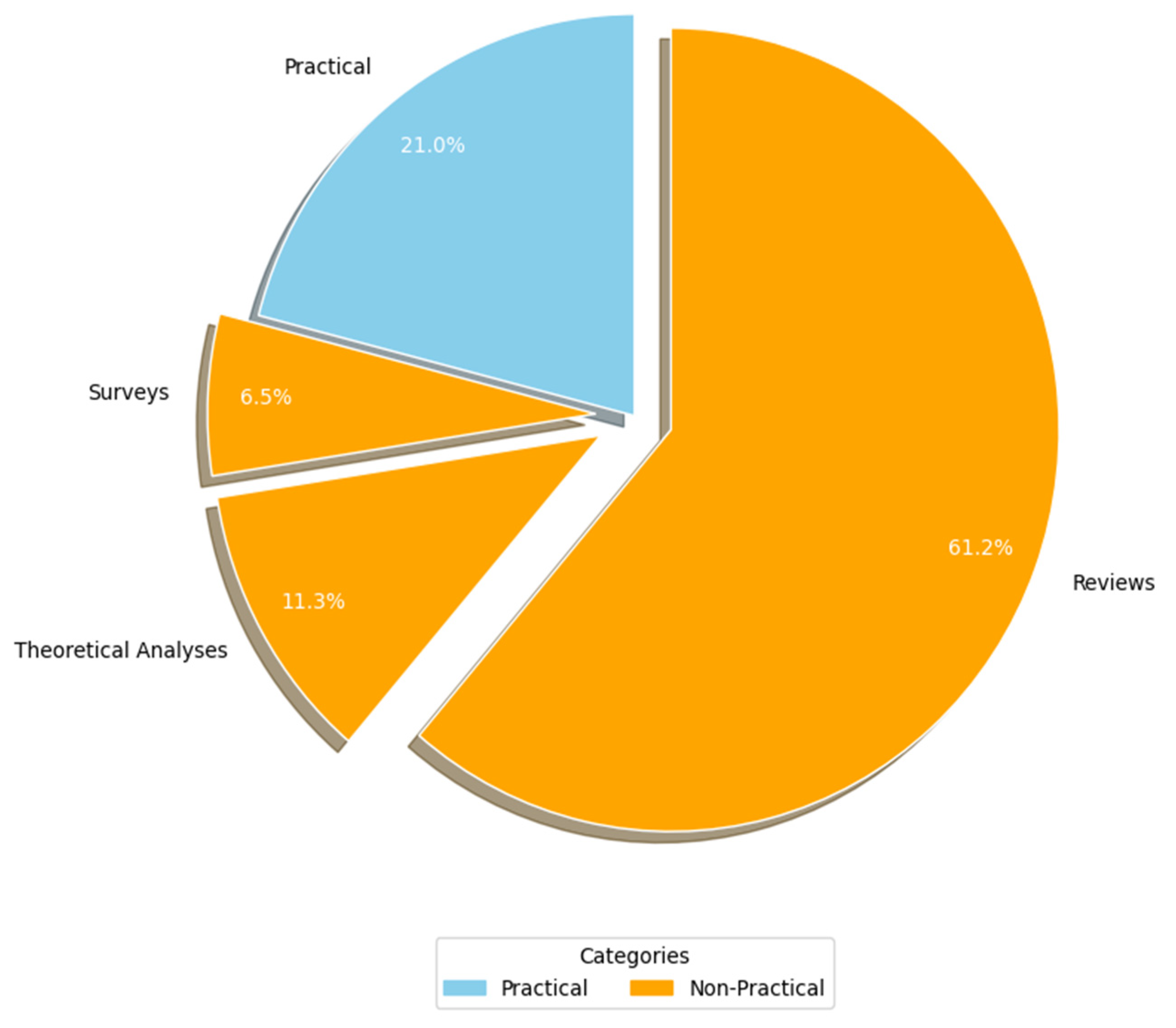

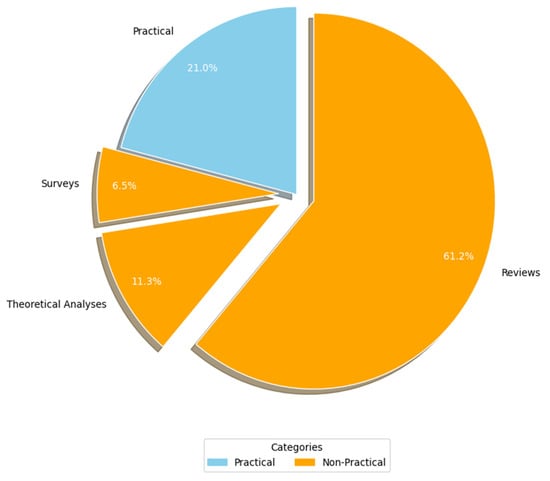

Figure 7 (RQ3.1) presents the breakdown of the studies by research methodology. The primary studies were categorized into two research approaches: practical and non-practical. Practical studies involve implementation or real-world application to generate results (e.g., experiments, case studies, and simulations). In contrast, non-practical studies rely on theoretical analyses, reviews, surveys, and questionnaires to derive insights without direct practical implementation. A significant majority of the AI-SaMD research (c. 49, 79%) adopted non-practical approaches, primarily through reviews (c. 38, 61.3%), theoretical analyses (c. 7, 11.3%), and surveys (c. 4, 6.5%). A potential explanation for the scarcity of practical studies is that working with real-world case studies from healthcare environments is often hindered by confidentiality issues. In other words, publishing details about a participating healthcare organization, including the specific AI-SaMD outcomes used by that organization, could breach confidentiality. Because most of the articles in this study were non-practical, their data were analyzed qualitatively.
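The practical versus non-practical categorization described above amounts to a lookup on the study type. The following sketch encodes the definitions given in the text and recomputes the non-practical total from the reported counts; the function name `classify_strategy` is hypothetical, and the practical total is derived here by subtraction rather than quoted from the text.

```python
PRACTICAL_TYPES = {"experiment", "case study", "simulation"}
NON_PRACTICAL_TYPES = {"review", "theoretical analysis", "survey", "questionnaire"}


def classify_strategy(study_type: str) -> str:
    """Map a study type to the practical or non-practical category."""
    key = study_type.strip().lower()
    if key in PRACTICAL_TYPES:
        return "practical"
    if key in NON_PRACTICAL_TYPES:
        return "non-practical"
    raise ValueError(f"Unknown study type: {study_type!r}")


TOTAL_STUDIES = 62

# Non-practical counts as reported in the text.
non_practical_counts = {"review": 38, "theoretical analysis": 7, "survey": 4}
non_practical_total = sum(non_practical_counts.values())

# Derived by subtraction; the text does not state this number explicitly.
practical_total = TOTAL_STUDIES - non_practical_total

print(classify_strategy("Review"))
print(f"Non-practical: {non_practical_total} ({non_practical_total / TOTAL_STUDIES:.0%})")
print(f"Practical: {practical_total} ({practical_total / TOTAL_STUDIES:.0%})")
```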

Figure 7.

Study strategy used.

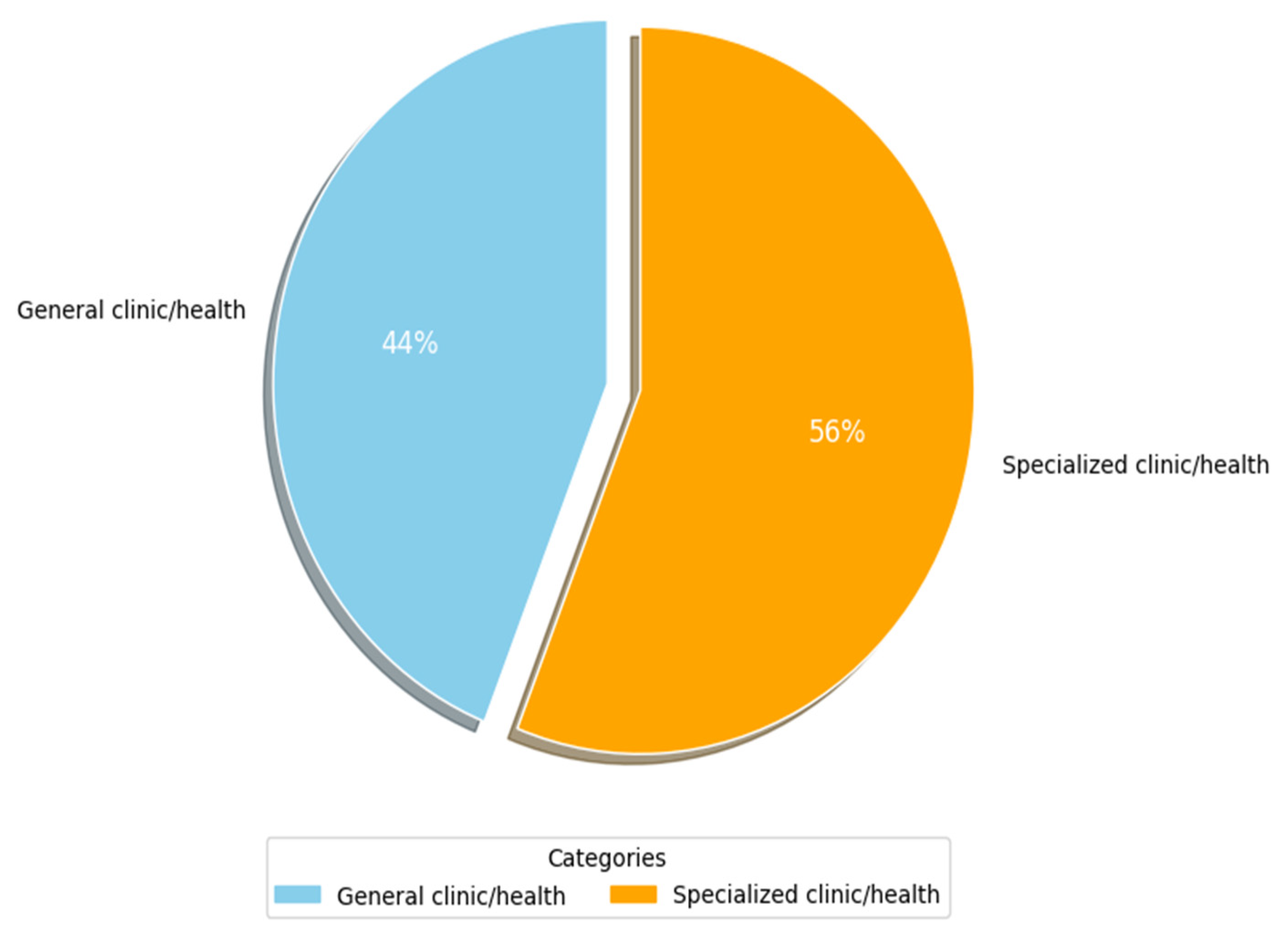

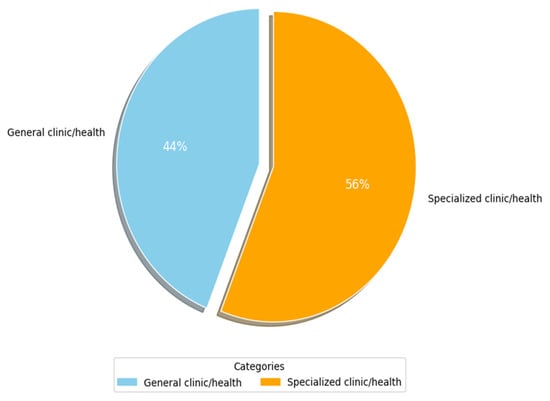

Figure 8 shows the main types of clinics and medical environments that have been used by researchers (RQ3.2). More than 50% of researchers (c. 35, 56%) focused on specialized clinics in their studies. Table 6 lists the specialized clinics that were identified. Column 2 shows the primary study numbers with the sub-categories of such clinics, while columns 3 and 4 show the frequency and percentage of occurrence for each attribute as it appeared in the primary studies. Nine key environments were identified. The most widely studied specialized environment is radiology (c. 11, 17.7%), followed by clinical administration with a share of (c. 5, 8.1%). Next are clinical trials, ophthalmology, and cancer contexts, with a (c. 3, 4.8%) share each. They are followed by dermatology, geriatric and children care, and cardiovascular clinics with a (c. 2, 3.2%) share each. The specialized clinics for kidney diseases, hypertension, Alzheimer’s disease, and nuclear medicine are the least widely considered in the primary studies of this SLR, with a (c. 1, 1.6%) share each. The last column lists examples of clinic/disease categories and their respective study counts. Table 6 also shows that many studies that did not focus on a specialized clinic (i.e., the general clinic shown in Figure 8) addressed issues related to the regulatory frameworks and guidelines of AI-SaMD. A plausible explanation for these findings is that AI-based SaMD has progressed rapidly in radiology diagnostics because image-based data are well suited to AI applications such as pattern recognition, anomaly detection, and diagnostics. AI models thrive in environments where large, structured datasets are available, and medical imaging (CT, MRI, and X-rays) provides this. This makes radiology an ideal testing ground for SaMD solutions. 
In contrast, areas like kidney diseases, hypertension, and Alzheimer’s often rely on less uniform data, such as lab tests, clinical observations, and patient history, which are harder to standardize and analyze using current AI methods. Additionally, the general health environments have large commercial markets due to their broader applicability. Companies developing AI-based SaMD solutions often prioritize fields with a higher potential return on investment. Fields like Alzheimer’s or kidney diseases, while critical, may not offer the same immediate commercial benefits, slowing down focused research and deployment.

Figure 8.

Clinic/medical environment used.

Table 6.

The primary environments in which the researchers applied AI-SaMD.

4.4. Analysis Based on Challenges (RQ4)

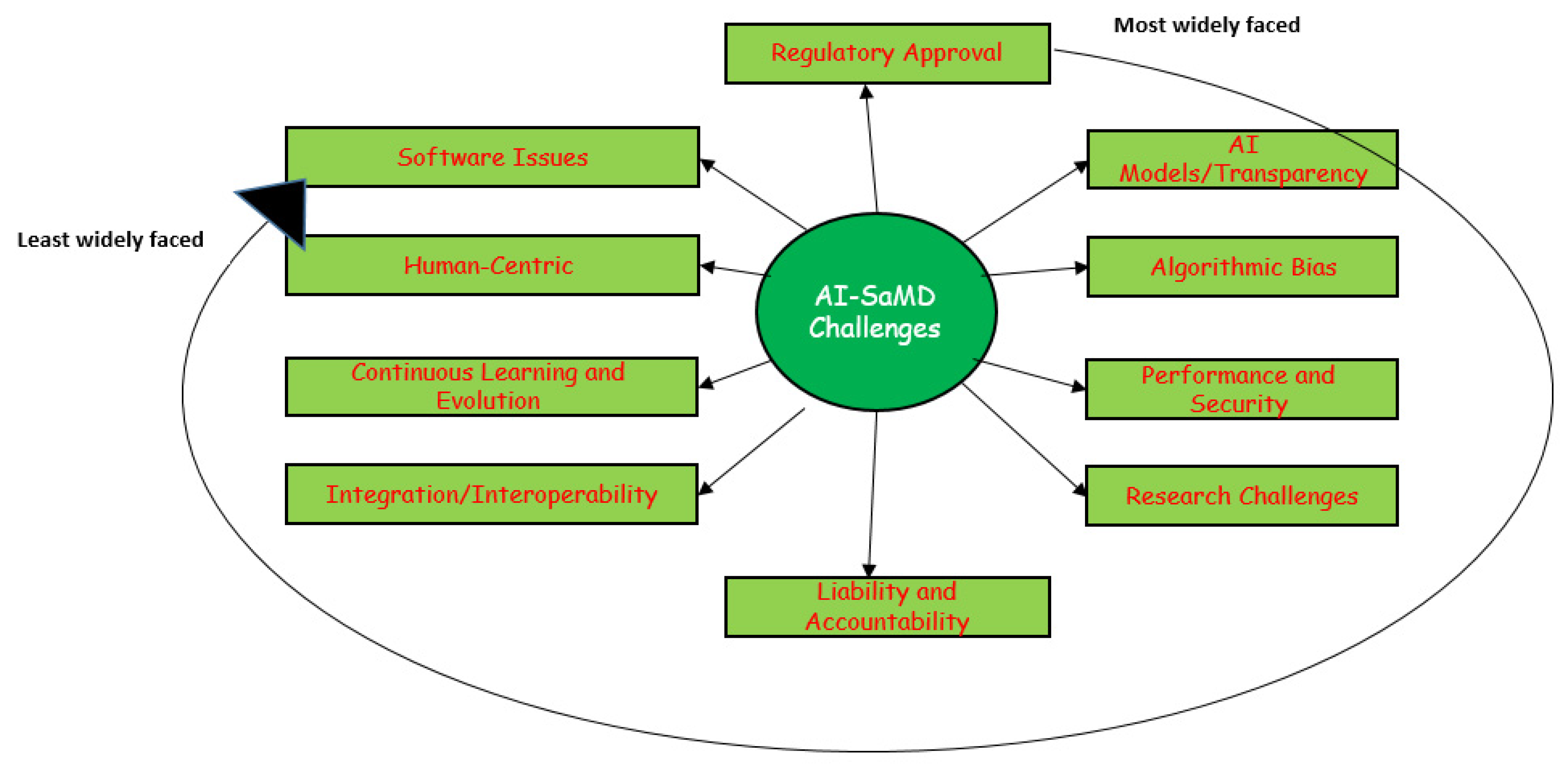

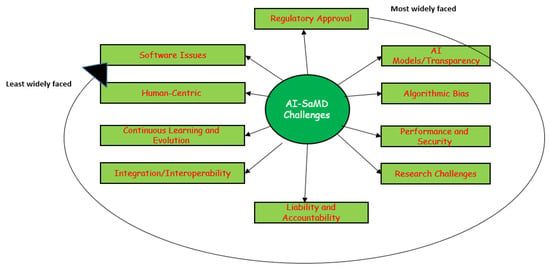

Developing AI-SaMD solutions with retrospective data faces significant challenges. Figure 9 illustrates the 10 most common issues and difficulties faced by researchers and clinicians when using AI-SaMD (RQ4 in this SLR). They are, in order, regulatory approval, “black-box” AI models/transparency, algorithmic bias, performance and security, research challenges, liability and accountability, integration/interoperability, continuous learning and evolution, human-centric factors, and software issues.

Figure 9.

The most widely faced challenges that researchers encountered when using AI-SaMD.

Table 7 provides a detailed breakdown of the challenges identified in our study. The first column lists the specific problems, the second column explains each problem in more detail, and the next three columns show the number of the study where the challenge was found, how often it occurred, and the percentage of studies that had this challenge. Due to space constraints, we classified the most significant challenges into four groups.

Table 7.

The common challenges of AI-SaMD.

4.4.1. Regulatory Frameworks Around the World (Challenges 1 and 6)

Approximately 70% of AI-SaMD researchers focus on regulatory issues, as these are crucial not only for technology and health but also for national security (e.g., medical image ownership [75]). AI-SaMDs must comply with local regulations to be marketed and used on patients. In the EU, compliance leads to Conformité Européenne (CE) marking, while in the US, the FDA handles approvals. Post-Brexit, the UK introduced the United Kingdom Conformity Assessment (UKCA) mark for medical devices, with a transition period until mid-2023 [76]. Navigating diverse regulatory frameworks is complex for companies, though countries like Switzerland have eased this process by accepting FDA-approved devices [77].

4.4.2. AI Model (Challenges 2 and 3)

AI-SaMDs often rely on black-box solutions, where the underlying algorithms generate outputs without providing clear insights into the decision-making process. This lack of transparency can hinder the assessment of AI-generated results, making it challenging to justify medical decisions, especially in the context of potential malpractice liability claims. To address these concerns, the interpretability and explainability of AI tools have emerged as crucial strategies. Interpretable AI employs transparent or “white-box” algorithms, such as linear models or decision trees, which are readily understandable. In contrast, explainable AI uses a secondary algorithm to provide post hoc explanations for the outputs of black-box models. However, current techniques may not be sufficiently robust to explain the complex, individual-level decisions made by black-box AI systems [78,79].
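The distinction between interpretable and post hoc explainable approaches can be illustrated with a small sketch. Here a linear "white-box" scorer is explained by its own weights, while an opaque scorer is probed from the outside with a simple occlusion-style attribution (replace one input with a baseline and record the change in output). Both models, the weights, and the function names are hypothetical, and occlusion is used only as one simple post hoc technique; it is not claimed to be the method discussed in [78,79].

```python
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]

WEIGHTS = [0.5, 0.25]  # hypothetical coefficients of the interpretable model


def white_box_model(x: Sequence[float]) -> float:
    """Interpretable linear score: the weights themselves are the explanation."""
    return sum(w * v for w, v in zip(WEIGHTS, x))


def black_box_model(x: Sequence[float]) -> float:
    """Opaque nonlinear stand-in with an interaction between the two inputs."""
    return x[0] * x[1] + max(x[0], x[1]) ** 2


def occlusion_attribution(model: Model, x: Sequence[float],
                          baseline: Sequence[float]) -> List[float]:
    """Post hoc attribution: output change when each input is set to its baseline."""
    full_output = model(x)
    attributions = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]
        attributions.append(full_output - model(occluded))
    return attributions


x = [2.0, 4.0]
baseline = [0.0, 0.0]
white_attr = occlusion_attribution(white_box_model, x, baseline)
black_attr = occlusion_attribution(black_box_model, x, baseline)

print("white-box:", white_attr, "output:", white_box_model(x))
print("black-box:", black_attr, "output:", black_box_model(x))
```

For the linear model the attributions add up to the model output, whereas for the nonlinear model they do not, which gives a small-scale illustration of why post hoc explanations of individual black-box decisions can be unreliable.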

4.4.3. Human-Centric Factors (Challenge 9)

This challenge includes various human and organizational factors such as significant inter-expert variability in diagnosis [15], limited clinical understanding of how AI reaches decisions [35], lack of human resources [36], trust between clinicians and AI systems [40], the need for stakeholder collaboration [45], staff training [54], limited user acceptance [60], the need for guidelines on AI-SaMD use [65], and skills related to data governance [36]. Miscommunication is central to these factors. It occurs on two fronts: (1) ineffective communication among stakeholders and (2) miscommunication between healthcare professionals and AI systems. These communication breakdowns contribute to diminished trust in AI-SaMD [40], limited user acceptance [60], and the need for comprehensive staff training [54]. According to [7], misunderstanding often stems from loosely defined terminology. While it is assumed that stakeholders in a meeting are using the same terms with a shared understanding, in reality, their interpretations may vary significantly. This misalignment can lead to prolonged debates, misunderstandings, and poor decision making. Such issues appear to be a facet of human nature and are not exclusive to terminology related to technology [80].

4.4.4. Data Governance (Challenges 4 and 5)

Limited access to large datasets like EHRs is an obstacle to implementing AI-SaMD. This stems from data protection laws such as the General Data Protection Regulation (GDPR; https://gdpr-info.eu/, accessed on 30 March 2025) in Europe and the need for specific patient consent [28]. Public data on dataset validation and testing across algorithms are insufficient, making it difficult to assess generalization or detect potential biases (e.g., demographic bias) [27]. Moreover, the collection and sharing of extensive datasets via open platforms such as OpenNeuro (a free and open platform for sharing neuroimaging data; https://openneuro.org/) can present significant challenges, notably in terms of ownership and privacy. As shown in Table 6, radiology is the specialized clinical setting most often studied by researchers. While healthcare facilities in the US generally hold “ownership rights” over imaging data, patients retain certain rights, and their privacy must be protected under laws like HIPAA in the US [81]. Copyright concerns are minimal, as medical images are often not copyrightable, and datasets are typically distributed under CC0 licenses to avoid legal complications. Ultimately, patient privacy, rather than ownership or copyright, is the primary challenge in sharing medical imaging data [76,81].

4.5. Analysis on Researcher Recommendations (RQ5)

The current literature offers several recommendations that still need to be implemented in real healthcare environments and that could help improve AI-SaMD quality. Such recommendations can also serve as a roadmap for further research in this field. Table 8 shows the most important detailed recommendations made by the researchers. Column one lists the recommendations or research directions that were found in our study. Column two briefly provides more details about each recommendation, column three displays the corresponding primary study number, and columns four and five present the frequency and percentage of recommendations.

Table 8.

The suggestions most commonly recommended by researchers for implementation in clinical settings.

Table 8 shows that over 50% of the researchers consider the most important recommendation to be the one addressing the role of regulatory authorities (#1 in Table 8). Regulatory authorities play a central role: their involvement ensures that AI-SaMDs are safe, effective, and reliable while fostering innovation and public trust. Currently, their role might be focused on four aspects: (a) establishing clear guidelines and standards, (b) facilitating innovation through flexible pathways, (c) enforcing rigorous post-market surveillance, and (d) ensuring ethical AI use, particularly around patient data privacy, informed consent, and bias reduction. These four tasks are essential to unlocking the potential of AI-SaMD technologies while safeguarding public health and fostering trust. However, implementing such tasks is not easy, because regulatory authorities face challenges such as staying ahead of technological advancements, defining risk-based classifications for AI-SaMD, and balancing innovation with safety. Table 8 also shows that current AI-SaMD researchers recommend collaboration between diverse stakeholders (e.g., clinicians, IT specialists, regulators, and legal experts). Such interdisciplinary collaboration is expected to enhance publication dissemination (recommendation 4 in Table 8) by improving research quality, broadening co-authorship networks, and enabling access to shared data, leading to high-impact studies. Collaboration with industry and regulators aligns research with real-world needs, increasing its relevance and visibility. Collaborative researchers also present findings at different conferences, expanding their reach and citation potential. Large-scale, multi-institutional studies are likely to attract wider readership and improve dissemination across academic, industry, and policy platforms.

5. Limitations and Future Work

Our systematic literature review (SLR) has some limitations. The study only considered papers written in English, excluding research published in other languages. In addition, only peer-reviewed publications were included, while preprints from open-access repositories such as arXiv and PsyArXiv were omitted. Future studies could broaden the scope by incorporating non-English papers, preprint sources, and governmental websites. In addition to the language and database restrictions, it is important to acknowledge a limitation within our search strategy, specifically, the omission of “machine learning” and “deep learning” as keywords. Consequently, some relevant studies may not have been retrieved, particularly those that discuss AI-based methodologies without explicitly mentioning AI. This could have led to the exclusion of certain relevant publications, potentially affecting the comprehensiveness of our review. Future studies may consider refining the search string to capture a broader range of AI-related research. All materials as well as templates used in our review can be provided to other researchers interested in repeating it, but we should note that the changes that occur in digital libraries from time to time might mean that searches are not completely repeatable.

As highlighted in Figure 7, there is a critical need to evaluate the quality of AI-SaMD through practical research methods, such as experimentation, real-world case studies, and simulations; these types of research are under-represented compared to non-practical methods like reviews and theoretical analyses. Increasing the emphasis on practical investigations, as recommended by some researchers (see #4 in Table 8), could significantly enhance the quality and applicability of AI-SaMD publications. A final open point for further research lies in the descriptive nature of the authors’ analyses. Incorporating statistical inference or modeling techniques could enhance the depth of the findings. For instance, developing a predictive model to forecast the annual publication trend would offer a more robust understanding of research output trajectories.
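As one possible starting point for the predictive modeling suggested above, an ordinary least-squares line can be fitted to yearly publication counts and extrapolated by one year. The sketch below is only an illustration of the technique: the series is synthetic and deliberately linear, it is not the data behind Figure 4, and the function names are hypothetical. A real analysis would also need to account for the incomplete final year.

```python
from typing import Sequence, Tuple


def fit_linear_trend(years: Sequence[float],
                     counts: Sequence[float]) -> Tuple[float, float]:
    """Fit counts = slope * year + intercept by ordinary least squares."""
    n = len(years)
    if n < 2 or n != len(counts):
        raise ValueError("need at least two points and equal-length inputs")
    mean_x = sum(years) / n
    mean_y = sum(counts) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, counts))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(year: float, slope: float, intercept: float) -> float:
    """Extrapolate the fitted line to the given year."""
    return slope * year + intercept


# Synthetic placeholder series, NOT the publication counts of this review.
synthetic_years = [2019, 2020, 2021, 2022, 2023]
synthetic_counts = [2, 4, 6, 8, 10]

slope, intercept = fit_linear_trend(synthetic_years, synthetic_counts)
next_year_estimate = forecast(2024, slope, intercept)
print(f"slope={slope:.2f}, forecast for 2024={next_year_estimate:.1f}")
```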

6. Conclusions

This study provides a comprehensive summary of state-of-the-art AI-SaMDs based on several attributes. A systematic literature review was conducted, analyzing 62 studies published between 2015 and 2024. Key findings include the following: (1) journals contributed more significantly to this field of research than other publication venues (e.g., conferences, workshops, and symposiums), with the Korean Journal of Radiology emerging as the most common venue; (2) researchers from the United States, the United Kingdom, India, Korea, and Australia were the most active in this area; (3) the predominant research strategy was non-practical, with over 50% of studies focusing on specialized clinical settings, and radiology, clinical administration, trials, ophthalmology, and oncology were the most extensively studied domains; (4) the top 10 challenges of AI-SaMD identified were regulatory approval, lack of transparency in “black-box” AI models, algorithmic bias, performance and security concerns, research challenges, liability and accountability issues, integration and interoperability difficulties, the continuous learning and evolution of AI systems, human-centric factors, and software-related issues; and (5) key recommendations from researchers to address these challenges included fostering interdisciplinary partnerships, providing education and training for clinicians, integrating AI-SaMD with other healthcare systems (e.g., EHRs), and improving the quality of AI-SaMD publications through the enhanced validation of datasets. These findings aim to assist health organizations, AI specialists, compliance officers, and other stakeholders in better understanding the current state and applicability of AI-SaMDs. Additionally, this study offers a solid foundation for researchers and practitioners to address identified challenges in greater detail when developing new AI models. 
Finally, this article outlines its limitations and suggests promising directions for future research in this rapidly evolving field.

Author Contributions

Conceptualization, methodology, software, validation, formal analysis, S.A.E.; investigation, resources, data curation, writing—original draft preparation, A.A. and M.A.; writing—review and editing, visualization, M.A., M.S. and A.B.M.; supervision, project administration, funding acquisition, S.A.E. and A.B.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Northern Border University, grant number NBU-CRP-2025-1564.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors extend their appreciation to Northern Border University, Saudi Arabia, for supporting this work through project number (NBU-CRP-2025-1564).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Data Extraction Form

| Section 1: Paper information | |
| --- | --- |
| Author | Author(s) of the study |
| Name | Title of the study |
| Type | Publication type: journal, conference, workshop, or symposium |
| Country | The place of the study |
| Year | The year of the study |

| Section 2: Quality assessment | |
| --- | --- |
| Is the rationale for the study clear in the introduction? | Yes/No |
| Is the design/methodology of the study appropriate? | Yes/No |
| Are the findings and results of the study clearly stated? | Yes/No |
| Are the findings of the study evaluated properly? | Yes/No |

| Section 3: Data extraction | |
| --- | --- |
| Methodology | It can be either practical or non-practical. If the study relies on observation, it is practical; otherwise, it is non-practical. Experiments, simulations, and real-world case studies are examples of practical studies, while reviews, theoretical analyses, surveys, and questionnaires are examples of non-practical studies. |
| Objective | Objectives/aims of the study. |
| Challenges in implementation of AI-SaMD | List the difficulties that researchers and clinicians often face when using AI-SaMD. |
| Quality attribute | The AI-SaMD quality attributes that were supposed to be measured and validated (e.g., safety). |
| Measure | The metrics and validation approaches used to measure the considered quality attributes. |
| Context | The specific types of clinics and diseases targeted by the study. |
| Data analysis | Whether quantitative or qualitative. |
| Results | The subjective results of the study. |
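For readers who wish to apply the form programmatically, the fields above could be captured in a small record type. The following is a sketch under stated assumptions, not the tooling used in this review: the class names, field names, keyword sets, and the `yes_count` helper are all hypothetical, and the example record contains placeholder values only.

```python
from dataclasses import dataclass, field
from typing import List

# Study kinds taken from the examples listed in the form's Methodology row.
PRACTICAL_KINDS = {"experiment", "simulation", "case study"}
NON_PRACTICAL_KINDS = {"review", "theoretical analysis", "survey", "questionnaire"}


def classify_methodology(study_kind: str) -> str:
    """Map a study kind to 'practical' or 'non-practical' per the form's examples."""
    kind = study_kind.strip().lower()
    if kind in PRACTICAL_KINDS:
        return "practical"
    if kind in NON_PRACTICAL_KINDS:
        return "non-practical"
    raise ValueError(f"unrecognized study kind: {study_kind!r}")


@dataclass
class QualityAssessment:
    """Section 2: one Yes/No answer per quality question."""
    rationale_clear: bool
    design_appropriate: bool
    findings_clearly_stated: bool
    findings_properly_evaluated: bool

    def yes_count(self) -> int:
        # Illustrative helper only; the form itself does not define a score.
        return sum([self.rationale_clear, self.design_appropriate,
                    self.findings_clearly_stated, self.findings_properly_evaluated])


@dataclass
class ExtractionRecord:
    """Sections 1 and 3: paper information and extracted data."""
    author: str
    name: str            # title of the study
    type: str            # journal, conference, workshop, or symposium
    country: str
    year: int
    quality: QualityAssessment
    methodology: str     # "practical" or "non-practical"
    objective: str
    challenges: List[str] = field(default_factory=list)
    quality_attribute: str = ""
    measure: str = ""
    context: str = ""
    data_analysis: str = ""   # "quantitative" or "qualitative"
    results: str = ""


# Placeholder record for illustration; it does not describe a real study.
example = ExtractionRecord(
    author="A. Author",
    name="Placeholder title",
    type="journal",
    country="Placeholder country",
    year=2023,
    quality=QualityAssessment(True, True, True, False),
    methodology=classify_methodology("Simulation"),
    objective="Placeholder objective",
    challenges=["regulatory approval"],
)
print(example.methodology, example.quality.yes_count())
```

Raising an error on unrecognized study kinds, rather than defaulting to one class, keeps ambiguous cases visible for manual review.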


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).