An Image Analysis for the Development of a Skin Change-Based AI Screening Model as an Alternative to the Bite Pressure Test

Abstract

1. Introduction

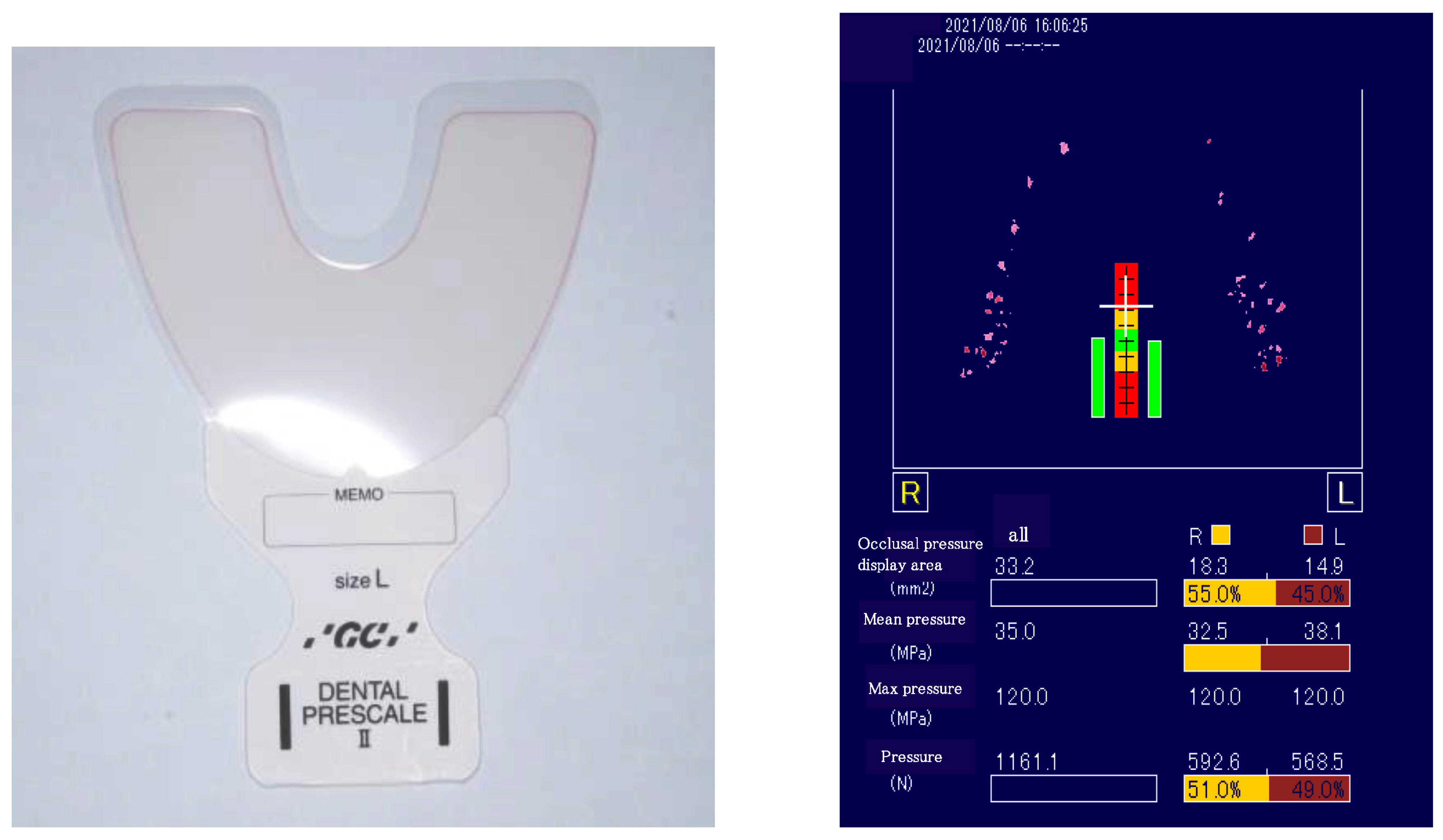

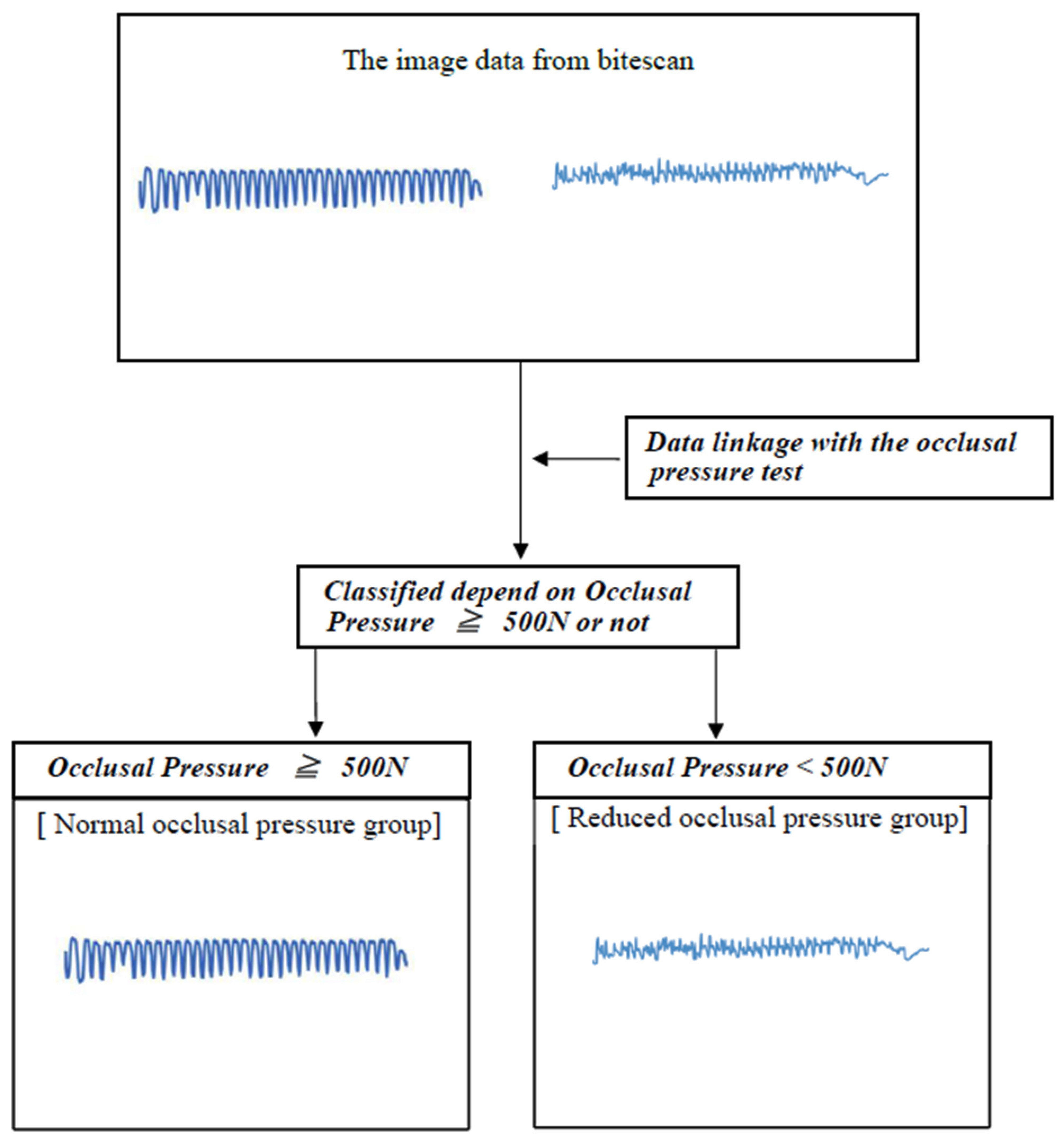

2. Materials and Methods

2.1. Subjects and Recruitment Method

2.2. Investigators and Ethical Considerations

2.3. Test Items

2.4. Calibration

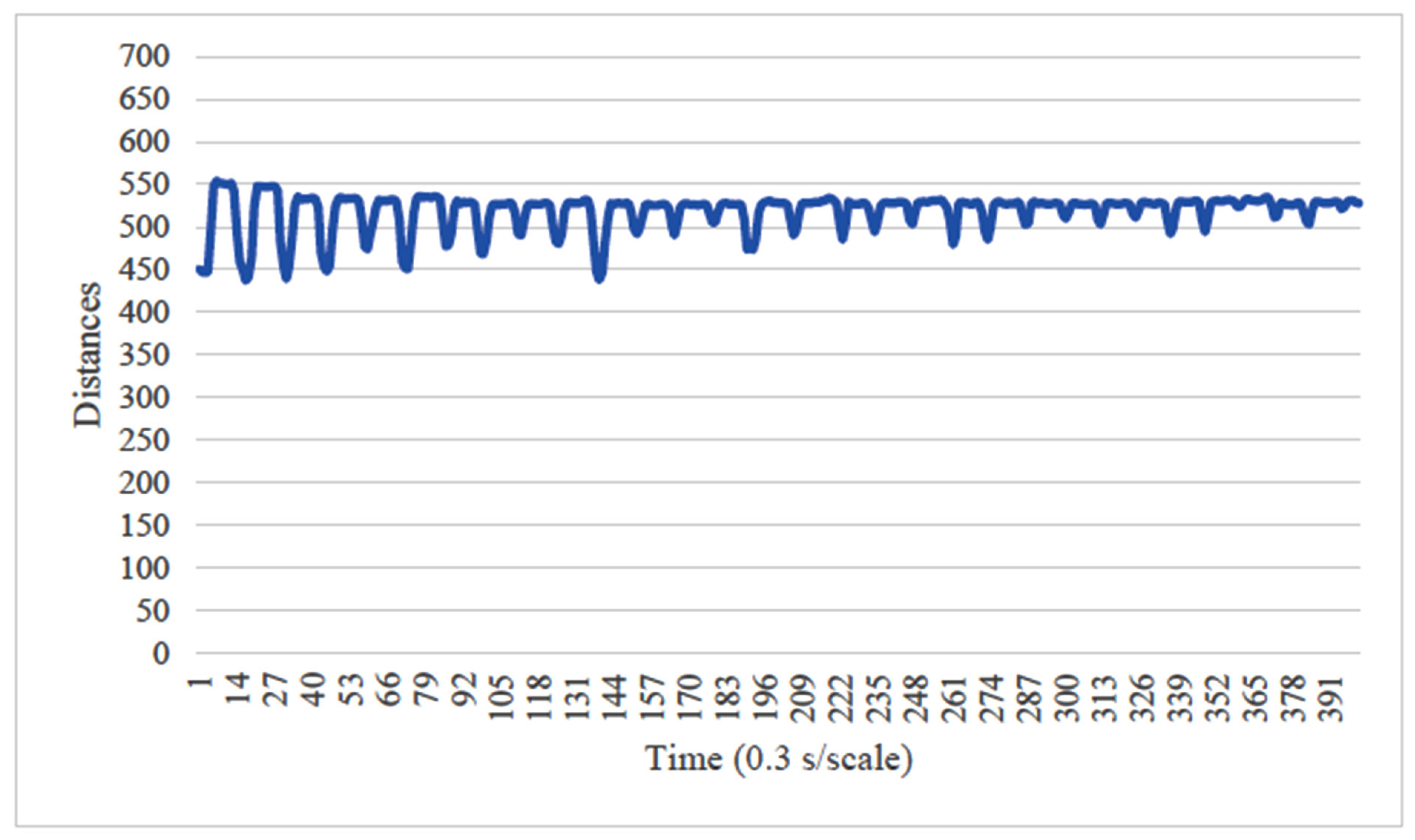

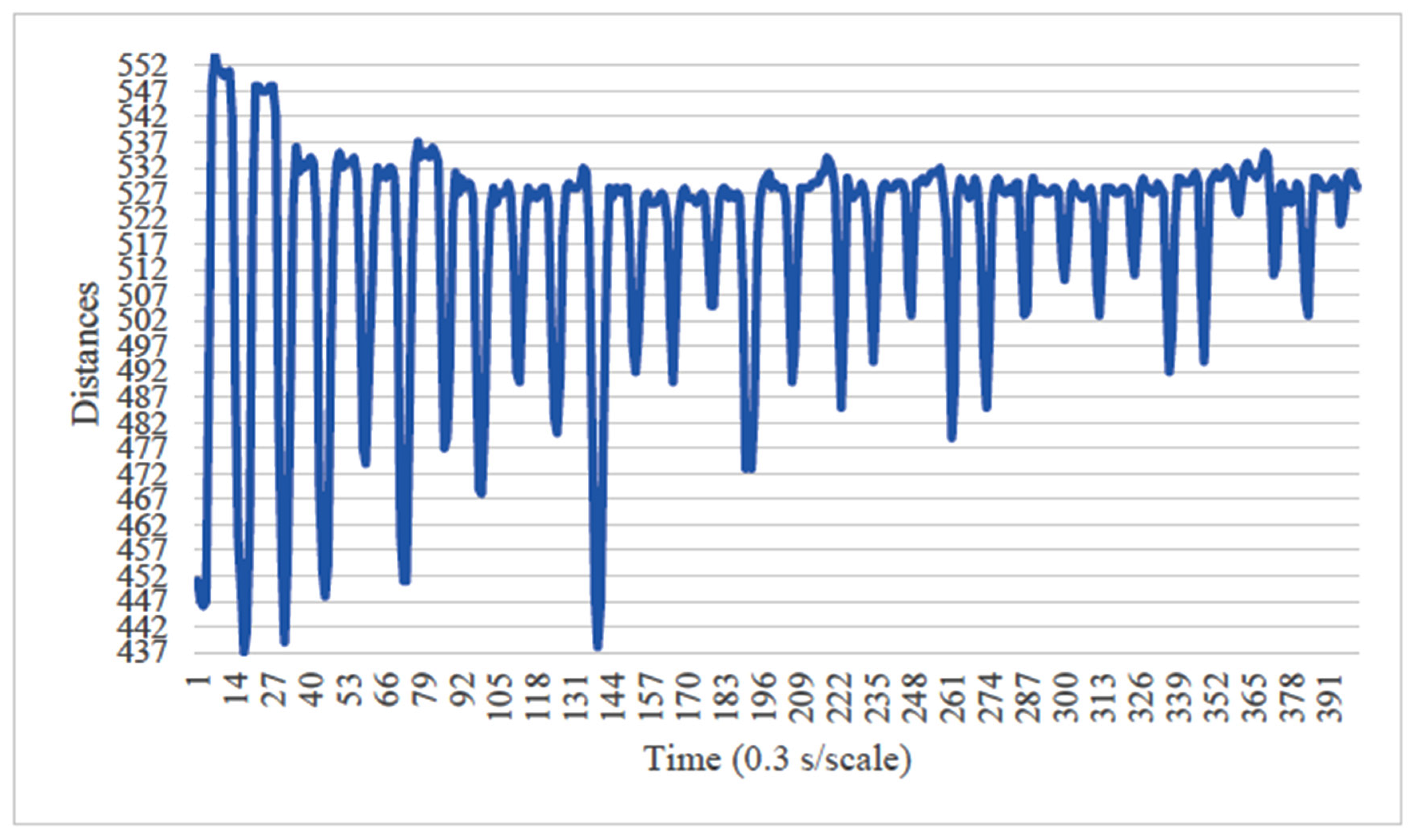

3. Image Analysis

Setting Image Processing Conditions

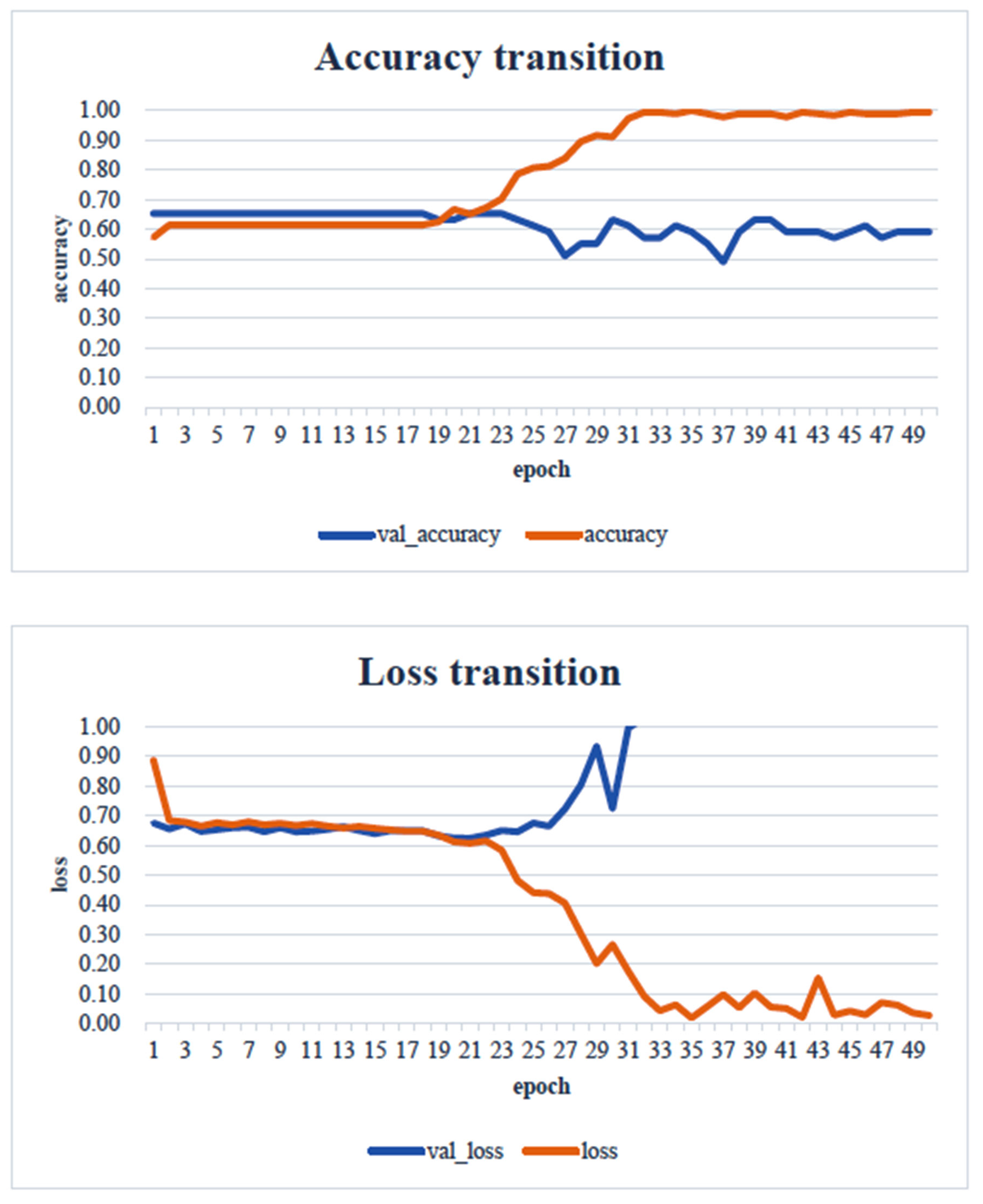

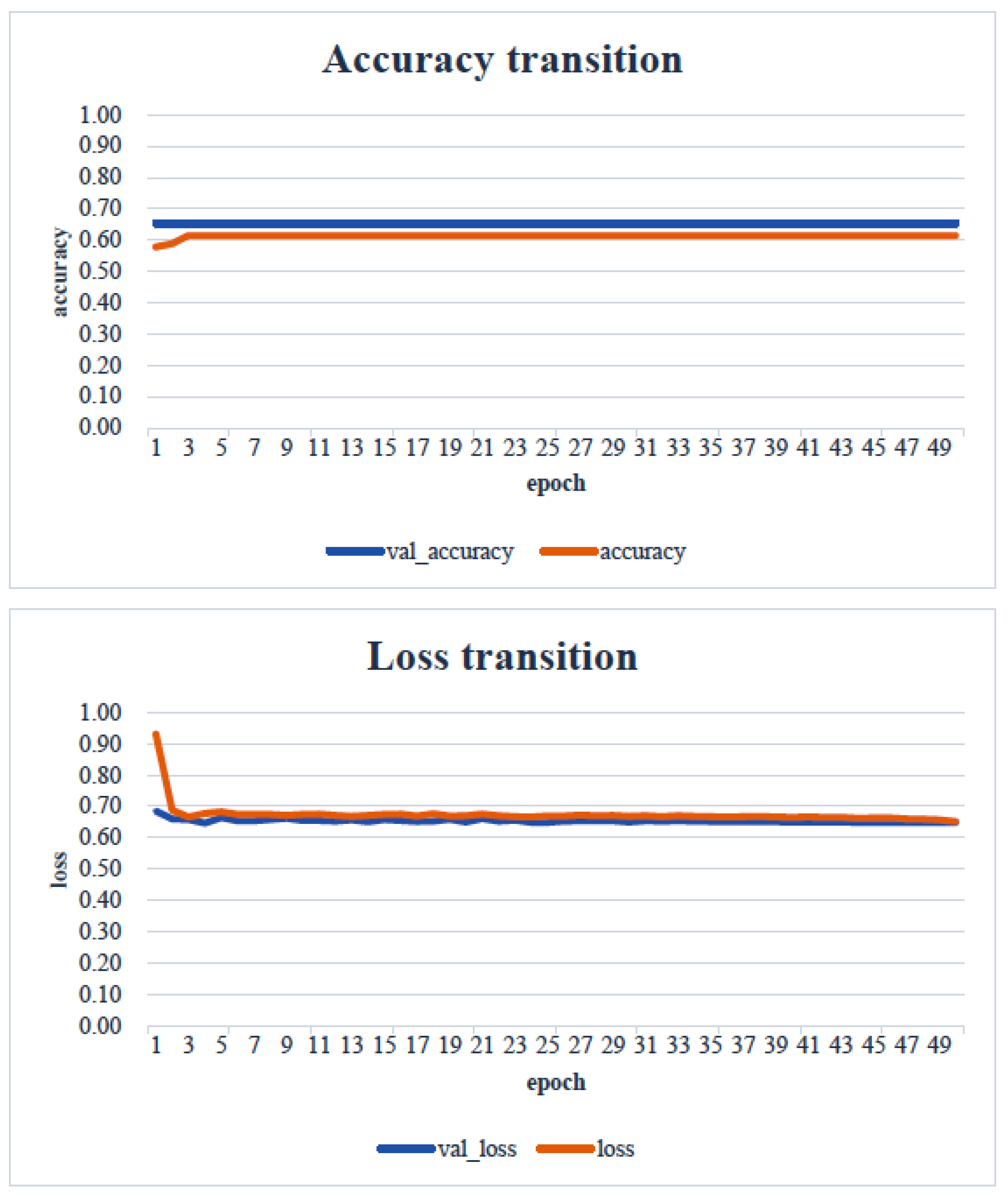

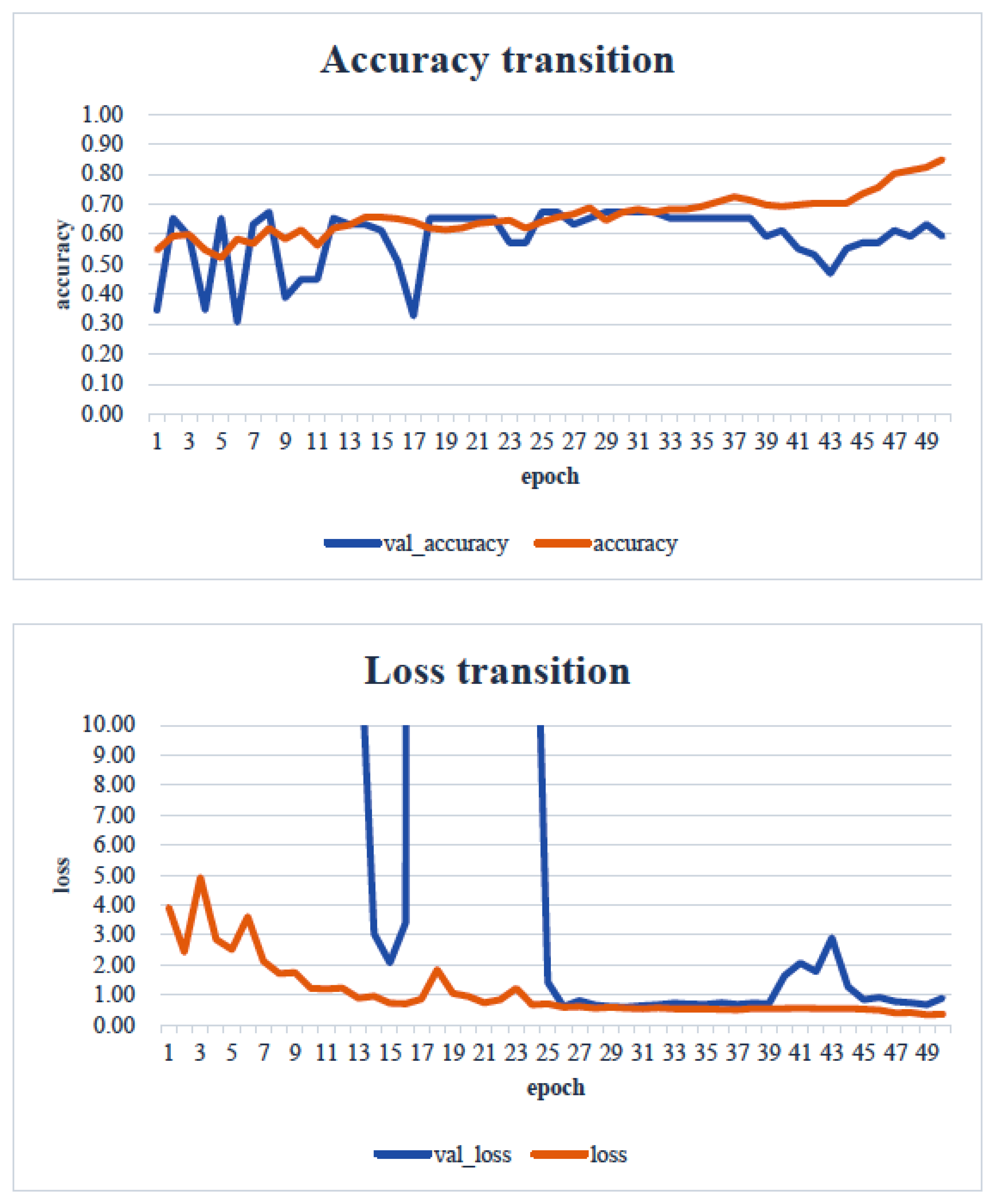

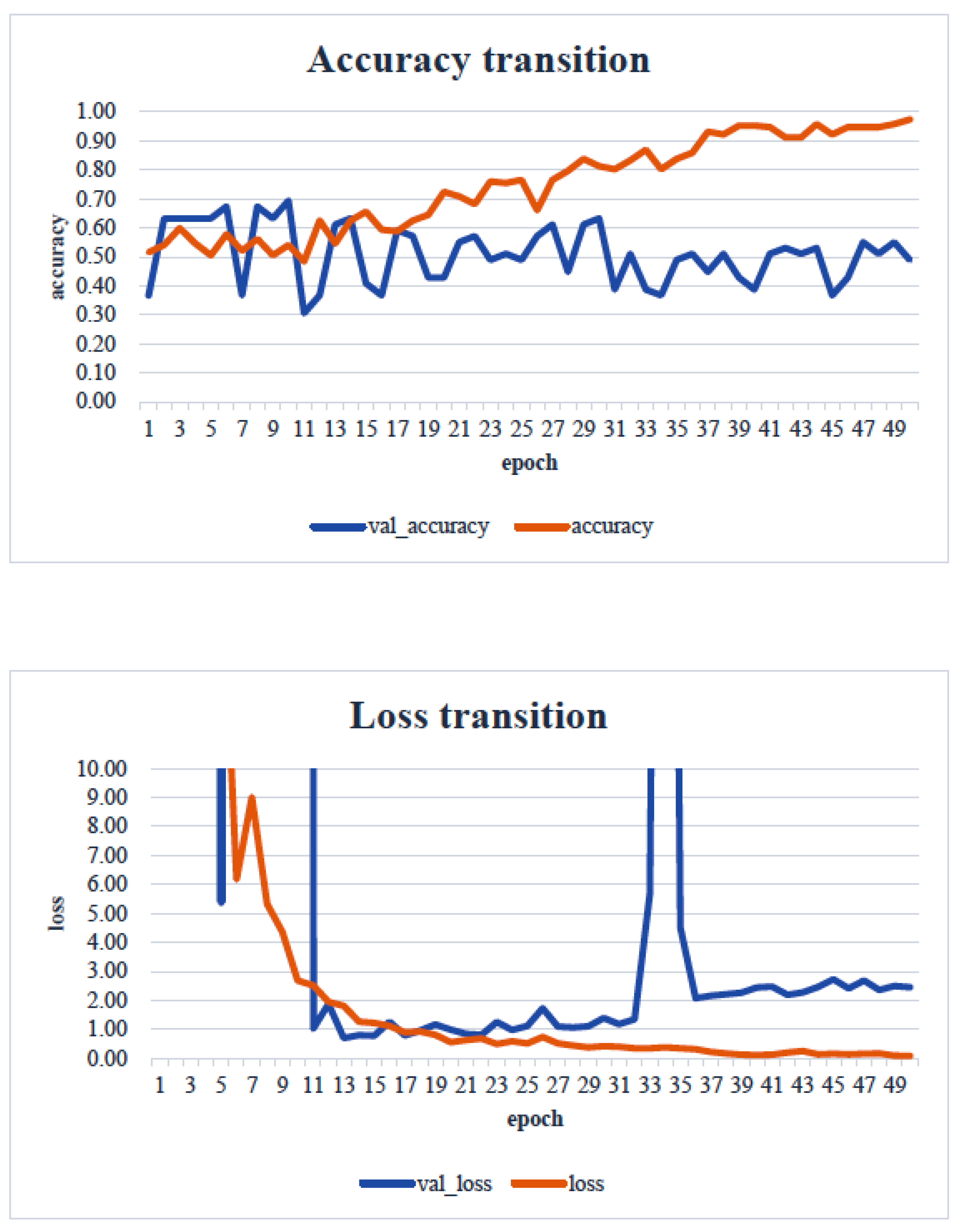

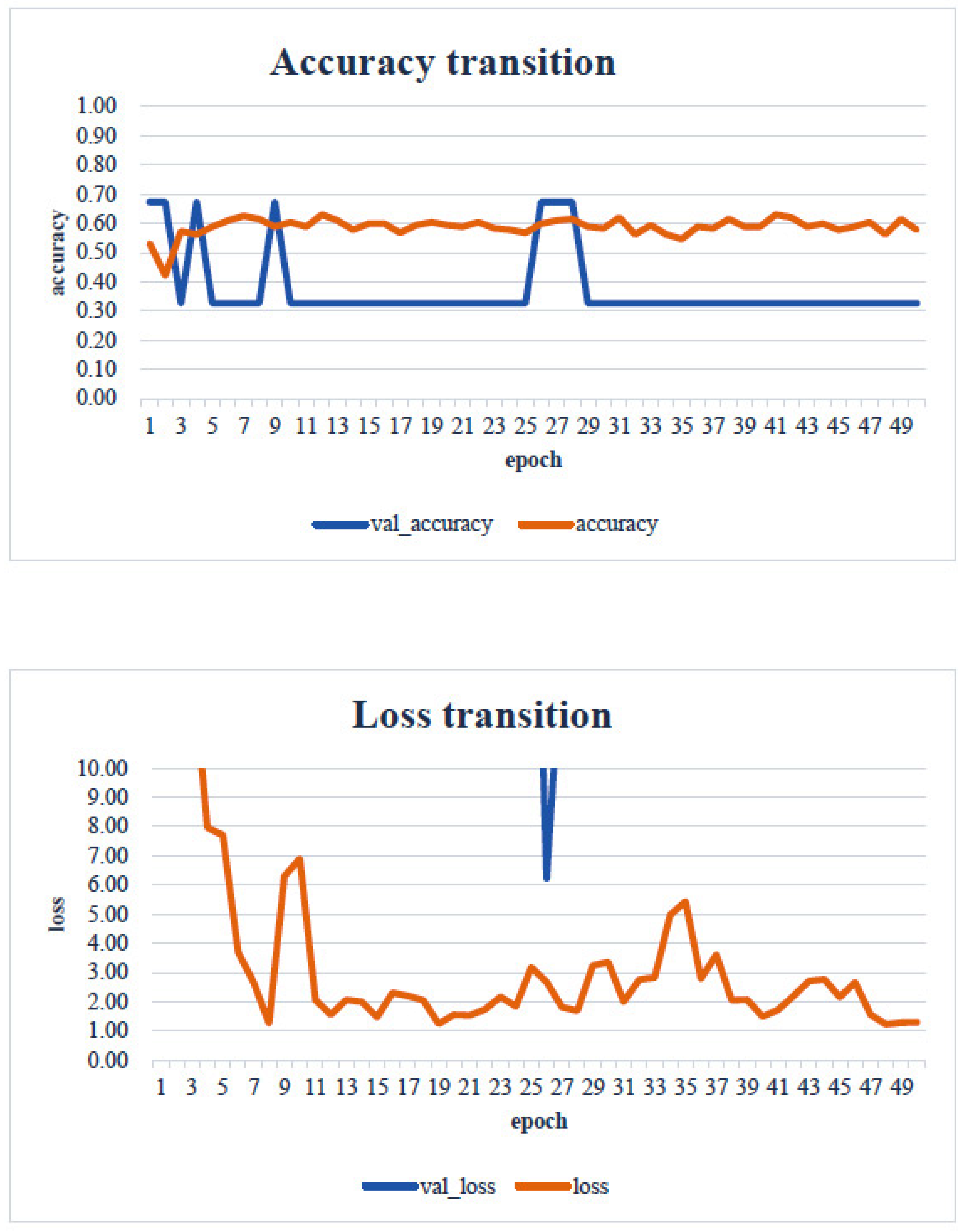

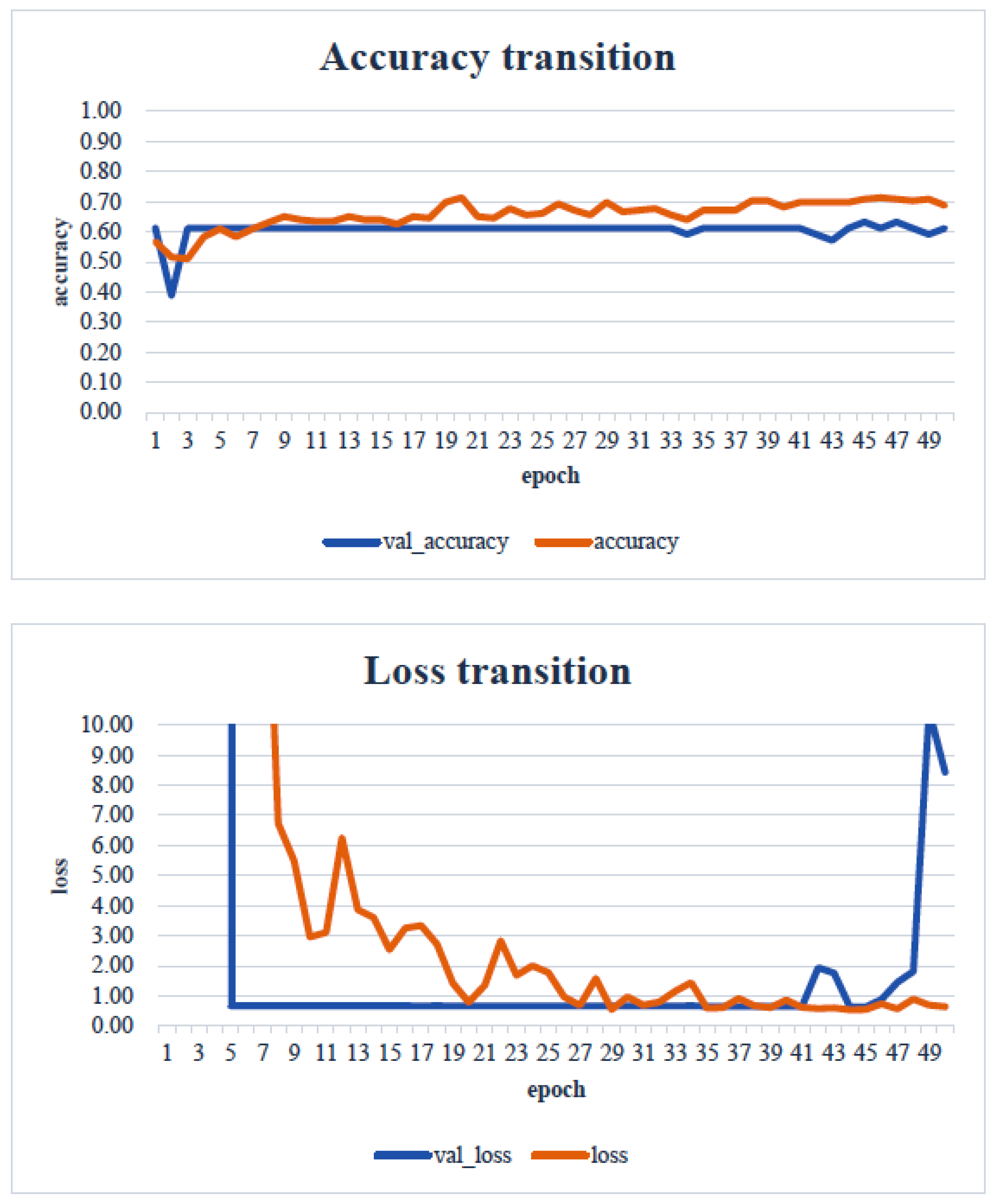

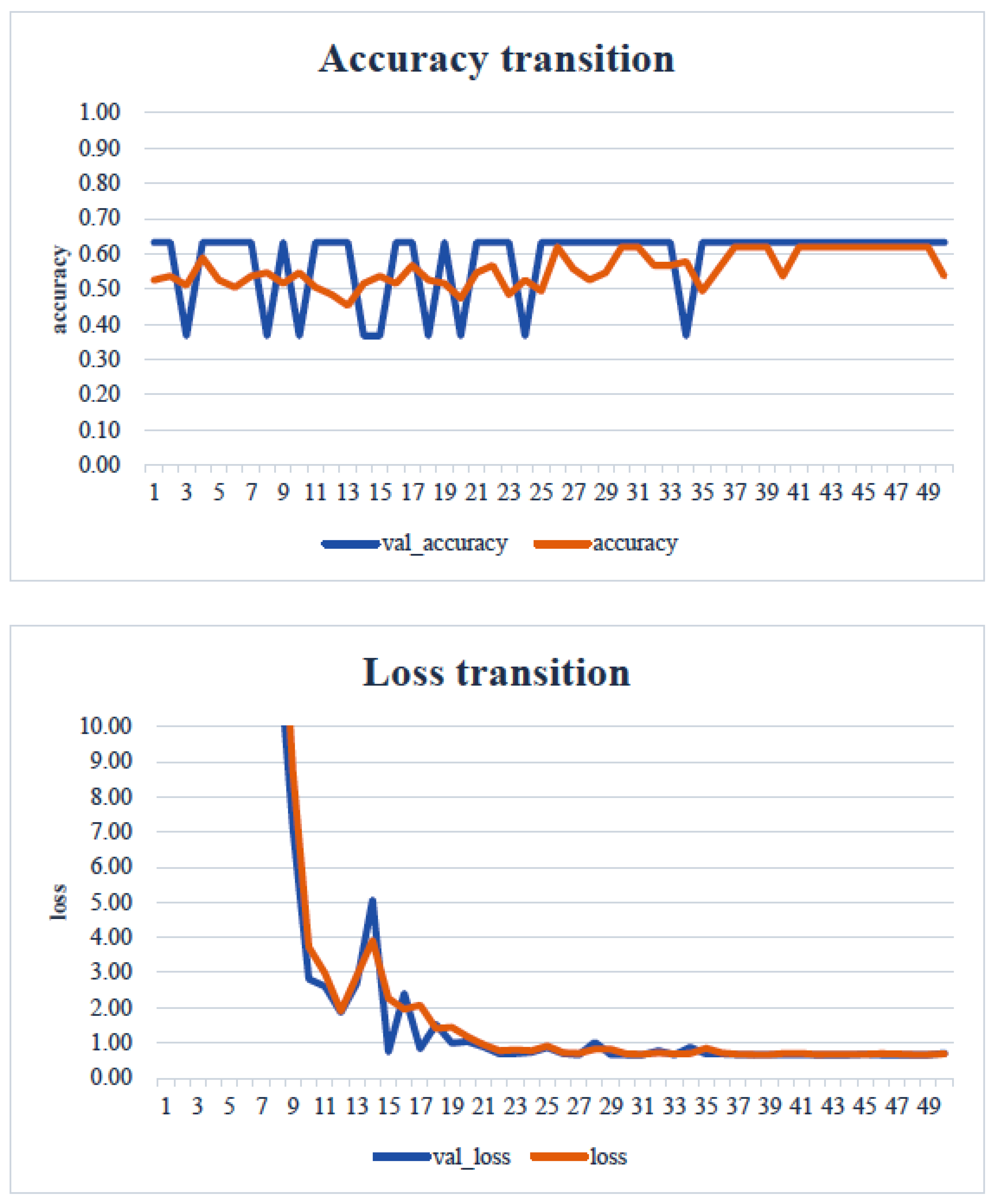

4. Results

5. Consideration

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Takeda, Y.; Yamaguchi, K.; Takahashi, N.; Nakanishi, Y.; Ochi, M. An Image Analysis for the Development of a Skin Change-Based AI Screening Model as an Alternative to the Bite Pressure Test. Healthcare 2025, 13, 936. https://doi.org/10.3390/healthcare13080936
