Pre-Trained Language Models for Mental Health: An Empirical Study on Arabic Q&A Classification

Abstract

1. Introduction

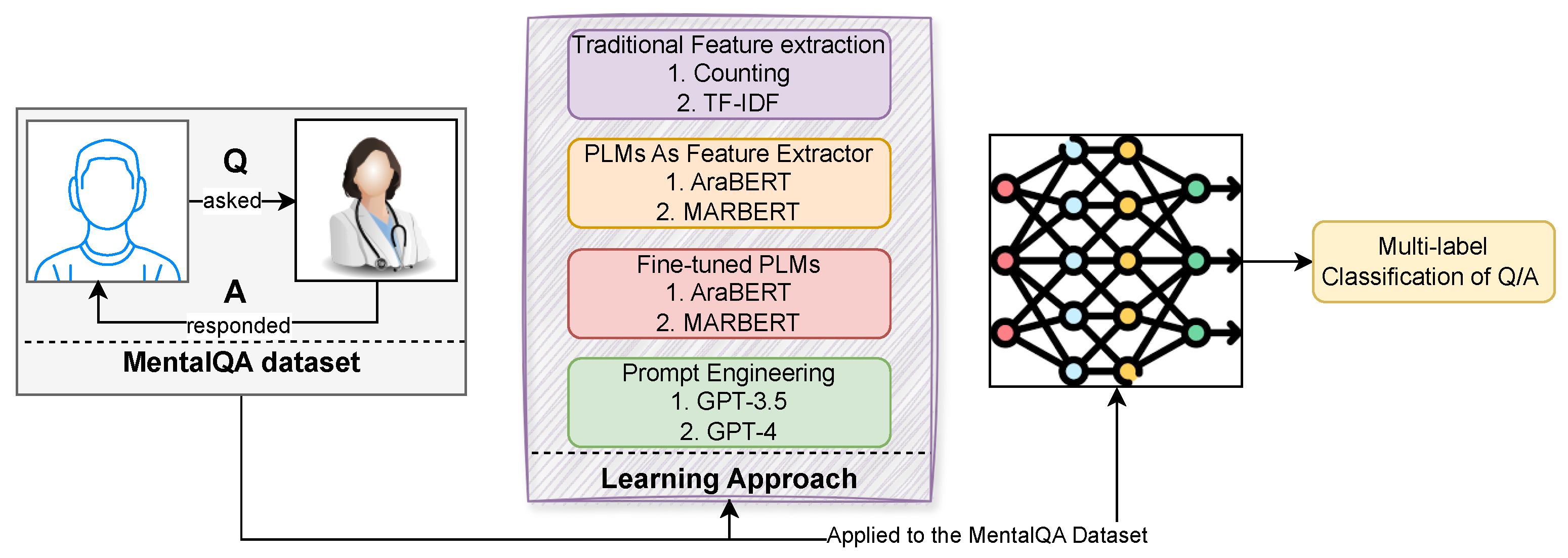

Research Objectives and Contributions

- Conducting the first set of experiments on MentalQA, a novel Arabic mental health dataset of question-answering interactions.

- Exploring the effectiveness of Classical Machine Learning models and PLMs on the MentalQA dataset.

- Demonstrating the current capabilities and limitations of classical models, Arabic PLMs, and prompted GPT models for mental health care, and identifying ways to further improve the results of PLMs on the MentalQA dataset. Knowing where these models excel and where they fall short paves the way for further development and refinement.

2. Related Work

2.1. Classical Machine Learning

2.2. Pre-Trained Language Models

2.3. Resources for Mental Health Support

2.4. How Does This Research Differ from Existing Work?

3. Experiments

3.1. Dataset Description

3.2. Task Setting

3.3. Experimental Design

- Task [task prompt]: Your task is to analyze the question in the TEXT below from a patient and classify it with one or multiple of the seven labels in the following list: “Diagnosis”, “Treatment”, etc.

- Text [input text]: Be persistent with your doctor, and inform them of your concerns. Embrace the spiritual aspects and try to nurture them, as they will assist you in transcendental thinking and relaxation.

- Response [output]: Guidance, emotional support.
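
The task / text / response layout above can be sketched in code. This is a minimal illustration, not the authors' implementation: the helper names `build_prompt` and `parse_response` are hypothetical, the label strings use the short forms that appear in the example response, and no model API is called.

```python
# Hypothetical helpers illustrating the task / text / response prompt layout.
# Label strings use the short forms from the example response (an assumption).
A_LABELS = ["Information", "Guidance", "Emotional Support"]


def build_prompt(text, labels, examples=()):
    """Assemble a prompt; `examples` holds (text, labels) pairs for few-shot use."""
    label_list = ", ".join(f'"{name}"' for name in labels)
    parts = [
        "Task: Your task is to analyze the TEXT below and classify it "
        f"with one or multiple of the labels in the following list: {label_list}."
    ]
    # Few-shot demonstrations come before the instance to classify.
    for ex_text, ex_labels in examples:
        parts.append(f"Text: {ex_text}")
        parts.append(f"Response: {', '.join(ex_labels)}")
    parts.append(f"Text: {text}")
    parts.append("Response:")
    return "\n".join(parts)


def parse_response(response, labels):
    """Map a free-text reply onto known labels (case-insensitive substring match)."""
    reply = response.lower()
    return [name for name in labels if name.lower() in reply]
```

With an empty `examples` argument the prompt is zero-shot; passing three demonstration pairs would give a three-example few-shot prompt. Substring matching is a deliberately simple parsing choice and would need hardening for replies that negate or paraphrase a label.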

3.4. Application of Learning Approaches in MentalQA

3.5. Training Objective

3.6. Implementation Details

3.7. Experimental Results

- F1-Micro pools the true positives, false positives, and false negatives of all classes before computing a single F1 score, so every individual label decision counts equally and frequent classes carry more influence than rare ones.

- F1-Weighted accounts for label imbalance by weighting the F1 score of each class by its support (i.e., number of true instances).

- Jaccard Score (also known as Intersection over Union) is particularly suitable for multi-label settings, as it measures the similarity between the predicted and true label sets.
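
The three metrics can be made concrete with a small dependency-free sketch over multi-hot label vectors. The function names are illustrative, the toy data is invented for the example, and the per-sample averaging of the Jaccard score (with two empty label sets counted as a perfect match) is an assumption, since the text does not state which averaging was used.

```python
def _counts(true_col, pred_col):
    """Return (tp, fp, fn) for two aligned 0/1 sequences."""
    tp = sum(t * p for t, p in zip(true_col, pred_col))
    fp = sum((1 - t) * p for t, p in zip(true_col, pred_col))
    fn = sum(t * (1 - p) for t, p in zip(true_col, pred_col))
    return tp, fp, fn


def _f1(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def f1_micro(y_true, y_pred):
    """Pool counts over every (instance, class) cell, then compute one F1."""
    flat_true = [t for row in y_true for t in row]
    flat_pred = [p for row in y_pred for p in row]
    return _f1(*_counts(flat_true, flat_pred))


def f1_weighted(y_true, y_pred):
    """Average per-class F1, weighting each class by its support."""
    total, weighted_sum = 0, 0.0
    for true_col, pred_col in zip(zip(*y_true), zip(*y_pred)):
        support = sum(true_col)
        weighted_sum += support * _f1(*_counts(true_col, pred_col))
        total += support
    return weighted_sum / total if total else 0.0


def jaccard_samples(y_true, y_pred):
    """Mean of |intersection| / |union| per instance (assumed averaging)."""
    scores = []
    for t_row, p_row in zip(y_true, y_pred):
        inter = sum(t * p for t, p in zip(t_row, p_row))
        union = sum(1 for t, p in zip(t_row, p_row) if t or p)
        scores.append(inter / union if union else 1.0)
    return sum(scores) / len(scores)


# Toy data: 3 instances, 3 classes.
y_true = [[1, 1, 0], [0, 1, 0], [1, 0, 1]]
y_pred = [[1, 0, 0], [0, 1, 0], [1, 1, 1]]
print(f1_micro(y_true, y_pred), f1_weighted(y_true, y_pred), jaccard_samples(y_true, y_pred))
```

On this toy data both F1 variants equal 0.80 and the sample-averaged Jaccard score is about 0.72. Jaccard is lower because it penalizes each partially matched label set as a whole.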

4. Evaluation of the Results

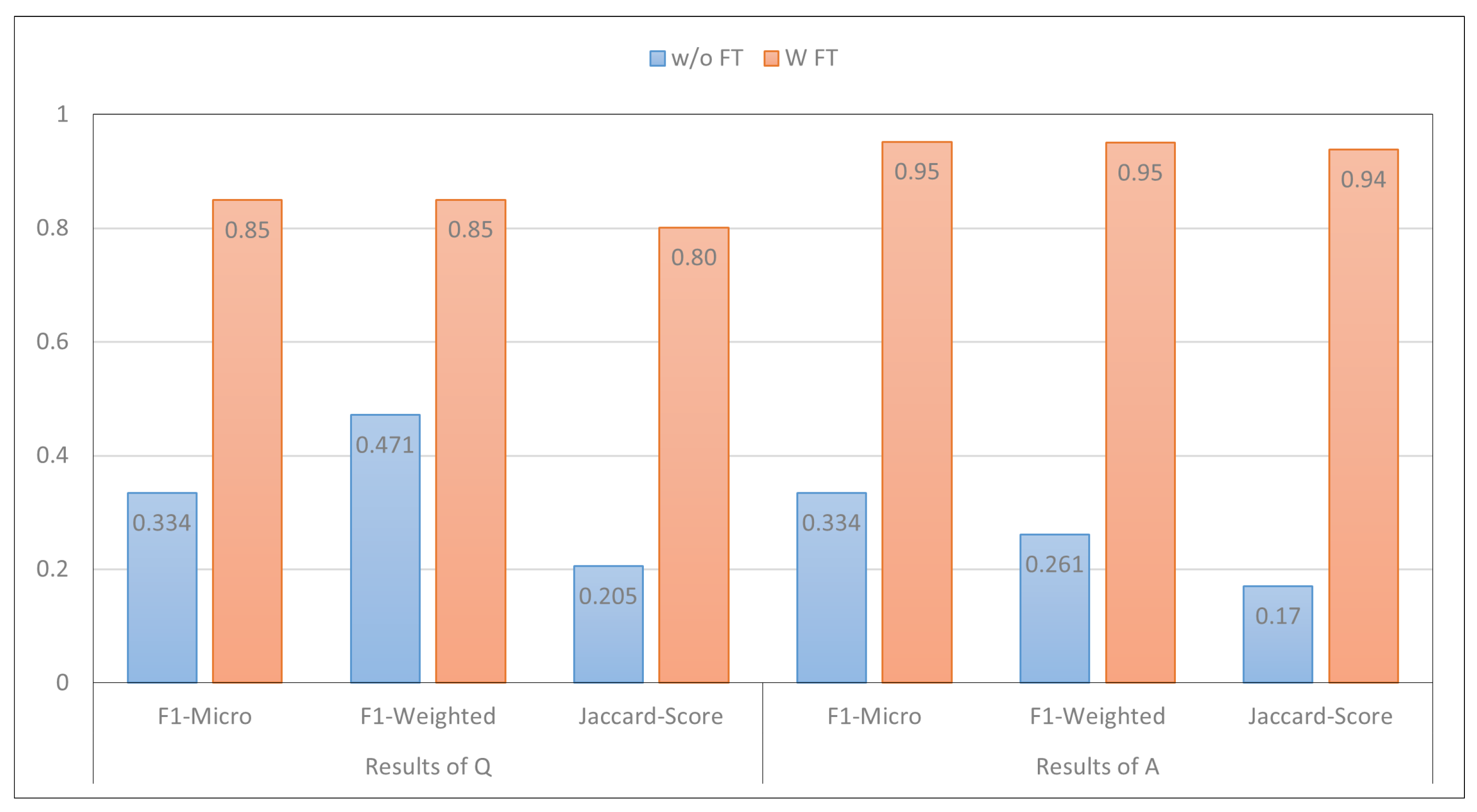

4.1. Effect of Fine-Tuning PLMs

4.2. Effect of Zero-Shot vs. Few-Shot Learning

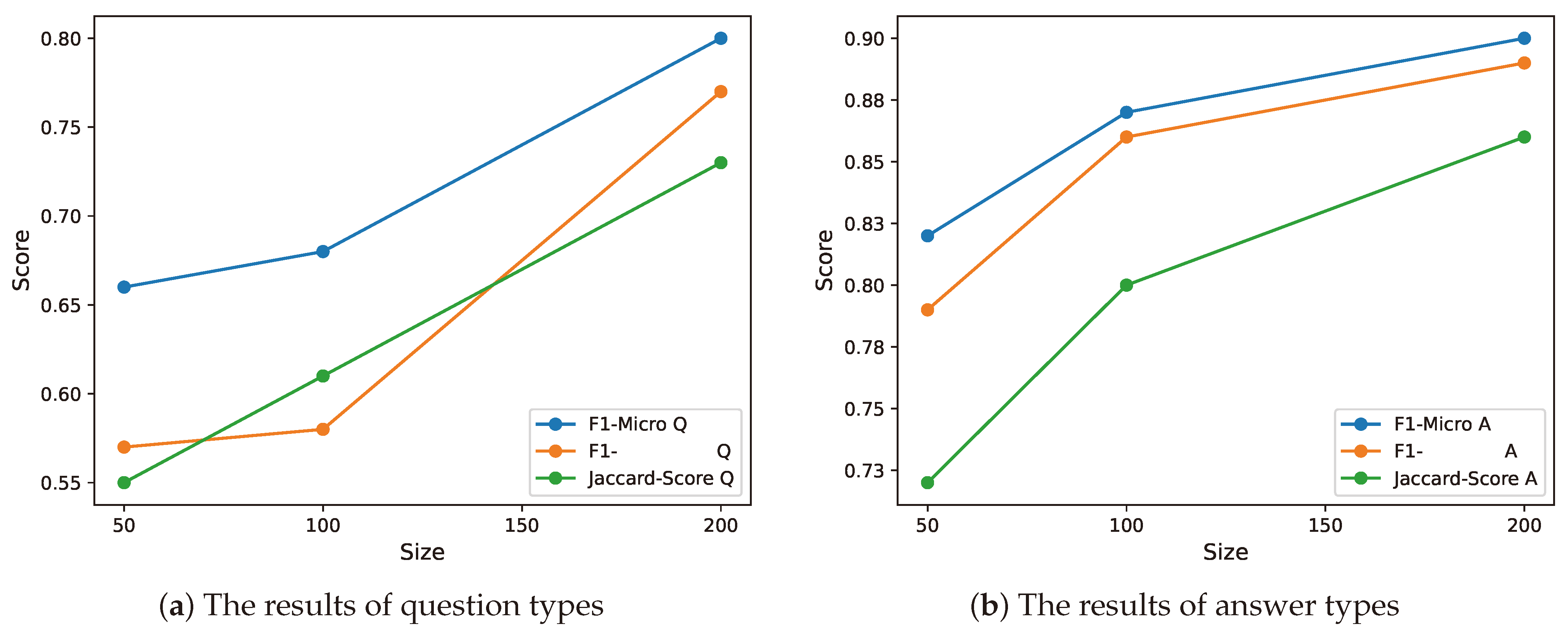

4.3. Effect of Data Size on Performance

4.4. Case Study

5. Discussion

5.1. Implications

5.2. Limitations and Ethical Considerations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| # | Q-Types | # | A-Types |
|---|---|---|---|
| 1 | Diagnosis | 1 | Information |
| 2 | Treatment | 2 | Direct Guidance |
| 3 | Anatomy | 3 | Emotional Support |
| 4 | Epidemiology | | |
| 5 | Healthy Lifestyle | | |
| 6 | Health Provider Choice | | |
| 7 | Other | | |
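
Because both tasks are multi-label, each instance's label set is typically turned into a fixed-length 0/1 vector before training or scoring. The sketch below shows one way to do this with the class names from the table above; the function name `to_multi_hot` and the class ordering are illustrative assumptions, not details taken from the paper.

```python
# Class names copied from the table above; the ordering is an assumption.
Q_TYPES = [
    "Diagnosis", "Treatment", "Anatomy", "Epidemiology",
    "Healthy Lifestyle", "Health Provider Choice", "Other",
]
A_TYPES = ["Information", "Direct Guidance", "Emotional Support"]


def to_multi_hot(labels, classes):
    """Encode a collection of label names as a 0/1 vector aligned with `classes`."""
    unknown = set(labels) - set(classes)
    if unknown:
        raise ValueError(f"Unknown labels: {sorted(unknown)}")
    present = set(labels)
    return [1 if name in present else 0 for name in classes]


print(to_multi_hot(["Diagnosis", "Treatment"], Q_TYPES))
print(to_multi_hot(["Direct Guidance", "Emotional Support"], A_TYPES))
```

Rejecting unknown names early keeps typos in annotation files from silently becoming all-zero targets.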

| Info./Task | Q-Types | A-Types |
|---|---|---|
| Train (#) | 300 | 300 |
| Validation (#) | 100 | 100 |
| Test (#) | 100 | 100 |
| Total (#) | 500 | 500 |
| Classes (#) | 7 | 3 |
| Setup | multi-label | multi-label |

| Parameter | Value |
|---|---|
| Dimension | 768 |
| Batch size | 8 |
| Dropout | |
| Early-stop patience | 10 |
| Epochs (#) | 15 |
| Learning rate | |
| Optimizer | Adam |
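
To illustrate how the epoch budget and the early-stop patience in the table interact, here is a minimal sketch of a patience-based stopping rule. It assumes the monitored quantity is a validation score where higher is better and that patience counts consecutive epochs without improvement; neither detail is stated in the table, and the function name is hypothetical.

```python
def run_with_early_stopping(val_scores, max_epochs=15, patience=10):
    """Replay per-epoch validation scores; return (best_epoch, last_epoch_run).

    Epochs are 0-indexed. Training stops once `patience` consecutive epochs
    pass without a new best score, or when `max_epochs` is exhausted.
    """
    best_score = float("-inf")
    best_epoch = -1
    last_epoch = -1
    epochs_without_gain = 0
    for epoch, score in enumerate(val_scores[:max_epochs]):
        last_epoch = epoch
        if score > best_score:
            best_score, best_epoch = score, epoch
            epochs_without_gain = 0
        else:
            epochs_without_gain += 1
            if epochs_without_gain >= patience:
                break
    return best_epoch, last_epoch


# Simulated scores: a peak at epoch 1, then a plateau below it.
scores = [0.70, 0.80] + [0.75] * 13
print(run_with_early_stopping(scores))
```

Under these assumptions, a patience of 10 within a 15-epoch budget can only end training early if the best score is reached in the first five epochs; otherwise the epoch limit is hit first.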

| Model | Q: F1-Micro | Q: F1-Weighted | Q: Jaccard-Score | A: F1-Micro | A: F1-Weighted | A: Jaccard-Score |
|---|---|---|---|---|---|---|
| Baseline | | | | | | |
| Random | 0.33 | 0.26 | 0.21 | 0.50 | 0.45 | 0.34 |
| Most common class | 0.62 | 0.75 | 0.49 | 0.79 | 0.83 | 0.67 |
| SVM | | | | | | |
| SVM (Occurrences) | 0.83 | 0.84 | 0.78 | 0.91 | 0.92 | 0.87 |
| SVM (Count) | 0.83 | 0.84 | 0.78 | 0.93 | 0.93 | 0.90 |
| SVM (TF-IDF) | 0.84 | 0.85 | 0.79 | 0.93 | 0.93 | 0.90 |
| PLMs as Feature Extractor | | | | | | |
| SVM (AraBERT) | 0.80 | 0.81 | 0.74 | 0.92 | 0.92 | 0.89 |
| SVM (CAMelBERT-DA) | 0.81 | 0.81 | 0.79 | 0.92 | 0.93 | 0.91 |
| SVM (MARBERT) | 0.81 | 0.81 | 0.79 | 0.92 | 0.92 | 0.89 |
| Fine-tuning PLMs | | | | | | |
| AraBERT | 0.84 | 0.82 | 0.79 | 0.94 | 0.94 | 0.92 |
| CAMelBERT-DA | 0.85 | 0.83 | 0.80 | 0.94 | 0.93 | 0.92 |
| MARBERT | 0.85 | 0.85 | 0.80 | 0.95 | 0.95 | 0.94 |
| Prompt Engineering | | | | | | |
| GPT-3.5 (Z-Shot) | 0.59 | 0.55 | 0.45 | 0.42 | 0.40 | 0.39 |
| GPT-4 (Z-Shot) | 0.57 | 0.54 | 0.44 | 0.43 | 0.41 | 0.40 |
| GPT-3.5 (F-Shot-3) | 0.66 | 0.61 | 0.53 | 0.66 | 0.68 | 0.57 |
| GPT-4 (F-Shot-3) | 0.66 | 0.60 | 0.52 | 0.66 | 0.68 | 0.56 |

Q = question classification; A = answer classification.

| # | Translated Text | Actual | Prediction |
|---|---|---|---|
| Examples representing Answers | | | |
| 1 | Be persistent with your doctor, and inform them of your concerns. Embrace the spiritual aspects and try to nurture them, as they will assist you in transcendental thinking and relaxation. | Guid, Emo | Guid |
| 2 | Good evening. It is necessary to consult a mental health professional to assess your condition. In the meantime, think positively about yourself and reconcile with yourself, loving yourself. | Guid, Emo | Guid |
| 3 | The sadness will end, and you will live in complete happiness, God willing. I advise you to speak to a specialist in psychological therapy, as there are modern methods and wonderful medications that can help you, God willing, to live happily. Many people go through tough times and overcome them, and you are one of them. Do not suppress your sadness, and consult someone you trust. | Guid, Emo | Guid |
| Examples representing Questions | | | |
| 4 | I went to a psychiatrist two years ago and received treatment there. The symptoms disappeared, and I felt much better, so I stopped the treatment. However, now the sleep disturbances and anxiety have returned. What should I do? Should I go back to the doctor? | diag, treat, prov | treat |
| 5 | I am a 40-year-old man who has never been married before, but now I am considering getting married. However, some people advise me against getting married at this age, saying that I won’t be able to live a happy life after reaching this age. | H.life | diag, treat |
| 6 | The withdrawal symptoms of escitalopram are intense for me when I stopped taking it due to fear of its impact on pregnancy. However, the symptoms were strong, and I visited the doctor feeling unwell. My blood tests and urine tests came out normal, but I experienced a setback after discontinuing the medication two months ago. I am unable to visit the psychiatrist, and I have been using a dose of 10 mg and then 20 mg for approximately two and a half years. I need urgent help. | diag, treat | treat |
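
The error pattern in the six cases above can be tallied mechanically with set differences. The sketch below copies the actual and predicted label sets from the table, using its abbreviations as-is; the variable names are illustrative, and the tally covers only these six examples, not the full test set.

```python
from collections import Counter

# (actual, predicted) label sets for cases 1-6, copied from the table above.
CASES = [
    ({"Guid", "Emo"}, {"Guid"}),
    ({"Guid", "Emo"}, {"Guid"}),
    ({"Guid", "Emo"}, {"Guid"}),
    ({"diag", "treat", "prov"}, {"treat"}),
    ({"H.life"}, {"diag", "treat"}),
    ({"diag", "treat"}, {"treat"}),
]

missed = Counter()    # labels in the gold set that the model did not predict
spurious = Counter()  # labels the model predicted that are not in the gold set
for actual, predicted in CASES:
    missed.update(actual - predicted)
    spurious.update(predicted - actual)

print("missed:", dict(missed))
print("spurious:", dict(spurious))
```

In these examples the dominant error is under-prediction: emotional support is missed in all three answer cases, whereas spurious labels appear only in case 5.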

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alhuzali, H.; Alasmari, A. Pre-Trained Language Models for Mental Health: An Empirical Study on Arabic Q&A Classification. Healthcare 2025, 13, 985. https://doi.org/10.3390/healthcare13090985
