Multitask Deep Learning-Based Pipeline for Gas Leakage Detection via E-Nose and Thermal Imaging Multimodal Fusion

Abstract

1. Introduction

2. Previous Works

3. Materials and Methods

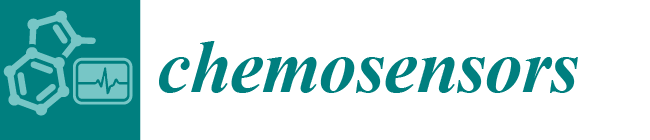

3.1. Fusion Methods of Multimodal Data

3.2. Deep Learning Models

3.3. Multimodal Dataset for Gas Leakage Detection

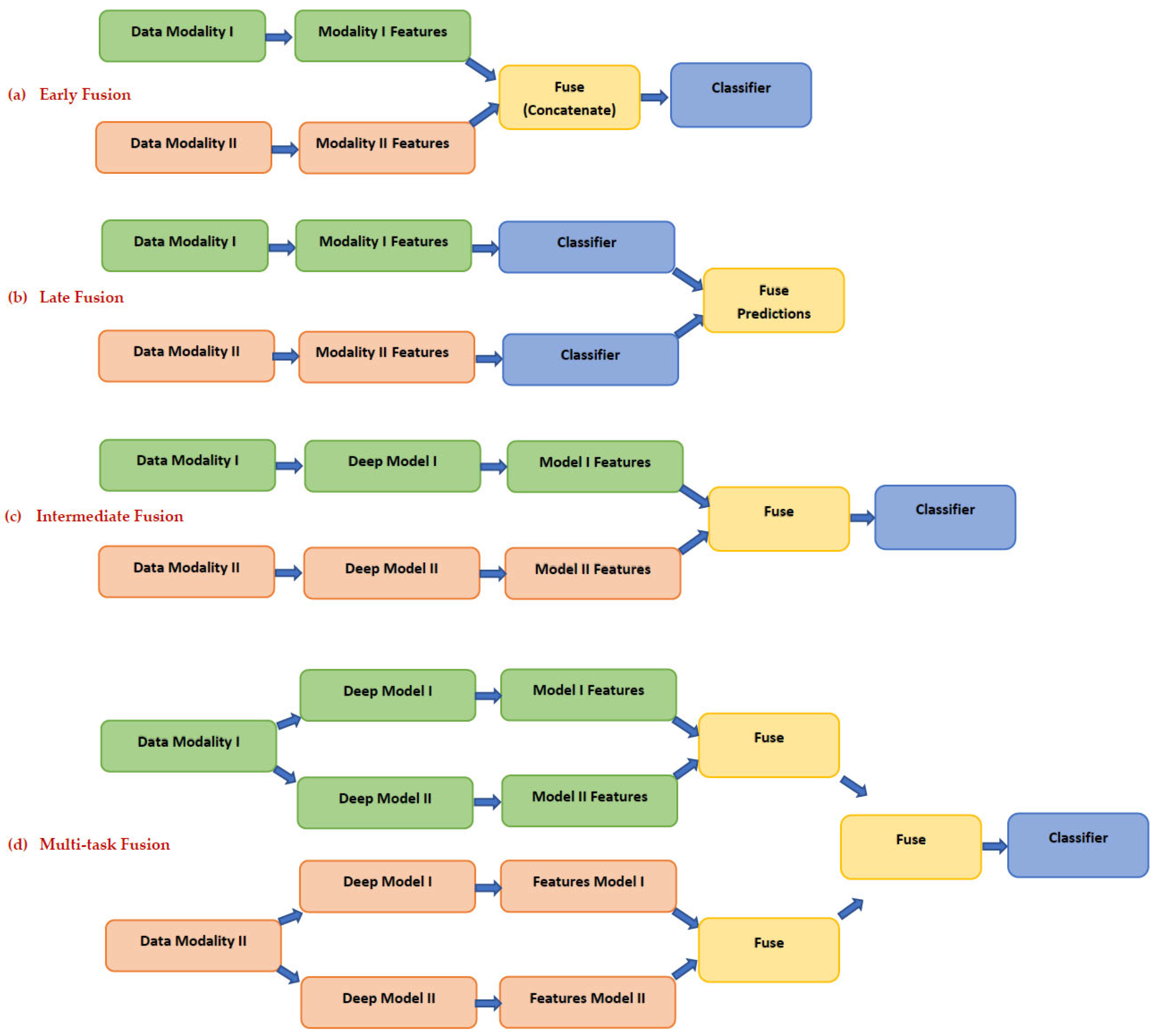

3.4. Proposed Pipeline for Gas Leakage Detection

3.4.1. Preprocessing of Multimodal Data

3.4.2. CNN Models Re-Training and Feature Extraction

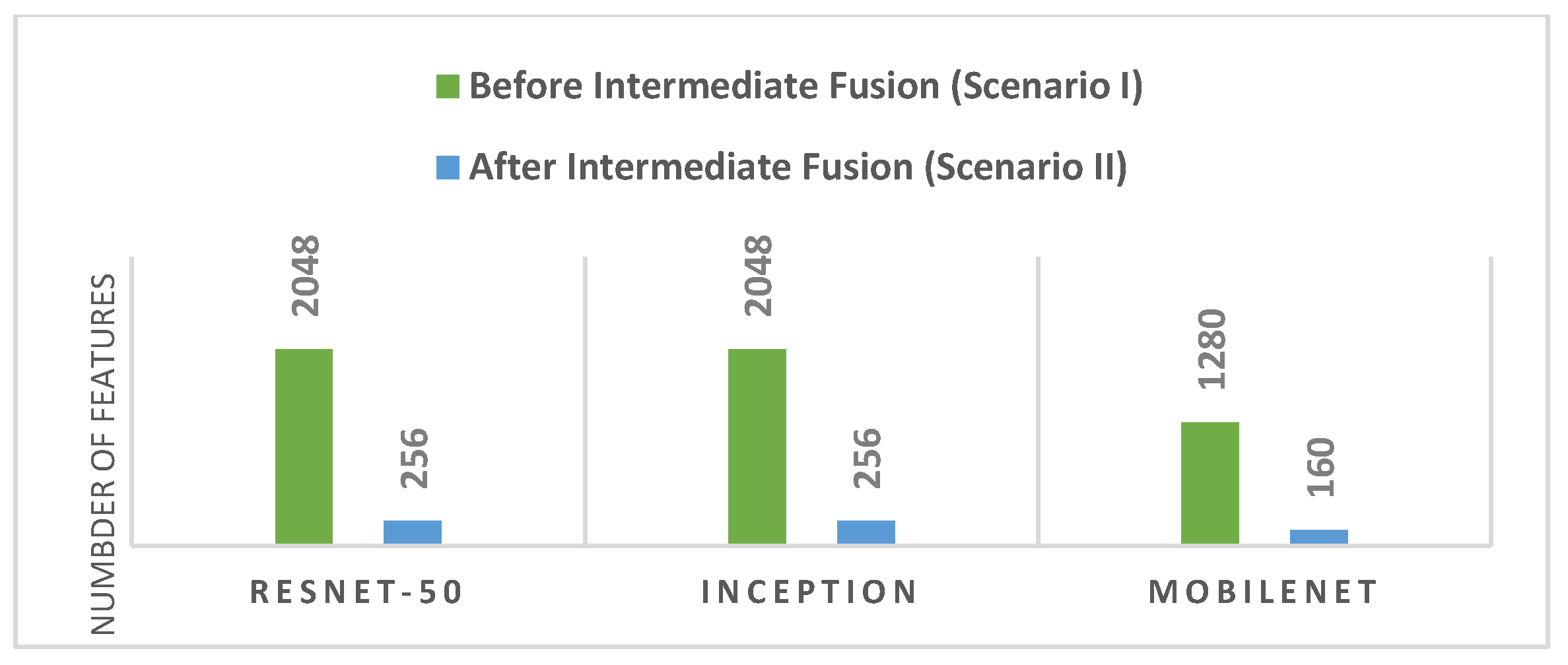

3.4.3. Multimodal Data Fusion

3.4.4. Gas Leakage Detection and Identification

4. Experimental Setting

4.1. Setting of the Parameters

4.2. Performance Evaluation Measures

5. Results

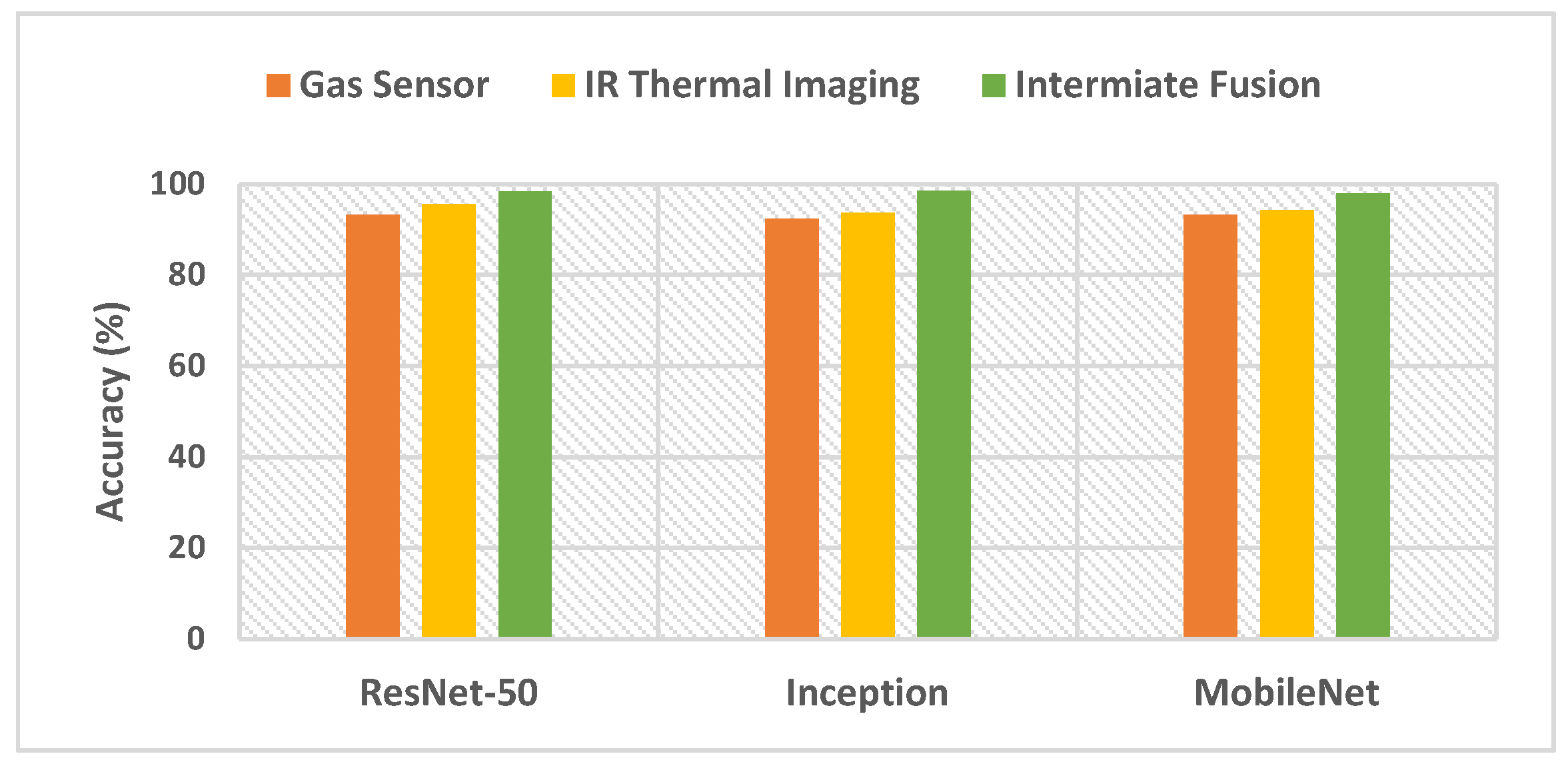

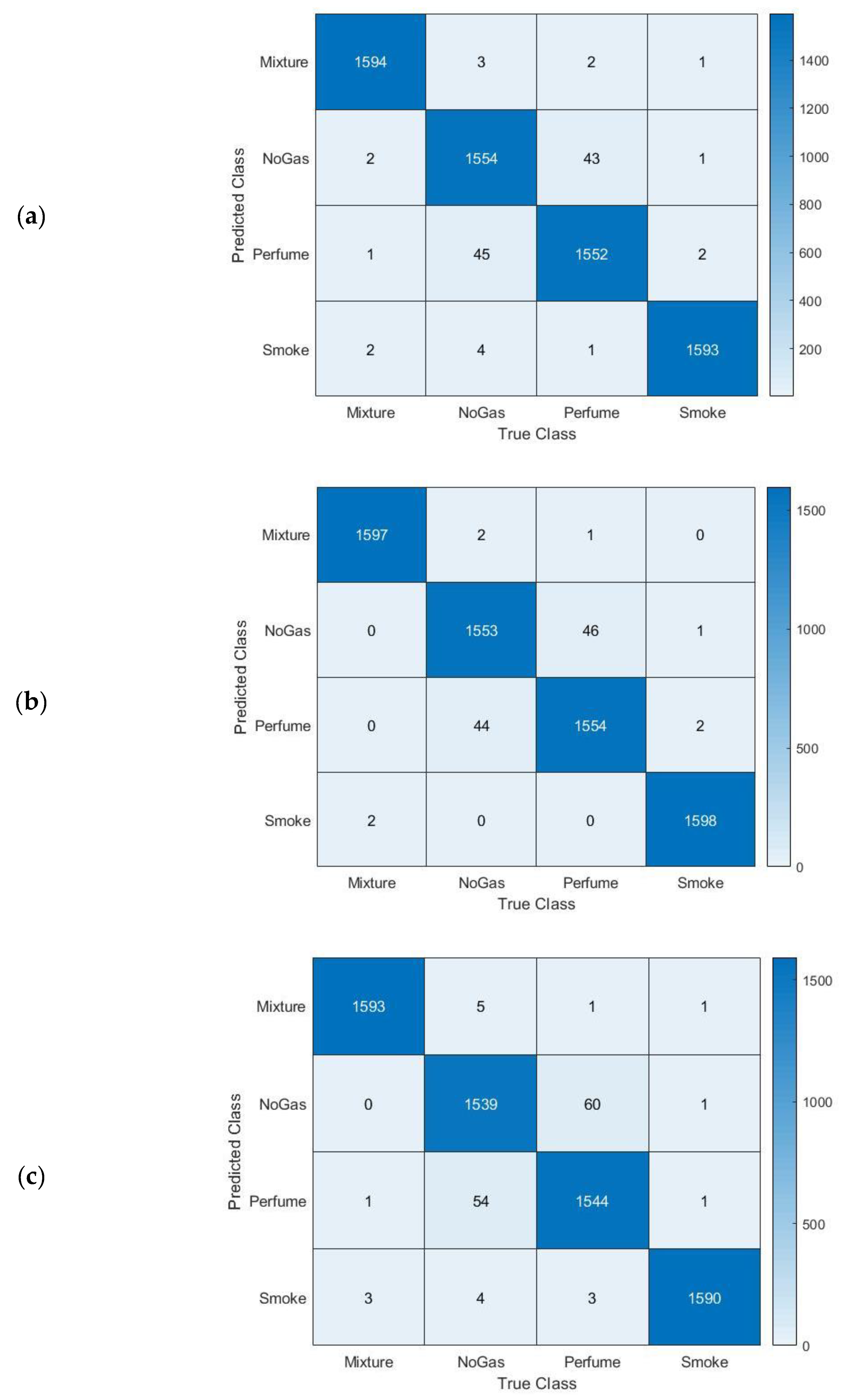

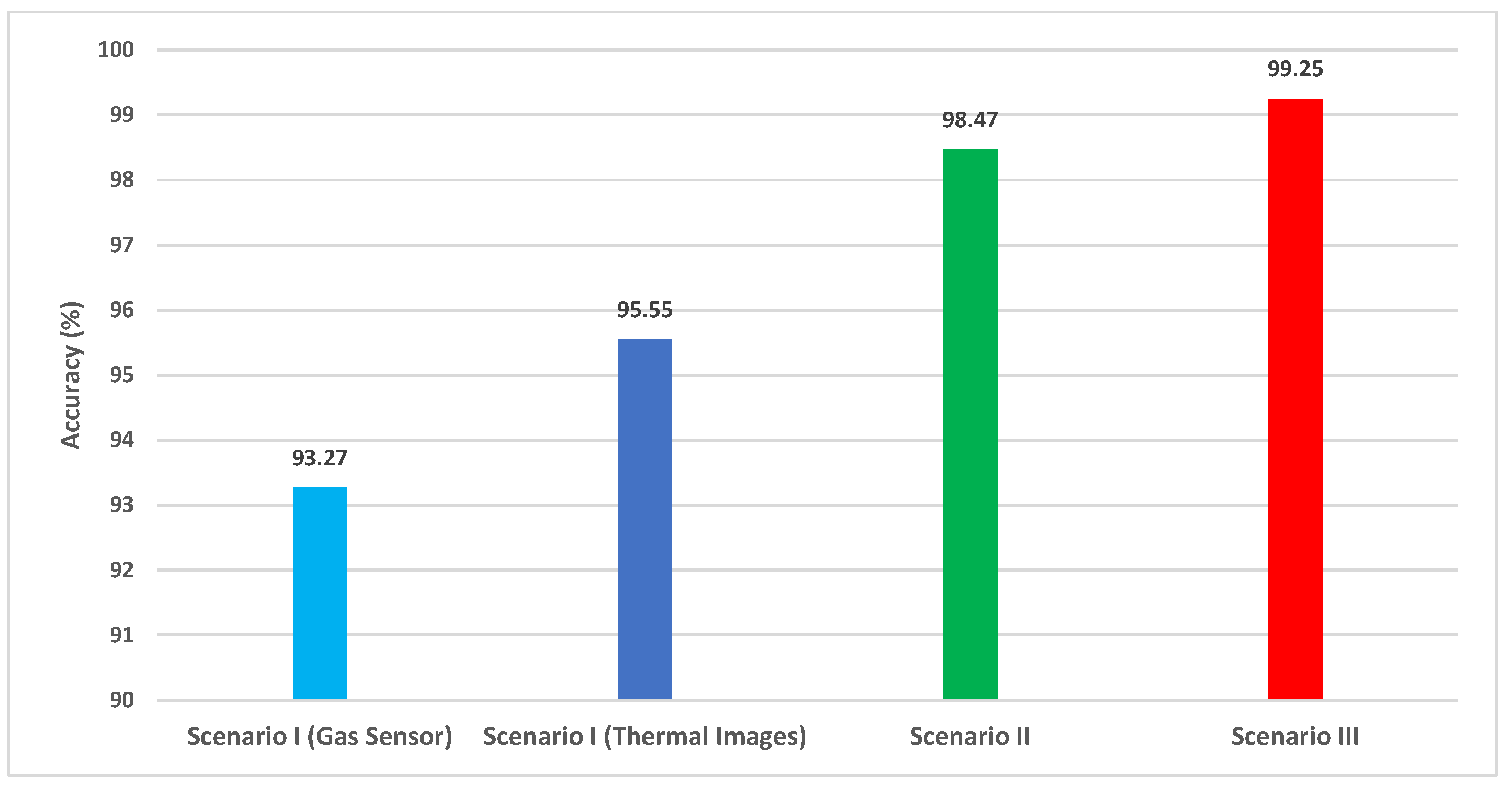

5.1. Bi-LSTM Results of Scenario I

5.2. Bi-LSTM Results of Scenario II

5.3. Bi-LSTM Results of Scenario III

6. Discussion

6.1. Comparisons

6.2. Complexity and Computational Analysis

6.3. Limitations and Upcoming Prospects

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhou, Y.; Zhao, X.; Zhao, J.; Chen, D. Research on Fire and Explosion Accidents of Oil Depots. In Proceedings of the 3rd International Conference on Applied Engineering, Wuhan, China, 22–25 April 2016; AIDIC-Associazione Italiana Di Ingegneria Chimica: Milano, Italy, 2016; Volume 51, pp. 163–168.

- Bonvicini, S.; Antonioni, G.; Morra, P.; Cozzani, V. Quantitative Assessment of Environmental Risk Due to Accidental Spills from Onshore Pipelines. Process Saf. Environ. Prot. 2015, 93, 31–49.

- Kopbayev, A.; Khan, F.; Yang, M.; Halim, S.Z. Gas Leakage Detection Using Spatial and Temporal Neural Network Model. Process Saf. Environ. Prot. 2022, 160, 968–975.

- Fox, A.; Kozar, M.P.; Steinberg, P.A. Gas Chromatography and Gas Chromatography—Mass Spectrometry. 2000. Available online: https://www.thevespiary.org/library/Files_Uploaded_by_Users/Sedit/Chemical%20Analysis/Crystalization,%20Purification,%20Separation/Encyclopedia%20of%20Separation%20Science/Level%20III%20-%20Practical%20Applications/CARBOHYDRATES%20-%20Gas%20Chromatography%20and%20Gas%20Chromatography-Ma.pdf (accessed on 10 November 2022).

- Attallah, O. MB-AI-His: Histopathological Diagnosis of Pediatric Medulloblastoma and Its Subtypes via AI. Diagnostics 2021, 11, 359.

- Attallah, O. GabROP: Gabor Wavelets-Based CAD for Retinopathy of Prematurity Diagnosis via Convolutional Neural Networks. Diagnostics 2023, 13, 171.

- Attallah, O. Cervical Cancer Diagnosis Based on Multi-Domain Features Using Deep Learning Enhanced by Handcrafted Descriptors. Appl. Sci. 2023, 13, 1916.

- Attallah, O. RADIC: A Tool for Diagnosing COVID-19 from Chest CT and X-Ray Scans Using Deep Learning and Quad-Radiomics. Chemom. Intell. Lab. Syst. 2023, 233, 104750.

- Cardona, T.; Cudney, E.A.; Hoerl, R.; Snyder, J. Data Mining and Machine Learning Retention Models in Higher Education. J. Coll. Stud. Retent. Res. Theory Pract. 2023, 25, 51–75.

- Liu, L.T.; Wang, S.; Britton, T.; Abebe, R. Reimagining the Machine Learning Life Cycle to Improve Educational Outcomes of Students. Proc. Natl. Acad. Sci. USA 2023, 120, e2204781120.

- Sripathi, K.N.; Moscarella, R.A.; Steele, M.; Yoho, R.; You, H.; Prevost, L.B.; Urban-Lurain, M.; Merrill, J.; Haudek, K.C. Machine Learning Mixed Methods Text Analysis: An Illustration from Automated Scoring Models of Student Writing in Biology Education. J. Mix. Methods Res. 2023, 1–23.

- Olan, F.; Liu, S.; Suklan, J.; Jayawickrama, U.; Arakpogun, E.O. The Role of Artificial Intelligence Networks in Sustainable Supply Chain Finance for Food and Drink Industry. Int. J. Prod. Res. 2022, 60, 4418–4433.

- Zeng, F.; Wang, C.; Ge, S.S. A Survey on Visual Navigation for Artificial Agents with Deep Reinforcement Learning. IEEE Access 2020, 8, 135426–135442.

- Attallah, O.; Ibrahim, R.A.; Zakzouk, N.E. CAD System for Inter-Turn Fault Diagnosis of Offshore Wind Turbines via Multi-CNNs & Feature Selection. Renew. Energy 2023, 203, 870–880.

- Attallah, O. Tomato Leaf Disease Classification via Compact Convolutional Neural Networks with Transfer Learning and Feature Selection. Horticulturae 2023, 9, 149.

- Xiong, Y.; Li, Y.; Wang, C.; Shi, H.; Wang, S.; Yong, C.; Gong, Y.; Zhang, W.; Zou, X. Non-Destructive Detection of Chicken Freshness Based on Electronic Nose Technology and Transfer Learning. Agriculture 2023, 13, 496.

- Amkor, A.; El Barbri, N. Classification of Potatoes According to Their Cultivated Field by SVM and KNN Approaches Using an Electronic Nose. Bull. Electr. Eng. Inform. 2023, 12, 1471–1477.

- Piłat-Rożek, M.; Łazuka, E.; Majerek, D.; Szeląg, B.; Duda-Saternus, S.; Łagód, G. Application of Machine Learning Methods for an Analysis of E-Nose Multidimensional Signals in Wastewater Treatment. Sensors 2023, 23, 487.

- Hamilton, S.; Charalambous, B. Leak Detection: Technology and Implementation; IWA Publishing: London, UK, 2013.

- Attallah, O.; Morsi, I. An Electronic Nose for Identifying Multiple Combustible/Harmful Gases and Their Concentration Levels via Artificial Intelligence. Measurement 2022, 199, 111458.

- Arroyo, P.; Meléndez, F.; Suárez, J.I.; Herrero, J.L.; Rodríguez, S.; Lozano, J. Electronic Nose with Digital Gas Sensors Connected via Bluetooth to a Smartphone for Air Quality Measurements. Sensors 2020, 20, 786.

- Fan, H.; Schaffernicht, E.; Lilienthal, A.J. Ensemble Learning-Based Approach for Gas Detection Using an Electronic Nose in Robotic Applications. Front. Chem. 2022, 10, 863838.

- Manjula, R.; Narasamma, B.; Shruthi, G.; Nagarathna, K.; Kumar, G. Artificial Olfaction for Detection and Classification of Gases Using E-Nose and Machine Learning for Industrial Application. In Machine Intelligence and Data Analytics for Sustainable Future Smart Cities; Springer: Berlin/Heidelberg, Germany, 2021; pp. 35–48.

- Luo, J.; Zhu, Z.; Lv, W.; Wu, J.; Yang, J.; Zeng, M.; Hu, N.; Su, Y.; Liu, R.; Yang, Z. E-Nose System Based on Fourier Series for Gases Identification and Concentration Estimation from Food Spoilage. IEEE Sens. J. 2023, 23, 3342–3351.

- Narkhede, P.; Walambe, R.; Mandaokar, S.; Chandel, P.; Kotecha, K.; Ghinea, G. Gas Detection and Identification Using Multimodal Artificial Intelligence Based Sensor Fusion. Appl. Syst. Innov. 2021, 4, 3.

- Adefila, K.; Yan, Y.; Wang, T. Leakage Detection of Gaseous CO2 through Thermal Imaging. In Proceedings of the 2015 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Pisa, Italy, 11–14 May 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 261–265.

- Jadin, M.S.; Ghazali, K.H. Gas Leakage Detection Using Thermal Imaging Technique. In Proceedings of the 2014 UKSim-AMSS 16th International Conference on Computer Modelling and Simulation, Cambridge, UK, 26–28 March 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 302–306.

- Bin, J.; Rahman, C.A.; Rogers, S.; Liu, Z. Tensor-Based Approach for Liquefied Natural Gas Leakage Detection from Surveillance Thermal Cameras: A Feasibility Study in Rural Areas. IEEE Trans. Ind. Inform. 2021, 17, 8122–8130.

- Steffens, C.R.; Messias, L.R.V.; Drews, P.J.L., Jr.; da Costa Botelho, S.S. On Robustness of Robotic and Autonomous Systems Perception. J. Intell. Robot. Syst. 2021, 101, 61.

- Rahate, A.; Mandaokar, S.; Chandel, P.; Walambe, R.; Ramanna, S.; Kotecha, K. Employing Multimodal Co-Learning to Evaluate the Robustness of Sensor Fusion for Industry 5.0 Tasks. Soft Comput. 2022, 27, 4139–4155.

- Attallah, O. CerCan·Net: Cervical Cancer Classification Model via Multi-Layer Feature Ensembles of Lightweight CNNs and Transfer Learning. Expert Syst. Appl. 2023, 229 Pt B, 120624.

- Attallah, O.; Samir, A. A Wavelet-Based Deep Learning Pipeline for Efficient COVID-19 Diagnosis via CT Slices. Appl. Soft Comput. 2022, 128, 109401.

- Kalman, E.-L.; Löfvendahl, A.; Winquist, F.; Lundström, I. Classification of Complex Gas Mixtures from Automotive Leather Using an Electronic Nose. Anal. Chim. Acta 2000, 403, 31–38.

- Yang, T.; Zhang, P.; Xiong, J. Association between the Emissions of Volatile Organic Compounds from Vehicular Cabin Materials and Temperature: Correlation and Exposure Analysis. Indoor Built Environ. 2019, 28, 362–371.

- Imahashi, M.; Miyagi, K.; Takamizawa, T.; Hayashi, K. Artificial Odor Map and Discrimination of Odorants Using the Odor Separating System. In AIP Conference Proceedings; American Institute of Physics: College Park, MD, USA, 2011; Volume 1362, pp. 27–28.

- Liu, H.; Meng, G.; Deng, Z.; Li, M.; Chang, J.; Dai, T.; Fang, X. Progress in Research on VOC Molecule Recognition by Semiconductor Sensors. Acta Phys.-Chim. Sin. 2020, 38, 2008018.

- Charumporn, B.; Omatu, S.; Yoshioka, M.; Fujinaka, T.; Kosaka, T. Fire Detection Systems by Compact Electronic Nose Systems Using Metal Oxide Gas Sensors. In Proceedings of the 2004 IEEE International Joint Conference on Neural Networks (IEEE Cat. No. 04CH37541), Budapest, Hungary, 25–29 July 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 2, pp. 1317–1320.

- Cheng, L.; Liu, Y.-B.; Meng, Q.-H. A Novel E-Nose Chamber Design for VOCs Detection in Automobiles. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6055–6060.

- Ye, Z.; Liu, Y.; Li, Q. Recent Progress in Smart Electronic Nose Technologies Enabled with Machine Learning Methods. Sensors 2021, 21, 7620.

- Wijaya, D.R.; Afianti, F.; Arifianto, A.; Rahmawati, D.; Kodogiannis, V.S. Ensemble Machine Learning Approach for Electronic Nose Signal Processing. Sens. Bio-Sens. Res. 2022, 36, 100495.

- Feng, L.; Dai, H.; Song, X.; Liu, J.; Mei, X. Gas Identification with Drift Counteraction for Electronic Noses Using Augmented Convolutional Neural Network. Sens. Actuators B Chem. 2022, 351, 130986.

- Li, Z.; Yu, J.; Dong, D.; Yao, G.; Wei, G.; He, A.; Wu, H.; Zhu, H.; Huang, Z.; Tang, Z. E-Nose Based on a High-Integrated and Low-Power Metal Oxide Gas Sensor Array. Sens. Actuators B Chem. 2023, 380, 133289.

- Kang, M.; Cho, I.; Park, J.; Jeong, J.; Lee, K.; Lee, B.; Del Orbe Henriquez, D.; Yoon, K.; Park, I. High Accuracy Real-Time Multi-Gas Identification by a Batch-Uniform Gas Sensor Array and Deep Learning Algorithm. ACS Sens. 2022, 7, 430–440.

- Faleh, R.; Kachouri, A. A Hybrid Deep Convolutional Neural Network-Based Electronic Nose for Pollution Detection Purposes. Chemom. Intell. Lab. Syst. 2023, 237, 104825.

- Rahman, S.; Alwadie, A.S.; Irfan, M.; Nawaz, R.; Raza, M.; Javed, E.; Awais, M. Wireless E-Nose Sensors to Detect Volatile Organic Gases through Multivariate Analysis. Micromachines 2020, 11, 597.

- Travis, B.; Dubey, M.; Sauer, J. Neural Networks to Locate and Quantify Fugitive Natural Gas Leaks for a MIR Detection System. Atmos. Environ. X 2020, 8, 100092.

- De Pérez-Pérez, E.J.; López-Estrada, F.R.; Valencia-Palomo, G.; Torres, L.; Puig, V.; Mina-Antonio, J.D. Leak Diagnosis in Pipelines Using a Combined Artificial Neural Network Approach. Control Eng. Pract. 2021, 107, 104677.

- Zhang, J.; Xue, Y.; Zhang, T.; Chen, Y.; Wei, X.; Wan, H.; Wang, P. Detection of Hazardous Gas Mixtures in the Smart Kitchen Using an Electronic Nose with Support Vector Machine. J. Electrochem. Soc. 2020, 167, 147519.

- Ragila, V.V.; Madhavan, R.; Kumar, U.S. Neural Network-Based Classification of Toxic Gases for a Sensor Array. In Sustainable Communication Networks and Application; Springer: Berlin/Heidelberg, Germany, 2021; pp. 373–383.

- Peng, P.; Zhao, X.; Pan, X.; Ye, W. Gas Classification Using Deep Convolutional Neural Networks. Sensors 2018, 18, 157.

- Spandonidis, C.; Theodoropoulos, P.; Giannopoulos, F.; Galiatsatos, N.; Petsa, A. Evaluation of Deep Learning Approaches for Oil & Gas Pipeline Leak Detection Using Wireless Sensor Networks. Eng. Appl. Artif. Intell. 2022, 113, 104890.

- Pan, X.; Zhang, H.; Ye, W.; Bermak, A.; Zhao, X. A Fast and Robust Gas Recognition Algorithm Based on Hybrid Convolutional and Recurrent Neural Network. IEEE Access 2019, 7, 100954–100963.

- Liu, Q.; Hu, X.; Ye, M.; Cheng, X.; Li, F. Gas Recognition under Sensor Drift by Using Deep Learning. Int. J. Intell. Syst. 2015, 30, 907–922.

- Marathe, S. Leveraging Drone Based Imaging Technology for Pipeline and RoU Monitoring Survey. In Proceedings of the SPE Symposium: Asia Pacific Health, Safety, Security, Environment and Social Responsibility, Kuala Lumpur, Malaysia, 23–24 April 2019.

- Liu, B.; Ma, H.; Zheng, X.; Peng, L.; Xiao, A. Monitoring and Detection of Combustible Gas Leakage by Using Infrared Imaging. In Proceedings of the 2018 IEEE International Conference on Imaging Systems and Techniques (IST), Krakow, Poland, 16–18 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Guo, W.; Wang, J.; Wang, S. Deep Multimodal Representation Learning: A Survey. IEEE Access 2019, 7, 63373–63394.

- Ngiam, J.; Khosla, A.; Kim, M.; Nam, J.; Lee, H.; Ng, A.Y. Multimodal Deep Learning. In Proceedings of the ICML, Bellevue, WA, USA, 28 June–2 July 2011.

- Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The Performance of LSTM and BiLSTM in Forecasting Time Series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3285–3292.

- Gao, J.; Li, P.; Chen, Z.; Zhang, J. A Survey on Deep Learning for Multimodal Data Fusion. Neural Comput. 2020, 32, 829–864.

- Lahat, D.; Adali, T.; Jutten, C. Multimodal Data Fusion: An Overview of Methods, Challenges, and Prospects. Proc. IEEE 2015, 103, 1449–1477.

- Attallah, O. A Computer-Aided Diagnostic Framework for Coronavirus Diagnosis Using Texture-Based Radiomics Images. Digit. Health 2022, 8, 20552076221092544.

- Attallah, O. DIAROP: Automated Deep Learning-Based Diagnostic Tool for Retinopathy of Prematurity. Diagnostics 2021, 11, 2034.

- Attallah, O. ECG-BiCoNet: An ECG-Based Pipeline for COVID-19 Diagnosis Using Bi-Layers of Deep Features Integration. Comput. Biol. Med. 2022, 142, 105210.

- Boulahia, S.Y.; Amamra, A.; Madi, M.R.; Daikh, S. Early, Intermediate and Late Fusion Strategies for Robust Deep Learning-Based Multimodal Action Recognition. Mach. Vis. Appl. 2021, 32, 121.

- Attallah, O.; Sharkas, M. GASTRO-CADx: A Three Stages Framework for Diagnosing Gastrointestinal Diseases. PeerJ Comput. Sci. 2021, 7, e423.

- Liu, H.; Li, Q.; Gu, Y. A Multi-Task Learning Framework for Gas Detection and Concentration Estimation. Neurocomputing 2020, 416, 28–37.

- Sarvamangala, D.R.; Kulkarni, R.V. Convolutional Neural Networks in Medical Image Understanding: A Survey. Evol. Intell. 2021, 15, 1–22.

- Attallah, O. An Intelligent ECG-Based Tool for Diagnosing COVID-19 via Ensemble Deep Learning Techniques. Biosensors 2022, 12, 299.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Angeline, P.J.; Saunders, G.M.; Pollack, J.B. An Evolutionary Algorithm That Constructs Recurrent Neural Networks. IEEE Trans. Neural Netw. 1994, 5, 54–65.

- Narkhede, P.; Walambe, R.; Chandel, P.; Mandaokar, S.; Kotecha, K. MultimodalGasData: Multimodal Dataset for Gas Detection and Classification. Data 2022, 7, 112.

- Havens, K.J.; Sharp, E. Thermal Imaging Techniques to Survey and Monitor Animals in the Wild: A Methodology; Academic Press: Cambridge, MA, USA, 2015.

- Korotcenkov, G. Current Trends in Nanomaterials for Metal Oxide-Based Conductometric Gas Sensors: Advantages and Limitations. Part 1: 1D and 2D Nanostructures. Nanomaterials 2020, 10, 1392.

- Morsi, I. Electronic Nose System and Artificial Intelligent Techniques for Gases Identification. Data Storage 2010, 80, 175–200.

- Lu, J.; Behbood, V.; Hao, P.; Zuo, H.; Xue, S.; Zhang, G. Transfer Learning Using Computational Intelligence: A Survey. Knowl.-Based Syst. 2015, 80, 14–23.

- Miri, A.; Sharifian, S.; Rashidi, S.; Ghods, M. Medical Image Denoising Based on 2D Discrete Cosine Transform via Ant Colony Optimization. Optik 2018, 156, 938–948.

- He, K.; Sun, J. Convolutional Neural Networks at Constrained Time Cost. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5353–5360.

| Gas Type | Sample 1: Gas Sensor Readings | Sample 1: IR Thermal Image | Sample 2: Gas Sensor Readings | Sample 2: IR Thermal Image |
|---|---|---|---|---|
| Smoke | [615, 339, 396, 412, 574, 598, 312] |  | [512, 354, 396, 412, 575, 582, 299] |  |
| Mixture | [506, 392, 344, 311, 395, 222, 302] |  | [530, 397, 370, 338, 409, 248, 355] |  |
| Perfume | [753, 523, 489, 461, 685, 696, 495] |  | [642, 526, 431, 429, 647, 595, 461] |  |
| NoGas | [555, 515, 377, 388, 666, 451, 416] |  | [669, 525, 422, 419, 650, 648, 449] |  |
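
The gas-sensor columns above are raw, unscaled readings from seven sensors, and each sensor spans a different numeric range. Before vectors like these are fed to a network they are usually rescaled. The snippet below is a minimal sketch of per-sensor min-max scaling over the eight sample vectors in the table; the choice of min-max scaling (rather than, for example, z-scores) and the helper name `min_max_per_sensor` are assumptions for illustration, not necessarily the preprocessing used in the article.

```python
from typing import Dict, List

# Raw gas-sensor vectors copied from the table above (seven readings per sample).
SAMPLES: Dict[str, List[float]] = {
    "Smoke-1": [615, 339, 396, 412, 574, 598, 312],
    "Smoke-2": [512, 354, 396, 412, 575, 582, 299],
    "Mixture-1": [506, 392, 344, 311, 395, 222, 302],
    "Mixture-2": [530, 397, 370, 338, 409, 248, 355],
    "Perfume-1": [753, 523, 489, 461, 685, 696, 495],
    "Perfume-2": [642, 526, 431, 429, 647, 595, 461],
    "NoGas-1": [555, 515, 377, 388, 666, 451, 416],
    "NoGas-2": [669, 525, 422, 419, 650, 648, 449],
}


def min_max_per_sensor(rows: List[List[float]]) -> List[List[float]]:
    """Hypothetical helper: rescale every sensor channel (column) to [0, 1]
    using that channel's minimum and maximum over all rows."""
    n_sensors = len(rows[0])
    lows = [min(row[j] for row in rows) for j in range(n_sensors)]
    highs = [max(row[j] for row in rows) for j in range(n_sensors)]
    scaled = []
    for row in rows:
        scaled.append([
            # A constant channel carries no information, so it is mapped to 0.0.
            (row[j] - lows[j]) / (highs[j] - lows[j]) if highs[j] > lows[j] else 0.0
            for j in range(n_sensors)
        ])
    return scaled


if __name__ == "__main__":
    scaled = min_max_per_sensor(list(SAMPLES.values()))
    for name, row in zip(SAMPLES, scaled):
        print(name, [round(v, 2) for v in row])
```

In a real experiment the per-sensor minimum and maximum would be computed on the training split only and then reused for test samples, so that no test information leaks into the scaling.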

| CNN Features | Gas Sensors | IR Thermal Images |
|---|---|---|
| ResNet-50 | 93.27 | 95.55 |
| Inception | 92.28 | 93.60 |
| MobileNet | 93.27 | 94.22 |

| # Features | Sensitivity | Specificity | Precision | F1-Score | MCC |
|---|---|---|---|---|---|
| 50 | 0.980 | 0.993 | 0.980 | 0.980 | 0.973 |
| 100 | 0.986 | 0.995 | 0.986 | 0.986 | 0.981 |
| 150 | 0.987 | 0.996 | 0.987 | 0.983 | 0.983 |
| 200 | 0.989 | 0.996 | 0.987 | 0.987 | 0.983 |
| 250 | 0.987 | 0.996 | 0.987 | 0.987 | 0.983 |
| 300 | 0.990 | 0.997 | 0.990 | 0.990 | 0.986 |
| 350 | 0.992 | 0.997 | 0.992 | 0.992 | 0.989 |
| 400 | 0.991 | 0.997 | 0.991 | 0.991 | 0.988 |
| 450 | 0.991 | 0.997 | 0.991 | 0.991 | 0.988 |
| 500 | 0.993 | 0.997 | 0.993 | 0.993 | 0.990 |
| 550 | 0.992 | 0.997 | 0.992 | 0.992 | 0.989 |
| 600 | 0.992 | 0.997 | 0.992 | 0.992 | 0.990 |
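
The table reports sensitivity, specificity, precision, F1-score and Matthews correlation coefficient (MCC) for a four-class problem (Smoke, Mixture, Perfume, NoGas). The sketch below shows one common way to obtain such figures from a confusion matrix: one-vs-rest counts per class, macro-averaged over classes, plus the multiclass generalization of MCC. Macro averaging is an assumption here, since this section does not state the averaging scheme, and the function names are illustrative.

```python
import math
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """cm[t][p] counts samples whose true class is t and whose predicted class is p."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def _mean(values: List[float]) -> float:
    return sum(values) / len(values)


def macro_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Macro-averaged one-vs-rest sensitivity, specificity, precision and F1,
    plus the multiclass Matthews correlation coefficient."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    sens, spec, prec, f1 = [], [], [], []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class c predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # other classes predicted as c
        tn = total - tp - fn - fp
        s = tp / (tp + fn) if tp + fn else 0.0
        sp = tn / (tn + fp) if tn + fp else 0.0
        pr = tp / (tp + fp) if tp + fp else 0.0
        sens.append(s)
        spec.append(sp)
        prec.append(pr)
        f1.append(2 * pr * s / (pr + s) if pr + s else 0.0)

    # Multiclass MCC: (c*s - sum_k p_k*t_k) / sqrt((s^2 - sum_k p_k^2) * (s^2 - sum_k t_k^2))
    t_k = [sum(cm[k]) for k in range(n)]                       # true samples per class
    p_k = [sum(cm[r][k] for r in range(n)) for k in range(n)]  # predictions per class
    correct = sum(cm[k][k] for k in range(n))
    num = correct * total - sum(t * p for t, p in zip(t_k, p_k))
    den = math.sqrt(total ** 2 - sum(p * p for p in p_k)) * math.sqrt(total ** 2 - sum(t * t for t in t_k))

    return {
        "sensitivity": _mean(sens),
        "specificity": _mean(spec),
        "precision": _mean(prec),
        "f1": _mean(f1),
        "mcc": num / den if den else 0.0,
    }


if __name__ == "__main__":
    # Toy labels (0=Smoke, 1=Mixture, 2=Perfume, 3=NoGas), made up for illustration.
    y_true = [0, 0, 1, 1, 2, 2, 3, 3]
    y_pred = [0, 0, 1, 2, 2, 2, 3, 3]
    print(macro_metrics(confusion_matrix(y_true, y_pred, 4)))
```

Because the macro F1-score in this sketch is the mean of per-class F1 values, it is not simply the harmonic mean of the averaged precision and sensitivity, so the three figures need not coincide.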

| Article | Method | Accuracy | Sensitivity | Precision | F1-Score |
|---|---|---|---|---|---|
| [25] | LSTM + CNN Early Fusion | 0.960 | 0.963 | 0.963 | 0.963 |
| [30] | LSTM + CNN Intermediate Fusion | 0.945 | - | - | - |
| [30] | LSTM + CNN Multitask Fusion | 0.969 | - | - | - |
| Proposed | Inception + DWT + Bi-LSTM Intermediate Fusion | 0.985 | 0.985 | 0.985 | 0.985 |
| Proposed | (ResNet50 + Inception + MobileNet) + DWT + DCT + Bi-LSTM Multitask Fusion | 0.992 | 0.992 | 0.992 | 0.992 |
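
The proposed rows reduce deep CNN features with a discrete wavelet transform (DWT) and, in the multitask row, a discrete cosine transform (DCT) before the Bi-LSTM. A minimal, dependency-free sketch of that kind of reduction follows. It assumes a Haar wavelet, keeps only the approximation coefficients at each DWT level, concatenates the reduced vectors from the different sources, and keeps the first `n_keep` coefficients of an orthonormal DCT-II. The wavelet family, the number of levels, the order of the steps and the helper names are assumptions for illustration, not details confirmed by this section.

```python
import math
from typing import List, Sequence


def haar_approximation(x: Sequence[float], levels: int) -> List[float]:
    """Multi-level Haar DWT that keeps only the approximation (low-pass) band.
    Each level maps a[i] = (x[2i] + x[2i+1]) / sqrt(2), halving the length."""
    a = list(x)
    for _ in range(levels):
        if len(a) % 2:
            a.append(a[-1])  # pad odd lengths by repeating the last value (an assumption)
        a = [(a[2 * i] + a[2 * i + 1]) / math.sqrt(2) for i in range(len(a) // 2)]
    return a


def dct_ii_truncated(x: Sequence[float], n_keep: int) -> List[float]:
    """First n_keep coefficients of the orthonormal DCT-II of x (O(n * n_keep) time)."""
    n = len(x)
    coeffs = []
    for k in range(min(n_keep, n)):
        acc = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        coeffs.append(scale * acc)
    return coeffs


def reduce_and_fuse(sources: List[Sequence[float]], levels: int, n_keep: int) -> List[float]:
    """Hypothetical fusion step: DWT-reduce each source's features, concatenate
    them, then compress the fused vector with a truncated DCT."""
    reduced = [haar_approximation(f, levels) for f in sources]
    fused = [v for r in reduced for v in r]
    return dct_ii_truncated(fused, n_keep)


if __name__ == "__main__":
    import random
    random.seed(0)
    # Random stand-ins for three pooled CNN feature vectors (equal sizes for simplicity).
    feats = [[random.random() for _ in range(2048)] for _ in range(3)]
    print(len(haar_approximation(feats[0], 3)))               # 256
    print(len(reduce_and_fuse(feats, levels=3, n_keep=500)))  # 500
```

Each Haar level halves the vector length, so three levels map a 2048-dimensional pooled feature vector (the usual size for ResNet-50 and Inception-v3) to 256 values. That happens to match the 256-feature input listed for Scenario II in the complexity table, but the correspondence is an inference rather than something this section states.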

Offline phase (CNN re-training)

| Model | Input Size | Sum of Parameters (H) | Total Number of Layers | Per-Layer Training Complexity (O) | Training Time (E-Nose Data) | Training Time (IR Thermal Data) |
|---|---|---|---|---|---|---|
| ResNet-50 | 224 × 224 × 3 images | 23.0 M | 50 | $O\left(\sum_{l=1}^{d} n_{l-1}\, s_l^{2}\, n_l\, m_l^{2}\right)$ [80] | 83 min 11 s | 72 min 37 s |
| Inception | 299 × 299 × 3 images | 23.6 M | 48 | $O\left(\sum_{l=1}^{d} n_{l-1}\, s_l^{2}\, n_l\, m_l^{2}\right)$ [80] | 133 min 43 s | 144 min 27 s |
| MobileNet | 224 × 224 × 3 images | 3.5 M | 28 | $O\left(\sum_{l=1}^{d} n_{l-1}\, s_l^{2}\, n_l\, m_l^{2}\right)$ [80] | 79 min 43 s | 81 min 56 s |

where $d$ is the number of convolutional layers, $n_l$ is the number of filters in the $l$th layer, $n_{l-1}$ is the number of input channels of the $l$th layer, $s_l$ is the spatial size of the filter's kernel, and $m_l$ is the spatial size of the output feature map.

Online phase (Bi-LSTM trained on the fused E-nose and IR thermal data)

| Scenario | Input Size | Sum of Parameters (H) | Total Number of Layers | Per-Layer Training Complexity (O) | Training Time |
|---|---|---|---|---|---|
| Scenario II: ResNet-50 fusion | 256 features | $2 \times 4(kp + k^{2} + k)$ | 1 | $O(w)$ | 4 min 1 s |
| Scenario II: Inception fusion | 256 features | $2 \times 4(kp + k^{2} + k)$ | 1 | $O(w)$ | 4 min 0 s |
| Scenario II: MobileNet fusion | 160 features | $2 \times 4(kp + k^{2} + k)$ | 1 | $O(w)$ | 3 min 95 s |
| Scenario III | 500 features | $2 \times 4(kp + k^{2} + k)$ | 1 | $O(w)$ | 4 min 3 s |

where $k$ is the number of hidden units, $p$ is the input size, and $w$ is the number of weights.
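
Both complexity expressions can be evaluated directly. For a Bi-LSTM layer with input size p and k hidden units, each direction has four gates, each with kp input weights, k² recurrent weights and k biases, giving 2 × 4(kp + k² + k) parameters. This assumes one bias vector per gate, as in MATLAB's `bilstmLayer` and Keras; PyTorch's `nn.LSTM` stores two bias vectors per gate and therefore reports 2 × 4(kp + k² + 2k). The convolutional cost from [80] is the sum over layers of n_{l−1} · s_l² · n_l · m_l², which is proportional to the number of multiply-adds. The sketch below computes both; the hidden-unit count k = 200 is a hypothetical value, not one taken from the article, and the ResNet-50 first-layer configuration is used only as an illustration.

```python
from typing import Iterable, Tuple


def lstm_params(p: int, k: int) -> int:
    """One LSTM direction: 4 gates, each with k*p input weights,
    k*k recurrent weights and k biases (single-bias convention)."""
    return 4 * (k * p + k * k + k)


def bilstm_params(p: int, k: int) -> int:
    """Forward plus backward direction of a Bi-LSTM layer."""
    return 2 * lstm_params(p, k)


def conv_time_cost(layers: Iterable[Tuple[int, int, int, int]]) -> int:
    """Sum over conv layers of n_{l-1} * s_l^2 * n_l * m_l^2, where each tuple is
    (n_in, s, n_out, m): input channels, kernel size, filters, output map size."""
    return sum(n_in * s * s * n_out * m * m for n_in, s, n_out, m in layers)


if __name__ == "__main__":
    k = 200  # hypothetical number of hidden units
    for label, p in [("Scenario II, ResNet-50/Inception", 256),
                     ("Scenario II, MobileNet", 160),
                     ("Scenario III", 500)]:
        print(label, bilstm_params(p, k))
    # ResNet-50's first layer: 7x7 kernels, 3 input channels, 64 filters, 112x112 output.
    print(conv_time_cost([(3, 7, 64, 112)]))  # 118013952
```

With k fixed, only the input-weight term 8kp depends on the feature dimension p, so shrinking the fused feature vector reduces that term while the recurrent term 8k² stays the same.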

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Attallah, O. Multitask Deep Learning-Based Pipeline for Gas Leakage Detection via E-Nose and Thermal Imaging Multimodal Fusion. Chemosensors 2023, 11, 364. https://doi.org/10.3390/chemosensors11070364
