Monitoring of Indoor Air Quality in a Classroom Combining a Low-Cost Sensor System and Machine Learning

Abstract

1. Introduction

2. Materials and Methods

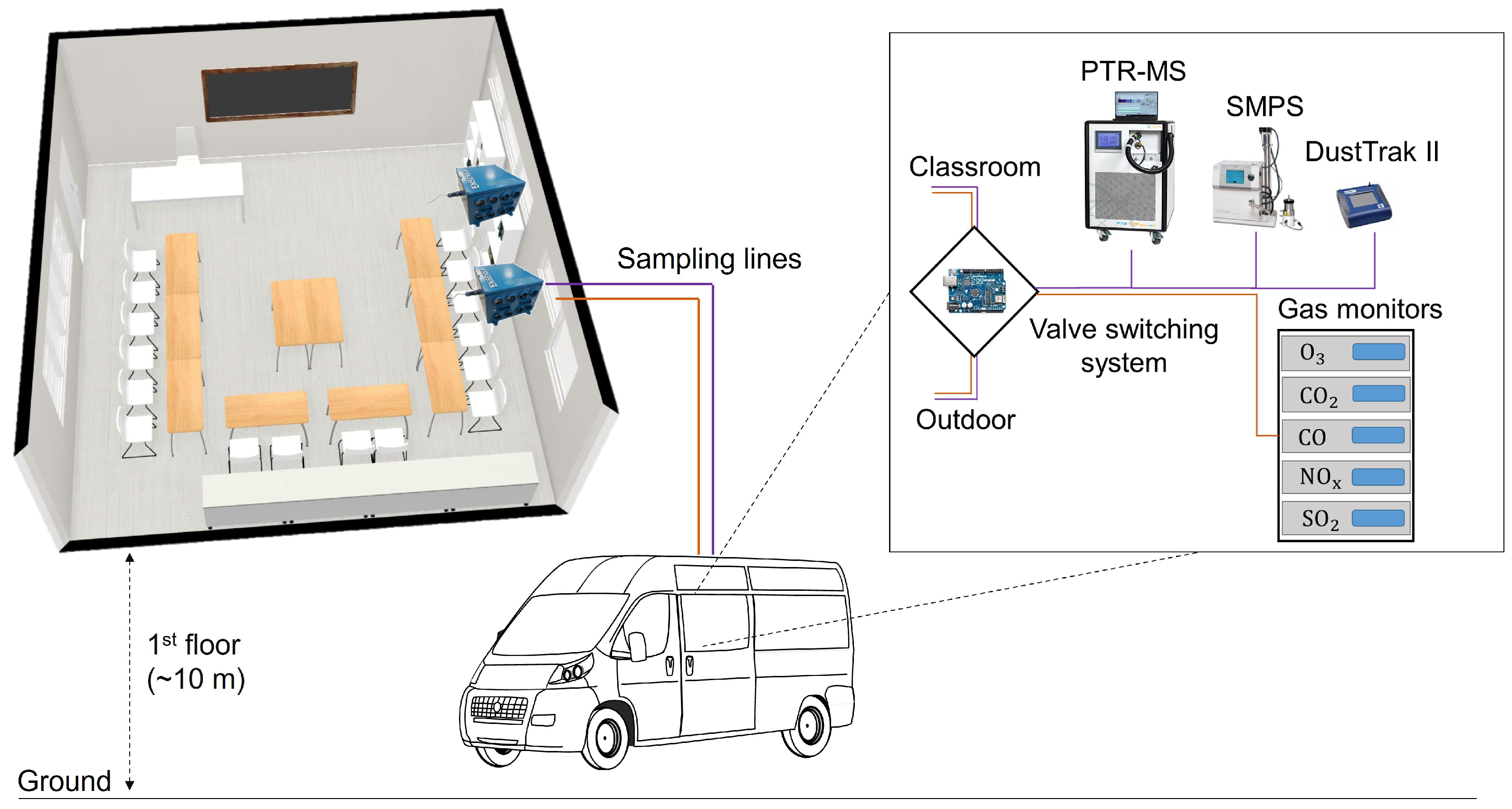

2.1. Measurement Site and Setup

2.2. The ENSENSIA Sensor System

2.3. Data Collection and Processing

2.3.1. Reference Data

2.3.2. ENSENSIA Data

2.4. Sensor Calibration Using Machine Learning

2.5. Evaluation Metrics

3. Results

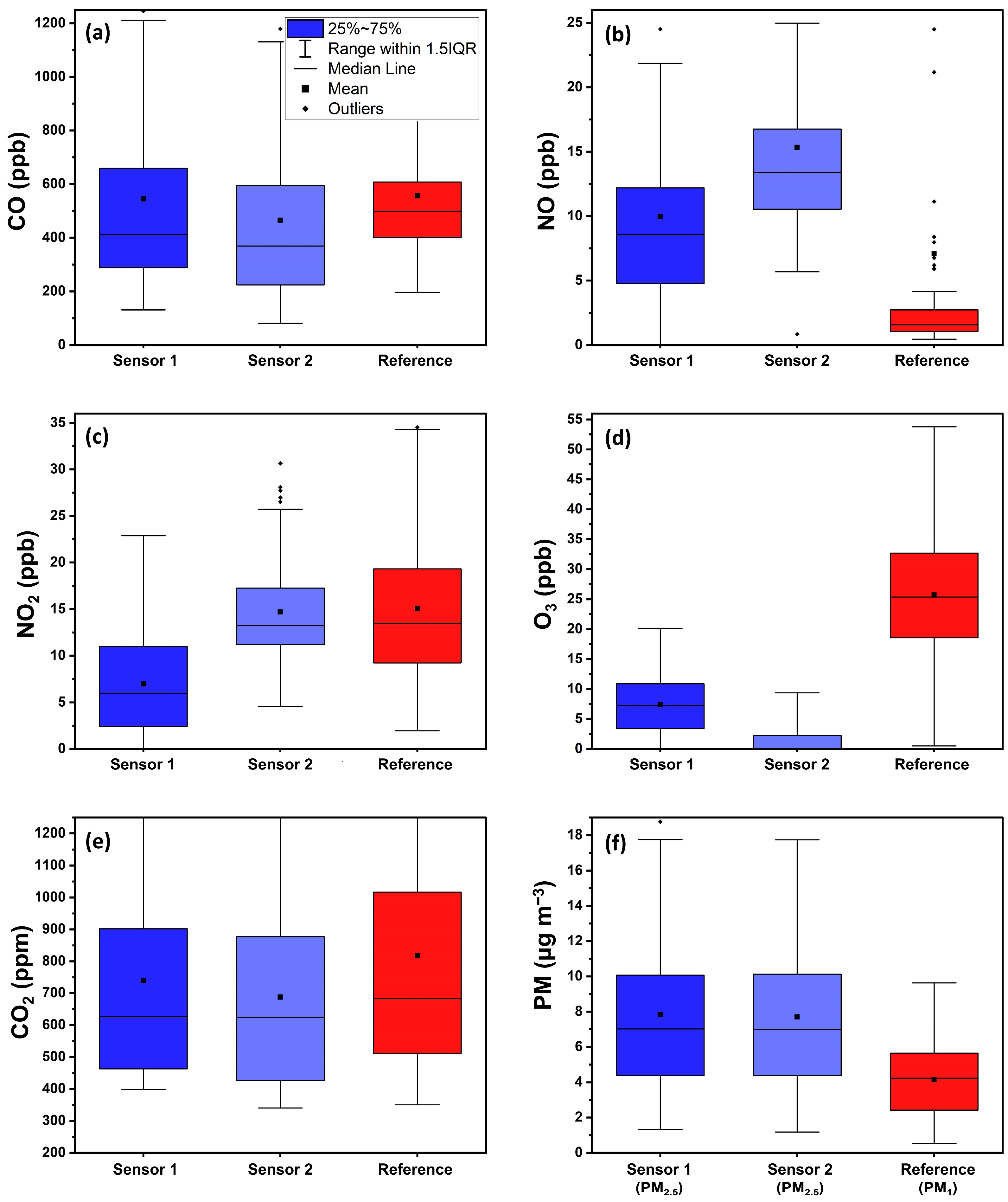

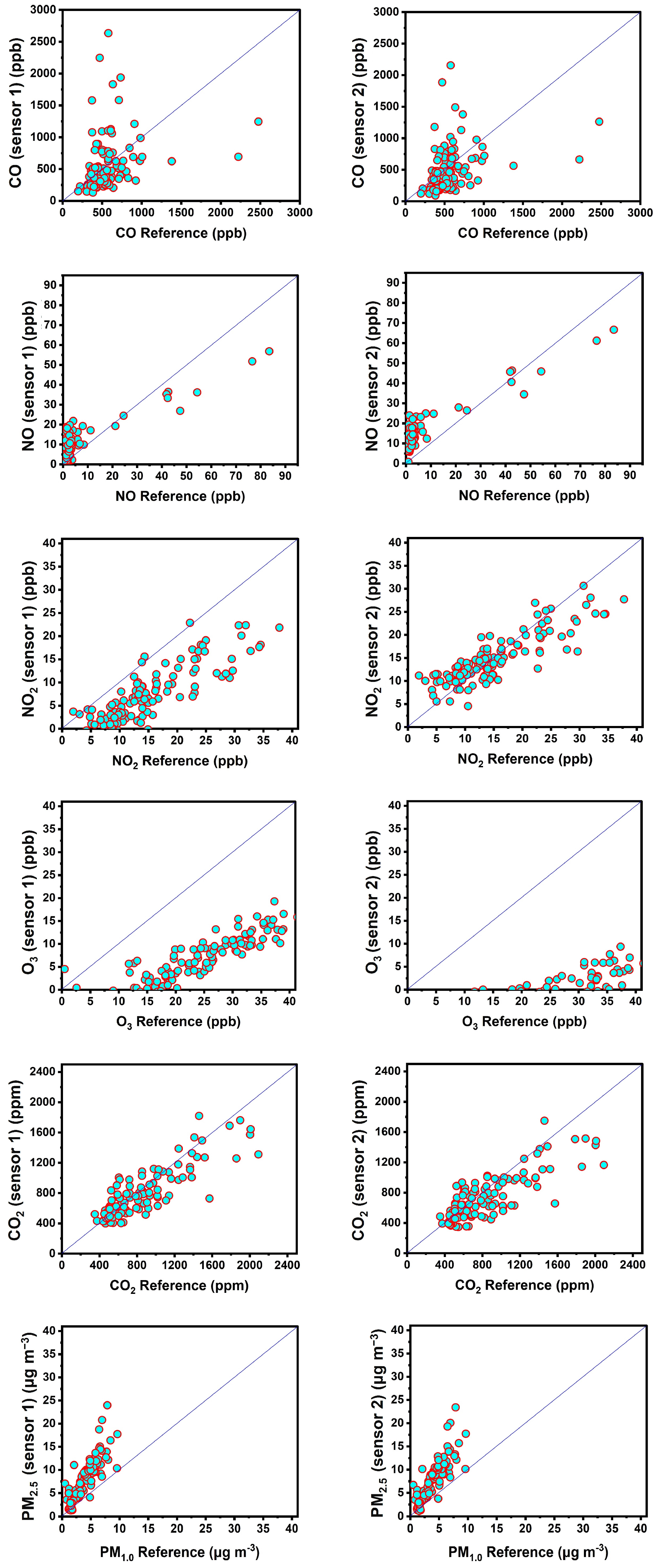

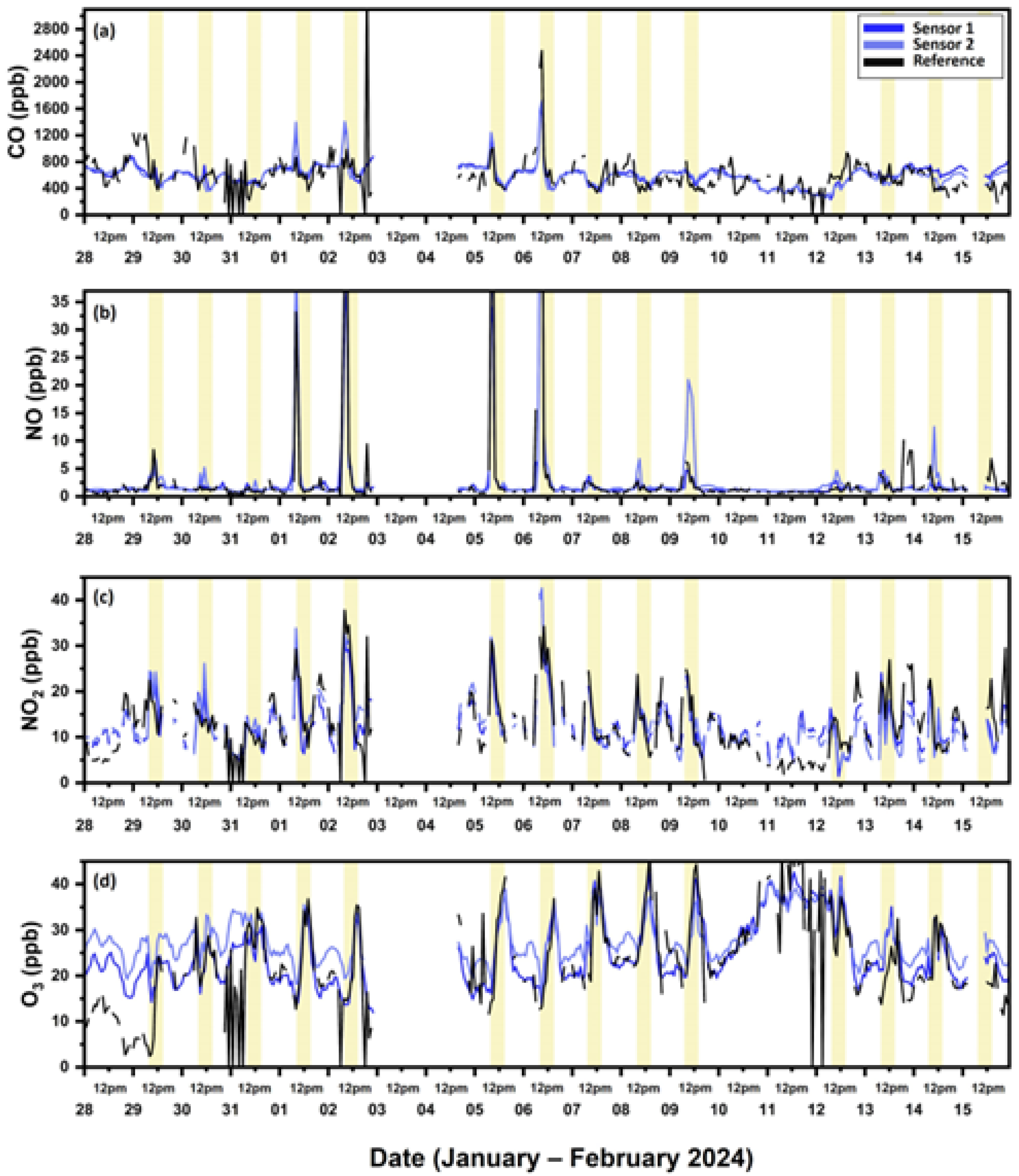

3.1. Performance of Factory Calibration

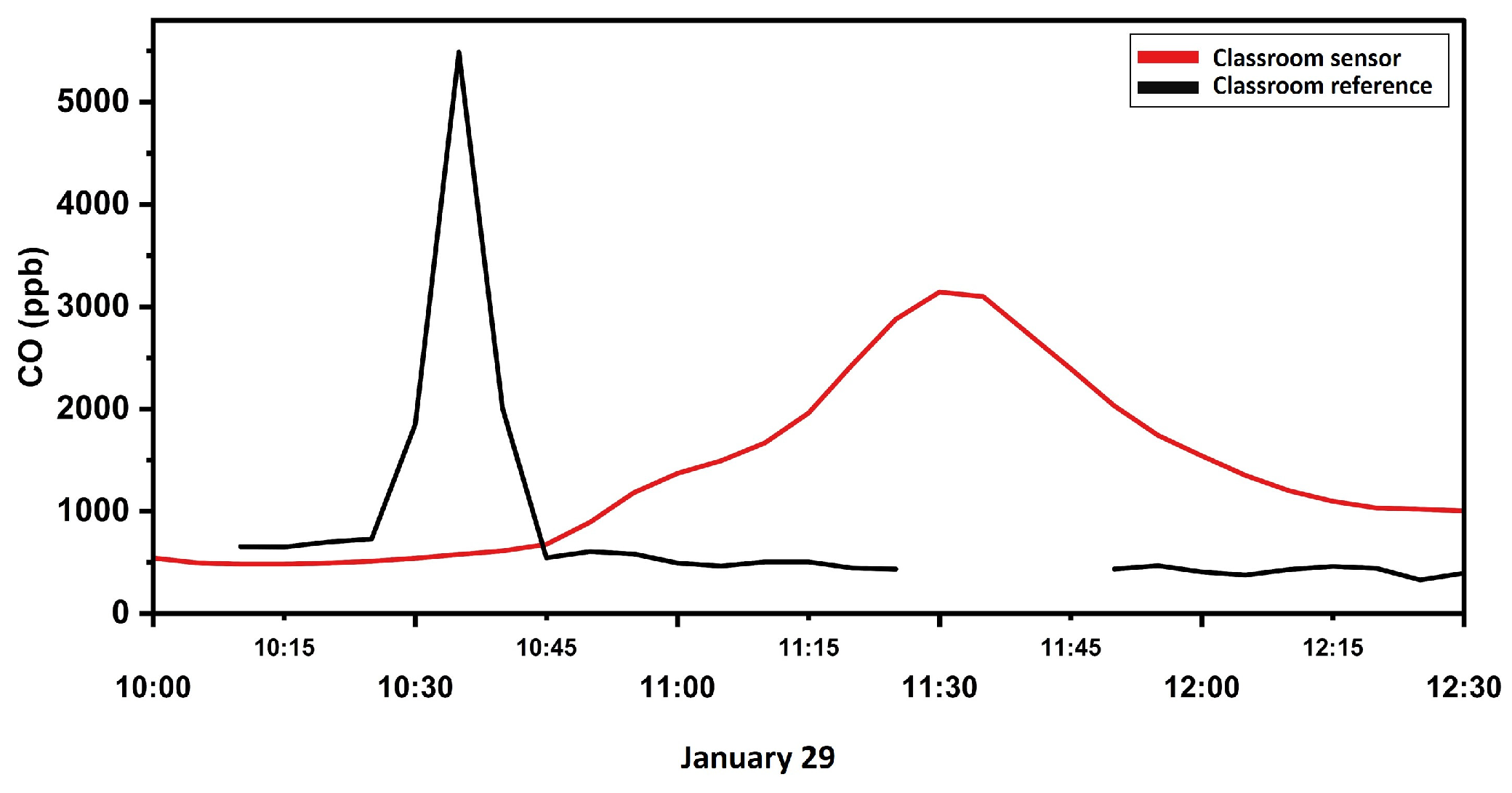

3.1.1. Carbon Monoxide

3.1.2. Nitric Oxide

3.1.3. Nitrogen Dioxide

3.1.4. Ozone

3.1.5. Carbon Dioxide

3.1.6. Fine Particle Matter

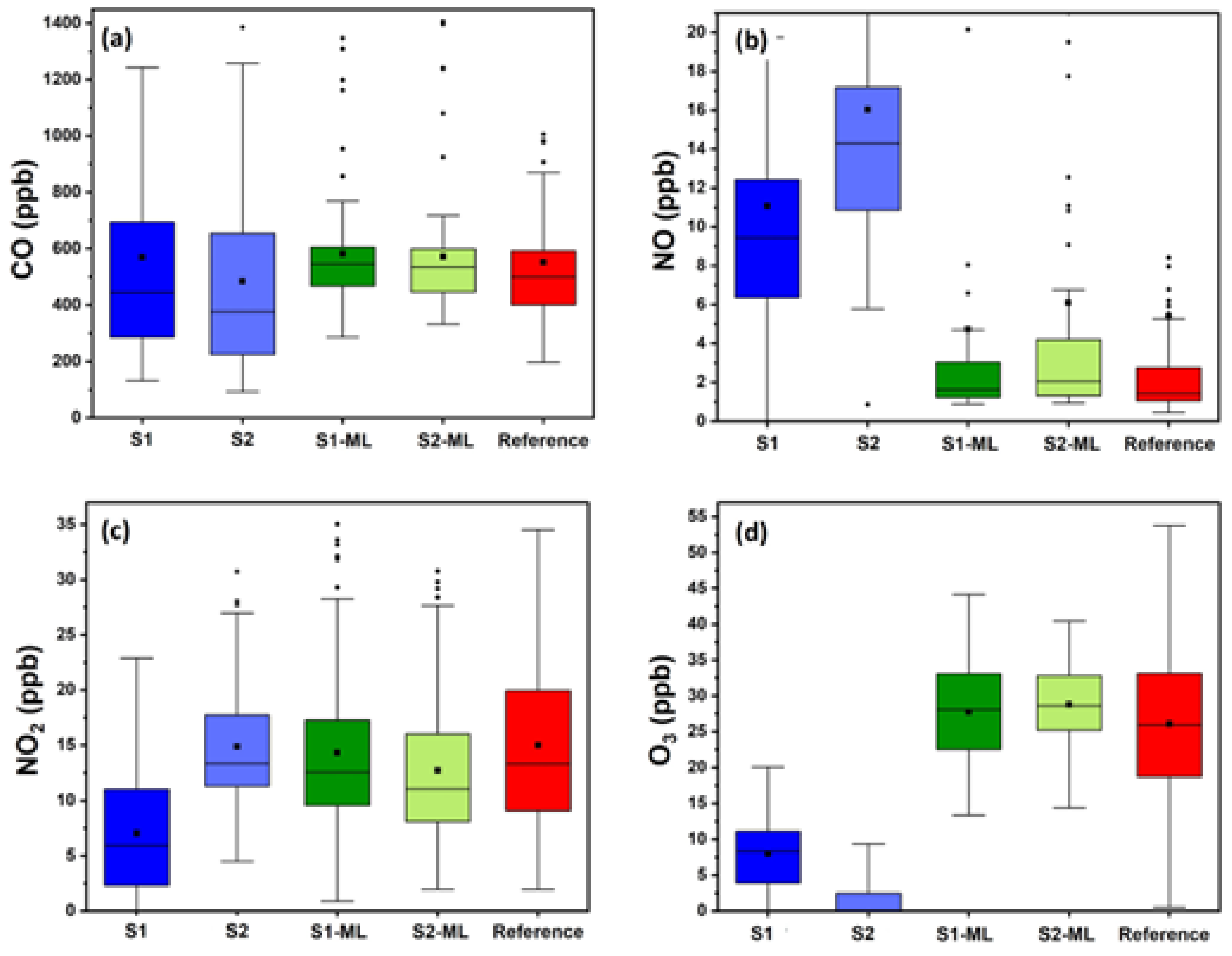

3.2. Performance of ML Calibration

3.3. Impact of ML on Inter-Unit Consistency

4. Discussion and Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ML | Machine Learning |
| WHO | World Health Organization |
| NO2 | Nitrogen Dioxide |
| PM | Particle matter |
| NO | Nitric Oxide |
| CO | Carbon Monoxide |
| O3 | Ozone |
| SO2 | Sulfur Dioxide |
| CO2 | Carbon Dioxide |
| LCS | Low-cost sensors |
| SMPS | Scanning Mobility Particle Sizer |
| DMA | Differential Mobility Analyzer |
| CPC | Condensation Particle Counter |
| WE | Working Electrode |
| WEe | Working Electrode electronic zero |
| AE | Auxiliary Electrode |
| AEe | Auxiliary Electrode electronic zero |
| WE0 | Working Electrode zero |
| AE0 | Auxiliary Electrode zero |
| S | Sensitivity |
| XGBoost | Extreme Gradient Boosting |
| RF | Random Forest |
| CatBoost | Categorical Boosting |
| LightGBM | Light Gradient Boosting Machine |
| KNN | K-Nearest Neighbors |
| NB | Naïve Bayes |
| MLR | Multiple Linear Regression |
| SVR | Support Vector Regression |
| MLP | Multilayer Perceptron |
| ME | Mean Error |
| nME | Normalized Mean Error |
| COD | Coefficient of Divergence |


| Target Pollutant | Sensor Model | Range | Manufacturer |
|---|---|---|---|
| Ozone | OX-B431 | 0–200 ppb | Alphasense |
| Nitrogen Dioxide | NO2-B43F | 0–200 ppb | Alphasense |
| Nitric Oxide | NO-B4 | 0–200 ppb | Alphasense |
| Carbon Monoxide | CO-B4 | 0–2000 ppb | Alphasense |
| Total VOCs | VOC-B4 | 0–10,000 ppb | Alphasense |
| Sulfur Dioxide | SO2-B4 | 0–200 ppb | Alphasense |
| Carbon Dioxide | COZIR-AH | 0–10,000 ppm | Gas Sensing Solutions |
| Fine Particle Matter (PM2.5) | PMS5003 | 0–500 μg m⁻³ | Plantower |
| Temperature | BME680 | −40–85 °C | Bosch Sensortec |
| Relative Humidity | BME680 | 0–100% | Bosch Sensortec |

| Sensor | Average Discrepancy | R² | COD |
|---|---|---|---|
| CO (Raw) | 117 ppb | 0.55 | 0.29 |
| CO (Calibrated) | 47 ppb | 0.91 | 0.05 |
| NO (Raw) | 5 ppb | 0.87 | 0.24 |
| NO (Calibrated) | 0.9 ppb | 0.9 | 0.19 |
| O3 (Raw) | 7.7 ppb | 0.7 | 0.32 |
| O3 (Calibrated) | 4 ppb | 0.89 | 0.1 |
| NO2 (Raw) | 7 ppb | 0.87 | 0.28 |
| NO2 (Calibrated) | 2.8 ppb | 0.84 | 0.19 |
| CO2 (Raw) | 53 ppm | 0.94 | 0.02 |
| PM2.5 (Raw) | 0.15 μg m⁻³ | 0.96 | 0.02 |
| Total VOCs (Raw) | 14 ppb | 0.97 | 0.06 |
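The inter-unit columns in the table above can be illustrated with a minimal sketch. It assumes that the coefficient of divergence follows its common definition, COD = sqrt((1/n) Σ ((x_i − y_i)/(x_i + y_i))²), and that "Average Discrepancy" is the mean absolute difference between the paired readings of two units; the latter is an assumption, and the function names are illustrative only.

```python
import math


def coefficient_of_divergence(unit_a, unit_b):
    """COD between two paired concentration series.

    0 means identical series; values approaching 1 mean strongly divergent series.
    Assumes strictly positive concentrations, so a + b is never zero.
    """
    if len(unit_a) != len(unit_b) or len(unit_a) == 0:
        raise ValueError("series must be non-empty and of equal length")
    total = 0.0
    for a, b in zip(unit_a, unit_b):
        total += ((a - b) / (a + b)) ** 2
    return math.sqrt(total / len(unit_a))


def average_discrepancy(unit_a, unit_b):
    """Mean absolute difference between paired readings (assumed definition)."""
    if len(unit_a) != len(unit_b) or len(unit_a) == 0:
        raise ValueError("series must be non-empty and of equal length")
    return sum(abs(a - b) for a, b in zip(unit_a, unit_b)) / len(unit_a)
```

Because each term of the COD is normalized by the sum of the two readings, it is unitless and can be compared across pollutants, whereas the average discrepancy keeps the units of the measurement.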

| Sensor | ME (5 min) | nME (5 min) | R² (5 min) | ME (15 min) | nME (15 min) | R² (15 min) | ME (60 min) | nME (60 min) | R² (60 min) | ME (8 h) | nME (8 h) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CO | 288, 263 | 51%, 47% | 0.1, 0.13 | 290, 266 | 52%, 48% | 0.1, 0.13 | 258, 238 | 48%, 44% | 0.1, 0.15 | 152, 160 | 28%, 29% |
| NO | 8.5, 12 | 139%, 197% | 0.79, 0.85 | 8.3, 11.8 | 136%, 193% | 0.78, 0.84 | 7.7, 11 | 126%, 180% | 0.75, 0.84 | 5.3, 10 | 113%, 164% |
| NO2 | 8.9, 4.7 | 61%, 28% | 0.65, 0.7 | 8.6, 3.5 | 61%, 30% | 0.67, 0.72 | 8, 3.2 | 61%, 32% | 0.7, 0.73 | 7.6, 2 | 58%, 21% |
| O3 | 18, 27 | 80%, 119% | 0.75, 0.66 | 18, 26.5 | 80%, 115% | 0.73, 0.61 | 18, 26 | 80%, 113% | 0.75, 0.63 | 17, 25 | 72%, 110% |
| CO2 | 150, 145 | 18%, 17% | 0.72, 0.73 | 160, 145 | 20%, 17% | 0.64, 0.71 | 136, 124 | 17%, 15% | 0.72, 0.74 | 92, 81 | 11%, 9% |
| PM2.5 | - | - | 0.77, 0.7 | - | - | 0.72 | - | - | 0.70 | - | - |
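The error metrics reported in these tables (ME, nME, R²) can be sketched as follows. The definitions here are assumptions: ME is taken as the mean absolute difference between sensor and reference, nME as ME divided by the mean reference concentration, and R² as the squared Pearson correlation. The function names are hypothetical.

```python
def mean_error(sensor, reference):
    """Mean absolute difference between sensor and reference (assumed definition of ME)."""
    if len(sensor) != len(reference) or len(reference) == 0:
        raise ValueError("series must be non-empty and of equal length")
    return sum(abs(s - r) for s, r in zip(sensor, reference)) / len(reference)


def normalized_mean_error(sensor, reference):
    """ME divided by the mean reference concentration (assumed definition of nME)."""
    mean_reference = sum(reference) / len(reference)
    return mean_error(sensor, reference) / mean_reference


def r_squared(sensor, reference):
    """Squared Pearson correlation between sensor and reference."""
    n = len(reference)
    mean_s = sum(sensor) / n
    mean_r = sum(reference) / n
    covariance = sum((s - mean_s) * (r - mean_r) for s, r in zip(sensor, reference))
    var_s = sum((s - mean_s) ** 2 for s in sensor)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    return covariance ** 2 / (var_s * var_r)
```

Under these definitions a sensor can correlate well with the reference (high R²) while still showing a large nME, for example when it tracks the reference but with a constant gain or offset error.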

| Sensor | ME (5 min) | nME (5 min) | R² (5 min) | ME (60 min) | nME (60 min) | R² (60 min) | ME (average day-to-day, 8 h) | nME (average day-to-day, 8 h) | R² (average day-to-day, 8 h) |
|---|---|---|---|---|---|---|---|---|---|
| CO | 193, 201 | 35%, 33% | 0.16 | 138, 141 | 25% | 0.25, 0.22 | 135 | 23% | - |
| NO | 3.4, 4.2 | 56%, 65% | 0.72, 0.83 | 3, 3.5 | 49%, 57% | 0.9, 0.87 | 2, 3 | 33%, 49% | - |
| NO2 | 4 | 33% | 0.6 | 3.2, 3.4 | 30%, 34% | 0.71, 0.69 | 3 | 30% | - |
| O3 | 4, 5.5 | 15%, 21% | 0.66, 0.65 | 4, 5.5 | 15%, 21% | 0.75, 0.63 | 4, 5 | 16%, 19% | - |
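The tables report metrics at several averaging periods (5 min, 15 min, 60 min, 8 h). A minimal sketch of how raw readings could be averaged into such periods is given below. It assumes non-overlapping fixed windows aligned to multiples of the window length and timestamps expressed in seconds; both the windowing scheme and the function name are assumptions made for illustration.

```python
from collections import defaultdict


def block_average(samples, window_seconds):
    """Average (timestamp_seconds, value) samples into fixed, non-overlapping windows.

    Returns a list of (window_start_seconds, mean_value) sorted by window start.
    Windows with no samples are simply absent from the result.
    """
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    buckets = defaultdict(list)
    for timestamp, value in samples:
        window_start = int(timestamp // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return [(start, sum(values) / len(values)) for start, values in sorted(buckets.items())]
```

For example, calling `block_average(samples, 300)` yields 5 min averages and `block_average(samples, 3600)` yields 60 min averages; the same error metrics can then be evaluated on each averaged series.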

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Apostolopoulos, I.D.; Dovrou, E.; Androulakis, S.; Seitanidi, K.; Georgopoulou, M.P.; Matrali, A.; Argyropoulou, G.; Kaltsonoudis, C.; Fouskas, G.; Pandis, S.N. Monitoring of Indoor Air Quality in a Classroom Combining a Low-Cost Sensor System and Machine Learning. Chemosensors 2025, 13, 148. https://doi.org/10.3390/chemosensors13040148
