Deep Learning-Based Knee MRI Classification for Common Peroneal Nerve Palsy with Foot Drop

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Participants

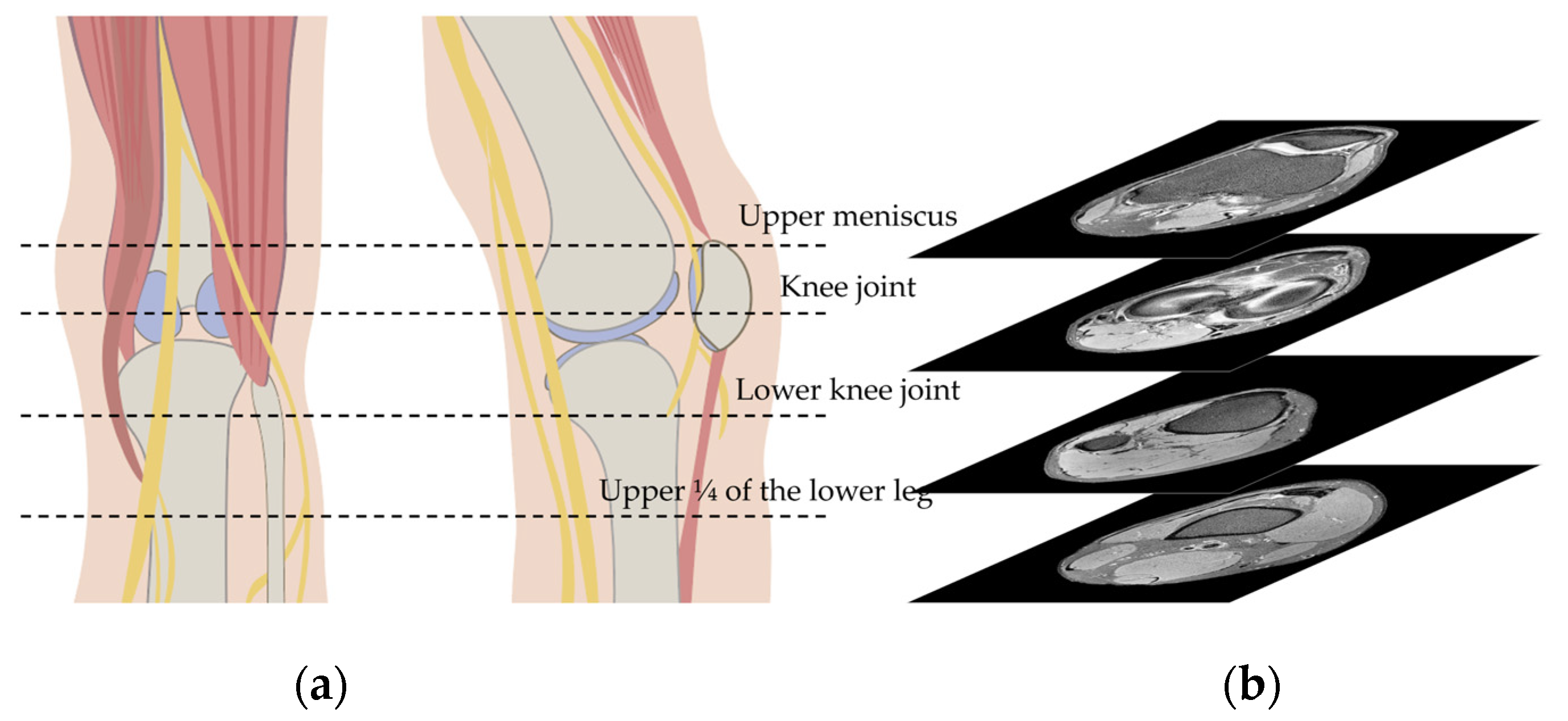

2.2. Knee MRI

2.3. Algorithm Training

2.4. Saliency Maps

2.5. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Characteristic | Case Group (n = 42) | Control Group (n = 107) | p Value |
|---|---|---|---|
| Age, median (IQR ¹) | 51 (42–54) | 53 (43–59) | 0.779 ² |
| Gender, number (%) | | | 0.927 ³ |
| Male | 22 (52.4) | 58 (54.2) | |
| Female | 20 (47.6) | 49 (45.8) | |
| Lesion location | | | 0.860 ³ |
| Right | 25 (59.5) | 62 (57.9) | |
| Left | 17 (40.5) | 45 (42.1) | |

| Dataset | Case Group | Control Group | Total |
|---|---|---|---|
| Train | 769 | 1065 | 1934 |
| Validation | 89 | 128 | 217 |
| Test | 87 | 148 | 235 |
| Total | 945 | 1341 | 2386 |

| Model | TP | FP | FN | TN | Total | Precision | Recall | Accuracy | F1 |
|---|---|---|---|---|---|---|---|---|---|
| EfficientNet-B5 | 77 | 18 | 10 | 130 | 235 | 0.811 | 0.885 | 0.881 | 0.846 |
| ResNet152 | 67 | 16 | 20 | 132 | 235 | 0.807 | 0.770 | 0.847 | 0.788 |
| VGG19 | 46 | 19 | 41 | 129 | 235 | 0.708 | 0.529 | 0.745 | 0.605 |
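
The precision, recall, accuracy, and F1 values in the table above follow from the confusion-matrix counts (TP, FP, FN, TN) under the standard definitions. The Python sketch below recomputes them from the reported test-set counts. The function name `classification_metrics` and the dictionary layout are illustrative assumptions and are not taken from the authors' code.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return standard binary-classification metrics from confusion counts."""
    total = tp + fp + fn + tn
    return {
        "total": total,
        "precision": tp / (tp + fp),        # share of predicted positives that are correct
        "recall": tp / (tp + fn),           # share of actual positives that are detected
        "accuracy": (tp + tn) / total,      # share of all images classified correctly
        "f1": 2 * tp / (2 * tp + fp + fn),  # harmonic mean of precision and recall
    }


# Test-set confusion counts (TP, FP, FN, TN) as reported in the table.
counts = {
    "EfficientNet-B5": (77, 18, 10, 130),
    "ResNet152": (67, 16, 20, 132),
    "VGG19": (46, 19, 41, 129),
}

results = {name: classification_metrics(*c) for name, c in counts.items()}

for name, m in results.items():
    print(
        f"{name}: precision={m['precision']:.3f} recall={m['recall']:.3f} "
        f"accuracy={m['accuracy']:.3f} F1={m['f1']:.3f}"
    )
```

Rounded to three decimals, the recomputed values match the reported ones for all three models, so each row of the table is internally consistent.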

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chung, K.M.; Yu, H.; Kim, J.-H.; Lee, J.J.; Sohn, J.-H.; Lee, S.-H.; Sung, J.H.; Han, S.-W.; Yang, J.S.; Kim, C. Deep Learning-Based Knee MRI Classification for Common Peroneal Nerve Palsy with Foot Drop. Biomedicines 2023, 11, 3171. https://doi.org/10.3390/biomedicines11123171
