Abstract

Sleep staging is of critical significance to the diagnosis of sleep disorders, and the electroencephalogram (EEG), which is used for monitoring brain activity, is commonly employed in sleep staging. In this paper, we propose a novel method based on Siamese networks for improving the performance of sleep staging models that use single-channel EEG. Our proposed method consists of a Siamese network architecture and a redesigned loss with distance metrics. Two encoders are used in the Siamese network to generate latent features of the EEG epochs, and the contrastive loss, which is also a distance metric, is used to measure the similarity or difference between EEG epochs from the same or different sleep stages. We evaluated our method on single-channel EEGs from different channels (Fpz-Cz and F4-EOG (Left)) from two public datasets, SleepEDF and MASS-SS3, and achieved an overall accuracy, macro-averaged F1-score (MF1) and Cohen’s kappa coefficient of 85.2%, 78.3% and 0.79 on SleepEDF and 87.2%, 82.1% and 0.81 on MASS-SS3. The results show that our method can significantly improve the performance of sleep staging models and outperform state-of-the-art sleep staging methods. The performance of our method also confirms that the features captured by Siamese networks and distance metrics are useful for sleep staging.

1. Introduction

The importance of sleep to the physical and mental health of humans cannot be overstated [1]. A growing number of people suffer from sleep disorders such as insomnia, sleep apnea syndrome and narcolepsy [2]. In order to measure sleep quality and evaluate sleep-related diseases, sleep monitoring and analysis are becoming increasingly relevant [3].

The quality of sleep is evaluated by sleep experts using polysomnograms (PSGs), which include electroencephalograms (EEGs), electrooculograms (EOGs), electromyograms (EMGs), and electrocardiograms (ECGs). To evaluate sleep quality, sleep staging segments the PSG into 30-second epochs and then categorizes the epochs into sleep stages according to a sleep scoring standard, such as that of the American Academy of Sleep Medicine (AASM) [4] or that of Rechtschaffen and Kales (R&K) [5]. The sleep stages are divided into awake (W), non-rapid eye movement (NREM) and rapid eye movement (REM). The NREM stage is further divided into stages S1, S2, S3 and S4 according to R&K and stages N1, N2 and N3 according to AASM. For a long time, sleep staging relied on sleep experts to perform this labor-intensive and time-consuming task manually [6].

Many studies have focused on using machine learning models, such as support vector machines (SVMs) [7] and random forests (RFs) [8], to automatically classify EEG epochs into their corresponding stages. These methods require carefully designed, non-redundant and representative features, which are extracted manually using algorithms such as empirical mode decomposition [9] and wavelet transform [10]. The features, instead of the raw EEG signals, are then sent to the machine learning models for classification. However, since the features are designed based on specific datasets, these methods may not generalize to different sleep datasets.

With the recent advancement of deep learning, various researchers have attempted to develop deep learning techniques based on EEG signals to aid in classifying sleep stages automatically. The existing deep learning models for sleep staging are primarily composed of convolutional neural networks (CNNs) and recurrent neural networks (RNNs) or attention mechanisms [11]. The general approach is to use CNNs to extract time and frequency information and other time-invariant features, followed by RNNs to learn temporal dependencies such as stage transition rules. The authors of [12] constructed a model using two CNNs with different filter sizes and two layers of bidirectional LSTMs. The architecture proposed in [13] begins with multi-resolution CNNs, followed by a temporal context encoder with multi-head attention.

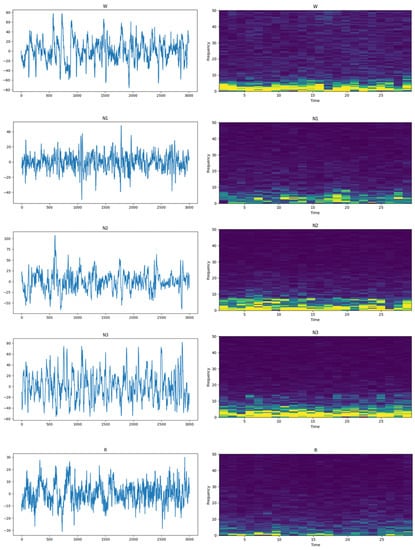

The shortcomings of manually designed features can be effectively overcome by deep learning methods. However, we find that Siamese networks can extract patterns representing differences or similarities between two epochs that are overlooked by existing deep learning models. Figure 1 shows EEG epochs in the time domain and their short-time Fourier transforms (STFTs). It can be seen from the figure that EEG epochs from different sleep stages differ significantly in both the time domain and the frequency domain. To extract these features, we designed a new architecture. A Siamese network has two identical branches that share parameters and take a pair of inputs, here a pair of sleep epochs; such networks have been widely and successfully used in natural language processing (NLP) [14] and object detection [15]. In the field of sleep staging, Siamese networks can encode similarity and dissimilarity patterns between two epochs. The features extracted by Siamese networks are then sent to sleep staging models to improve classification performance. To achieve better performance in sleep staging, we propose a method based on Siamese networks, contrastive loss, Euclidean distance and max-sliced Wasserstein distance [16,17] for automatic sleep staging using single-channel EEG.

Figure 1.

EEG epochs in the time and frequency domain. From top to bottom are stages W, N1, N2, N3 and REM. EEG epochs in the time domain are displayed on the left and the short-time Fourier transform (STFT) of EEG epochs is shown on the right. The yellow color represents higher amplitudes and the blue color indicates lower amplitudes.

In this research, we provide a novel approach to enhance the performance of sleep staging models. The primary contributions of our study are as follows:

- (1) We propose a Siamese network architecture and implement Siamese convolutional neural networks (Siamese CNNs) and Siamese autoencoders (Siamese AEs). The Siamese network architecture is used to extract features of similarity between two different epochs of EEG.

- (2) To train sleep staging models with a Siamese network, we developed a new loss function. The new loss function incorporates metrics for traditional sleep staging models such as cross entropy loss and distance metrics for measuring the distance between two distributions.

- (3) We evaluated our model on two public datasets, SleepEDF and MASS-SS3, and compared our results with baseline sleep staging models and some SOTA sleep staging methods. The results show that our encoder can greatly improve the accuracy of the baseline model and outperform the state-of-the-art models in sleep staging.

The rest of this paper is organized as follows. Section 2 shows the datasets and explains the methods by illustrating the architecture of Siamese AEs and Siamese CNNs, the loss function and the training process of our model. Section 3 shows the experimental results and the comparison against the baseline models. Section 4 draws the conclusion of our work.

2. Materials and Methods

2.1. Datasets

We used one EEG channel from each of two public datasets, the Montreal Archive of Sleep Studies (MASS) [18] and SleepEDF [19].

SleepEDF was obtained from PhysioBank and contains two subsets, Sleep Cassette (SC) and Sleep Telemetry (ST). The SC subset came from a study of the effects of age on sleep in healthy participants, while the ST subset came from research on the effects of temazepam on sleep. Each PSG in this dataset contains two EEG channels (Fpz-Cz and Pz-Oz) with a sampling rate of 100 Hz, one chin EMG channel, one EOG channel and one oro-nasal respiration signal. Here, Fpz-Cz and Pz-Oz denote the positions of the electrodes. Each 30-second epoch of the recordings was labeled by sleep experts based on the R&K standard. In our experiments, we used the Fpz-Cz EEG channel in the SC subset to train and evaluate our model. Following previous work [20,21,22,23], we merged stages S3 and S4 into N3 and excluded epochs labeled MOVEMENT and UNKNOWN.
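The label preparation described above can be sketched in a few lines of Python. This is a minimal illustration, not the preprocessing code used in our experiments: the annotation strings (`"S1"`, `"MOVEMENT"`, and so on) and the function name `relabel_epochs` are assumptions chosen for readability and may differ from the strings stored in the SleepEDF annotation files.

```python
# Hypothetical R&K annotation strings mapped to the five classes used for training.
RK_TO_FIVE_CLASS = {
    "W": "W",
    "S1": "N1",
    "S2": "N2",
    "S3": "N3",
    "S4": "N3",  # S3 and S4 are merged into a single N3 class
    "REM": "REM",
}
EXCLUDED = {"MOVEMENT", "UNKNOWN"}


def relabel_epochs(epochs, labels):
    """Drop excluded epochs and map the remaining R&K labels to five classes."""
    kept_epochs, kept_labels = [], []
    for epoch, label in zip(epochs, labels):
        if label in EXCLUDED:
            continue  # movement and unscored epochs are not used
        kept_epochs.append(epoch)
        kept_labels.append(RK_TO_FIVE_CLASS[label])
    return kept_epochs, kept_labels
```

Here each element of `epochs` stands for one 30-second segment (3000 samples at 100 Hz); any container type works, since the function only filters and relabels.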

The MASS database has five subsets, SS1–SS5. The subsets were arranged in accordance with their study and acquisition protocols, and the subset we used was SS3. Compared with SleepEDF, MASS-SS3 contains many more EEG channels. Each recording in SS3 is composed of 20 EEG channels, 3 EMG channels, 2 EOG channels and one ECG channel. The EEG and EOG recordings share a sampling rate of 256 Hz. The EEG and EOG recordings were pre-processed with a 60 Hz notch filter and then passed through band-pass filters of 0.30–100 Hz and 0.10–100 Hz, respectively. The recordings were classified by sleep specialists into one of five stages, in accordance with the AASM standard. We selected the F4-EOG (Left) channel to train and evaluate our model. Here, F4-EOG (Left) indicates that the electrodes are placed close to the outer lower canthus of the left eye and the hairline. Furthermore, in both the SleepEDF and MASS datasets, there are long periods of stage W in the recordings. Too many or too few W epochs in the training set may lead to a class imbalance problem. To avoid this issue and emphasize sleep data rather than wake data, we kept only 30 min of wake periods before and after the sleep period.
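The wake trimming described above can be illustrated as follows. This is a sketch under stated assumptions: the sleep period is taken to run from the first to the last non-wake epoch, wake epochs inside that period are kept, and the function name `trim_wake` and its parameters are hypothetical.

```python
def trim_wake(labels, epoch_seconds=30, keep_minutes=30, wake_label="W"):
    """Return (start, stop) epoch indices that keep at most `keep_minutes`
    of wake before the first and after the last non-wake epoch."""
    sleep_idx = [i for i, lab in enumerate(labels) if lab != wake_label]
    if not sleep_idx:
        return 0, 0  # no sleep in the recording: nothing to keep
    keep_epochs = keep_minutes * 60 // epoch_seconds  # 60 epochs for 30 min
    start = max(0, sleep_idx[0] - keep_epochs)
    stop = min(len(labels), sleep_idx[-1] + 1 + keep_epochs)
    return start, stop
```

For a recording with 100 wake epochs, 3 sleep epochs and another 100 wake epochs, the function returns `(40, 163)`, so `labels[40:163]` keeps 60 wake epochs on each side of the sleep period.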

Table 1 shows the number of EEG epochs of each sleep stage of the two datasets.

Table 1.

The number of samples of the two datasets involved in our work.

In SleepEDF, there were 20 participants in total, with 10 males and 10 females. The ages of participants ranged from 25 to 34, with a mean of 28.7 and a standard deviation of 2.9. There were more subjects in MASS-SS3, containing 28 males and 34 females, and a wider age range of 20 to 69. The mean and standard deviation of the ages were 42.5 and 18.9, respectively.

2.2. Siamese Networks

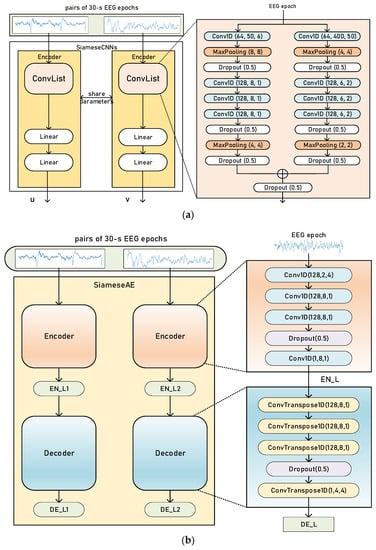

We proposed two Siamese networks with different architectures. One was Siamese CNNs; the other was Siamese AEs. Figure 2a,b shows the architecture of Siamese CNNs and Siamese AEs respectively.

Figure 2.

An overview of Siamese CNNs (a) and Siamese AEs (b).

For Siamese CNNs, we employed two-branched CNNs to encode the input pair of EEG epochs, as shown in Figure 2a. According to previous studies [12,24], convolution with small filter sizes is better for extracting temporal information. Convolution with a larger filter size is better for extracting frequency information from EEG. Moreover, different filter sizes can also capture features from various frequency bands [13]. Therefore, the filter (kernel) sizes of the two CNN branches were different.

A pair of EEG signals with the same or different labels was the input to our Siamese CNNs. The details of the EEG data pairs are further explained in Section 2.4. Each Conv1D block is composed of a 1D convolution, batch normalization [25] and rectified linear unit (ReLU) activation. The kernel size, number of filters and stride size are shown in Figure 2. The MaxPooling block shows the pooling size and the stride of the pooling layer, which downsamples the input with a max operation. The dropout layer sets neurons in the neural network to zero with a probability of 0.5.

For Siamese AEs, the encoder consists of convolutional layers and the decoder is formed of transposed convolutional layers. Transposed convolution, by mapping smaller feature maps into larger ones, is an upsampling technique, rather than the reverse of convolution. The encoder creates a latent representation EN_L of the input EEG, which is the output of the encoder. The decoder reconstructs the input EEG. DE_L is the output of the decoder.
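To make the upsampling role of transposed convolution concrete, the following sketch computes the output length of a 1D convolution and of a 1D transposed convolution (dilation 1). The kernel size, stride and output padding used in the example are hypothetical values chosen for illustration and are not the parameters shown in Figure 2.

```python
def conv1d_out_len(length, kernel, stride=1, padding=0):
    """Output length of a 1D convolution with dilation 1."""
    return (length + 2 * padding - kernel) // stride + 1


def conv_transpose1d_out_len(length, kernel, stride=1, padding=0, output_padding=0):
    """Output length of a 1D transposed convolution with dilation 1."""
    return (length - 1) * stride - 2 * padding + kernel + output_padding


epoch_len = 30 * 100  # one 30 s epoch sampled at 100 Hz: 3000 samples

# Hypothetical encoder layer: kernel 50, stride 6 shortens the epoch.
encoded_len = conv1d_out_len(epoch_len, kernel=50, stride=6)

# The mirrored transposed convolution maps the short feature map back up.
decoded_len = conv_transpose1d_out_len(encoded_len, kernel=50, stride=6)

# The strided convolution discarded a remainder of 4 samples; output padding
# restores the original length so the reconstruction can be compared to the input.
restored_len = conv_transpose1d_out_len(encoded_len, kernel=50, stride=6,
                                        output_padding=4)
```

With these values the encoder produces 492 time steps, the plain transposed convolution returns 2996 samples, and an output padding of 4 recovers the full 3000 samples. The transposed convolution therefore restores the shape of the input, not its values, which is why it is an upsampling step rather than an inverse of convolution.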

2.3. Loss Functions for Measuring Similarity

We propose a novel loss function to measure the performance of the classification model as well as the Siamese network. Because the Siamese network consists of two neural networks with shared parameters and architecture, which map a pair of inputs into latent features, it uses a distance metric to measure the similarity of the pair; the resulting loss is called the contrastive loss [26]. The distance should be as small as possible if the input pair is obtained from the same sleep stage. In contrast, the distance should be as large as possible when the input pair comes from different sleep stages.

We define the distance function $D(u, v)$ between the inputs $u$ and $v$ as the Euclidean distance between the outputs of a branch $G$ of the Siamese network, shown as:

$$D(u, v) = \lVert G(u) - G(v) \rVert_2 \tag{1}$$

The contrastive loss of the Siamese network is shown in Equation (2):

$$L_{con} = \sum_{i=1}^{P} L\left(y^{(i)}, u^{(i)}, v^{(i)}\right) \tag{2}$$

The contrastive loss is calculated by adding up the distance metric of the $P$ EEG data pairs, where $\left(y^{(i)}, u^{(i)}, v^{(i)}\right)$ is the i-th data pair. The distance metric of a single data pair is shown in Equation (3):

$$L(y, u, v) = \frac{1}{2}\, y\, D(u, v)^2 + \frac{1}{2}\,(1 - y)\, \max\left(0,\; m - D(u, v)\right)^2 \tag{3}$$

In Equation (3), $y$ is the label of the EEG data pair, which represents whether the two EEG epochs belong to the same sleep stage; if they do, $y$ equals 1, and if not, $y$ equals 0. The hyperparameter $m$ ($m > 0$) is a threshold to measure the dissimilarity of the data pair, which is determined by experiments.
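The behavior of the contrastive loss can be checked with a small numerical sketch. It assumes the common margin-based form with a factor of 1/2 on both terms, and it operates directly on latent feature vectors given as lists of floats; the function names are illustrative and this is not the training code used in our experiments.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pair_loss(feat_u, feat_v, y, margin):
    """Loss of one data pair: y = 1 for the same stage, y = 0 otherwise."""
    d = euclidean_distance(feat_u, feat_v)
    if y == 1:
        return 0.5 * d ** 2  # pull same-stage pairs together
    return 0.5 * max(0.0, margin - d) ** 2  # push different-stage pairs apart


def contrastive_loss(pairs, margin):
    """Sum of the pair losses over an iterable of (feat_u, feat_v, y) triples."""
    return sum(pair_loss(u, v, y, margin) for u, v, y in pairs)
```

A same-stage pair is penalized in proportion to its squared distance, whereas a different-stage pair contributes nothing once its distance exceeds the threshold m. This is why the value of m has to be matched to the scale of the latent features.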

The loss function of our baseline sleep staging model is the multi-class cross-entropy (CE), which is defined in Equation (4):

$$L_{CE} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{k=1}^{K} y_{i,k} \log\left(p_{i,k}\right) \tag{4}$$

Here, $N$ is the number of EEG epochs and $K$ is the number of classes. $y_{i,k}$ is the real label of the i-th EEG epoch for class $k$. Similarly, $p_{i,k}$ is the predicted probability of the i-th EEG epoch for class $k$.
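As a worked example of the cross-entropy, the sketch below evaluates it for one-hot labels and predicted probabilities given as nested lists. Averaging over the N epochs and the small constant `eps` that guards the logarithm are assumptions of this sketch.

```python
import math


def cross_entropy(true_one_hot, predicted_probs, eps=1e-12):
    """Multi-class cross-entropy averaged over the epochs."""
    n_epochs = len(true_one_hot)
    total = 0.0
    for y_i, p_i in zip(true_one_hot, predicted_probs):
        for y_ik, p_ik in zip(y_i, p_i):
            total -= y_ik * math.log(p_ik + eps)  # only the true class contributes
    return total / n_epochs
```

For a single epoch whose true stage receives a predicted probability of 0.5, the loss is log 2, about 0.693; it approaches zero as the predicted probability of the true stage approaches 1.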

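For Siamese CNNs, the loss is $L_{CNN} = L_{CE} + L_{con}$, where $L_{CE}$ is the cross-entropy loss, $L_{con}$ is the contrastive loss and $(u, v)$ are the input pair of EEG epochs. Considering the encoding and reconstructing characteristics of autoencoders, the loss of our Siamese AEs has an additional component, compared with Siamese CNNs, which is the distance metric between the input EEG epoch and the output of the decoder. This distance metric is composed of the max-sliced Wasserstein distance (max-W distance) and the Euclidean distance. The max-W distance is calculated from Equation (5):

$$\text{max-}W(u, v) = \max_{\omega \in \Omega} W\left(u^{\omega}, v^{\omega}\right) \tag{5}$$

Here, $\Omega$ represents the set containing all directions on the unit sphere, $u$ and $v$ are the input distributions, $\omega$ is an element of $\Omega$, and $W\left(u^{\omega}, v^{\omega}\right)$ is the Wasserstein distance between the projections of $u$ and $v$ onto $\omega$. So, the distance metric we propose is $d(u, v) = \text{max-}W(u, v) + E(u, v)$, where $E(u, v)$ represents the Euclidean distance between $u$ and $v$. The loss of our Siamese AEs is shown in Equation (6).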

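$$L_{AE} = L_{CE} + L_{con}\left(EN\_L_{u}, EN\_L_{v}\right) + d\left(u, DE\_L\right) \tag{6}$$

In Equation (6), $L_{CE}$ is the cross-entropy loss, $L_{con}$ is the contrastive loss, $d$ is the distance metric composed of the max-W distance and the Euclidean distance, the EEG epoch $u$ is the input of the Siamese AEs, DE_L is the output of the decoder, and $EN\_L_{u}$ and $EN\_L_{v}$ are the latent features of the input EEG data pair encoded by the encoder.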
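The max-sliced Wasserstein distance can be approximated numerically as in the sketch below. Several choices here are assumptions rather than details of our implementation: the two distributions are represented as equally sized sets of d-dimensional sample points, the one-dimensional Wasserstein-1 distance is used, and the maximum over directions is approximated by random search over unit vectors, whereas [16] obtains the direction by optimization.

```python
import math
import random


def wasserstein_1d(xs, ys):
    """Wasserstein-1 distance between two 1-D samples of equal size."""
    xs, ys = sorted(xs), sorted(ys)
    return sum(abs(a - b) for a, b in zip(xs, ys)) / len(xs)


def random_direction(dim, rng):
    """Draw a direction uniformly from the unit sphere in `dim` dimensions."""
    vec = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(c * c for c in vec))
    return [c / norm for c in vec]


def project(points, direction):
    """Project each point onto the given direction."""
    return [sum(p * w for p, w in zip(point, direction)) for point in points]


def max_sliced_wasserstein(u, v, n_directions=256, seed=0):
    """Largest 1-D Wasserstein distance found over random projection directions."""
    rng = random.Random(seed)
    dim = len(u[0])
    best = 0.0
    for _ in range(n_directions):
        omega = random_direction(dim, rng)
        best = max(best, wasserstein_1d(project(u, omega), project(v, omega)))
    return best
```

When one point set is a translated copy of the other, every projection shifts by the inner product of the translation with the direction, so the estimate approaches the length of the translation from below as more directions are sampled.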

2.4. Training and Evaluation Process

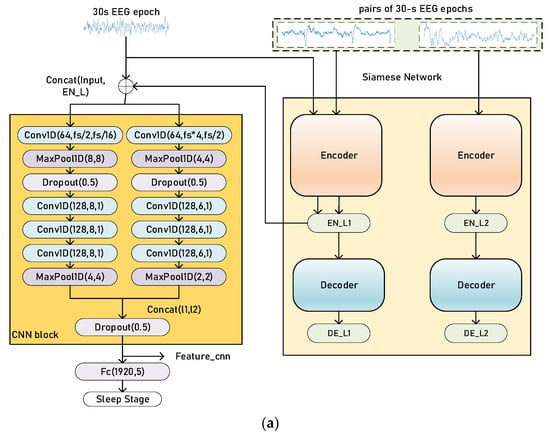

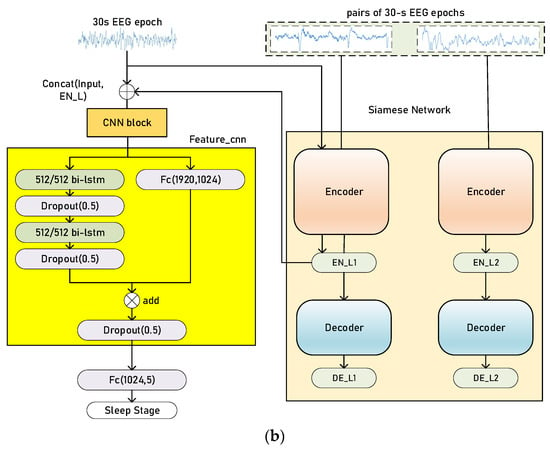

There are two stages in our training process: pretraining and finetuning. The baseline sleep staging model is formed of a set of CNNs and a sequence residual learning block [27] with bidirectional long short-term memory networks (bi-LSTMs), which are an extension of long short-term memory (LSTM) [28,29]. The whole model (sleep staging model and Siamese network) is shown in Figure 3.

Figure 3.

Illustration of the training process of our proposed method, pretraining (a) and finetuning (b). The Siamese network architecture is Siamese AEs. The CNN block, in orange (a), extracts time-invariant features, and the sequence residual block, in yellow (b), extracts temporal information from a sequence of EEG epochs. Concat (l1, l2) refers to the concatenation of two vectors and fs is the sampling rate of EEG signals. The parameters in the Conv1d blocks and MaxPooling blocks are similar to Figure 2.

The input of the Siamese networks is a pair of EEG epochs. The EEG epoch data pairs are generated by randomly selecting pairs of epochs with the same or different labels from the training set. The label of a data pair depends on the labels of its two EEG epochs. If they belong to the same sleep stage, the label of the data pair is 1; otherwise, the label is 0.
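The pair generation described above can be sketched as follows. The sampling scheme is an assumption: pairs are drawn uniformly at random without balancing same-stage and different-stage pairs, and the function name `make_pairs` is hypothetical.

```python
import random


def make_pairs(epochs, labels, n_pairs, seed=0):
    """Randomly draw pairs of epochs and label each pair 1 (same stage) or 0."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_pairs):
        i, j = rng.sample(range(len(epochs)), 2)  # two distinct epochs
        pair_label = 1 if labels[i] == labels[j] else 0
        pairs.append((epochs[i], epochs[j], pair_label))
    return pairs
```

Because stage N1 is rare, uniform sampling yields few same-stage N1 pairs; a stratified sampler would be a natural variation, although it is not described in this paper.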

During pretraining, as shown in Figure 3, a pair of EEG epochs is first fed into the Siamese AEs. The encoder of our Siamese AEs maps the inputs into latent features EN_L. Then, each 30 s EEG epoch is encoded by one branch of the Siamese AEs, producing a latent representation that is concatenated with the original EEG epoch. The concatenated data are then fed into the sleep staging model. In the pretraining stage, the sleep staging model consists of the CNN block only, and the output of the decoder, DE_L, is used for the calculation of the loss function. Similarly, for Siamese CNNs, the latent feature vector to be concatenated is the output of the encoder. Since the loss function of Siamese CNNs does not involve reconstructing the original input, Siamese CNNs have no decoders.

The sleep staging model used during finetuning is based on the pretrained one. The output of the CNN block, feature_CNN, is fed into a sequence residual learning block. The left branch of the sequence residual block is formed of bi-LSTMs with a hidden size of 512/512 and the right branch is a fully connected layer. The parameters of the Siamese network, the CNN block and the sequence residual block are updated simultaneously with different learning rates. The learning rate of the sequence residual block is larger than that of the other blocks, since the latter have already been pretrained in the previous stage.
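One way to express the different learning rates is through per-parameter groups. The sketch below builds such groups as plain Python dictionaries, a layout that matches the per-parameter options accepted by PyTorch optimizers such as `torch.optim.Adam`. The function name and argument names are hypothetical; the default rates are the finetuning values reported in Section 3.1.

```python
def build_param_groups(siamese_params, cnn_params, seq_params,
                       pretrained_lr=1e-6, seq_lr=1e-3):
    """Group parameters so that pretrained blocks use a smaller learning rate."""
    return [
        {"params": list(siamese_params), "lr": pretrained_lr},  # Siamese network
        {"params": list(cnn_params), "lr": pretrained_lr},      # CNN block
        {"params": list(seq_params), "lr": seq_lr},             # sequence residual block
    ]


# With PyTorch installed, the groups could be passed to an optimizer, e.g.:
#   optimizer = torch.optim.Adam(build_param_groups(
#       siamese.parameters(), cnn.parameters(), seq_block.parameters()))
# where siamese, cnn and seq_block are hypothetical torch.nn.Module objects.
```

Keeping all three blocks in one optimizer lets them be updated simultaneously while the small rate on the pretrained blocks limits how far they drift from their pretrained weights.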

During testing and evaluation, we used one branch of the Siamese network, since the parameters of the two branches are shared. We applied k-fold cross-validation to evaluate our Siamese network. For the two datasets involved in our experiments, k equals 20 and 31 for SleepEDF and MASS-SS3, respectively.
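The fold counts above are consistent with a subject-wise split: 20 folds for the 20 SleepEDF participants leave one subject out per fold, and 31 folds for the 62 MASS-SS3 participants hold out two subjects per fold. The sketch below illustrates such a split; splitting by subject and assigning subjects to folds in sorted order are assumptions of this sketch.

```python
def subject_wise_splits(subject_ids, k):
    """Yield (train_subjects, test_subjects) for k subject-wise folds."""
    subjects = sorted(set(subject_ids))
    folds = [subjects[i::k] for i in range(k)]  # fold i takes every k-th subject
    for test_subjects in folds:
        held_out = set(test_subjects)
        train_subjects = [s for s in subjects if s not in held_out]
        yield train_subjects, test_subjects
```

Splitting by subject rather than by epoch keeps all epochs of a held-out participant out of the training set, so the reported metrics reflect performance on unseen subjects.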

3. Results

3.1. Experimental Setup and Evaluation Metrics

We implemented our model with the PyTorch 1.10.0 toolkit and trained it on a GeForce RTX 3090 GPU. We used the Adam optimizer [30] with an initial learning rate of 10⁻³ and a weight decay of 10⁻³ in the first training stage. The Siamese network and the CNN block of the classification model were pretrained for 100 epochs. Then, in the finetuning stage, the model was trained for 200 epochs and the learning rate of the pretrained layers was set to 10⁻⁶. The learning rate of the sequence residual learning block was the same as in pretraining, 10⁻³. The model was trained with a batch size of 32 during pretraining. In the finetuning stage, the batch size was set to 16 and the sequence length to 25. The threshold m in the contrastive loss function was set to 7400.

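We evaluate the performance of our method using precision (PR), recall (RE), per-class F1-score (F1), overall accuracy (ACC), macro-averaged F1-score (MF1) and Cohen’s kappa coefficient (κ) [31]. The per-class metrics are calculated as follows:

$$PR_c = \frac{TP_c}{TP_c + FP_c}, \qquad RE_c = \frac{TP_c}{TP_c + FN_c}, \qquad F1_c = \frac{2 \cdot PR_c \cdot RE_c}{PR_c + RE_c}$$

Here, $TP_c$, $FP_c$, $TN_c$ and $FN_c$ refer to the true positives, false positives, true negatives and false negatives of class $c$. When calculating per-class metrics, the positive class is the current class, and the negative class is defined as the combination of the other classes.

The overall metrics ACC, MF1 and kappa (κ) are defined as follows:

$$ACC = \frac{\sum_{c=1}^{C} TP_c}{N}, \qquad MF1 = \frac{\sum_{c=1}^{C} F1_c}{C}, \qquad \kappa = \frac{p_o - p_e}{1 - p_e}$$

In the equations above, $C$ is the number of classes, which equals 5 according to the AASM manual, $N$ is the number of epochs in the test set, $p_o$ is the observed agreement (equal to ACC) and $p_e$ is the agreement expected by chance.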
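The metrics above can all be computed from a confusion matrix, as the following sketch shows. It assumes that rows hold the expert labels and columns hold the model predictions, and that the chance agreement in Cohen's kappa is computed from the row and column totals; the function names are illustrative.

```python
def sum_confusion_matrices(matrices):
    """Element-wise sum of the per-fold confusion matrices."""
    size = len(matrices[0])
    return [[sum(m[r][c] for m in matrices) for c in range(size)]
            for r in range(size)]


def per_class_metrics(cm):
    """Return a list of (precision, recall, F1) tuples, one per class."""
    n_classes = len(cm)
    metrics = []
    for c in range(n_classes):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n_classes)) - tp  # column total minus TP
        fn = sum(cm[c]) - tp                               # row total minus TP
        pr = tp / (tp + fp) if tp + fp else 0.0
        re = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * pr * re / (pr + re) if pr + re else 0.0
        metrics.append((pr, re, f1))
    return metrics


def overall_metrics(cm):
    """Return (ACC, MF1, kappa) for a square confusion matrix."""
    n_classes = len(cm)
    n = sum(sum(row) for row in cm)
    acc = sum(cm[c][c] for c in range(n_classes)) / n
    mf1 = sum(f1 for _, _, f1 in per_class_metrics(cm)) / n_classes
    # Chance agreement: product of row and column totals, summed over classes.
    p_e = sum(sum(cm[c]) * sum(cm[r][c] for r in range(n_classes))
              for c in range(n_classes)) / n ** 2
    kappa = (acc - p_e) / (1 - p_e)
    return acc, mf1, kappa
```

For the two-class matrix `[[40, 10], [20, 30]]`, this gives an accuracy of 0.70, a chance agreement of 0.50 and therefore a kappa of 0.40, which shows how kappa discounts the agreement that would be expected by chance.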

3.2. Classification Performance

Tables 2–4 show the per-class metrics and confusion matrices of our model on the Fpz-Cz channel in SleepEDF and the F4-EOG (Left) channel in MASS-SS3.

Table 2.

Confusion matrix and per-class metrics of applying Siamese CNNs to the baseline sleep staging model on the SleepEDF dataset using 20-fold cross-validation.

Table 3.

Confusion matrix and per-class metrics of applying Siamese AEs to the baseline sleep staging model on the SleepEDF dataset, using 20-fold cross-validation.

Table 4.

Confusion matrix and per-class metrics of applying Siamese AEs to the baseline sleep staging model on the MASS-SS3 dataset, using 31-fold cross-validation.

Since we evaluated our method using k-fold cross-validation, we added up the results of all folds to calculate the confusion matrices. Each row represents the number of EEG epochs assigned to a stage by human experts, and each column indicates the number of EEG epochs assigned to a stage by our model. The per-class performance (PR, RE, F1) of our models is shown in the last three columns of each table.

In view of the better overall performance of Siamese AEs, we further evaluated Siamese AEs on the MASS-SS3 dataset. It can be seen from the confusion matrices that our model works well on both datasets, except for stage N1. The precision of the other stages is around 85%, while the precision of stage N1 is around 55% on SleepEDF and around 65% on MASS-SS3. As with existing sleep staging models, the lower performance on stage N1 results from the scarcity of N1 EEG epochs. Comparing the performance of Siamese CNNs and Siamese AEs on SleepEDF, Siamese AEs perform better on stages N2, N3 and REM, and Siamese CNNs perform better on stages W and N1. The model achieves better results on MASS-SS3 than on SleepEDF.

3.3. Comparison with State-of-the-Art Models

We compared our model with other sleep staging methods and discuss the performance of our Siamese CNNs and Siamese AEs. Table 5 compares the results of our method with those of other state-of-the-art methods.

Table 5.

Comparison of our method with other state-of-the-art (SOTA) sleep staging methods. The hyphen in the table represents that the value is unavailable.

Our baseline sleep staging network is DeepSleepNet (DSN) [12]; therefore, we compare our results with DSN first. Our Siamese CNNs and Siamese AEs both improved the performance of DSN, with increases in overall accuracy of 3% and 3.3%, respectively. The MF1 and kappa of our method were also higher than those of DSN on SleepEDF. To further verify that our method is effective for sleep staging, we evaluated our model on MASS-SS3 and compared the results with DSN. There was a 1% improvement in the results. Moreover, our method outperforms the other SOTA sleep staging methods. Our Siamese AEs achieved the highest overall metrics among the sleep staging methods mentioned above.

Comparing our Siamese CNNs and Siamese AEs, the overall accuracy of Siamese AEs is higher than that of Siamese CNNs on SleepEDF, but their training time is longer. The loss function of Siamese AEs has an extra component; this extra constraint makes the computation more complex and results in higher performance.

4. Conclusions

In this study, we proposed a Siamese network architecture that encodes the similarity between two EEG epochs of the same sleep stage and the differences between two EEG epochs of different sleep stages. These features are extracted with the loss function we presented, which is composed of distance metrics and cross entropy. The distance metrics are used to measure the distance between the distributions of two EEG epochs, and cross entropy is used to classify sleep stages. We implemented Siamese CNNs and Siamese AEs and evaluated our method on SleepEDF and MASS-SS3. In our experiments, we used the DeepSleepNet (DSN) sleep staging model as a baseline. The results showed that the proposed Siamese architecture not only significantly improved the performance of DSN, but also outperformed the SOTA methods in sleep staging. In future work, we will focus on applying our method to sequence-to-sequence models to further improve performance.

Author Contributions

Conceptualization, Y.Y., Z.Y. and X.G.; methodology, Y.Y., Z.Y. and W.S.; software, Z.Y. and W.S.; validation, X.G.; formal analysis, X.G.; investigation, W.S.; resources, Y.Y.; data curation, Y.Y.; writing—original draft preparation, X.G. and W.S.; writing—review and editing, Y.Y., X.G. and Z.Y.; visualization, X.G.; supervision, Y.Y.; project administration, Y.Y.; funding acquisition, Y.Y. and Z.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (Nos. 81973744 and 81473579), CAMS Innovation Fund for Medical Science (CIFMS) (Nos. 2022-I2M-1-018, 2022-I2M-2-001), and the Beijing Natural Science Foundation (No. 7173267).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Link to publicly archived datasets SleepEDF: https://www.physionet.org/physiobank/database/sleep-edfx (accessed on 24 March 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zoubek, L.; Charbonnier, S.; Lecocq, S.; Buguet, A.; Chapotot, F. Feature selection for sleep/wake stages classification using data-driven methods. Biomed. Signal Process. Control 2007, 2, 171–179.

2. Chattu, V.K.; Manzar, D.; Kumary, D.S.; Burman, D.; Spence, D.; Pandi-Perumal, S.R. The Global Problem of Insufficient Sleep and Its Serious Public Health Implications. Healthcare 2018, 7, 1.

3. Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep Learning-Based Electroencephalography Analysis: A Systematic Review. J. Neural Eng. 2019, 16, 051001.

4. Iber, C.; Ancoli-Israel, S.; Chesson, A.L., Jr.; Quan, S.F. The AASM Manual for the Scoring of Sleep and Associated Events; American Academy of Sleep Medicine: Westchester, IL, USA, 2007.

5. Hobson, J.A. A manual of standardized terminology, techniques and scoring system for sleep stages of human subjects. Electroencephalogr. Clin. Neurophysiol. 1969, 26, 644.

6. Phan, H.; Andreotti, F.; Cooray, N.; Chén, O.Y.; Vos, M.D. Automatic Sleep Stage Classification Using Single-Channel EEG: Learning Sequential Features with Attention-Based Recurrent Neural Networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 1452–1455.

7. Alickovic, E.; Subasi, A. Ensemble SVM Method for Automatic Sleep Stage Classification. IEEE Trans. Instrum. Meas. 2018, 67, 1258–1265.

8. Da Silveira, T.L.T.; Kozakevicius, A.J.; Rodrigues, C.R. Single-channel EEG sleep stage classification based on a streamlined set of statistical features in the wavelet domain. Med. Biol. Eng. Comput. 2017, 55, 343–352.

9. Hassan, A.R.; Bhuiyan, M.I.H. Computer-aided sleep staging using complete ensemble empirical mode decomposition with adaptive noise and bootstrap aggregating. Biomed. Signal Process. Control 2016, 24, 1–10.

10. Automatic sleep stages classification using optimise flexible analytic wavelet transform. Knowl.-Based Syst. 2020, 19215, 10536.

11. Phan, H.; Mikkelsen, K. Automatic Sleep Staging of EEG Signals: Recent Development, Challenges, and Future Directions. Physiol. Meas. 2022, 43, 04TR01.

12. Supratak, A.; Dong, H.; Wu, C.; Guo, Y. DeepSleepNet: A Model for Automatic Sleep Stage Scoring Based on Raw Single-Channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1998–2008.

13. Eldele, E.; Chen, Z.; Liu, C.; Wu, M.; Kwoh, C.-K.; Li, X.; Guan, C. An Attention-Based Deep Learning Approach for Sleep Stage Classification With Single-Channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 809–818.

14. Conneau, A.; Kiela, D.; Schwenk, H.; Barrault, L.; Bordes, A. Supervised Learning of Universal Sentence Representations from Natural Language Inference Data. arXiv 2017, arXiv:1705.02364.

15. Kaya, M.; Bilge, H.Ş. Deep Metric Learning: A Survey. Symmetry 2019, 11, 1066.

16. Deshpande, I.; Hu, Y.-T.; Sun, R.; Pyrros, A.; Siddiqui, N.; Koyejo, S.; Zhao, Z.; Forsyth, D.; Schwing, A. Max-Sliced Wasserstein Distance and Its Use for GANs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

17. Kolouri, S.; Nadjahi, K.; Simsekli, U.; Badeau, R.; Rohde, G.K. Generalized Sliced Wasserstein Distances. Adv. Neural Inf. Process. Syst. 2019, 32.

18. O’Reilly, C.; Gosselin, N.; Carrier, J.; Nielsen, T. Montreal Archive of Sleep Studies: An Open-Access Resource for Instrument Benchmarking and Exploratory Research. J. Sleep Res. 2014, 23, 628–635.

19. Goldberger, A.L.; Amaral, L.A.N.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.-K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals. Circulation 2000, 101.

20. Tsinalis, O.; Matthews, P.M.; Guo, Y. Automatic Sleep Stage Scoring Using Time-Frequency Analysis and Stacked Sparse Autoencoders. Ann. Biomed. Eng. 2016, 44, 1587–1597.

21. Tsinalis, O.; Matthews, P.M.; Guo, Y.; Zafeiriou, S. Automatic Sleep Stage Scoring with Single-Channel EEG Using Convolutional Neural Networks. arXiv 2016, arXiv:1610.01683.

22. Perslev, M.; Jensen, M.H.; Darkner, S.; Jennum, P.J.; Igel, C. U-Time: A Fully Convolutional Network for Time Series Segmentation Applied to Sleep Staging. Adv. Neural Inf. Process. Syst. 2019, 32.

23. Phan, H.; Andreotti, F.; Cooray, N.; Chen, O.Y.; De Vos, M. Joint Classification and Prediction CNN Framework for Automatic Sleep Stage Classification. IEEE Trans. Biomed. Eng. 2019, 66, 1285–1296.

24. Huang, W.; Cheng, J.; Yang, Y.; Guo, G. An Improved Deep Convolutional Neural Network with Multi-Scale Information for Bearing Fault Diagnosis. Neurocomputing 2019, 359, 77–92.

25. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

26. Chopra, S.; Hadsell, R.; LeCun, Y. Learning a Similarity Metric Discriminatively, with Application to Face Verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05); IEEE: San Diego, CA, USA, 2005; Volume 1, pp. 539–546.

27. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

28. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

29. Schuster, M.; Paliwal, K.K. Bidirectional Recurrent Neural Networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

30. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

31. Sokolova, M.; Lapalme, G. A Systematic Analysis of Performance Measures for Classification Tasks. Inf. Process. Manag. 2009, 45, 427–437.

32. Mousavi, S.; Afghah, F.; Acharya, U.R. SleepEEGNet: Automated Sleep Stage Scoring with Sequence to Sequence Deep Learning Approach. PLoS ONE 2019, 14, e0216456.

33. Sun, Y.; Wang, B.; Jin, J.; Wang, X. Deep Convolutional Network Method for Automatic Sleep Stage Classification Based on Neurophysiological Signals. In Proceedings of the 2018 11th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI); IEEE: Beijing, China, 2018; pp. 1–5.

34. Mixed Neural Network Approach for Temporal Sleep Stage Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 324–333.

35. Phan, H.; Andreotti, F.; Cooray, N.; Chen, O.Y.; De Vos, M. SeqSleepNet: End-to-End Hierarchical Recurrent Neural Network for Sequence-to-Sequence Automatic Sleep Staging. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 400–410.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).