2. Weibull-Lognormal-Pareto Model

Suppose $X$ is a random variable whose probability density function (pdf) is defined piecewise over three ranges, where

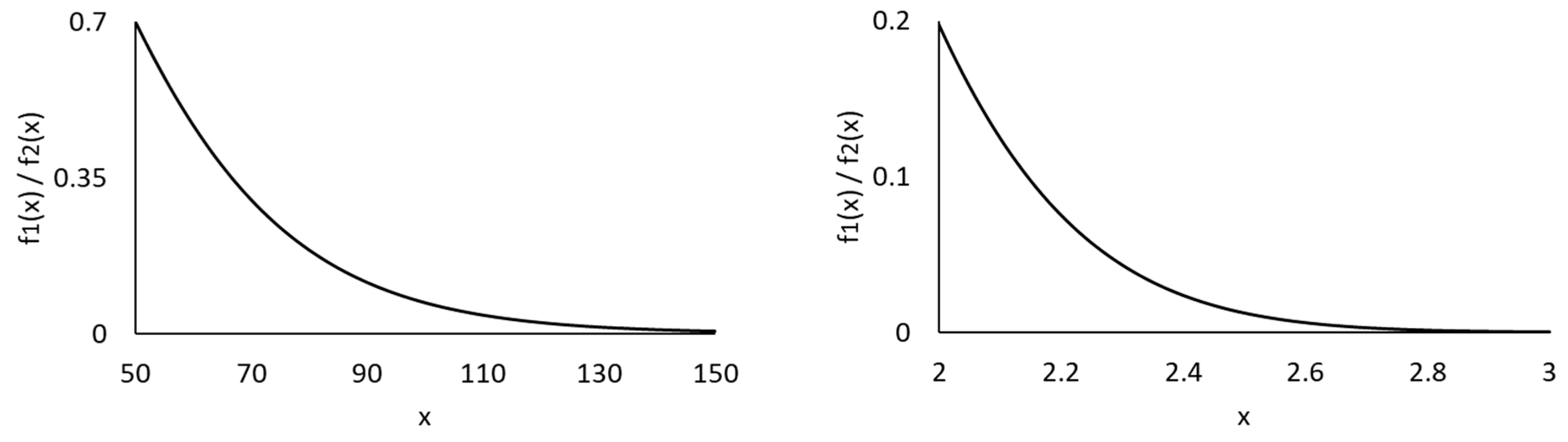

In effect, the three pieces are the pdf of $X$ on the lower range (a truncated Weibull), on the middle range between the two thresholds (a truncated lognormal), and on the upper range (a Pareto), where ϕ, τ, μ, σ, and α are the model parameters. The two weights decide the total probability of each segment. The two thresholds are the points at which the Weibull and lognormal distributions are truncated, and they represent the splitting points between the three data ranges. We refer to this model as the Weibull-lognormal-Pareto model.
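As a sketch of the structure just described, the density can be written piecewise as below. The symbols $w_1$, $w_2$ (weights), $\theta_1$, $\theta_2$ (thresholds), and $f_1$, $F_1$, $f_2$, $F_2$ (untruncated Weibull and lognormal pdfs and cdfs) are notational assumptions made here for illustration, as are the Weibull parameterization (scale ϕ, shape τ) and the choice to start the Pareto piece at the second threshold.

```latex
f(x) =
\begin{cases}
  w_1 \, \dfrac{f_1(x)}{F_1(\theta_1)}, & 0 < x \le \theta_1, \\[2ex]
  w_2 \, \dfrac{f_2(x)}{F_2(\theta_2) - F_2(\theta_1)}, & \theta_1 < x \le \theta_2, \\[2ex]
  (1 - w_1 - w_2) \, \dfrac{\alpha\,\theta_2^{\alpha}}{x^{\alpha+1}}, & x > \theta_2,
\end{cases}
\qquad
\begin{aligned}
  f_1(x) &= \frac{\tau}{x}\left(\frac{x}{\phi}\right)^{\tau}
            \exp\!\left\{-\left(\frac{x}{\phi}\right)^{\tau}\right\}, \\
  f_2(x) &= \frac{1}{x\,\sigma\sqrt{2\pi}}
            \exp\!\left\{-\frac{(\ln x - \mu)^2}{2\sigma^2}\right\}.
\end{aligned}
```

Under these assumptions each piece integrates to one over its own range, so the three segments carry total probabilities $w_1$, $w_2$, and $1 - w_1 - w_2$.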

In line with previous authors, including Cooray and Ananda (2005), two continuity conditions (the pdf has the same left and right limits at each threshold) and two differentiability conditions (the first derivative of the pdf likewise has matching left and right limits at each threshold) are imposed at the two thresholds. It can be deduced that the former leads to two equations for the weights, and that the latter generates two further constraints on the parameters.

Because of these four relationships, there are effectively five unknown parameters, with the other four expressed as functions of these parameters. As in all the previous works on composite models, the second derivative requirement is not imposed here because it often leads to inconsistent parameter constraints. One can readily derive an expression for the $k$th moment of $X$ (see Appendix A), which involves the lower incomplete gamma function $\gamma(s, x) = \int_0^x t^{s-1} e^{-t}\,dt$.
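To make the role of the continuity conditions concrete, the following minimal sketch solves them for the two weights and evaluates the resulting density. It is an illustration under stated assumptions, not the authors' implementation: the names `theta1`, `theta2`, `w1`, `w2`, the Weibull parameterization (scale `phi`, shape `tau`), and the parameter values are all chosen here for illustration, and the differentiability constraints are deliberately not imposed.

```python
import math

def weibull_pdf(x, phi, tau):
    return (tau / x) * (x / phi) ** tau * math.exp(-((x / phi) ** tau))

def weibull_cdf(x, phi, tau):
    return 1.0 - math.exp(-((x / phi) ** tau))

def lognormal_pdf(x, mu, sigma):
    z = (math.log(x) - mu) / sigma
    return math.exp(-0.5 * z * z) / (x * sigma * math.sqrt(2.0 * math.pi))

def lognormal_cdf(x, mu, sigma):
    z = (math.log(x) - mu) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def continuity_weights(phi, tau, mu, sigma, alpha, theta1, theta2):
    """Weights (w1, w2) that make the composite pdf continuous at both thresholds."""
    span = lognormal_cdf(theta2, mu, sigma) - lognormal_cdf(theta1, mu, sigma)
    a = weibull_pdf(theta1, phi, tau) / weibull_cdf(theta1, phi, tau)  # Weibull piece at theta1
    b = lognormal_pdf(theta1, mu, sigma) / span                        # lognormal piece at theta1
    c = lognormal_pdf(theta2, mu, sigma) / span                        # lognormal piece at theta2
    d = alpha / theta2                                                 # Pareto piece at theta2
    # Solve w1 * a = w2 * b and w2 * c = (1 - w1 - w2) * d.
    w2 = d / (c + d * (1.0 + b / a))
    w1 = w2 * b / a
    return w1, w2

def composite_pdf(x, phi, tau, mu, sigma, alpha, theta1, theta2):
    w1, w2 = continuity_weights(phi, tau, mu, sigma, alpha, theta1, theta2)
    if x <= theta1:
        return w1 * weibull_pdf(x, phi, tau) / weibull_cdf(theta1, phi, tau)
    if x <= theta2:
        span = lognormal_cdf(theta2, mu, sigma) - lognormal_cdf(theta1, mu, sigma)
        return w2 * lognormal_pdf(x, mu, sigma) / span
    return (1.0 - w1 - w2) * alpha * theta2 ** alpha / x ** (alpha + 1.0)

# Arbitrary illustrative values, not fitted estimates from the paper.
params = dict(phi=1.0, tau=1.5, mu=0.5, sigma=1.0, alpha=1.5, theta1=0.8, theta2=5.0)
w1, w2 = continuity_weights(**params)
```

Because both weights are ratios of positive quantities, they are positive and sum to less than one for any admissible parameter values, so the third weight is positive as well.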

5. Application to Two Data Sets

We first apply the three composite models to the well-known Danish data set of 2492 fire insurance losses (in millions of Danish Krone; a complete data set). The inflation-adjusted losses in the data range from 0.313 to 263.250 and are collected from the “SMPracticals” package in R. This data set has been studied in earlier works on composite models, including those of Cooray and Ananda (2005), Scollnik and Sun (2012), Nadarajah and Bakar (2014), and Bakar et al. (2015). For comparison, we also apply the Weibull, lognormal, Pareto, Burr, GB2, lognormal-Pareto, lognormal-GPD, lognormal-Burr, Weibull-Pareto, Weibull-GPD, and Weibull-Burr models to the data. Based on the reported results from the authors mentioned above, the Weibull-Burr model has been shown to produce the highest log-likelihood value and the lowest Akaike Information Criterion (AIC) value for this Danish data set.

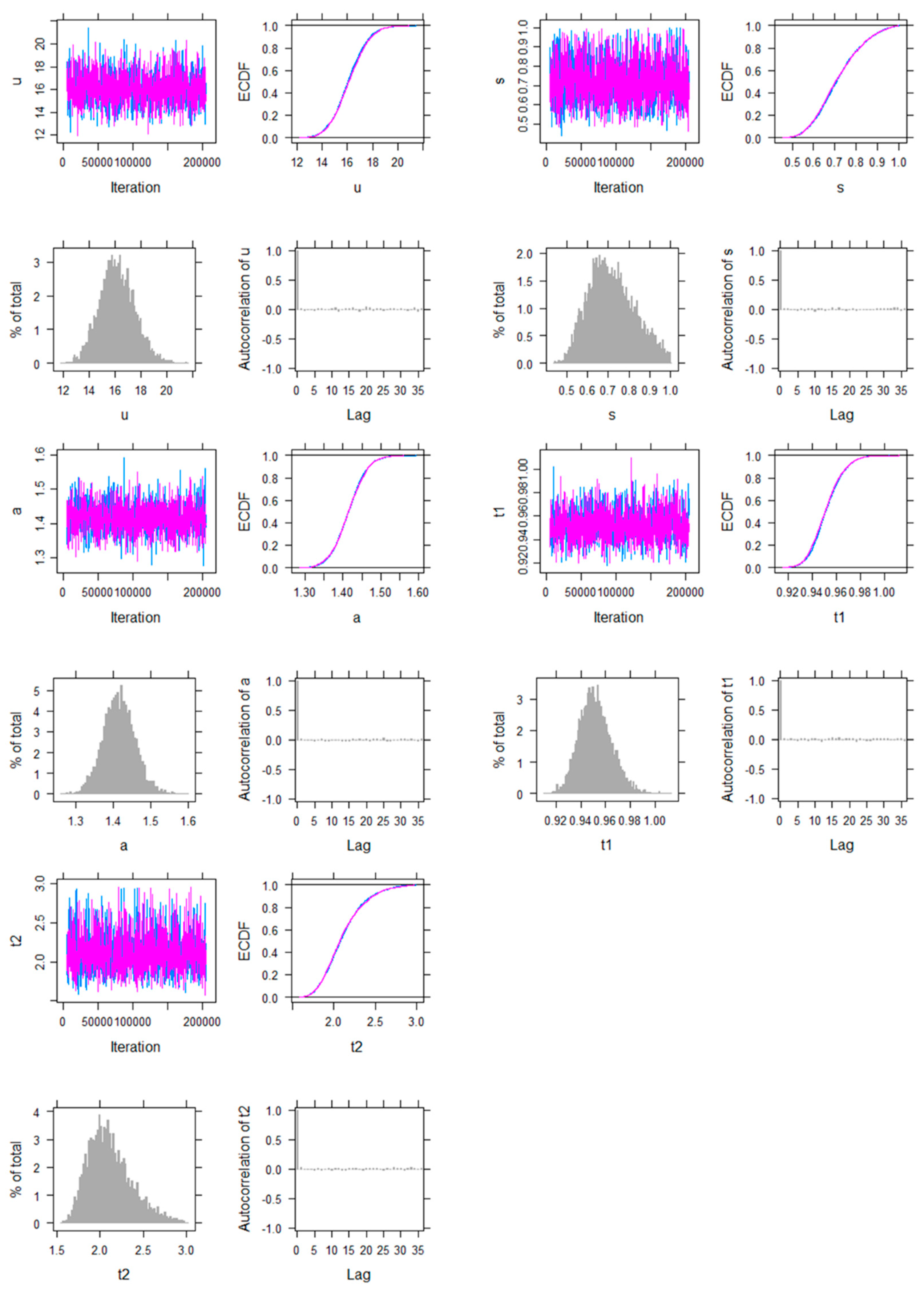

The previous authors mainly used the maximum likelihood estimation (MLE) method to fit their composite models. While we still use MLE to estimate the parameters (with nlminb in R), we also perform a Bayesian analysis via Markov chain Monte Carlo (MCMC) simulation. More specifically, random samples are simulated from a Markov chain whose stationary distribution is the joint posterior distribution. Under the Bayesian framework, the posterior density is proportional to the product of the likelihood and the prior density. We perform MCMC simulations via the software JAGS (Just Another Gibbs Sampler) (Plummer 2017), which uses the Gibbs sampling method. We make use of non-informative uniform priors for the unknown parameters. Note that the posterior modes under uniform priors generally correspond to the MLE estimates. For each MCMC chain, we omit the first 5000 iterations and collect 5000 samples afterwards. Since the estimated Monte Carlo errors are all well within 5% of the sample posterior standard deviations, the level of convergence to the stationary distribution is considered adequate in our analysis. Some JAGS outputs of the MCMC simulation are provided in Appendix A. We employ the “ones trick” (Spiegelhalter et al. 2003) to specify the new models in JAGS. The Bayesian estimates provide a useful reference for checking the MLE estimates. Despite the major differences in their underlying theories, their numerical results are expected to be reasonably close here, as we use non-informative priors, so that the posterior is driven mostly by the data rather than by the prior. Since the posterior distribution of the unknown parameters of the proposed models is analytically intractable, the MCMC simulation procedure is a useful method for approximating it (Li 2014).
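The burn-in and Monte Carlo error check described above can be illustrated with a toy example. The sketch below is not JAGS and not Gibbs sampling: it swaps in a plain random-walk Metropolis sampler for a one-parameter exponential model with a uniform prior, and estimates the Monte Carlo standard error by batch means. The model, data, step size, and function names are all assumptions made for illustration.

```python
import math
import random
import statistics

def log_posterior(rate, data, upper=100.0):
    """Log posterior (up to a constant) of an exponential rate under a Uniform(0, upper) prior."""
    if not 0.0 < rate < upper:
        return -math.inf
    return len(data) * math.log(rate) - rate * sum(data)

def metropolis(data, start, step, burn_in, keep, rng):
    """Random-walk Metropolis: discard `burn_in` iterations, then collect `keep` draws."""
    current, current_lp = start, log_posterior(start, data)
    draws = []
    for i in range(burn_in + keep):
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_posterior(proposal, data)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, proposal_lp - current_lp)):
            current, current_lp = proposal, proposal_lp
        if i >= burn_in:
            draws.append(current)
    return draws

def batch_means_mcse(draws, n_batches=50):
    """Monte Carlo standard error of the sample mean, estimated from batch means."""
    size = len(draws) // n_batches
    means = [statistics.fmean(draws[j * size:(j + 1) * size]) for j in range(n_batches)]
    return statistics.stdev(means) / math.sqrt(n_batches)

rng = random.Random(2024)
data = [rng.expovariate(2.0) for _ in range(200)]   # simulated toy data
mle = len(data) / sum(data)                         # MLE of the exponential rate

draws = metropolis(data, start=1.0, step=0.3, burn_in=5000, keep=5000, rng=rng)
post_mean = statistics.fmean(draws)
post_sd = statistics.stdev(draws)
mcse = batch_means_mcse(draws)
mcse_ratio = mcse / post_sd   # the rule of thumb in the text asks for this to be below 0.05
```

In this toy setting the uniform prior is flat over the region where the likelihood is concentrated, so the posterior mean should land close to `mle`, which mirrors the cross-check between Bayesian and MLE estimates described in the text.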

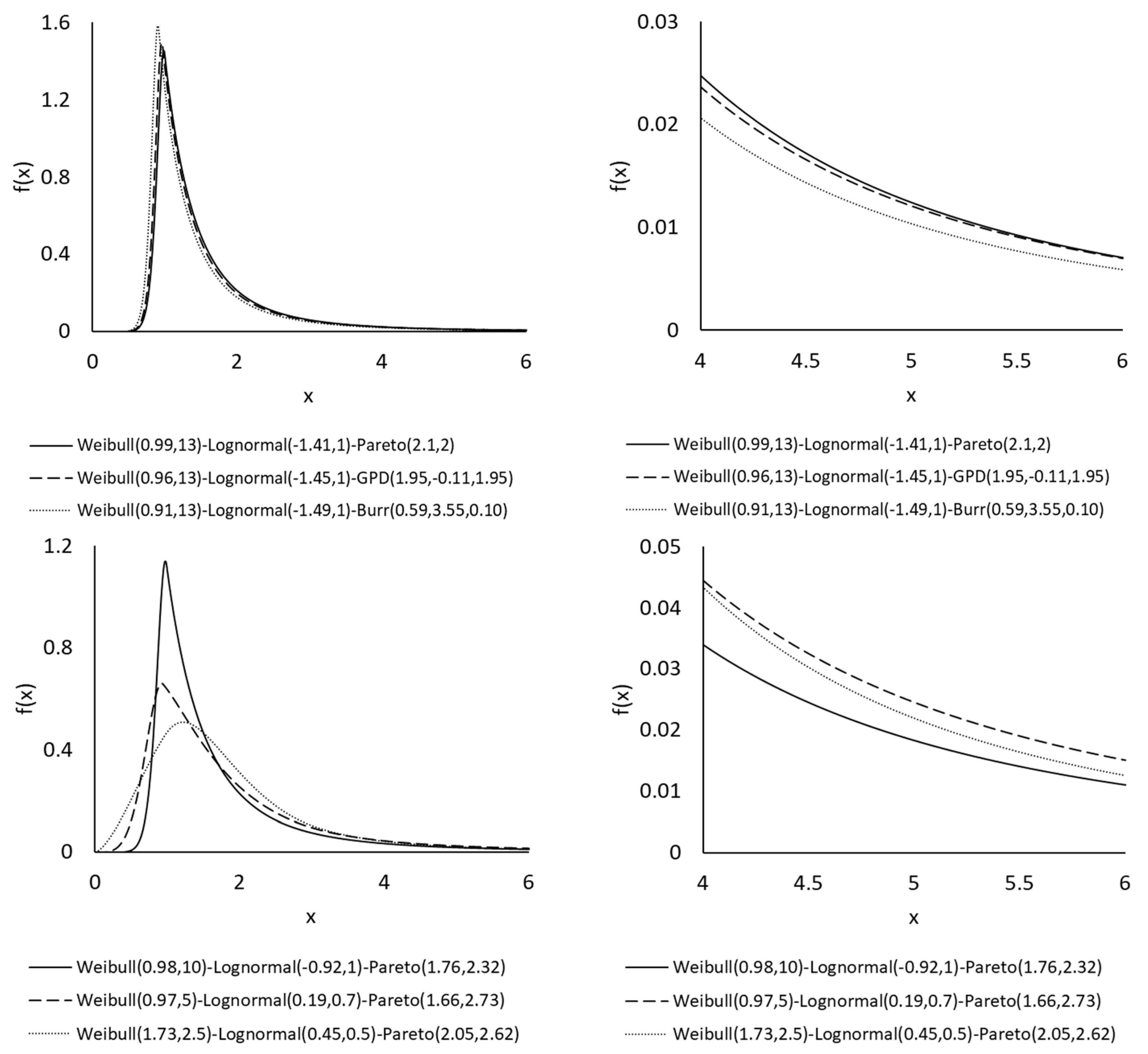

Table 1 reports the negative log-likelihood (NLL), AIC, Bayesian Information Criterion (BIC), Kolmogorov-Smirnov (KS) test statistic, and Deviance Information Criterion (DIC) values for the 14 models tested. The ranking of each model under each test is given in brackets, in which the top three performers are highlighted for each test. Overall, the Weibull-lognormal-Pareto model appears to provide the best fit, with the lowest AIC, BIC, and DIC values and the second lowest NLL and KS values. The second position is taken by the Weibull-lognormal-GPD model, which produces the lowest NLL and KS values and the second (third) lowest AIC (DIC). The Weibull-lognormal-Burr and Weibull-Burr models come next, each of which occupies at least two top-three positions. Apparently, the new three-component composite models outperform the traditional models as well as the earlier two-component composite models. The P–P (probability–probability) plots in Figure 3 indicate clearly that the new models describe the data very well. Recently, Grün and Miljkovic (2019) tested 16 × 16 = 256 two-component models on the same Danish data set, using a numerical method (via numDeriv in R) to find the derivatives for the differentiability condition rather than deriving them analytically from first principles. Based on their reported results, the Weibull-Inverse-Weibull model gives the lowest BIC (7671.30), and the Paralogistic-Burr and Inverse-Burr-Burr models give the lowest KS test values (0.015). Comparatively, as shown in Table 1, the Weibull-lognormal-Pareto model produces a lower BIC (7670.88) and all three new composite models give lower KS values (around 0.011). These KS values are smaller than the critical value at the 5% significance level, so the null hypothesis that the data follow the fitted model is not rejected.
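For readers who want to reproduce this kind of comparison, the selection criteria can be sketched as follows. The function names are hypothetical, and the KS critical value uses the standard large-sample approximation 1.358/√n, which is an assumption here: the source does not state how its critical value was obtained, and the approximation ignores the effect of estimating parameters from the same data.

```python
import math

def aic(nll, n_params):
    """Akaike Information Criterion from a negative log-likelihood."""
    return 2.0 * nll + 2.0 * n_params

def bic(nll, n_params, n_obs):
    """Bayesian Information Criterion from a negative log-likelihood."""
    return 2.0 * nll + n_params * math.log(n_obs)

def ks_statistic(data, cdf):
    """Largest gap between the empirical cdf and a fitted cdf."""
    xs = sorted(data)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        fx = cdf(x)
        # Compare the fitted cdf with the empirical cdf just after and just before x.
        d = max(d, i / n - fx, fx - (i - 1) / n)
    return d

def ks_critical_5pct(n_obs):
    """Asymptotic 5% critical value of the one-sample KS test."""
    return 1.358 / math.sqrt(n_obs)

# With the 2492 Danish losses, the approximate critical value is roughly 0.027,
# so a KS statistic of about 0.011 falls below it.
critical_danish = ks_critical_5pct(2492)

# Tiny usage example against a Uniform(0, 1) cdf.
toy_ks = ks_statistic([0.1, 0.4, 0.7], lambda x: x)
```

Lower values are better for all of these measures; BIC penalizes each extra parameter by ln(n) rather than 2, which is why it favours the more parsimonious models when n is large.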

Table 2 compares the fitted model quantiles (from MLE) against the empirical quantiles. It can be seen that the differences between them are generally small. This result conforms with the P–P plots in Figure 3. Note that the estimated weights of the three-component composite models suggest that the claim amounts can be split into three categories of small, medium, and large sizes, with expected proportions of about 8%, 54%, and 38%. For pricing, reserving, and reinsurance purposes, the three groups of claims may further be studied separately, possibly with different sets of covariates where feasible, as they may have different underlying driving factors (especially for long-tailed lines of business).

Table 3 lists the parameter estimates of the three-component composite models obtained from the MLE method and also the Bayesian MCMC method. It is reassuring to see that the MLE estimates and the Bayesian estimates, as well as their corresponding standard errors and posterior standard deviations, are fairly consistent with one another in general. There are a few exceptions, which may suggest that the parameter estimates concerned are not as robust and are less significant. This implication is in line with the fact that the Weibull-lognormal-GPD and Weibull-lognormal-Burr models are only the second and third best models for this Danish data set.

We then apply the 14 models to a vehicle insurance claims data set, which was collected from http://www.businessandeconomics.mq.edu.au/our_departments/Applied_Finance_and_Actuarial_Studies/research/books/GLMsforInsuranceData (accessed on 2 August 2020). There are 3911 claims in 2004 and 2005, ranging from $201.09 to $55,922.13. For computational convenience, we model the claims in thousands of dollars.

Table 4 shows that the Weibull-lognormal-GPD and Weibull-lognormal-Burr models are the two best models in terms of all the test statistics covered. They are followed by the Weibull-Burr and lognormal-Burr models, which produce the next lowest NLL, AIC, BIC, and DIC values. As shown in Table 5, the fitted model quantiles and the empirical quantiles are reasonably close under the two best models. It is noteworthy that the Weibull-lognormal-Pareto model ranks only about fifth amongst the 14 models. For this model, the computed second threshold turns out to be larger than the maximum claim amount observed in the data. This implies that the Pareto tail part is not needed or preferred at all for the data under this model, and the fitted model effectively becomes a Weibull-lognormal model. By contrast, for the Weibull-lognormal-GPD and Weibull-lognormal-Burr models, the GPD and Burr tail parts are important components that need to be incorporated (the computed second thresholds are 4.6 and 3.5, respectively). Similar observations can be made among the two-component models, in which the GPD and Burr tails are selected over the Pareto tail. The estimated weights of the best composite models are around

and

.

Table 6 gives the parameter estimates of the three-component composite models, and again the MLE estimates and the Bayesian estimates are roughly in line.

Blostein and Miljkovic (2019) proposed a grid map as a risk management tool for risk managers to consider the trade-off between the best model based on the AIC or BIC and the risk measure. It covers the entire space of models under consideration, and allows one to have a comprehensive view of the different outcomes under different models. In Figure 4, we extend this grid map idea into a 3D map, considering more than just one model selection criterion. It can serve as a summary of the tail risk measures given by the 14 models being tested, comparing the tail estimates between the best models and the other models under two chosen statistical criteria. For both data sets, it is informative to see that the 99% value-at-risk (VaR) estimates are robust amongst the few best model candidates, while there is a range of outcomes for the other less than optimal models (the 99% VaR is calculated as the 99th percentile based on the fitted model). It appears that the risk measure estimates become more and more stable and consistent as we move to progressively better performing models. This 3D map can be seen as a new risk management tool and it would be useful for risk managers to have an overview of the whole model space and examine how the selection criteria would affect the resulting assessment of the risk. In particular, in many other modelling cases, there could be several equally well-performing models which produce significantly different risk measures, and this tool can provide a clear illustration for more informed model selection. Note that other risk measures and selection criteria than those in Figure 4 can be adopted in a similar way.
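Since the 99% VaR is simply the 99th percentile of the fitted model, it can be obtained by inverting the fitted cdf numerically when no closed-form quantile is available. The sketch below uses bisection; the function name, the bracketing interval, and the single Pareto example (chosen only because its quantile has a closed form to check against) are illustrative assumptions, not the procedure used in the paper.

```python
def quantile_by_bisection(cdf, p, lo, hi, tol=1e-10, max_iter=200):
    """Invert a continuous, increasing cdf on [lo, hi], assuming cdf(lo) <= p <= cdf(hi)."""
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if cdf(mid) < p:
            lo = mid      # the quantile lies to the right of mid
        else:
            hi = mid      # the quantile lies at or to the left of mid
        if hi - lo <= tol:
            break
    return 0.5 * (lo + hi)

# Illustrative Pareto cdf with arbitrary parameters (not fitted values).
alpha, theta = 1.5, 1.0

def pareto_cdf(x):
    return 1.0 - (theta / x) ** alpha

var_99 = quantile_by_bisection(pareto_cdf, 0.99, lo=theta, hi=1e6)
closed_form = theta * (1.0 - 0.99) ** (-1.0 / alpha)
```

For a composite model, the same routine would be applied to the piecewise cdf; repeating it across all candidate models gives the VaR axis of a grid map like the one described above.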

To our knowledge, regression has not been tested on any of the composite models so far in the actuarial literature. We now explore applying regression under the proposed model structure via the MLE method. Besides the claim amounts, the vehicle insurance claims data set also contains some covariates including the exposure, vehicle age, driver age, and gender (see Table 7). We select the best performing Weibull-lognormal-GPD model (see Table 4) and assume that three of its parameters are functions of the explanatory variables, based on the first moment derived in Section 3. We use a log link function for the two parameters that must be non-negative, and an identity link function for the third. It is very interesting to observe from the results in Table 7 that different model components (and so different claim sizes) point to different selections of covariates. For the largest claims, all the tested covariates are statistically significant: the claim amounts tend to increase as the exposure, vehicle age, and driver age decrease, and the claims are larger for male drivers on average. By sharp contrast, most of these covariates are not significant for the medium-sized claims or for the smallest claims. The only exception is the driver age for the smallest claims, but its effect is opposite to that for the largest claims. These differences are insightful in the sense that the underlying risk drivers can differ between the various sources or reasons behind the claims, and it is very important to take these subtle discrepancies into account in order to price the risk more accurately. A final note is that while

remains about the same level after embedding regression,

has increased to 0.734 (when compared to Table 6). The inclusion of the explanatory variables has led to a larger allocation to the Weibull component but a smaller allocation to the lognormal component.
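The two link functions mentioned above can be sketched as follows. The coefficient values, the covariate ordering, and the function names are hypothetical and serve only to show how a log link keeps a parameter positive while an identity link leaves it unrestricted; they are not estimates from Table 7.

```python
import math

def linear_predictor(coefs, covariates):
    """Intercept plus the weighted sum of the covariates."""
    return coefs[0] + sum(b * x for b, x in zip(coefs[1:], covariates))

def log_link_parameter(coefs, covariates):
    """Log link: the parameter is exp(linear predictor), hence always positive."""
    return math.exp(linear_predictor(coefs, covariates))

def identity_link_parameter(coefs, covariates):
    """Identity link: the parameter equals the linear predictor and may take any sign."""
    return linear_predictor(coefs, covariates)

# Hypothetical policy: [exposure, vehicle age, driver age, male indicator].
policy = [0.5, 3.0, 2.0, 1.0]

# Hypothetical coefficients: intercept followed by one slope per covariate.
scale_coefs = [-1.0, -0.2, -0.1, -0.05, 0.1]
location_coefs = [-0.5, 0.1, -0.2, 0.05, 0.0]

scale_param = log_link_parameter(scale_coefs, policy)               # positive by construction
location_param = identity_link_parameter(location_coefs, policy)   # unrestricted
```

Under MLE, these per-policy parameter values would replace the constant parameters in the composite log-likelihood, and the coefficients would be estimated jointly.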

As a whole, it is interesting to see the gradual development over time in the area of modelling individual claim amounts. As illustrated in Table 1 and Table 4, the simple models (Weibull, lognormal, Pareto) fail to capture the important features of the complete data set when its size is large. More general models with additional parameters and so more flexibility (Burr, GB2) are then explored as an alternative, which does bring some improvement over the simple models. The two-component composite lognormal-kind models represent a significant innovation in combining two distinct densities, though these models do not always lead to obvious improvement over traditional three- and four-parameter distributions. Later, some studies showed that two-component composite Weibull-, Paralogistic-, and Inverse-Burr-kind models can produce better fitting results. In the present work, we take a step further and demonstrate that a three-component composite model, with the Weibull for small claims, lognormal for moderate claims, and a heavy tail for large claims, can further improve the fitting performance. Moreover, based on the estimated parameters, there is a rather objective guide for splitting the claims into different groups, which can then be analysed separately for their own underlying features (e.g., Cebrián et al. 2003). This kind of separate analysis is particularly important for some long-tailed lines of business, such as public and product liability, for which certain large claims can be delayed significantly due to specific legal reasons. Note that the previous two-component composite models, when fitted to the two insurance data sets, suggest a split at around the 10% quantile, which is in line with the estimated values of

reported earlier. The proposed three-component composite models can make a further differentiation between moderate and large claim sizes.