Poverty Traps in Online Knowledge-Based Peer-Production Communities

Abstract

1. Introduction

- Our analysis presents an important practical approach towards understanding reputation systems for collaborative knowledge-based peer-production communities by treating reputation points as currency and Q&A exchanges as scarce resources.

- The results raise important questions about the structure of reputation systems and move toward developing mechanisms that increase equity and lower the barrier for new users.

- Based on our findings, we present a set of design recommendations to inform the creation and maintenance of information-sharing and aggregation communities, such as Q&A sites.

2. Background

2.1. Stack Exchange Overview

2.2. Reputation Systems

2.3. Stack Exchange Reputation System

2.4. Types of Q&A on Stack Exchange

- Questions should be unique to the programming profession (a specific programming problem, a software algorithm, software tools commonly used by programmers, etc.).

- Questions should not be based on opinion (e.g., what do you think is better: “x” or “y”).

- Questions should be reasonably scoped [24].

2.5. Prior Findings and Reputation Systems

2.6. Poverty Traps in Online Knowledge-Based Peer-Production Communities

2.7. Theoretical Analysis

3. Methodology

- Do communities exhibit value bias towards earlier Q&A submissions?

- Does the strength and/or existence of this bias depend on the domain?

- Does the existence of a time bias influence the amount of reputation a participant can earn?
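One simple way to probe the first question is to correlate how old a submission is with the score it has accumulated. The sketch below is illustrative only: the `pearson` helper, the choice of variables, and the toy data are assumptions made for this example, not the study's data set or its exact procedure.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Hypothetical toy data: (age of the question in months, current score).
posts = [(150, 42), (120, 17), (90, 9), (60, 11), (30, 3), (6, 1)]
ages = [age for age, _ in posts]
scores = [score for _, score in posts]

r = pearson(ages, scores)
print(f"correlation between question age and score: {r:.3f}")
```

A positive coefficient on real data would be consistent with a value bias toward earlier submissions; comparing coefficients across sites would speak to the second question.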

4. Data Set

5. Results

- Do communities exhibit value bias towards earlier Q&A submissions?

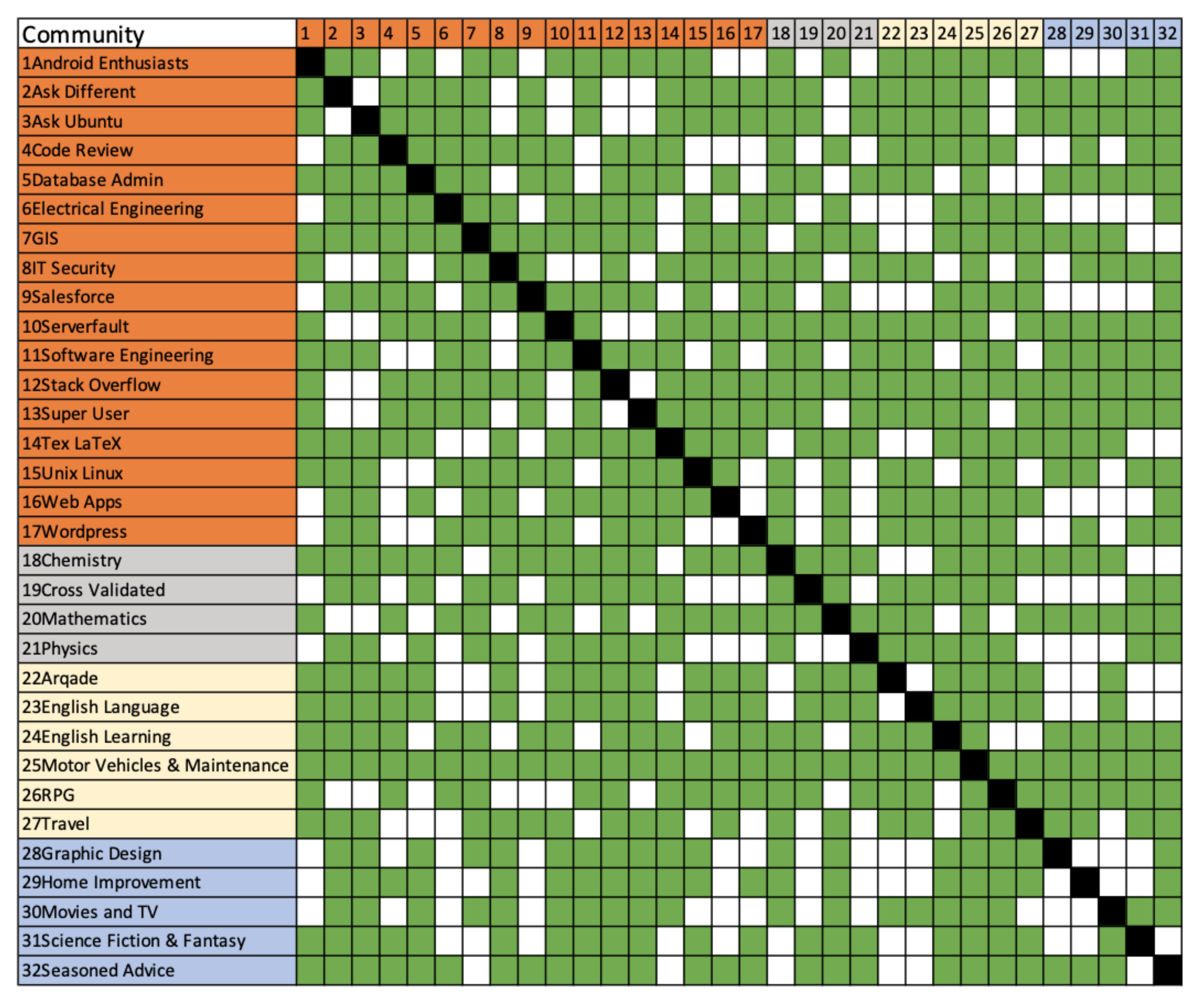

- Does the strength and/or existence of this bias depend on the domain?

- Does the existence of a time bias influence the amount of reputation a participant can earn?

5.1. The Value of Early Q&A Submissions

5.2. The Value of Early Questions in Each Community

5.3. User Ability to Earn Reputation Based on Tenure

6. Discussion

7. Conclusions

- Community administrators should understand the scarcity of valuable Q&A interactions as a community ages. A progressive community should seek to include inflationary measures that allow new users to overcome the advantages held by longer-tenured users. In addition, administrators could relax policies toward duplicate questions, as they are often asked by novice users and may actually be a source of new information [34].

- Community administrators should reward users for improving existing information by allowing them to share in the reputation revenue. Instead of merely offering small reputation rewards for improving existing material, a progressive scheme would allow for reputation sharing to improve information interaction.

- Community administrators should tailor their reputation scheme based on the domain in which the community exists and modify the reputation system based on the severity of the poverty trap.

Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Q&A | Question and Answer |
| GLM | Generalized Linear Model |

References

1. Anderson, A.; Huttenlocher, D.; Kleinberg, J.; Leskovec, J. Discovering Value from Community Activity on Focused Question Answering Sites: A Case Study of Stack Overflow. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; ACM: New York, NY, USA, 2012; pp. 850–858.

2. Tausczik, Y.R.; Kittur, A.; Kraut, R.E. Collaborative Problem Solving: A Study of MathOverflow. In Proceedings of the 17th ACM Conference on Computer Supported Cooperative Work & Social Computing, Baltimore, MD, USA, 15–19 February 2014; ACM: New York, NY, USA, 2014; pp. 355–367.

3. Tausczik, Y.R.; Pennebaker, J.W. Participation in an Online Mathematics Community: Differentiating Motivations to Add. In Proceedings of the ACM 2012 Conference on Computer Supported Cooperative Work, Seattle, WA, USA, 11–15 February 2012; ACM: New York, NY, USA, 2012; pp. 207–216.

4. Li, G.; Zhu, H.; Lu, T.; Ding, X.; Gu, N. Is It Good to Be Like Wikipedia? Exploring the Trade-offs of Introducing Collaborative Editing Model to Q&A Sites. In Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing, Vancouver, BC, Canada, 14–18 March 2015; ACM: New York, NY, USA, 2015; pp. 1080–1091.

5. Mamykina, L.; Manoim, B.; Mittal, M.; Hripcsak, G.; Hartmann, B. Design Lessons from the Fastest Q&A Site in the West. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; ACM: New York, NY, USA, 2011; pp. 2857–2866.

6. MacLeod, L. Reputation on Stack Exchange: Tag, You’re It! In Proceedings of the 2014 28th International Conference on Advanced Information Networking and Applications Workshops, Victoria, BC, Canada, 13–16 May 2014; pp. 670–674.

7. Adamic, L.A.; Zhang, J.; Bakshy, E.; Ackerman, M.S. Knowledge Sharing and Yahoo Answers: Everyone Knows Something. In Proceedings of the 17th International Conference on World Wide Web, Beijing, China, 21–25 April 2008; ACM: New York, NY, USA, 2008; pp. 665–674.

8. Furtado, A.; Andrade, N.; Oliveira, N.; Brasileiro, F. Contributor Profiles, Their Dynamics, and Their Importance in Five Q&A Sites. In Proceedings of the 2013 Conference on Computer Supported Cooperative Work, San Antonio, TX, USA, 23–27 February 2013; ACM: New York, NY, USA, 2013; pp. 1237–1252.

9. Hardin, C.D.; Berland, M. Learning to Program Using Online Forums: A Comparison of Links Posted on Reddit and Stack Overflow (Abstract Only). In Proceedings of the 47th ACM Technical Symposium on Computing Science Education, Memphis, TN, USA, 2–5 March 2016; ACM: New York, NY, USA, 2016; p. 723.

10. Ford, H. Online Reputation: It’s Contextual, 2012. Available online: http://ethnographymatters.net/blog/2012/02/24/online-reputation-its-contextual/ (accessed on 27 April 2023).

11. Treude, C.; Barzilay, O.; Storey, M.A. How Do Programmers Ask and Answer Questions on the Web? (NIER Track). In Proceedings of the 33rd International Conference on Software Engineering, Honolulu, HI, USA, 21–28 May 2011; ACM: New York, NY, USA, 2011; pp. 804–807.

12. Vargo, A.W.; Matsubara, S. Editing Unfit Questions in Q&A. In Proceedings of the 2016 5th IIAI International Congress on Advanced Applied Informatics (IIAI-AAI), Kumamoto, Japan, 10–14 July 2016; pp. 107–112.

13. Livan, G.; Pappalardo, G.; Mantegna, R.N. Quantifying the relationship between specialisation and reputation in an online platform. Sci. Rep. 2022, 12, 16699.

14. Adler, B.T.; de Alfaro, L. A Content-driven Reputation System for the Wikipedia. In Proceedings of the 16th International Conference on World Wide Web, Banff, AB, Canada, 8–12 May 2007; ACM: New York, NY, USA, 2007; pp. 261–270.

15. De Alfaro, L.; Kulshreshtha, A.; Pye, I.; Adler, B.T. Reputation Systems for Open Collaboration. Commun. ACM 2011, 54, 81–87.

16. Halfaker, A.; Geiger, R.S.; Morgan, J.T.; Riedl, J. The Rise and Decline of an Open Collaboration System: How Wikipedia’s Reaction to Popularity Is Causing Its Decline. Am. Behav. Sci. 2013, 57, 664–688.

17. Wei, X.; Chen, W.; Zhu, K. Motivating User Contributions in Online Knowledge Communities: Virtual Rewards and Reputation. In Proceedings of the 2015 48th Hawaii International Conference on System Sciences, Kauai, HI, USA, 5–8 January 2015; pp. 3760–3769.

18. Wang, S.; German, D.M.; Chen, T.H.; Tian, Y.; Hassan, A.E. Is reputation on Stack Overflow always a good indicator for users’ expertise? No! In Proceedings of the 2021 IEEE International Conference on Software Maintenance and Evolution (ICSME), Luxembourg, 27 September–1 October 2021; pp. 614–618.

19. Chen, Y.; Ho, T.H.; Kim, Y.M. Knowledge Market Design: A Field Experiment at Google Answers. J. Public Econ. Theory 2010, 12, 641–664.

20. Jeon, G.Y.; Kim, Y.M.; Chen, Y. Re-examining Price As a Predictor of Answer Quality in an Online Q&A Site. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010; ACM: New York, NY, USA, 2010; pp. 325–328.

21. What Is Reputation? How Do I Earn (and Lose) It?—Help Center—Stack Overflow. 2015. Available online: http://stackoverflow.com/help/whats-reputation (accessed on 25 June 2023).

22. Vargo, A.W.; Matsubara, S. Corrective or critical? Commenting on bad questions in Q&A. In Proceedings of the iConference 2016, iSchools, Philadelphia, PA, USA, 20–23 March 2016.

23. Answer to “What Are the Reputation Requirements for Privileges on Sites, and How Do They Differ per Site?”. 2023. Available online: https://meta.stackexchange.com/a/160292 (accessed on 25 June 2023).

24. Help Center–What Types of Questions Should I Avoid Asking?—Stack Overflow, 2019. Available online: https://stackoverflow.com/help/dont-ask (accessed on 20 September 2019).

25. Nam, K.K.; Ackerman, M.S.; Adamic, L.A. Questions in, Knowledge in?: A Study of Naver’s Question Answering Community. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; ACM: New York, NY, USA, 2009; pp. 779–788.

26. Kang, M. Motivational affordances and survival of new askers on social Q&A sites: The case of Stack Exchange network. J. Assoc. Inf. Sci. Technol. 2022, 73, 90–103.

27. Adaji, I.; Vassileva, J. Towards Understanding User Participation in Stack Overflow Using Profile Data. In Proceedings of the Social Informatics, Bellevue, WA, USA, 11–14 November 2016; Springer: Cham, Switzerland, 2016; pp. 3–13.

28. Ginsca, A.L.; Popescu, A. User Profiling for Answer Quality Assessment in Q&A Communities. In Proceedings of the 2013 Workshop on Data-Driven User Behavioral Modelling and Mining from Social Media, San Francisco, CA, USA, 28 October 2013; ACM: New York, NY, USA, 2013; pp. 25–28.

29. Vargo, A.W.; Matsubara, S. Identity and performance in technical Q&A. Behav. Inf. Technol. 2018, 37, 658–674.

30. Jain, S.; Chen, Y.; Parkes, D.C. Designing Incentives for Online Question and Answer Forums. In Proceedings of the 10th ACM Conference on Electronic Commerce, Stanford, CA, USA, 6–10 July 2009; ACM: New York, NY, USA, 2009; pp. 129–138.

31. Solomon, J.; Wash, R. Critical Mass of What? Exploring Community Growth in WikiProjects. In Proceedings of the Eighth International AAAI Conference on Weblogs and Social Media, Ann Arbor, MI, USA, 1–4 June 2014.

32. All Sites—Stack Exchange. 2023. Available online: https://stackexchange.com/sites?view=list#traffic (accessed on 28 March 2023).

33. Diedenhofen, B.; Musch, J. cocor: A Comprehensive Solution for the Statistical Comparison of Correlations. PLoS ONE 2015, 10, e0121945.

34. Abric, D.; Clark, O.E.; Caminiti, M.; Gallaba, K.; McIntosh, S. Can Duplicate Questions on Stack Overflow Benefit the Software Development Community? In Proceedings of the 2019 IEEE/ACM 16th International Conference on Mining Software Repositories (MSR), Montreal, QC, Canada, 25–31 May 2019; pp. 230–234.

| Privilege | Reputation Points Needed to Earn Privilege |
|---|---|
| Create Posts | 1 |
| Participate in Meta | 5 |
| Create Wiki Posts | 10 |
| Remove New User Restrictions | 10 |
| Vote Up | 15 |
| Flag Posts | 15 |
| Talk in Chat | 20 |
| Comment Everywhere | 50 |
| Set Bounties | 75 |
| Create Chat Rooms | 100 |
| Edit Community Wiki | 100 |
| Vote Down | 125 |
| Reduce Ads | 200 |
| View Close Votes | 250 |
| Access Review Queues | 500 |
| Create Gallery Chat Rooms | 1000 |
| Established User | 1000 |
| Create Tags | 1500 |
| Edit Posts (Questions and Answers) | 2000 |
| Create Tag Synonyms | 2500 |
| Cast Close and Reopen Votes | 3000 |
| Approve Tag Wiki Edits | 5000 |
| Access Moderator Tools | 10,000 |
| Protect Questions | 15,000 |
| Trusted User | 20,000 |
| Access to Site Analytics | 25,000 |
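To make the tiered structure of the privilege table concrete, the following is a minimal sketch of a threshold lookup. It uses a subset of the thresholds listed in the table; the function names `unlocked_privileges` and `next_privilege` are hypothetical helpers written for this illustration and are not part of any Stack Exchange API.

```python
from bisect import bisect_right

# Subset of (reputation threshold, privilege) pairs copied from the table,
# kept sorted by threshold so a binary search can be used.
PRIVILEGES = [
    (1, "Create Posts"),
    (15, "Vote Up"),
    (50, "Comment Everywhere"),
    (125, "Vote Down"),
    (2000, "Edit Posts (Questions and Answers)"),
    (3000, "Cast Close and Reopen Votes"),
    (10_000, "Access Moderator Tools"),
    (20_000, "Trusted User"),
]
THRESHOLDS = [points for points, _ in PRIVILEGES]


def unlocked_privileges(reputation):
    """Return the privileges whose threshold is at or below `reputation`."""
    count = bisect_right(THRESHOLDS, reputation)
    return [name for _, name in PRIVILEGES[:count]]


def next_privilege(reputation):
    """Return (points still needed, privilege) for the next tier, or None."""
    index = bisect_right(THRESHOLDS, reputation)
    if index == len(PRIVILEGES):
        return None
    points, name = PRIVILEGES[index]
    return points - reputation, name


print(unlocked_privileges(130))
print(next_privilege(130))
```

In this subset, a user who has just earned the right to vote down still needs well over a thousand additional points before being able to edit posts, which illustrates how widely the upper tiers are spaced.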

| Site | Category | Users (In Thousands) | Site Age in Months |
|---|---|---|---|
| Android Enthusiasts | Technology | 279 | 151 |
| Arqade (Video games) | Culture/Recreation | 202 | 153 |
| Ask Different | Technology | 391 | 152 |
| Ask Ubuntu | Technology | 1389 | 153 |
| Chemistry | Science | 99 | 132 |
| Code Review | Technology | 234 | 147 |
| Cross-Validated (Statistics) | Science | 324 | 153 |
| Database Administrators | Technology | 233 | 147 |
| Electrical Engineering | Technology | 269 | 151 |
| English Language and Usage | Culture/Recreation | 378 | 152 |
| English Language Learners | Culture/Recreation | 141 | 123 |
| Geographic Information Systems (GIS) | Technology | 182 | 153 |
| Graphic Design | Life/Arts | 133 | 147 |
| Home Improvement | Life/Arts | 119 | 153 |
| Information Security | Technology | 239 | 149 |
| Mathematics | Science | 1018 | 153 |
| Motor Vehicle Maintenance and Repair | Culture/Recreation | 55 | 145 |
| Movies and TV | Life/Arts | 72 | 137 |
| Physics | Science | 287 | 149 |
| Role-Playing Games | Culture/Recreation | 62 | 152 |
| Salesforce | Technology | 102 | 129 |
| Science Fiction and Fantasy | Life/Arts | 127 | 147 |
| Seasoned Advice (Cooking) | Life/Arts | 74 | 153 |
| Server Fault | Technology | 874 | 168 |
| Software Engineering | Technology | 363 | 151 |
| Stack Overflow | Technology | 20,173 | 177 |
| Super User | Technology | 1464 | 165 |
| TeX–LaTeX | Technology | 250 | 153 |
| Travel | Culture/Recreation | 100 | 142 |
| Unix and Linux | Technology | 516 | 152 |
| Web Applications | Technology | 228 | 154 |
| WordPress Development | Technology | 188 | 152 |

| Site | Category | Sample Size | Correlation Coefficient |
|---|---|---|---|
| Motor Vehicle Maintenance and Repair | Culture/Recreation | 19,539 | 0.289 * |
| Seasoned Advice (Cooking) | Life/Arts | 21,665 | 0.2274 * |
| Chemistry | Science | 26,217 | 0.2226 * |
| Geographic Information Systems (GIS) | Technology | 40,147 | 0.2188 * |
| TeX–LaTeX | Technology | 43,220 | 0.2125 * |
| English Language Learners | Culture/Recreation | 34,563 | 0.2106 * |
| Arqade (Video games) | Culture/Recreation | 28,379 | 0.2104 * |
| Science Fiction and Fantasy | Life/Arts | 36,634 | 0.2067 * |
| Electrical Engineering | Technology | 37,276 | 0.1948 * |
| Salesforce | Technology | 36,227 | 0.1934 * |
| Home Improvement | Life/Arts | 29,771 | 0.1882 * |
| Graphic Design | Life/Arts | 20,605 | 0.1855 * |
| Web Applications | Technology | 23,700 | 0.183 * |
| Android Enthusiasts | Technology | 43,230 | 0.1786 * |
| Cross-Validated (Statistics) | Science | 40,823 | 0.176 * |
| Physics | Science | 39,885 | 0.1738 * |
| Movies and TV | Life/Arts | 20,249 | 0.1696 * |
| Code Review | Technology | 36,243 | 0.1634 * |
| WordPress Development | Technology | 32,729 | 0.1627 * |
| Unix and Linux | Technology | 42,060 | 0.1551 * |
| Travel | Culture/Recreation | 29,461 | 0.1541 * |
| Software Engineering | Technology | 32,603 | 0.141 * |
| Database Administrators | Technology | 35,419 | 0.1394 * |
| English Language and Usage | Culture/Recreation | 38,419 | 0.1388 * |
| Mathematics | Science | 46,034 | 0.1257 * |
| Information Security | Technology | 32,574 | 0.1171 * |
| Role-Playing Games | Culture/Recreation | 30,778 | 0.1158 * |
| Ask Ubuntu | Technology | 44,707 | 0.1122 * |
| Ask Different | Technology | 38,594 | 0.1082 * |
| Super User | Technology | 43,343 | 0.1074 * |
| Server Fault | Technology | 44,145 | 0.0948 * |
| Stack Overflow | Technology | 48,109 | 0.0925 * |

| Site | Sample Size | Average Answer Score | Standard Deviation |
|---|---|---|---|
| Android Enthusiasts | 374 | 1.68 | 1.63 |
| Arqade (Video games) | 1109 | 3.39 | 2.52 |
| Ask Different | 816 | 2.69 | 3.08 |
| Ask Ubuntu | 2966 | 3.25 | 5.71 |
| Chemistry | 205 | 3.35 | 2.86 |
| Code Review | 542 | 3.07 | 1.51 |
| Cross-Validated (Statistics) | 952 | 3.08 | 3.69 |
| Database Administrators | 488 | 2.32 | 2.98 |
| Electrical Engineering | 836 | 2.11 | 1.60 |
| English Language and Usage | 1340 | 3.09 | 2.81 |
| English Language Learners | 610 | 2.40 | 2.29 |
| Geographic Information Systems (GIS) | 822 | 2.20 | 1.45 |
| Graphic Design | 250 | 2.54 | 2.31 |
| Home Improvement | 316 | 2.35 | 1.89 |
| Information Security | 631 | 4.06 | 3.97 |
| Mathematics | 4791 | 1.79 | 1.95 |
| Motor Vehicle Maintenance and Repair | 169 | 2.41 | 1.83 |
| Movies and TV | 169 | 8.28 | 5.24 |
| Physics | 1304 | 2.33 | 2.34 |
| Role-Playing Games | 543 | 7.26 | 4.95 |
| Salesforce | 696 | 1.39 | 1.36 |
| Science Fiction and Fantasy | 596 | 9.05 | 6.73 |
| Seasoned Advice (Cooking) | 308 | 3.73 | 2.67 |
| Server Fault | 2846 | 2.14 | 2.57 |
| Software Engineering | 947 | 5.17 | 5.31 |
| Stack Overflow | 48,373 | 2.6 | 6.81 |
| Super User | 4056 | 2.98 | 4.39 |
| TeX–LaTeX | 607 | 4.72 | 5.93 |
| Travel | 273 | 6.27 | 4.29 |
| Unix and Linux | 1472 | 4.13 | 6.43 |
| Web Applications | 223 | 2.88 | 2.27 |
| WordPress Development | 888 | 1.35 | 1.28 |

| Site | Estimate | Error | | t-Value |
|---|---|---|---|---|
| TeX–LaTeX | 0.0160 | 0.0010 | 0.384 | 16.0 *** |
| Cross-Validated (Statistics) | 0.0139 | 0.0008 | 0.319 | 17.2 *** |
| Motor Vehicle Maintenance and Repair | 0.0156 | 0.0018 | 0.296 | 8.7 *** |
| Database Administrators | 0.0135 | 0.0014 | 0.264 | 9.9 *** |
| Graphic Design | 0.0130 | 0.0016 | 0.257 | 8.0 *** |
| Unix and Linux | 0.0171 | 0.0011 | 0.257 | 16.0 *** |
| Android Enthusiasts | 0.0144 | 0.0018 | 0.222 | 8.0 *** |
| Mathematics | 0.0091 | 0.0004 | 0.205 | 25.7 *** |
| Chemistry | 0.0099 | 0.0016 | 0.204 | 6.2 *** |
| Web Applications | 0.0117 | 0.0015 | 0.202 | 7.8 *** |
| Electrical Engineering | 0.0087 | 0.0007 | 0.192 | 12.5 *** |
| WordPress Development | 0.0115 | 0.0009 | 0.180 | 12.6 *** |
| Salesforce | 0.0120 | 0.0011 | 0.166 | 10.5 *** |
| Stack Overflow | 0.0132 | 0.0003 | 0.153 | 51.3 *** |
| Arqade (Video games) | 0.0085 | 0.0006 | 0.150 | 13.6 *** |
| Super User | 0.0128 | 0.0006 | 0.149 | 19.9 *** |
| Ask Ubuntu | 0.0136 | 0.0009 | 0.140 | 15.2 *** |
| English Language Learners | 0.0106 | 0.0012 | 0.140 | 8.6 *** |
| Physics | 0.0086 | 0.0007 | 0.139 | 11.6 *** |
| English Language and Usage | 0.0092 | 0.0008 | 0.105 | 11.4 *** |
| Geographic Information Systems (GIS) | 0.0061 | 0.0007 | 0.105 | 9.0 *** |
| Server Fault | 0.0085 | 0.0006 | 0.100 | 14.2 *** |
| Ask Different | 0.0080 | 0.0012 | 0.086 | 6.6 *** |
| Software Engineering | 0.0089 | 0.0012 | 0.080 | 7.6 *** |
| Science Fiction and Fantasy | 0.0065 | 0.0009 | 0.078 | 7.0 *** |
| Code Review | 0.0038 | 0.0006 | 0.077 | 6.2 *** |
| Information Security | 0.0077 | 0.0014 | 0.064 | 5.5 *** |
| Home Improvement | 0.0047 | 0.0012 | 0.058 | 3.8 *** |
| Movies and TV | 0.0059 | 0.0019 | 0.058 | 3.2 *** |
| Seasoned Advice (Cooking) | 0.0035 | 0.0011 | 0.036 | 3.1 *** |
| Travel | 0.0043 | 0.0017 | 0.026 | 2.5 * |
| Role-Playing Games | −0.0005 | 0.0009 | 0.001 | −0.6 |
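The starred values in the final column of the regression table above are consistent with a t-statistic, that is, the estimate divided by its standard error. The sketch below checks this for four rows copied from the table. Because the published figures are rounded to four decimal places, it tests whether the reported value falls inside the range of ratios compatible with that rounding; the helper names and the tolerance are assumptions made for this check, not part of the original analysis.

```python
HALF_UNIT = 0.00005  # half of the last printed digit (four decimal places)

# (site, estimate, standard error, starred value from the final column)
ROWS = [
    ("TeX–LaTeX", 0.0160, 0.0010, 16.0),
    ("Unix and Linux", 0.0171, 0.0011, 16.0),
    ("Stack Overflow", 0.0132, 0.0003, 51.3),
    ("Role-Playing Games", -0.0005, 0.0009, -0.6),
]


def t_range(estimate, std_error):
    """Range of estimate / std_error ratios compatible with the rounding."""
    candidates = [
        e / s
        for e in (estimate - HALF_UNIT, estimate + HALF_UNIT)
        for s in (std_error - HALF_UNIT, std_error + HALF_UNIT)
    ]
    return min(candidates), max(candidates)


def consistent(estimate, std_error, reported_t, tolerance=0.05):
    """True if reported_t could be estimate / std_error before rounding."""
    low, high = t_range(estimate, std_error)
    return low - tolerance <= reported_t <= high + tolerance


for site, estimate, std_error, reported_t in ROWS:
    low, high = t_range(estimate, std_error)
    status = "ok" if consistent(estimate, std_error, reported_t) else "mismatch"
    print(f"{site}: reported {reported_t}, plausible {low:.1f} to {high:.1f} ({status})")
```

The wide plausible range for Stack Overflow reflects how much a standard error printed as 0.0003 can vary before rounding.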

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vargo, A.; Tag, B.; Blakely, C.; Kise, K. Poverty Traps in Online Knowledge-Based Peer-Production Communities. Informatics 2023, 10, 61. https://doi.org/10.3390/informatics10030061
