Can AI Technologies Support Clinical Supervision? Assessing the Potential of ChatGPT

Abstract

1. Introduction

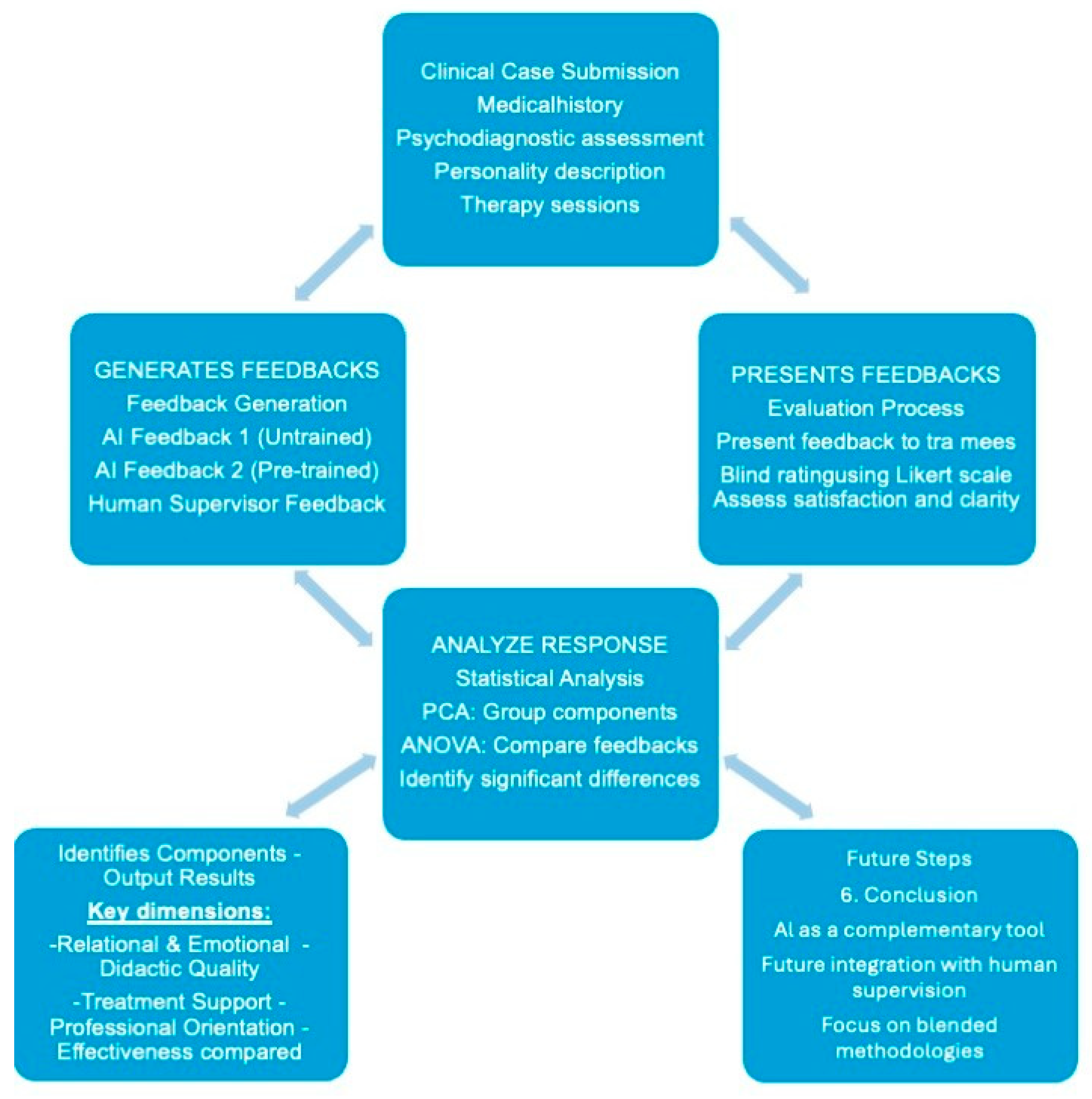

2. Aims

3. Methodology

4. Results

- Relational and emotional dimension: empathic approach, usefulness of reflection, confidence support, emotional impact (on the evaluator).

- Didactic and technical quality: clarity, relevance, thoroughness, analysis of techniques.

- Treatment support and development: how helpful the feedback is to the treatment; whether it offers practical suggestions to the therapist; how much it highlights areas for improvement; how far it reads as communication between peers.

- Professional orientation and adaptability: how deontologically oriented the feedback is; how much the feedback helps the contract; how well the feedback fits the therapist’s professional level; how helpful the feedback is to the therapist’s professional development.

The individual component scores were obtained by summing the Likert scale values, each weighted by its coefficient. The PCA explains 68.179% of the variance.
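
The weighted sum described above can be sketched in a few lines. This is only an illustration: the exact coefficients used by the authors are not given in this section, so the weights below simply reuse the four C1 loadings from the PCA table as stand-ins, and the item keys, the function name `component_score`, and the example ratings are all hypothetical. Scores computed this way are not expected to reproduce the reported component averages.

```python
# ASSUMPTION: weights reuse the C1 loadings shown in the PCA table;
# the authors' actual scoring coefficients may differ.
C1_WEIGHTS = {
    "empathic_approach": 0.812,
    "self_reflection": 0.633,
    "confidence": 0.741,
    "emotional_impact": 0.776,
}


def component_score(ratings, weights):
    """Sum 1-4 Likert ratings, each multiplied by its item coefficient."""
    total = 0.0
    for item, weight in weights.items():
        if item not in ratings:
            raise KeyError(f"missing rating for item: {item}")
        rating = ratings[item]
        if not 1 <= rating <= 4:
            raise ValueError(f"rating for {item} must be between 1 and 4")
        total += weight * rating
    return total


# Hypothetical ratings from a single evaluator for a single feedback text.
example_ratings = {
    "empathic_approach": 3,
    "self_reflection": 4,
    "confidence": 2,
    "emotional_impact": 3,
}
score = component_score(example_ratings, C1_WEIGHTS)
print(f"C1 score: {score:.3f}")
```

Keeping the weights in a dictionary keyed by item makes it easy to swap in the real coefficients for each of the four components once they are known.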

5. Discussions

6. Conclusions

7. Limitations and Future Developments

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Items | Not at All/Poor | A Little/Sufficient | Quite/Good | Very Good/Excellent |
|---|---|---|---|---|
| Did you find the feedback clear? | 1 | 2 | 3 | 4 |
| Is this feedback relevant to the clinical case presented? | 1 | 2 | 3 | 4 |
| Is this feedback comprehensive? | 1 | 2 | 3 | 4 |
| Did this feedback help the supervisee define the therapeutic contract with the patient? | 1 | 2 | 3 | 4 |
| Does this feedback provide useful insights for the patient’s treatment? | 1 | 2 | 3 | 4 |
| Does this feedback take into account the supervisee’s professional level? | 1 | 2 | 3 | 4 |
| Does this feedback contribute to the professional development of the therapist? | 1 | 2 | 3 | 4 |
| Did this feedback adequately address the ethical and deontological aspects of the clinical case? | 1 | 2 | 3 | 4 |
| Does this feedback provide practical suggestions useful for the therapist? | 1 | 2 | 3 | 4 |
| Does this feedback offer an analysis of the techniques used in the sessions? | 1 | 2 | 3 | 4 |
| Does this feedback constructively highlight any areas for improvement? | 1 | 2 | 3 | 4 |
| Did reading this feedback have an emotional impact on you? | 1 | 2 | 3 | 4 |
| Do you consider this feedback to be characterized by an empathetic approach? | 1 | 2 | 3 | 4 |
| Does this feedback come across as a collegial communication between peers? | 1 | 2 | 3 | 4 |
| Did this feedback help strengthen the supervisee’s capacity for self-reflection? | 1 | 2 | 3 | 4 |
| Has this feedback improved the supervisee’s confidence in clinical case management? | 1 | 2 | 3 | 4 |

| Item | C1: Relational and Emotional Dimension | C2: Didactic and Technical Quality | C3: Treatment Support and Development | C4: Professional Orientation and Adaptability |
|---|---|---|---|---|
| Empathic approach | 0.812 | | | |
| It helps self-reflection | 0.633 | | | |
| It helps confidence | 0.741 | | | |
| Emotional impact | 0.776 | | | |
| Clarity | | 0.846 | | |
| Relevance | | 0.544 | | |
| Completeness | | 0.733 | | |
| Analysis of techniques | | 0.637 | | |
| Treatment aids | | | 0.489 | |
| Practical suggestions to the therapist | | | 0.82 | |
| It highlights areas for improvement | | | 0.806 | |
| Peer-to-peer communication | | | 0.512 | |
| Deontologically oriented | | | | 0.578 |
| It helps contract | | | | 0.667 |
| Appropriate to professional level | | | | 0.772 |
| Useful for professional development | | | | 0.683 |

Extraction method: principal component analysis. Rotation method: Varimax with Kaiser normalization; the rotation converged in 7 iterations. KMO measure of sampling adequacy = 0.860.

| Component | Feedback | Average | Standard Deviation | 95% CI Lower Limit | 95% CI Upper Limit | F | p |
|---|---|---|---|---|---|---|---|
| C1: Relational and emotional dimension | fb1 | 10.395 | 2.773 | 9.250 | 11.540 | 5.733 | 0.005 |
| | fb2 | 13.011 ** | 2.255 | 12.058 | 13.962 | | |
| | | 2.616 (A.D.) | 0.779 (S.E.) | 1.061 | 4.170 | | 0.001 |
| | fb3 | 11.982 | 3.114 | 10.601 | 13.363 | | |
| C2: Didactic and Technical Quality | fb1 | 12.502 | 2.370 | 11.524 | 13.481 | 1.287 | 0.283 |
| | fb2 | 13.625 | 2.327 | 12.642 | 14.607 | | |
| | fb3 | 12.849 | 2.802 | 11.606 | 14.091 | | |
| C3: Treatment support and development | fb1 | 11.281 | 2.059 | 10.431 | 12.131 | 0.269 | 0.765 |
| | fb2 | 10.966 | 2.153 | 10.057 | 11.875 | | |
| | fb3 | 11.450 | 2.631 | 10.283 | 12.616 | | |
| C4: Professional Orientation and Adaptability | fb1 | 10.423 | 2.080 | 9.564 | 11.282 | 3.385 | 0.04 |
| | fb2 | 11.911 * | 1.961 | 11.082 | 12.739 | | |
| | | 1.488 (A.D.) | 0.648 (S.E.) | 0.194 | 2.782 | | 0.025 |
| | fb3 | 10.438 | 2.74 | 9.223 | 11.652 | | |
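
As a rough consistency check on the table above, an F statistic can be recomputed from group averages and standard deviations alone. The sketch below assumes a one-way ANOVA across fb1, fb2 and fb3, which this section does not state explicitly. The group sizes (25, 24 and 22 evaluators) are also an assumption: they are not reported here and were picked only because they are consistent with the widths of the reported confidence intervals. The function name `one_way_anova_f` is hypothetical.

```python
from math import fsum


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) from per-group (n, mean, sd) tuples."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = fsum(n * mean for n, mean, _ in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = fsum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-group sum of squares, rebuilt from each sample standard deviation.
    ss_within = fsum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Averages and standard deviations are the C1 rows of the table above.
# ASSUMPTION: the group sizes 25, 24, 22 are not given in this section.
c1_groups = [
    (25, 10.395, 2.773),  # fb1
    (24, 13.011, 2.255),  # fb2
    (22, 11.982, 3.114),  # fb3
]
f_stat, df_b, df_w = one_way_anova_f(c1_groups)
print(f"F({df_b}, {df_w}) = {f_stat:.3f}")
```

Under these assumptions the result lands close to the reported F of 5.733 for C1; small differences are expected because the inputs are rounded summary values.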

| Item | Feedback | Average | Standard Deviation | 95% CI LL | 95% CI UL | F | p |
|---|---|---|---|---|---|---|---|
| Clarity | fb1 | 3.08 | 0.64 | 2.82 | 3.34 | 2.42 | 0.097 |
| | fb2 | 3.33 | 0.702 | 3.04 | 3.63 | | |
| | | 0.470 (A.D.) | 0.214 (S.E.) | 0.04 | 0.90 | | 0.032 |
| | fb3 | 2.86 | 0.834 | 2.49 | 3.23 | | |
| Relevance | fb1 | 3.08 | 0.702 | 2.79 | 3.37 | 0.887 | 0.417 |
| | fb2 | 3.29 | 0.69 | 3 | 3.58 | | |
| | fb3 | 3.32 | 0.646 | 3.03 | 3.6 | | |
| Completeness | fb1 | 2.88 | 0.781 | 2.56 | 3.2 | 0.914 | 0.406 |
| | fb2 | 3.13 | 0.612 | 2.87 | 3.38 | | |
| | fb3 | 2.86 | 0.834 | 2.49 | 3.23 | | |
| Empathic approach | fb1 | 2.28 | 0.843 | 1.93 | 2.63 | 17.408 | <0.001 |
| | fb2 | 3.33 * | 0.637 | 3.06 | 3.6 | | |
| | | 1.053 (A.D.) | 0.239 (S.E.) | | | | 0.000 |
| | | 0.697 (A.D.) | 0.247 (S.E.) | 0.20 | 1.19 | | 0.006 |
| | fb3 | 2.64 | 1.002 | 2.19 | 3.08 | | |
| Deontologically oriented | fb1 | 2.68 | 0.852 | 2.33 | 3.03 | 0.212 | 0.647 |
| | fb2 | 2.63 | 0.824 | 2.28 | 2.97 | | |
| | fb3 | 2.36 | 1.002 | 1.92 | 2.81 | | |
| It helps the contract | fb1 | 2.28 | 0.678 | 2 | 2.56 | 7.062 | 0.01 |
| | fb2 | 2.88 * | 0.797 | 2.54 | 3.21 | | |
| | | 0.595 (A.D.) | 0.227 (S.E.) | 0.14 | 1.05 | | 0.011 |
| | fb3 | 2.41 | 0.908 | 2.01 | 2.81 | | |
| It helps the treatment | fb1 | 2.88 | 0.6 | 2.63 | 3.13 | 0.067 | 0.797 |
| | fb2 | 2.96 | 0.751 | 2.64 | 3.28 | | |
| | fb3 | 3.14 | 0.941 | 2.72 | 3.55 | | |
| Analysis of techniques | fb1 | 2.84 | 0.8 | 2.51 | 3.17 | 0.164 | 0.687 |
| | fb2 | 2.75 | 0.737 | 2.44 | 3.06 | | |
| | fb3 | 2.82 | 0.795 | 2.47 | 3.17 | | |
| Practical suggestions to the therapist | fb1 | 2.96 | 0.841 | 2.61 | 3.31 | 1.465 | 0.23 |
| | fb2 | 2.83 | 0.761 | 2.51 | 3.15 | | |
| | fb3 | 3.18 | 0.733 | 2.86 | 3.51 | | |
| Suitable for professional level | fb1 | 2.32 | 0.852 | 1.97 | 2.67 | 13.325 | 0.001 |
| | fb2 | 3.17 * | 0.868 | 2.8 | 3.53 | | |
| | | 0.847 (A.D.) | 0.250 (S.E.) | 0.35 | 1.35 | | 0.001 |
| | | 0.758 (A.D.) | 0.258 (S.E.) | 0.25 | 1.27 | | 0.005 |
| | fb3 | 2.41 | 0.908 | 2.01 | 2.81 | | |
| Useful for professional development | fb1 | 3 | 0.645 | 2.73 | 3.27 | 2.241 | 0.139 |
| | fb2 | 3.29 | 0.751 | 2.97 | 3.61 | | |
| | fb3 | 3 | 0.926 | 2.59 | 3.41 | | |
| It highlights areas for improvement | fb1 | 3.28 | 0.678 | 3 | 3.56 | 5.634 | 0.02 |
| | fb2 | 2.88 | 0.741 | 2.56 | 3.19 | | |
| | fb3 | 3.32 * | 0.716 | 3 | 3.64 | | |
| | | 0.443 (A.D.) | 0.210 (S.E.) | 0.02 | 0.86 | | 0.04 |
| It helps self-reflection | fb1 | 2.72 | 0.614 | 2.47 | 2.97 | 1.409 | 0.239 |
| | fb2 | 3.17 | 0.702 | 2.87 | 3.46 | | |
| | fb3 | 3.18 | 0.853 | 2.8 | 3.56 | | |
| It helps confidence | fb1 | 2.44 | 0.821 | 2.1 | 2.78 | 7.648 | 0.007 |
| | fb2 | 3.17 * | 0.637 | 2.9 | 3.44 | | |
| | | 0.727 (A.D.) | 0.221 (S.E.) | 0.29 | 1.17 | | 0.002 |
| | fb3 | 2.82 | 0.853 | 2.44 | 3.2 | | |
| Emotional impact | fb1 | 1.88 | 0.833 | 1.54 | 2.22 | 6.378 | 0.014 |
| | fb2 | 2.96 ** | 0.908 | 2.57 | 3.34 | | |
| | | 1.078 (A.D.) | 0.264 (S.E.) | 0.55 | 1.61 | | 0.000 |
| | fb3 | 2.86 | 1.037 | 2.4 | 3.32 | | |
| Peer communication | fb1 | 2.52 | 0.77 | 2.2 | 2.84 | 0.523 | 0.472 |
| | fb2 | 2.38 | 0.875 | 2.01 | 2.74 | | |
| | fb3 | 2.55 | 0.963 | 2.12 | 2.97 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cioffi, V.; Ragozzino, O.; Mosca, L.L.; Moretto, E.; Tortora, E.; Acocella, A.; Montanari, C.; Ferrara, A.; Crispino, S.; Gigante, E.; et al. Can AI Technologies Support Clinical Supervision? Assessing the Potential of ChatGPT. Informatics 2025, 12, 29. https://doi.org/10.3390/informatics12010029


APA Style: Cioffi, V., Ragozzino, O., Mosca, L. L., Moretto, E., Tortora, E., Acocella, A., Montanari, C., Ferrara, A., Crispino, S., Gigante, E., Lommatzsch, A., Pizzimenti, M., Temporin, E., Barlacchi, V., Billi, C., Salonia, G., & Sperandeo, R. (2025). Can AI Technologies Support Clinical Supervision? Assessing the Potential of ChatGPT. Informatics, 12(1), 29. https://doi.org/10.3390/informatics12010029