Human-Centred Design Meets AI-Driven Algorithms: Comparative Analysis of Political Campaign Branding in the Harris–Trump Presidential Campaigns

Abstract

1. Introduction

RQ: How can integrating AI-driven predictive models with human creative insights improve political campaign design by enhancing viewer attention, emotional engagement, and information retention?

1.1. Background and Hypotheses

1.1.1. A Review of Relevant Literature

1.1.2. Theoretical Framework

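The framework is expressed as a multiple linear regression model:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n + \epsilon$$

where:

- $Y$ represents the dependent variable (e.g., attitudes towards AI usage).

- $\beta_0$ is the intercept.

- $\beta_1, \beta_2, \dots, \beta_n$ are the coefficients for each independent variable (e.g., perceived usefulness, AI mindset).

- $X_1, X_2, \dots, X_n$ are the independent variables.

- $\epsilon$ is the error term.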

- Predictors of AI usage: In prior technology acceptance research on AI that informs this study, perceived usefulness (β = 0.34) and a growth-oriented AI mindset (β = 0.28) emerged as significant predictors of attitudes towards AI usage [51].

- Perceived ease of use: The extent to which voters find AI-generated campaign materials easy to understand and engage with. On the production side, the ease with which campaign designers can integrate AI tools into their strategies affects their willingness to adopt these technologies; simplified interfaces and user-friendly AI tools contribute to higher acceptance [53,54].

- Behavioural intention: Voters’ likelihood of engaging with, and being influenced by, AI-generated campaign materials. The intention to use AI is shaped by perceived usefulness, perceived ease of use, and social influence, and a positive behavioural intention towards AI adoption can lead to more widespread use in campaign design [55].
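To show how the coefficients of the regression model whose terms are listed above (Y, β0 … βn, X1 … Xn, ϵ) can be estimated, the following is a minimal ordinary-least-squares sketch in standard-library Python. It is not the estimation procedure used in this study or in [51]: the function name `ols_fit`, the respondent scores and the intercept of 1.0 are hypothetical, and the slopes 0.34 and 0.28 are borrowed from [51] only to generate noise-free data that the fit should recover.

```python
from typing import List, Sequence


def ols_fit(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Estimate [b0, b1, ..., bn] for y = b0 + b1*x1 + ... + bn*xn + e.

    Solves the normal equations (A^T A) b = A^T y, where A is X with a
    leading column of ones so that b0 is the intercept.
    """
    A = [[1.0] + [float(v) for v in row] for row in X]
    n_obs, k = len(A), len(A[0])
    # Augmented matrix [A^T A | A^T y].
    M = [
        [sum(A[r][i] * A[r][j] for r in range(n_obs)) for j in range(k)]
        + [sum(A[r][i] * y[r] for r in range(n_obs))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear; the system is singular")
        M[col], M[pivot] = M[pivot], M[col]
        scale = M[col][col]
        M[col] = [v / scale for v in M[col]]
        for r in range(k):
            if r != col:
                factor = M[r][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    return [M[i][k] for i in range(k)]


if __name__ == "__main__":
    # Hypothetical respondents: [perceived usefulness, AI mindset] scores.
    X = [[1, 2], [2, 1], [3, 5], [4, 3], [5, 4]]
    # Noise-free outcome from an assumed intercept (1.0) and the slopes
    # reported in [51], so the fit should recover [1.0, 0.34, 0.28].
    y = [1.0 + 0.34 * x1 + 0.28 * x2 for x1, x2 in X]
    print(ols_fit(X, y))
```

In practice a statistics package (for example statsmodels' `OLS`) would also report standard errors and p-values; solving the normal equations directly is shown here only because it makes the link between the equation and the estimates explicit.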

2. Research Design

2.1. Novelty of the Approach

2.1.1. AI-Powered Eye-Tracking Software

2.1.2. CoPilot: A Neuroscience-Based AI Marketing Assistant

3. Materials and Methods

Statistical Analysis: ANOVA and Bonferroni Post-Hoc Test Results
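The two steps named in this heading can be sketched as follows, assuming a between-groups design in which each flyer design forms one group: compute the one-way ANOVA F statistic, then Bonferroni-adjust the p-values of the pairwise follow-up comparisons. The function names and all numbers are hypothetical and are not the study's data. Because the sketch uses only the standard library, it stops at F and its degrees of freedom; the omnibus p-value would come from the F distribution (e.g. `scipy.stats.f.sf(F, df_between, df_within)`), and the raw pairwise p-values from tests such as `scipy.stats.ttest_ind`.

```python
from itertools import combinations
from statistics import mean
from typing import Dict, Sequence, Tuple


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)
    # Between-groups and within-groups sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def bonferroni(raw_p: Dict[Tuple[str, str], float]) -> Dict[Tuple[str, str], float]:
    """Bonferroni adjustment: multiply each p-value by the number of
    comparisons m and cap the result at 1."""
    m = len(raw_p)
    return {pair: min(1.0, p * m) for pair, p in raw_p.items()}


if __name__ == "__main__":
    # Illustrative scores for three designs (not study data).
    scores = {
        "Design 1": [1.0, 2.0, 3.0],
        "Design 2": [4.0, 5.0, 6.0],
        "Design 3": [7.0, 8.0, 9.0],
    }
    f_stat, df_b, df_w = one_way_anova_f(list(scores.values()))
    print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
    # Hypothetical raw p-values, one per pair of designs.
    pairs = list(combinations(scores, 2))
    raw = dict(zip(pairs, [0.001, 0.02, 0.04]))
    print(bonferroni(raw))
```

Bonferroni is the simplest and most conservative family-wise correction; with only a handful of designs the loss of power is usually modest.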

4. Results

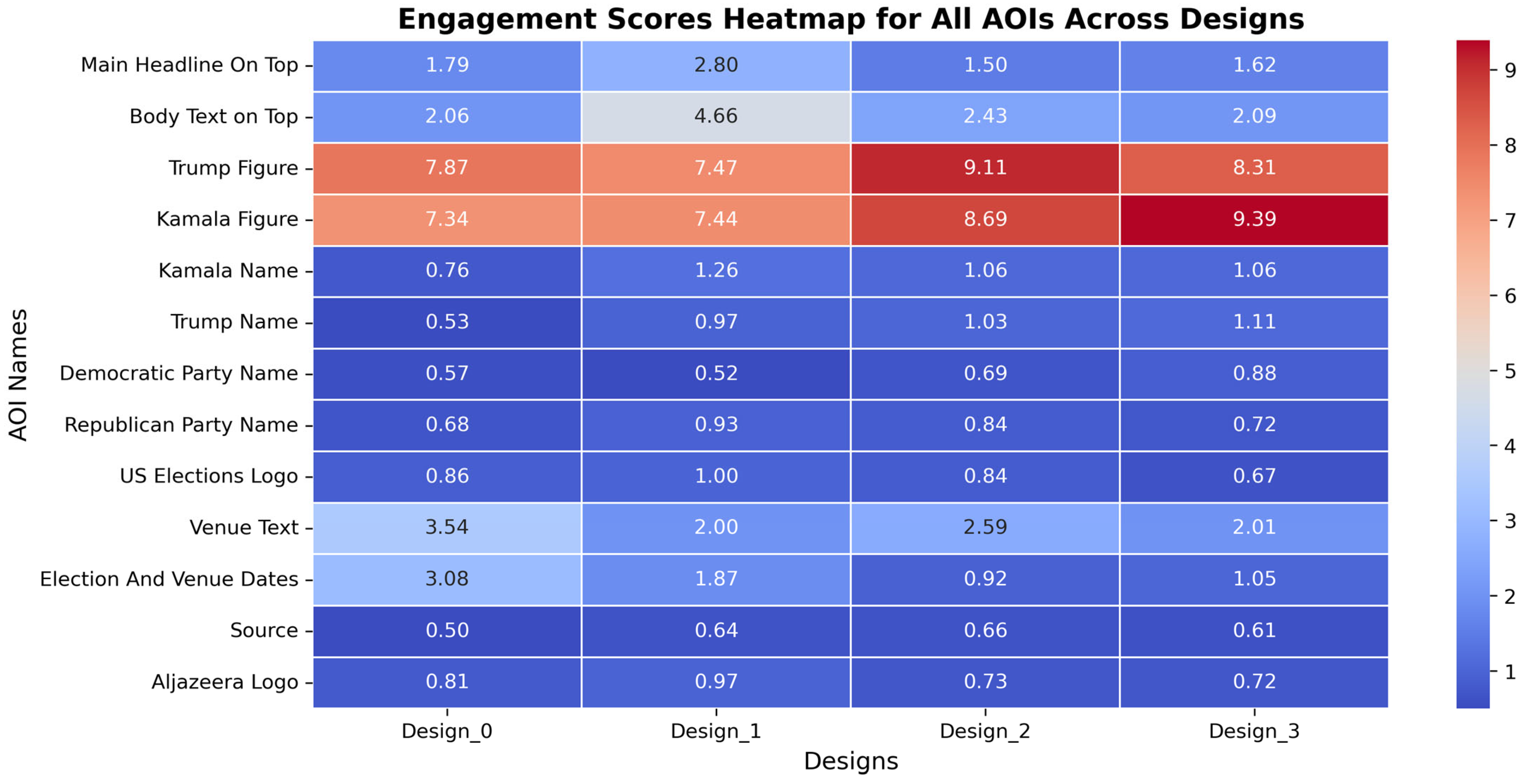

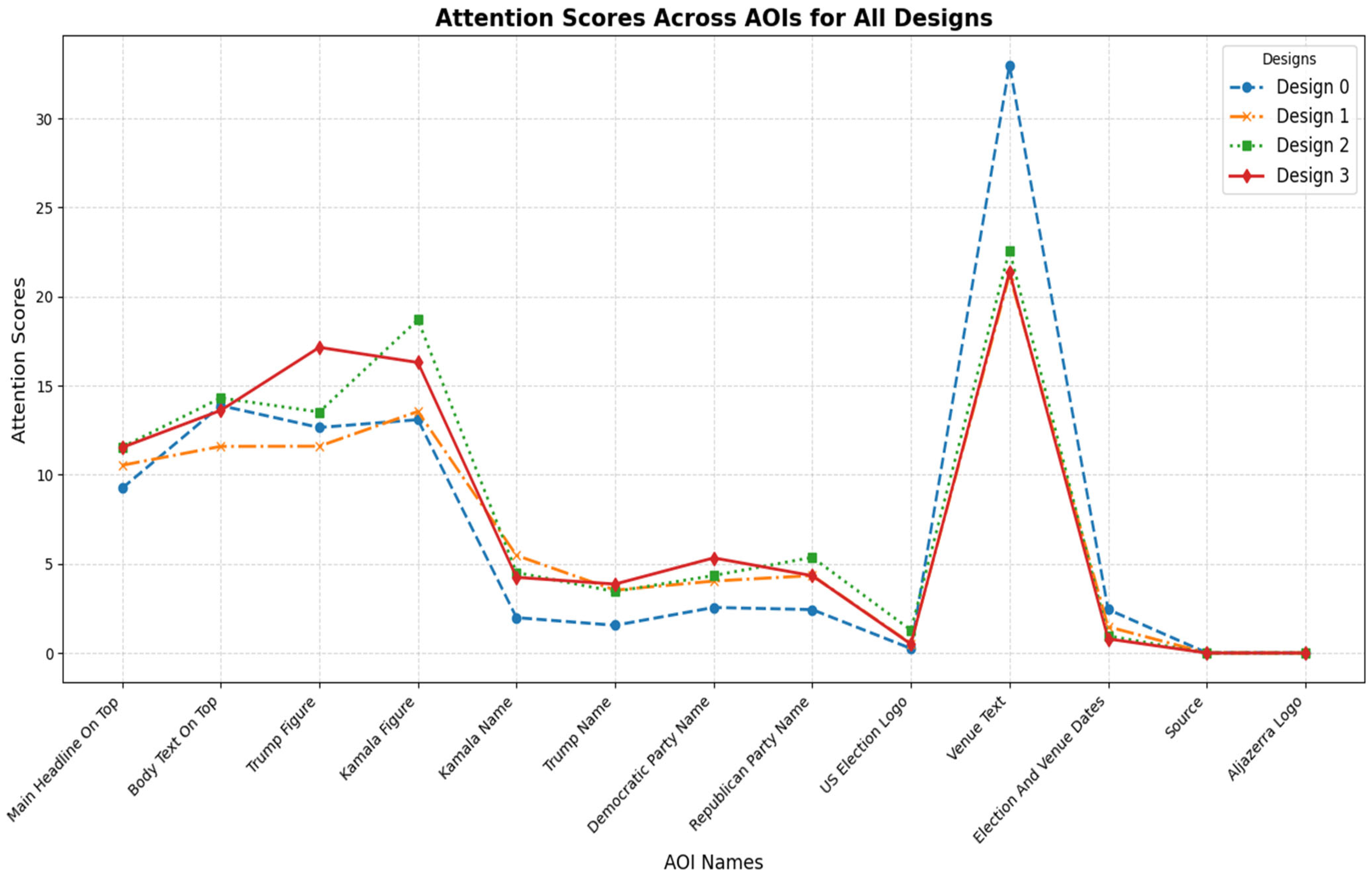

4.1. Comparative Analysis of Attention Scores Across Design Variations

4.1.1. Text-Based AOIs

4.1.2. Visual AOIs

4.1.3. Peripheral AOIs

4.1.4. High-Impact AOIs

4.1.5. Overall Trends

4.1.6. Implications

4.2. Weighted Performance Analysis: Comparing AI-Enhanced and Human-Influenced Designs

4.3. Comparison of Clarity Scores Across Four Flyer Designs Using Kruskal–Wallis H-Test

- AOI 1 (Main Headline on Top): 7.03 × 10⁰;

- AOI 2 (Body Text on Top): 6.13 × 10⁰;

- AOI 5 (Kamala Name): 2.56 × 10⁰.

- AOI 4 (Kamala Figure): 1.077 × 10¹ (highest among all designs);

- AOI 5 (Kamala Name): 2.66 × 10⁰.

- AOI 10 (Venue Text): 1.193 × 10¹ (the highest clarity score for this AOI).

- AOI 3 (Trump Figure): 1.015 × 10¹;

- AOI 4 (Kamala Figure): 9.67 × 10⁰.
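The Kruskal–Wallis H-test named in this subsection's heading compares groups on ranks rather than raw scores, which suits clarity scores that may not be normally distributed. The sketch below computes H with the usual correction for ties, assuming each flyer design contributes one group of clarity scores; `kruskal_wallis_h`, `average_ranks` and the example scores are hypothetical, not the study's data or code. H would then be referred to a chi-squared distribution with k − 1 degrees of freedom (e.g. `scipy.stats.chi2.sf(H, k - 1)`), and `scipy.stats.kruskal` should return the same statistic.

```python
from collections import Counter
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..N (ascending); tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # 0-based positions -> 1-based ranks
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Dict[str, Sequence[float]]) -> float:
    """Kruskal-Wallis H with the standard tie correction.

    Assumes not every observation is identical (the correction would be 0).
    """
    labels = [name for name, g in groups.items() for _ in g]
    values = [v for g in groups.values() for v in g]
    n = len(values)
    rank_sums = {name: 0.0 for name in groups}
    for name, r in zip(labels, average_ranks(values)):
        rank_sums[name] += r
    h = 12.0 / (n * (n + 1)) * sum(
        rank_sums[name] ** 2 / len(g) for name, g in groups.items()
    ) - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (N^3 - N).
    ties = sum(t ** 3 - t for t in Counter(values).values())
    return h / (1 - ties / (n ** 3 - n))


if __name__ == "__main__":
    # Hypothetical clarity scores for four flyer designs (illustrative only).
    clarity = {
        "Design 1": [6.8, 7.1, 5.9, 6.4],
        "Design 2": [8.2, 7.9, 8.8, 7.5],
        "Design 3": [6.1, 6.9, 7.3, 6.5],
        "Design 4": [7.7, 8.1, 6.6, 7.4],
    }
    print(f"H = {kruskal_wallis_h(clarity):.3f}")
```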

4.4. A Spearman Correlation Analysis of the Relationship Between Clarity and Cognitive Demand
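Spearman's ρ, named in the heading above, is the Pearson correlation computed on ranks, so it captures any monotonic relationship between clarity and cognitive demand without assuming linearity. The following is a minimal standard-library sketch, assuming paired clarity and cognitive-demand scores per AOI; the function names and example values are hypothetical. `scipy.stats.spearmanr` computes the same coefficient together with a p-value.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..N (ascending); tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired samples of length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical per-AOI scores (illustrative only, not study data).
    clarity = [7.0, 6.1, 9.5, 8.2, 5.4]
    cognitive_demand = [0.42, 0.55, 0.31, 0.30, 0.61]
    print(f"rho = {spearman_rho(clarity, cognitive_demand):.3f}")
```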

5. Discussion

- Design 1 was associated with a significantly higher attention score (8.7 × 10⁰) than Design 2 (6.2 × 10⁰) and Design 3 (7.5 × 10⁰).

- Key elements in Design 1 were associated with longer fixation times, averaging 2.3 × 10⁰ s longer than in the other designs.

- Design 1 was associated with a 3.5 × 10⁻¹ higher recall rate of key information.

- Human expertise and intuition: Design 1, created by a human designer, likely benefited from years of experience and an intuitive understanding of human perception and emotional responses, allowing for more nuanced design choices.

- Contextual understanding: Human designers may possess a better grasp of cultural, social, and political contexts, enabling the creation of more relevant and emotionally engaging content.

- Emotional intelligence: Human designers can draw on emotional intelligence to create designs that evoke specific feelings or responses, whereas AI systems may struggle to fully capture and replicate the complexity of human emotion.

- Cognitive load management: The human-designed flyer may have more effectively balanced information density and visual complexity, reducing cognitive load for viewers.

- Familiarity and trust: Viewers may respond more positively to designs that feel familiar and “human-made”, potentially leading to higher engagement with Design 1.

- Integration of AI insights: Design 1 incorporated AI-assisted eye-tracking analysis, suggesting that human designers effectively combined AI-generated insights with their creativity.

- Limitations of current AI systems: AI may still lack the ability to fully replicate human creativity, especially in areas requiring subjective judgment or cultural sensitivity.
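Reading the reported attention scores as 8.7 (Design 1), 6.2 (Design 2) and 7.5 (Design 3), the relative advantage of Design 1 works out as follows. This is simple arithmetic on the reported means; whether the differences are significant rests on the statistical tests, not on these ratios.

$$
\frac{8.7 - 6.2}{6.2} = \frac{2.5}{6.2} \approx 0.403, \qquad \frac{8.7 - 7.5}{7.5} = \frac{1.2}{7.5} = 0.16
$$

That is, Design 1's attention score is roughly 40% higher than Design 2's and 16% higher than Design 3's.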

6. Study Contribution

7. Future Recommendations

- Investigate long-term effects: Conduct longitudinal studies to assess the sustained impact of hybrid neuroscience AI–human designs on user engagement and information retention over extended periods.

- Explore diverse design contexts: Expand research to various design fields (e.g., web design, product packaging, and advertising) to determine whether the superiority of hybrid approaches is consistent across different domains. In advertising, hybrid approaches incorporating diverse and inclusive elements have yielded positive outcomes for both brands and society. This finding suggests that the integration of various diversity attributes within advertising strategies enhances their overall efficacy [137].

- Analyse the decision-making process: Examine how human designers interpret and apply neuroscience AI-generated insights, potentially leading to the development of more effective neuroscience AI–human collaboration frameworks.

- Optimise the integration of eye-tracking and AI-LLM tools with human design: Investigate methods to streamline the integration of AI neuroscience insights into human design workflows, enhancing efficiency and effectiveness.

- Compare multiple neuroscience AI technologies: Evaluate the performance of different AI technologies (e.g., computer vision and natural language processing) combined with human expertise to identify the most potent synergies.

- Assess cultural variations: Study how cultural differences may influence the effectiveness of hybrid neuroscience AI–human designs, potentially leading to culturally tailored design strategies. Deep learning models that account for cultural variations in emotional processing can provide significant insights for developing AI systems that effectively align with diverse cultural and emotional frameworks. These models have the potential to facilitate the creation of more culturally sensitive and emotionally attuned AI applications, thereby ensuring that interactions are more congruent with the emotional cues and expectations of different cultural groups [138].

- Investigate ethical implications: Explore the ethical considerations of using AI neuroscience insights in design, particularly in persuasive or marketing contexts. Public perception of ethical AI design is crucial in determining the acceptance and trust in AI systems. While ethical principles such as explainability, fairness, and privacy are generally considered equally important, preferences for these values may vary across diverse demographic groups [139].

- Refine neuroscience AI algorithms: Continuously improve AI algorithms based on successful human interpretations and applications of AI-generated insights.

- Conduct interdisciplinary research: Foster collaboration between neuroscientists, AI researchers, and design professionals to drive innovation in hybrid design approaches.


- Future research directions: Future research should consider longitudinal designs to examine causal relationships between AI-enhanced designs and viewer engagement. Such studies could track changes in engagement over time as designs are modified, providing stronger evidence for causal effects.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| OBC | Oxford Business College |
| PREDICT | AI eye-tracking software for predicting human behaviour |
| COPILOT | AI neuroscience LLM software (AI eye tracking: Predict; AI LLM: Copilot) |
| CD | cognitive demand |
| AOI | area of interest |
| LLM | large language model |
| SBCs | subtle backdrop cues: minor, often unnoticed, environmental or contextual elements in a scene or interaction that subtly influence perception, behaviour, or decision-making |
| JND-SalCAR | Just-Noticeable-Difference-based Saliency-Channel Attention Residual network: a deep-learning model that uses saliency (the prominence or attention-capturing quality of image regions) and just-noticeable differences in visual stimuli to predict how humans perceive image quality |
| AUPOD | An automated, data-driven model designed to optimise decision-making and design processes through a systematic approach |
| PYKOGNITION | An artificial intelligence-based Python library designed for cognitive task analysis and eye-tracking applications |
| UMSI | Unified Model of Saliency and Importance: a framework that integrates two key concepts in visual perception, saliency and importance |
| A/B | A/B testing: a method for comparing two variants of a variable (Version A and Version B) to determine which performs better on a specified metric or objective |

Appendix A

| Remark | Objective |
|---|---|
| Enlarge and centre the debate headline with a dynamic font to create a stronger focal point. | Improve attention distribution and enhance the clarity of the main message. |
| Replace static participant images with action-oriented debate poses. | Boost engagement through added visual energy and emotional resonance. |
| Create a compact, infographic-style element for debate details. | Reduce cognitive demand and improve the clarity of information presentation. |
| Introduce a subtle patriotic background element. | Enhance visual interest and reinforce brand identity without increasing cognitive load. |

References

- Çakar, T.; Filiz, G. Unraveling neural pathways of political engagement: Bridging neuromarketing and political science for understanding voter behaviour and political leader perception. Front. Hum. Neurosci. 2023, 17, 1293173.

- Pich, C.; Dean, D. Political branding: A sense of identity or identity crisis? An investigation of the transfer potential of the brand identity prism to the UK Conservative Party. J. Mark. Manag. 2015, 31, 1353–1378.

- Herrmann, M.; Shikano, S. Do campaign posters trigger voting based on looks? Probing an explanation for why good-looking candidates win more votes. Acta Politica 2021, 56, 416–435.

- Joo, J.; Li, W.; Steen, F.F.; Zhu, S.-C. Visual Persuasion: Inferring Communicative Intents of Images. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23 June 2014; IEEE: Piscataway, NJ, USA, 2018; pp. 216–223.

- Hughes, A.G. Visualizing inequality: How graphical emphasis shapes public opinion. Res. Politics 2015, 2, 2053168015622073.

- Lam, C.; Huang, Z.; Shen, L. Infographics and the Elaboration Likelihood Model (ELM): Differences between Visual and Textual Health Messages. J. Health Commun. 2022, 27, 737–745.

- Billard, T.J. Citizen typography and political brands in the 2016 US presidential election campaign. Mark. Theory 2018, 18, 421–431.

- Sazan, D.; Al-Smadi, O.A.; Rahman, N.A. Visual Representation of Malaysian Candidates in General Election in Selected Coalition Parties: A Visual Survey on Social Media. Theory Pract. Lang. Stud. 2024, 14, 365–375.

- Arias-Rosales, A. The perceived value of human-AI collaboration in early shape exploration: An exploratory assessment. PLoS ONE 2022, 17, e0274496.

- Maksymenko, S.; Lytvynchuk, L.; Onufriieva, L. Neuro-Psycholinguistic Study of Political Slogans in Outdoor Advertising. Psycholinguistics 2019, 26, 246–264.

- Matthes, J.; Marquart, F.; Arendt, F.; Wonneberger, A. The Selective Avoidance of Threat Appeals in Right-Wing Populist Political Ads: An Implicit Cognition Approach Using Eye-Tracking Methodology. In Advances in Advertising Research; Springer: Wiesbaden, Germany, 2016; Volume VI, pp. 135–145.

- Coronel, J.C.; Moore, R.C.; Debuys, B. Do Gender Cues from Images Supersede Partisan Cues Conveyed via Text? Eye Movements Reveal Political Stereotyping in Multimodal Information Environments. Political Commun. 2021, 38, 281–304.

- Dan, V.; Arendt, F. Visual Cues to the Hidden Agenda: Investigating the Effects of Ideology-Related Visual Subtle Backdrop Cues in Political Communication. Int. J. Press Politics 2020, 26, 22–45.

- Seo, S.; Ki, S.; Kim, M. A Novel Just-Noticeable-Difference-Based Saliency-Channel Attention Residual Network for Full-Reference Image Quality Predictions. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2602–2616.

- Lai, Q.; Khan, S.; Nie, Y.; Sun, H.; Shen, J.; Shao, L. Understanding More About Human and Machine Attention in Deep Neural Networks. IEEE Trans. Multimedia 2020, 23, 2086–2099.

- Bueno, A.; Sato, J.; Hornberger, M. Eye tracking–The overlooked method to measure cognition in neurodegeneration? Neuropsychologia 2019, 133, 107191.

- Pizzo, A.; Fosgaard, T.R.; Tyler, B.B.; Beukel, K. Information acquisition and cognitive processes during strategic decision-making: Combining a policy-capturing study with eye-tracking data. PLoS ONE 2022, 17, e0278409.

- Onwuegbusi, T.; Hermens, F.; Hogue, T. Data-driven group comparisons of eye fixations to dynamic stimuli. Q. J. Exp. Psychol. 2021, 75, 989–1003.

- Dommett, K.; Barclay, A.; Gibson, R. Just what is data-driven campaigning? A systematic review. Inf. Commun. Soc. 2023, 27, 1–22.

- Silva-Torres, J.-J.; Martínez-Martínez, L.; Cuesta-Cambra, U. Diseño de un modelo de atención visual para campañas de comunicación. El caso de la COVID-19 [Design of a visual attention model for communication campaigns: The case of COVID-19]. Prof. Inf. 2020, 29, e290627.

- Otto, L.P.; Thomas, F.; Maier, M.; Ottenstein, C. Only One Moment in Time? Investigating the Dynamic Relationship of Emotions and Attention Toward Political Information with Mobile Experience Sampling. Commun. Res. 2019, 47, 1131–1154.

- Boussalis, C.; Coan, T.G. Facing the Electorate: Computational Approaches to the Study of Nonverbal Communication and Voter Impression Formation. Political Commun. 2020, 38, 75–97.

- Dumitrescu, D. Nonverbal Communication in Politics. Am. Behav. Sci. 2016, 60, 1656–1675.

- Sophocleous, H.P.; Masouras, A.N.; Anastasiadou, S.D. The Impact of Political Marketing on Voting Behaviour of Cypriot Voters. Soc. Sci. 2024, 13, 149.

- Kristensen, J.B.; Albrechtsen, T.; Dahl-Nielsen, E.; Jensen, M.; Skovrind, M.; Bornakke, T. Parsimonious data: How a single Facebook like predicts voting behaviour in multiparty systems. PLoS ONE 2017, 12, e0184562.

- Lundberg, K.B.; Payne, B.K. Decisions among the Undecided: Implicit Attitudes Predict Future Voting Behavior of Undecided Voters. PLoS ONE 2014, 9, e85680.

- Pich, C.; Newman, B.I. Evolution of Political Branding: Typologies, Diverse Settings and Future Research. J. Political Mark. 2019, 19, 3–14.

- Gemenis, K. Artificial intelligence and voting advice applications. Front. Political Sci. 2024, 6, 1286893.

- Spenkuch, J.L.; Toniatti, D. Political Advertising and Election Results. Q. J. Econ. 2018, 133, 1981–2036.

- Walker, R.M.; Yeung, D.Y.-L.; Lee, M.J.; Lee, I.P. Assessing Information-based Policy Tools: An Eye-Tracking Laboratory Experiment on Public Information Posters. J. Comp. Policy Anal. Res. Pract. 2020, 22, 558–578.

- Otamendi, F.J.; Martín, D.L.S. The Emotional Effectiveness of Advertisement. Front. Psychol. 2020, 11, 2088.

- Townsley, J. Is it worth door-knocking? Evidence from a United Kingdom-based Get Out the Vote (GOTV) field experiment on the effect of party leaflets and canvass visits on voter turnout. Political Sci. Res. Methods 2018, 13, 21–35.

- Foos, F.; John, P. Parties are No Civic Charities: Voter Contact and the Changing Partisan Composition of the Electorate. Political Sci. Res. Methods 2018, 6, 283–298.

- Simchon, A.; Edwards, M.; Lewandowsky, S. The persuasive effects of political microtargeting in the age of generative AI. PNAS Nexus 2024, 3, pgae035.

- Kamal, R.; Kaur, M.; Kaur, J.; Malhan, S. Artificial Intelligence-Powered Political Advertising. In The Ethical Frontier of AI and Data Analysis; IGI Global: Hershey, PA, USA, 2024; pp. 100–109.

- Thapa, J. The Impact of Artificial Intelligence on Elections. Int. J. Multidiscip. Res. 2024, 6, 240217524.

- Hassan, I.M.; Mahmood, A.H. A Cognitive Semantic Study of Selected Posters Used in Trump and Biden’s 2020 Election Campaign. Al Farahidi Lit. Mag. 2022, 14, 626–642.

- Itti, L. Lessons from neuroscience. In Proceedings of the Companion Proceedings of the 2019 World Wide Web Conference, New York, NY, USA, 13–17 May 2019; p. 70.

- Muddamsetty, S.M.; Sidibé, D.; Trémeau, A.; Mériaudeau, F. Salient objects detection in dynamic scenes using colour and texture features. Multimed. Tools Appl. 2018, 77, 5461–5474.

- Chan, H.-Y.; Boksem, M.A.; Venkatraman, V.; Dietvorst, R.C.; Scholz, C.; Vo, K.; Falk, E.B.; Smidts, A. Neural Signals of Video Advertisement Liking: Insights into Psychological Processes and Their Temporal Dynamics. J. Mark. Res. 2023, 61, 891–913.

- Cabot, P.-L.H.; Dankers, V.; Abadi, D.; Fischer, A.; Shutova, E. The Pragmatics behind Politics: Modelling Metaphor, Framing and Emotion in Political Discourse. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP, Association for Computational Linguistics, Stroudsburg, PA, USA, 16–20 November 2020; pp. 4479–4488.

- Karinshak, E.; Liu, S.X.; Park, J.S.; Hancock, J.T. Working with AI to Persuade: Examining a Large Language Model’s Ability to Generate Pro-Vaccination Messages. Proc. ACM Hum.-Comput. Interact. 2023, 7, 1–29.

- Shulman, H.C.; Sweitzer, M.D.; Bullock, O.M.; Coronel, J.C.; Bond, R.M.; Poulsen, S. Predicting Vote Choice and Election Outcomes from Ballot Wording: The Role of Processing Fluency in Low Information Direct Democracy Elections. Political Commun. 2022, 39, 652–673.

- Kendall, C.; Nannicini, T.; Trebbi, F. How Do Voters Respond to Information? Evidence from a Randomized Campaign. Am. Econ. Rev. Am. Econ. Assoc. 2013, 105, 253–322. Available online: https://docs.iza.org/dp7340.pdf (accessed on 8 March 2025).

- Paetzel, F.; Lorenz, J.; Tepe, M.S. Transparency Diminishes Framing-Effects in Voting on Redistribution: Some Experimental Evidence. SSRN Electron. J. 2017, 55, 169–184.

- Moore, A.; Hong, S.; Cram, L. Trust in information, political identity and the brain: An interdisciplinary fMRI study. Philos. Trans. R. Soc. B Biol. Sci. 2021, 376, 20200140.

- Haenschen, K.; Tamul, D.J. What’s in a Font?: Ideological Perceptions of Typography. Commun. Stud. 2019, 71, 244–261.

- Beecham, R. Using position, angle and thickness to expose the shifting geographies of the 2019 UK general election. Environ. Plan. A Econ. Space 2020, 52, 833–836.

- Davis, F.D.; Granić, A. Introduction: “Once Upon a TAM”. In The Technology Acceptance Model; Springer: Cham, Switzerland, 2024; pp. 1–18.

- Or, C. Watch That Attitude! Examining the Role of Attitude in the Technology Acceptance Model through Meta-Analytic Structural Equation Modelling. Int. J. Technol. Educ. Sci. 2024, 8, 558–582.

- Ibrahim, F.; Münscher, J.-C.; Daseking, M.; Telle, N.-T. The technology acceptance model and adopter type analysis in the context of artificial intelligence. Front. Artif. Intell. 2025, 7, 1496518.

- Adebayo, A.A. Campaigning in the Age of AI: Ethical Dilemmas and Practical Solutions for The UK and US. Int. J. Soc. Sci. Hum. Res. 2024, 7, 9330–9336.

- Assaf, R.; Omar, M.; Saleh, Y.; Attar, H.; Alaqra, N.T.; Kanan, M. Assessing the Acceptance for Implementing Artificial Intelligence Technologies in the Governmental Sector. Eng. Technol. Appl. Sci. Res. 2024, 14, 18160–18170.

- Zhou, C.; Liu, X.; Yu, C.; Tao, Y.; Shao, Y. Trust in AI-augmented design: Applying structural equation modelling to AI-augmented design acceptance. Heliyon 2023, 10, e23305.

- Baroni, I.; Calegari, G.R.; Scandolari, D.; Celino, I. AI-TAM: A model to investigate user acceptance and collaborative intention in human-in-the-loop AI applications. Hum. Comput. 2022, 9, 1–21.

- Rane, N.; Choudhary, S.P.; Rane, J. Acceptance of artificial intelligence: Key factors, challenges, and implementation strategies. J. Appl. Artif. Intell. 2024, 5, 50–70.

- Susser, D.; Grimaldi, V. Measuring Automated Influence: Between Empirical Evidence and Ethical Values. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, ACM, New York, NY, USA, 30 July 2021; pp. 242–253.

- Šola, H.M.; Qureshi, F.H.; Khawaja, S. AI-Powered Eye Tracking for Bias Detection in Online Course Reviews: A Udemy Case Study. Big Data Cogn. Comput. 2024, 8, 144.

- Šola, H.M.; Qureshi, F.H.; Khawaja, S. Predicting Behaviour Patterns in Online and PDF Magazines with AI Eye-Tracking. Behav. Sci. 2024, 14, 677.

- Marques, J.A.L.; Neto, A.C.; Silva, S.C.; Bigne, E. Predicting consumer ad preferences: Leveraging a machine learning approach for EDA and FEA neurophysiological metrics. Psychol. Mark. 2024, 42, 175–192.

- Goshi, A. Large Language Models in Politics and Democracy: A Comprehensive Survey. arXiv 2024, arXiv:2412.04498. Available online: https://arxiv.org/abs/2412.04498 (accessed on 7 March 2025).

- Chang, H.C.H.; Shaman, B.; Chen, Y.C.; Zha, M.; Noh, S.; Wei, C.; Weener, T.; Magee, M. Generative Memesis: AI Mediates Political Memes in the 2024 United States Presidential Election. OSF Prepr. 2024, 1–26.

- Nalisnick, E.; Matsukawa, A.; Teh, Y.W.; Gorur, D.; Lakshminarayanan, B. Hybrid Models with Deep and Invertible Features. In Proceedings of the 36th International Conference on Machine Learning (PMLR 97), Long Beach, CA, USA, 9–15 June 2019; pp. 4723–4732. Available online: https://proceedings.mlr.press/v97/nalisnick19b.html (accessed on 1 December 2024).

- Alsharif, A.H.; Salleh, N.Z.M.; Baharun, R.; Mansor, A.A.; Ali, J.; Abbas, A.F. Neuroimaging Techniques in Advertising Research: Main Applications, Development, and Brain Regions and Processes. Sustainability 2021, 13, 6488.

- Neurons. Predict Tech Paper, Version 1.0; Neurons Inc. Aps: Copenhagen, Denmark, 2023; pp. 1–30.

- Neurons. Predict Datasheet; Neurons Inc. Aps: Copenhagen, Denmark, 2024; pp. 1–3.

- Neurons. Copilot Technical Paper; Neurons Inc. Aps: Copenhagen, Denmark, 2024; pp. 1–7.

- Osgood, B. News, US Election: Trump-Harris First Presidential Debate: What to Watch for. Al Jazeera. Available online: https://www.aljazeera.com/news/2024/9/9/trump-harris-first-presidential-debate-what-to-watch-for-on-tuesday (accessed on 10 September 2024).

- Fondevila-Gascón, J.-F.; Gutiérrez-Aragón, Ó.; Vidal-Portés, E.; Pujol-Cordero, O. Influencia del neuromarketing en la percepción de carteles publicitarios [Influence of neuromarketing on the perception of advertising posters]. Grafica 2023, 11, 133–143.

- Sasibhooshan, R.; Kumaraswamy, S.; Sasidharan, S. Image caption generation using Visual Attention Prediction and Contextual Spatial Relation Extraction. J. Big Data 2023, 10, 18.

- Huang, T.-H.; Yeh, S.-L.; Yang, Y.-H.; Liao, H.-I.; Tsai, Y.-Y.; Chang, P.-J.; Chen, H.H. Method and experiments of subliminal cueing for real-world images. Multimed. Tools Appl. 2015, 74, 10111–10135.

- Dijkstra, N.; Bosch, S.E.; van Gerven, M.A. Shared Neural Mechanisms of Visual Perception and Imagery. Trends Cogn. Sci. 2019, 23, 423–434.

- Eríşen, H.; Ersoy, M. Visual frame analysis of the UKIP leave campaigns “Turkish migrant” Brexit visuals. Mediterr. Politics 2024, 28, 1–25.

- Bera, P.; Soffer, P.; Parsons, J. Using Eye Tracking to Expose Cognitive Processes in Understanding Conceptual Models. Manag. Inf. Syst. Q. 2019, 43, 1105–1126. Available online: https://www.researchgate.net/publication/330853967_Using_Eye_Tracking_to_Expose_Cognitive_Processes_in_Understanding_Conceptual_Models (accessed on 1 December 2024).

- Lancry-Dayan, O.C.; Kupershmidt, G.; Pertzov, Y. Been there, seen that, done that: Modification of visual exploration across repeated exposures. J. Vis. 2019, 19, 2.

- Hock, F. Sie wollte immer eigene Kinder, aber: Ein Einblick ins Privatleben von Kamala Harris [She always wanted children of her own, but: A glimpse into the private life of Kamala Harris]. Watson. Available online: https://www.watson.ch/international/leben/717418662-ein-einblick-in-das-privatleben-von-kamala-harris (accessed on 1 October 2024).

- Baker, G. UK Urged to Reject Co-Operation with ‘Torture Enthusiast’ Trump. Middle East Eye. Available online: https://www.middleeasteye.net/news/uk-urged-reject-co-operating-torture-enthusiast-trump (accessed on 1 October 2024).

- Peterson, D.A. The dynamic construction of candidate image. Elect. Stud. 2018, 54, 289–296.

- Al-Burai, A.; Burnaz, S.; Girisken, Y. An analysis of voters perception of visual advertisements with respect to neuromarketing approach. Pressacademia 2018, 7, 237–258.

- Casiraghi, M.C.; Curini, L.; Cusumano, E. The colors of ideology: Chromatic isomorphism and political party logos. Party Politics 2022, 29, 463–474.

- Scicluna, P.; Kemper, F.; Siebenmorgen, R.; Wesson, R.; Blommaert, J.A.D.L.; Wolf, S. Precision: A fast python pipeline for high-contrast imaging–Application to SPHERE observations of the red supergiant VX Sagitariae. Mon. Not. R. Astron. Soc. 2020, 494, 3200–3211.

- Schober, P.; Boer, C.; Schwarte, L.A. Correlation Coefficients: Appropriate Use and Interpretation. Anesth. Analg. 2018, 126, 1763–1768.

- Stevens, J.R.; Al Masud, A.; Suyundikov, A. A comparison of multiple testing adjustment methods with block-correlation positively-dependent tests. PLoS ONE 2017, 12, e0176124.

- Aslam, M.; Albassam, M. Presenting post hoc multiple comparison tests under neutrosophic statistics. J. King Saud Univ. Sci. 2020, 32, 2728–2732.

- Goeman, J.J.; Solari, A. Comparing Three Groups. Am. Stat. 2021, 76, 168–176.

- Grusell, M.; Nord, L. Not so Intimate Instagram: Images of Swedish Political Party Leaders in the 2018 National Election Campaign. J. Political Mark. 2020, 22, 92–107.

- Lench, H.C.; Fernandez, L.; Reed, N.; Raibley, E.; Levine, L.J.; Salsedo, K. Voter emotional responses and voting behaviour in the 2020 US presidential election. Cogn. Emot. 2024, 38, 1196–1209.

- Opitz, R. An Experiment in Using Visual Attention Metrics to Think About Experience and Design Choices in Past Places. J. Archaeol. Method Theory 2017, 24, 1203–1226.

- Šola, H.M.; Qureshi, F.H.; Khawaja, S. AI Eye-Tracking Technology: A New Era in Managing Cognitive Loads for Online Learners. Educ. Sci. 2024, 14, 933.

- Boussioux, L.; Lane, J.N.; Zhang, M.; Jacimovic, V.; Lakhani, K.R. The Crowdless Future? Generative AI and Creative Problem-Solving. Organ. Sci. 2024, 35, 1589–1607.

- McGuire, J.; De Cremer, D.; Van de Cruys, T. Establishing the importance of co-creation and self-efficacy in creative collaboration with artificial intelligence. Sci. Rep. 2024, 14, 18525.

- Bansal, G.; Nawal, A.; Chamola, V.; Herencsar, N. Revolutionizing Visuals: The Role of Generative AI in Modern Image Generation. ACM Trans. Multimedia Comput. Commun. Appl. 2024, 20, 1–22.

- Das, S.; Rani, P. Revolutionizing Graphic Design: The Synergy of AI Tools and Human Creativity. ShodhKosh J. Vis. Perform. Arts 2024, 5, 372–380.

- Cheng, S.; Fan, J.; Hu, Y. Visual saliency model based on crowdsourcing eye tracking data and its application in visual design. Pers. Ubiquitous Comput. 2020, 27, 613–630.

- Che, Y.-K.; Mierendorff, K. Optimal Dynamic Allocation of Attention. Am. Econ. Rev. 2019, 109, 2993–3029.

- Murphy, D.H.; Rhodes, M.G.; Castel, A.D. The perceived importance of words in large font guides learning and selective memory. Mem. Cogn. 2024, 52, 1463–1476.

- Dobres, J.; Chahine, N.; Reimer, B.; Gould, D.; Mehler, B.; Coughlin, J.F. Utilising psychophysical techniques to investigate the effects of age, typeface design, size and display polarity on glance legibility. Ergonomics 2016, 59, 1377–1391.

- Minakata, K.; Beier, S. The effect of font width on eye movements during reading. Appl. Ergon. 2021, 97, 103523.

- Koulieris, G.A.; Drettakis, G.; Cunningham, D.; Mania, K. High-level saliency prediction for smart game balancing. In Proceedings of the ACM SIGGRAPH 2014 Talks, ACM, New York, NY, USA, 27 July 2014; p. 1.

- Rahal, R.-M.; Fiedler, S. Understanding cognitive and affective mechanisms in social psychology through eye-tracking. J. Exp. Soc. Psychol. 2019, 85, 103842.

- Wang, Q.; Zhu, F.; Dang, R.; Wei, X.; Han, G.; Huang, J.; Hu, B. An eye-tracking investigation of attention mechanism in driving behaviour under emotional issues and cognitive load. Sci. Rep. 2023, 13, 16963.

- Yang, F.; Cai, M.; Mortenson, C.; Fakhari, H.; Lokmanoglu, A.D.; Hullman, J.; Franconeri, S.; Diakopoulos, N.; Nisbet, E.C.; Kay, M. Swaying the Public? Impacts of Election Forecast Visualizations on Emotion, Trust, and Intention in the 2022 U.S. Midterms. IEEE Trans. Vis. Comput. Graph. 2023, 30, 23–33.

- Powell, T.E.; Boomgaarden, H.G.; De Swert, K.; de Vreese, C.H. A Clearer Picture: The Contribution of Visuals and Text to Framing Effects. J. Commun. 2015, 65, 997–1017.

- Tolochko, P.; Song, H.; Boomgaarden, H. ‘That Looks Hard!’: Effects of Objective and Perceived Textual Complexity on Factual and Structural Political Knowledge. Polit. Commun. 2019, 36, 609–628.

- Carpinella, C.M.; Johnson, K.L. Visual Political Communication: The Impact of Facial Cues from Social Constituencies to Personal Pocketbooks. Soc. Pers. Psychol. Compass 2016, 10, 281–297.

- Haunss, S.; Kuhn, J.; Padó, S.; Blessing, A.; Blokker, N.; Dayanik, E.; Lapesa, G. Integrating Manual and Automatic Annotation for the Creation of Discourse Network Data Sets. Politics Gov. 2020, 8, 326–339.

- Elhajjar, S. Unveiling the marketer’s lens: Exploring experiences and perspectives on AI integration in marketing strategies. Asia Pac. J. Mark. Logist. 2024, 37, 498–517.

- Riswanto, A.L.; Ha, S.; Lee, S.; Kwon, M. Online Reviews Meet Visual Attention: A Study on Consumer Patterns in Advertising, Analyzing Customer Satisfaction, Visual Engagement, and Purchase Intention. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 3102–3122.

- Galai, Y. Political Visual Literacy. Int. Political Sociol. 2023, 17, olad010.

- Mensah, K. Political brand architecture: Towards a new conceptualisation of political branding in an emerging democracy. Afr. Journal. Stud. 2016, 37, 61–84.

- Dudinskaya, E.C.; Naspetti, S.; Zanoli, R. Using eye-tracking as an aid to design on-screen choice experiments. J. Choice Model. 2020, 36, 100232.

- Segovia, M.S.; Palma, M.A. Testing the consistency of preferences in discrete choice experiments: An eye-tracking study. Eur. Rev. Agric. Econ. 2020, 48, 624–664.

- Hancock, P.A.; Warm, J.S. A Dynamic Model of Stress and Sustained Attention. Hum. Factors J. Hum. Factors Ergon. Soc. 1989, 31, 519–537.

- Kim, I.; Tang, C.S. Lead time and response time in a pull production control system. Eur. J. Oper. Res. 1997, 101, 474–485.

- Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. Situation Awareness, Mental Workload, and Trust in Automation: Viable, Empirically Supported Cognitive Engineering Constructs. J. Cogn. Eng. Decis. Mak. 2008, 2, 140–160.

- Saaty, T.L. How to make a decision: The analytic hierarchy process. Eur. J. Oper. Res. 1990, 48, 9–26.

- Kompatsiari, K.; Perez-Osorio, J.; De Tommaso, D.; Metta, G.; Wykowska, A. Neuroscientifically-Grounded Research for Improved Human-Robot Interaction. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA; pp. 3403–3408.

- Tessler, M.H.; Bakker, M.A.; Jarrett, D.; Sheahan, H.; Chadwick, M.J.; Koster, R.; Evans, G.; Campbell-Gillingham, L.; Collins, T.; Parkes, D.C.; et al. AI can help humans find common ground in democratic deliberation. Science 2024, 386, 6719.

- Kurinec, C.A.; Wise, A.V.T.; Cavazos, C.A.; Reyes, E.M.; Weaver, C.A. Clarity under cognitive constraint: Can a simple directive encourage busy speakers to avoid ambiguity? Lang. Cogn. 2019, 11, 621–644.

- Parodi, G.; Julio, C. ¿Dónde se posan los ojos al leer textos multisemióticos disciplinares? Procesamiento de palabras y gráficos en un estudio experimental con eye tracker [Where do the eyes land when reading disciplinary multisemiotic texts? Processing of words and graphics in an experimental eye-tracker study]. Rev. Signos 2016, 49, 149–183.

- Muraoka, T. The electoral implications of politically irrelevant cues under demanding electoral systems. Political Sci. Res. Methods 2019, 9, 312–326.

- Benoit, K.; Munger, K.; Spirling, A. Measuring and Explaining Political Sophistication through Textual Complexity. Am. J. Political Sci. 2019, 63, 491–508.

- Huybrechts, L.; Teli, M. The Politics of Co-Design. CoDesign 2020, 16, 1–2.

- Averbeck, J.M.; Miller, C. Expanding Language Expectancy Theory: The Suasory Effects of Lexical Complexity and Syntactic Complexity on Effective Message Design. Commun. Stud. 2013, 65, 72–95.

- Rasmussen, S.H.R.; Ludeke, S. Cognitive ability is a powerful predictor of political tolerance. J. Pers. 2021, 90, 311–323.

- Holland, D.; Krause, A.; Provencher, J.; Seltzer, T. Transparency tested: The influence of message features on public perceptions of organisational transparency. Public Relat. Rev. 2018, 44, 256–264.

- Amsalem, E. How Informative and Persuasive is Simple Elite Communication? Public Opin. Q. 2019, 83, 1–25.

- Jha, S.; Jha, S.K.; Velasquez, A. Neuro-symbolic Generative AI Assistant for System Design. In Proceedings of the 2024 22nd ACM-IEEE International Symposium on Formal Methods and Models for System Design (MEMOCODE), Raleigh, NC, USA, 3–4 October 2024; IEEE: Piscataway, NJ, USA; pp. 75–76.

- Zhang, W.; Seong, D. Using Artificial Intelligence to Strengthen the Interaction between Humans and Computers and Biosensor Cooperation. J. Wirel. Mob. Netw. Ubiquitous Comput. Dependable Appl. 2024, 15, 53–68.

- Yang, W. Beyond algorithms: The human touch machine-generated titles for enhancing click-through rates on social media. PLoS ONE 2024, 19, e0306639.

- Durugbo, C.M. Eye tracking for work-related visual search: A cognitive task analysis. Ergonomics 2021, 64, 225–240.

- Lee, G.; Kim, H. Algorithm fashion designer? Ascribed mind and perceived design expertise of AI versus human. Psychol. Mark. 2024, 42, 255–273.

- Hua, Y.; Ni, J.; Lu, H. An eye-tracking technology and MLP-based colour matching design method. Sci. Rep. 2023, 13, 1294.

- Wright, K.B.; Bafna, S. Structure of Attention and the Logic of Visual Composition. Behav. Sci. 2014, 4, 226–242.

- Anupama, T.; Rosita, S. Neuromarketing Insights Enhanced by Artificial Intelligence. ComFin Res. 2024, 12, 24–28.

- Richardson, J.; Witzleb, N.; Paterson, M. Political Micro-Targeting in an Era of Big Data Analytics. In Big Data, Political Campaigning and the Law; Routledge: London, UK, 2019; pp. 1–14.

- Eisend, M.; Muldrow, A.F.; Rosengren, S. Diversity and inclusion in advertising research. Int. J. Advert. 2022, 42, 52–59.

- Messner, W. Cultural Differences in an Artificial Representation of the Human Emotional Brain System: A Deep Learning Study. J. Int. Mark. 2022, 30, 21–43.

- Kieslich, K.; Keller, B.; Starke, C. Artificial intelligence ethics by design. Evaluating public perception on the importance of ethical design principles of artificial intelligence. Big Data Soc. 2022, 9, 20539517221092956.

| Hypothesis | Description |
|---|---|
| H1a: Attention Capture | Political campaign flyers designed using Predict AI’s CoPilot will achieve higher initial attention scores (start attention) than traditionally designed flyers. |
| H1b: Emotional Engagement | Political campaign flyers designed with Predict AI’s CoPilot will elicit stronger emotional engagement, measured by higher end attention and lower cognitive demand scores. |
| H1c: Message Clarity | Political campaign flyers created using Predict AI’s CoPilot will achieve higher clarity scores than traditionally designed flyers, indicating better comprehension of campaign messages. |
| H1d: Viewer Engagement and Recall | Political campaign flyers designed with Predict AI’s CoPilot will yield higher viewer engagement, recall, and recognition scores than traditionally designed flyers. |

| Design Version | Key Modifications | Design Objectives | Designer |
|---|---|---|---|
| Design 0 (Original Flyer) | No design changes; the flyer was kept as originally published by Al Jazeera. | Baseline for evaluating improvements. | Not applicable (source: Al Jazeera) |
| Design 1 | Enlarged the candidate names and party text in bold, colour-matched the text to the election logo, added a secondary logo in the background behind the candidate figures, slightly enlarged the figures for greater emphasis, lightened the main headline background from dark to light for better readability, and reduced the body text size to focus viewer attention on the primary content. The election logo was kept in its original position to maintain a familiar visual structure. | Increase the visibility of key details, emphasise candidate prominence, and create a cleaner, more visually compelling layout. | Professional Graphic Designer |
| Design 2 | Retained the changes from Design 1, including bold candidate names and text alignment. Further changes adjusted the background contrast, repositioned the US Elections logo for greater prominence, refined the text hierarchy, balanced the prominence of the two figures, and removed the official party icons. | Create balance, strengthen awareness of party association, improve readability, and maintain a familiar visual structure. | Professional Graphic Designer |
| Design 3 | Incorporated CoPilot’s recommendations: replaced static figures with dynamic debate poses, adjusted the background contrast, repositioned key elements for a clearer hierarchy, emphasised key headlines with bold text, improved logo visibility, applied colour-coded emphasis to political affiliations, and used action-oriented visuals with a subtle patriotic background. A prominent election logo was added to the background behind the candidates to draw attention and subconsciously enhance the design. Official party icons (a donkey for the Democrats, an elephant for the Republicans) were added near the candidates’ names to draw subliminal attention to each party and its candidate. The election logo was restored to its original position to maintain a familiar visual structure. | Boost emotional engagement, highlight action dynamics, and improve message clarity through visual storytelling. | Professional Graphic Designer (based on AI CoPilot recommendations) |

| AOI ID | AOI Name | Functional Description |
|---|---|---|
| 1 | Main Headline on Top (Main Headline) | Primary attention grabber; conveys key information |
| 2 | Body Text on Top (Body Text) | Supporting text for event context |
| 3 | Trump Figure (Candidate Image: Trump) | Candidate representation (visual anchor) |
| 4 | Kamala Figure (Candidate Image: Kamala) | Candidate representation (visual anchor) |
| 5 | Kamala Name (Name Tag: Kamala) | Identifies candidate (text label) |
| 6 | Trump Name (Name Tag: Trump) | Identifies candidate (text label) |
| 7 | Democratic Party Name (Party Label: Democratic) | Displays political affiliation (Kamala) |
| 8 | Republican Party Name (Party Label: Republican) | Displays political affiliation (Trump) |
| 9 | US Elections Logo (Election Logo) | Central campaign event branding |
| 10 | Venue Text (Event Venue) | Provides event location |
| 11 | Election and Venue Dates (Event Date) | Displays event timeline and deadlines |
| 12 | Source (Source Reference) | Source reference for credibility |
| 13 | Al Jazeera Logo (Media Logo) | Media attribution for journalistic integrity |

| Metric | F-Statistic | p-Value | Significance |
|---|---|---|---|
| Clarity | 0.20 | 0.8989 | Not Significant |
| Engagement | 0.01 | 0.9985 | Not Significant |
| Total Attention | 0.04 | 0.9884 | Not Significant |
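
The F-statistics above come from a one-way ANOVA comparing the four designs. As a minimal sketch of how such an F-statistic is formed, the code below computes it from scratch as the ratio of between-design to within-design variance. The scores are hypothetical placeholders, not the study’s data, and organising the input as one list of scores per design is an assumption made for illustration; `one_way_anova_f` is a hypothetical helper name.

```python
from statistics import fmean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over a list of samples."""
    k = len(groups)
    all_scores = [x for g in groups for x in g]
    n_total = len(all_scores)
    grand_mean = fmean(all_scores)

    # Between-group sum of squares: how far each design's mean sits from the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own design's mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores for Designs 0-3 (NOT the study's data).
scores_by_design = [
    [4.0, 5.0, 6.0],
    [5.0, 6.0, 7.0],
    [4.0, 5.0, 6.0],
    [5.0, 5.0, 5.0],
]
f_stat, df_b, df_w = one_way_anova_f(scores_by_design)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

The p-value then follows from the upper tail of the F distribution, for example `scipy.stats.f.sf(f_stat, df_b, df_w)`; `scipy.stats.f_oneway` performs both steps at once. An F-statistic below 1, as for all three metrics in the table, means the variation between designs is smaller than the variation within them.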

| Group 1 | Group 2 | Mean Difference | p-Value | Significance |
|---|---|---|---|---|
| Clarity_Design_0 | Clarity_Design_1 | −0.97 | 0.8724 | No |
| Clarity_Design_0 | Clarity_Design_2 | −0.37 | 0.9915 | No |
| Clarity_Design_0 | Clarity_Design_3 | −0.45 | 0.9849 | No |
| Clarity_Design_1 | Clarity_Design_2 | 0.60 | 0.9653 | No |
| Clarity_Design_1 | Clarity_Design_3 | 0.52 | 0.9769 | No |
| Clarity_Design_2 | Clarity_Design_3 | −0.08 | 0.9999 | No |
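
With four designs there are 4 × 3 / 2 = 6 pairwise comparisons per metric. A minimal sketch of the Bonferroni correction follows: each raw p-value is multiplied by the number of comparisons and capped at 1. This is equivalent to testing each pair at α/6 ≈ 0.0083 when α = 0.05. The raw p-values in the example are hypothetical and are not taken from the study; `bonferroni_adjust` is an illustrative helper name.

```python
from itertools import combinations


def bonferroni_adjust(p_values):
    """Return Bonferroni-adjusted p-values: p * m, capped at 1.0 (m = number of tests)."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


designs = ["Design 0", "Design 1", "Design 2", "Design 3"]
pairs = list(combinations(designs, 2))  # 4 designs -> 6 unordered pairs

alpha = 0.05
per_comparison_alpha = alpha / len(pairs)  # equivalent threshold for raw p-values

# Hypothetical raw p-values, one per pair (NOT the study's values).
raw_p = [0.004, 0.02, 0.30, 0.45, 0.60, 0.90]
adjusted_p = bonferroni_adjust(raw_p)

for (g1, g2), p_adj in zip(pairs, adjusted_p):
    verdict = "significant" if p_adj < alpha else "not significant"
    print(f"{g1} vs {g2}: adjusted p = {p_adj:.4f} ({verdict})")
```

Capping at 1 keeps the adjusted values valid probabilities. The cost of the correction is lower power: a raw p of 0.02, which would pass at α = 0.05 on its own, no longer does after adjustment.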

| Group 1 | Group 2 | Mean Difference | p-Value | Significance |
|---|---|---|---|---|
| Engagement_Design_0 | Engagement_Design_1 | 0.16 | 0.9988 | No |
| Engagement_Design_0 | Engagement_Design_2 | 0.05 | 0.9999 | No |
| Engagement_Design_0 | Engagement_Design_3 | −0.01 | 1.0000 | No |
| Engagement_Design_1 | Engagement_Design_2 | −0.10 | 0.9997 | No |
| Engagement_Design_1 | Engagement_Design_3 | −0.17 | 0.9985 | No |
| Engagement_Design_2 | Engagement_Design_3 | −0.06 | 0.9999 | No |

| Group 1 | Group 2 | Mean Difference | p-Value | Significance |
|---|---|---|---|---|
| TotalAttention_Design_0 | TotalAttention_Design_1 | −0.39 | 0.9992 | No |
| TotalAttention_Design_0 | TotalAttention_Design_2 | 0.56 | 0.9977 | No |
| TotalAttention_Design_0 | TotalAttention_Design_3 | 0.45 | 0.9988 | No |
| TotalAttention_Design_1 | TotalAttention_Design_2 | 0.96 | 0.9891 | No |
| TotalAttention_Design_1 | TotalAttention_Design_3 | 0.85 | 0.9923 | No |
| TotalAttention_Design_2 | TotalAttention_Design_3 | −0.10 | 1.0000 | No |
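
Each post-hoc table compares four design means, so its six mean differences are fully determined by the three contrasts against Design 0. For any designs i and j, the difference for (i, j) equals the difference for (0, j) minus the difference for (0, i), whichever subtraction order the tables use. The sketch below checks the reported values against this identity. It allows for each value having been rounded to two decimals, so three rounded values can disagree by up to 0.015. The differences are copied from the three tables; the dictionary and function names are illustrative.

```python
# Mean differences copied from the three post-hoc tables, keyed by (design i, design j).
POSTHOC_DIFFS = {
    "Clarity": {(0, 1): -0.97, (0, 2): -0.37, (0, 3): -0.45,
                (1, 2): 0.60, (1, 3): 0.52, (2, 3): -0.08},
    "Engagement": {(0, 1): 0.16, (0, 2): 0.05, (0, 3): -0.01,
                   (1, 2): -0.10, (1, 3): -0.17, (2, 3): -0.06},
    "Total Attention": {(0, 1): -0.39, (0, 2): 0.56, (0, 3): 0.45,
                        (1, 2): 0.96, (1, 3): 0.85, (2, 3): -0.10},
}

ROUNDING_TOLERANCE = 3 * 0.005  # three values, each rounded to two decimals


def max_deviation(diffs, n_designs=4):
    """Largest gap between a reported difference and the one implied by the Design 0 contrasts."""
    worst = 0.0
    for i in range(1, n_designs):
        for j in range(i + 1, n_designs):
            implied = diffs[(0, j)] - diffs[(0, i)]
            worst = max(worst, abs(implied - diffs[(i, j)]))
    return worst


for metric, diffs in POSTHOC_DIFFS.items():
    dev = max_deviation(diffs)
    status = "consistent" if dev <= ROUNDING_TOLERANCE else "inconsistent"
    print(f"{metric}: max deviation {dev:.3f} ({status})")
```

For all three metrics the reported differences agree with the identity to within rounding, so the tables are internally consistent. Only the three contrasts against the baseline (Design 0) carry independent information about the design means.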

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Šola, H.M.; Qureshi, F.H.; Khawaja, S. Human-Centred Design Meets AI-Driven Algorithms: Comparative Analysis of Political Campaign Branding in the Harris–Trump Presidential Campaigns. Informatics 2025, 12, 30. https://doi.org/10.3390/informatics12010030

