1. Introduction

It is well known that the thermal demand of buildings of all kinds (residential, commercial and industrial) has been growing steadily, and that the largest share of the electricity consumed by these buildings goes to the systems installed to meet this demand.

For years, alternative technologies have been developed and implemented to meet the growing thermal demand in sectors such as air conditioning and commercial and industrial refrigeration. These technologies relieve the load on the already saturated electrical grid and curb its growth, thereby reducing the use of fossil fuels and its associated consequences, such as greenhouse gas emissions and global warming.

To meet this need, recent research has focused on developing different types of equipment, systems and solutions, such as photovoltaic panels, flat-plate and concentrating solar collectors, and absorption and adsorption systems, among many others.

However, implementing these systems and solutions is no longer enough. Control and regulation systems are becoming essential for designing strategies that optimize the factors involved in the operation of the newly proposed systems and solutions, as well as of the spaces they serve. These control and regulation systems must be oriented toward maximizing the use of the green energy obtained and reducing electrical consumption and auxiliary energy requirements.

Numerous and very different control methods and models have traditionally been used to regulate HVAC and refrigeration systems and equipment [1,2,3,4]. These control models can be classified into three large groups: traditional rule-based models; model-based approaches; and data-driven demand response-based control models.

Traditional rule-based models have been extensively implemented in various types of mechanical and electrical installations [5,6,7,8,9,10,11,12,13]. The main advantages of this type of control model are its simple and intuitive structure, easy implementation, low initial cost, quick response and feedback control [4,14,15]. However, its main disadvantages are its limited modulation capability, poor scalability, constant need for operator intervention, lack of learning capability, need for more complex rules with higher maintenance and update costs in complex systems, and non-optimal performance [4,14,15].

The model-based approach has been widely investigated in recent years [16,17,18,19,20,21,22,23]. According to [4,24], this control approach is considered valuable for controlling real systems because of its high accuracy, anticipatory behavior, ability to handle hard constraints, robustness to disturbances, adaptation to changing operating conditions and flexibility in using available explicit models. On the other hand, its high initial and installation costs, as well as the complexity of identifying an appropriate system model, are its main drawbacks.

Despite these advantages, the commercial and industrial application of the model-based approach has been limited, since its correct, stable and accurate performance depends largely on very precise assumptions and customized models with a high level of detail [25]. These conditions are impractical and very difficult to achieve given the complex dynamics of thermal systems and of the factors involved in their operation, so this type of control system is commonly observed to work with poor convergence and unstable, deficient performance.

These drawbacks can be overcome, and optimal control policies generated, by implementing data-driven demand response-based control models, owing to their many favorable characteristics. These include the capability to find an optimal action policy without requiring a model of the system, the flexibility of the algorithm to learn from interaction with the environment, lower computational costs, high accuracy and, above all, the ability to adapt to real-time measurements and to perform correctly in dynamic and uncertain environments.

A data-driven demand response-based control model has its own limitations, such as the need for a large amount of data, which makes it difficult to train the agent directly on the real system or environment. In such cases, a model of the system and a large number of simulations are required to train the agent.

Nowadays, the implementation of the data-driven demand response-based control model is favored due to:

The availability and accessibility of the building control and automation data provided by sensors and control, measurement and automation devices;

The simplicity of collecting, analyzing and managing building control and automation data, thanks to big data techniques and the powerful computing equipment currently available on the market.

These virtues of the data-driven demand response-based control model, together with the favorable conditions for its use, have recently aroused strong interest in investigating and implementing this control approach through reinforcement learning in various types of applications.

The main fields in which this type of control approach has been investigated and implemented are those subdisciplines involved in the treatment and operation of buildings and facilities of all kinds, such as heating, ventilation and air conditioning, refrigeration, electricity, lighting, energy storage, batteries, domestic hot water, communications, sustainability and building energy management.

Building energy management is one of the areas in which the implementation of reinforcement learning has been most studied to date [26,27,28,29,30,31]. The optimization of the performance and operation of solar domestic hot water and heating systems using control models based on reinforcement learning has also been studied [32,33,34,35,36].

Likewise, various reinforcement learning techniques and algorithms have been implemented in HVAC systems and solutions in various types of buildings, all with the aim of finding the optimal control policy that reduces energy consumption and/or improves comfort in the treated space [25,37,38,39,40,41,42,43].

Other energy-related fields in which the potential of reinforcement learning for process optimization has been studied include power and energy systems [44,45,46,47,48,49], urban energy management [50], energy storage [51] and electric distribution systems [14].

Despite recent studies on control models based on machine learning, much remains to be explored, especially for HVAC systems and solutions. At present, despite the well-known virtues of machine learning for controlling thermal systems, no detailed study has been conducted on the implementation of reinforcement learning to optimize the design and operation of solar thermal cooling systems.

In light of the above, the present research work analyzes the potential of control systems based on reinforcement learning to optimize the operation of solar thermal cooling systems driven by linear Fresnel collectors. The main contributions and innovative aspects of this study are the following:

Implementation of a control system based on reinforcement learning in the regulation of a solar thermal cooling system;

The modular integration of a simulation tool developed in EES with a reinforcement learning algorithm module developed in Python, simplifying and facilitating the agent training;

Proposal of a conceptual scheme for the real implementation of the control of solar thermal cooling systems using reinforcement learning;

Verification of the potential of using a control model based on reinforcement learning in the optimization of the operation of an absorption solar cooling system.

The simulations confirmed the advantages and potential of control models based on reinforcement learning (RL) for the control and regulation of solar thermal cooling systems. For the studied period, the solar thermal cooling system operating with an RL-based control system showed a 35% reduction in auxiliary energy consumption, a 17% reduction in the electrical consumption of the pump that feeds the absorption machine and more precise control of cooling generation, compared with the installation operating under a predictive control approach.

2. Materials and Methods

2.1. General Description of the Study

The study consisted of evaluating and verifying the potential of a control model based on reinforcement learning in optimizing the operation of solar thermal cooling systems driven by linear Fresnel collectors (STCS_LFC). This was conducted through a practical case study in which the performance of a solar cooling installation operated by a control system based on RL and by a predictive control system was simulated and compared.

The objective of the study was limited to verifying the effect of reinforcement learning on improving system performance and not the maximum optimization that could be achieved with this technique. Therefore, only a few parameters of the many involved in its operation and on which its optimization depends were considered.

The optimization of the operation of the proposed STCS_LFC was evaluated by taking the auxiliary energy requirements, the electrical consumption of sub-circuit C and the satisfaction of the cooling demand as dependent variables, and the conditions of the flow leaving the storage tank (mass/volumetric flow rate and temperature) as independent variables. That is, the Q-learning control approach aimed to find the control policy that maximizes the use of solar energy to satisfy the cooling demand and thereby reduces auxiliary energy consumption and the electrical consumption of the pump in the sub-circuit that feeds the absorption machine.

The simulations of the STCS_LFC operating with a predictive control approach were carried out with the simulation tool [52]. To simulate the STCS_LFC controlled through the reinforcement learning approach, a programming module based on the Q-learning algorithm was implemented and run in operation mode with a previously trained agent.

2.2. Description of the Case Study

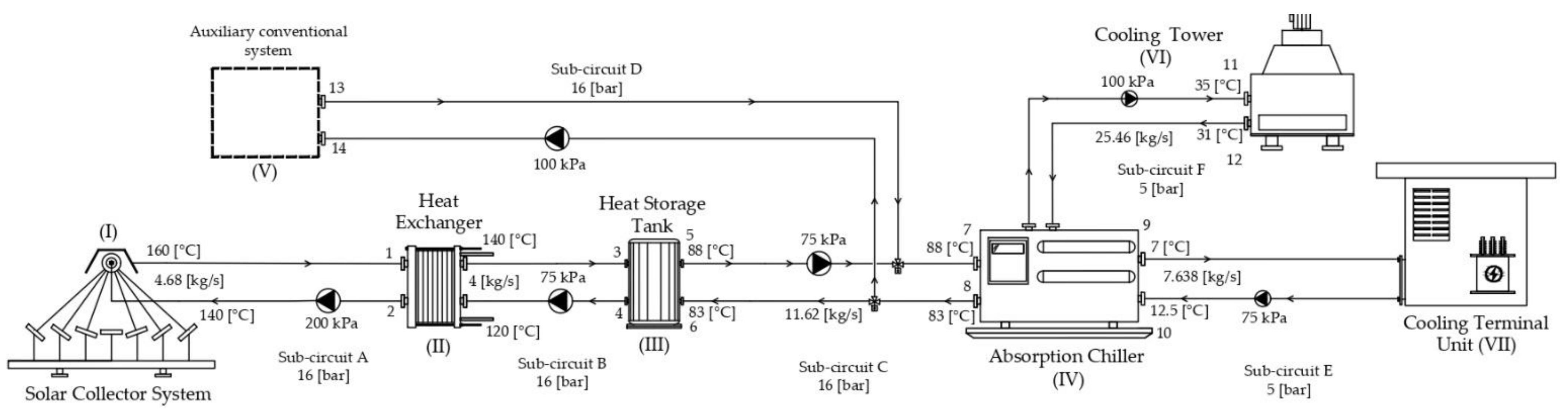

The case study analyzed the hourly simulation of a solar cooling installation with the configuration shown in Figure 1 for a typical summer day (1 July) in the city of Riyadh. This installation was sized to satisfy the cooling demand of a typical set of electro-mechanical buildings used for the maintenance of medium-capacity railway installations in Riyadh.

The buildings are conditioned by an air-handling unit (AHU) supplied with chilled water generated by the absorption machine. The AHU has a recirculation module and supplies the minimum required outdoor air.

2.2.1. Building Characteristics

The properties of the buildings’ construction materials (enclosures and glazing), as well as the design and use characteristics of the air-conditioning and lighting systems, were considered in compliance with the indications of [53] and in accordance with the climatic zone of the place where the study was carried out.

The minimum outdoor air requirements were based on [54], according to the type of space or building to be treated. The internal thermal loads were calculated by applying a ratio of 230 W/m², derived from previously estimated mechanical and electrical losses for this type of building. The detailed analysis performed to obtain this ratio is outside the scope of this work.

2.2.2. Refrigeration System

The flow diagram of the solar thermal cooling system proposed in this study is shown in Figure 1 [52]. This configuration operates under an indirect coupling control mode [55], similar to that of commissioned installations such as those in [56,57,58], among others.

The main elements of this system are: a field of concentrating linear Fresnel collectors (I), a stratified hot water storage tank (III), a single-effect absorption chiller using the pair LiBr–H2O (IV) and a cooling tower (VI). The complementary elements of the proposed system are: a heat exchanger (II), a conventional auxiliary thermal energy supply subsystem (V) and various water distribution elements consisting of pipes, pumping groups and control valves that interconnect the aforementioned subsystems.

The main aspects considered for the operation of the proposed solar cooling system are listed below:

The linear Fresnel collectors are arranged horizontally and with a north–south orientation;

The heat transfer fluid in each of the sub-circuits is pressurized water;

The storage tank has stratification and is arranged in a vertical position.

The schematic diagram in Figure 1 presents the design conditions of the proposed solar thermal cooling system for the case study, and Table 1, Table 2, Table 3 and Table 4 show the technical and sizing characteristics of its components.

The pressure losses that the pumps must overcome were previously estimated through a general analysis of each sub-circuit, considering the pipes, hydraulic components and accessories present, as shown in Figure 1.

The operating parameters of the cooling tower (range, ratio between the water mass flow rate (L) and the air mass flow rate (G), and approach) were defined following the recommendations of [59] for air-conditioning applications and are shown in Table 2. The tower characteristic (KaV/L) was calculated using the mathematical model of the cooling tower, considering the values of the operating parameters and the ambient design conditions for cooling in the hottest month of the year.
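The tower characteristic KaV/L is commonly obtained from the Merkel equation, whose integral is often evaluated with the Chebyshev four-point rule. The sketch below illustrates that procedure; it is not the cooling tower model of [52]. The Magnus-type vapour-pressure fit, the approximation of the inlet air enthalpy by the saturated enthalpy at the wet-bulb temperature and the operating point in the example are assumptions, not the design values of Table 2.

```python
import math

CP_WATER = 4.186  # kJ/(kg K), specific heat of liquid water (assumed constant)


def saturated_air_enthalpy(t_c: float, p_pa: float = 101325.0) -> float:
    """Enthalpy of saturated moist air at t_c [degC], in kJ/kg dry air."""
    # Magnus-type fit for the saturation vapour pressure over water [Pa]
    p_ws = 610.94 * math.exp(17.625 * t_c / (t_c + 243.04))
    w_s = 0.621945 * p_ws / (p_pa - p_ws)  # saturation humidity ratio [kg/kg]
    return 1.006 * t_c + w_s * (2501.0 + 1.86 * t_c)


def merkel_kav_l(t_water_in: float, t_water_out: float, t_wet_bulb: float,
                 l_over_g: float, p_pa: float = 101325.0) -> float:
    """Tower characteristic KaV/L from the Merkel equation (Chebyshev 4-point rule)."""
    cooling_range = t_water_in - t_water_out
    # Assumption: inlet air enthalpy ~ saturated enthalpy at the wet-bulb temperature
    h_air_in = saturated_air_enthalpy(t_wet_bulb, p_pa)
    total = 0.0
    for fraction in (0.1, 0.4, 0.6, 0.9):
        t = t_water_out + fraction * cooling_range
        # Counterflow energy balance: air enthalpy rises with the local water temperature
        h_air = h_air_in + l_over_g * CP_WATER * (t - t_water_out)
        driving_force = saturated_air_enthalpy(t, p_pa) - h_air
        if driving_force <= 0.0:
            raise ValueError("Infeasible operating point: non-positive enthalpy driving force")
        total += 1.0 / driving_force
    return CP_WATER * cooling_range / 4.0 * total


# Hypothetical operating point (not the values of Table 2): 5 K range, 5 K approach
kav_l = merkel_kav_l(t_water_in=34.0, t_water_out=29.0, t_wet_bulb=24.0, l_over_g=1.2)
print(f"KaV/L = {kav_l:.2f}")
```

Increasing L/G raises the air enthalpy along the tower, which narrows the enthalpy driving force and therefore increases the KaV/L required for the same range and approach.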

2.2.3. Climatic Conditions

The studied building was assumed to be located in Riyadh, Saudi Arabia. The geographic information and the annual cooling design conditions were defined according to the ASHRAE climatic conditions database [60] for the year 2017. In addition, the annual hourly profiles of direct normal irradiation, dry bulb temperature, relative humidity, atmospheric pressure and wind speed were obtained from the databases of the Design Builder software [61] and the System Advisor Model (SAM) software [62].

2.2.4. Study Period

The study was conducted for a typical summer day in the city of Riyadh, Saudi Arabia. The selected day was 1 July. The climatic conditions for the studied day were defined according to Section 2.2.3.

2.2.5. Software

The Design Builder software [61] was used to model the studied buildings and to estimate the hourly cooling demand.

The solar cooling systems driven by linear Fresnel collectors were simulated and analyzed using the realistic simulation tool developed in [52]. Detailed information about this simulation tool is provided in Section 2.4.

2.3. Work Sequence of the Proposed Study

The work sequence followed to study the potential of reinforcement learning in optimizing the operation of solar thermal cooling systems driven by linear Fresnel collectors is presented in Figure 2, where DNI is the direct normal irradiance (W/m²) and STCS_LFC-QL is the simulation module developed in EES that integrates the STCS_LFC tool [52] and the Q-learning module developed in Python.

2.4. Simulation Tool for Analysis of Solar Thermal Cooling Systems Driven by Linear Fresnel Collectors

The simulation tool [52] was used to simulate the solar thermal cooling system driven by linear Fresnel collectors studied in the practical case of this research work. The tool was used both on its own, with a predictive control approach, and in conjunction with the Q-learning module described in Section 2.5, first in agent training mode and later in operation mode.

The STCS_LFC simulation tool [52] was developed using the EES software [63]. It consists of a main program that integrates and interconnects: (1) subroutines containing the governing equations for the components of a solar thermal cooling installation (submodules), (2) input data and (3) a set of control statements. The mathematical model is solved through a parametric table that interacts with the main program window by means of specific programming statements and of variables that serve as a bridge among them. These bridge variables allow information to be exchanged among the parametric table, the control procedure and the base/main mathematical model in the main window.

The integrated mathematical model of the simulation tool [52] considers the ambient conditions, the thermal loads of the building, the sizing data of each system component and the simultaneous interaction among them to conduct a realistic, simple and precise analysis. The structure diagram of the developed mathematical model is presented in Figure 3.

According to [52], the correct implementation and accuracy of the mathematical model underlying the simulation tool were verified through code and calculation verification, which compared the numerical solutions of the mathematical model with the known correct answers of a proposed case study or with experimental results.

The mean deviations between the experimental data and the numerical solutions calculated by the model for the main components of the solar cooling installation are indicated below:

Mean convergence error calculated for the linear Fresnel collector, <3.87%;

Mean convergence error calculated for the storage tank, <1.10%;

Mean convergence error calculated for the absorption chiller, <0.48%;

Mean convergence error calculated for the cooling tower model, <1%.
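The exact definition of these mean deviations is not stated here. One common choice, assumed in the sketch below, is the mean absolute relative deviation between simulated and measured values, expressed in percent; the temperature series in the example are hypothetical and are not data from [52].

```python
from typing import Sequence


def mean_relative_deviation(measured: Sequence[float], simulated: Sequence[float]) -> float:
    """Mean absolute relative deviation of simulated vs. measured values, in percent."""
    if len(measured) != len(simulated) or not measured:
        raise ValueError("Series must be non-empty and of equal length")
    if any(m == 0 for m in measured):
        raise ValueError("Measured values must be non-zero for a relative deviation")
    deviations = [abs(s - m) / abs(m) for m, s in zip(measured, simulated)]
    return 100.0 * sum(deviations) / len(deviations)


# Hypothetical outlet temperatures of one component [degC] (illustrative data only)
measured = [80.0, 85.0, 90.0, 95.0]
simulated = [81.0, 84.0, 91.5, 95.0]
print(f"{mean_relative_deviation(measured, simulated):.2f} %")
```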

These results support the accuracy of the simulation tool and justify its use in this research work.

2.5. Interaction between the Solar Thermal Cooling System Simulation Tool and the Q-Learning Module

Figure 4 and Figure 5 show the flowcharts that illustrate the interaction between the Q-learning module developed in Python and the solar thermal cooling system simulation module developed in EES for the training and implementation phases, respectively.

Combining these two pieces of software aimed to facilitate and optimize the implementation of the Q-learning technique, since each module was developed in the programming language best suited to its task. In this way, each module was assigned a specific and complementary role within the installation control process, avoiding the programming problems that arise when a language is used for tasks it is not well suited to, or when using it brings more problems than benefits.

For example, EES does not offer a simple and efficient way to program a sequence that updates an array, whereas this is simple, practical and safe to do in Python. Conversely, Python does not provide the tools and functions needed to model a complex thermodynamic system correctly, simply and, in general, without convergence problems, which is possible with EES. The interaction between EES and Python in the implementation of Q-learning is one of the most innovative aspects of this research work.

Furthermore, since no real measurements of the performance of the proposed solar thermal cooling system were available, the tool [52] was used to realistically simulate the thermal behavior of the installation, and the results were then used for the agent's learning during the training phase. As shown in Figure 4, during the training phase the Q-learning module selected actions on the STCS_LFC according to the training criteria (exploration or reward optimization); these actions were applied directly to the installation through the simulation tool [52], which returned specific feedback for each action. This procedure (realistic live training) is advantageous in the following cases:

Cases like this one, in which there is no real installation in operation from which to obtain measurements and data on its behavior;

Case studies with only an unreliable and incomplete set of data and measurements on the real behavior of an installation, in which interpolation is usually needed to cover ranges for which no information is available;

Cases in which the initial information is obtained by simulating the system under general, unrealistic conditions because of the limitations of the software used, so that the resulting feedback has serious information gaps and interpolation and other data-handling techniques are again required.

The following variables are used in the flowcharts of the training and operation phases:

St_i: state in time step i of the study period;

At_i: action for the state in time step i of the study period;

Rt_i: reward for the state in time step i of the study period;

St_i-1: state preceding time step i in the study period;

At_i-1: action for the state preceding time step i in the study period;

Rt_i-1: reward for the state preceding time step i in the study period.

The policy was optimized through the Bellman equation, also known as the Q equation, which is used to update the Q-values in the Q-table during each episode, as illustrated in Figure 4. The Bellman equation is given in Equation (1):

$$Q^{new}(S_t, A_t) = Q(S_t, A_t) + \alpha \left[ R_t + \gamma \max_{A_t'} Q(S_t', A_t') - Q(S_t, A_t) \right] \qquad (1)$$

where:

Q(St, At): Q-value of taking action At in state St;

Q^new(St, At): updated Q-value of taking action At in state St;

max Q(St′, At′): Q-value of the resulting next state St′ when taking the optimal action At′;

α: learning rate (0 < α ≤ 1);

γ: discount factor (0 < γ ≤ 1);

Rt: reward for the current time step.
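Equation (1) translates directly into an in-place update of a dictionary-based Q-table. The sketch below is an illustration, not the code of Appendix A; it assumes that unseen state-action pairs start at 0.0 and uses toy state and action labels.

```python
from typing import Dict, Hashable, Sequence, Tuple

QTable = Dict[Tuple[Hashable, Hashable], float]


def q_update(q: QTable, state: Hashable, action: Hashable, reward: float,
             next_state: Hashable, actions: Sequence[Hashable],
             alpha: float, gamma: float) -> float:
    """Apply Equation (1) to Q(state, action) in place and return the new value."""
    old = q.get((state, action), 0.0)  # unseen pairs start at 0.0 (assumption)
    best_next = max(q.get((next_state, a), 0.0) for a in actions)  # max over At'
    new = old + alpha * (reward + gamma * best_next - old)
    q[(state, action)] = new
    return new


q = {("s1", "a1"): 2.0, ("s2", "a1"): 1.0, ("s2", "a2"): 3.0}
updated = q_update(q, "s1", "a1", reward=1.0, next_state="s2",
                   actions=["a1", "a2"], alpha=0.5, gamma=0.9)
print(updated)
```

Here the target is 1.0 + 0.9 × 3.0 = 3.7, so with α = 0.5 the Q-value moves halfway from 2.0 towards 3.7, giving 2.85.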

During the training phase, the agent faces the dilemma of exploring new states while simultaneously maximizing the global reward, known as the exploration vs. exploitation trade-off. To balance exploration and exploitation, hyperparameter values (α, γ) that allow a deep exploration of states and actions are deliberately chosen first; subsequently, values more focused on the learning process and on obtaining long-term rewards are chosen. In the end, the agent has enough information to make the best decisions.
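The two-phase choice of hyperparameters described above can be expressed as a simple schedule. In the sketch below, the (α, γ) pairs are hypothetical, and the default switch at episode 655 is taken from the point at which Section 3.1 reports the onset of convergence. The ε-greedy selection function is an additional assumption: it is a widely used way of controlling exploration explicitly, but it is not described in this study.

```python
import random
from typing import Dict, Hashable, Sequence, Tuple

QTable = Dict[Tuple[Hashable, Hashable], float]

# Hypothetical values: the alpha/gamma actually used in the study are not reported here
EXPLORATION_PHASE = (0.9, 0.5)  # (alpha, gamma) for the exploration-oriented phase
LEARNING_PHASE = (0.1, 0.95)    # (alpha, gamma) for the long-term-reward phase


def hyperparameters(episode: int, switch_episode: int = 655) -> Tuple[float, float]:
    """Two-phase (alpha, gamma) schedule; the switch episode is an assumption."""
    return EXPLORATION_PHASE if episode < switch_episode else LEARNING_PHASE


def select_action(q: QTable, state: Hashable, actions: Sequence[Hashable],
                  epsilon: float, rng: random.Random) -> Hashable:
    """Epsilon-greedy choice: explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        return rng.choice(list(actions))
    return max(actions, key=lambda a: q.get((state, a), 0.0))


q = {("s", "low"): 0.4, ("s", "high"): 1.1}
print(hyperparameters(100), hyperparameters(1000))
print(select_action(q, "s", ["low", "high"], epsilon=0.0, rng=random.Random(1)))  # high
```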

The best trade-off between training time (number of episodes) and the optimal reward is reached when the calculated reward values show a pattern converging to the maximum obtainable reward.
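This criterion is qualitative. One simple way to make it operational, assumed here rather than taken from the study, is to report the first episode after which every total reward stays within a tolerance band around the mean of the final episodes. The window size, tolerance and reward curve in the example are hypothetical.

```python
from typing import Optional, Sequence


def convergence_episode(rewards: Sequence[float], window: int = 50,
                        tolerance: float = 0.25) -> Optional[int]:
    """Return the 0-based episode from which every total reward stays within
    `tolerance` of the mean of the last `window` episodes, or None."""
    if len(rewards) < window:
        return None
    target = sum(rewards[-window:]) / window
    start = len(rewards)
    # Walk backwards until the first reward outside the tolerance band
    for i in range(len(rewards) - 1, -1, -1):
        if abs(rewards[i] - target) > tolerance:
            break
        start = i
    # Require at least one full window inside the band
    return start if len(rewards) - start >= window else None


# Synthetic curve: oscillating exploration, a linear rise, then a plateau near 32
rewards = ([30.0 + 0.7 * (-1) ** k for k in range(100)]
           + [30.0 + 2.0 * k / 50 for k in range(50)]
           + [32.0 + 0.1 * (-1) ** k for k in range(100)])
print(convergence_episode(rewards))  # 144
```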

2.6. Q-Learning Module for the STCS_LFC Operation Control

The Q-learning module for controlling the operation of the solar thermal cooling system in the proposed case study was developed in Python, version 3.10.

Table 5 details the Python modules used and the functions they implement in the code of the developed algorithm.

2.6.1. Parameters of the Q-Learning Algorithm Applied to the Practical Case Study

In this practical case study, each state was represented by a vector of length 6 composed of the following variables: time, DNI, ambient temperature, relative humidity, average temperature of the hot water storage tank and cooling demand of the building. A total of 732 possible states that could occur in this case study were identified; they are shown in Table 6.
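How the continuous readings were mapped to the 732 states of Table 6 is not detailed here. A common approach, assumed in the sketch below, is to bin each variable so that the six-component vector becomes a hashable key for the Q-table; the bin widths and readings are hypothetical.

```python
from typing import NamedTuple


class State(NamedTuple):
    """Discretized six-component state (bin indices); usable as a dict key."""
    hour: int
    dni_bin: int
    t_amb_bin: int
    rh_bin: int
    t_tank_bin: int
    demand_bin: int


# Hypothetical bin widths; the study's actual state grid is given in Table 6
BIN_WIDTHS = {"dni": 100.0, "t_amb": 2.0, "rh": 10.0, "t_tank": 5.0, "demand": 25.0}


def bin_index(value: float, width: float) -> int:
    """Map a continuous reading to the index of the bin that contains it."""
    return int(value // width)


def encode_state(hour: int, dni: float, t_amb: float, rh: float,
                 t_tank: float, demand: float) -> State:
    """Build a hashable state from one time step of readings.

    Units: dni in W/m2, t_amb and t_tank in degC, rh in %, demand in kW.
    """
    return State(
        hour=hour,
        dni_bin=bin_index(dni, BIN_WIDTHS["dni"]),
        t_amb_bin=bin_index(t_amb, BIN_WIDTHS["t_amb"]),
        rh_bin=bin_index(rh, BIN_WIDTHS["rh"]),
        t_tank_bin=bin_index(t_tank, BIN_WIDTHS["t_tank"]),
        demand_bin=bin_index(demand, BIN_WIDTHS["demand"]),
    )


s = encode_state(hour=12, dni=905.0, t_amb=43.2, rh=11.0, t_tank=88.4, demand=210.0)
print(s)
```

Readings that fall in the same bins map to the same key, so the Q-table size is bounded by the number of bin combinations rather than by the number of distinct measurements.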

The set of actions defined for training the agent is shown in Table 7; the actions were represented by the variation of the volumetric flow rate of the hot water entering the generator of the absorption machine. This flow rate was varied in steps between 0.000125 m³/s and 0.0025 m³/s.

The actions were defined according to the operating range of the absorption machine dimensioned to satisfy the cooling demand of the analyzed building considering the indications of the manufacturer’s datasheet.
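One possible reading of the action definition, assumed in the sketch below, is a set of hot-water flow rates from 0.000125 m³/s to 0.0025 m³/s in increments of 0.000125 m³/s, which gives 20 discrete actions. The actual set is the one listed in Table 7.

```python
# Assumed reading of the text: flow rates from 0.000125 to 0.0025 m3/s
# in increments of 0.000125 m3/s (the actual action set is listed in Table 7).
FLOW_STEP = 0.000125  # m3/s
N_ACTIONS = 20        # 20 * 0.000125 m3/s = 0.0025 m3/s

# Integer multiples avoid accumulating floating-point error from repeated addition
ACTIONS = [round(k * FLOW_STEP, 6) for k in range(1, N_ACTIONS + 1)]

# Equivalent flow rates in m3/h, which are often easier to compare with datasheets
ACTIONS_M3_PER_H = [round(v * 3600.0, 3) for v in ACTIONS]
print(len(ACTIONS), ACTIONS[0], ACTIONS[-1], ACTIONS_M3_PER_H[-1])
```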

The reward was the opposite of the sum of the penalties assigned to auxiliary energy consumption, to the electrical consumption of the pump that supplies hot water to the absorption machine and to the precision in generating the required cooling energy, at each time step, for a given state and a specific selected action.

The total reward of an episode was calculated using Equation (2), from the rewards for auxiliary energy consumption (RA), electrical consumption of the pump that supplies hot water to the absorption machine (RB) and precision in generating the required cooling energy (RC) at each time step. These values were estimated independently using Equations (3)–(5), respectively.

Equations (3)–(5) were obtained through an iterative process and defined in order to guarantee a significant gradient that maximizes the target value to be achieved (RA, RB and RC) considering the maximum and minimum values that the proposed installation can reach in terms of auxiliary energy consumption, electrical consumption and cooling capacity.

The weight of each type of reward (RA, RB and RC) in Equation (2) was selected and customized by the authors, prioritizing low auxiliary energy consumption over low electrical consumption and precision in generating the required cooling energy.

In Equations (6)–(8), the following variables are considered:

percentage of the total heat transfer rate required at the generator that is supplied by the auxiliary system in time step i of the study day, [%];

heat transfer rate supplied by the auxiliary system in time step i of the study day, [kW];

heat transfer rate required in the generator of the absorption machine in time step i of the study day, [kW];

percentage of the consumption of the sub-circuit C pump relative to its maximum consumption in time step i of the study day, [%];

electric consumption of the sub-circuit C pump in time step i of the study day, [kW];

design maximum electric consumption of the sub-circuit C pump, [kW];

percentage of the cooling generated by the absorption machine relative to the cooling demand in time step i of the study day, [%];

cooling generated by the absorption machine in time step i of the study day, [kW];

cooling demand in time step i of the study day, [kW].
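The percentage indicators described for Equations (6)–(8), and the way they could feed a weighted, penalty-based reward, are sketched below. The ratios follow the variable definitions above; the penalty forms and the weights (auxiliary energy weighted highest, as the text states) are hypothetical placeholders, since the exact forms of Equations (2)–(5) are not given here, so the absolute reward values will not match the totals reported in Section 3.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class StepResult:
    """Simulated quantities for one time step i (all in kW)."""
    q_aux: float       # heat transfer rate supplied by the auxiliary system
    q_gen: float       # heat transfer rate required at the generator
    w_pump: float      # electric consumption of the sub-circuit C pump
    w_pump_max: float  # design maximum consumption of that pump
    q_cool: float      # cooling generated by the absorption machine
    q_demand: float    # cooling demand


def indicators(r: StepResult) -> Tuple[float, float, float]:
    """Percentages defined for Equations (6)-(8), in %."""
    aux_pct = 100.0 * r.q_aux / r.q_gen
    pump_pct = 100.0 * r.w_pump / r.w_pump_max
    cool_pct = 100.0 * r.q_cool / r.q_demand
    return aux_pct, pump_pct, cool_pct


# Hypothetical weights; auxiliary energy is weighted highest, as stated in the text
W_A, W_B, W_C = 0.5, 0.25, 0.25


def step_reward(r: StepResult) -> float:
    """Placeholder reward: negative weighted sum of penalties (not Equations (2)-(5))."""
    aux_pct, pump_pct, cool_pct = indicators(r)
    penalty_a = aux_pct / 100.0                # share of generator heat from auxiliary
    penalty_b = pump_pct / 100.0               # pump load relative to design maximum
    penalty_c = abs(cool_pct - 100.0) / 100.0  # mismatch between supply and demand
    return -(W_A * penalty_a + W_B * penalty_b + W_C * penalty_c)


step = StepResult(q_aux=20.0, q_gen=100.0, w_pump=0.6, w_pump_max=1.0,
                  q_cool=95.0, q_demand=100.0)
print(indicators(step), step_reward(step))
```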

2.6.2. QL Module Algorithm

The Python code of the Q-learning module developed for the case study of this research work and its detailed flowchart are presented in Appendix A and Figure 6, respectively. In this algorithm, the Q-value update was performed with a delay.

In Figure 6, the following variables are considered:

St_o: initial state of the study period;

At_o: action in the initial state of the study period;

Rt_o: reward for the applied action in the initial state of the study period;

St_o+1: state following the initial state;

At_o+1: action for the state following the initial state;

Rt_o+1: reward for the state following the initial state;

St_i: state in the time step i of the study period;

At_i: action in the time step i of the study period;

Rt_i: reward for state in the time step i of the study period.
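The delayed Q-value update, in which the pair (St_i-1, At_i-1) is updated only once the next state St_i is known, can be sketched as a training loop. This is a minimal sketch, not the code in Appendix A: `simulate_step` is a hypothetical toy stand-in for one call to the EES simulation tool, the state is reduced to the hour of the day, and the ε-greedy exploration and the fixed α and γ are assumptions.

```python
import random
from typing import Dict, List, Optional, Tuple

QTable = Dict[Tuple[int, int], float]


def simulate_step(hour: int, action: int) -> Tuple[int, float]:
    """Hypothetical stand-in for one EES simulation time step.

    Returns (next_state, reward). The state is only the hour, and the reward
    favours action 1 between 11 and 15 h; this is NOT the STCS_LFC model.
    """
    best_action = 1 if 11 <= hour <= 15 else 0
    return hour + 1, (1.0 if action == best_action else 0.0)


def run_episode(q: QTable, actions: List[int], hours: range, alpha: float,
                gamma: float, epsilon: float, rng: random.Random) -> float:
    """Run one episode, updating Q(St_i-1, At_i-1) only once St_i is known."""
    total = 0.0
    pending: Optional[Tuple[int, int, float]] = None  # (St_i-1, At_i-1, Rt_i-1)
    state = hours.start
    for _ in hours:
        # Epsilon-greedy selection (assumed exploration scheme)
        if rng.random() < epsilon:
            action = rng.choice(actions)
        else:
            action = max(actions, key=lambda a: q.get((state, a), 0.0))
        if pending is not None:
            # Delayed update of the previous pair using the now-known state St_i
            s_prev, a_prev, r_prev = pending
            best_next = max(q.get((state, a), 0.0) for a in actions)
            old = q.get((s_prev, a_prev), 0.0)
            q[(s_prev, a_prev)] = old + alpha * (r_prev + gamma * best_next - old)
        state_next, reward = simulate_step(state, action)
        pending = (state, action, reward)
        total += reward
        state = state_next
    if pending is not None:
        # Final step of the day: no successor state, so no discounted term
        s_prev, a_prev, r_prev = pending
        old = q.get((s_prev, a_prev), 0.0)
        q[(s_prev, a_prev)] = old + alpha * (r_prev - old)
    return total


rng = random.Random(0)
q_table: QTable = {}
day = range(7, 19)  # hypothetical hourly steps from 7 a.m. to 6 p.m. (12 steps)
for _ in range(300):
    run_episode(q_table, [0, 1], day, alpha=0.5, gamma=0.9, epsilon=0.5, rng=rng)
greedy_total = run_episode(q_table, [0, 1], day, alpha=0.5, gamma=0.9, epsilon=0.0, rng=rng)
print(greedy_total)
```

After 300 exploratory episodes, a purely greedy episode picks the action favoured by the toy reward at every hour, so the episode total reaches its maximum of 12.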

3. Results

3.1. Case Study Results

This section analyzes the results obtained from the simulations of the solar cooling system in the proposed case study. This installation was simulated operating under a predictive control system and under a reinforcement learning control approach.

The reinforcement learning control approach was studied with the agent trained for different numbers of episodes (1, 10, 100, 1000 and 1500) over the defined study period.

Figure 7 illustrates the total reward obtained in each episode performed during the training of the Q agent. The total reward is the sum of the rewards obtained at every time step of each episode, calculated using Equation (2).

The total reward in the first episode was 29.83, and it kept oscillating between 29.66 and 31 during the following 250 episodes. After these episodes, the total reward began to increase progressively until it converged towards a value of 32 over approximately the last 500 episodes. The minimum and maximum total rewards were 29.66 and 32.25, reached in episodes 52 and 1421, respectively, a difference of 8.73% relative to the minimum.
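The 8.73% figure is the spread between the maximum and minimum total rewards expressed relative to the minimum, as this short check shows.

```python
r_min, r_max = 29.66, 32.25  # total rewards in episodes 52 and 1421
difference_pct = 100.0 * (r_max - r_min) / r_min
print(f"{difference_pct:.2f} %")  # 8.73 %
```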

The pattern of the curve shown in Figure 7 reflects the learning process of the Q agent. During the first 655 iterations, the behavior was oscillating and represented a phase dominated by the agent's exploration rather than by the search for the optimal sequence of actions that maximizes the reward. During this interval of episodes, the authors intentionally chose hyperparameter values (α, γ) that allowed the agent to explore the states and the proposed actions thoroughly.

From approximately episode 655, the total reward showed a pattern converging towards a value of 32, indicating that the Q agent was learning. During this interval, the authors chose hyperparameter values (α, γ) more focused on the learning process (incorporating the knowledge acquired in previous episodes) and on obtaining a long-term reward.

The training of the Q agent in the present study was completed in a total of 1500 iterations with an acceptable level of convergence, taking approximately 9.5 h on a computer with an Intel(R) Core(TM) i7-9750H processor and 32 GB of RAM.

Figure 8, Figure 9 and Figure 10 show the comparative evolution of the behavior of the simulated solar thermal cooling system as a function of the number of training episodes performed.

Figure 8 illustrates the hourly average tank temperature as a function of the number of agent training episodes, obtained from simulations of the proposed cooling installation using the reinforcement-learning-based control system. The resulting curves followed a decreasing and very regular trajectory for every number of episodes studied, with only slight differences in the temperature obtained for each hour of the studied day. This convergent behavior reflects the limitations of the simulated solar cooling system in terms of the solar thermal energy absorbed and stored, relative to the requirements that the RL algorithm must handle to optimize its operation and thereby reduce the contribution of auxiliary energy.

At the end of the training, a decrease was observed in the total electrical consumption of the pump that recirculates water in sub-circuit C of the system shown in Figure 1, as shown in Figure 9. This reflects the learning process of the agent and the proper selection and functioning of the proposed reward equation. The reduction in electrical consumption achieved between the beginning and the end of the training was 7.25%.

Figure 10 presents the influence of the number of training episodes on the auxiliary energy requirement of the solar thermal cooling system in the present case study. Auxiliary energy consumption decreased as the number of training episodes increased, which again demonstrates the learning process of the agent and the suitability of the proposed reward equation. The reduction in auxiliary energy consumption achieved over the training process was 4.52%.

The performance of the solar thermal cooling system of the present case study operating with a predictive control model and with a reinforcement learning control model was analyzed using the simulation results represented in Figures 11, 12, 13, 14 and 15.

Figure 11 shows the hourly total net solar heat transfer rate absorbed by the collector for each control approach studied. When the installation operated with the RL-based control system, the solar collection system worked without interruption from 7:00 to 18:00. However, when the installation was operated by the predictive control system, the solar collectors were deactivated after 13:00.

The deactivation at 13:00 occurred because, during the first hours of the day, the predictive control system was unable to find the optimal combination of water flow rate and temperature required by the generator of the absorption machine to satisfy the cooling demand. It therefore stored part of the absorbed solar energy and used auxiliary energy to drive the absorption machine. This storage progressively increased the tank temperature until the maximum setpoint temperature was reached, which caused the solar collectors to be deactivated, as can be seen in Figure 12.
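The mechanism behind the 13:00 deactivation can be reproduced qualitatively with an hourly energy balance of a fully mixed storage tank: when the controller stores more solar heat than the generator draws, the tank temperature climbs until it reaches the maximum setpoint and the collectors are switched off. This is a minimal sketch, not the EES model used in the study; the tank mass, setpoint, starting temperature and heat rates are hypothetical, and thermal losses and stratification are ignored.

```python
def simulate_tank(q_solar_kw, q_generator_kw, t_start=70.0, t_max=95.0,
                  mass_kg=5000.0, cp_kj_per_kg_k=4.18, dt_h=1.0):
    """Hourly balance of a fully mixed hot water tank (losses ignored).

    Collectors are allowed to deliver heat only while the tank is below
    t_max. All parameter values are hypothetical.
    """
    temp = t_start
    temps, collectors = [], []
    for q_sol, q_gen in zip(q_solar_kw, q_generator_kw):
        collectors_on = temp < t_max                 # setpoint check at start of hour
        gain = q_sol if collectors_on else 0.0       # solar heat lost when switched off
        energy_kj = (gain - q_gen) * dt_h * 3600.0   # kW over dt_h hours -> kJ
        temp += energy_kj / (mass_kg * cp_kj_per_kg_k)
        temps.append(temp)
        collectors.append(collectors_on)
    return temps, collectors
```

With 60 kW of solar gain and only 20 kW drawn by the generator, the tank in this sketch passes 95 °C after four hours and the collectors are switched off in the fifth hour; when the generator draws the full 60 kW, the temperature stays constant and the collectors remain on all day, which is the behavior the text attributes to the RL controller.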

The RL control approach is capable of finding the optimum flow rate and temperature of the water leaving the hot water storage tank to supply the absorption machine and satisfy the cooling requirements. This is reflected in the decrease in tank temperature shown in Figure 12, which allowed the solar collection system to remain operational throughout the hours of solar availability.

Because the RL-based control system regulated the solar cooling system more effectively, the installation absorbed 19.72% more solar energy than when it operated with the predictive control system.

Figure 13 compares the hourly cooling generation of the absorption machine under each control approach proposed in this study. The control system based on Q-learning (QL) matched the cooling demand of the system more precisely than the predictive control system. At 8:00, a deviation in the predictive control system led to an overproduction of cooling, increasing the amount of thermal energy that had to be supplied, which in this case was auxiliary energy, as shown in Figure 15.
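The "greater precision" in meeting the cooling demand could be quantified with a tracking metric computed from the hourly series in Figure 13. The sketch below uses mean absolute error and total overproduction; both metrics are assumptions for illustration, as the study compares the curves graphically.

```python
def tracking_errors(generation_kw, demand_kw):
    """Return (mean absolute error in kW, total overproduction in kWh) of
    hourly cooling generation against demand, assuming 1 h time steps."""
    if len(generation_kw) != len(demand_kw):
        raise ValueError("series must have the same length")
    diffs = [g - d for g, d in zip(generation_kw, demand_kw)]
    mae = sum(abs(x) for x in diffs) / len(diffs)
    overproduction = sum(x for x in diffs if x > 0)   # only surplus cooling counts
    return mae, overproduction
```

An isolated surplus, such as the one at 8:00 under predictive control, raises both values, whereas a controller that tracks the demand closely drives them towards zero.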

When the STCS of the present case study operated with the RL-based control system, the electrical consumption of the pump in sub-circuit C was lower than under predictive control, as illustrated in Figure 14. The RL control approach reduced the consumption of this pump by 1.68 kWh relative to the predictive control system, that is, by 16.86%.

Figure 15 shows the hourly heat transfer rate supplied by the auxiliary system to satisfy the cooling demand of the building. The thermal energy supplied by the auxiliary system was lower at every hour of the studied day when the installation operated with the RL-based control system, owing to this approach's ability to regulate the operation of the installation more effectively.

The high auxiliary energy consumption observed when the solar cooling installation operated with the predictive control system was due to its inability, during the first hours of the studied day, to find the optimal combination of water flow rate and temperature to supply the generator of the absorption machine according to the cooling demand.

Compared with the predictive control system, the RL control approach reduced auxiliary energy consumption by 1080 kWh, that is, by 34.68%.
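Since each saving is reported both as an absolute value and as a percentage, the underlying consumptions can be back-calculated from saving = fraction × baseline. The sketch below performs this arithmetic; the resulting figures are derived from the reported numbers (and inherit their rounding), not values stated in the study.

```python
def implied_baseline(saving, fraction):
    """Back-compute the baseline and the new consumption from an absolute
    saving and its relative value (saving = fraction * baseline)."""
    baseline = saving / fraction
    return baseline, baseline - saving

pump_pred, pump_rl = implied_baseline(1.68, 0.1686)   # pump, sub-circuit C [kWh]
aux_pred, aux_rl = implied_baseline(1080.0, 0.3468)   # auxiliary energy [kWh]
print(f"pump: {pump_pred:.2f} -> {pump_rl:.2f} kWh")
print(f"aux:  {aux_pred:.0f} -> {aux_rl:.0f} kWh")
```

This gives roughly 9.96 kWh (predictive) versus 8.28 kWh (RL) for the sub-circuit C pump, and roughly 3114 kWh versus 2034 kWh of auxiliary energy.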

3.2. Challenges of Control Models Based on RL for Operating Solar Thermal Cooling Systems

During this research work, several challenges and drawbacks were identified in applying RL-based control models to the operation of solar thermal cooling systems. They are described below:

Need for a large amount of data for agent training:

Agent training requires a large amount of data, which can be obtained directly from the environment and the installed system. In general, such data are not available, so models of the installation and its environment are commonly used instead. In that case, a large number of simulations is required to complete the agent training adequately;

Complexity in the definition of the reward equation and the hyperparameters:

Defining the reward equation is not a trivial task; it requires significant knowledge of the operation of the system, as well as of the mathematical models and computer programs to be used.
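To illustrate why the reward requires knowledge of the system, the sketch below shows one hypothetical per-step reward that penalizes cooling mismatch, auxiliary heat and pump electricity. It is not the reward equation used in the study (which is not given in this section); the terms and weights are assumptions, and choosing them well is precisely the system-specific tuning described above.

```python
def reward(cooling_kw, demand_kw, aux_kw, pump_kw,
           w_demand=1.0, w_aux=0.5, w_pump=0.2):
    """Hypothetical per-step reward (not the study's equation): penalize
    unmet or excess cooling, auxiliary heat and pump electricity."""
    mismatch = abs(cooling_kw - demand_kw)        # over- and under-production
    return -(w_demand * mismatch + w_aux * aux_kw + w_pump * pump_kw)
```

With these weights, 10 kW of auxiliary heat and a 5 kW cooling surplus are penalized equally; changing the weights changes which behavior the agent learns, which is one reason the reward must be re-tuned for each installation.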

The effectiveness of the RL model depends on the appropriate choice of states, actions, rewards and hyperparameters (α, γ), and it also requires a large number of simulated episodes for the agent to learn correctly. The hyperparameters (α, γ) and the reward equation must be adjusted according to the obtained results and the observed level of convergence.

Each solar cooling installation is different, with its own characteristics in terms of configuration, capacity, residual energy availability, climatic conditions, etc. Therefore, the reward equation and the values of the hyperparameters must be recalculated and tailored to each installation. This entails many hours of simulation, analysis and operational adjustment, which has an economic impact;

Complexity in the manipulation of data and interrelationships between variables:

One of the main challenges in implementing RL models to regulate solar thermal cooling systems is the complex programming and data manipulation required by the large number of variables involved in their operation and by the complex interactions between them.

One way to deal with this complexity in programming and data management is to combine and integrate two programs: one specifically designed to simulate thermal systems and another for data handling and complex programming. This procedure was used successfully in this research work, providing practicality, ease of use, time savings and efficiency both during agent training and during operation of the installation with the RL-based control system (with the agent already trained).
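The division of labor between a data-handling program and a thermal simulator can be expressed as an episodic environment whose `step` method delegates the physics to an external simulator. The sketch below is written under stated assumptions: the `Simulator` signature (tank temperature and action in; new tank temperature, auxiliary heat and pump power out) and the class name `CoolingEnv` are hypothetical. In the study, this role was played by an EES model reached through a communication gateway whose interface is not described here, so any Python callable stands in for it.

```python
from typing import Callable, Tuple

# Hypothetical simulator interface: (tank_temp, action) -> (new_tank_temp,
# aux_kw, pump_kw). In the study, an EES model played this role.
Simulator = Callable[[float, float], Tuple[float, float, float]]

class CoolingEnv:
    """Minimal hourly episodic environment that delegates physics to a simulator."""

    def __init__(self, simulate: Simulator, reward_fn, hours: int = 24):
        self.simulate = simulate
        self.reward_fn = reward_fn
        self.hours = hours
        self.hour = 0
        self.tank_temp = 0.0

    def reset(self, tank_temp: float) -> tuple:
        """Start a new episode (one simulated day) from a given tank temperature."""
        self.hour = 0
        self.tank_temp = tank_temp
        return (self.hour, self.tank_temp)

    def step(self, action: float):
        """Run one simulated hour and return (state, reward, done)."""
        self.tank_temp, aux_kw, pump_kw = self.simulate(self.tank_temp, action)
        r = self.reward_fn(aux_kw, pump_kw)
        self.hour += 1
        done = self.hour >= self.hours
        return (self.hour, self.tank_temp), r, done
```

Keeping the simulator behind a single callable means the Q-learning code never depends on how the thermal model is evaluated, so the same agent can be trained against a simulation and later connected to the real installation.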

Despite the challenges and drawbacks described above regarding the applicability of RL-based control models, the obtained results demonstrate their considerable potential for regulating and operating this type of system.

3.3. Control Scheme for Solar Thermal Cooling System Based on RL for Real Implementation

Figure 16 presents, at a conceptual level, a schematic diagram of a control model based on Q-learning for real implementation. Depending on the complexity of the system and on the number of states, actions and variables to be controlled, a more powerful algorithm, such as deep Q-learning, may be necessary.
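In a real installation, a trained tabular agent must map continuous sensor readings onto a discrete state before looking up its Q-table, and then act greedily. The sketch below assumes three measured variables and hypothetical bin edges; it also shows why the table grows quickly: with four bins per variable there are already 4 × 4 × 4 = 64 states, and each additional variable multiplies this number, which is the point at which deep Q-learning (a neural network in place of the table) becomes attractive.

```python
import numpy as np

# Hypothetical bin edges for three measured variables.
TANK_BINS = np.array([70.0, 80.0, 90.0])     # tank temperature [°C]
IRR_BINS = np.array([200.0, 500.0, 800.0])   # solar irradiance [W/m²]
LOAD_BINS = np.array([10.0, 30.0, 50.0])     # cooling demand [kW]
N_BINS = len(TANK_BINS) + 1                  # np.digitize returns 0..len(bins)

def state_index(tank_c: float, irr_w_m2: float, load_kw: float) -> int:
    """Map continuous readings to a single Q-table row index."""
    i = int(np.digitize(tank_c, TANK_BINS))
    j = int(np.digitize(irr_w_m2, IRR_BINS))
    k = int(np.digitize(load_kw, LOAD_BINS))
    return (i * N_BINS + j) * N_BINS + k

def greedy_action(q_table: np.ndarray, state: int) -> int:
    """Pick the action with the highest learned value (no exploration at run time)."""
    return int(np.argmax(q_table[state]))
```

For example, a tank at 75 °C, 600 W/m² of irradiance and a 5 kW cooling demand map to state 24 of 64; the controller then applies whichever discrete action (for instance, a pump flow setting) has the largest Q-value in that row.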

4. Conclusions

A control model based on the reinforcement learning technique was implemented for the operation of the solar thermal cooling system driven by linear Fresnel collectors proposed for the case study of this research work.

The control model was implemented through the interaction of a QL module developed in Python and a simulation module of an SCTS_LFC developed in EES. This interaction was achieved through a programming procedure developed in EES that served as a communication gateway between the two modules.

In the absence of experimental data on the studied building, the HVAC system and the solar cooling installation, the agent was trained using models. The solar thermal cooling system driven by linear Fresnel collectors was simulated using the tool in [52], and the building and its HVAC system were modeled with the software Design Builder. Depending on the required level of detail, modeling a building and its corresponding HVAC system may take 40 to 100 h.

The results of the simulations demonstrate the advantages of the RL-based control system over the predictive control approach. For the proposed case study, operating the solar cooling installation with the RL-based control system reduced the auxiliary energy requirements by 35% and the electrical consumption of the pump that feeds the absorption machine by 17%.

Although, for simplicity, only some variables of the SCTS_LFC were controlled by the developed RL approach in the present research work, the potential of this approach for controlling and operating this type of thermal system was verified. This demonstrates that reinforcement learning can open a new door to energy optimization, not only for solar thermal cooling systems but also for other types of thermodynamic systems with sequential processes.

The effectiveness of the RL model depends on the appropriate choice of states, actions, rewards and hyperparameters (α, γ), and it also requires a large number of simulated episodes for the agent to learn correctly.

The hyperparameters (α, γ) must be adjusted according to the obtained results and the observed level of convergence. In this research work, 1500 episodes were performed to train the agent. Hyperparameter values were first chosen to allow deep exploration by the agent; greater importance was then given to achieving larger long-term rewards.

Training the agent took 9.5 h on a computer with an Intel(R) Core(TM) i7-9750H processor and 32 GB of RAM.

Finally, a control scheme based on RL for solar thermal cooling systems driven by linear Fresnel collectors was proposed at a conceptual level, considering all the variables of the system's components that could be controlled to optimize its operation. Future research could analyze this scenario, although it would require more powerful QL algorithms capable of handling a larger number of variables, states and actions.