A Review on Observer Assistance Systems for Harvested and Protected Fish Species

Abstract

1. Introduction

2. Related Research

2.1. Harvest Estimation

2.2. Fish Species Estimation

2.3. Electronic Monitoring System

3. Proposed Electronic Monitoring System

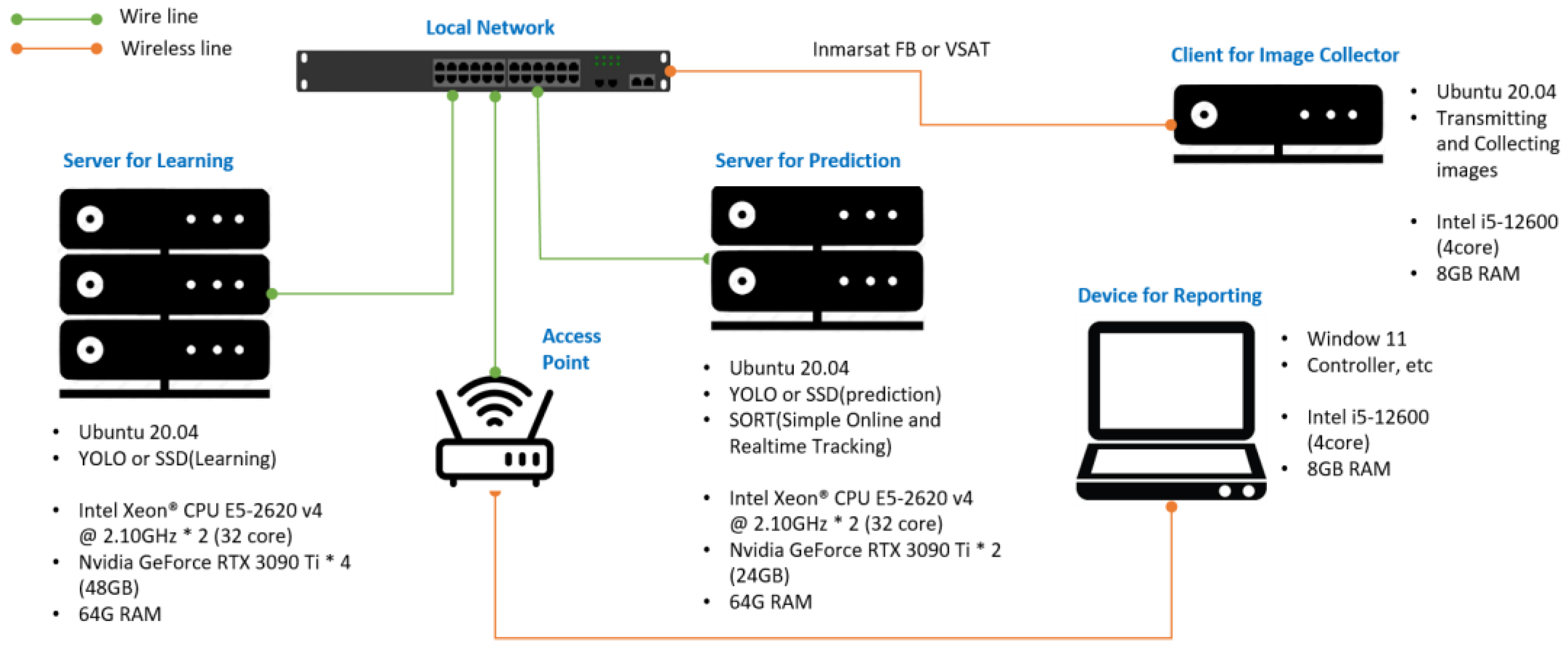

3.1. An Overview of Hardware System

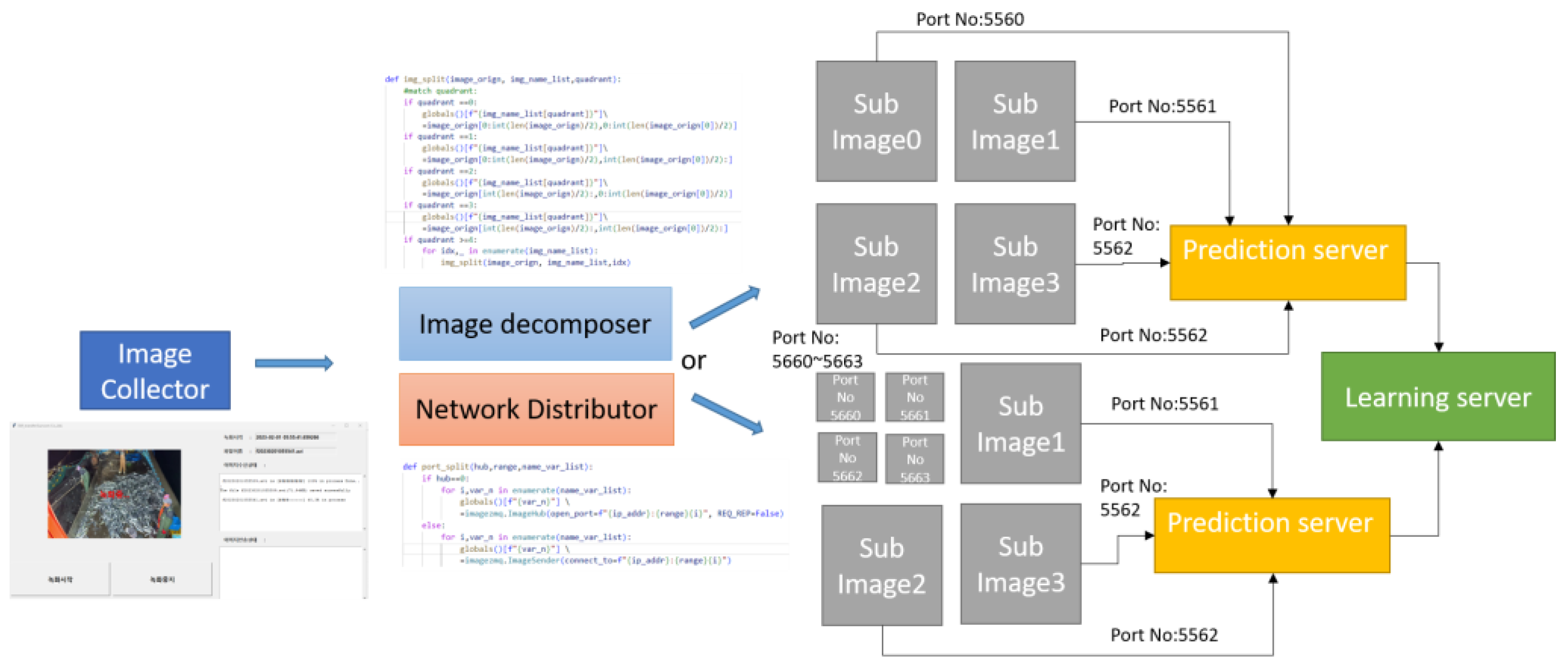

3.2. Software Structure

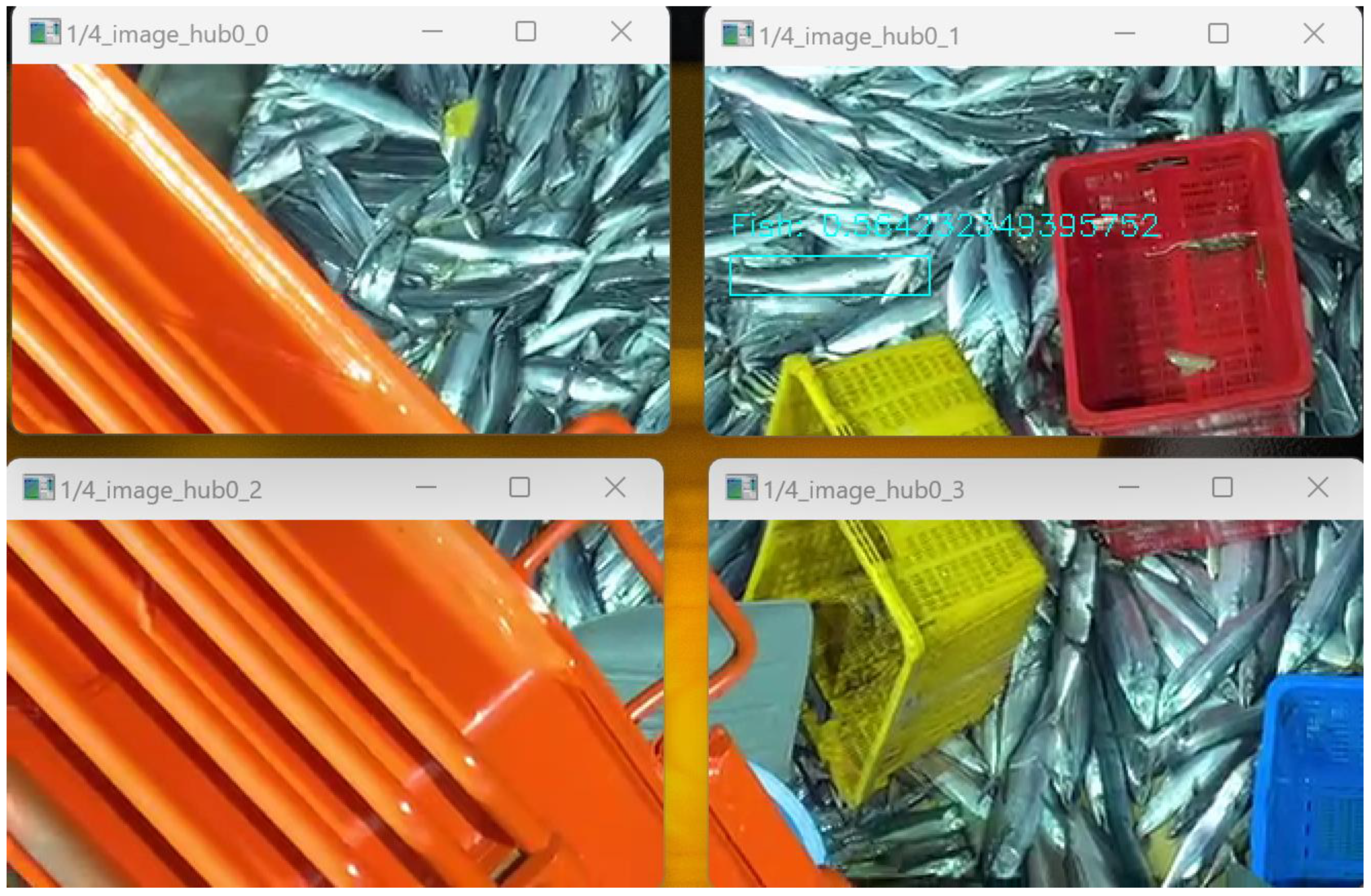

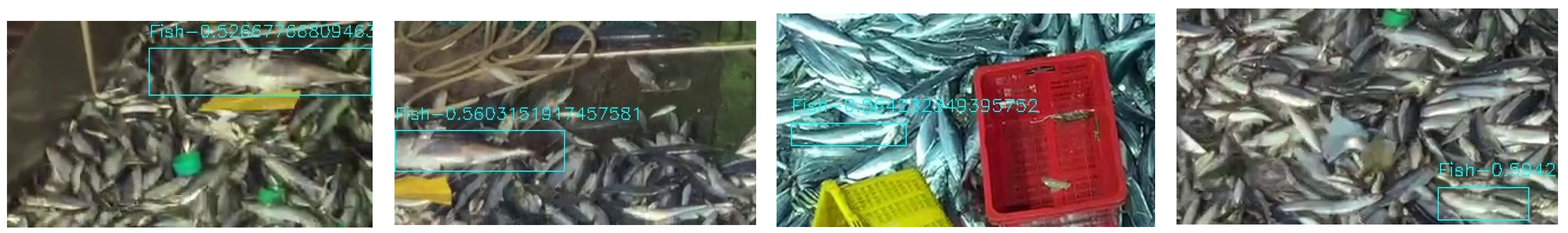

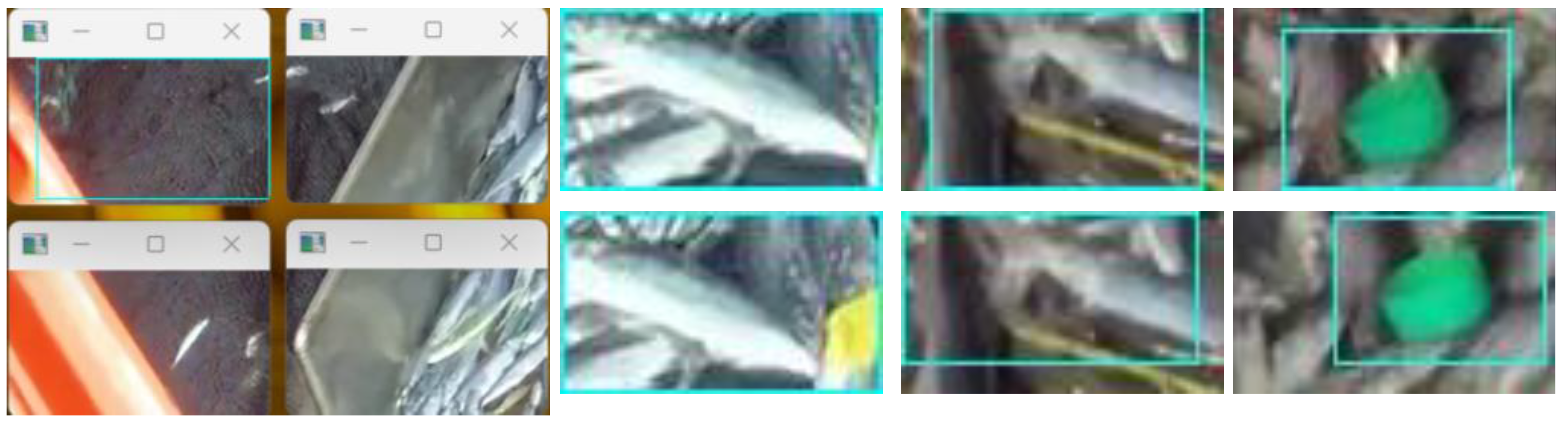

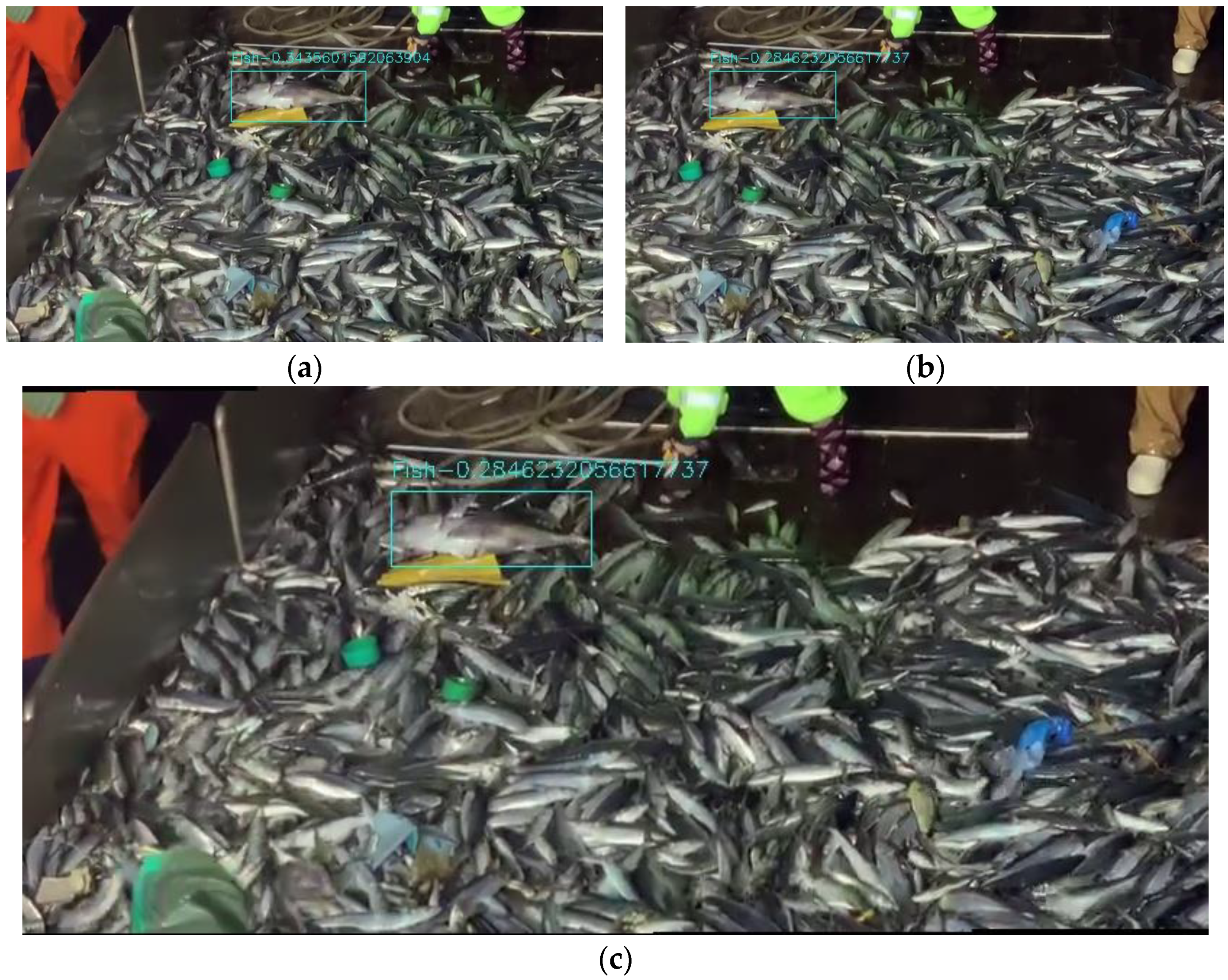

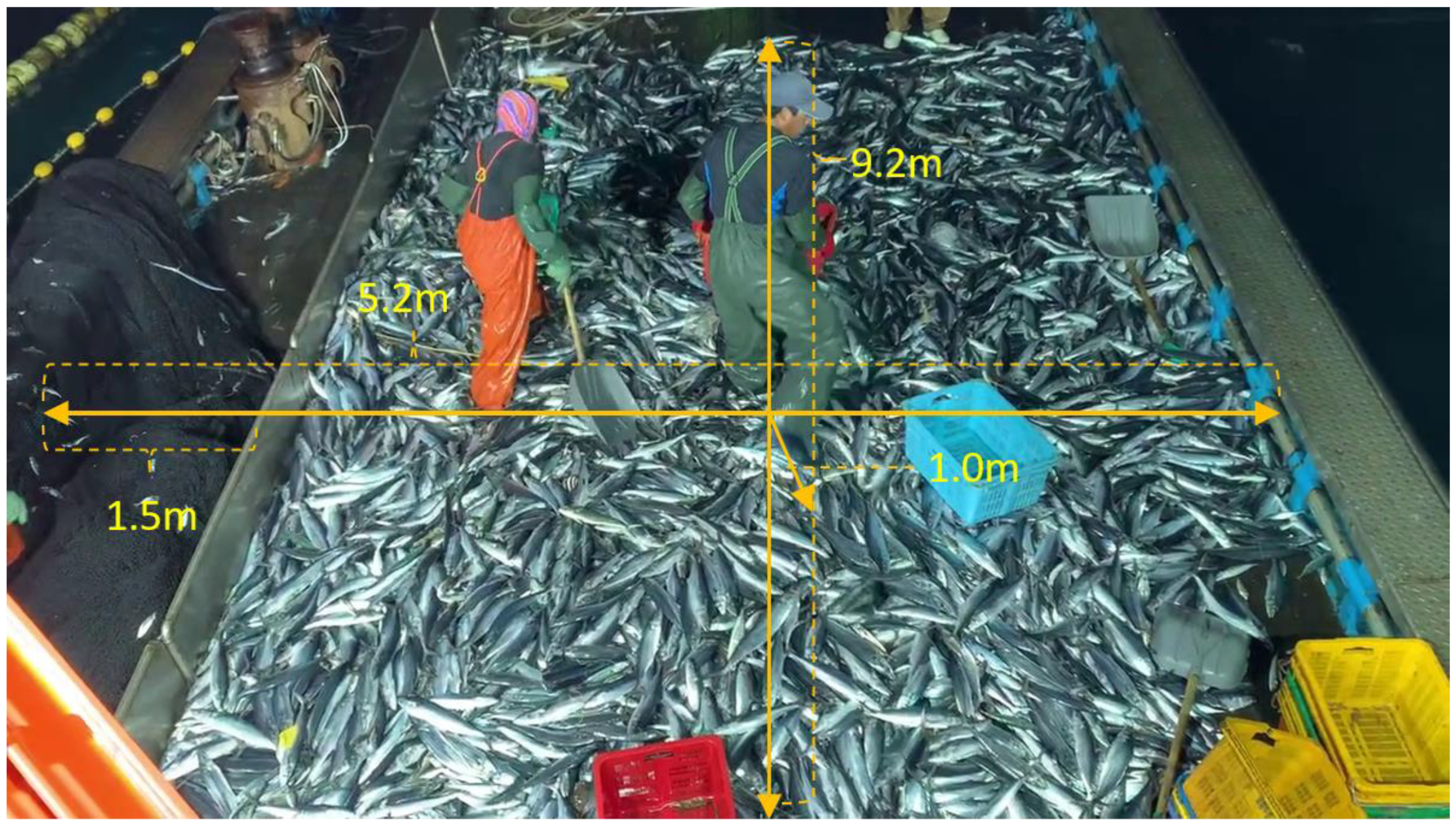

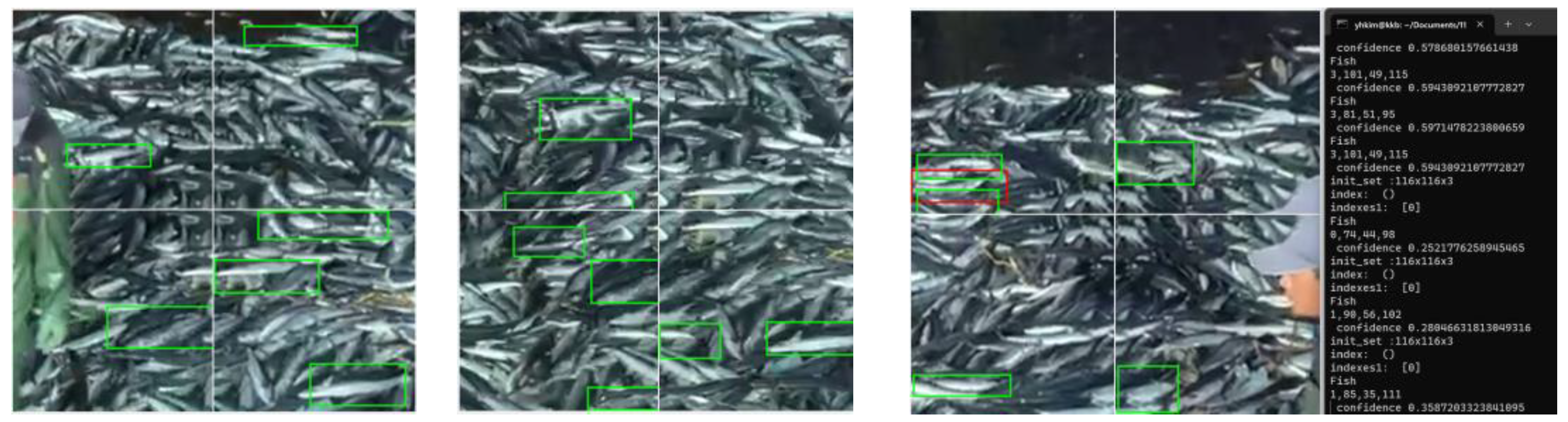

4. Experiments on Electronic Monitoring Systems

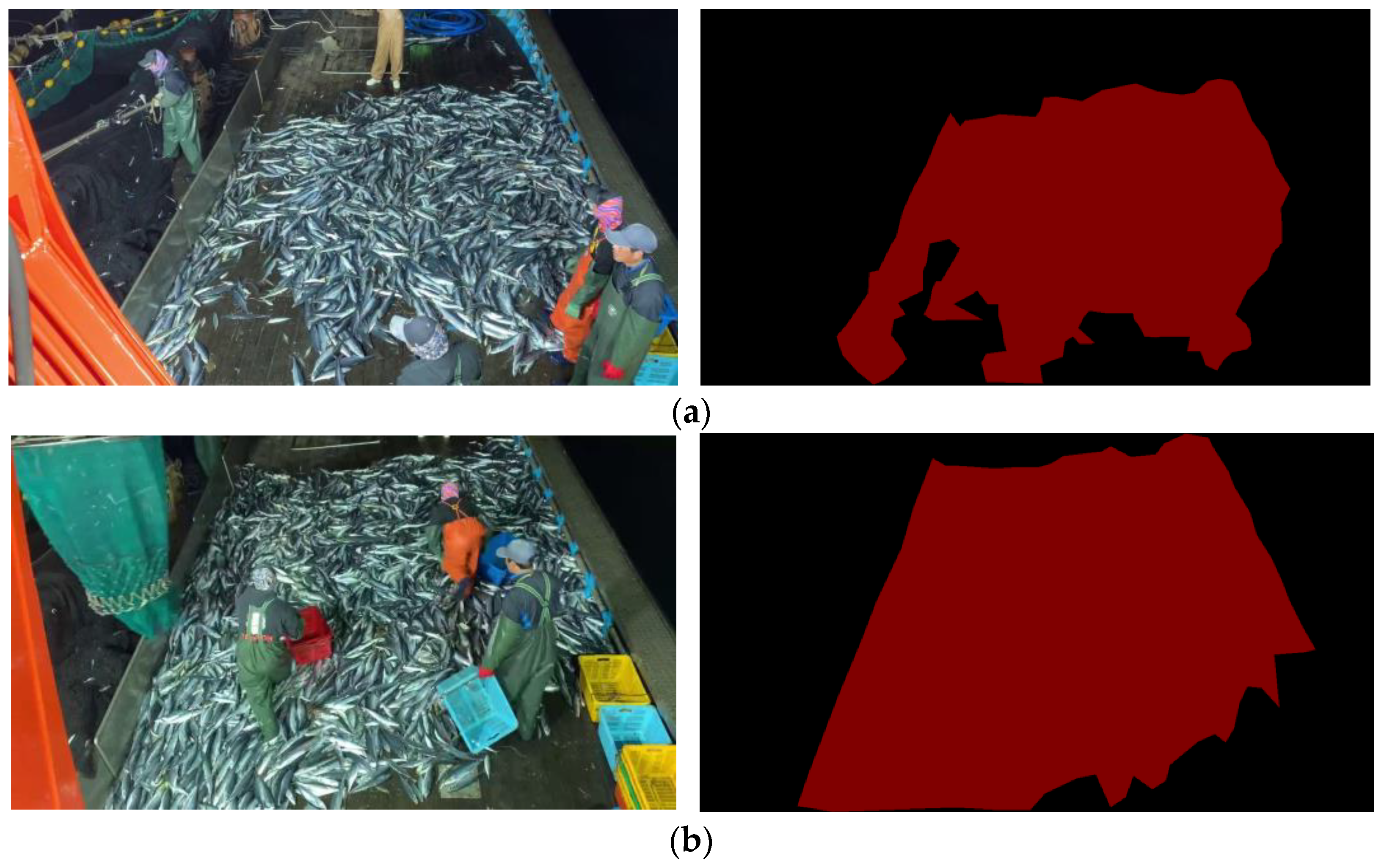

Experiments for Inferring Harvest

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| MCS elements 1 | CCSBT | FFA | IATTC | ICCAT | IOTC | WCPFC |
|---|---|---|---|---|---|---|
| VMS 2: | o 3 | o | o | o | o | o |
| -Operating entity | Shipper | Secretariat | Shipper | Shipper | Shipper | Shipper |
| -Target boat | Over 24 m | Over 24 m | Over 24 m | Over 24 m | Over 24 m | Over 24 m |
| -Reporting interval | 6 h | 6 h | 6 h | 6 h | 6 h | 6 h |
| Observer system: | o | o | o (Surrounding net) | o | x 4 | o |
| -Task | Scientific research | Scientific research, surveillance | Surveillance | Scientific research | - | Scientific research, surveillance |
| -Operating entity | Shipper | Nearshore | Shipper, secretariat | Shipper | - | Shipper, secretariat |
| -Boarding ratio | 10% | Bilateral agreement | 100% | 10% | - | 10% |
| -Bear costs | x | Vessel, shipowner | Shipper, secretariat | x | - | Shipowner, shipper |
| Monitoring port state | x | o | x | o | o | o |
| Monitoring boarding on the high seas | x | x | x | x | x | o |
| Surveilling the entry of goods into ship | x | o (Prohibition) | x | o | x | o |
| Statistical document preparation | o | - | o | o | o | o |
| List of IUU fishing vessel name | o | x | o | o | o | o |
| Fishing vessel registration | o | o | o | o | o | o |

| Classification | Ship Specification |
|---|---|
| Ship name | Seonrin |
| Gross tonnage | 22 tons |
| Length overall | 18.6 m |
| Beam | 5.2 m |

| Category | Pixel (Mean) | Area (m²) | After Applying Weights 1 | After Applying Weights 2 | Length (m) (Piece of Fish) |
|---|---|---|---|---|---|
| Real data | 445,938 | 22.62942 | 452.5884 | 0.075431 | 0.274648 |
| Inferential Statistics | 424,721 | 22.04715 | 440.943 | 0.07349 | 0.271091 |
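The gap between the "Real data" and "Inferential Statistics" rows of the table above can be expressed as a relative difference per column. The following is a minimal sketch, not the authors' evaluation procedure: the dictionary keys and the `relative_difference` helper are hypothetical names introduced here for illustration, and only the numeric values are taken from the table.

```python
# Values copied from the two rows of the table above.
# The key names are illustrative assumptions, not identifiers from the paper.
real = {"pixel_mean": 445_938, "area_m2": 22.62942, "length_m": 0.274648}
inferred = {"pixel_mean": 424_721, "area_m2": 22.04715, "length_m": 0.271091}


def relative_difference(reference: float, estimate: float) -> float:
    """Return |reference - estimate| / |reference|."""
    return abs(reference - estimate) / abs(reference)


# Relative difference of the inferred value against the real value, per column.
rel = {key: relative_difference(real[key], inferred[key]) for key in real}

for key, value in rel.items():
    print(f"{key}: {value:.2%}")
```

Under these assumptions the inferred values differ from the real data by roughly 4.8% in mean pixel count, 2.6% in area, and 1.3% in per-fish length.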


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Koh, T.; Kim, Y. A Review on Observer Assistance Systems for Harvested and Protected Fish Species. Processes 2023, 11, 1261. https://doi.org/10.3390/pr11041261
