A Novel Cellular Network Traffic Prediction Algorithm Based on Graph Convolution Neural Networks and Long Short-Term Memory through Extraction of Spatial-Temporal Characteristics

Abstract

1. Introduction

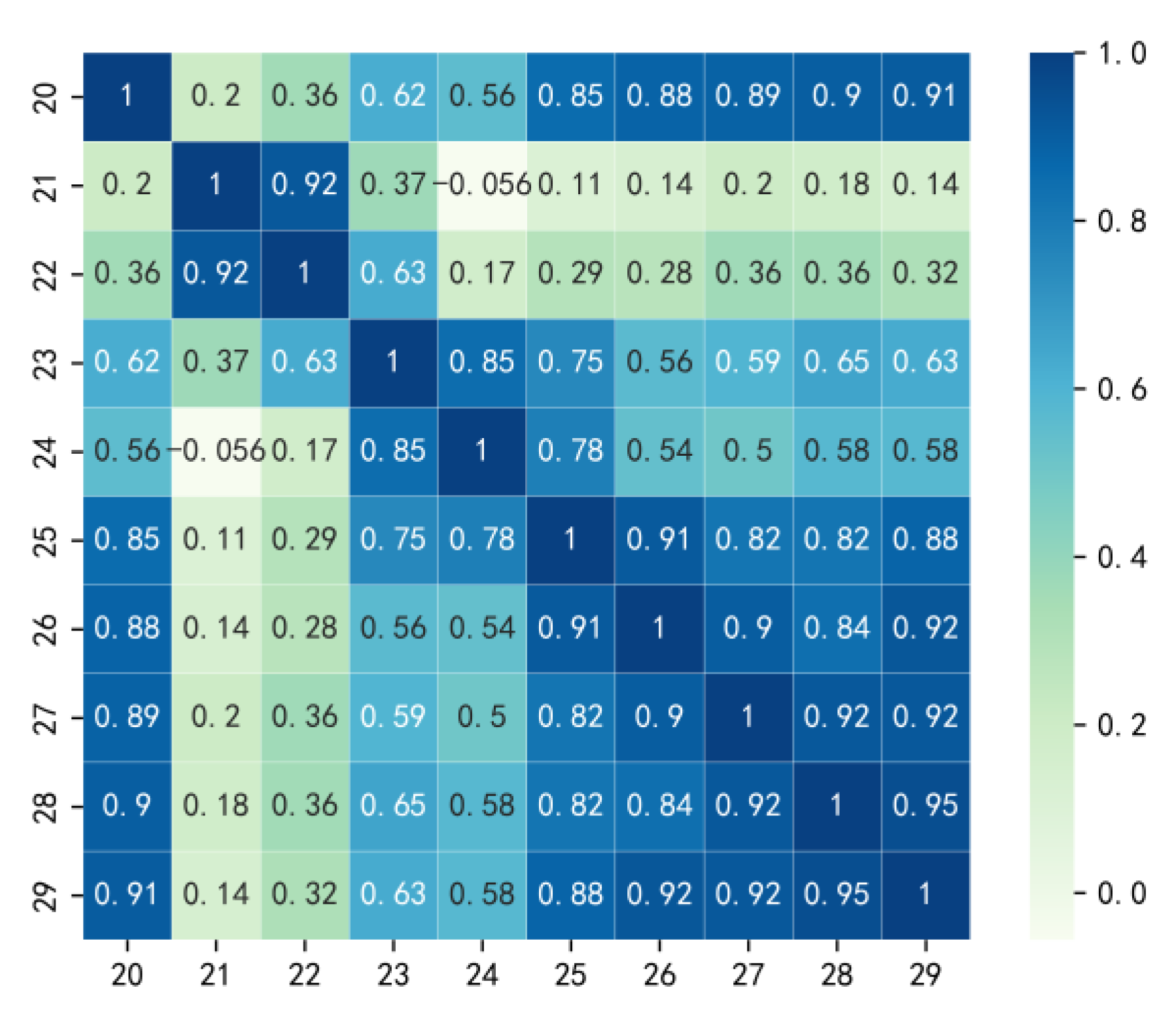

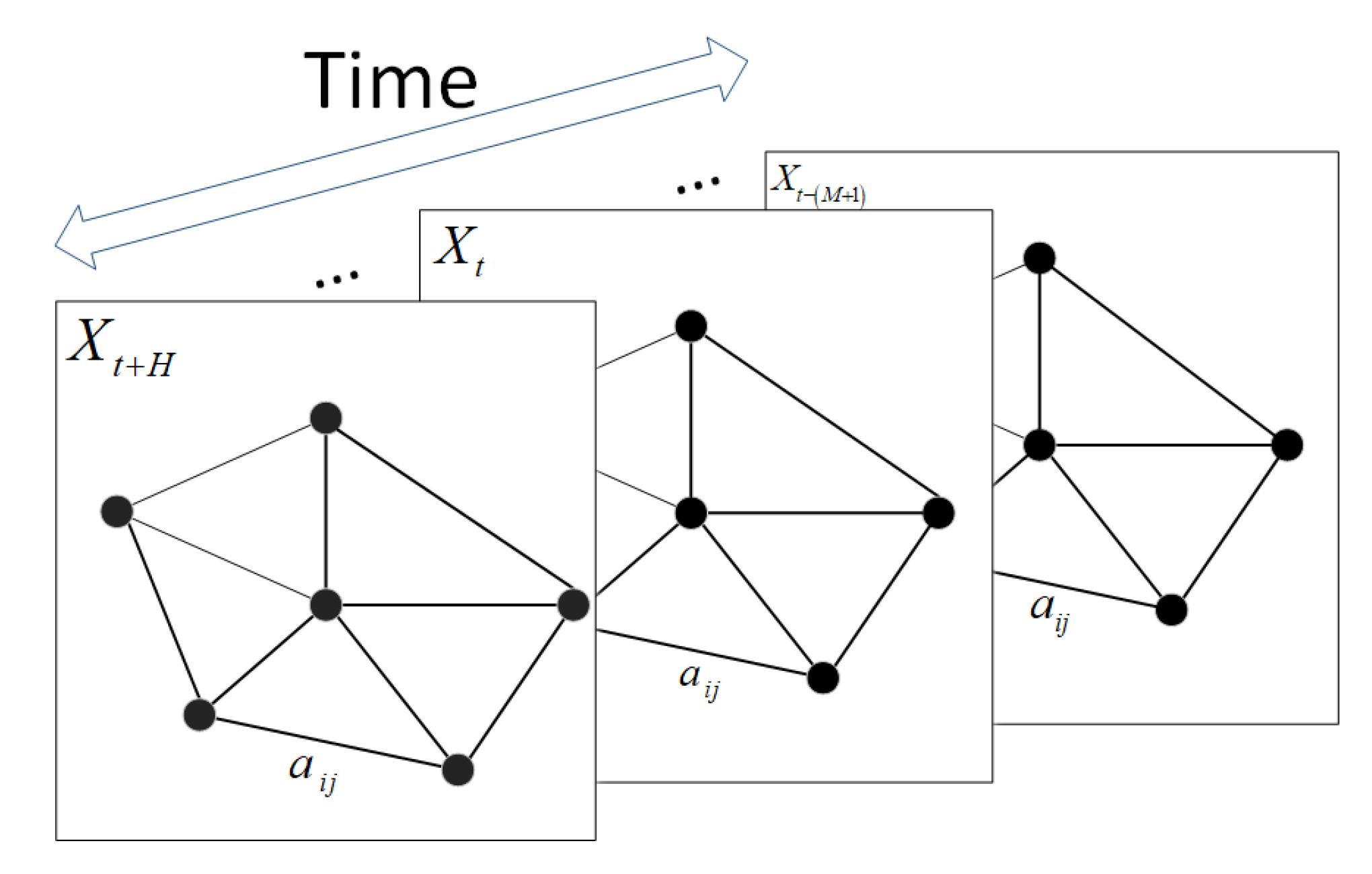

- We build the cellular network on graphs based on the spatial correlation between cells within the same period. The Pearson correlation coefficient measures the traffic correlation between all pairs of cells at the t-th time step, and the Euclidean distance measures the spatial distance between them. The two together represent the spatial correlation and are used to construct the adjacency matrix, so the cellular network is represented as a graph at each time step, and the spatial graph differs from one time step to the next.

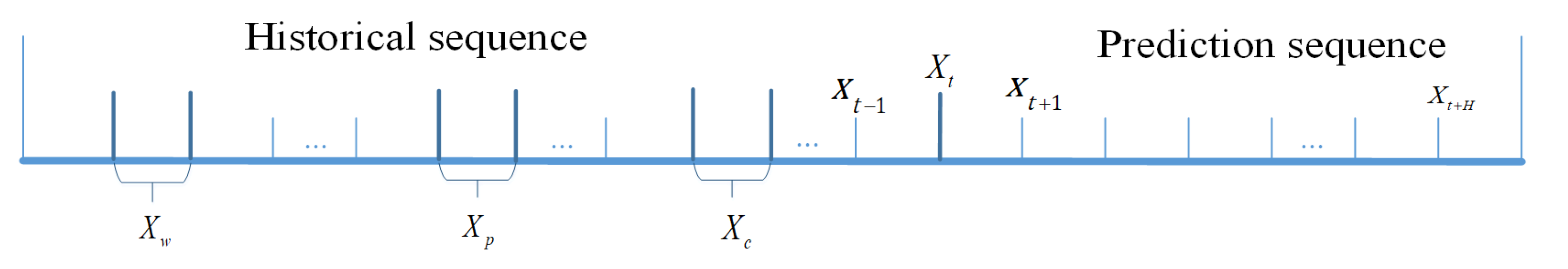

- We build the cellular network on time series based on the principles of temporal proximity and periodicity. For each time step t, an hourly sequence, a daily sequence, and a weekly sequence are computed according to these principles, and the sequences of all time steps are then combined to obtain the final hourly, daily, and weekly sequences.

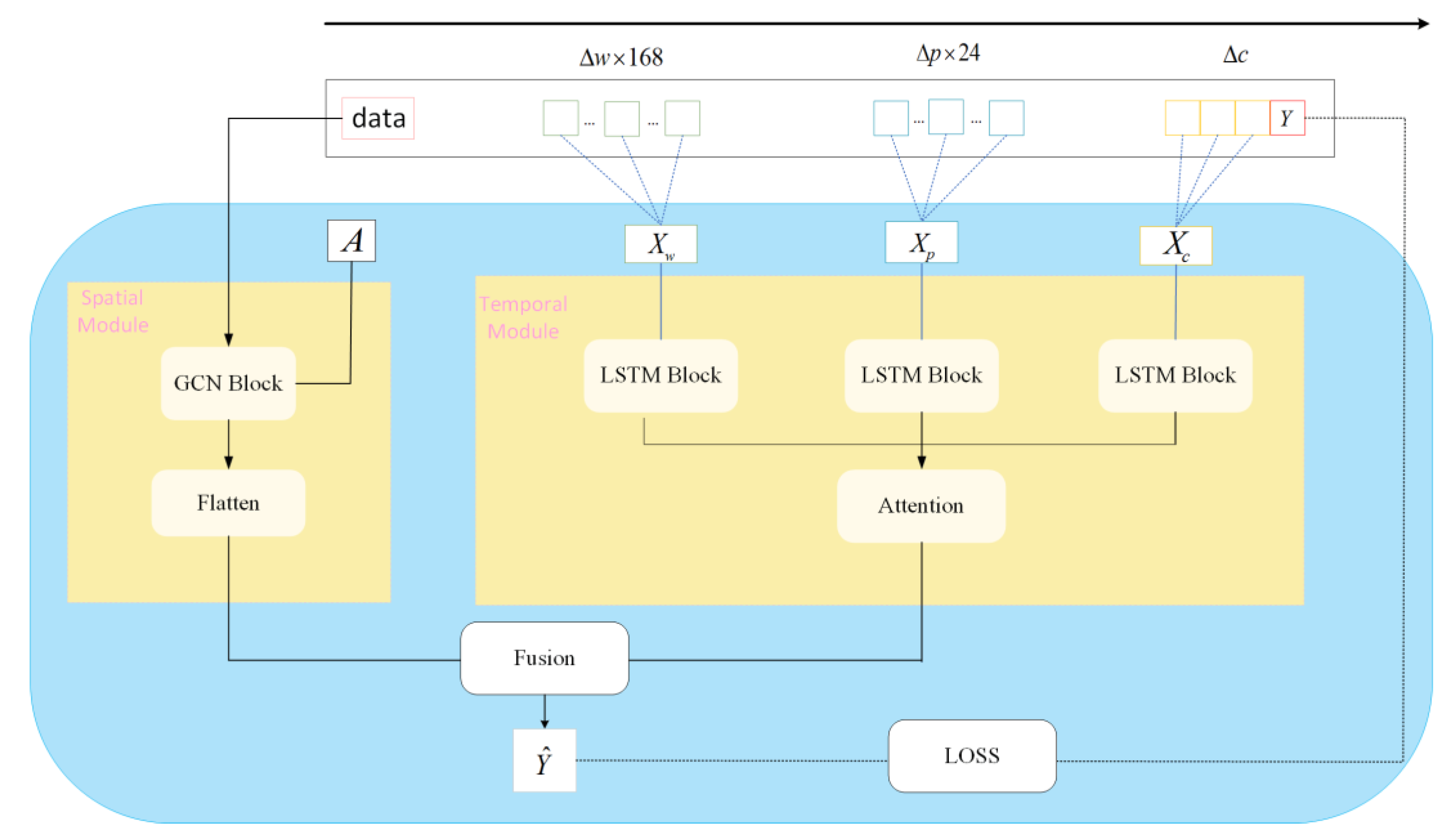

- A spatial-temporal parallel module is proposed to consider both spatial and temporal characteristics. The graph convolutional network (GCN) module learns the spatial characteristics of the graph data, and the long short-term memory (LSTM) module learns the temporal characteristics of the three kinds of time series. The two modules run in parallel, so the input and output of one do not affect the other. This allows us to capture spatial and temporal dependencies more realistically and effectively.

- Simulation experiments are carried out on real datasets and compared with other models. The experimental results show that our model achieves lower RMSE and MAE and a higher R² than the baseline models in most of the tested settings.
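The first contribution above says that the adjacency matrix combines the Pearson correlation and the Euclidean distance, but this list does not give the combination rule. The following is a minimal sketch under stated assumptions: the correlation is computed over a short window of recent traffic values, the distance is turned into a closeness weight with a Gaussian kernel, and weakly correlated pairs are dropped. The function name `build_adjacency` and the parameters `corr_threshold` and `sigma` are hypothetical and are not taken from the paper.

```python
import numpy as np


def build_adjacency(traffic, coords, corr_threshold=0.5, sigma=2.0):
    """Sketch of an adjacency matrix for one time step.

    traffic: (num_cells, window) recent traffic values of each cell
    coords:  (num_cells, 2) grid coordinates of each cell
    The combination rule, corr_threshold and sigma are assumptions.
    """
    traffic = np.asarray(traffic, dtype=float)
    coords = np.asarray(coords, dtype=float)

    # Pearson correlation between every pair of cells (rows).
    with np.errstate(invalid="ignore", divide="ignore"):
        corr = np.corrcoef(traffic)
    corr = np.nan_to_num(corr)  # constant series yield NaN; treat as uncorrelated

    # Euclidean distance between every pair of cells.
    diff = coords[:, None, :] - coords[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))

    # Assumed rule: a Gaussian kernel maps distance to a closeness in (0, 1],
    # and an edge is kept only where the correlation reaches the threshold.
    closeness = np.exp(-(dist ** 2) / (2.0 * sigma ** 2))
    adj = np.where(corr >= corr_threshold, corr * closeness, 0.0)
    np.fill_diagonal(adj, 0.0)  # no self-loops in the raw adjacency
    return adj
```

Calling `build_adjacency` once per time step, each time with the traffic window that ends at that step, yields a different graph for each step, which matches the description above.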

2. Related Work

3. Datasets

3.1. Data Sources

- (SMS-in) Number of SMS messages received within the cell: If any user receives an SMS message in any cell, a record of the SMS message reception service will be generated for that cell;

- (SMS-out) Number of SMS messages sent within the cell: If any user sends an SMS message in any cell, a record of the SMS message sending service will be generated for that cell;

- (Call-in) Number of calls received within the cell: If any user receives a call in any cell, a record of the incoming call will be generated for that cell;

- (Call-out) Number of calls made within the cell: If any user makes a call in any cell, a record of the outgoing call will be generated for that cell;

- (Internet) Wireless network traffic data within the cell: If any user initiates an Internet connection or ends an Internet connection in any cell, a record of wireless network traffic data services will be generated for that cell. A record will also be generated if the connection lasts longer than 15 min or if the user transmits more than 5 MB during the same connection.

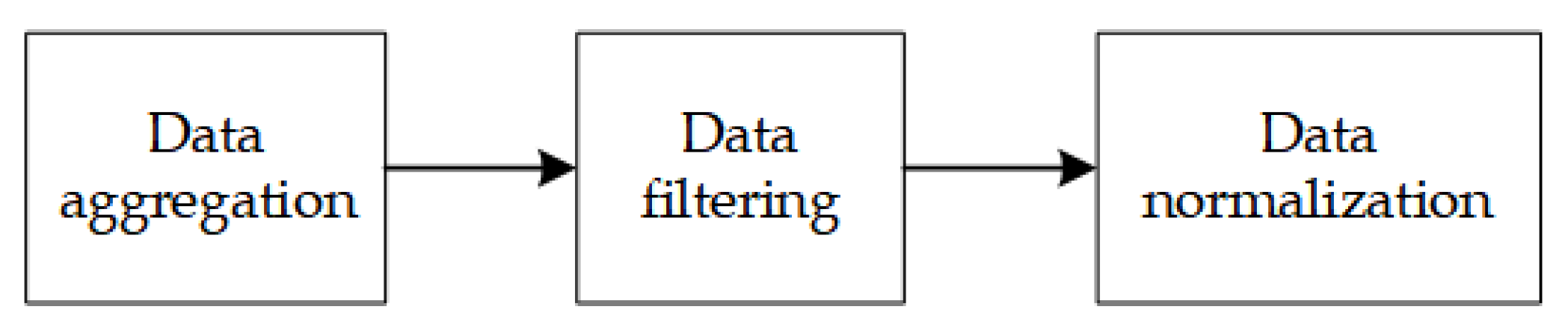

3.2. Data Pre-Processing

- Data aggregation. The original dataset is aggregated at a granularity of 10 min, which leaves many cells with sparse cellular network data, most of the recorded values being 0. Therefore, this paper takes 1 h as the time granularity of the statistics so that most of the data have a nonzero value, which ensures the validity of the data.

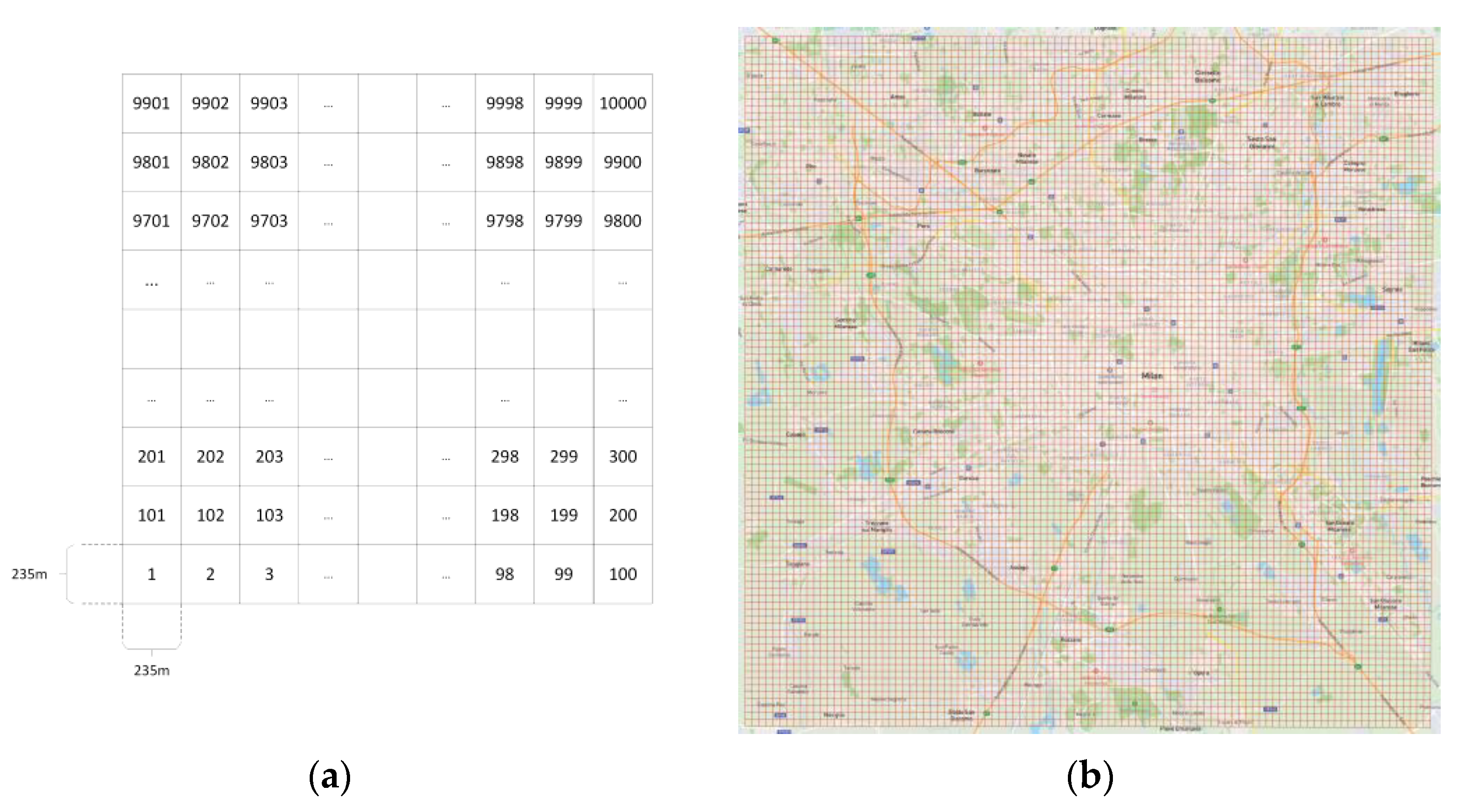

- Data filtering. The original dataset divides the city of Milan into 100 × 100 cells, from which we choose a block of 20 × 20 cells as the dataset used in this paper. The chosen cell IDs are 4041–4060, 4141–4160, …, 5941–5960.

- Data normalization. In this paper, the data are scaled to the interval [0, 1] using min–max normalization. When the experimental results are analyzed and compared in Section 5, the values are mapped back to the original data range.
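The three pre-processing steps above can be sketched in plain Python. This is an illustration under assumptions, not the authors' code: hourly aggregation is assumed to sum six consecutive 10 min values, the ID formula simply reproduces the listed ID pattern, and all function names are hypothetical.

```python
def selected_cell_ids(first_row=40, first_col=41, size=20, grid_width=100):
    """Cell IDs 4041-4060, 4141-4160, ..., 5941-5960 of the 20 x 20 block."""
    return [row * grid_width + col
            for row in range(first_row, first_row + size)
            for col in range(first_col, first_col + size)]


def aggregate_hourly(ten_min_values, samples_per_hour=6):
    """Sum consecutive 10 min values into hourly values (assumed: sum, not mean)."""
    usable = len(ten_min_values) - len(ten_min_values) % samples_per_hour
    return [sum(ten_min_values[i:i + samples_per_hour])
            for i in range(0, usable, samples_per_hour)]  # drops an incomplete last hour


def min_max_fit(values):
    """Return the minimum and the span used for scaling."""
    lo, hi = min(values), max(values)
    return lo, (hi - lo) or 1.0  # avoid division by zero for constant data


def min_max_scale(values, lo, span):
    """Map values to the interval [0, 1]."""
    return [(v - lo) / span for v in values]


def min_max_invert(scaled, lo, span):
    """Map scaled values back to the original data range."""
    return [s * span + lo for s in scaled]
```

Keeping `lo` and `span` from the fitting step is what makes it possible to map predictions back to the original range before computing the evaluation metrics.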

3.3. Data Analysis

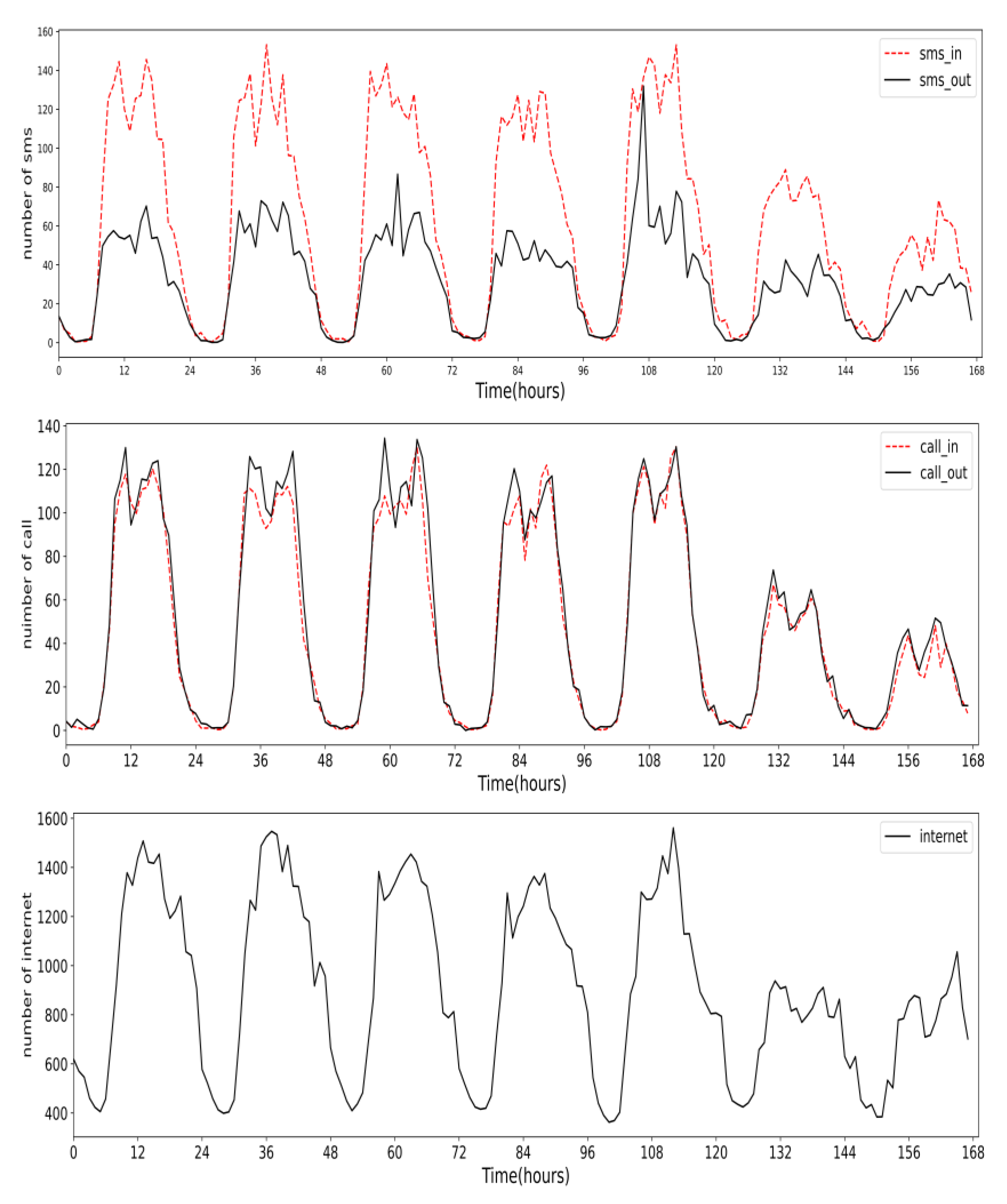

3.3.1. Temporal Characterization

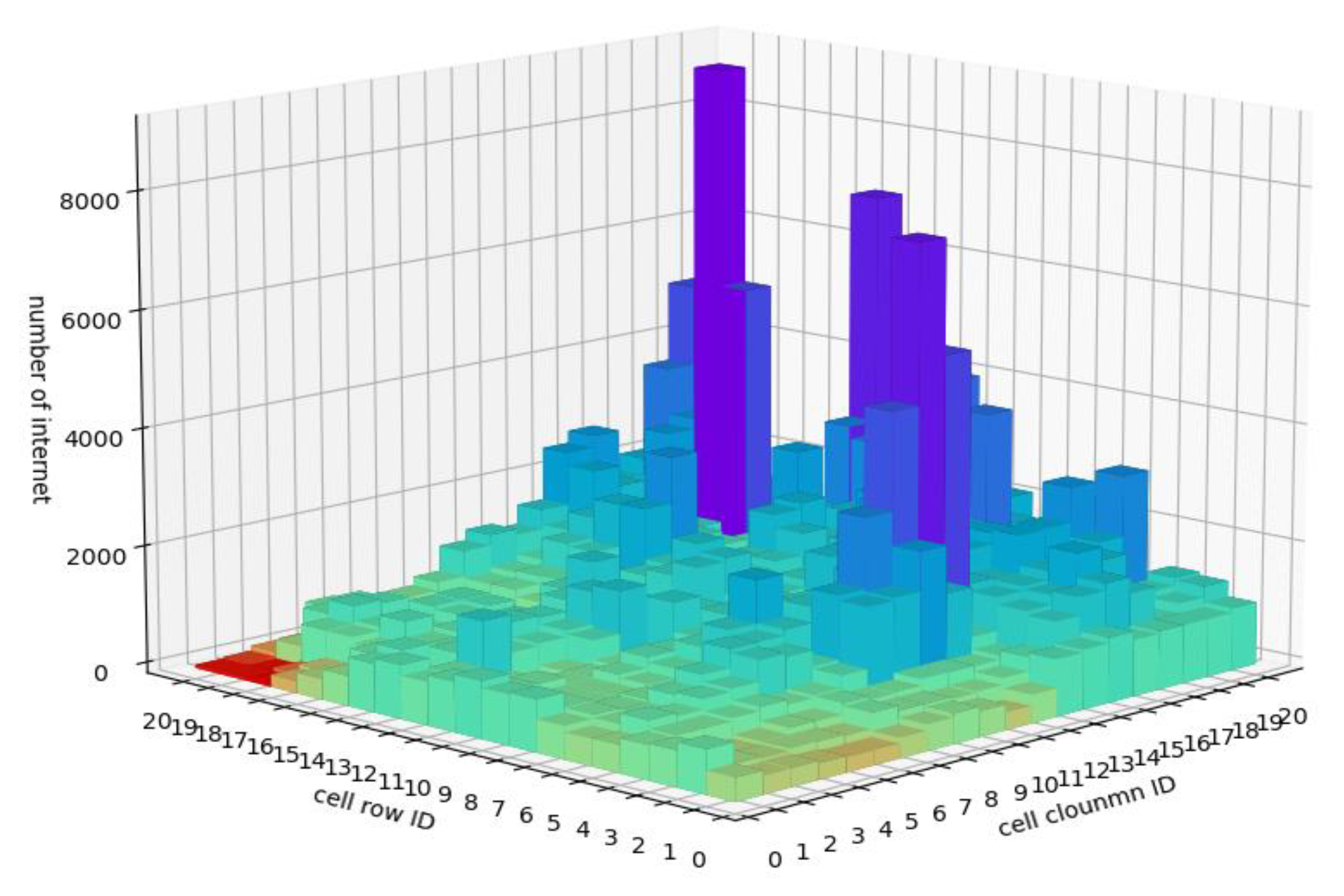

3.3.2. Spatial Characterization

4. Data Construction and Forecast Model

4.1. Graph Data Construction

4.2. Time Series Data Construction
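Section 1 describes an hourly, a daily, and a weekly sequence for each time step t, built from temporal proximity and periodicity. A minimal sketch of one common way to build such sequences follows. It assumes hourly data, so one day is 24 steps and one week is 168 steps. The sequence lengths `n_hours`, `n_days`, and `n_weeks` and the function names are hypothetical, not values from the paper.

```python
HOURS_PER_DAY = 24
HOURS_PER_WEEK = 168


def build_sequences(series, t, n_hours=3, n_days=3, n_weeks=2):
    """Return (hourly, daily, weekly) history for target index t.

    series: hourly observations, oldest first (scalars or per-cell vectors)
    hourly: the n_hours steps immediately before t (proximity)
    daily:  the same hour on the n_days previous days (daily periodicity)
    weekly: the same hour on the n_weeks previous weeks (weekly periodicity)
    """
    earliest = t - max(n_hours, HOURS_PER_DAY * n_days, HOURS_PER_WEEK * n_weeks)
    if earliest < 0 or t > len(series):
        raise ValueError("not enough history for target index t")
    hourly = [series[t - k] for k in range(n_hours, 0, -1)]
    daily = [series[t - HOURS_PER_DAY * k] for k in range(n_days, 0, -1)]
    weekly = [series[t - HOURS_PER_WEEK * k] for k in range(n_weeks, 0, -1)]
    return hourly, daily, weekly


def build_all_sequences(series, n_hours=3, n_days=3, n_weeks=2):
    """Pair the three sequences with the target value for every usable t."""
    first_t = max(n_hours, HOURS_PER_DAY * n_days, HOURS_PER_WEEK * n_weeks)
    return [(build_sequences(series, t, n_hours, n_days, n_weeks), series[t])
            for t in range(first_t, len(series))]
```

With the default lengths, the first usable target index is 336, because the weekly sequence needs two full weeks of history.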

4.2.1. Hourly Sequence

4.2.2. Daily Sequence

4.2.3. Weekly Sequence

4.3. Proposed Forecasting Model

4.3.1. Graph Convolutional Neural Network Module
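The reference list includes the graph convolutional network of Kipf and Welling, but this outline does not state which graph convolution variant the module uses. Assuming the first-order propagation rule from that work, one layer can be sketched with NumPy as below. The function names are hypothetical, and the adjacency matrix is assumed to be non-negative.

```python
import numpy as np


def normalize_adjacency(adj):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 with self-loops added."""
    a_tilde = np.asarray(adj, dtype=float) + np.eye(len(adj))
    degree = a_tilde.sum(axis=1)          # at least 1 because of the self-loop
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    return a_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(adj, features, weights):
    """One graph convolution: ReLU(A_hat @ H @ W).

    adj:      (num_cells, num_cells) adjacency matrix
    features: (num_cells, in_dim) node features, e.g. traffic values per cell
    weights:  (in_dim, out_dim) trainable weight matrix
    """
    a_hat = normalize_adjacency(adj)
    return np.maximum(a_hat @ np.asarray(features) @ np.asarray(weights), 0.0)
```

Each output row mixes a cell's own features with those of its neighbours, weighted by the normalized adjacency, which is how the layer picks up spatial dependencies.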

4.3.2. Long Short-Term Memory Network Module

4.3.3. Output Block

4.3.4. Loss Function
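The training parameter table lists MSE as the loss function. Assuming the standard definition, with $y_i$ the ground truth, $\hat{y}_i$ the forecasted value, and $N$ the number of predicted values (symbols chosen here for illustration), the loss is:

```latex
\mathcal{L}_{\mathrm{MSE}} = \frac{1}{N} \sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2
```

The square root of this quantity is the RMSE used for evaluation in Section 5.2.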

5. Experiment and Evaluation

5.1. Training Parameters

5.2. Performance Evaluation Method

- RMSE reflects the degree of deviation between the ground truth and the forecasted value. The smaller its value, the better the quality of the model and the more accurate the prediction.

- MAE evaluates the degree of deviation between the ground truth and the forecasted value, that is, the actual size of the prediction error. The smaller the value, the better the model quality and the more accurate the prediction.

- R² is the coefficient of determination, which measures how much of the variance in the ground truth the regression model explains. Its value is at most 1 and can be negative when the model fits worse than predicting the mean of the ground truth. The closer the value is to 1, the better the quality of the model and the more accurate the prediction.
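The three metrics above can be written out with their standard definitions. The sketch below uses plain Python lists, and the function names are chosen here for illustration. It also shows why the HA rows in the results tables can have a negative R²: when the squared error of the forecast exceeds the variance of the ground truth around its mean, the score drops below 0.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot  # undefined if y_true is constant


y_true = [1.0, 2.0, 3.0, 4.0]
good = [1.0, 2.0, 3.0, 6.0]   # one large error
bad = [4.0, 3.0, 2.0, 1.0]    # reversed trend, worse than predicting the mean
```

For these inputs, `good` has an RMSE of 1.0 and an MAE of 0.5, so the single large error weighs more heavily in the RMSE, while `bad` has a negative R².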

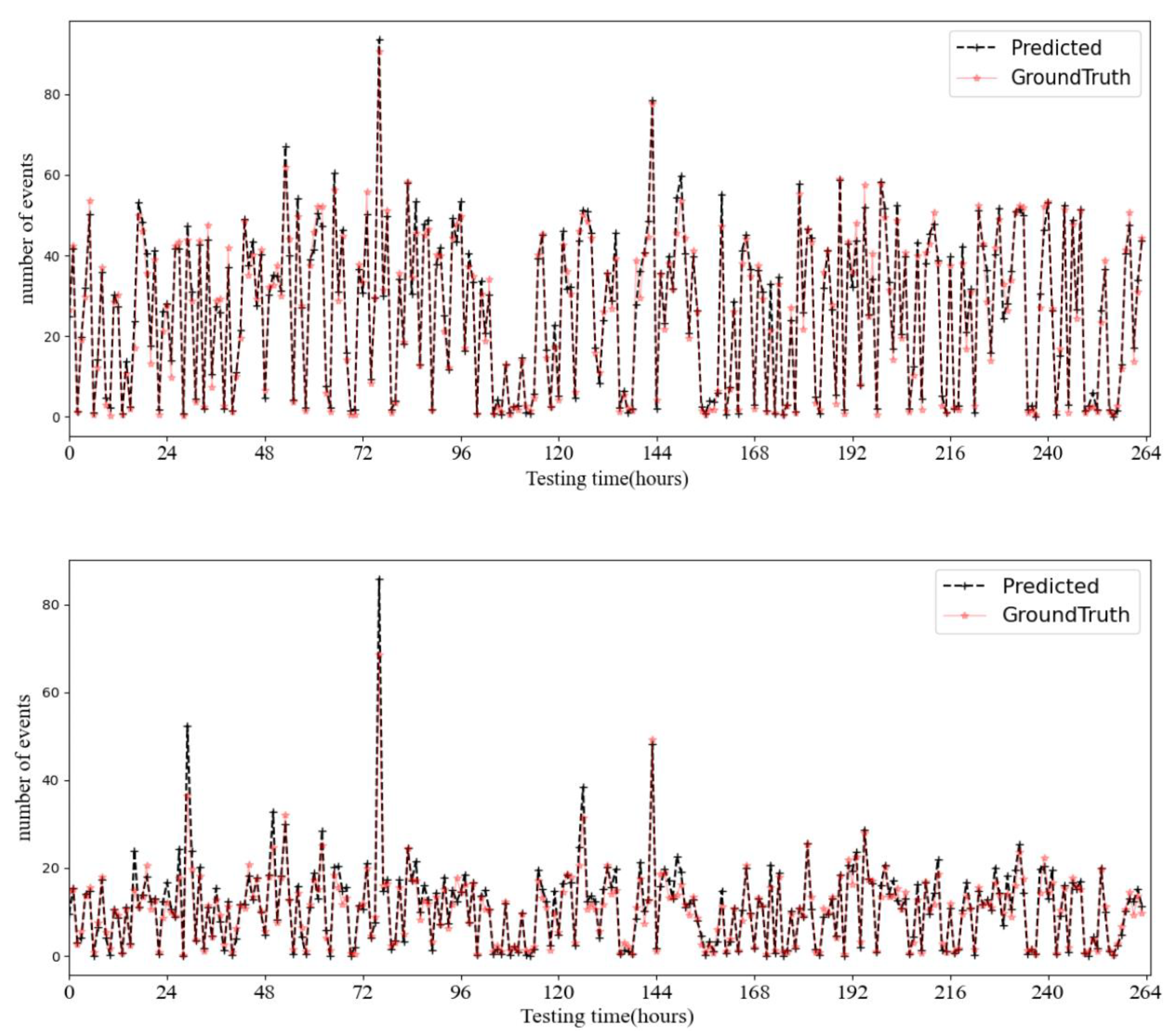

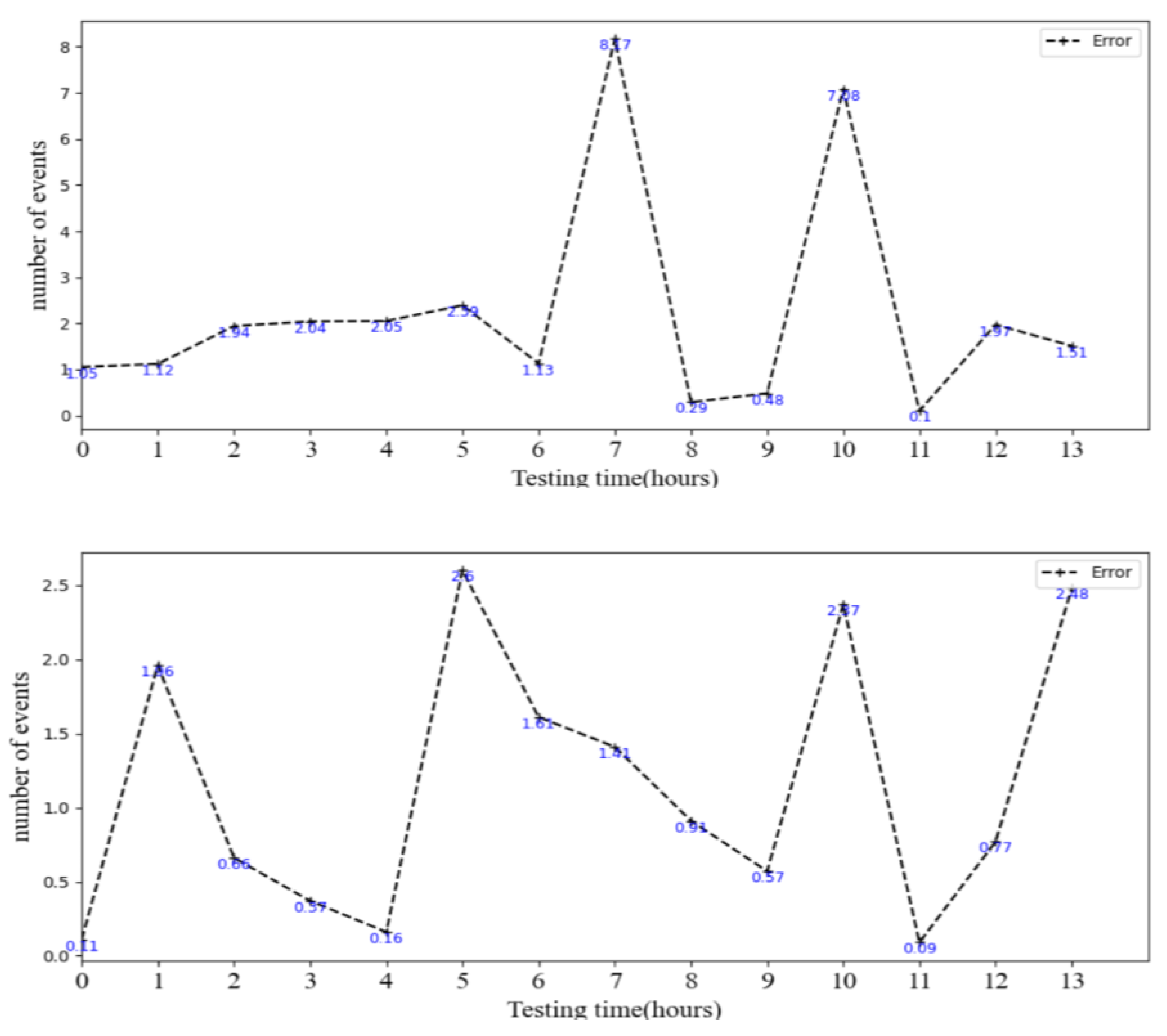

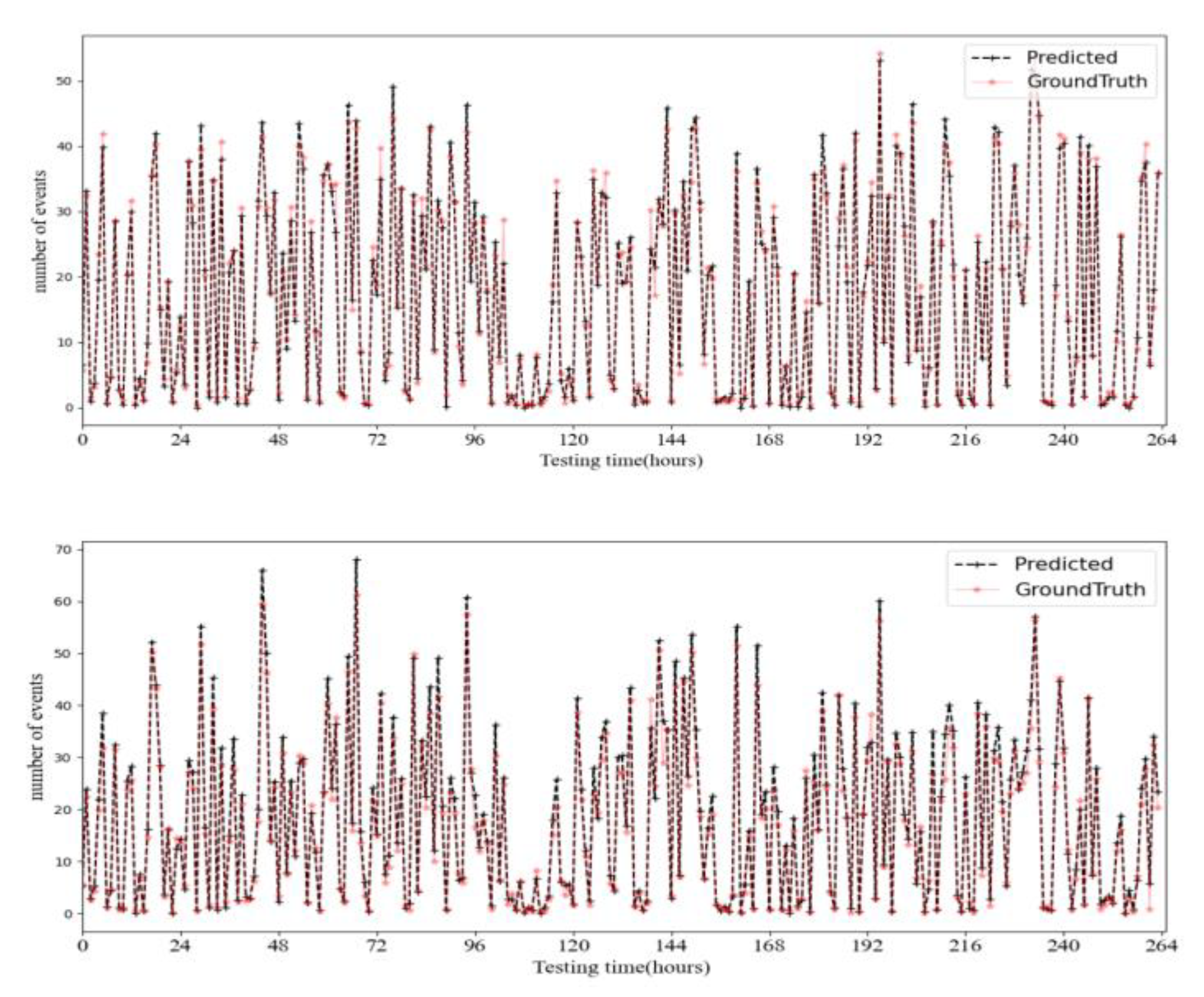

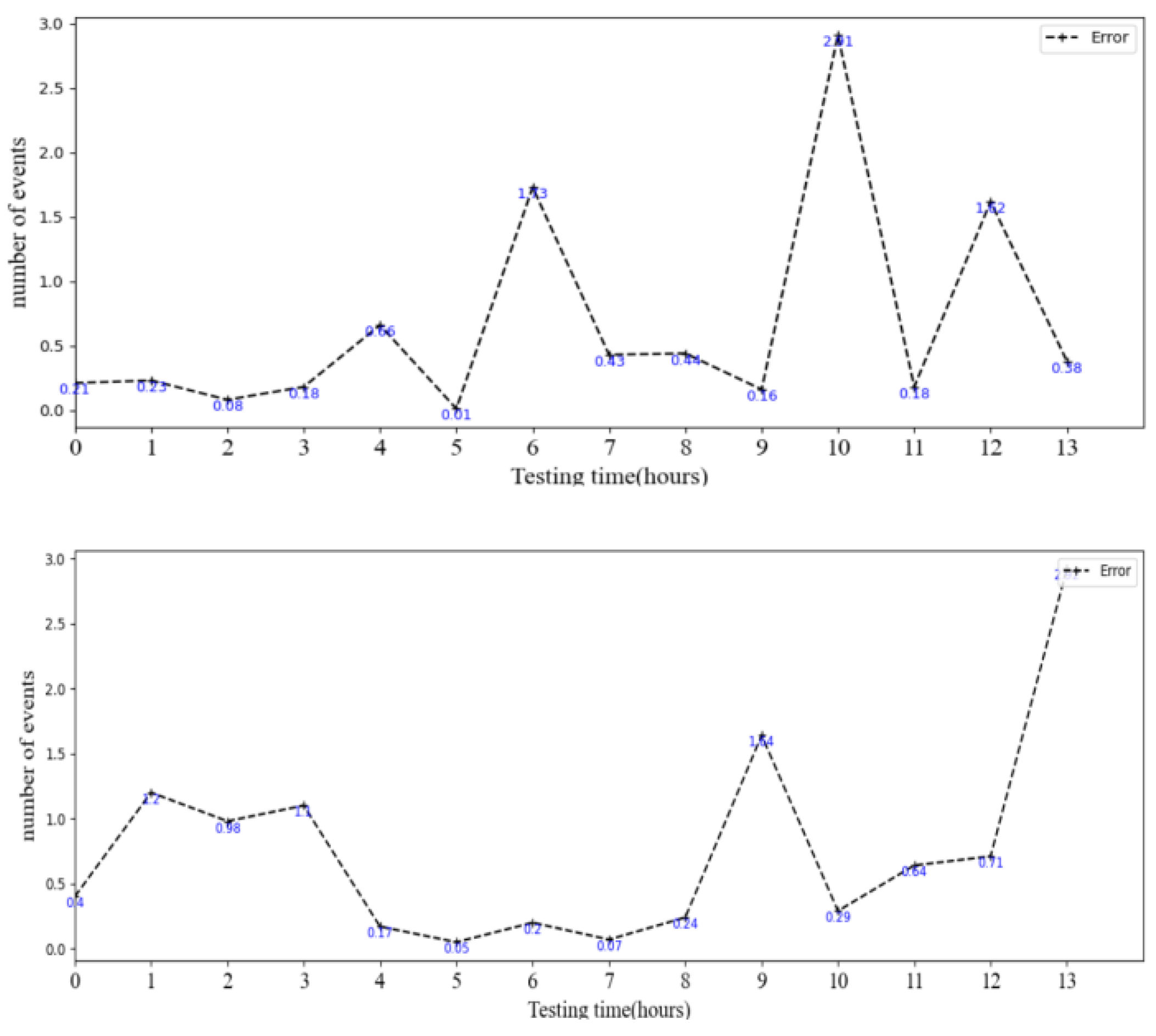

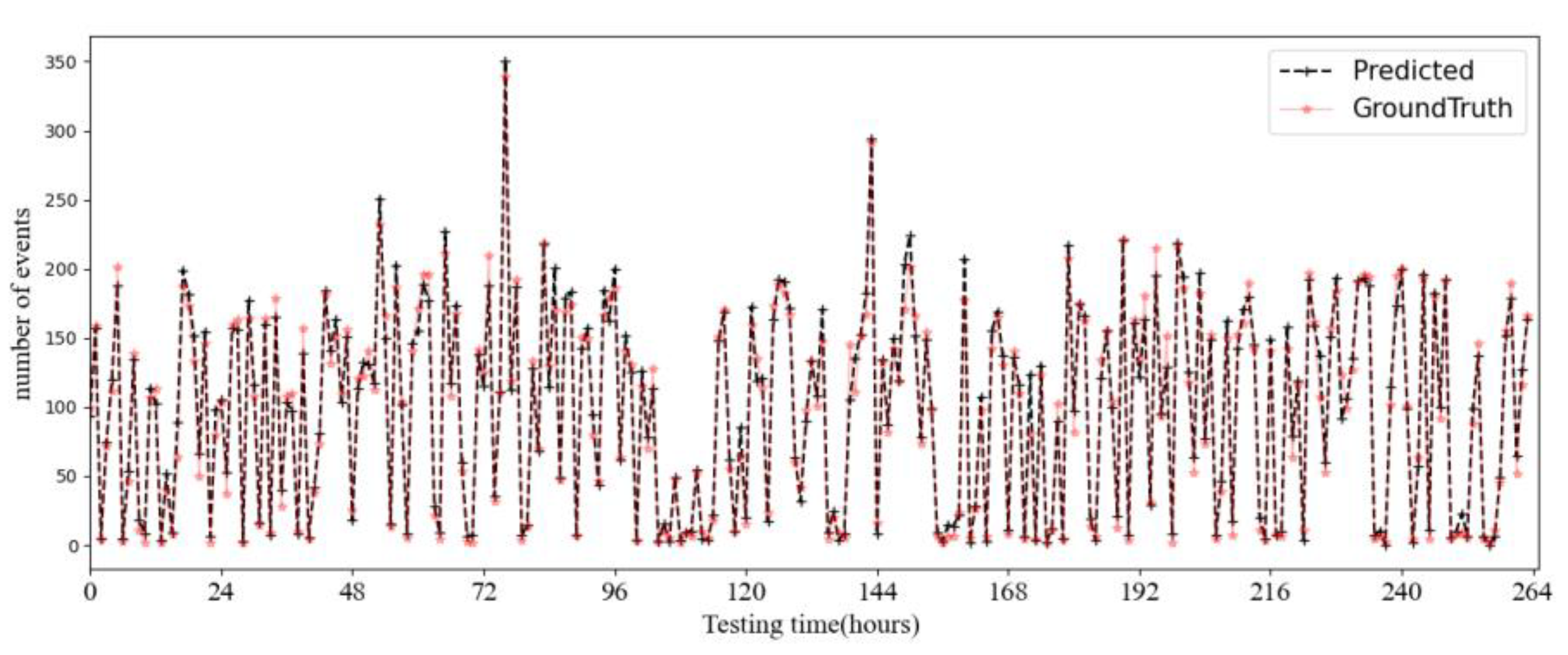

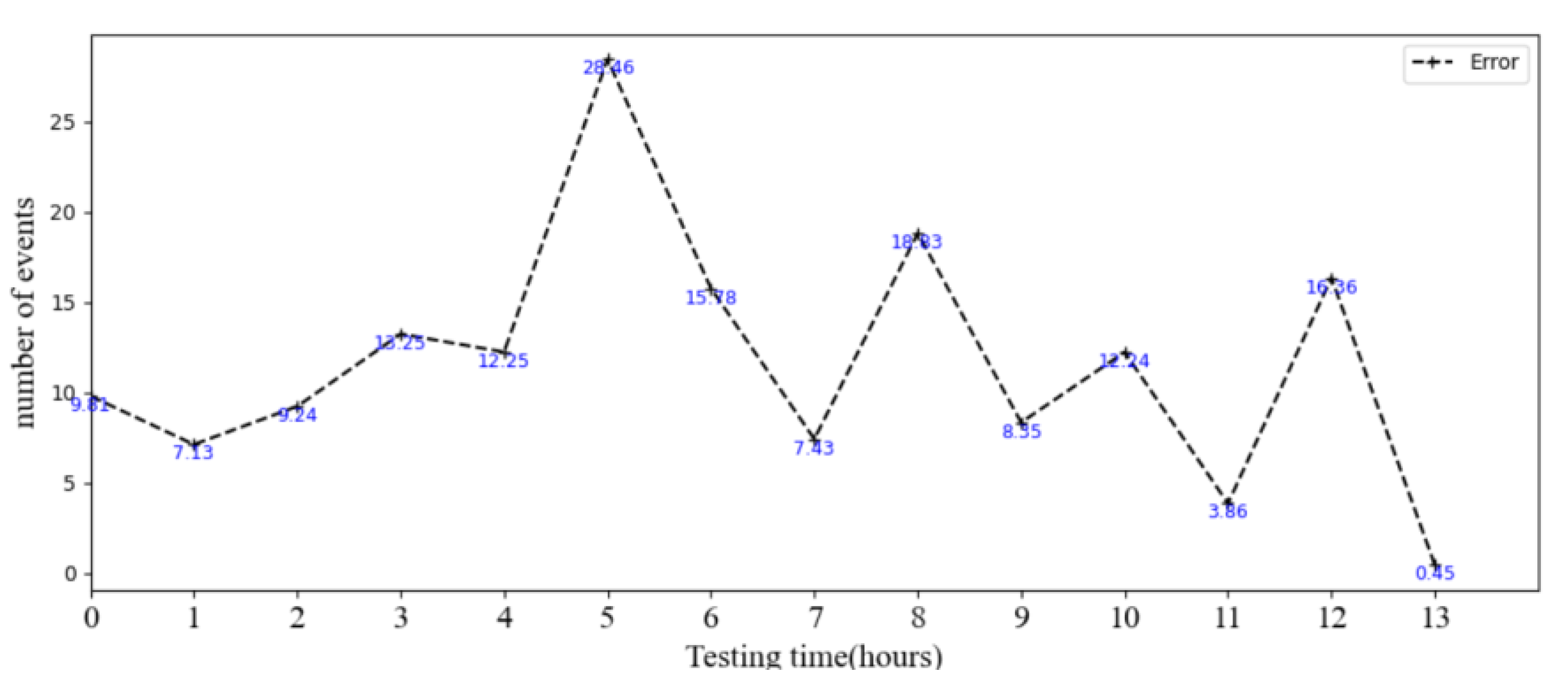

5.3. Results and Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. He, Y.; Yang, Y.; Zhao, B.; Gao, Z.; Rui, L. Network Traffic Prediction Method Based on Multi-Channel Spatial-Temporal Graph Convolutional Networks. In Proceedings of the 2022 IEEE 14th International Conference on Advanced Infocomm Technology (ICAIT), Chongqing, China, 8–11 July 2022.

2. Naboulsi, D.; Fiore, M.; Ribot, S.; Stanica, R. Large-Scale Mobile Traffic Analysis: A Survey. IEEE Commun. Surv. Tutor. 2016, 18, 124–161.

3. Zeng, Q.; Sun, Q.; Chen, G.; Duan, H.; Li, C.; Song, G. Traffic Prediction of Wireless Cellular Networks Based on Deep Transfer Learning and Cross-Domain Data. IEEE Access 2020, 8, 172387–172397.

4. Jiang, W. Cellular Traffic Prediction with Machine Learning: A Survey. Expert Syst. Appl. 2022, 201, 117163.

5. Liu, Q.; Li, J.; Lu, Z. ST-Tran: Spatial-Temporal Transformer for Cellular Traffic Prediction. IEEE Commun. Lett. 2021, 25, 3325–3329.

6. Wang, Z.; Hu, J.; Min, G.; Zhao, Z.; Wang, J. Data-Augmentation-Based Cellular Traffic Prediction in Edge-Computing-Enabled Smart City. IEEE Trans. Ind. Inform. 2021, 17, 4179–4187.

7. Xu, F.; Lin, Y.; Huang, J.; Wu, D.; Shi, H.; Song, J.; Li, Y. Big Data Driven Mobile Traffic Understanding and Forecasting: A Time Series Approach. IEEE Trans. Serv. Comput. 2016, 9, 796–805.

8. Li, R.; Zhao, Z.; Zhou, X.; Palicot, J.; Zhang, H. The prediction analysis of cellular radio access network traffic: From entropy theory to networking practice. IEEE Commun. Mag. 2014, 52, 234–240.

9. Makhoul, J. Linear prediction: A tutorial review. Proc. IEEE 1975, 63, 561–580.

10. Van Der Voort, M.; Dougherty, M.; Watson, S. Combining kohonen maps with arima time series models to forecast traffic flow. Transp. Res. Part C Emerg. Technol. 1996, 4, 307–318.

11. Contreras, J.; Espinola, R.; Nogales, F.J.; Conejo, A.J. ARIMA models to predict next-day electricity prices. IEEE Trans. Power Syst. 2003, 18, 1014–1020.

12. Sirisha, U.M.; Belavagi, M.C.; Attigeri, G. Profit Prediction Using ARIMA, SARIMA and LSTM Models in Time Series Forecasting: A Comparison. IEEE Access 2022, 10, 124715–124727.

13. Wang, W.; Guo, Y. Air Pollution PM2.5 Data Analysis in Los Angeles Long Beach with Seasonal ARIMA Model. In Proceedings of the 2009 International Conference on Energy and Environment Technology, Guilin, China, 16–18 October 2009.

14. Rahman Minar, M.; Naher, J. Recent Advances in Deep Learning: An Overview. arXiv 2018, arXiv:1807.08169.

15. Zhang, L.; Zhang, X. SVM-Based Techniques for Predicting Cross-Functional Team Performance: Using Team Trust as a Predictor. IEEE Trans. Eng. Manag. 2015, 62, 114–121.

16. Awan, F.M.; Minerva, R.; Crespi, N. Using Noise Pollution Data for Traffic Prediction in Smart Cities: Experiments Based on LSTM Recurrent Neural Networks. IEEE Sens. J. 2021, 21, 20722–20729.

17. Fu, R.; Zhang, Z.; Li, L. Using LSTM and GRU neural network methods for traffic flow prediction. In Proceedings of the 2016 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC), Wuhan, China, 11–13 November 2016.

18. Zhang, C.; Zhang, H.; Yuan, D.; Zhang, M. Citywide Cellular Traffic Prediction Based on Densely Connected Convolutional Neural Networks. IEEE Commun. Lett. 2018, 22, 1656–1659.

19. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

20. Daoud, N.; Eltahan, M.; Elhennawi, A. Aerosol Optical Depth Forecast over Global Dust Belt Based on LSTM, CNN-LSTM, CONV-LSTM and FFT Algorithms. In Proceedings of the IEEE EUROCON 2021—19th International Conference on Smart Technologies, Lviv, Ukraine, 6–8 July 2021.

21. Zhao, N.; Wu, A.; Pei, Y.; Liang, Y.-C.; Niyato, D. Spatial-Temporal Aggregation Graph Convolution Network for Efficient Mobile Cellular Traffic Prediction. IEEE Commun. Lett. 2022, 26, 587–591.

22. Zhao, S.; Jiang, X.; Jacobson, G.; Jana, R.; Hsu, W.L.; Rustamov, R.; Talasila, M.; Aftab, S.A.; Chen, Y.; Borcea, C. Cellular Network Traffic Prediction Incorporating Handover: A Graph Convolutional Approach. In Proceedings of the 2020 17th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), Como, Italy, 22–25 June 2020.

23. Guo, K.; Hu, Y.; Qian, Z.; Liu, H.; Zhang, K.; Sun, Y.; Gao, J.; Yin, B. Optimized Graph Convolution Recurrent Neural Network for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2021, 22, 1138–1149.

24. Seo, Y.; Defferrard, M.; Vandergheynst, P.; Bresson, X. Cellular Structured Sequence Modeling with Graph Convolutional Recurrent Networks. In Proceedings of the Neural Information Processing—25th International Conference, (ICONIP), Siem Reap, Cambodia, 13–16 December 2018.

25. Yu, B.; Yin, H.; Zhu, Z. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018.

26. Hammond, D.K.; Vandergheynst, P.; Gribonval, R. Wavelets on graphs via spectral graph theory. Appl. Comput. Harmon. Anal. 2011, 30, 129–150.

27. Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

28. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

29. Zhao, N.; Ye, Z.; Pei, Y.; Liang, Y.-C.; Niyato, D. Spatial-Temporal Attention-Convolution Network for Citywide Cellular Traffic Prediction. IEEE Commun. Lett. 2020, 24, 2532–2536.

30. Barlacchi, G.; De Nadai, M.; Larcher, R.; Casella, A.; Chitic, C.; Torrisi, G.; Antonelli, F.; Vespignani, A.; Pentland, A.; Lepri, B. A multi-source dataset of urban life in the city of Milan and the province of Trentino. Sci. Data 2015, 2, 150055.

31. Wang, J.; Tang, J.; Xu, Z.; Wang, Y.; Xue, G.; Zhang, X.; Yang, D. Spatiotemporal modeling and prediction in cellular networks: A big data enabled deep learning approach. In Proceedings of the IEEE INFOCOM 2017—IEEE Conference on Computer Communications, Atlanta, GA, USA, 1–4 May 2017.

32. Wang, X.; Zhou, Z.; Xiao, F.; Xing, K.; Yang, Z.; Liu, Y.; Peng, C. Spatio-Temporal Analysis and Prediction of Cellular Traffic in Metropolis. IEEE Trans. Mob. Comput. 2019, 18, 2190–2202.

33. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

34. Luong, M.-T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015.

35. Wang, Y.; Zheng, J.; Du, Y.; Huang, C.; Li, P. Traffic-GGNN: Predicting Traffic Flow via Attentional Spatial-Temporal Gated Graph Neural Networks. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18423–18432.

36. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

37. Lin, C.-Y.; Su, H.-T.; Tung, S.-L.; Hsu, W. Multivariate and Propagation Graph Attention Network for Spatial-Temporal Prediction with Outdoor Cellular Traffic. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Queensland, Australia, 1–5 November 2021.

| Parameters | Value |
|---|---|
| Location | Milan, Italy |
| Span of time | 1 November 2013 to 1 January 2014 |
| Time interval | 10 min |
| Type of data | {SMS-in, SMS-out, Call-in, Call-out, Internet} |

| Parameters | Value |
|---|---|
| Observation window | 1056 |
| Forecast window | 264 |
| Epochs | 50 |
| Batch size | 32 |
| Learning rate | 0.001 |
| Optimizer | Adam |
| Loss function | MSE |
| Training set: test set | 8:2 |

SMS-in and SMS-out results. Entries with a slash are one cell/all cells.

| Model | SMS-in RMSE | SMS-in MAE | SMS-in R² | SMS-out RMSE | SMS-out MAE | SMS-out R² |
|---|---|---|---|---|---|---|
| HA | 60.428 | 44.431 | −0.266 | 25.772 | 19.040 | 0.275 |
| LR | 15.323/77.853 | 10.845/48.367 | 0.935/0.492 | 13.85/49.893 | 8.434/28.706 | 0.82/0.219 |
| GCN | 4.888/26.050 | 3.301/14.80 | 0.991/0.975 | 5.397/26.747 | 3.705/13.173 | 0.975/0.908 |
| LSTM | 5.843/37.415 | 4.362/18.498 | 0.989/0.948 | 5.593/32.347 | 3.971/16.170 | 0.985/0.869 |
| STDenseNet [18] | 6.255/59.920 | 3.768/33.839 | 0.930/0.640 | 7.003/41.326 | 5.812/21.514 | 0.813/0.025 |
| STGCN [25] | 5.254/46.297 | 4.297/21.172 | 0.92/0.792 | 6.866/35.659 | 5.811/16.562 | 0.906/0.622 |
| STP-GLN | 2.773/27.359 | 2.038/14.726 | 0.992/0.973 | 2.535/23.5 | 1.56/12.312 | 0.984/0.932 |

Call-in and Call-out results. Entries with a slash are one cell/all cells.

| Model | Call-in RMSE | Call-in MAE | Call-in R² | Call-out RMSE | Call-out MAE | Call-out R² |
|---|---|---|---|---|---|---|
| HA | 41.065 | 32.875 | −0.786 | 50.613 | 40.354 | −0.816 |
| LR | 8.355/32.78 | 5.682/21.403 | 0.94/0.780 | 7.901/40.25 | 5.291/25.147 | 0.953/0.796 |
| GCN | 1.678/17.515 | 1.184/9.058 | 0.991/0.977 | 2.004/16.989 | 1.499/9.353 | 0.990/0.982 |
| LSTM | 2.412/17.854 | 1.784/9.806 | 0.991/0.976 | 2.962/20.258 | 2.076/11.153 | 0.988/0.974 |
| STDenseNet [18] | 3.590/35.870 | 1.999/22.764 | 0.917/0.719 | 3.871/40.831 | 2.212/25.156 | 0.922/0.736 |
| STGCN [25] | 4.188/22.398 | 2.61/11.487 | 0.951/0.901 | 5.427/25.837 | 3.253/12.766 | 0.962/0.908 |
| STP-GLN | 1.802/14.314 | 1.216/7.906 | 0.996/0.985 | 1.802/16.202 | 1.708/9.108 | 0.995/0.984 |

Internet results. Entries with a slash are one cell/all cells.

| Model | RMSE | MAE | R² |
|---|---|---|---|
| HA | 727.021 | 476.142 | −0.126 |
| LR | 169.3/514.304 | 127.405/329.302 | 0.964/0.769 |
| GCN | 113.274/314.835 | 80.15/182.044 | 0.990/0.968 |
| LSTM | 78.02/290.841 | 54.331/173.889 | 0.994/0.973 |
| STDenseNet [18] | 186.298/385.376 | 139.712/276.109 | 0.974/0.857 |
| STGCN [25] | 147.189/267.267 | 108.549/174.334 | 0.974/0.910 |
| STP-GLN | 27.003/256.442 | 18.82/152.24 | 0.995/0.979 |

Results on the CIKM21-MPGAT dataset.

| Model | RMSE | MAE | R² |
|---|---|---|---|
| STGCN [25] | 11.782/15.088 | 4.491/5.571 | 0.522/0.577 |
| STP-GLN | 3.857/5.273 | 1.28/1.662 | 0.967/0.995 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, G.; Guo, Y.; Zeng, Q.; Zhang, Y. A Novel Cellular Network Traffic Prediction Algorithm Based on Graph Convolution Neural Networks and Long Short-Term Memory through Extraction of Spatial-Temporal Characteristics. Processes 2023, 11, 2257. https://doi.org/10.3390/pr11082257
