UnA-Mix: Rethinking Image Mixtures for Unsupervised Person Re-Identification

Abstract

1. Introduction

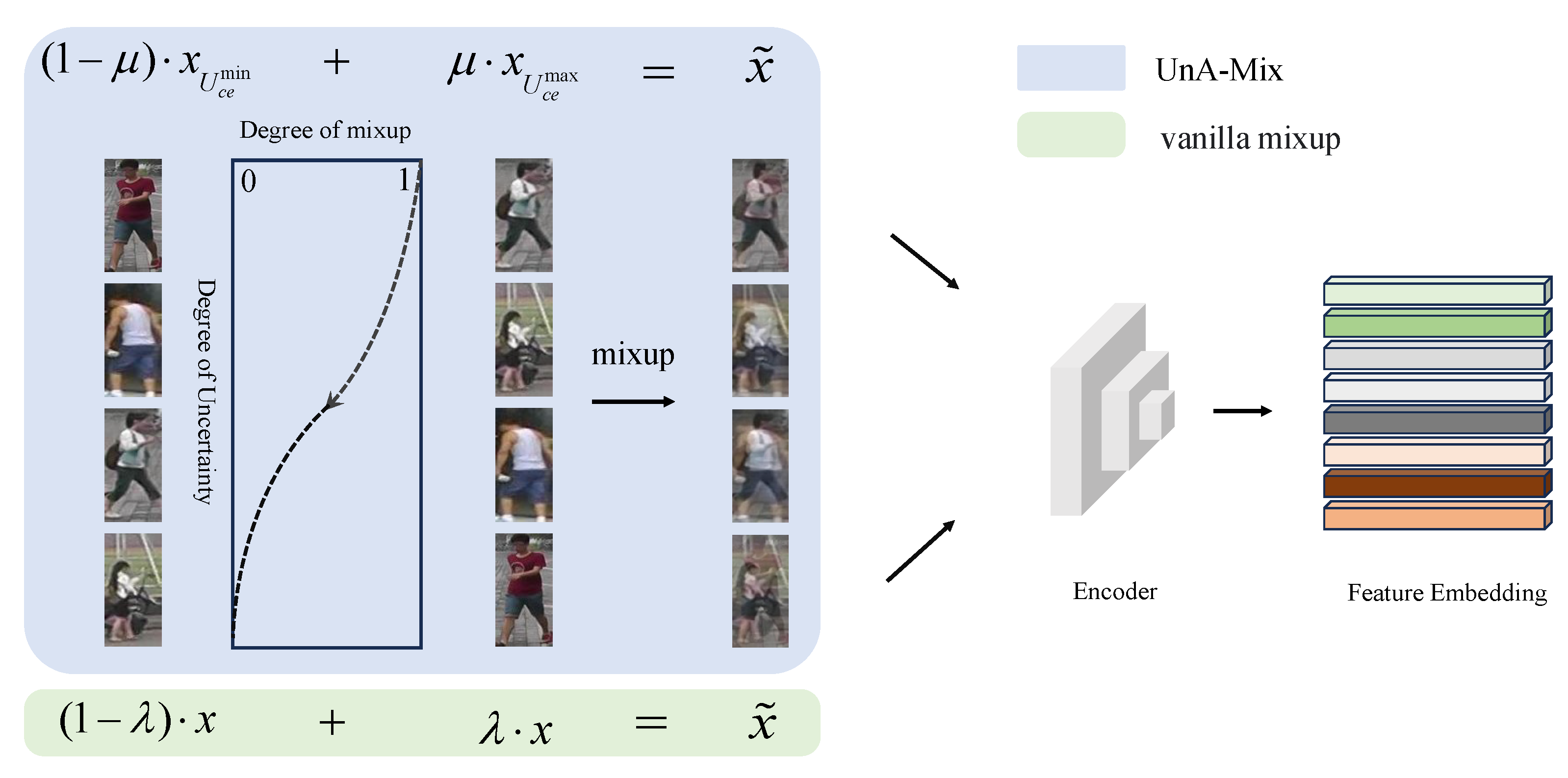

- To our knowledge, this is the first detailed study of how applying the mixup technique at different positions (pixel level and feature level) affects task performance in an unsupervised person Re-ID framework.

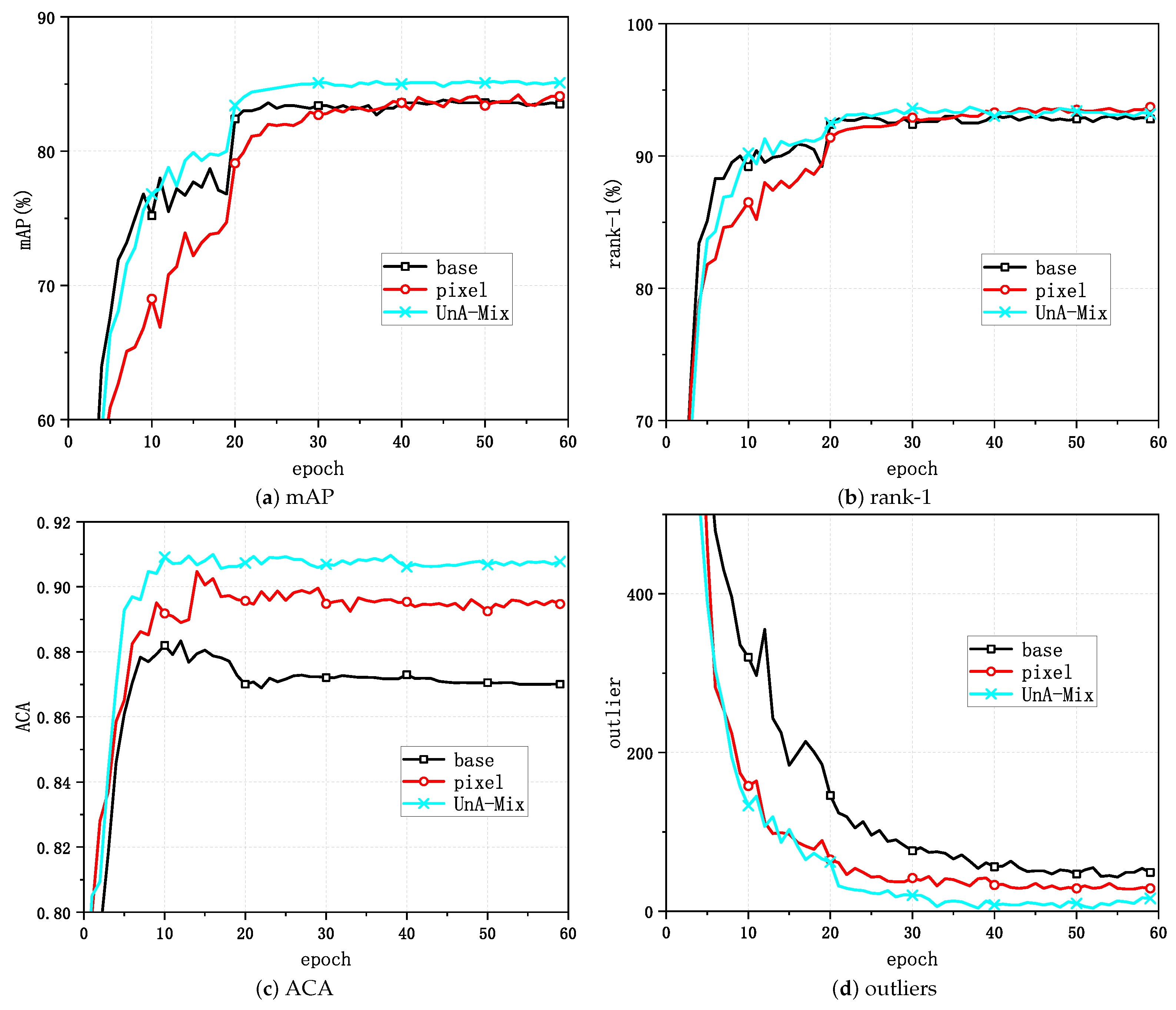

- We quantify the uncertainty of person training samples using entropy and, on this basis, propose the sample uncertainty-aware mixup (UnA-Mix) method to boost performance on the unsupervised person Re-ID task.

- Experiments on three person Re-ID benchmark datasets show that the proposed method outperforms other state-of-the-art methods.
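The entropy measure mentioned in the contributions above can be illustrated with a minimal sketch. It assumes, hypothetically, that each training sample comes with a vector of similarity scores to the cluster centroids, which a softmax turns into a probability distribution. The function names, the temperature value, and the normalization by log K are illustrative choices, not the authors' exact formulation.

```python
import math

def softmax(scores, temperature=0.05):
    """Convert raw similarity scores into a probability distribution."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def normalized_entropy(probs):
    """Shannon entropy divided by log(K), so the result lies in [0, 1]."""
    k = len(probs)
    if k < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0.0)
    return h / math.log(k)

# A sample that is clearly closest to one cluster has low uncertainty;
# a sample equally close to every cluster has maximal uncertainty.
confident = normalized_entropy(softmax([0.9, 0.1, 0.05]))
ambiguous = normalized_entropy(softmax([0.5, 0.5, 0.5]))
```

Under these assumptions, a value near 0 marks a sample whose pseudo-label is likely reliable, and a value near 1 marks a sample that could plausibly belong to any cluster.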

2. Related Work

2.1. Unsupervised Person Re-ID

2.2. Contrastive Learning

2.3. Label Noise Representation Learning

3. Methodology

3.1. Preliminary

3.1.1. Unsupervised Person Re-ID Framework

3.1.2. Paradigms of Mixture

3.2. Mixture Strategy

3.3. Uncertainty-Aware Mixup

4. Experiments

- Market-1501 [39] is a standard person Re-ID dataset that was collected at Tsinghua University. It consists of 32,668 images captured by six cameras. The training set contains 12,936 images of 751 persons, and the test set contains 19,732 images of 750 persons.

- DukeMTMC-ReID [40] is a collection of 36,411 images of 1404 persons captured by eight cameras. The training set contains 16,522 images of 702 persons, and the test set contains 19,889 images of 702 persons.

- MSMT17 [41] is the most challenging of the three datasets; it was collected from 15 cameras at Peking University and includes both indoor and outdoor scenes. It contains a total of 126,441 images of 4101 persons. The training set contains 32,621 images of 1041 persons, and the remaining 93,820 images of 3060 persons serve as the test set.
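The split sizes quoted above can be sanity-checked with a few lines of arithmetic. This is only a consistency check on the numbers in the dataset descriptions; the dictionary layout and the helper name are illustrative. For MSMT17, the identity counts add up with 4101 - 1041 = 3060 test identities.

```python
def split_is_consistent(total, train, test):
    """Return True when the train and test counts add up to the total."""
    return train + test == total

# (total, train, test) image counts as quoted in the dataset descriptions.
image_counts = {
    "Market-1501": (32668, 12936, 19732),
    "DukeMTMC-ReID": (36411, 16522, 19889),
    "MSMT17": (126441, 32621, 93820),
}

# (total, train, test) identity counts, where a total is stated in the text.
identity_counts = {
    "DukeMTMC-ReID": (1404, 702, 702),
    "MSMT17": (4101, 1041, 3060),
}

image_ok = {name: split_is_consistent(*c) for name, c in image_counts.items()}
identity_ok = {name: split_is_consistent(*c) for name, c in identity_counts.items()}
```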

4.1. Implementation Details

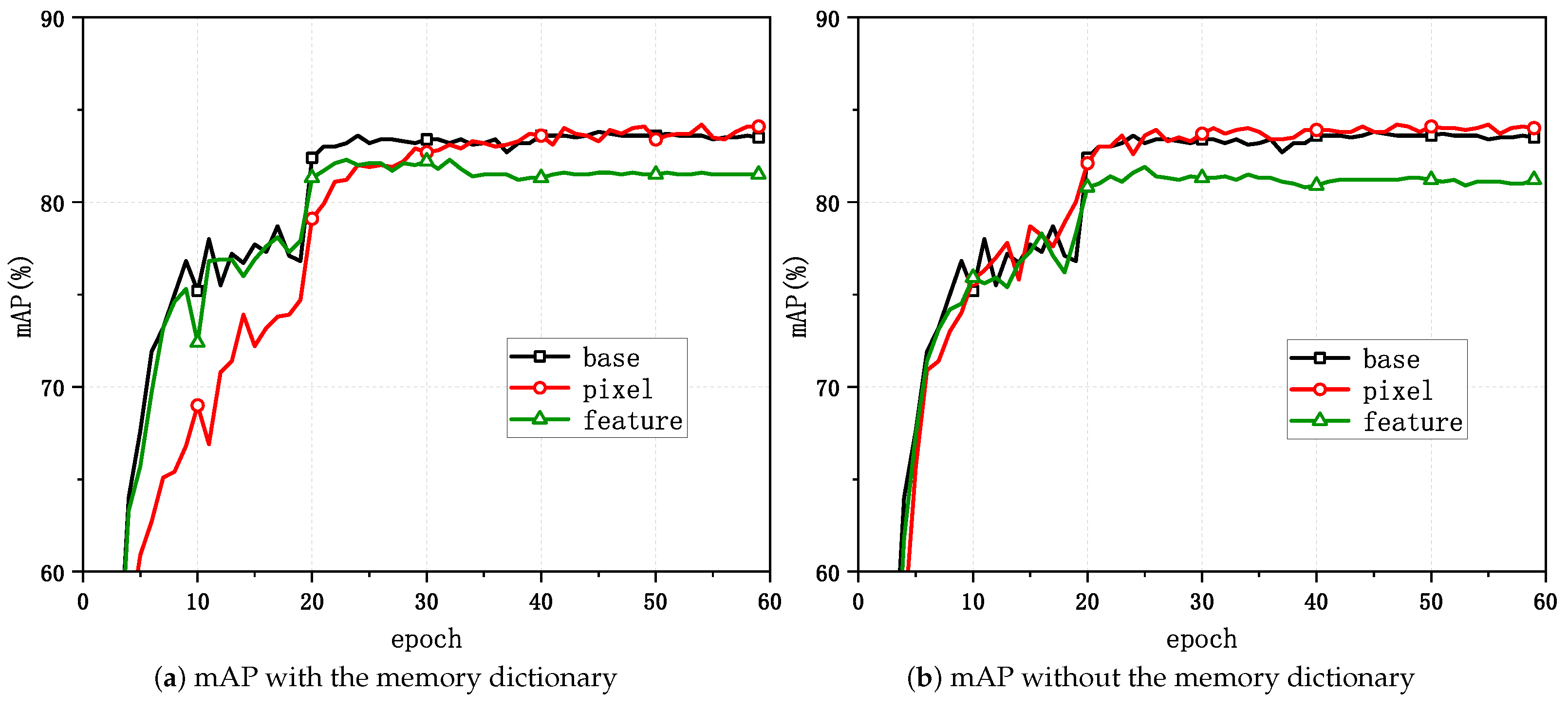

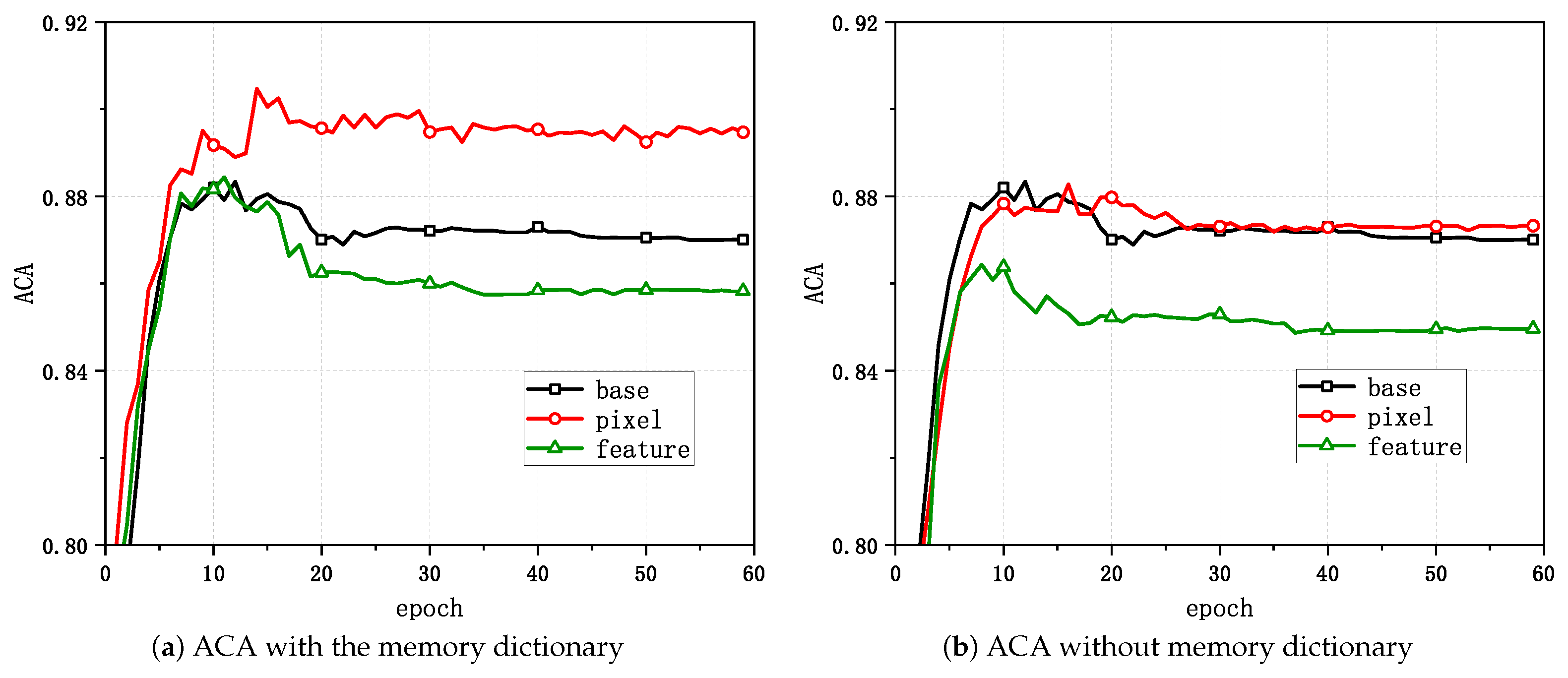

4.2. Comparison of Different Positions

- As Table 2 shows, compared with the baseline, the pixel-level method improved mAP by 0.9% and rank-1 by 1.0% on Market-1501, mAP by 1.0% and rank-1 by 0.2% on DukeMTMC-ReID, and mAP by 4.8% and rank-1 by 4.4% on MSMT17. It is reasonable to speculate that when mixup acts at the pixel level, the generated mixed samples force the network to produce more discriminative feature representations and to learn more accurate and smoother decision boundaries. In contrast, when mixup acts at the feature level, the network never extracts features from mixed samples, which would weaken its capacity to yield discriminative features.

- Within the pixel-level method, the w.MD approach improved on the w.o.MD approach by 0.3% in mAP and 0.7% in rank-1 on Market-1501, by 2.0% and 1.6% on DukeMTMC-ReID, and by 3.3% and 2.4% on MSMT17, as Table 2 illustrates. Within the feature-level method, the w.MD approach improved on the w.o.MD approach by 0.4% in mAP and 0% in rank-1 on Market-1501, by 1.7% and -0.1% on DukeMTMC-ReID, and by 1.7% and 2.8% on MSMT17. In nearly all cases, w.MD improved on w.o.MD in both mAP and rank-1, regardless of whether mixup acted at the feature level or the pixel level. This suggests that mixup alleviates, to a certain extent, the insufficient number of individual persons in the Re-ID datasets.
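The difference between the two mixing positions discussed above can be made concrete with a small sketch. It assumes a toy encoder (a fixed linear map followed by ReLU) standing in for the backbone, and a fixed mixing coefficient `lam` instead of one sampled from a Beta distribution as in standard mixup. All names here are hypothetical and the code is not the authors' implementation.

```python
def mix(a, b, lam):
    """Convex combination lam * a + (1 - lam) * b of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return [lam * x + (1.0 - lam) * y for x, y in zip(a, b)]

def encode(pixels, weights):
    """Toy stand-in for a backbone: linear map followed by ReLU."""
    return [max(0.0, sum(w * p for w, p in zip(row, pixels))) for row in weights]

def pixel_level_mixup(img_a, img_b, lam, weights):
    """Mix the raw inputs first, then extract features from the mixed image."""
    return encode(mix(img_a, img_b, lam), weights)

def feature_level_mixup(img_a, img_b, lam, weights):
    """Extract features from each image first, then mix the features."""
    return mix(encode(img_a, weights), encode(img_b, weights), lam)

weights = [[1.0, -1.0], [-1.0, 1.0]]
img_a, img_b = [1.0, 0.0], [0.0, 1.0]

pixel_feat = pixel_level_mixup(img_a, img_b, 0.5, weights)      # [0.0, 0.0]
feature_feat = feature_level_mixup(img_a, img_b, 0.5, weights)  # [0.5, 0.5]
```

Because the encoder is nonlinear, the two orders of operation give different features. Only in the pixel-level variant does the encoder actually process a mixed input, which is the property the discussion above credits for the stronger results.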

4.3. Comparison with State-of-the-Art Methods

4.4. Ablation Study

4.4.1. Convergence

4.4.2. Visualization

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Fan, H.; Zheng, L.; Yan, C.; Yang, Y. Unsupervised person re-identification: Clustering and fine-tuning. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2018, 14, 1–18.
2. Ma, X.; Zhu, X.; Gong, S.; Xie, X.; Hu, J.; Lam, K.M.; Zhong, Y. Person re-identification by unsupervised video matching. Pattern Recognit. 2017, 65, 197–210.
3. Lu, X.; Li, X.; Sheng, W.; Ge, S.S. Long-term person re-identification based on appearance and gait feature fusion under covariate changes. Processes 2022, 10, 770.
4. Wu, L.; Liu, D.; Zhang, W.; Chen, D.; Ge, Z.; Boussaid, F.; Bennamoun, M.; Shen, J. Pseudo-pair based self-similarity learning for unsupervised person re-identification. IEEE Trans. Image Process. 2022, 31, 4803–4816.
5. Song, L.; Wang, C.; Zhang, L.; Du, B.; Zhang, Q.; Huang, C.; Wang, X. Unsupervised domain adaptive re-identification: Theory and practice. Pattern Recognit. 2020, 102, 107173.
6. Song, C.; Huang, Y.; Ouyang, W.; Wang, L. Mask-guided contrastive attention model for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1179–1188.
7. Younis, H.A.; Ruhaiyem, N.I.R.; Badr, A.A.; Abdul-Hassan, A.K.; Alfadli, I.M.; Binjumah, W.M.; Altuwaijri, E.A.; Nasser, M. Multimodal age and gender estimation for adaptive human-robot interaction: A systematic literature review. Processes 2023, 11, 1488.
8. Pang, B.; Zhai, D.; Jiang, J.; Liu, X. Fully unsupervised person re-identification via selective contrastive learning. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2022, 18, 1–15.
9. Yin, J.; Zhang, S.; Xie, J.; Ma, Z.; Guo, J. Unsupervised person re-identification via simultaneous clustering and mask prediction. Pattern Recognit. 2022, 126, 108568.
10. Li, Y.; Yao, H.; Xu, C. Intra-domain consistency enhancement for unsupervised person re-identification. IEEE Trans. Multimed. 2021, 24, 415–425.
11. Pang, Z.; Guo, J.; Sun, W.; Xiao, Y.; Yu, M. Cross-domain person re-identification by hybrid supervised and unsupervised learning. Appl. Intell. 2022, 52, 2987–3001.
12. Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.
13. Thulasidasan, S.; Chennupati, G.; Bilmes, J.A.; Bhattacharya, T.; Michalak, S. On mixup training: Improved calibration and predictive uncertainty for deep neural networks. Adv. Neural Inf. Process. Syst. 2019, 32, 13911–13922.
14. Wu, D.; Wang, C.; Wu, Y.; Wang, Q.C.; Huang, D.S. Attention deep model with multi-scale deep supervision for person re-identification. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 5, 70–78.
15. Sun, L.; Xia, C.; Yin, W.; Liang, T.; Yu, P.S.; He, L. Mixup-transformer: Dynamic data augmentation for NLP tasks. arXiv 2020, arXiv:2010.02394.
16. Lee, M.F.R.; Chen, Y.C.; Tsai, C.Y. Deep learning-based human body posture recognition and tracking for unmanned aerial vehicles. Processes 2022, 10, 2295.
17. Ge, L.; Dan, D.; Koo, K.Y.; Chen, Y. An improved system for long-term monitoring of full-bridge traffic load distribution on long-span bridges. Structures 2023, 54, 1076–1089.
18. Niu, Z.; Jiang, B.; Xu, H.; Zhang, Y. Balance Loss for multiAttention-based YOLOv4. In Proceedings of the 2023 5th International Conference on Intelligent Control, Measurement and Signal Processing (ICMSP), Chengdu, China, 19–21 May 2023; pp. 946–954.
19. Lee, K.; Zhu, Y.; Sohn, K.; Li, C.L.; Shin, J.; Lee, H. i-mix: A domain-agnostic strategy for contrastive representation learning. arXiv 2020, arXiv:2010.08887.
20. Shen, Z.; Liu, Z.; Liu, Z.; Savvides, M.; Darrell, T.; Xing, E. Un-mix: Rethinking image mixtures for unsupervised visual representation learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2022; Volume 36, pp. 2216–2224.
21. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.
22. Mannor, S.; Peleg, D.; Rubinstein, R. The cross entropy method for classification. In Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, 7–11 August 2005; pp. 561–568.
23. Li, M.; Li, C.G.; Guo, J. Cluster-guided asymmetric contrastive learning for unsupervised person re-identification. IEEE Trans. Image Process. 2022, 31, 3606–3617.
24. Lin, X.; Ren, P.; Yeh, C.H.; Yao, L.; Song, A.; Chang, X. Unsupervised person re-identification: A systematic survey of challenges and solutions. arXiv 2021, arXiv:2109.06057.
25. Si, T.; He, F.; Zhang, Z.; Duan, Y. Hybrid contrastive learning for unsupervised person re-identification. IEEE Trans. Multimed. 2022, 25, 4323–4334.
26. Ge, Y.; Chen, D.; Li, H. Mutual mean-teaching: Pseudo label refinery for unsupervised domain adaptation on person re-identification. arXiv 2020, arXiv:2001.01526.
27. Yang, F.; Li, K.; Zhong, Z.; Luo, Z.; Sun, X.; Cheng, H.; Guo, X.; Huang, F.; Ji, R.; Li, S. Asymmetric co-teaching for unsupervised cross-domain person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12597–12604.
28. Zhu, X.; Li, Y.; Sun, J.; Chen, H.; Zhu, J. Learning with noisy labels method for unsupervised domain adaptive person re-identification. Neurocomputing 2021, 452, 78–88.
29. Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.; Gheshlaghi Azar, M.; et al. Bootstrap your own latent: A new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21271–21284.
30. Chen, X.; He, K. Exploring simple siamese representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15750–15758.
31. Wang, X.; Qi, G.J. Contrastive learning with stronger augmentations. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 5549–5560.
32. Kalantidis, Y.; Sariyildiz, M.B.; Pion, N.; Weinzaepfel, P.; Larlus, D. Hard negative mixing for contrastive learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21798–21809.
33. Han, B.; Yao, Q.; Yu, X.; Niu, G.; Xu, M.; Hu, W.; Tsang, I.; Sugiyama, M. Co-teaching: Robust training of deep neural networks with extremely noisy labels. Adv. Neural Inf. Process. Syst. 2018, 31.
34. Lee, K.H.; He, X.; Zhang, L.; Yang, L. Cleannet: Transfer learning for scalable image classifier training with label noise. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5447–5456.
35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
36. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25.
37. Wu, Z.; Xiong, Y.; Yu, S.X.; Lin, D. Unsupervised feature learning via non-parametric instance discrimination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3733–3742.
38. De Boer, P.T.; Kroese, D.P.; Mannor, S.; Rubinstein, R.Y. A tutorial on the cross-entropy method. Ann. Oper. Res. 2005, 134, 19–67.
39. Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Washington, DC, USA, 7–13 December 2015; pp. 1116–1124.
40. Zheng, Z.; Zheng, L.; Yang, Y. Unlabeled samples generated by GAN improve the person re-identification baseline in vitro. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2017; pp. 3754–3762.
41. Wei, L.; Zhang, S.; Gao, W.; Tian, Q. Person transfer GAN to bridge domain gap for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 79–88.
42. Wang, D.; Zhang, S. Unsupervised person re-identification via multi-label classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10981–10990.
43. Li, J.; Zhang, S. Joint visual and temporal consistency for unsupervised domain adaptive person re-identification. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXIV 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 483–499.
44. Ge, Y.; Zhu, F.; Chen, D.; Zhao, R. Self-paced contrastive learning with hybrid memory for domain adaptive object re-id. Adv. Neural Inf. Process. Syst. 2020, 33, 11309–11321.
45. Chen, G.; Lu, Y.; Lu, J.; Zhou, J. Deep credible metric learning for unsupervised domain adaptation person re-identification. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part VIII 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 643–659.
46. Chen, H.; Lagadec, B.; Bremond, F. Enhancing diversity in teacher-student networks via asymmetric branches for unsupervised person re-identification. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual Event, 5–9 January 2021; pp. 1–10.
47. Lin, Y.; Dong, X.; Zheng, L.; Yan, Y.; Yang, Y. A bottom-up clustering approach to unsupervised person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8738–8745.
48. Lin, Y.; Xie, L.; Wu, Y.; Yan, C.; Tian, Q. Unsupervised person re-identification via softened similarity learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3390–3399.
49. Xuan, S.; Zhang, S. Intra-inter camera similarity for unsupervised person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11926–11935.
50. Cho, Y.; Kim, W.J.; Hong, S.; Yoon, S.E. Part-based pseudo label refinement for unsupervised person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7308–7318.
51. Dai, Z.; Wang, G.; Yuan, W.; Zhu, S.; Tan, P. Cluster contrast for unsupervised person re-identification. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 1142–1160.
52. Roy, P.; Seshadri, S.; Sudarshan, S.; Bhobe, S. Efficient and extensible algorithms for multi query optimization. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 16–18 May 2000; pp. 249–260.
53. Khan, K.; Rehman, S.U.; Aziz, K.; Fong, S.; Sarasvady, S. DBSCAN: Past, present and future. In Proceedings of the Fifth International Conference on the Applications of Digital Information and Web Technologies (ICADIWT 2014), Chennai, India, 17–19 February 2014; pp. 232–238.

| Dataset | Total Images | Cameras | Train Identities | Train Images | Test Identities | Query Images |
|---|---|---|---|---|---|---|
| Market-1501 | 32,668 | 6 | 751 | 12,936 | 750 | 3368 |
| DukeMTMC-ReID | 36,411 | 8 | 702 | 16,522 | 702 | 2228 |
| MSMT17 | 126,441 | 15 | 1041 | 32,621 | 3060 | 11,659 |

| Dataset | Baseline w.MD mAP | Baseline w.MD rank-1 | Baseline w.o.MD mAP | Baseline w.o.MD rank-1 | Pixel Level w.MD mAP | Pixel Level w.MD rank-1 | Pixel Level w.o.MD mAP | Pixel Level w.o.MD rank-1 | Feature Level w.MD mAP | Feature Level w.MD rank-1 | Feature Level w.o.MD mAP | Feature Level w.o.MD rank-1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Market-1501 | 83.3 | 92.6 | - | - | 84.2 | 93.6 | 83.9 | 92.9 | 82.3 | 92.3 | 81.9 | 92.0 |
| DukeMTMC-ReID | 72.6 | 84.9 | - | - | 73.6 | 85.1 | 71.6 | 83.5 | 72.7 | 83.7 | 71.0 | 83.8 |
| MSMT17 | 36.7 | 64.5 | - | - | 41.5 | 68.9 | 38.2 | 66.5 | 29.2 | 56.5 | 27.5 | 53.7 |
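As a quick arithmetic check, the gains of the pixel-level w.MD variant over the baseline quoted in Section 4.2 can be recomputed from the Table 2 values. The dictionary and function names below are illustrative only.

```python
# (mAP, rank-1) pairs copied from the Table 2 rows.
baseline = {
    "Market-1501": (83.3, 92.6),
    "DukeMTMC-ReID": (72.6, 84.9),
    "MSMT17": (36.7, 64.5),
}
pixel_w_md = {
    "Market-1501": (84.2, 93.6),
    "DukeMTMC-ReID": (73.6, 85.1),
    "MSMT17": (41.5, 68.9),
}

def gains(method, reference):
    """Per-dataset (mAP, rank-1) differences, rounded to one decimal place."""
    return {
        name: tuple(round(m - r, 1) for m, r in zip(method[name], reference[name]))
        for name in method
    }

pixel_gain = gains(pixel_w_md, baseline)
```

The differences come out as 0.9 and 1.0 for Market-1501, 1.0 and 0.2 for DukeMTMC-ReID, and 4.8 and 4.4 for MSMT17, matching the figures reported in the text.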

| Setting | Method | Market-1501 mAP | Market-1501 Rank-1 | DukeMTMC-ReID mAP | DukeMTMC-ReID Rank-1 | MSMT17 mAP | MSMT17 Rank-1 |
|---|---|---|---|---|---|---|---|
| UDA | MMCL | 60.4 | 84.4 | 51.4 | 72.4 | 16.2 | 43.6 |
| UDA | JVTC | 61.1 | 83.8 | 56.2 | 75.0 | 20.3 | 45.4 |
| UDA | SpCL | 76.7 | 90.3 | 68.8 | 82.9 | 26.8 | 53.7 |
| UDA | DCML | 72.6 | 87.9 | 63.3 | 79.1 | - | - |
| UDA | MMT | 75.6 | 89.3 | 65.1 | 78.9 | 24.0 | 50.1 |
| UDA | ABMT | 78.3 | 92.5 | 69.1 | 82.0 | 26.5 | 54.3 |
| USL | BUC | 29.6 | 61.9 | 22.1 | 40.4 | - | - |
| USL | SSL | 37.8 | 71.9 | 28.6 | 52.5 | - | - |
| USL | MMCL | 45.5 | 80.3 | 40.2 | 65.2 | 11.2 | 35.4 |
| USL | SpCL | 73.1 | 88.1 | 65.3 | 81.2 | 19.1 | 42.3 |
| USL | IICS | 72.9 | 89.5 | 68.2 | 81.4 | 26.9 | 56.4 |
| USL | PPLR | 81.5 | 92.8 | - | - | 31.4 | 61.1 |
| USL | Baseline | 83.3 | 92.6 | 72.6 | 84.9 | 36.7 | 64.5 |
| USL | UnA-Mix | 84.8 | 94.1 | 74.0 | 85.5 | 41.9 | 69.3 |
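For readers unfamiliar with the two metrics reported in these tables, the following minimal sketch shows how rank-1 accuracy and mAP are commonly computed from ranked retrieval results. It assumes each query has already been reduced to a list of booleans marking which ranked gallery items share its identity. Benchmark protocols typically also filter out gallery images taken by the same camera as the query, which is omitted here, and the function names are illustrative.

```python
def average_precision(ranked_matches):
    """AP for one query; ranked_matches[i] is True if the i-th ranked
    gallery item has the same identity as the query."""
    hits = 0
    precision_sum = 0.0
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / rank  # precision at this recall point
    return precision_sum / hits if hits else 0.0

def rank1_and_map(all_ranked_matches):
    """Return (rank-1 accuracy, mean average precision) over all queries."""
    n = len(all_ranked_matches)
    rank1 = sum(1 for m in all_ranked_matches if m and m[0]) / n
    mean_ap = sum(average_precision(m) for m in all_ranked_matches) / n
    return rank1, mean_ap

# Two toy queries: the first has a correct top match, the second does not.
queries = [[True, False, True], [False, True, False]]
rank1, mean_ap = rank1_and_map(queries)
```

Rank-1 only looks at the top retrieved item, whereas mAP rewards placing all correct matches early in the ranking, which is why the two metrics can move by different amounts for the same method.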

| Dataset | Pixel Level w.MD mAP | Pixel Level w.MD Rank-1 | Pixel Level w.o.MD mAP | Pixel Level w.o.MD Rank-1 | Feature Level w.MD mAP | Feature Level w.MD Rank-1 | Feature Level w.o.MD mAP | Feature Level w.o.MD Rank-1 |
|---|---|---|---|---|---|---|---|---|
| Market-1501 | 84.8 | 94.1 | 84.1 | 93.0 | 82.8 | 92.6 | 82.1 | 92.4 |
| DukeMTMC-ReID | 74.0 | 85.5 | 72.0 | 83.7 | 73.1 | 83.9 | 71.5 | 83.9 |
| MSMT17 | 41.9 | 69.3 | 38.9 | 66.6 | 29.2 | 56.2 | 27.8 | 53.9 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, J.; Sun, H.; Liu, W.; Guo, A.; Zhang, J. UnA-Mix: Rethinking Image Mixtures for Unsupervised Person Re-Identification. Processes 2024, 12, 168. https://doi.org/10.3390/pr12010168
