Measurement Method of Bar Unmanned Warehouse Area Based on Binocular Vision

Abstract

1. Introduction

2. Literature Review

3. 3D Dimensional Measurement of Stack Based on Binocular Vision

3.1. Binocular Camera Joint Calibration

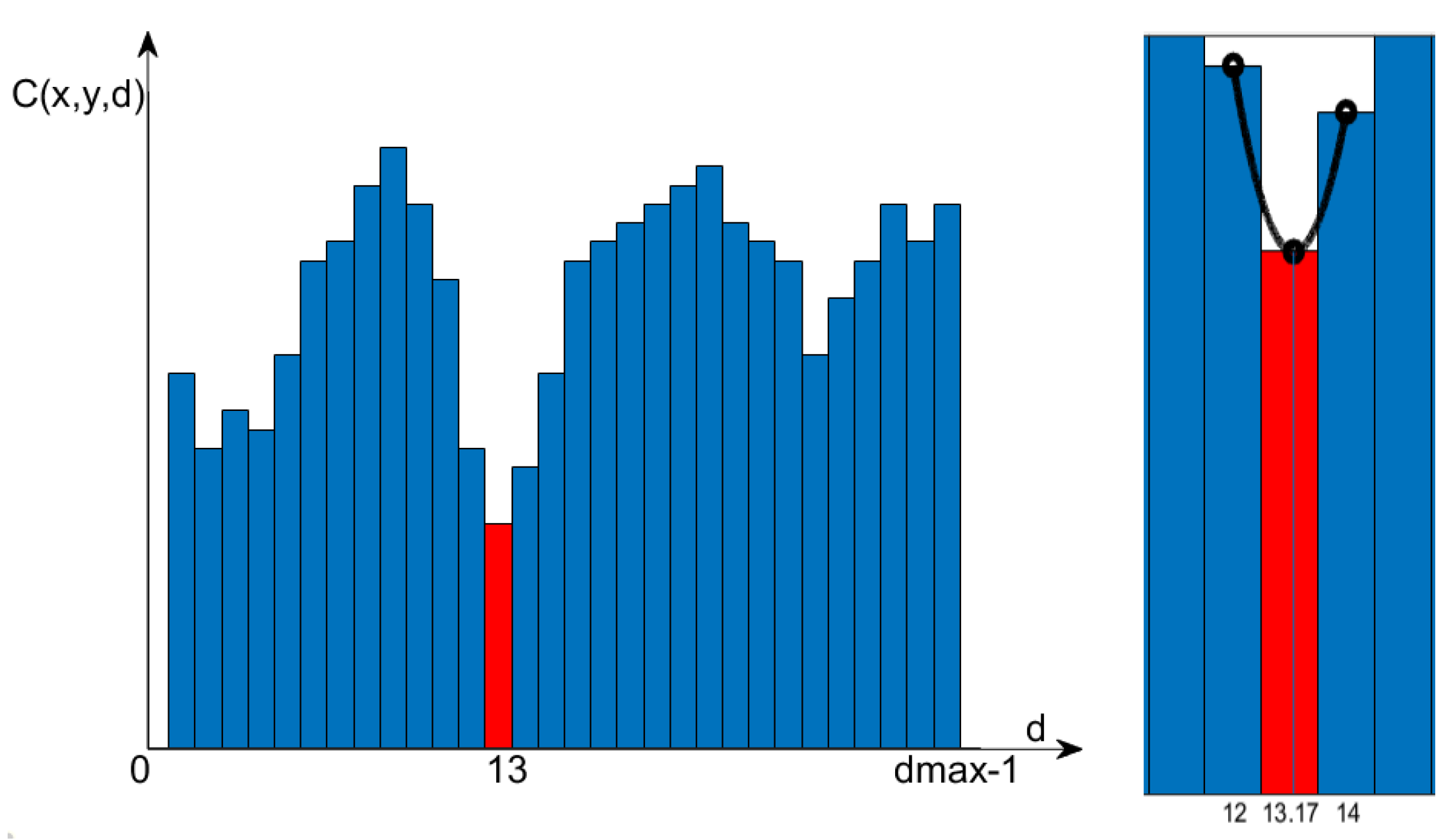

3.2. Stereo Matching Algorithm Based on Sub-Pixel Interpolation
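As an illustration of what sub-pixel interpolation can look like in stereo matching, the sketch below fits a parabola through the matching cost at the best integer disparity and its two neighbors, then converts the refined disparity to depth with the standard rectified-stereo relation Z = f·B/d. This is a common generic scheme and only an assumption here; the exact interpolation used by the authors is not given in this outline. The function names, the cost values, and the focal length and baseline are all hypothetical.

```python
import numpy as np


def subpixel_disparity(costs):
    """Refine the integer argmin of a 1-D cost curve with a parabola fit.

    costs[d] is the matching cost at integer disparity d (lower is better).
    Hypothetical helper; not taken from the paper.
    """
    costs = np.asarray(costs, dtype=float)
    d = int(np.argmin(costs))
    # No neighbor on one side: keep the integer estimate.
    if d == 0 or d == len(costs) - 1:
        return float(d)
    c_left, c_best, c_right = costs[d - 1], costs[d], costs[d + 1]
    curvature = c_left - 2.0 * c_best + c_right
    # Flat or degenerate cost curve: keep the integer estimate.
    if curvature <= 0.0:
        return float(d)
    # Vertex of the parabola through the three samples.
    return d + 0.5 * (c_left - c_right) / curvature


def depth_from_disparity(disparity_px, focal_px, baseline_mm):
    """Depth in mm for a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_mm / disparity_px


# Synthetic cost curve with its true minimum at disparity 4.3 (assumed data).
example_costs = [(d - 4.3) ** 2 for d in range(9)]
refined = subpixel_disparity(example_costs)
depth_mm = depth_from_disparity(refined, focal_px=1000.0, baseline_mm=100.0)
```

Because depth is inversely proportional to disparity, a fraction of a pixel of disparity error matters more at long range, which is why sub-pixel refinement is usually applied before converting to metric size.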

4. Vision-Based Multi-Bit Pose Measurement Technology for Bar Warehouse Area

4.1. Bar Warehouse Area Feature Point Detection

4.2. Feature Point Matching Algorithm Based on Motion Trend Constraint

1. System calibration.

2. Establish an IMU measurement model.

3. Establish an IMU kinematics model.

4. Eliminate mismatched feature points using the inertial model.
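The steps above can be sketched in a simplified form. The example below is a minimal illustration under several assumptions that do not come from this section: the IMU and camera frames are taken as aligned (the calibration step would normally supply the extrinsic rotation), the gyroscope measurement model is the true angular rate plus a constant bias, the kinematics model is a per-sample rotation integration, and inter-frame motion is treated as pure rotation so that matched points can be predicted with the homography K·Rᵀ·K⁻¹. All function names, the pixel threshold, and the numeric values are hypothetical.

```python
import numpy as np


def integrate_gyro(omega_samples, dt, gyro_bias):
    """Integrate bias-corrected gyro rates (rad/s) into a rotation matrix.

    Returns R_prev_curr, which maps coordinates in the current body frame
    to the previous body frame. Hypothetical helper.
    """
    R = np.eye(3)
    for omega in omega_samples:
        # Measurement model (assumed): measured rate = true rate + bias.
        w = (np.asarray(omega, dtype=float) - gyro_bias) * dt
        theta = np.linalg.norm(w)
        if theta < 1e-12:
            continue
        k = w / theta
        K_hat = np.array([[0.0, -k[2], k[1]],
                          [k[2], 0.0, -k[0]],
                          [-k[1], k[0], 0.0]])
        # Rodrigues formula for the incremental rotation.
        dR = np.eye(3) + np.sin(theta) * K_hat + (1.0 - np.cos(theta)) * K_hat @ K_hat
        R = R @ dR
    return R


def filter_matches_by_rotation(pts_prev, pts_curr, K, R_prev_curr, max_px=3.0):
    """Keep matches whose motion agrees with the IMU-predicted rotation.

    pts_prev, pts_curr: (N, 2) pixel coordinates of matched feature points.
    Returns a boolean mask of matches to keep.
    """
    H = K @ R_prev_curr.T @ np.linalg.inv(K)
    homog = np.hstack([pts_prev, np.ones((len(pts_prev), 1))])
    pred = (H @ homog.T).T
    pred = pred[:, :2] / pred[:, 2:3]
    residual = np.linalg.norm(pred - pts_curr, axis=1)
    return residual <= max_px


# Synthetic check: 0.01 rad rotation about the camera y-axis (assumed data).
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
R = integrate_gyro([np.array([0.0, 1.0, 0.0])] * 10, dt=0.001, gyro_bias=np.zeros(3))
pts_prev = np.array([[320.0, 240.0], [400.0, 200.0], [100.0, 300.0]])
rays = np.linalg.inv(K) @ np.hstack([pts_prev, np.ones((3, 1))]).T
proj = (K @ R.T @ rays).T
pts_curr = proj[:, :2] / proj[:, 2:3]
pts_curr[2] += np.array([25.0, -10.0])  # inject one mismatched pair
keep = filter_matches_by_rotation(pts_prev, pts_curr, K, R)
```

The pure-rotation prediction ignores translation, so in practice the threshold would need to allow for parallax on nearby surfaces, or the prediction would need depth from the stereo pair.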

4.3. Bar Warehouse Multi-Position Point Cloud Stitching Algorithm

5. Field Test and Application

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Measurement Range (mm) | Target Size (mm) | SGBM (mm) | SGBM Error (%) | CSCA (mm) | CSCA Error (%) | Proposed Algorithm (mm) | Proposed Algorithm Error (%) |
|---|---|---|---|---|---|---|---|
| 1800 | 90 | 89.413 | 0.65 | 91.324 | 1.47 | 91.703 | 1.89 |
| 2500 | 90 | 88.161 | 2.04 | 91.815 | 2.02 | 91.133 | 1.26 |
| 3000 | 90 | 91.858 | 2.06 | 89.978 | 4.01 | 93.608 | 1.34 |
| 3600 | 90 | 93.330 | 3.70 | 88.679 | 1.47 | 89.596 | 0.45 |
| 4500 | 90 | 95.212 | 5.79 | 88.420 | 1.76 | 88.912 | 1.21 |
| 5000 | 90 | 92.472 | 2.75 | 95.360 | 5.96 | 91.747 | 1.94 |
| 5600 | 90 | 92.228 | 2.48 | 87.991 | 2.23 | 88.991 | 1.12 |
| 8500 | 90 | 108.265 | 20.29 | 95.995 | 6.66 | 92.543 | 2.83 |
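
The error percentages in the table above are consistent with a relative error taken against the 90 mm target, that is, |measured − target| / target × 100. The short sketch below recomputes a few of the proposed-algorithm entries under that assumption; the function name is hypothetical and the formula is inferred from the tabulated values rather than stated in this section.

```python
def relative_error_percent(measured_mm, target_mm):
    """Absolute deviation from the target size, as a percentage of the target."""
    return abs(measured_mm - target_mm) / target_mm * 100.0


TARGET_MM = 90.0
# Measurement range (mm) -> measured size (mm), proposed algorithm column.
proposed = {1800: 91.703, 3600: 89.596, 8500: 92.543}

for range_mm, measured_mm in proposed.items():
    err = relative_error_percent(measured_mm, TARGET_MM)
    print(f"{range_mm} mm: {err:.2f} %")
# prints:
# 1800 mm: 1.89 %
# 3600 mm: 0.45 %
# 8500 mm: 2.83 %
```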

| Feature Point Type | Side Surface of Bar Stack: Number of Measurements | Side Surface of Bar Stack: Time (ms) | Top Surface of Bar Stack: Number of Measurements | Top Surface of Bar Stack: Time (ms) |
|---|---|---|---|---|
| SIFT | 18972 | 7840 | 107 | 1980 |
| SURF | 8240 | 6070 | 36 | 2160 |
| ORB | 500 | 1580 | 41 | 1580 |

| Feature Point Type | Side Surface of Bar Stack: Number of Initial Matching Point Pairs | Side Surface of Bar Stack: Number of Matching Point Pairs after Filtering | Side Surface of Bar Stack: Time (ms) | Top Surface of Bar Stack: Number of Initial Matching Point Pairs | Top Surface of Bar Stack: Number of Matching Point Pairs after Filtering | Top Surface of Bar Stack: Time (ms) |
|---|---|---|---|---|---|---|
| SIFT | 2823 | 837 | 12050 | 107 | 4 | 1930 |
| SURF | 534 | 208 | 6630 | 69 | 17 | 2170 |
| ORB | 59 | 28 | 2620 | 41 | 20 | 1750 |

| Technique | MAE (mm) | MRE | Maximum Absolute Error (mm) | Maximum Relative Error | Bar Dimension | Method | Unit Distance (mm) | Frequency (Hz) |
|---|---|---|---|---|---|---|---|---|
| Laser scanning | 0 | 0 | 0 | 0 | 2D | Single Point Scan | 10 | 2 |
| Binocular vision | 9.82 | 0.26% | 17.73 | 0.47% | 3D | Face Scan | 9.56 | 30 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yan, S.; Xu, D.; Yan, H.; Wang, Z.; He, H.; Wang, X.; Yang, Q. Measurement Method of Bar Unmanned Warehouse Area Based on Binocular Vision. Processes 2024, 12, 466. https://doi.org/10.3390/pr12030466