A TCN-BiGRU Density Logging Curve Reconstruction Method Based on Multi-Head Self-Attention Mechanism

Abstract

1. Introduction

2. Methodology

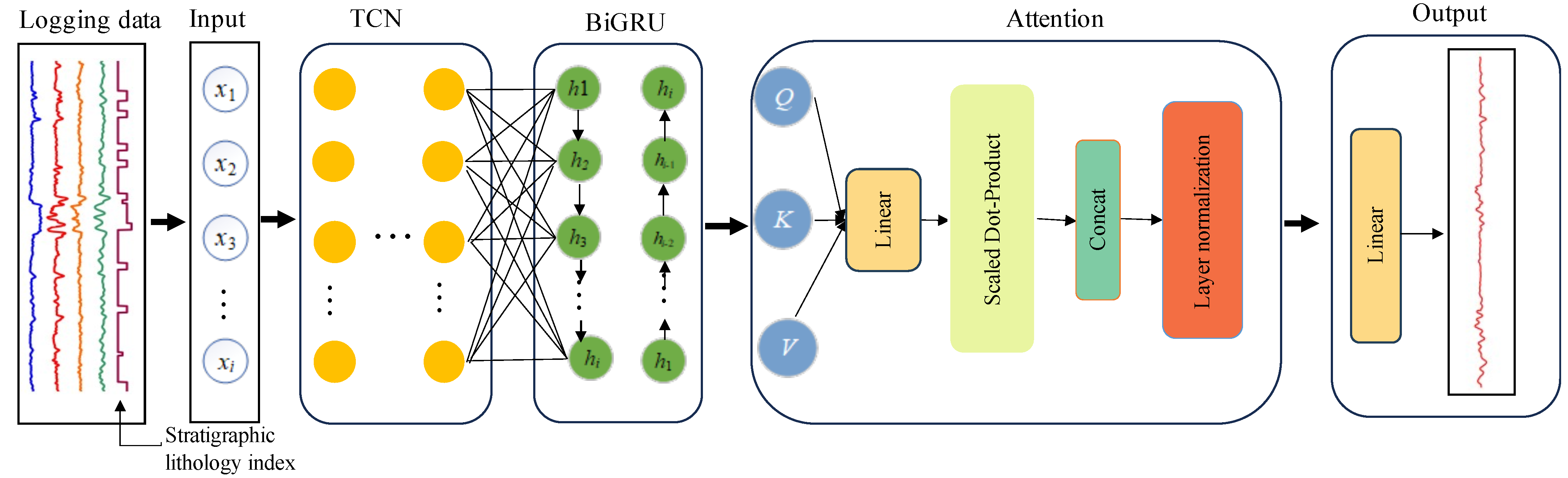

2.1. Temporal Convolutional Networks

2.1.1. Dilated Causal Convolution

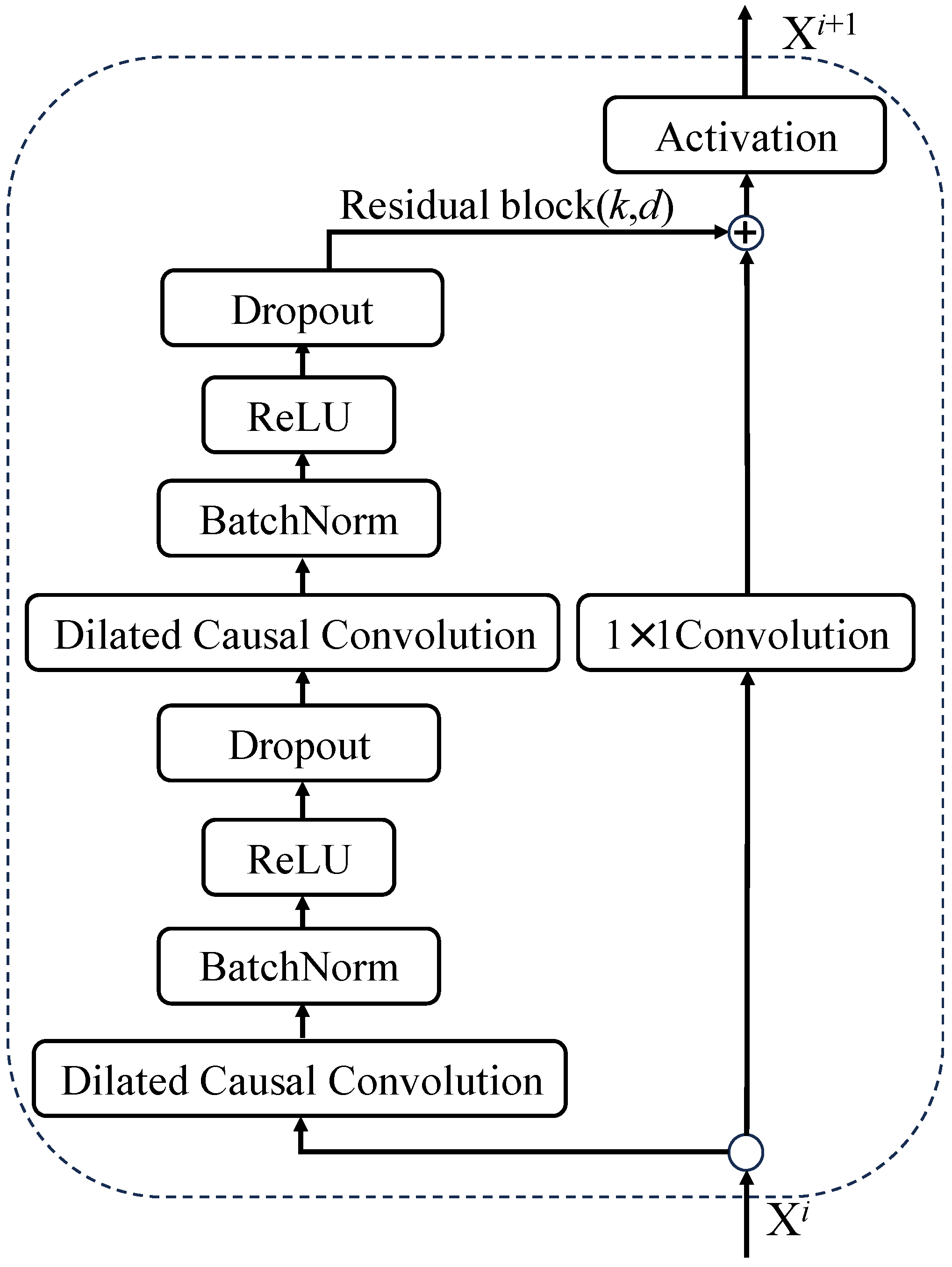
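
As a minimal sketch of the operation this subsection names, the pure-Python function below computes a one-dimensional dilated causal convolution: each output sample depends only on the current and earlier input samples, spaced `dilation` steps apart. The function names, the zero left-padding, and the one-convolution-per-level receptive-field formula are illustrative assumptions, not the authors' implementation.

```python
def dilated_causal_conv1d(x, weights, dilation=1):
    """Compute y[t] = sum_i weights[i] * x[t - i * dilation].

    Samples before the start of the sequence are treated as zero
    (an assumed left zero-padding), so y has the same length as x
    and y[t] never depends on x[s] for s > t.
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            s = t - i * dilation  # index of the past sample for this tap
            if s >= 0:
                acc += w * x[s]
        y.append(acc)
    return y


def receptive_field(kernel_size, dilations):
    """Receptive field of stacked layers, assuming one convolution per dilation."""
    return 1 + (kernel_size - 1) * sum(dilations)


# Hypothetical input sequence, for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = dilated_causal_conv1d(x, weights=[1.0, 1.0], dilation=2)
# Each output is x[t] + x[t - 2], with missing past samples taken as zero.
```

With kernel size 2 and dilations 1, 2, and 4, `receptive_field(2, [1, 2, 4])` gives 8 samples, which is why doubling the dilation per layer lets a shallow stack cover a long depth interval.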

2.1.2. Residual Module

2.2. Bidirectional Gated Recurrent Unit

2.3. Multi-Head Self-Attention Mechanism

2.4. TBMSA

2.5. Model Evaluation Metrics
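
The result tables in this article report MSE, RMSE, and MAE. The sketch below uses the standard definitions of these three metrics, which are assumed (not confirmed by the text available here) to match the ones the authors used. The sample density values are hypothetical.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error: average of squared residuals."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the MSE."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean absolute error: average of absolute residuals."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical measured and reconstructed density values, for illustration only.
measured = [2.40, 2.45, 2.50, 2.55]
reconstructed = [2.42, 2.43, 2.53, 2.51]

scores = {
    "MSE": mse(measured, reconstructed),
    "RMSE": rmse(measured, reconstructed),
    "MAE": mae(measured, reconstructed),
}
```

Because RMSE is the square root of MSE, the two reported columns can be cross-checked: for TBMSA, the square root of the reported MSE of 0.0018 is about 0.0424, which agrees with the reported RMSE of 0.0421 up to rounding of the MSE.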

3. Case Study

3.1. Correlation Analysis

3.2. Physical Constraint Analysis

3.3. Comparative Experimental Analysis

3.3.1. Model Parameter Settings

3.3.2. Experimental Results Analysis

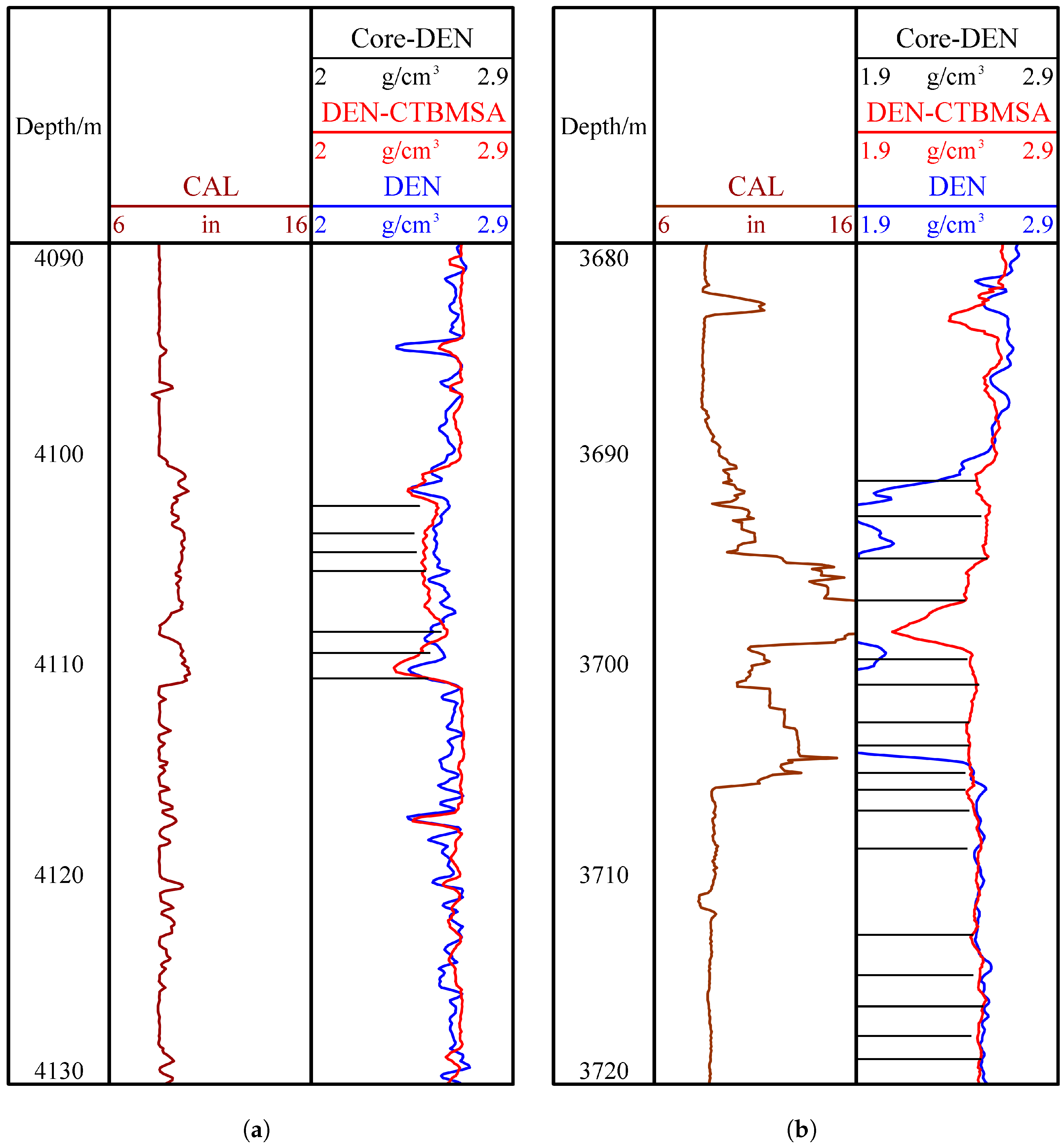

3.4. Core Calibration Experiment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Lithology | Minerals | Tags |
|---|---|---|
| fine sandstone | quartz, dark minerals | 1 |
| siltstone | quartz | 2 |
| muddy siltstone | quartz, clay | 3 |
| mudstone | clay | 4 |
| siltstone | clay, quartz | 5 |

| Model | MSE | RMSE | MAE |
|---|---|---|---|
| CTBMS | 0.0038 | 0.0613 | 0.0371 |
| UTBMS | 0.0055 | 0.0739 | 0.0489 |

| Model | MAE | MSE | RMSE |
|---|---|---|---|
| MCF | 0.0695 | 0.0074 | 0.0863 |
| GRU | 0.0491 | 0.0039 | 0.0625 |
| BiGRU | 0.0454 | 0.0036 | 0.0599 |
| BiGRU-SA | 0.0403 | 0.0033 | 0.0578 |
| BiGRU-MSA | 0.0385 | 0.0030 | 0.0547 |
| TCN | 0.0399 | 0.0029 | 0.0542 |
| TCN-BiGRU | 0.0332 | 0.0023 | 0.0481 |
| TBMSA | 0.0303 | 0.0018 | 0.0421 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liao, W.; Gao, C.; Fang, J.; Zhao, B.; Zhang, Z. A TCN-BiGRU Density Logging Curve Reconstruction Method Based on Multi-Head Self-Attention Mechanism. Processes 2024, 12, 1589. https://doi.org/10.3390/pr12081589