1. Introduction

Nonlinear unconstrained optimization is an active research area, since many real-life problems can be modeled as continuous nonlinear optimization problems [1]. To deal with this kind of optimization problem, various nature-inspired, population-based search mechanisms have been developed [2]. A few of these are Differential Evolution (DE) [3,4], Evolution Strategies (ES) [2,5], Particle Swarm Optimization (PSO) [6,7,8,9], Ant Colony Optimization (ACO) [10,11,12,13], Bacterial Foraging Optimization (BFO) [14,15], Genetic Algorithm (GA) [16,17,18], Genetic Programming (GP) [2,19,20,21], Cuckoo Search (CS) [22,23], Estimation of Distribution Algorithm (EDA) [24,25,26,27,28] and Grey Wolf Optimization (GWO) [29,30].

DE does not need specific information about the problem at hand [31], which is why it has been applied to a wide variety of optimization problems over the past two decades [30,32,33,34]. DE has an advantage over PSO, GA, ES and ACO in that it depends on only a few control parameters, and its implementation is easy and user friendly [2]. Because of this simplicity, DE has been widely applied to practical optimization problems [35,36,37,38,39,40,41,42], and for these reasons we selected DE to perform the global search in the proposed hybrid design. However, its convergence to known optima is not guaranteed [2,31,43], and stagnation is another weakness of DE identified in various studies [31].

Traditional local search (LS) methods, such as the Nelder–Mead algorithm, steepest descent and the Davidon–Fletcher–Powell (DFP) method [44], may be hybridized with DE to improve its search capability. Algorithms that incorporate LS into a global search to enhance solution quality are called Memetic Algorithms (MAs) [31,45]. Some recent MAs can be found in [1,31]. Very recently, Broyden–Fletcher–Goldfarb–Shanno LS was merged with an adaptive DE variant, JADE [46], producing the MA Hybridization of Adaptive Differential Evolution with an Expensive Local Search Method [47]. In most established designs, LS is applied to the overall best solutions, whereas in our design it is applied to the elements migrated to the archive. In addition, the population is adaptively decreased.

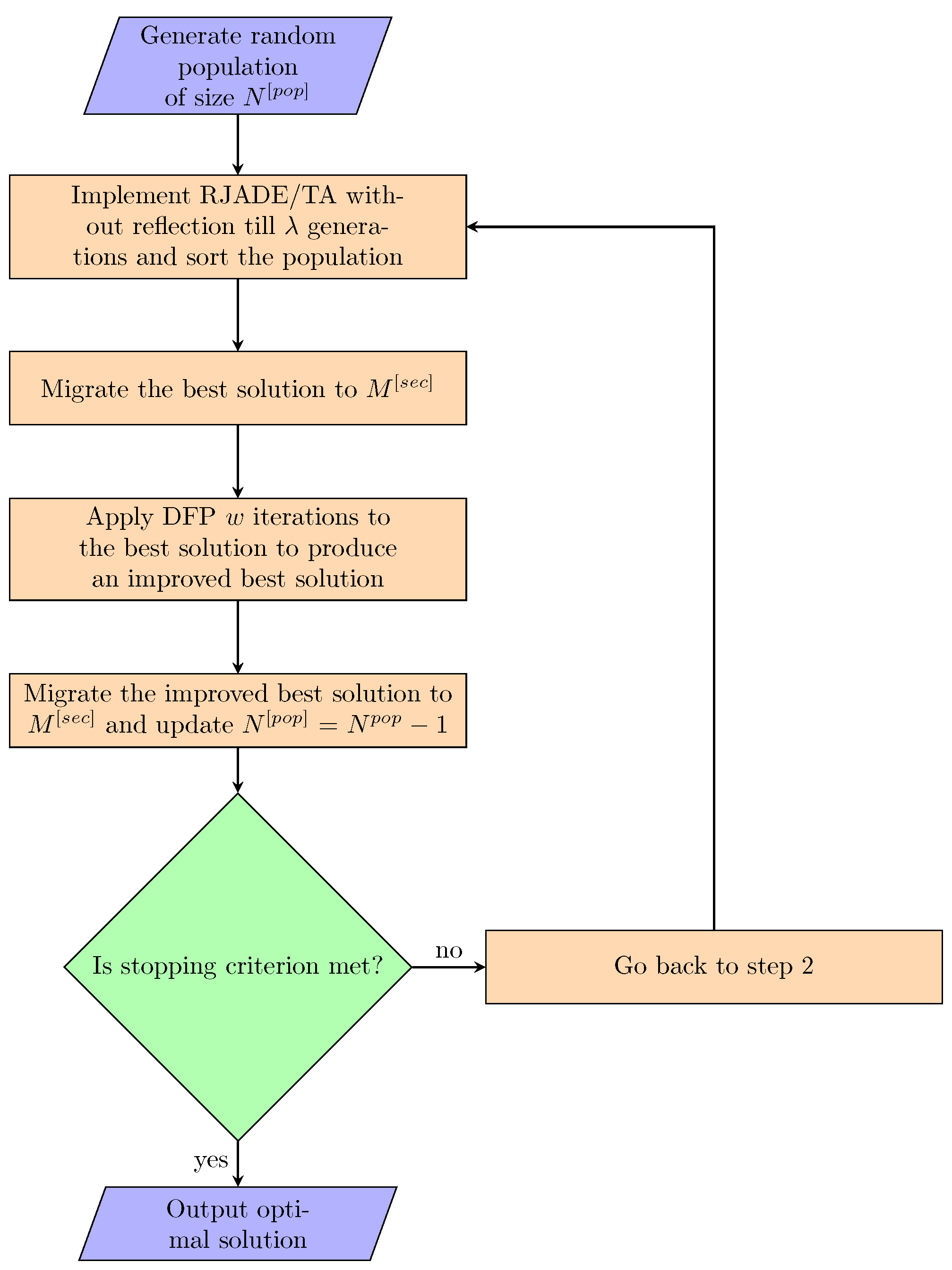

In this work, we propose a hybrid algorithm that combines DFP [44,48,49] with a recently developed algorithm, RJADE/TA [50], to enhance the performance of RJADE/TA in local regions. The main idea is to apply DFP to the elements that are shifted to the archive and to record both solutions, the previously found one and the new potential one, which reduces the chance of losing the globally best solution. For this purpose, DFP is first applied to the archived information; second, a decreasing population mechanism is introduced. The new algorithm is denoted by RJADE/TA-ADP-LS.
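The archive step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `archive_update` and `local_search` are hypothetical names, `local_search` stands in for DFP, and removing exactly the migrated individuals from the population is our assumption about how the population is decreased by r per archive update.

```python
def archive_update(pop, fit, archive, f, local_search, r=1):
    """Hypothetical ADP-LS step: migrate the r best, refine them, shrink the population.

    Assumptions: `local_search(f, x)` stands in for DFP, and the migrated
    individuals are the ones removed from the population.
    """
    # Indices sorted by fitness (minimization): the first r are the elites
    order = sorted(range(len(pop)), key=lambda k: fit[k])
    elite = order[:r]
    for k in elite:
        refined = local_search(f, pop[k])
        archive.append((pop[k], fit[k]))        # solution before LS is kept
        archive.append((refined, f(refined)))   # improved solution is kept too
    # Population decreases by r: the migrated elites leave the population
    keep = [k for k in range(len(pop)) if k not in elite]
    return [pop[k] for k in keep], [fit[k] for k in keep]
```

Keeping both the pre-LS and post-LS solutions in the archive means that, even if the local search were to move a solution in an unhelpful direction, the original elite is never lost.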

The structure of this work is as follows. Section 2 presents primary DE, DFP, and RJADE/TA. Section 3 reviews related work. In Section 4, the suggested hybrid algorithm is outlined. Section 5 is devoted to the validation of the results achieved by RJADE/TA-ADP-LS. Finally, the conclusions are summarized in Section 6.

2. Primary DE, DFP, and RJADE/TA

We reviewed traditional DE and JADE in detail in our previous works [47,50]. Here, we briefly review primary DE, DFP and RJADE/TA for ready reference.

2.1. Primary DE

DE [3,4] starts with a random population in the given search region. After initialization, a mutation strategy is applied: three mutually distinct individuals are randomly selected from the population, and the scaled difference of two of them is added to the third (the base vector) to produce a mutant vector. Following mutation, the mutant vector and the current individual (the target vector) are combined through a crossover operator to produce a trial vector. Finally, the target and trial vectors are compared based on a fitness function, and the better one is selected for the next generation (see Lines 7–20 of Algorithm 1).
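The three steps above can be sketched as the classic DE/rand/1/bin scheme. This is a minimal illustration, not RJADE/TA itself: RJADE/TA builds on JADE, whose mutation strategy differs from DE/rand/1. The function name, the default values F = 0.5, CR = 0.9, and the clipping of trial vectors to the search box are assumptions of this sketch.

```python
import random

def de_rand_1_bin(f, bounds, pop_size=20, F=0.5, CR=0.9, generations=200, seed=1):
    """Classic DE/rand/1/bin minimizing f over a box given as [(low, high), ...]."""
    rng = random.Random(seed)
    n = len(bounds)
    # Initialization: vectors drawn uniformly at random inside the search box
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    for _ in range(generations):
        new_pop, new_fit = list(pop), list(fit)
        for i in range(pop_size):
            # Mutation: base vector r1 plus the scaled difference of r2 and r3,
            # all mutually distinct and different from the target index i
            r1, r2, r3 = rng.sample([k for k in range(pop_size) if k != i], 3)
            mutant = [pop[r1][j] + F * (pop[r2][j] - pop[r3][j]) for j in range(n)]
            # Binomial crossover: j_rand ensures at least one component comes from the mutant
            j_rand = rng.randrange(n)
            trial = [mutant[j] if (j == j_rand or rng.random() < CR) else pop[i][j]
                     for j in range(n)]
            # Keep the trial inside the box (simple clipping, an assumption of this sketch)
            trial = [min(max(v, lo), hi) for v, (lo, hi) in zip(trial, bounds)]
            # Selection: the better of target and trial enters the next generation
            f_trial = f(trial)
            if f_trial <= fit[i]:
                new_pop[i], new_fit[i] = trial, f_trial
        pop, fit = new_pop, new_fit
    best = min(range(pop_size), key=lambda k: fit[k])
    return pop[best], fit[best]
```

The new population is assembled separately and swapped in at the end of each generation, so every target vector in a generation is compared against mutants built from the same parent population.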

Algorithm 1 Outlines of RJADE/TA Procedure.

- 1: To form the primary population, produce … vectors uniformly and randomly;
- 2: …;
- 3: Initialize …;
- 4: Set …;
- 5: Evaluate …;
- 6: while … do
- 7: …;
- 8: Randomly sample … in pop;
- 9: Choose … in …;
- 10: Choose … in … do random selection;
- 11: Produce the mutant vector as …;
- 12: Produce the trial vector as follows.
- 13: for … to n do
- 14: if … or … then
- 15: …;
- 16: else
- 17: …;
- 18: end if
- 19: end for
- 20: Best selection …;
- 21: if … is the best then
- 22: …, …, …;
- 23: end if
- 24: If size of … , delete extra solutions from … randomly;
- 25: Update … as follows.
- 26: if … then
- 27: …;
- 28: …;
- 29: Centroid calculation …;
- 30: Reflection mechanism …;
- 31: end if
- 32: …;
- 33: …;
- 34: end while
- 35: Result: The best solution corresponding to minimum function value from … in the optimization.

2.2. Reflected Adaptive Differential Evolution with Two External Archives (RJADE/TA)

RJADE/TA [50] is an adaptive DE variant. Its main idea is to archive comparatively best solutions of the population at regular intervals of the optimization process and to reflect the overall poor solutions. RJADE/TA inserts the techniques presented in Table 1 into JADE.

To prevent premature convergence and stagnation, RJADE/TA replaces the best solution in the population by its reflection, and the best candidate itself is migrated to the second archive.

RJADE/TA thus maintains two archives, referred to here as the first and the second archive. The first update of the second archive is made after half of the available resources have been used. Afterwards, the second archive is updated adaptively at a regular interval of generations (see Algorithm 1).

The overall best candidates are transferred to the second archive, whereas the first archive records the recently explored poor solutions. The size of the first archive is fixed and equal to the population size, while the size of the second archive may exceed the population size. As the second archive keeps information on all best solutions found, no solution is deleted from it. At each update, the second archive records only one solution of the current iteration, which may be a child or a parent, whereas the first archive keeps a history of several inferior "parent solutions" only. The first archive is updated at every iteration, and the second archive, initialized as ∅, is updated adaptively with a gap of iterations. The history recorded in the first archive is later used in reproduction. In contrast, the best individual recorded in the second archive is reflected to produce a new solution, which is then sent to the population. Once a candidate solution is posted to the second archive, it remains passive for the rest of the optimization. When the search terminates, the recorded information contributes to the selection of the best candidate solution.
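The archive bookkeeping described above can be sketched as a small class. This is an illustrative sketch, not the RJADE/TA code: the class and method names are hypothetical, the "half of the available resources" trigger is modeled as half of a function-evaluation budget `max_fes`, and the adaptive gap is simplified to a fixed number of generations. The reflection itself is left to the caller, because its exact formula is not reproduced here.

```python
import random

class TwoArchives:
    """Illustrative bookkeeping for RJADE/TA's two archives (names are hypothetical)."""

    def __init__(self, pop_size, gap, seed=0):
        self.pop_size = pop_size    # bound on the size of the first archive
        self.gap = gap              # generations between second-archive updates (fixed here)
        self.first = []             # inferior parent solutions, reused in reproduction
        self.second = []            # migrated best solutions, passive and never deleted
        self.last_update = None     # generation of the latest second-archive update
        self.rng = random.Random(seed)

    def record_loser(self, parent):
        """Called every generation for each parent beaten by its trial vector."""
        self.first.append(parent)
        while len(self.first) > self.pop_size:   # delete extra entries at random
            self.first.pop(self.rng.randrange(len(self.first)))

    def second_due(self, gen, fes, max_fes):
        """True once half the budget is used, then again every `gap` generations."""
        if fes < max_fes / 2:
            return False
        return self.last_update is None or gen - self.last_update >= self.gap

    def record_best(self, best, gen):
        """Store the current best; the caller replaces it in the population by its reflection."""
        self.second.append(list(best))
        self.last_update = gen
```

The asymmetry between the two archives is the key design point: the first archive is bounded and churns every generation, while the second only grows, so no elite solution recorded there can be lost.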

2.3. Davidon–Fletcher–Powell (DFP) Method

The DFP method is a variable metric (quasi-Newton) method, first proposed by Davidon [51] and later modified by Fletcher and Powell [52]. It belongs to the class of gradient-based LS methods. With an appropriate line search, convergence of the DFP method is assured [49]. At iteration $k$, it calculates the difference between the new and old points, as given in Equation (1), and then the difference of the gradients at these points, as given in Equation (2):

$$ s_k = x_{k+1} - x_k, \tag{1} $$

$$ y_k = \nabla f(x_{k+1}) - \nabla f(x_k). \tag{2} $$

It then updates the matrix $H$, which approximates the inverse of the Hessian matrix, as presented in Equation (3):

$$ H_{k+1} = H_k + \frac{s_k s_k^{T}}{s_k^{T} y_k} - \frac{H_k y_k y_k^{T} H_k}{y_k^{T} H_k y_k}. \tag{3} $$

Afterwards, it computes the search direction $d_{k+1}$ from this curvature information, as given in Equation (4):

$$ d_{k+1} = -H_{k+1} \nabla f(x_{k+1}). \tag{4} $$

Finally, the output solution is computed by Equation (5),

$$ x_{k+2} = x_{k+1} + \alpha_{k+1} d_{k+1}, \tag{5} $$

where the step size $\alpha_{k+1}$ is calculated by a line search method; the golden section search method is used in this work.
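A compact sketch of DFP with a golden section line search follows. It is a minimal illustration under stated assumptions, not the code used in the experiments: the gradient is approximated by central finite differences (an analytic gradient can be passed instead), the initial matrix is the identity, the step size is searched on the bracket [0, `alpha_max`] (our choice), and the update is skipped when the curvature condition fails. The default `iters=2` mirrors the setting w = 2 used for DFP in Section 5.2.

```python
import math

GOLDEN = (math.sqrt(5.0) - 1.0) / 2.0  # inverse golden ratio, about 0.618

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(M, v):
    return [dot(row, v) for row in M]

def golden_section(phi, a, b, tol=1e-10, max_iter=200):
    """Minimize a unimodal 1-D function phi on [a, b] by golden section search."""
    c, d = b - GOLDEN * (b - a), a + GOLDEN * (b - a)
    fc, fd = phi(c), phi(d)
    for _ in range(max_iter):
        if b - a < tol:
            break
        if fc < fd:   # minimum lies in [a, d]; old c becomes the new d
            b, d, fd = d, c, fc
            c = b - GOLDEN * (b - a)
            fc = phi(c)
        else:         # minimum lies in [c, b]; old d becomes the new c
            a, c, fc = c, d, fd
            d = a + GOLDEN * (b - a)
            fd = phi(d)
    return 0.5 * (a + b)

def num_grad(f, x, h=1e-6):
    """Central-difference gradient (an assumption of this sketch)."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        g.append((f(xp) - f(xm)) / (2.0 * h))
    return g

def dfp(f, x0, iters=2, alpha_max=1.0, grad=None):
    grad = grad or (lambda z: num_grad(f, z))
    n = len(x0)
    x = [float(v) for v in x0]
    H = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # H_0 = I
    g = grad(x)
    for _ in range(iters):
        if math.sqrt(dot(g, g)) < 1e-12:
            break
        d = [-v for v in matvec(H, g)]                                   # Eq. (4)
        alpha = golden_section(lambda a: f([xi + a * di for xi, di in zip(x, d)]),
                               0.0, alpha_max)
        x_new = [xi + alpha * di for xi, di in zip(x, d)]                # Eq. (5)
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]                            # Eq. (1)
        y = [a - b for a, b in zip(g_new, g)]                            # Eq. (2)
        Hy = matvec(H, y)
        sy, yHy = dot(s, y), dot(y, Hy)
        if sy > 1e-12 and yHy > 1e-12:                                   # Eq. (3)
            H = [[H[i][j] + s[i] * s[j] / sy - Hy[i] * Hy[j] / yHy
                  for j in range(n)] for i in range(n)]
        x, g = x_new, g_new
    return x
```

On a two-dimensional convex quadratic such as f(x) = (x₁ − 1)² + 10(x₂ + 2)², two DFP iterations with a near-exact line search already land on the minimizer, which is consistent with a small iteration count being sufficient for local refinement.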

3. Related Work

To fix the above-mentioned weaknesses of DE, many researchers have merged various LS techniques into DE. Nelder–Mead LS was hybridized with DE [53] to improve its local exploitation. Recently, two new LS strategies were proposed and hybridized iteratively with DE in [1,31]; these hybrid designs showed performance improvements over the algorithms they were compared with. Two LS strategies, Trigonometric and Interpolated, were inserted into DE to enhance its poor exploration. Two other LS techniques were merged into DE along with a restart strategy to improve its global exploration [54]; the results of this algorithm were statistically better than those of the other algorithms compared. Furthermore, alopex-based LS was merged into DE [55] to improve population diversity. In another study, the slow convergence of DE was improved by combining it with orthogonal design LS [56]. To avoid local optima, random LS was hybridized with DE [57]. On the other hand, some researchers borrowed the mutation and crossover of DE for use in traditional LS methods (see, e.g., [58,59]).

To the best of our knowledge, none of the algorithms reviewed in this section integrates DFP into the DE framework. Further, the work proposed here maintains two archives: the first stores inferior solutions, and the second keeps the best solutions migrated to it by the global search. In addition, DFP is applied to the solutions in the second archive to improve their quality further. Hence, our proposed work has the advantage that the second archive keeps complete information on each solution before and after LS, so that no good solution found is lost. It also adopts a population decreasing mechanism.

5. Validation of Results

In this section, first we briefly illustrate the five algorithms used for comparison and then the experimental results are presented.

5.1. Global Search Algorithms in Comparison

Among the five algorithms for comparison, the first two, RJADE/TA and RJADE/TA-LS, are our recently proposed hybrid algorithms, while the remaining three, jDE, jDEsoo and jDErpo, are non-hybrid, but adaptive and popular DE variants.

5.1.1. RJADE/TA

RJADE/TA [50], like RJADE/TA-ADP-LS, uses two archives: one stores inferior solutions, while the other keeps a record of superior solutions. However, in RJADE/TA-ADP-LS, the second archive stores elite solutions, which are then improved by DFP. Further details of RJADE/TA are given in Section 2.2.

5.1.2. RJADE/TA-LS

RJADE/TA-LS [60] is a very recently proposed hybrid of global and local search. It differs from RJADE/TA-ADP-LS in that it uses the reflection mechanism and a fixed population, whereas RJADE/TA-ADP-LS uses DFP as LS without reflection, together with a population decreasing approach.

5.1.3. jDE

jDE [61] is an adaptive version of DE based on self-adaptation of the control parameters F and CR. In jDE, F and CR keep changing during the evolution process, while the population size is kept unchanged. Every solution in jDE carries its own F and CR values. Better values of F and CR tend to produce better individuals, which are more likely to survive, so these parameter values are propagated to the upcoming generations. Because of its unique mechanism and simplicity, jDE has gained popularity among researchers in the field of optimization and has been widely used as a baseline for comparison since its introduction.

5.1.4. jDEsoo and jDErpo

jDEsoo [62] is a DE variant for single-objective optimization. jDEsoo subdivides the population and applies more than one DE strategy. To enhance population diversity, it removes from the population those individuals that have remained unchanged over the last few generations. It was primarily developed for the CEC 2013 competition.

jDErpo [61] is an improvement of jDE based on the following mechanisms. Firstly, it incorporates two mutation strategies that differ from those of jDE, DE and RJADE/TA. Secondly, it uses an adaptively increasing strategy for adjusting the lower bounds of the control parameters. Thirdly, it uses two pairs of control parameters for the two mutation strategies, in contrast to the single pair used in jDE, classic DE and RJADE/TA. jDErpo was also specially designed for the CEC 2013 competition problems.

5.2. Parameter Settings/Termination Criteria

Experiments were performed on the 28 benchmark test problems of CEC 2013 [63], referred to as BMF1–BMF28. The parameter settings were kept the same as required in [63]. The dimension n of each problem was set to 10, the population size to 100, and the … to … . The number of elite solutions r was set to 1. The number of DFP iterations w was set to 2. The population reduction per archive update, r, was also set to 1. The gap between successive updates of the second archive was set to 20. The optimization was terminated if either … was reached or the difference between the means of function error values was less than …, as suggested in [50,63].

5.3. Comparison of RJADE/TA-ADP-LS against Established Global Optimizers

The mean function error values (the difference between the known optimal and the approximated function values) of jDE, jDEsoo, jDErpo, RJADE/TA and RJADE/TA-ADP-LS are presented in Table 2. In Table 2, + indicates that the algorithm won against our algorithm, RJADE/TA-ADP-LS; − indicates that it lost against our algorithm; and = indicates that both algorithms obtained the same statistics. The comparison showed the strong performance of RJADE/TA-ADP-LS against all competitors. RJADE/TA-ADP-LS achieved lower mean error values than jDE and jDEsoo on 17 of the 28 problems, as the many − signs in columns 2 and 3 of Table 2 show. In contrast, jDE and jDEsoo performed better on six and eight problems, respectively.

RJADE/TA-ADP-LS also improved on jDErpo and RJADE/TA. In general, RJADE/TA-ADP-LS performed better than all the algorithms compared, especially on multimodal and composite functions. The proposed mechanism not only applies LS without reflection for local tuning but also implements an ADP approach; these features could be the reasons for its good performance.
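The +/−/= labels used in Table 2 can be reproduced from per-problem mean errors with a short helper. This is a sketch under an explicit assumption: the signs are taken to come from a direct comparison of mean error values (lower is better), with `tol` as an optional tie threshold; the text does not state whether a statistical test was used. The function names are hypothetical.

```python
def compare_means(competitor, ours, tol=0.0):
    """Return '+', '-' or '=' per problem from mean errors (lower is better)."""
    signs = []
    for c, o in zip(competitor, ours):
        if abs(c - o) <= tol:
            signs.append("=")   # both algorithms obtained the same statistic
        elif c < o:
            signs.append("+")   # competitor won against our algorithm
        else:
            signs.append("-")   # competitor lost against our algorithm
    return signs

def tally(signs):
    """Count wins, losses and ties for one competitor column."""
    return {s: signs.count(s) for s in "+-="}
```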

5.4. Performance Evaluation of RJADE/TA-ADP-LS Versus RJADE/TA-LS

We empirically compared RJADE/TA-ADP-LS with RJADE/TA-LS. Table 3 presents the mean results achieved by both methods in 51 runs; the best results are shown in bold. The results in Table 3 show that RJADE/TA-ADP-LS performed better than RJADE/TA-LS on 13 of the 28 problems, while RJADE/TA-LS performed better on 10 test problems. On the remaining five problems, both obtained the same results.

It is interesting to note that RJADE/TA-ADP-LS showed outstanding performance on the composite functions, where it solved BMF22–BMF28 better than RJADE/TA-LS. Again, the two distinguishing mechanisms of RJADE/TA-ADP-LS, the ADP approach and LS without reflection, could be the reasons for its better performance. Further, Table 4 presents the percentage performance of RJADE/TA-ADP-LS and RJADE/TA-LS. Since both algorithms obtained equal results on five test problems, the percentages were computed over the remaining 23 problems. Of these 23 test instances, RJADE/TA-ADP-LS performed better on 13 (about 56.5%), against 10 (about 43.5%) for RJADE/TA-LS.

Furthermore, box plots were drawn from all the means obtained in 25 runs of RJADE/TA, RJADE/TA-LS and RJADE/TA-ADP-LS. Figure 2 and Figure 3 show one function out of every three. Box plots are well suited to showing the spread of the data. Figure 2b–d shows that the boxes obtained by RJADE/TA-ADP-LS were lower than the other two boxes, indicating its better performance. Figure 2a presents the plot of BMF3, in which the boxes of the two competing algorithms were lower than that of RJADE/TA-ADP-LS, so they performed better. Figure 3b,d,f shows that the boxes obtained by RJADE/TA-ADP-LS on BMF19, BMF25 and BMF27 were lower than those of RJADE/TA and RJADE/TA-LS, indicating better performance of RJADE/TA-ADP-LS. Figure 3a,c,e shows that the two other algorithms were better on the respective test instances.

5.5. Analysis/Discussion of Various Parameters Used

The number r of solutions migrated to the archive to undergo DFP was kept at 1, since DFP is an expensive method owing to its gradient calculations, and applying it to more than one solution might slow down the algorithm. Users may take two, but at most three is suggested. The number of DFP iterations w applied to archive elements was kept at 2, since DFP can fine-tune the solutions in only two iterations. Moreover, the population reduction r per archive update was also chosen as 1: since the archive is updated after a regular gap of global evolution, the population is decreased by one at each update. If it were reduced by more than one solution, a stage would come where the population diversity would be too low, and the algorithm would either stop at local optima or converge prematurely. We suggest that the reduction be at most 3. In general, these parameters are user defined but should be chosen carefully so that the global and local search complement each other, rather than causing premature convergence or stagnation.

6. Conclusions

This paper proposed a new hybrid algorithm, RJADE/TA-ADP-LS, in which an LS mechanism, DFP, is combined with a DE-based global search scheme, RJADE/TA, to benefit from their search capabilities in local and global regions. A population decreasing mechanism is also adopted. The key idea is to shift the overall best solution to the archive at specified regular intervals of RJADE/TA, where it undergoes DFP for further improvement. The archive stores both the best solution and its improved form, and the population is decreased by one solution at each archive update. We evaluated and compared our hybrid method with five established algorithms on the CEC 2013 test suite. The results demonstrated that our new algorithm is better than the competing algorithms on the majority of the tested problems; in particular, it showed superior performance on the hard multimodal and composite problems of CEC 2013. In future work, the present approach will be extended to constrained optimization. In addition, other gradient-free LS methods, global optimizers and archiving strategies will be tried to design more efficient algorithms for global optimization.