Dynamic Optimization of Chemical Processes Based on Modified Sailfish Optimizer Combined with an Equal Division Method

Abstract

1. Introduction

2. Problem Description and Equal Division Method

2.1. DOP Description

2.2. Equal Division Method

3. Sailfish Optimizer

4. Modified Sailfish Optimizer (MSFO)

4.1. Tent Chaos Initialization Policy
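Tent-map chaotic initialization can be sketched as below. This is a minimal illustration under stated assumptions. It uses the textbook tent map x → 2x for x < 0.5 and x → 2(1 − x) otherwise, runs one chaotic chain per decision variable, and maps the iterates linearly into the variable bounds. The paper's Equation (11) may use a different tent-map variant or parameter, and the helper names `tent_map_sequence` and `tent_init` are hypothetical.

```python
import numpy as np


def tent_map_sequence(n_points, dim, mu=2.0, seed=0):
    """Return an (n_points, dim) array of tent-map iterates in (0, 1).

    Assumption: classic tent map x -> mu*x (x < 0.5), mu*(1 - x) otherwise,
    with one independent chain per dimension. The paper's Eq. (11) may differ.
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.05, 0.95, size=dim)  # random starting point for each chain
    seq = np.empty((n_points, dim))
    for i in range(n_points):
        x = np.where(x < 0.5, mu * x, mu * (1.0 - x))
        # Guard (our assumption): re-seed chains that collapsed to 0 or reached 1 (which maps to 0).
        stuck = (x <= 1e-12) | (x >= 1.0 - 1e-12)
        x[stuck] = rng.uniform(0.05, 0.95, size=int(stuck.sum()))
        seq[i] = x
    return seq


def tent_init(pop, lb, ub, seed=0):
    """Hypothetical helper: map tent-map iterates linearly into the box [lb, ub]."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    return lb + tent_map_sequence(pop, lb.size, seed=seed) * (ub - lb)


# Usage: 30 candidate solutions in a 5-D box.
population = tent_init(30, [-100.0] * 5, [100.0] * 5, seed=1)
print(population.shape)  # (30, 5)
```

With μ = 2, each iteration in binary floating point discards one mantissa bit, so an unguarded chain collapses to exactly 0 after roughly fifty steps; the re-seeding line is one simple guard against that, not necessarily the one the authors use.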

4.2. Adaptive Linear Decrease Attack Parameter
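An attack-power schedule of this kind can be sketched as follows. `attack_power_sfo` reproduces, as we recall it, the original SFO rule AP = A × (1 − 2 × k × ε) with ε = 0.001. `attack_power_linear` is a hypothetical stand-in for the paper's Equation (13) that decreases linearly from A = 4 at the first iteration to 0 at iteration T. `update_counts` mirrors the SFO convention that, once the attack power drops below 0.5, only a fraction of the sardines and of their variables is perturbed; the floor of one sardine and one variable is our own assumption.

```python
def attack_power_sfo(k, A=4.0, eps=0.001):
    """Original SFO attack power (as we recall it): AP = A * (1 - 2 * k * eps)."""
    return A * (1.0 - 2.0 * k * eps)


def attack_power_linear(k, T, A=4.0):
    """Hypothetical stand-in for Eq. (13): falls linearly from A (k = 0) to 0 (k = T)."""
    return A * (1.0 - k / T)


def update_counts(atk, n_sardines, dim):
    """Number of sardines and variables to perturb: all of them while atk >= 0.5,
    otherwise the fractions n_sardines * atk and dim * atk (at least one each)."""
    if atk >= 0.5:
        return n_sardines, dim
    alpha = max(1, int(round(n_sardines * atk)))
    beta = max(1, int(round(dim * atk)))
    return alpha, beta


# Usage: compare the two schedules at a few iterations for T = 1000.
for k in (0, 250, 500, 750, 1000):
    print(k, attack_power_sfo(k), attack_power_linear(k, 1000))
```

One visible difference: with ε = 0.001 the original AP becomes negative once k exceeds 500, whereas the linear schedule stays in [0, A] for any T.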

4.3. Modifying the Search Equation for Sardines

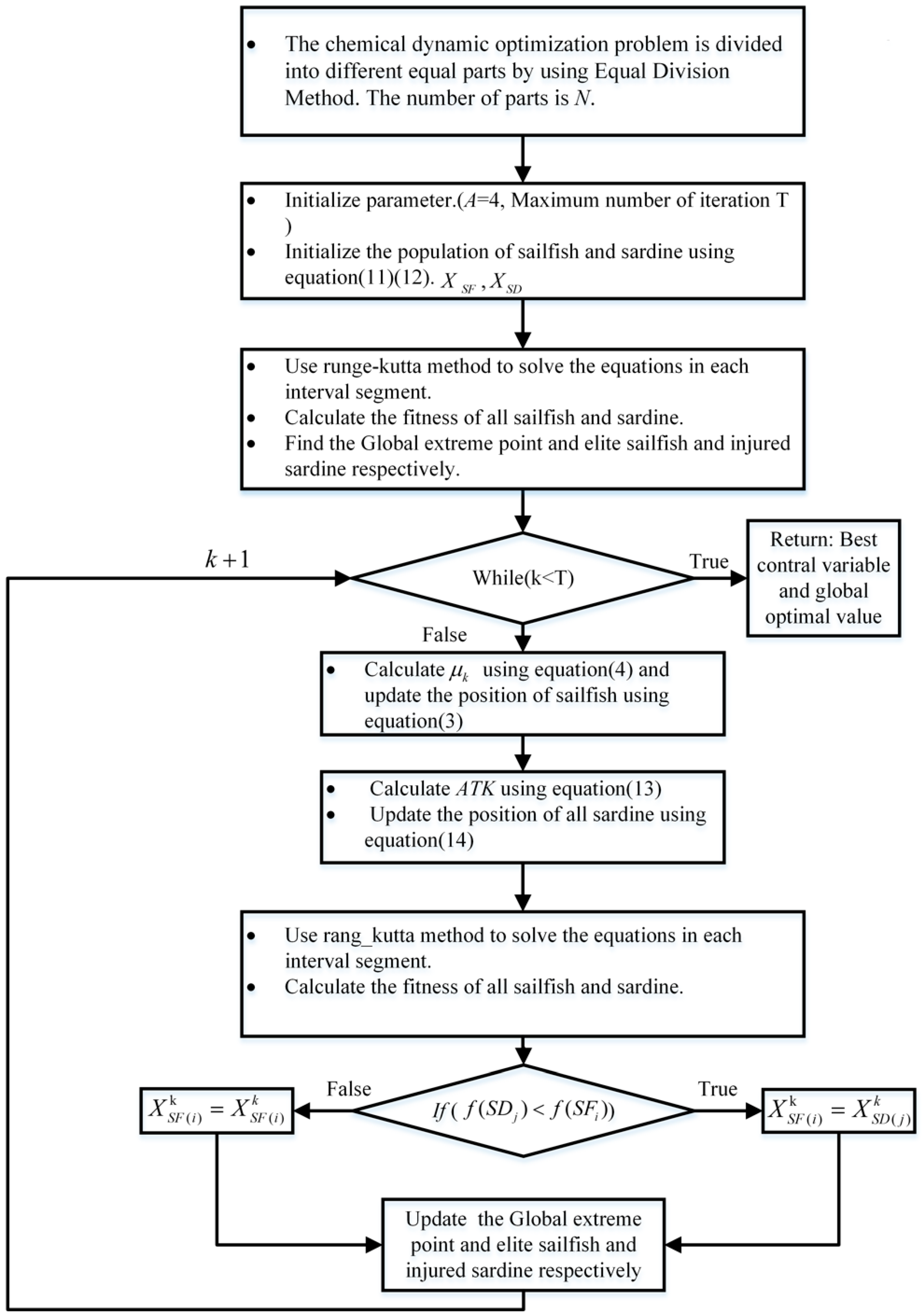

| Algorithm 1. Pseudo-code of the MSFO |
|---|
| Inputs: population size Pop and maximum number of iterations T. |
| Outputs: the global optimal solution. |
| Initialize the parameter A = 4 and the sailfish and sardine populations using Equations (11) and (12). |
| Calculate the objective fitness of each sailfish and sardine. |
| Find the global extreme point, the elite sailfish, and the injured sardine. |
| while (k < T) do |
| for each sailfish: compute the quantity defined by Equation (4) and update the position of the sailfish using Equation (3); end for |
| Calculate ATK using Equation (13). |
| for each sardine: update the position of the sardine using Equation (14); end for |
| Check the new positions against the variable bounds and correct any that violate them. |
| Calculate the fitness of all sailfish and sardines. |
| Sort the fitness values of the sailfish and sardines. |
| if the fitness of a sardine is better than that of a sailfish: replace the sailfish with the injured sardine using Equation (10), remove the hunted sardine from the population, and update the best sailfish and best sardine; end if |
| Update the global extreme point, the elite sailfish, and the injured sardine. |
| end while |
| return the global optimal solution. |
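The loop in Algorithm 1 can be sketched in Python as below. It is a simplified, hypothetical illustration rather than the authors' code. Equations (3), (4), (10), and (14) are not reproduced in this text, so the sailfish and sardine moves follow the original SFO formulas as we recall them (λ = 2·rand·PD − PD with PD = 1 − N_SF/(N_SF + N_S)), the attack power uses a hypothetical linear decrease in place of Equation (13), and initialization uses a μ = 2 tent map in place of Equations (11) and (12). The defaults `A = 4` and `percent = 0.2` follow the parameter table; `pop` and `T` are kept small for illustration, and `msfo_sketch` and `tent_population` are hypothetical names.

```python
import numpy as np


def tent_population(n, lb, ub, rng):
    """Hypothetical stand-in for Eqs. (11)-(12): tent-map (mu = 2) points mapped into [lb, ub]."""
    x = rng.uniform(0.05, 0.95, size=lb.size)
    pts = np.empty((n, lb.size))
    for i in range(n):
        x = np.where(x < 0.5, 2.0 * x, 2.0 * (1.0 - x))
        collapsed = (x <= 1e-12) | (x >= 1.0 - 1e-12)
        x = np.where(collapsed, rng.uniform(0.05, 0.95, size=lb.size), x)
        pts[i] = x
    return lb + pts * (ub - lb)


def msfo_sketch(f, lb, ub, pop=30, T=200, A=4.0, percent=0.2, seed=0):
    """Minimize f over the box [lb, ub] with an SFO-style loop (illustrative only)."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    n_sf = max(1, int(round(percent * pop)))
    sf = tent_population(n_sf, lb, ub, rng)        # sailfish
    sd = tent_population(pop - n_sf, lb, ub, rng)  # sardines
    f_sf = np.apply_along_axis(f, 1, sf)
    f_sd = np.apply_along_axis(f, 1, sd)
    best_x, best_f = sf[f_sf.argmin()].copy(), float(f_sf.min())

    for k in range(T):
        elite, injured = sf[f_sf.argmin()].copy(), sd[f_sd.argmin()].copy()
        # Sailfish move (original SFO form, standing in for Eqs. (3)-(4)).
        pd = 1.0 - n_sf / (n_sf + len(sd))
        lam = 2.0 * rng.random((n_sf, 1)) * pd - pd
        sf = elite - lam * (rng.random((n_sf, 1)) * (elite + injured) / 2.0 - sf)
        # Hypothetical linear attack-power decrease, standing in for Eq. (13).
        atk = A * (1.0 - k / T)
        # Sardine move (original SFO form, standing in for Eq. (14)).
        if atk >= 0.5:
            sd = rng.random(sd.shape) * (elite - sd + atk)
        else:
            n_upd = max(1, int(round(len(sd) * atk)))
            n_var = max(1, int(round(dim * atk)))
            for r in rng.choice(len(sd), n_upd, replace=False):
                cols = rng.choice(dim, n_var, replace=False)
                sd[r, cols] = rng.random(n_var) * (elite[cols] - sd[r, cols] + atk)
        # Boundary correction, then re-evaluation.
        sf, sd = np.clip(sf, lb, ub), np.clip(sd, lb, ub)
        f_sf = np.apply_along_axis(f, 1, sf)
        f_sd = np.apply_along_axis(f, 1, sd)
        # Hunting (cf. Eq. (10)): a sardine fitter than the worst sailfish takes
        # its place and leaves the school (at least one sardine is kept).
        worst, prey = int(f_sf.argmax()), int(f_sd.argmin())
        if f_sd[prey] < f_sf[worst] and len(sd) > 1:
            sf[worst], f_sf[worst] = sd[prey], f_sd[prey]
            sd, f_sd = np.delete(sd, prey, axis=0), np.delete(f_sd, prey)
        if f_sf.min() < best_f:
            best_x, best_f = sf[f_sf.argmin()].copy(), float(f_sf.min())
    return best_x, best_f


# Usage: minimize a 5-D sphere function.
x_best, f_best = msfo_sketch(lambda x: float(np.sum(x ** 2)), [-100] * 5, [100] * 5)
print(x_best, f_best)
```

The function returns the best position found and its objective value; because the best value is only replaced by a strictly better one, it never gets worse than the best initial sailfish.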

4.4. Performance Analysis of Modified Sailfish Optimizer

4.4.1. Benchmark Functions

4.4.2. Parameter Settings

4.4.3. Statistical Result Comparison

4.4.4. Convergence Trajectory Comparison

4.4.5. Box Plot Analysis

4.4.6. Wilcoxon p-Value Statistical Test
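The p-values in the Wilcoxon table come from comparing the per-run results of two algorithms. A two-sided Wilcoxon rank-sum test can be sketched with the Python standard library as below. It uses the normal approximation with a 0.5 continuity correction and no tie correction; that choice is an assumption, since the exact variant and number of runs behind the table are not stated here. In practice `scipy.stats.ranksums` or `scipy.stats.mannwhitneyu` would normally be used instead; `average_ranks` and `ranksum_p` are hypothetical names.

```python
import math


def average_ranks(values):
    """1-based ranks of `values`; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def ranksum_p(x, y):
    """Two-sided Wilcoxon rank-sum p-value via the normal approximation.

    Uses a 0.5 continuity correction and no tie correction (an assumption).
    """
    n1, n2 = len(x), len(y)
    w = sum(average_ranks(list(x) + list(y))[:n1])  # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2.0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    diff = w - mean_w
    z = (diff - math.copysign(0.5, diff)) / sd_w if diff != 0 else 0.0
    return math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))


# Usage: 30 runs of a hypothetical algorithm that always beats its rival.
msfo_runs = [1e-3 * i for i in range(30)]
rival_runs = [1.0 + i for i in range(30)]
print(ranksum_p(msfo_runs, rival_runs))  # roughly 3.0e-11
```

Because the test uses only ranks, two completely separated samples of 30 runs each give the same p-value (about 3.02 × 10⁻¹¹ with this variant) no matter how far apart the samples are.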

5. Application of MSFO to Dynamic Optimization Problems in Chemical Processes

5.1. Experimental Flow and Parameter Settings

- (1) The chemical dynamic optimization problem was divided into N equal parts using the equal division method.

- (2) The Runge–Kutta method was used to solve the resulting differential equations numerically.

- (3) The MSFO algorithm was used to optimize each chemical case.
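The three steps above can be sketched as follows, as a minimal illustration under assumptions. The control is held constant on each of N equal stages, each stage is integrated with a fixed number of classical fourth-order Runge–Kutta steps (the paper says only "Runge–Kutta"), and the resulting objective is what an optimizer such as MSFO would minimize. For concreteness, Case 1 is assumed to be the classical benchmark: minimize x2(tf) subject to dx1/dt = u, dx2/dt = x1² + u², x1(0) = 1, x2(0) = 0, tf = 1. Its analytical optimum J* = tanh(1) ≈ 0.761594156, reached with u*(t) = −sinh(1 − t)/cosh(1), matches the OCT entry [24] in the first comparison table; the paper's own statement of Case 1 is not reproduced in this text. All function names are hypothetical.

```python
import math


def rk4_step(rhs, x, h, u):
    """One classical fourth-order Runge-Kutta step with the control u held constant."""
    k1 = rhs(x, u)
    k2 = rhs([xi + h / 2 * ki for xi, ki in zip(x, k1)], u)
    k3 = rhs([xi + h / 2 * ki for xi, ki in zip(x, k2)], u)
    k4 = rhs([xi + h * ki for xi, ki in zip(x, k3)], u)
    return [xi + h / 6 * (a + 2 * b + 2 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]


def simulate(rhs, x0, u_stages, tf, substeps=20):
    """Step (1): split [0, tf] into N = len(u_stages) equal stages with a constant
    control on each. Step (2): integrate every stage with `substeps` RK4 steps."""
    h = tf / (len(u_stages) * substeps)
    x = list(x0)
    for u in u_stages:
        for _ in range(substeps):
            x = rk4_step(rhs, x, h, u)
    return x


def case1_rhs(x, u):
    """Assumed Case 1 model: dx1/dt = u, dx2/dt = x1**2 + u**2."""
    return [u, x[0] ** 2 + u ** 2]


def case1_objective(u_stages, tf=1.0):
    """Step (3) would hand this function to MSFO: J = x2(tf), to be minimized."""
    return simulate(case1_rhs, [1.0, 0.0], u_stages, tf)[1]


# Usage: sample u*(t) at the midpoint of each of N = 50 stages; J lands just above tanh(1).
N = 50
u_mid = [-math.sinh(1.0 - (k + 0.5) / N) / math.cosh(1.0) for k in range(N)]
print(case1_objective(u_mid), math.tanh(1.0))
```

The midpoint profile is only a sanity check of the discretization, not an MSFO result: with piecewise-constant controls, J can approach tanh(1) from above but not fall below it, apart from integration error.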

5.2. Test Case and Analysis

5.2.1. Case 1: Benchmark Dynamic Optimization Problem

5.2.2. Case 2: Batch Reactor Consecutive Reaction
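A sketch of how a Case 2 candidate could be evaluated is shown below. It assumes the batch-reactor formulation commonly used in the works compared in the Case 2 table (the IACA, IKEA, and ISOA entries near 0.6108): A → B → C with k1 = 4 × 10³ exp(−2500/T) and k2 = 6.2 × 10⁵ exp(−5000/T), x1(0) = 1, x2(0) = 0, tf = 1, 298 K ≤ T ≤ 398 K, and the goal of maximizing x2(tf). The paper's own statement of Case 2 is not reproduced here, so treat the model as an assumption. Because an optimizer such as MSFO minimizes, the hypothetical `case2_cost` returns the negated yield.

```python
import math


def case2_rhs(x, temp):
    """Assumed Case 2 kinetics: A -> B -> C, control = reactor temperature [K]."""
    k1 = 4.0e3 * math.exp(-2500.0 / temp)
    k2 = 6.2e5 * math.exp(-5000.0 / temp)
    return [-k1 * x[0] ** 2, k1 * x[0] ** 2 - k2 * x[1]]


def rk4(rhs, x, h, u):
    """One classical RK4 step with the control u held constant."""
    k1 = rhs(x, u)
    k2 = rhs([a + h / 2 * b for a, b in zip(x, k1)], u)
    k3 = rhs([a + h / 2 * b for a, b in zip(x, k2)], u)
    k4 = rhs([a + h * b for a, b in zip(x, k3)], u)
    return [a + h / 6 * (p + 2 * q + 2 * r + s) for a, p, q, r, s in zip(x, k1, k2, k3, k4)]


def case2_cost(temps, tf=1.0, substeps=20):
    """Negated yield -x2(tf) for N equal-length stages of constant temperature."""
    h = tf / (len(temps) * substeps)
    x = [1.0, 0.0]
    for temp in temps:
        temp = min(max(temp, 298.0), 398.0)  # enforce the assumed temperature bounds
        for _ in range(substeps):
            x = rk4(case2_rhs, x, h, temp)
    return -x[1]


# Usage: yield of an isothermal profile at the upper temperature bound.
print(-case2_cost([398.0] * 10))
```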

5.2.3. Case 3: Parallel Reactions in Tubular Reactor
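Case 3 can be evaluated the same way. The sketch assumes the parallel-reaction tubular-reactor model that is standard in the cited literature: dx1/dt = −(u + 0.5u²)x1, dx2/dt = u·x1, x1(0) = 1, x2(0) = 0, tf = 1, 0 ≤ u ≤ 5, maximize x2(tf). That formulation is an assumption here, not a quotation from the paper. For a constant control the model has the closed form x2(1) = (1 − e^(−(u + 0.5u²)))/(1 + 0.5u), which gives a convenient check: for u = 1 it equals (1 − e^(−1.5))/1.5 ≈ 0.5179. The name `case3_yield` is hypothetical.

```python
import math


def case3_rhs(x, u):
    """Assumed Case 3 model: A -> B at rate u*x1 and A -> C at rate 0.5*u**2*x1."""
    return [-(u + 0.5 * u ** 2) * x[0], u * x[0]]


def rk4(rhs, x, h, u):
    """One classical RK4 step with the control u held constant."""
    k1 = rhs(x, u)
    k2 = rhs([a + h / 2 * b for a, b in zip(x, k1)], u)
    k3 = rhs([a + h / 2 * b for a, b in zip(x, k2)], u)
    k4 = rhs([a + h * b for a, b in zip(x, k3)], u)
    return [a + h / 6 * (p + 2 * q + 2 * r + s) for a, p, q, r, s in zip(x, k1, k2, k3, k4)]


def case3_yield(u_stages, tf=1.0, substeps=20):
    """x2(tf) for N equal stages of constant control, each clipped to [0, 5]."""
    h = tf / (len(u_stages) * substeps)
    x = [1.0, 0.0]
    for u in u_stages:
        u = min(max(u, 0.0), 5.0)
        for _ in range(substeps):
            x = rk4(case3_rhs, x, h, u)
    return x[1]


# Usage: a constant u = 1 profile versus its closed-form value.
print(case3_yield([1.0] * 10), (1.0 - math.exp(-1.5)) / 1.5)
```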

5.2.4. Case 4: Catalyst Mixing Problem
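A similar sketch for Case 4 assumes the classical catalyst-mixing formulation: dx1/dt = u(10x2 − x1), dx2/dt = u(x1 − 10x2) − (1 − u)x2, x1(0) = 1, x2(0) = 0, tf = 12, 0 ≤ u ≤ 1, maximize J = 1 − x1(tf) − x2(tf). Again the model is an assumption rather than a quotation from the paper, and `case4_objective` is a hypothetical name. A quick sanity check follows from the structure: with u = 1 throughout, dx1/dt + dx2/dt = 0, so J stays at 0.

```python
import math


def case4_rhs(x, u):
    """Assumed catalyst-mixing model; u is the catalyst blending fraction (0 <= u <= 1)."""
    return [u * (10.0 * x[1] - x[0]),
            u * (x[0] - 10.0 * x[1]) - (1.0 - u) * x[1]]


def rk4(rhs, x, h, u):
    """One classical RK4 step with the control u held constant."""
    k1 = rhs(x, u)
    k2 = rhs([a + h / 2 * b for a, b in zip(x, k1)], u)
    k3 = rhs([a + h / 2 * b for a, b in zip(x, k2)], u)
    k4 = rhs([a + h * b for a, b in zip(x, k3)], u)
    return [a + h / 6 * (p + 2 * q + 2 * r + s) for a, p, q, r, s in zip(x, k1, k2, k3, k4)]


def case4_objective(u_stages, tf=12.0, substeps=20):
    """J = 1 - x1(tf) - x2(tf) for N equal stages, each u clipped to [0, 1]."""
    h = tf / (len(u_stages) * substeps)
    x = [1.0, 0.0]
    for u in u_stages:
        u = min(max(u, 0.0), 1.0)
        for _ in range(substeps):
            x = rk4(case4_rhs, x, h, u)
    return 1.0 - x[0] - x[1]


# Usage: u = 1 on the first of 10 stages, u = 0 afterwards.
print(case4_objective([1.0] + [0.0] * 9))  # about 0.0909
```

In that usage profile, the first stage drives x2 to nearly 1/11 and the remaining stages let it decay as e^(−t), so J ≈ (1/11)(1 − e^(−10.8)), well below the optimum near 0.4776 reported in the Case 4 table.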

5.2.5. Case 5: Plug Flow Tubular Reactor

5.2.6. Case 6: Fed Batch Bioreactor

5.3. Results and Discussions

5.3.1. Analysis of the Experimental Results of Case 1

5.3.2. Analysis of the Experimental Results of Case 2

5.3.3. Analysis of the Experimental Results of Case 3

5.3.4. Analysis of the Experimental Results of Case 4

5.3.5. Analysis of the Experimental Results of Case 5

5.3.6. Analysis of the Experimental Results of Case 6

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Srinivasan, B.; Palanki, S.; Bonvin, D. Dynamic Optimization of Batch Processes: I. Characterization of the Nominal Solution. Comput. Chem. Eng. 2003, 27, 1–26.

2. Pollard, G.P.; Sargent, R.W.H. Offline Computation of Optimum Controls for a Plate Distillation Column. Automatica 1970, 6, 59–76.

3. Luus, R. Optimization of Fed-Batch Fermentors by Iterative Dynamic Programming. Biotechnol. Bioeng. 1993, 41, 599–602.

4. Jing, S.; Zhu’an, C. Application of Iterative Dynamic Programming to Dynamic Optimization Problems. J. Chem. Ind. Eng. China 1999, 50, 125–129.

5. Pham, Q.T. Dynamic Optimization of Chemical Engineering Processes by an Evolutionary Method. Comput. Chem. Eng. 1998, 22, 1089–1097.

6. Chiou, J.-P.; Wang, F.-S. A Hybrid Method of Differential Evolution with Application to Optimal Control Problems of a Bioprocess System. In Proceedings of the 1998 IEEE International Conference on Evolutionary Computation Proceedings, IEEE World Congress on Computational Intelligence (Cat. No. 98TH8360), Anchorage, AK, USA, 4–9 May 1998; pp. 627–632.

7. Zhang, B.; Yu, H.; Chen, D. Sequential Optimization of Chemical Dynamic Problems by Ant-Colony Algorithm. J. Chem. Eng. Chin. Univ. 2006, 20, 120.

8. Yuanbin, M.; Qiaoyan, Z.; Yanzhui, M.; Weijun, Y. Adaptive Cuckoo Search Algorithm and Its Application to Chemical Engineering Optimization Problem. Comput. Appl. Chem. 2015, 3, 292–294.

9. Jiang, Q.; Wang, L.; Lin, Y.; Hei, X.; Yu, G.; Lu, X. An Efficient Multi-Objective Artificial Raindrop Algorithm and Its Application to Dynamic Optimization Problems in Chemical Processes. Appl. Soft Comput. 2017, 58, 354–377.

10. Shi, B.; Yin, Y.; Liu, F. Optimal Control Strategies Combined with PSO and Control Vector Parameterization for Batchwise Chemical Process. J. Chem. Ind. Eng. 2018, 70, 979–986.

11. Srivastava, A.; Das, D.K. A Sailfish Optimization Technique to Solve Combined Heat and Power Economic Dispatch Problem. In Proceedings of the 2020 IEEE Students Conference on Engineering & Systems (SCES), Prayagraj, India, 10–12 July 2020; pp. 1–6.

12. Ghosh, K.K.; Ahmed, S.; Singh, P.K.; Geem, Z.W.; Sarkar, R. Improved Binary Sailfish Optimizer Based on Adaptive β-Hill Climbing for Feature Selection. IEEE Access 2020, 8, 83548–83560.

13. Li, M.; Li, Y.; Chen, Y.; Xu, Y. Batch Recommendation of Experts to Questions in Community-Based Question-Answering with a Sailfish Optimizer. Expert Syst. Appl. 2021, 169, 114484.

14. Hammouti, I.E.; Lajjam, A.; Merouani, M.E.; Tabaa, Y. A Modified Sailfish Optimizer to Solve Dynamic Berth Allocation Problem in Conventional Container Terminal. Int. J. Ind. Eng. Comput. 2019, 10, 491–504.

15. Li, L.-L.; Shen, Q.; Tseng, M.-L.; Luo, S. Power System Hybrid Dynamic Economic Emission Dispatch with Wind Energy Based on Improved Sailfish Algorithm. J. Clean. Prod. 2021, 316, 128318.

16. Vicente, M.; Sayer, C.; Leiza, J.R.; Arzamendi, G.; Lima, E.L.; Pinto, J.C.; Asua, J.M. Dynamic Optimization of Non-Linear Emulsion Copolymerization Systems: Open-Loop Control of Composition and Molecular Weight Distribution. Chem. Eng. J. 2002, 85, 339–349.

17. Mitra, T. Introduction to Dynamic Optimization Theory. In Optimization and Chaos; Springer: Berlin/Heidelberg, Germany, 2000; pp. 31–108.

18. Liu, L.; Sun, S.Z.; Yu, H.; Yue, X.; Zhang, D. A Modified Fuzzy C-Means (FCM) Clustering Algorithm and Its Application on Carbonate Fluid Identification. J. Appl. Geophys. 2016, 129, 28–35.

19. Kellert, S.H. Books Received: In the Wake of Chaos: Unpredictable Order in Dynamical Systems. Science 1995, 267, 550.

20. Rather, S.A.; Bala, P.S. Swarm-Based Chaotic Gravitational Search Algorithm for Solving Mechanical Engineering Design Problems. World J. Eng. 2020, 17, 101–130.

21. Gandomi, A.H.; Yun, G.J.; Yang, X.-S.; Talatahari, S. Chaos-Enhanced Accelerated Particle Swarm Optimization. Commun. Nonlinear Sci. Numer. Simul. 2013, 18, 327–340.

22. Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95 International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

23. Arora, S.; Singh, S. Butterfly Optimization Algorithm: A Novel Approach for Global Optimization. Soft Comput. 2019, 23, 715–734.

24. Leonard, D.; Van Long, N.; Ngo, V.L. Optimal Control Theory and Static Optimization in Economics; Cambridge University Press: Cambridge, UK, 1992; ISBN 0-521-33746-1.

25. Rajesh, J.; Gupta, K.; Kusumakar, H.S.; Jayaraman, V.K.; Kulkarni, B.D. Dynamic Optimization of Chemical Processes Using Ant Colony Framework. Comput. Chem. 2001, 25, 583–595.

26. Asgari, S.A.; Pishvaie, M.R. Dynamic Optimization in Chemical Processes Using Region Reduction Strategy and Control Vector Parameterization with an Ant Colony Optimization Algorithm. Chem. Eng. Technol. Ind. Chem. Plant Equip. Process. Eng. Biotechnol. 2008, 31, 507–512.

27. Westerterp, K.R.; Ptasiński, J. Safe Design of Cooled Tubular Reactors for Exothermic, Multiple Reactions; Parallel Reactions—I: Development of Criteria. Chem. Eng. Sci. 1984, 39, 235–244.

28. Banga, J.R.; Seider, W.D. Global Optimization of Chemical Processes Using Stochastic Algorithms. In State of the Art in Global Optimization; Springer: Berlin/Heidelberg, Germany, 1996; pp. 563–583.

29. Reddy, K.V.; Husain, A. Computation of Optimal Control Policy with Singular Subarc. Can. J. Chem. Eng. 1981, 59, 557–559.

30. Luus, R. Application of Iterative Dynamic Programming to State Constrained Optimal Control Problems. Hung. J. Ind. Chem. 1991, 29, 245–254.

31. Mekarapiruk, W.; Luus, R. Optimal Control of Inequality State Constrained Systems. Ind. Eng. Chem. Res. 1997, 36, 1686–1694.

32. Park, S.; Fred Ramirez, W. Optimal Production of Secreted Protein in Fed-Batch Reactors. AIChE J. 1988, 34, 1550–1558.

33. Qian, F.; Sun, F.; Zhong, W.; Luo, N. Dynamic Optimization of Chemical Engineering Problems Using a Control Vector Parameterization Method with an Iterative Genetic Algorithm. Eng. Optim. 2013, 45, 1129–1146.

34. Tian, J.; Zhang, P.; Wang, Y.; Liu, X.; Chunhua, Y.; Lu, J.; Gui, W.; Sun, Y. Control Vector Parameterization-Based Adaptive Invasive Weed Optimization for Dynamic Processes. Chem. Eng. Technol. 2018, 41, 964–974.

35. Zhang, B.; Chen, D.; Zhao, W. Iterative Ant-Colony Algorithm and Its Application to Dynamic Optimization of Chemical Process. Comput. Chem. Eng. 2005, 29, 2078–2086.

36. Peng, X.; Qi, R.; Du, W.; Qian, F. An Improved Knowledge Evolution Algorithm and Its Application to Chemical Process Dynamic Optimization. CIESC J. 2012, 63, 841–850.

37. Zhou, Y.; Liu, X. Control Parameterization-Based Adaptive Particle Swarm Approach for Solving Chemical Dynamic Optimization Problems. Chem. Eng. Technol. 2014, 37, 692–702.

38. Liu, Z.; Du, W.; Qi, R.; Qian, F. Dynamic Optimization in Chemical Processes Using Improved Knowledge-Based Cultural Algorithm. CIESC J. 2010, 11, 2890–2895.

39. Xu, L.; Mo, Y.; Lu, Y.; Li, J. Improved Seagull Optimization Algorithm Combined with an Unequal Division Method to Solve Dynamic Optimization Problems. Processes 2021, 9, 1037.

40. Biegler, L.T. Solution of Dynamic Optimization Problems by Successive Quadratic Programming and Orthogonal Collocation. Comput. Chem. Eng. 1984, 8, 243–247.

41. Vassiliadis, V.S.; Sargent, R.W.; Pantelides, C.C. Solution of a Class of Multistage Dynamic Optimization Problems. 2. Problems with Path Constraints. Ind. Eng. Chem. Res. 1994, 33, 2123–2133.

42. Huang, M.; Zhou, X.; Yang, C.; Gui, W. Dynamic Optimization Using Control Vector Parameterization with State Transition Algorithm. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 4407–4412.

43. Angira, R.; Santosh, A. Optimization of Dynamic Systems: A Trigonometric Differential Evolution Approach. Comput. Chem. Eng. 2007, 31, 1055–1063.

44. Chen, X.; Du, W.; Tianfield, H.; Qi, R.; He, W.; Qian, F. Dynamic Optimization of Industrial Processes with Nonuniform Discretization-Based Control Vector Parameterization. IEEE Trans. Autom. Sci. Eng. 2013, 11, 1289–1299.

45. Zhang, P.; Chen, H.; Liu, X.; Zhang, Z. An Iterative Multi-Objective Particle Swarm Optimization-Based Control Vector Parameterization for State Constrained Chemical and Biochemical Engineering Problems. Biochem. Eng. J. 2015, 103, 138–151.

46. Xiao, L.; Liu, X. An Effective Pseudospectral Optimization Approach with Sparse Variable Time Nodes for Maximum Production of Chemical Engineering Problems. Can. J. Chem. Eng. 2017, 95, 1313–1322.

47. Luus, R. Iterative Dynamic Programming; Chapman and Hall/CRC: Boca Raton, FL, USA, 2019; ISBN 0-429-12364-7.

48. Ko, D.Y.-C. Studies of Singular Solutions in Dynamic Optimization; Northwestern University: Evanston, IL, USA, 1969; ISBN 9798659357156.

49. Lei, Y.; Li, S.; Zhang, Q.; Zhang, X. A Non-Uniform Control Vector Parameterization Approach for Optimal Control Problems. J. China Univ. Pet. Ed. Nat. Sci. 2011, 5.

50. Wei, F.X.; Aipeng, J. A Grid Reconstruction Strategy Based on Pseudo Wigner-Ville Analysis for Dynamic Optimization Problem. J. Chem. Ind. Eng. 2019, 70, 158–167.

51. Tholudur, A.; Ramirez, W.F. Obtaining Smoother Singular Arc Policies Using a Modified Iterative Dynamic Programming Algorithm. Int. J. Control 1997, 68, 1115–1128.

52. Wang, K.; Li, F. A Dynamic Chaotic Mutation Based Particle Swarm Optimization for Dynamic Optimization of Biochemical Process. In Proceedings of the 2017 4th International Conference on Information Science and Control Engineering (ICISCE), Changsha, China, 21–23 July 2017; pp. 788–791.

| No. | Function | Range | Dim | Optimal value |
|---|---|---|---|---|
| F1 | Sphere | [−100, 100] | 30 | 0 |
| F2 | Schwefel | [−10, 10] | 30 | 0 |
| F3 | Rastrigin | [−5.12, 5.12] | 30 | 0 |
| F4 | Ackley | [−32, 32] | 30 | 0 |
| F5 | Kowalik | [−5, 5] | 4 | 0.00030 |
| F6 | Six Hump Camel Back | [−5, 5] | 2 | −1.0316 |
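For readers who want to reproduce the comparison, the benchmark functions in the table above can be written as below. The formulations are the standard textbook definitions and are assumptions, since the formulas themselves did not survive in this text; in particular, "Schwefel" on [−10, 10] is taken to be Schwefel's problem 2.22 (Σ|xᵢ| + Π|xᵢ|). Kowalik is omitted because it needs an 11-point data table that is not given here.

```python
import numpy as np


def sphere(x):
    """Sum of squares; minimum 0 at the origin."""
    return float(np.sum(x ** 2))


def schwefel_2_22(x):
    """Assumed variant of 'Schwefel': sum(|x_i|) + prod(|x_i|); minimum 0 at the origin."""
    a = np.abs(x)
    return float(np.sum(a) + np.prod(a))


def rastrigin(x):
    """Highly multimodal; minimum 0 at the origin."""
    return float(np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x) + 10.0))


def ackley(x):
    """Nearly flat outer region with a deep central well; minimum 0 at the origin."""
    d = x.size
    return float(-20.0 * np.exp(-0.2 * np.sqrt(np.sum(x ** 2) / d))
                 - np.exp(np.sum(np.cos(2.0 * np.pi * x)) / d) + 20.0 + np.e)


def six_hump_camel(x):
    """Two-dimensional; global minimum about -1.0316 near (0.0898, -0.7126)."""
    x1, x2 = x
    return float(4 * x1 ** 2 - 2.1 * x1 ** 4 + x1 ** 6 / 3
                 + x1 * x2 - 4 * x2 ** 2 + 4 * x2 ** 4)


# Usage: evaluate each function at its known minimizer.
z = np.zeros(30)
print(sphere(z), schwefel_2_22(z), rastrigin(z), ackley(z))
print(six_hump_camel(np.array([0.0898, -0.7126])))
```

Depending on the order of operations, evaluating Ackley at the origin in double precision can leave a rounding residue of order 10⁻¹⁶ to 10⁻¹⁵ instead of exactly 0; the 8.8818 × 10⁻¹⁶ entries reported for F4 are on that scale.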

| Function | Result | MSFO | SFO | PSO | BOA |
|---|---|---|---|---|---|
| F1 | Best | 6.8104 × 10⁻⁹⁴ | 9.7670 × 10⁻¹⁶ | 1.6556 × 10³ | 2.7743 × 10⁰ |
|  | Worst | 3.6275 × 10⁻⁵⁴ | 1.0181 × 10⁻⁹ | 8.6603 × 10³ | 7.0283 × 10⁰ |
|  | Mean | 8.4117 × 10⁻⁵⁶ | 9.5079 × 10⁻¹¹ | 4.8832 × 10³ | 5.0551 × 10⁰ |
|  | Std. | 5.1736 × 10⁻⁵⁵ | 1.9546 × 10⁻¹⁰ | 1.8340 × 10³ | 9.6613 × 10⁻¹ |
|  | Rank | 1 | 2 | 4 | 3 |
| F2 | Best | 2.7641 × 10⁻⁵² | 5.3076 × 10⁻⁷ | 3.4437 × 10¹ | 8.0016 × 10⁻² |
|  | Worst | 5.4745 × 10⁻²⁷ | 1.8220 × 10⁻⁴ | 7.9924 × 10² | 1.2217 × 10⁰ |
|  | Mean | 1.5945 × 10⁻²⁸ | 3.9648 × 10⁻⁵ | 3.5138 × 10² | 4.7870 × 10⁻¹ |
|  | Std. | 8.0045 × 10⁻²⁸ | 3.9331 × 10⁻⁵ | 1.3872 × 10³ | 2.6753 × 10⁻¹ |
|  | Rank | 1 | 2 | 4 | 3 |
| F3 | Best | 0.0000 × 10⁰ | 2.3124 × 10⁻¹⁰ | 2.1053 × 10² | 1.5309 × 10² |
|  | Worst | 0.0000 × 10⁰ | 2.5092 × 10⁻⁶ | 3.6692 × 10² | 2.3210 × 10² |
|  | Mean | 0.0000 × 10⁰ | 1.9599 × 10⁻⁷ | 2.9085 × 10² | 1.9280 × 10² |
|  | Std. | 0.0000 × 10⁰ | 4.36977 × 10⁻⁷ | 2.8042 × 10¹ | 1.7471 × 10¹ |
|  | Rank | 1 | 2 | 4 | 3 |
| F4 | Best | 8.8818 × 10⁻¹⁶ | 6.2225 × 10⁻⁸ | 8.3902 × 10⁰ | 1.3961 × 10¹ |
|  | Worst | 8.8818 × 10⁻¹⁶ | 2.8007 × 10⁻⁵ | 1.5680 × 10¹ | 1.9874 × 10¹ |
|  | Mean | 8.8818 × 10⁻¹⁶ | 6.3357 × 10⁻⁶ | 1.2945 × 10¹ | 1.8706 × 10¹ |
|  | Std. | 5.9765 × 10⁻³¹ | 5.2560 × 10⁻⁶ | 1.5215 × 10⁰ | 1.0767 × 10⁰ |
|  | Rank | 1 | 2 | 3 | 4 |
| F5 | Best | 3.1071 × 10⁻⁴ | 3.1082 × 10⁻⁴ | 1.2370 × 10⁻³ | 3.0955 × 10⁻⁴ |
|  | Worst | 5.2036 × 10⁻⁴ | 5.7377 × 10⁻⁴ | 2.9944 × 10⁻² | 3.5654 × 10⁻³ |
|  | Mean | 3.6986 × 10⁻⁴ | 3.6003 × 10⁻⁴ | 1.1166 × 10⁻² | 7.8221 × 10⁻⁴ |
|  | Std. | 2.0186 × 10⁻⁴ | 5.7242 × 10⁻⁵ | 9.7893 × 10⁻³ | 5.2900 × 10⁻⁴ |
|  | Rank | 1 | 2 | 3 | 4 |
| F6 | Best | −1.0316 × 10⁰ | −1.0316 × 10⁰ | −1.0316 × 10⁰ | −1.0316 × 10⁰ |
|  | Worst | −1.0316 × 10⁰ | −9.9951 × 10⁻¹ | −1.0246 × 10⁰ | −1.0316 × 10⁰ |
|  | Mean | −1.0316 × 10⁰ | −1.0306 × 10⁰ | −1.0294 × 10⁰ | −1.0316 × 10⁰ |
|  | Std. | 4.4860 × 10⁻¹⁶ | 4.5484 × 10⁻³ | 1.8578 × 10⁻³ | 4.4860 × 10⁻¹⁶ |
|  | Rank | 1 | 3 | 4 | 2 |

| Function | MSFO versus SFO | MSFO versus PSO | MSFO versus BOA |
|---|---|---|---|
| F1 | 7.0661 × 10⁻¹⁸ | 7.0661 × 10⁻¹⁸ | 7.0661 × 10⁻¹⁸ |
| F2 | 7.0661 × 10⁻¹⁸ | 4.8495 × 10⁻¹⁸ | 4.8495 × 10⁻¹⁸ |
| F3 | 3.3111 × 10⁻²⁰ | 3.3111 × 10⁻²⁰ | 3.3111 × 10⁻²⁰ |
| F4 | 3.3111 × 10⁻²⁰ | 3.3111 × 10⁻²⁰ | 3.3111 × 10⁻²⁰ |
| F5 | 0.0483 | 8.0196 × 10⁻⁶ | 5.3702 × 10⁻¹⁰ |
| F6 | 8.4620 × 10⁻¹⁸ | 6.6308 × 10⁻²⁰ | 0.0011 |

| Parameter | Quantity | Value |
|---|---|---|
| A | Initial attack value | 4 |
| percent | Sailfish ratio | 0.2 |
| Pop | Population size | 800 |
| T | Maximum number of iterations | 1000 |

| Ref. | Method | N | Optimum |
|---|---|---|---|
| [24] | OCT | - | 0.761594156 |
| [25] | ACO-CP | - | 0.76238 |
| [26] | IACO-CVP | - | 0.76160 |
| [33] | IGA-CVP | - | 0.761595 |
| [34] | IWO-CVP | 50 | 0.76159793 |
| [34] | ADIWO-CVP | 50 | 0.76159417 |
| This work | MSFO | 20 | 0.76165319 |
| This work | MSFO | 50 | 0.761594199 |

| Ref. | Method | N | Optimum |
|---|---|---|---|
| [9] | MOARA | - | 5.54 × 10⁻² |
| [10] | PSO-CVP | - | 0.6105359 |
| [34] | IWO-CVP | - | 0.61079180 |
| [35] | IACA | 10 | 0.6100 |
| [35] | IACA | 20 | 0.6104 |
| [36] | GA | - | 0.61072 |
| [36] | IKEA | 10 | 0.6101 |
| [36] | IKEA | 20 | 0.610426 |
| [36] | IKEA | 100 | 0.610781–0.610789 |
| [37] | CP-PSO | - | 0.6107847 |
| [37] | CP-APSO | - | 0.6107850 |
| [38] | IKBCA | 10 | 0.6101 |
| [38] | IKBCA | 20 | 0.610454 |
| [38] | IKBCA | 100 | 0.610779–0.610787 |
| [39] | ISOA | 10 | 0.6101 |
| [39] | ISOA | 25 | 0.61053 |
| [39] | ISOA | 50 | 0.6107724 |
| This work | MSFO | 10 | 0.610118 |
| This work | MSFO | 25 | 0.610537 |
| This work | MSFO | 50 | 0.610771–0.610785 |

| Ref. | Method | N | Optimum |
|---|---|---|---|
| [28] | MCB | - | 0.57353 |
| [37] | CP-PSO | - | 0.573543 |
| [37] | CP-APSO | - | 0.573544 |
| [39] | ISOA | 10 | 0.572226 |
| [39] | ISOA | 35 | 0.57348 |
| [40] | CVP | - | 0.56910 |
| [40] | CVI | - | 0.57322 |
| [41] | CPT | - | 0.57353 |
| This work | MSFO | 10 | 0.572143 |
| This work | MSFO | 40 | 0.573212–0.574831 |

| Ref. | Method | N | Optimum |
|---|---|---|---|
| [36] | IKEA | 20 | 0.4757 |
| [36] | IKEA | 100 | 0.47761–0.47768 |
| [39] | ISOA | 40 | 0.47721 |
| [42] | STA | 5 | 0.47260 |
| [42] | STA | 10 | 0.47363 |
| [42] | STA | 15 | 0.47453 |
| [42] | GA | 5 | 0.47260 |
| [42] | GA | 10 | 0.47363 |
| [42] | GA | 15 | 0.47453 |
| [43] | TDE | 20 | 0.47527 |
| [43] | TDE | 40 | 0.47683 |
| [44] | NDCVP-HGPSO | 15 | 0.47771 |
| This work | MSFO | 20 | 0.47562 |
| This work | MSFO | 70 | 0.477544–0.47760 |

| Ref. | Method | N | Optimum |
|---|---|---|---|
| [45] | IPSO | 20 | 0.677219 |
| [46] | SVTN | 20 | 0.677389 |
| [47] | Iterative dynamic programming (IDP) | 10 | 0.67531 |
| [48] | Combination mode method (CMM) | 10 | 0.7226 |
| [29] | Conjugate gradient method (CGM) | 10 | 0.7227 |
| [49] | Nonuniform control vector parameterization (NU-CVP) | 20 | 0.77298 |
| [50] | S-CVP | 20 | 0.7234708 |
| [50] | PWV-CVP | 20 | 0.7234708 |
| This work | MSFO | 10 | 0.7226987 |
| This work | MSFO | 20 | 0.7234724 |

| Ref. | Method | N | Optimum |
|---|---|---|---|
| [8] | VSACS | 10 | 32.18175–32.18246 |
| [8] | VSACS | 20 | 32.45614–32.45629 |
| [8] | VSACS | 100 | 32.81001–32.81224 |
| [34] | PADIWO-CVP | - | 32.68649 |
| [34] | GADIWO-CVP | - | 32.68720 |
| [36] | IKEA | 20 | 32.4225–32.6783 |
| [38] | IKBCA | 20 | 32.4556–32.4561 |
| [38] | IKBCA | 150 | 32.7114–32.7180 |
| [43] | TDE | - | 32.684494 |
| [44] | NDCVP-HGPSO | - | 32.68712 |
| [51] | FIDP | - | 32.47 |
| [52] | DCM-PSO | 10 | 32.68851327 |
| [52] | DCM-PSO | 20 | 32.68851335 |
| This work | MSFO | 10 | 32.18024–32.18197 |
| This work | MSFO | 20 | 32.45332–32.45446 |
| This work | MSFO | 100 | 32.69236–32.71112 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Mo, Y. Dynamic Optimization of Chemical Processes Based on Modified Sailfish Optimizer Combined with an Equal Division Method. Processes 2021, 9, 1806. https://doi.org/10.3390/pr9101806
