Enhancing Melanoma Diagnosis with Advanced Deep Learning Models Focusing on Vision Transformer, Swin Transformer, and ConvNeXt

Abstract

1. Introduction

2. The Evolution of Deep Learning in Artificial Intelligence and Computer Vision

3. Publicly Available Dermoscopic Image Datasets

4. Material and Method

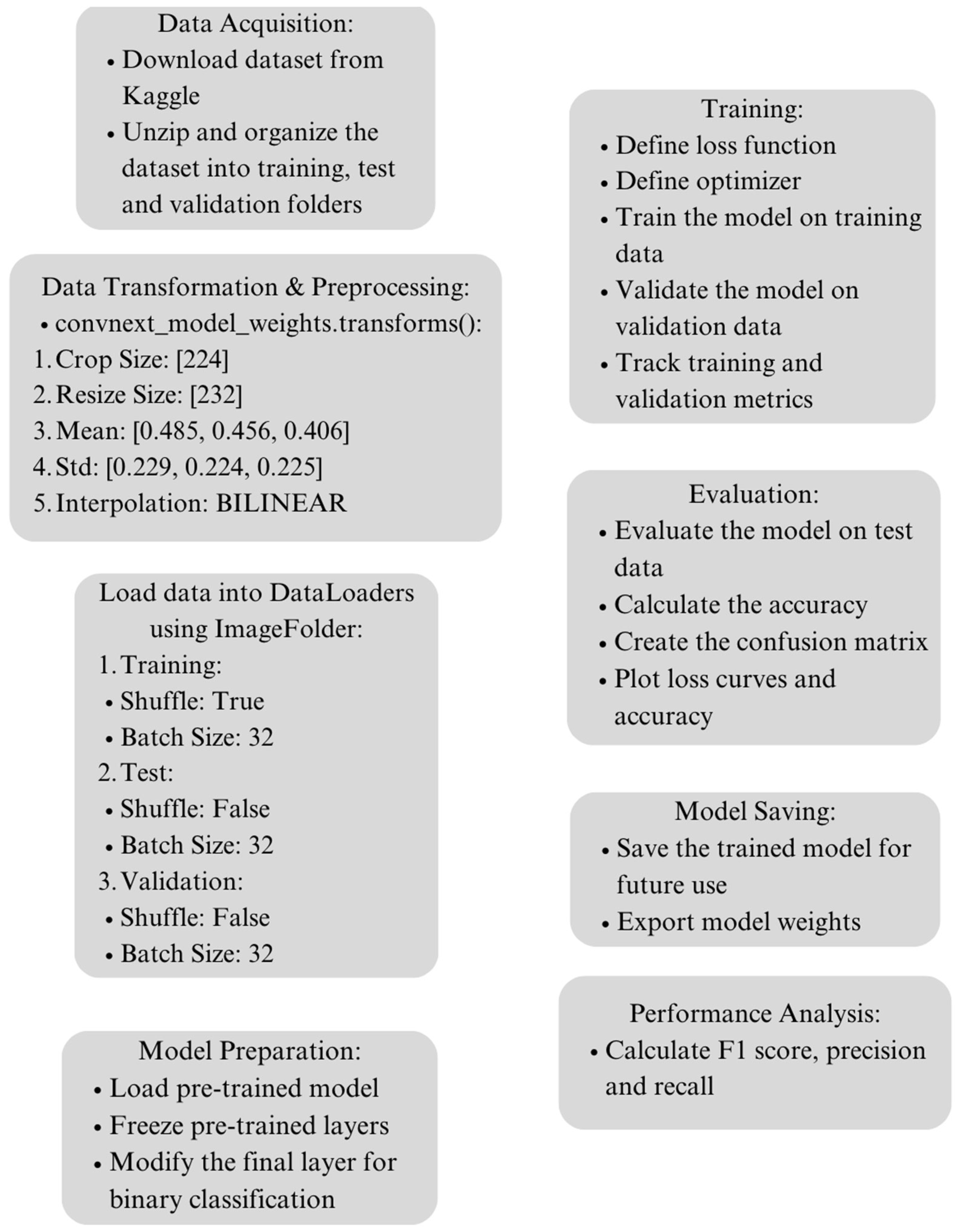

4.1. Dataset Acquisition and Preprocessing

4.2. Data Transformation

4.3. Proposed Deep Learning Model Architecture

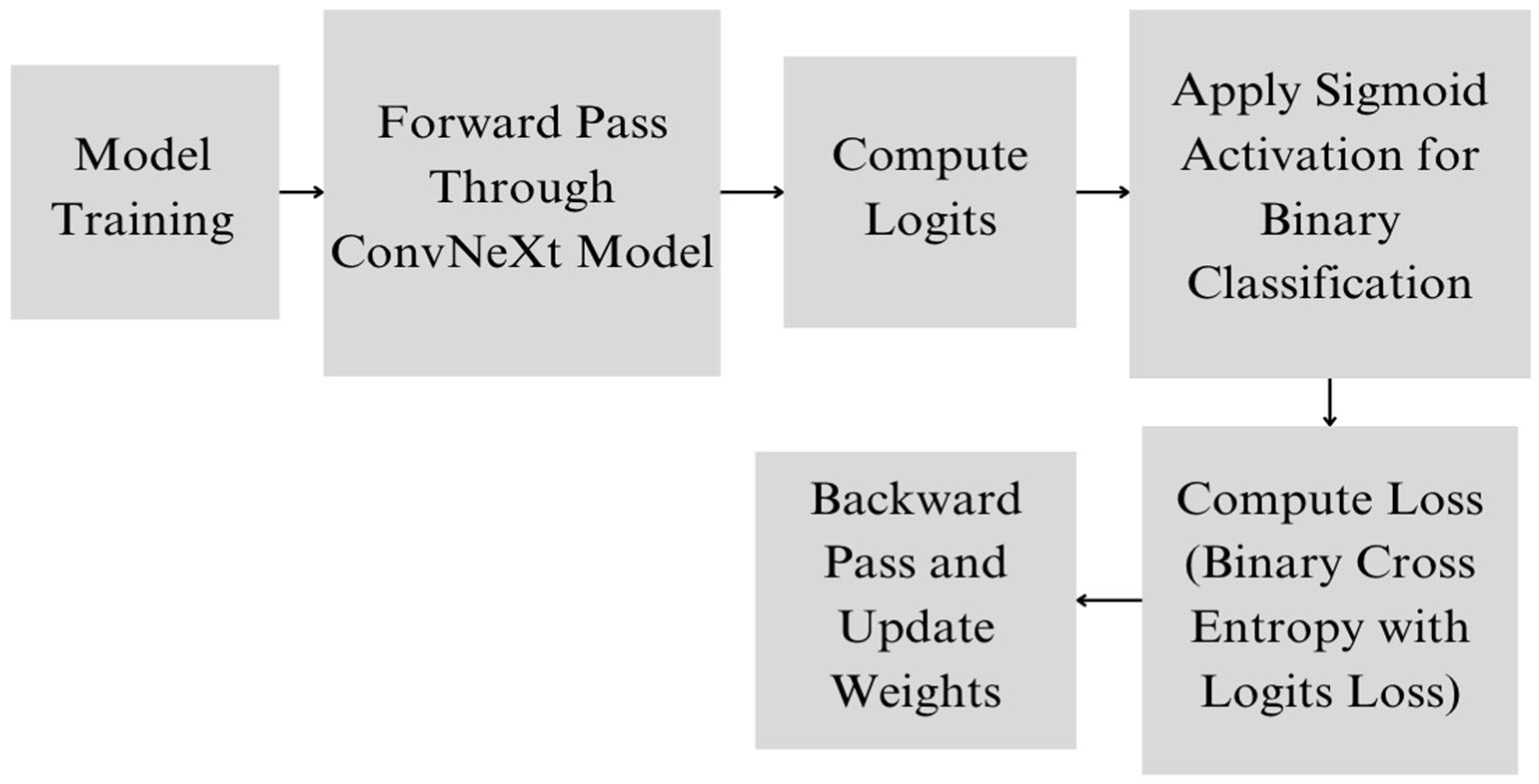

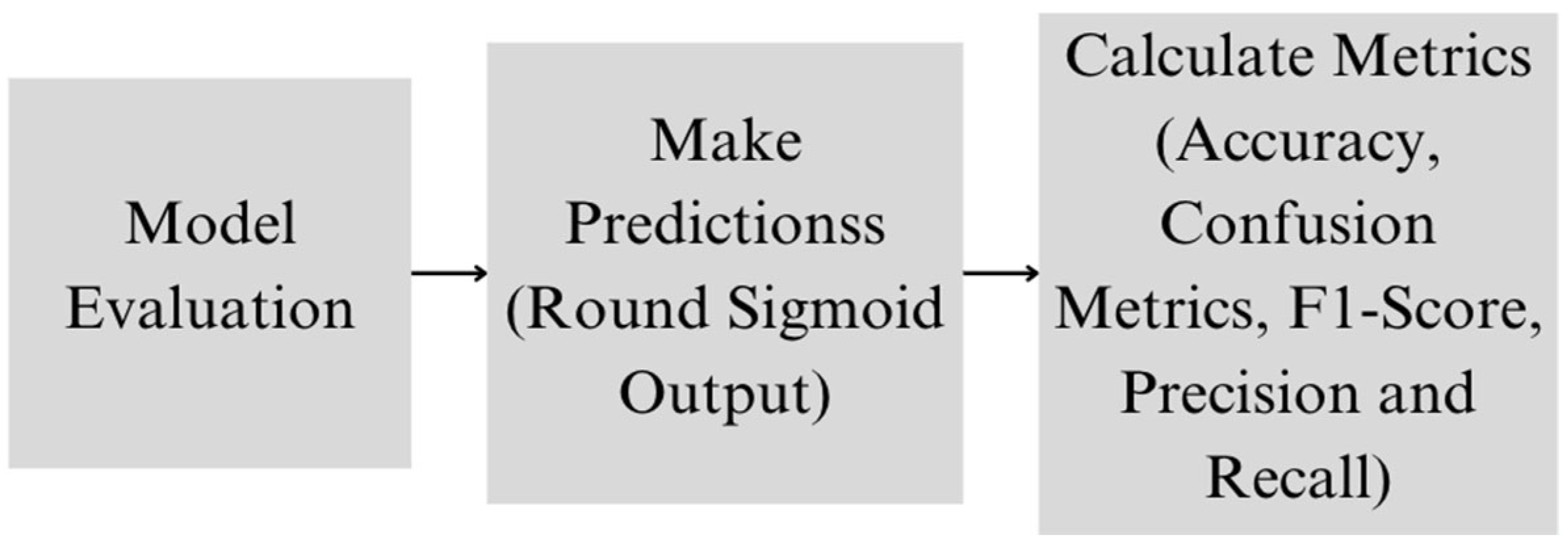

4.4. Proposed Deep Learning Model

4.5. Experimental Setup

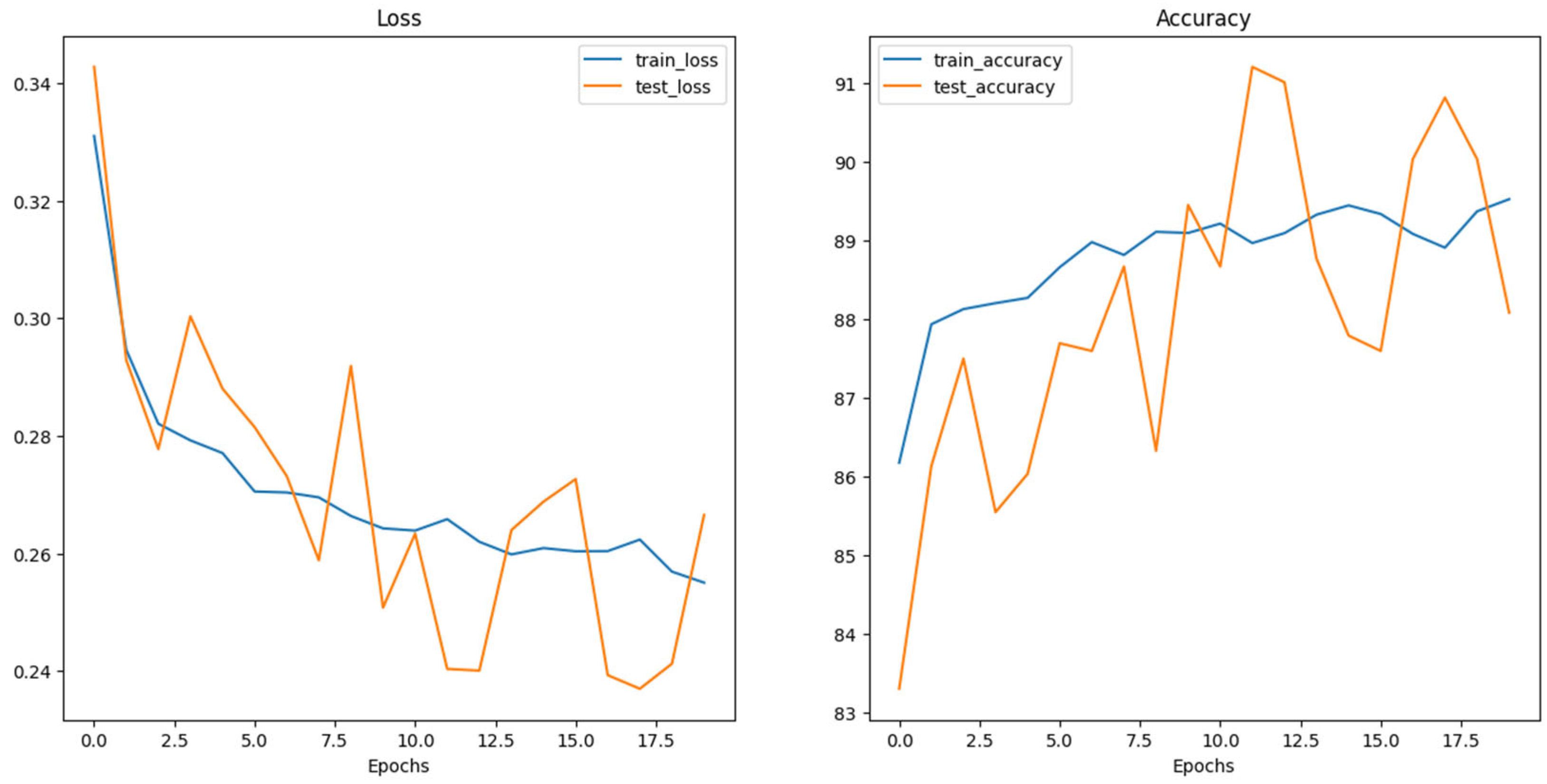

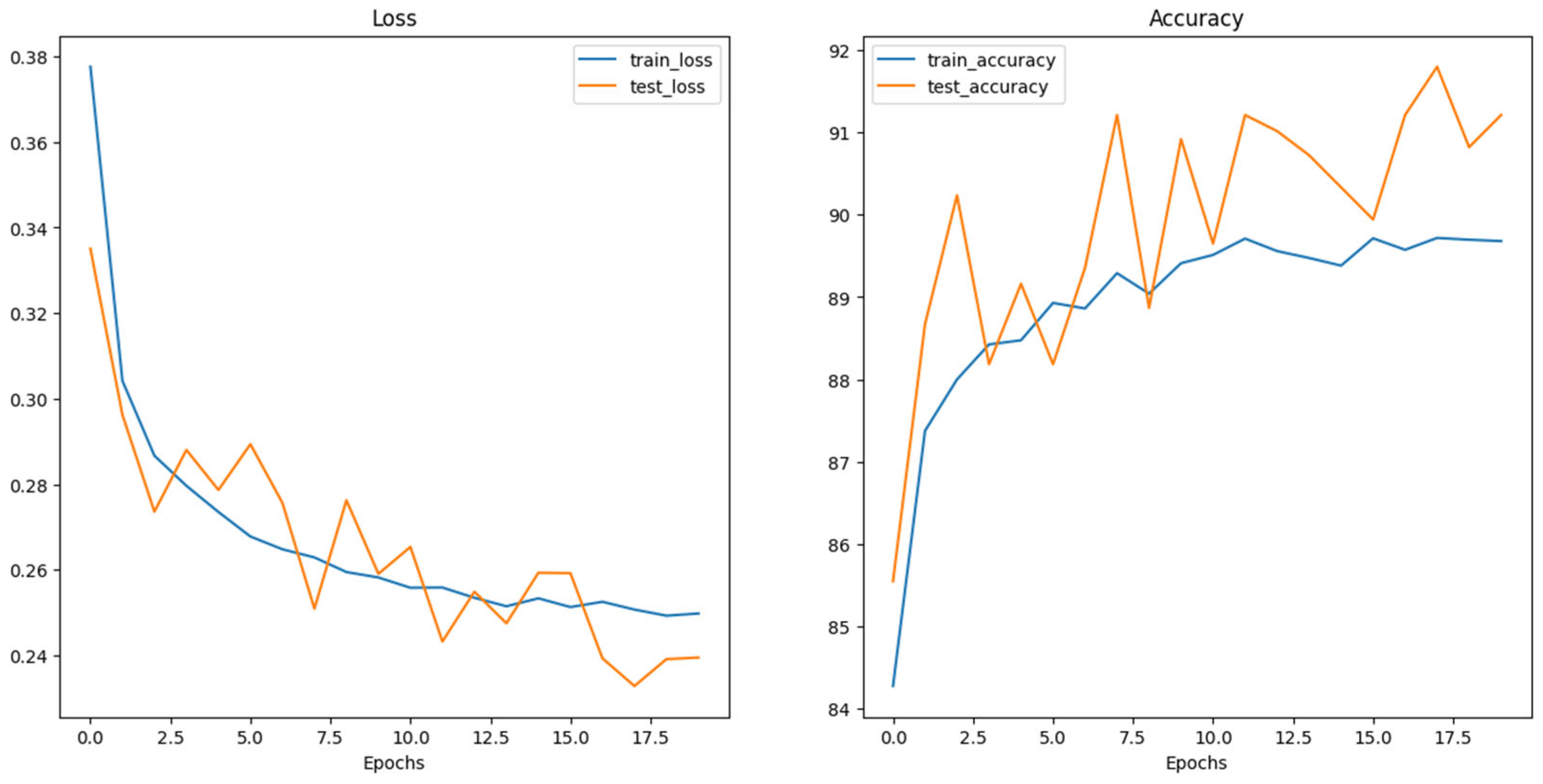

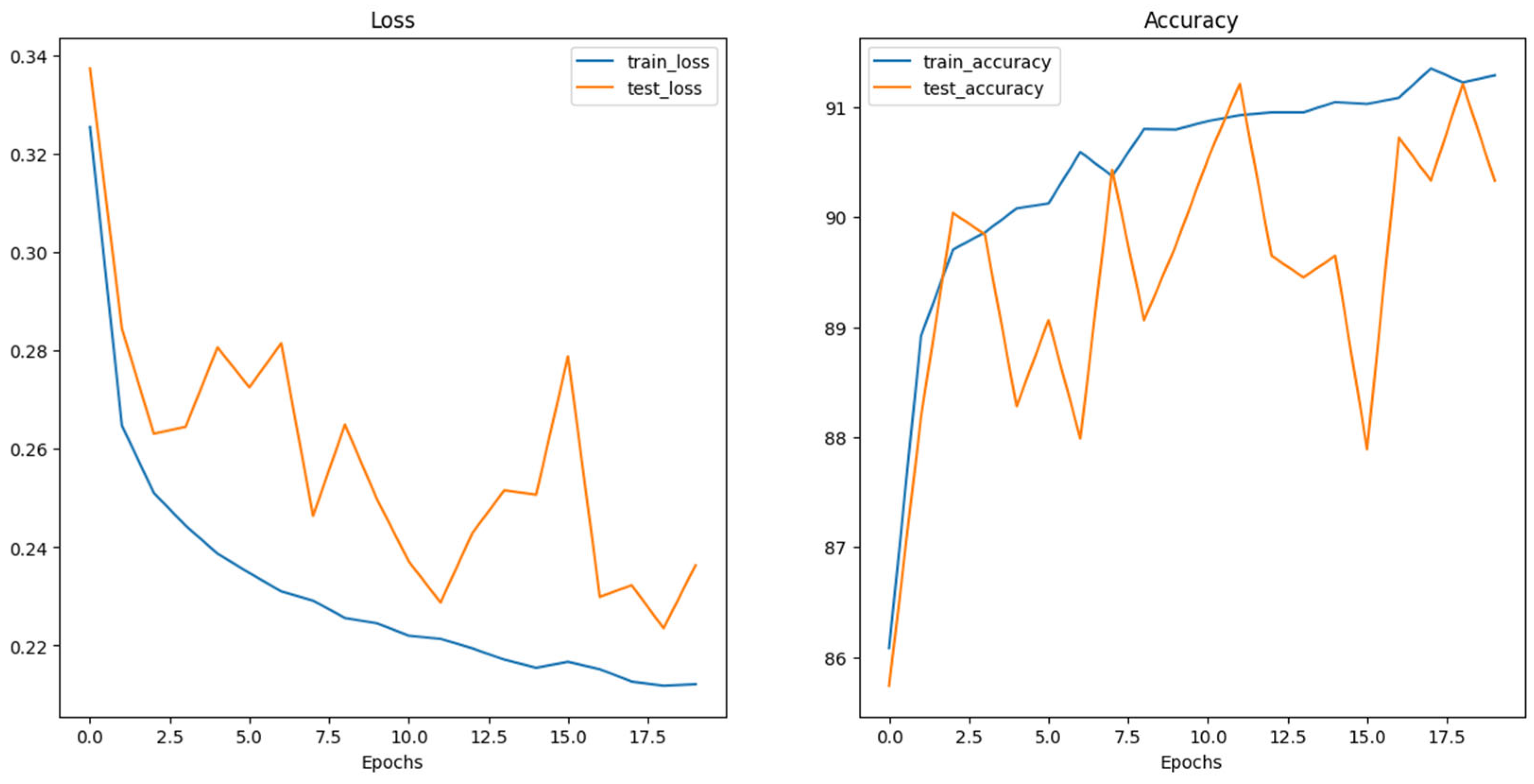

5. Results and Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Radiation: Ultraviolet (UV) Radiation and Skin Cancer. Available online: https://www.who.int/news-room/questions-and-answers/item/radiation-ultraviolet-(uv)-radiation-and-skin-cancer (accessed on 12 April 2024).

- Lubis, A.R.; Prayudani, S.; Fatmi, Y.; Lase, Y.Y.; Al-Khowarizmi. In Image Classification of Skin Cancer Sufferers: Modification of K-Nearest Neighbor with Histogram of Oriented Gradients Approach. In Proceedings of the 2022 1st International Conference on Information System & Information Technology (ICISIT), Yogyakarta, Indonesia, 27–28 July 2022; pp. 85–89. [Google Scholar]

- Likhitha, S.; Baskar, R. Skin Cancer Classification Using CNN in Comparison with Support Vector Machine for Better Accuracy. In Proceedings of the 2022 11th International Conference on System Modeling & Advancement in Research Trends (SMART), Moradabad, India, 16–17 December 2022; pp. 1298–1302. [Google Scholar]

- Jusman, Y.; Firdiantika, I.M.; Dharmawan, D.A.; Purwanto, K. Performance of Multi Layer Perceptron and Deep Neural Networks in Skin Cancer Classification. In Proceedings of the 2021 IEEE 3rd Global Conference on Life Sciences and Technologies (LifeTech), Nara, Japan, 9–11 March 2021; pp. 534–538. [Google Scholar]

- Kondaveeti, H.K.; Edupuganti, P. Skin Cancer Classification Using Transfer Learning. In Proceedings of the 2020 IEEE International Conference on Advent Trends in Multidisciplinary Research and Innovation (ICATMRI), Buldana, India, 30 December 2020; pp. 1–4. [Google Scholar]

- Carvajal, D.C.; Delgado, B.M.; Ibarra, D.G.; Ariza, L.C. Skin Cancer Classification in Dermatological Images Based on a Dense Hybrid Algorithm. In Proceedings of the 2022 IEEE XXIX International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Lima, Peru, 11–13 August 2022; pp. 1–4. [Google Scholar]

- Muniteja, M.; Bee, M.K.M.; Suresh, V. Detection and Classification of Melanoma Image of Skin Cancer Based on Convolutional Neural Network and Comparison with Coactive Neuro Fuzzy Inference System. In Proceedings of the 2022 International Conference on Cyber Resilience (ICCR), Dubai, United Arab Emirates, 6–7 October 2022; pp. 1–5. [Google Scholar]

- Likhitha, S.; Baskar, R. Skin Cancer Segmentation Using R-CNN Comparing with Inception V3 for Better Accuracy. In Proceedings of the 2022 11th International Conference on System Modeling & Advancement in Research Trends (SMART), Moradabad, India, 16–17 December 2022; pp. 1293–1297. [Google Scholar]

- Razzak, I.; Naz, S. Unit-Vise: Deep Shallow Unit-Vise Residual Neural Networks with Transition Layer for Expert Level Skin Cancer Classification. IEEE/ACM Trans. Comput. Biol. Bioinf. 2022, 19, 1225–1234. [Google Scholar] [CrossRef] [PubMed]

- Caffe. Available online: https://github.com/BVLC/caffe (accessed on 15 April 2024).

- TensorFlow. Available online: https://github.com/tensorflow (accessed on 22 April 2024).

- Theano. Available online: https://github.com/Theano/Theano (accessed on 26 April 2024).

- Torch. Available online: https://github.com/torch (accessed on 28 April 2024).

- Lasagne. Available online: https://github.com/Lasagne/Lasagne (accessed on 29 April 2024).

- Keras. Available online: https://github.com/keras-team (accessed on 1 May 2024).

- Baykal Kablan, E.; Ayas, S. Skin lesion classification from dermoscopy images using ensemble learning of ConvNeXt models. Signal Image Video Process. 2024, 18, 6353–6361. [Google Scholar] [CrossRef]

- Saha, D.K.; Joy, A.M.; Majumder, A. YoTransViT: A Transformer and CNN Method for Predicting and Classifying Skin Diseases Using Segmentation Techniques. Inform. Med. Unlocked 2024, 47, 101495. [Google Scholar] [CrossRef]

- Que, S.K.T.; Fraga-Braghiroli, N.; Grant-Kels, J.M.; Rabinovitz, H.S.; Oliviero, M.; Scope, A. Through the Looking Glass: Basics and Principles of Reflectance Confocal Microscopy. J. Am. Acad. Dermatol. 2015, 73, 276–284. [Google Scholar] [CrossRef] [PubMed]

- ISIC International Skin Imaging Collaboration. Available online: https://www.isic-archive.com/ (accessed on 5 May 2024).

- Que, S.K.T. Research Techniques Made Simple: Noninvasive Imaging Technologies for the Delineation of Basal Cell Carcinomas. J. Investig. Dermatol. 2016, 136, e33–e38. [Google Scholar] [CrossRef] [PubMed]

- Alawi, S.A.; Kuck, M.; Wahrlich, C.; Batz, S.; McKenzie, G.; Fluhr, J.W.; Lademann, J.; Ulrich, M. Optical Coherence Tomography for Presurgical Margin Assessment of Non-melanoma Skin Cancer–a Practical Approach. Exp. Dermatol. 2013, 22, 547–551. [Google Scholar] [CrossRef] [PubMed]

- PH2 Dataset Pedro Hispano Clinic. Available online: http://www.fc.up.pt/addi/ph2%20database.html (accessed on 12 May 2024).

- Wurm, E.M.T.; Campbell, T.M.; Soyer, H.P. Teledermatology: How to Start a New Teaching and Diagnostic Era in Medicine. Dermatol. Clin. 2008, 26, 295–300. [Google Scholar] [CrossRef] [PubMed]

- MEDNODE Dataset University Medical Center Groningen. Available online: https://www.cs.rug.nl/~imaging/databases/melanoma_naevi/index.html (accessed on 18 May 2024).

- Gronbeck, C.; Grant-Kels, J.M.; Fox, C.; Feng, H. Trends in Utilization of Reflectance Confocal Microscopy in the United States, 2017–2019. J. Am. Acad. Dermatol. 2022, 86, 1395–1398. [Google Scholar] [CrossRef] [PubMed]

- DermIS Dataset. Available online: https://www.dermis.net/ (accessed on 20 May 2024).

- DermQuest Dataset. Available online: https://www.dermquest.com/ (accessed on 24 May 2024).

- HAM10000. Available online: https://www.kaggle.com/datasets/kmader/skin-cancer-mnist-ham10000/ (accessed on 29 May 2024).

- Dermofit Image Library DermNetNZ. Available online: https://www.dermnetnz.org/ (accessed on 31 May 2024).

| Unit | Definition | Function |

|---|---|---|

| Neurons | Basic units of a neural network | Receive input, process it, and pass the output to the next layer. |

| Layers | Groups of neurons |

|

| Weights | Parameters connecting neurons between layers | Adjusted during training to minimize prediction error; determine the influence of one neuron on another. |

| Biases | Additional parameters in neurons | Allow activation of neurons to be shifted, aiding in better fitting of the model. |

| Activation Functions | Functions applied to neuron output |

|

| Forward Propagation | Process of passing data through the network | Generates predictions based on current network state. |

| Loss Function | Measures prediction accuracy |

|

| Back-propagation | Algorithm for training neural networks | Updates weights and biases to minimize the loss function. |

| Learning Rate | Hyperparameter controlling adjustment of weights and biases | Ensures stable convergence of the network. |

| Epochs and Batches |

|

|

| Dataset Name | Description | Number of Images | Refs |

|---|---|---|---|

| ISIC Dataset | A collection of clinical images displaying various skin conditions, including melanoma and nevi, essential for training AI models for classification tasks. | 1279–44,108 | [19] |

| HAM10000 Dataset | Contains 10,015 high-quality images for training and validating AI models, offering a diverse range of skin problems to fine-tune classification tasks. | 10,015 | [28] |

| PH2 Dataset | Annotated dataset with 200 dermoscopic images (40 melanoma and 160 non-melanoma) from the Pedro Hispano Clinic in Portugal, aiding in melanoma analysis. | 200 | [22] |

| MEDNODE Dataset | Consists of 170 images, focusing on melanoma and nevi, contributing to the study of skin cancer diagnosis. | 170 | [24] |

| DermIS Dataset | Largest online resource for skin cancer diagnosis, featuring 146 melanoma images, serving as a valuable reference for researchers and practitioners. | 146 | [26] |

| DermQuest Dataset | A compilation of 22,000 clinical images, reviewed by an international editorial board, providing a vast resource for studying various skin conditions. | 22,000 | [27] |

| Dermofit Image Library | Includes 1300 high-quality images showcasing ten different types of skin lesions, aiding in the development and refinement of algorithms for melanoma diagnosis. | 1300 | [29] |

| Class | Total | Training | Testing | Validation |

|---|---|---|---|---|

| Malignant | 6590 | 5590 | 500 | 500 |

| Benign | 7289 | 6289 | 500 | 500 |

| Total | 13,879 | 11,879 | 1000 | 1000 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |

|---|---|---|---|---|

| Swin_v2_s | 91 | 96.59 (B)/86.61 (M) | 85 (B)/97 (M) | 90.43 (B)/91.51 (M) |

| ConvNeXt_Base | 91.5 | 90.45 (B)/92.61 (M) | 92.8 (B)/90.2 (M) | 91.61 (B)/91.39 (M) |

| ViT_Base_16 | 92 | 89.62 (B)/94.68 (M) | 95 (B)/89 (M) | 92.23 (B)/91.75 (M) |

| Model | TP (B) | TN (M) | FN | FP |

|---|---|---|---|---|

| Swin_v2_s | 425 | 485 | 75 | 15 |

| ConvNeXt_Base | 464 | 451 | 36 | 49 |

| ViT_Base_16 | 475 | 445 | 25 | 55 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Aksoy, S.; Demircioglu, P.; Bogrekci, I. Enhancing Melanoma Diagnosis with Advanced Deep Learning Models Focusing on Vision Transformer, Swin Transformer, and ConvNeXt. Dermatopathology 2024, 11, 239-252. https://doi.org/10.3390/dermatopathology11030026

Aksoy S, Demircioglu P, Bogrekci I. Enhancing Melanoma Diagnosis with Advanced Deep Learning Models Focusing on Vision Transformer, Swin Transformer, and ConvNeXt. Dermatopathology. 2024; 11(3):239-252. https://doi.org/10.3390/dermatopathology11030026

Chicago/Turabian StyleAksoy, Serra, Pinar Demircioglu, and Ismail Bogrekci. 2024. "Enhancing Melanoma Diagnosis with Advanced Deep Learning Models Focusing on Vision Transformer, Swin Transformer, and ConvNeXt" Dermatopathology 11, no. 3: 239-252. https://doi.org/10.3390/dermatopathology11030026

APA StyleAksoy, S., Demircioglu, P., & Bogrekci, I. (2024). Enhancing Melanoma Diagnosis with Advanced Deep Learning Models Focusing on Vision Transformer, Swin Transformer, and ConvNeXt. Dermatopathology, 11(3), 239-252. https://doi.org/10.3390/dermatopathology11030026