Turbulence Aberration Restoration Based on Light Intensity Image Using GoogLeNet

Abstract

1. Introduction

2. Related Principle and Method

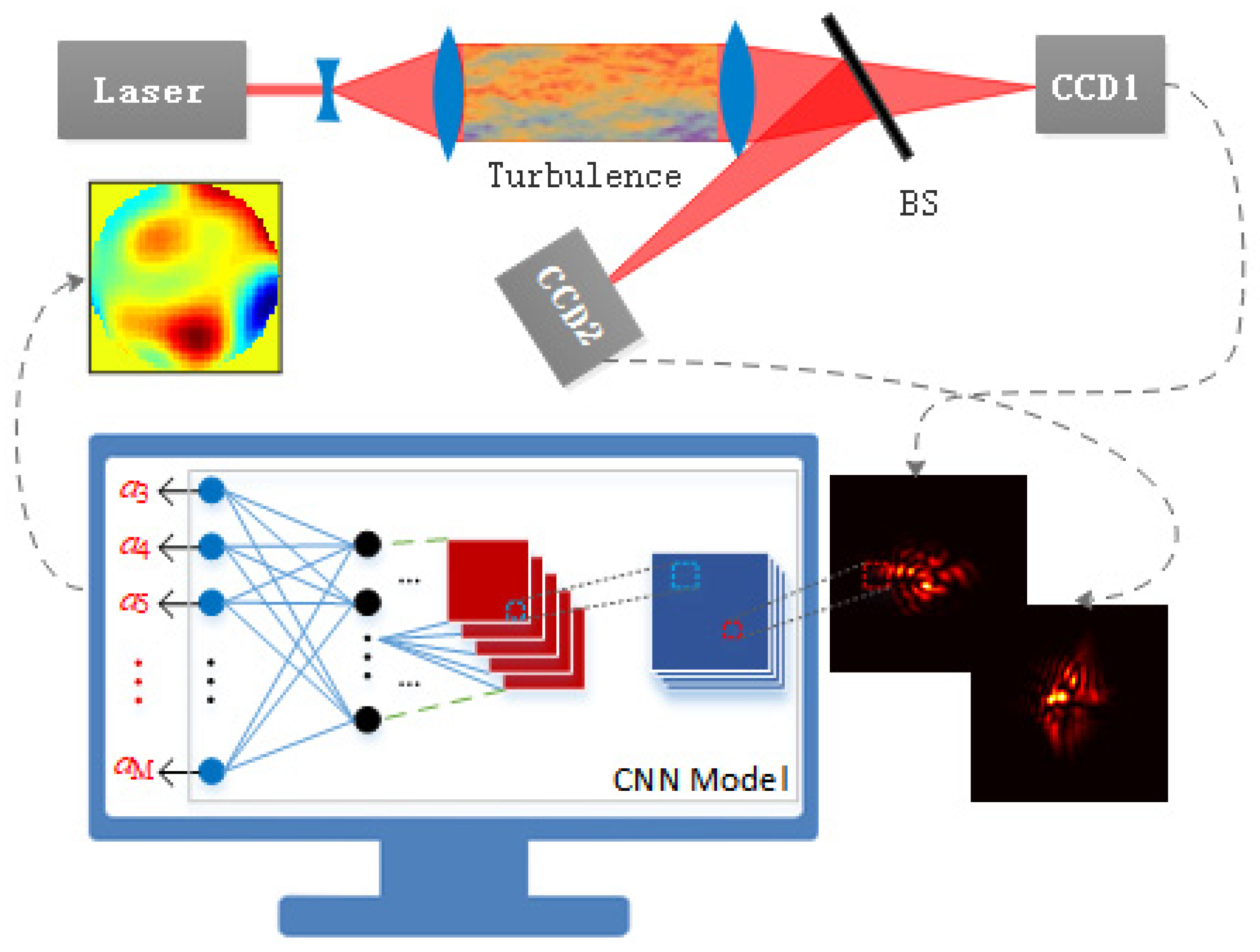

2.1. Turbulence Aberration Restoration Model Based on CNN

2.2. Structure of Convolutional Neural Network

2.3. Data Preparation

2.4. The Training Method

3. Experiment Results and Analysis

3.1. Loss Value Results of GoogLeNet Training and Testing

3.2. Some Samples of Turbulence Aberration Restoration

3.3. Results of Turbulence Aberration Restoration for Different Cases of D/r0

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Turbulence Intensity | Interval | Data Volume for Each Interval (Set) | Total Data Volume (Set) |
|---|---|---|---|
| From 1 to 15 | 1 | 100 | 1500 |
| From 1 to 15 | 1 | 1000 | 15,000 |
| From 1 to 15 | 1 | 10,000 | 150,000 |
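The totals in the table follow from multiplying the number of turbulence-intensity levels by the data volume per level. The following is a minimal sketch of that arithmetic, assuming the levels are the integers 1 through 15 inclusive (interval 1); the helper name `total_sets` is hypothetical and does not come from the paper.

```python
def total_sets(start: int, stop: int, step: int, sets_per_level: int) -> int:
    """Total number of data sets when every level in [start, stop] gets the same count."""
    # Count the levels in the closed range [start, stop] with the given spacing.
    levels = len(range(start, stop + 1, step))
    return levels * sets_per_level


# Assumed levels: 1..15 in steps of 1, with the three per-level volumes from the table.
totals = {n: total_sets(1, 15, 1, n) for n in (100, 1000, 10000)}
print(totals)
```

With 15 levels, the three per-level volumes give 1500, 15,000 and 150,000 sets respectively.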

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, H.; Zhang, W.; Ning, X.; Liu, H.; Zhang, P.; Zhang, J. Turbulence Aberration Restoration Based on Light Intensity Image Using GoogLeNet. Photonics 2023, 10, 265. https://doi.org/10.3390/photonics10030265
