AR-HUD Optical System Design and Its Multiple Configurations Analysis

Abstract

1. Introduction

2. Optical Design

2.1. Design Considerations and System Parameters

2.2. Optical Layout and Design Methods

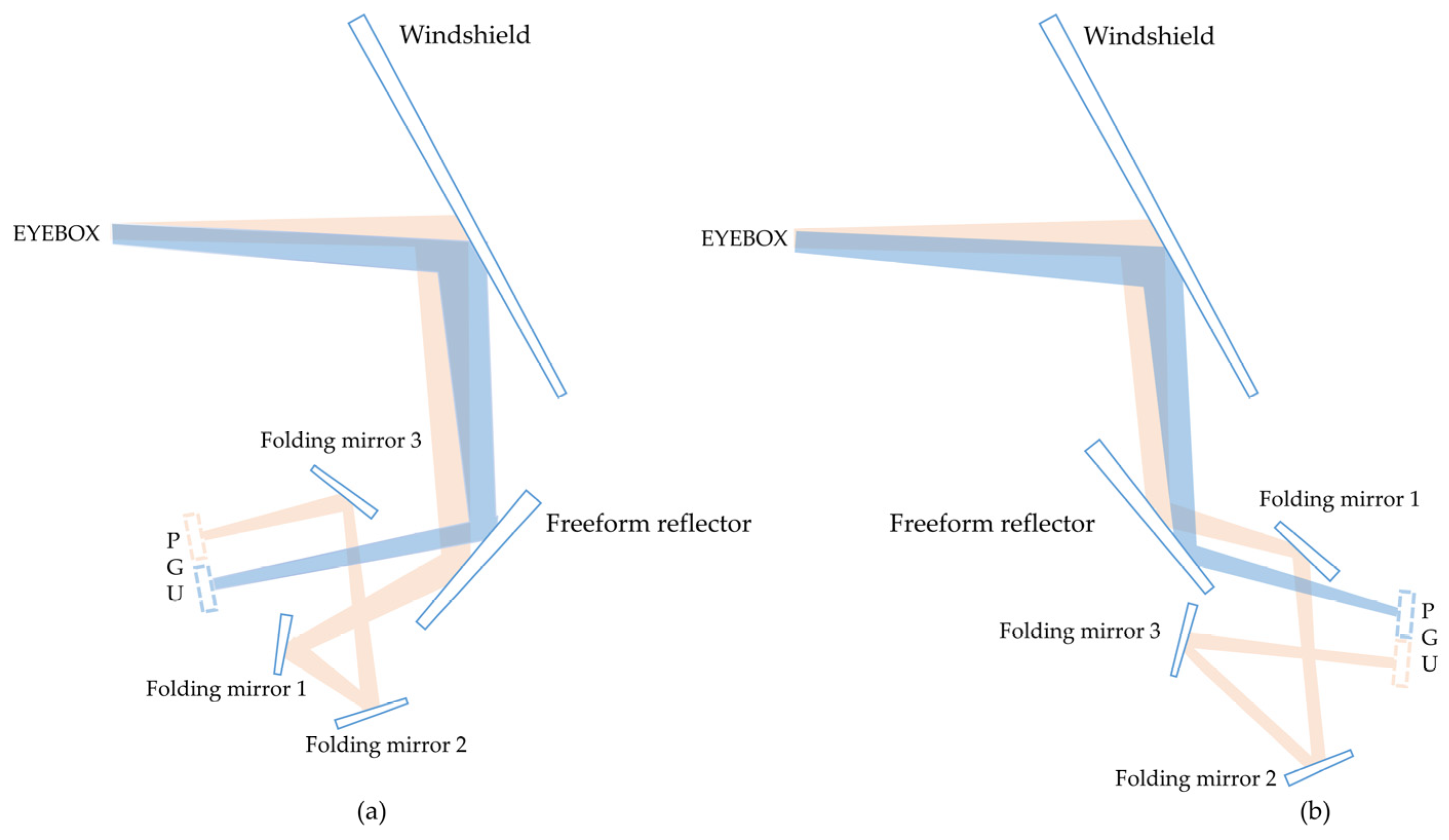

2.2.1. Two Possible Optical Layouts

2.2.2. Design Method

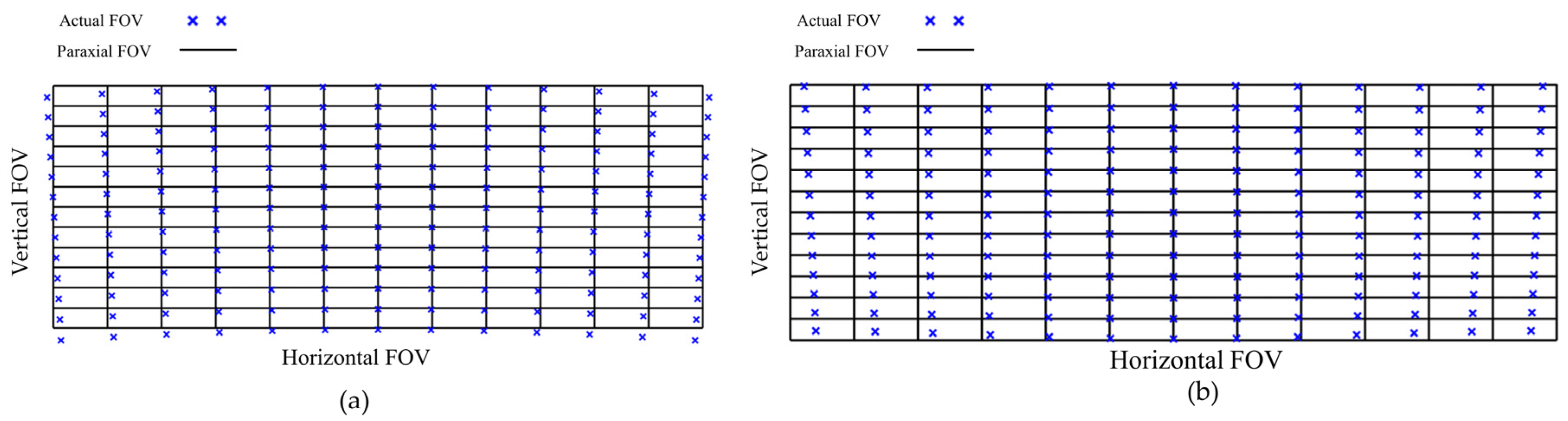

2.2.3. Design Results and Analysis

3. Multi-Structure Design Analysis

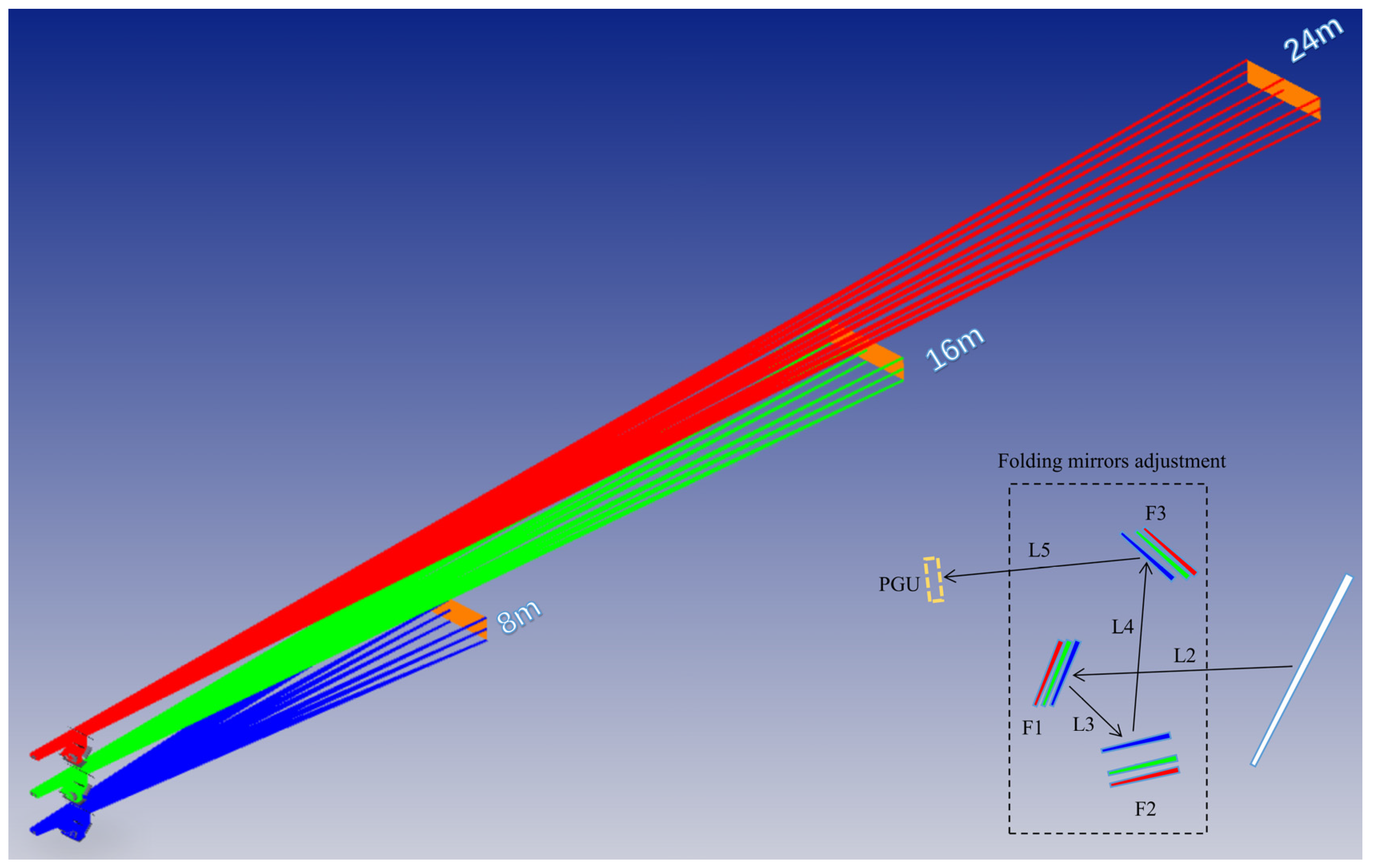

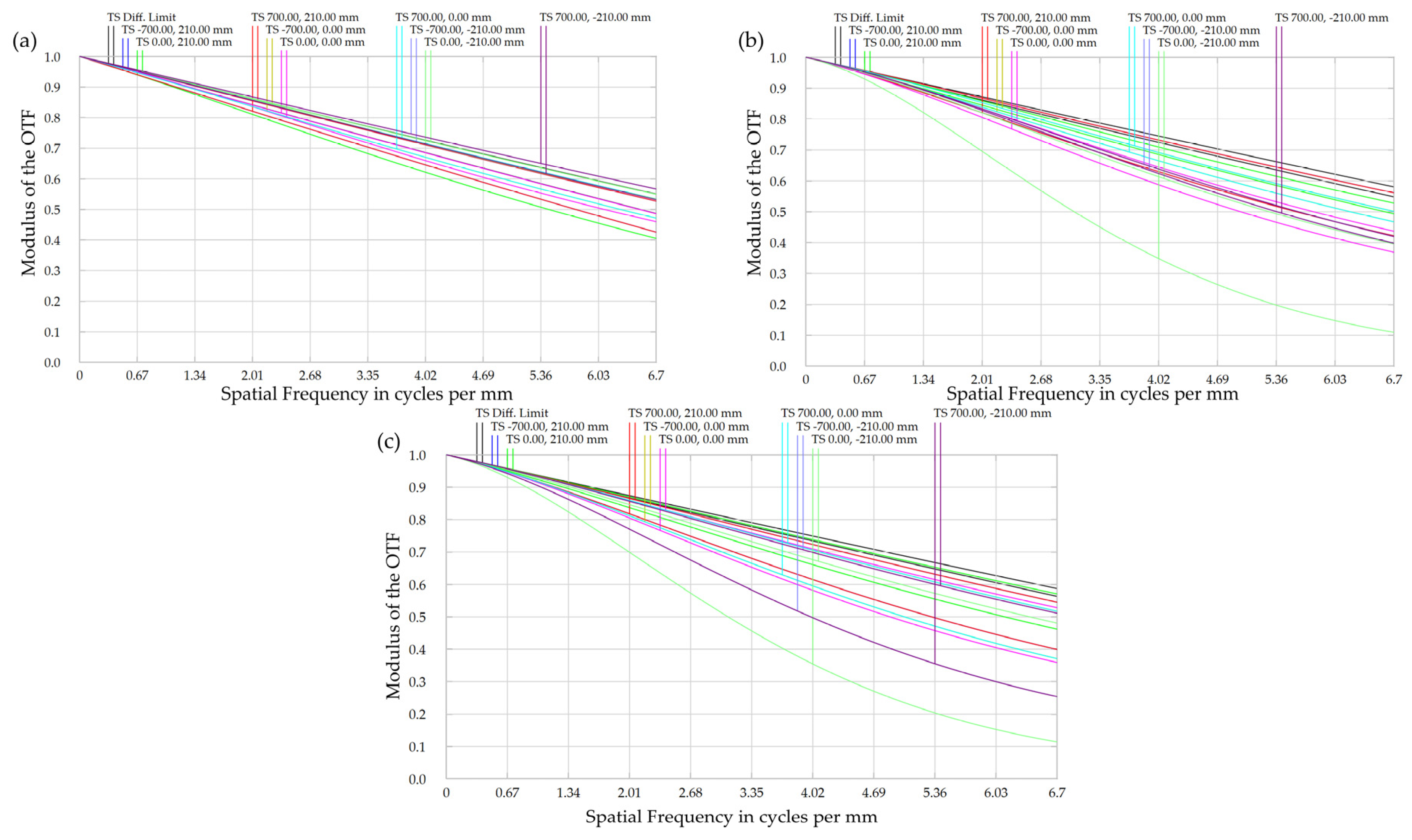

3.1. Multi-Structure Analysis of Far-Field Image

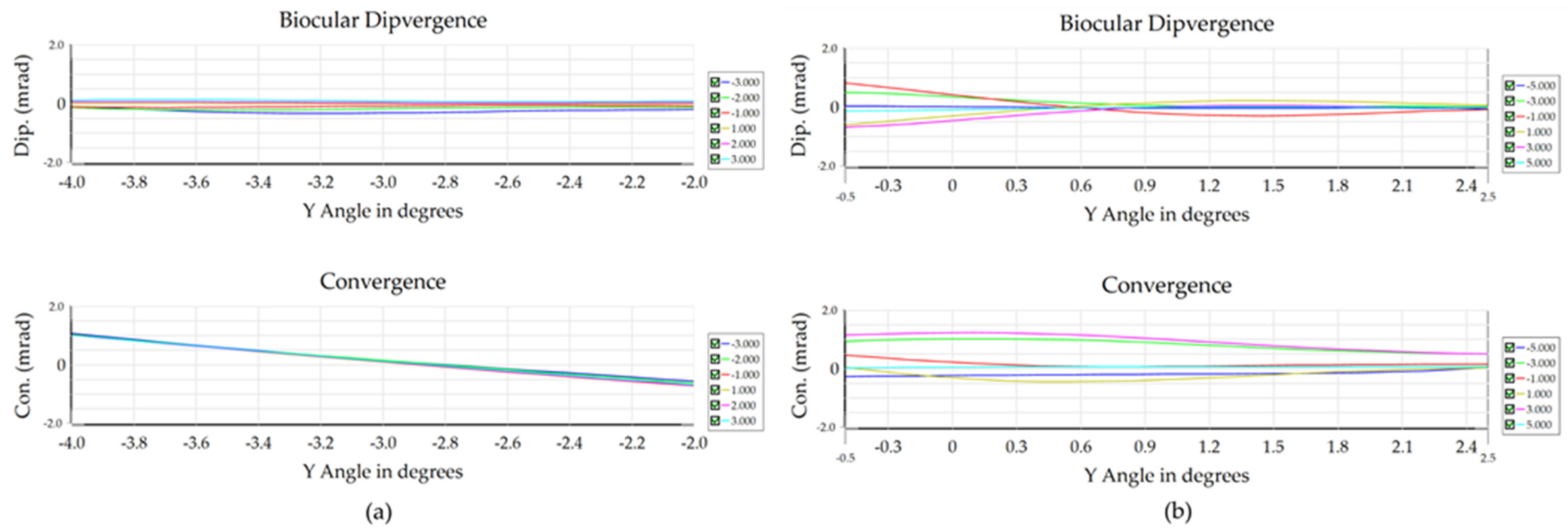

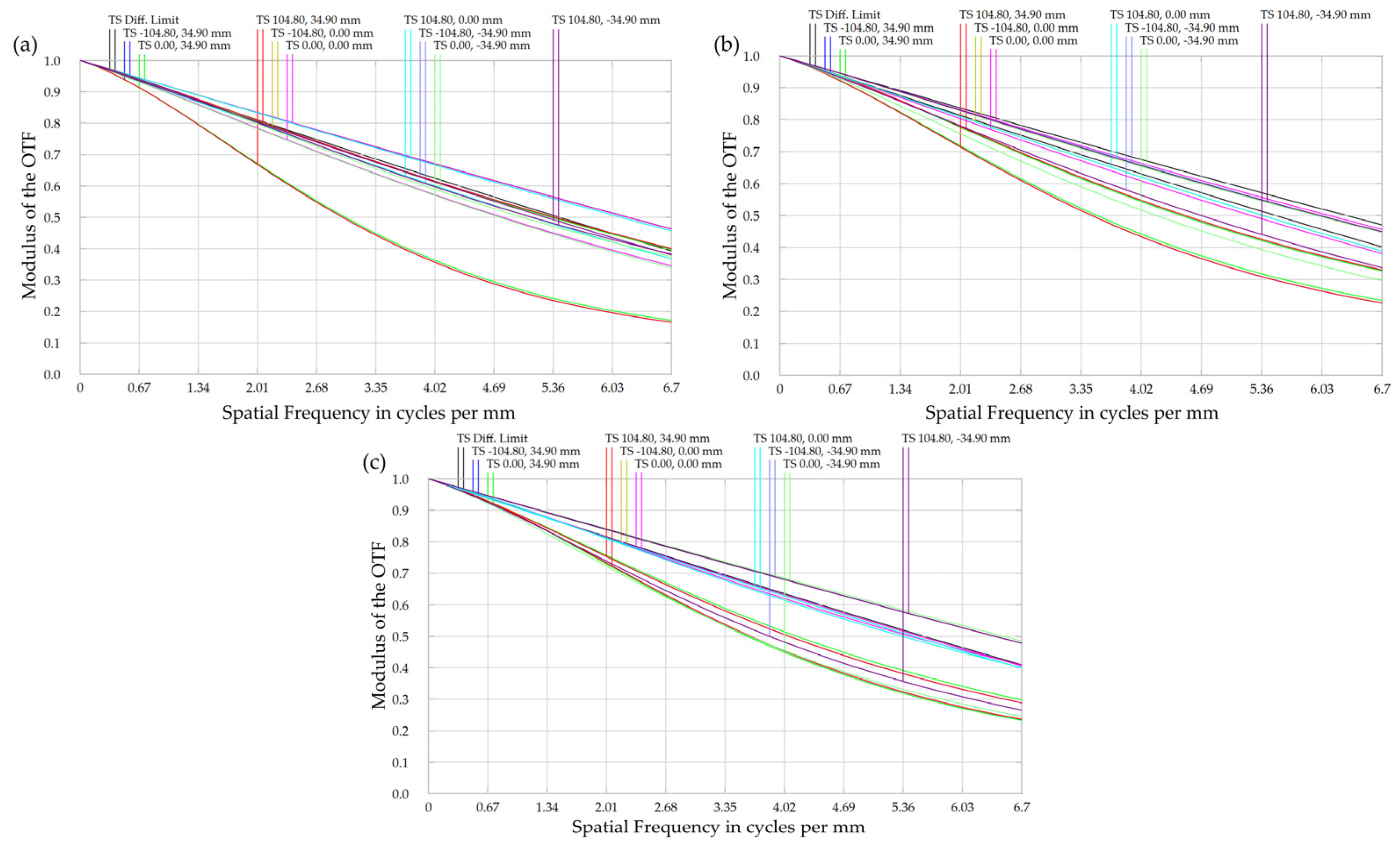

3.2. Multi-Structure Analysis of the EYEBOX

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Near-Field Image | Far-Field Image |
|---|---|---|
| VID (virtual image distance) | 2 m | 8 m |
| Horizontal FOV | −3° to 3° (6°) | −5° to 5° (10°) |
| Vertical FOV | −4° to −2° (2°) | −0.5° to 2.5° (3°) |
| Up/down viewing angle | −3° | 1° |
| Windshield radius | 7500 mm (horizontal), 3500 mm (vertical) | |
| EYEBOX | 120 × 60 mm | |
| PGU (picture generation unit) pixel size | 75 μm | |
| Design wavelength | 486–656 nm | |
| MTF value | >0.2 @ 6.7 lp/mm | |
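As a quick consistency check on the parameter table, the Python sketch below converts each image's VID and angular FOV limits into a linear virtual-image size and computes the Nyquist frequency of the 75 μm PGU pixel. It is a sketch under stated assumptions, not the authors' procedure: the FOV angles are assumed to be measured from the eye relative to the horizontal line of sight, and each virtual image is assumed to be a flat plane perpendicular to that line. The helper names `image_extent_mm` and `nyquist_lp_per_mm` are hypothetical.

```python
import math


def image_extent_mm(vid_mm, angle_lo_deg, angle_hi_deg):
    """Linear extent of a flat virtual image at distance vid_mm that spans
    the angular range [angle_lo_deg, angle_hi_deg] seen from the eye.
    Assumption: image plane is perpendicular to the horizontal line of sight."""
    return vid_mm * (math.tan(math.radians(angle_hi_deg))
                     - math.tan(math.radians(angle_lo_deg)))


def nyquist_lp_per_mm(pixel_mm):
    """Nyquist frequency of a pixelated display: one line pair per two pixels."""
    return 1.0 / (2.0 * pixel_mm)


# Values copied from the design-parameter table (VID in mm, FOV limits in degrees)
images = {
    "near": {"vid": 2000.0, "h": (-3.0, 3.0), "v": (-4.0, -2.0)},
    "far": {"vid": 8000.0, "h": (-5.0, 5.0), "v": (-0.5, 2.5)},
}

sizes = {}
for name, p in images.items():
    width = image_extent_mm(p["vid"], *p["h"])
    height = image_extent_mm(p["vid"], *p["v"])
    sizes[name] = (width, height)
    print(f"{name}-field virtual image: {width:.1f} mm x {height:.1f} mm")

f_nyq = nyquist_lp_per_mm(0.075)  # 75 um pixel expressed in mm
print(f"PGU Nyquist frequency: {f_nyq:.2f} lp/mm")
```

Under these assumptions the far-field image is roughly 1.40 m × 0.42 m and the near-field image roughly 0.21 m × 0.07 m. The Nyquist frequency, 1/(2 × 0.075 mm) ≈ 6.67 lp/mm, appears to be the origin of the 6.7 lp/mm frequency used in the MTF specification.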

| Parameter | Structure 1 | Structure 2 | Structure 3 |
|---|---|---|---|
| FOV | 10° × 3° | 5° × 1.5° | 3.33° × 1° |
| Focal length | 813.3 mm | 857.6 mm | 889.2 mm |
| Magnification | 7.9 | 16.9 | 27 |
| Distance L1 | 8000 mm | 16,000 mm | 24,000 mm |
| Distance L2 | 225 mm | 232 mm | 239 mm |
| Distance L3 | 100 mm | 126.319 mm | 138.966 mm |
| Distance L4 | 285 mm | 308.165 mm | 317.244 mm |
| Distance L5 | 244.345 mm | 258.073 mm | 260.165 mm |
| Image center y-coordinate | −245.606 mm | −245.625 mm | −245.632 mm |
| Image center z-coordinate | 550.893 mm | 550.892 mm | 550.889 mm |
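The three structures appear to trade angular FOV against image distance. The sketch below checks how the linear virtual-image size and the image-center position vary across them. It assumes that L1 is the virtual image distance (it equals the 8 m VID for Structure 1) and, for simplicity, treats each FOV as symmetric about the line of sight, which slightly approximates the asymmetric −0.5° to 2.5° vertical FOV of the far-field image. The helper name `symmetric_extent_mm` is hypothetical.

```python
import math

# Rows copied from the multi-structure table:
# (name, horizontal FOV deg, vertical FOV deg, L1 mm, center y mm, center z mm)
structures = [
    ("Structure 1", 10.0, 3.0, 8000.0, -245.606, 550.893),
    ("Structure 2", 5.0, 1.5, 16000.0, -245.625, 550.892),
    ("Structure 3", 3.33, 1.0, 24000.0, -245.632, 550.889),
]


def symmetric_extent_mm(distance_mm, full_fov_deg):
    """Linear size of a flat image at distance_mm subtending full_fov_deg,
    assuming the FOV is symmetric about the line of sight."""
    return 2.0 * distance_mm * math.tan(math.radians(full_fov_deg / 2.0))


widths = []
for name, hfov, vfov, l1, _, _ in structures:
    w = symmetric_extent_mm(l1, hfov)
    h = symmetric_extent_mm(l1, vfov)
    widths.append(w)
    print(f"{name}: {w:.1f} mm x {h:.1f} mm at {l1 / 1000:.0f} m")

# Spread of the image-center coordinates across the three structures
ys = [s[4] for s in structures]
zs = [s[5] for s in structures]
print(f"image-center spread: dy = {max(ys) - min(ys):.3f} mm, "
      f"dz = {max(zs) - min(zs):.3f} mm")
```

Under these assumptions all three structures produce a virtual image about 1.4 m wide and 0.42 m high, so the FOV shrinks in proportion to the increase in L1, while the image-center coordinates drift by only about 0.03 mm in y and 0.004 mm in z.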


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, Q.; Guo, Z. AR-HUD Optical System Design and Its Multiple Configurations Analysis. Photonics 2023, 10, 954. https://doi.org/10.3390/photonics10090954


Chicago/Turabian Style: Jiang, Qubo, and Zhiyuan Guo. 2023. "AR-HUD Optical System Design and Its Multiple Configurations Analysis" Photonics 10, no. 9: 954. https://doi.org/10.3390/photonics10090954
