Detection and Classification of Saffron Adulterants by Vis-Nir Imaging, Chemical Analysis, and Soft Computing

Abstract

1. Introduction

2. Experiment

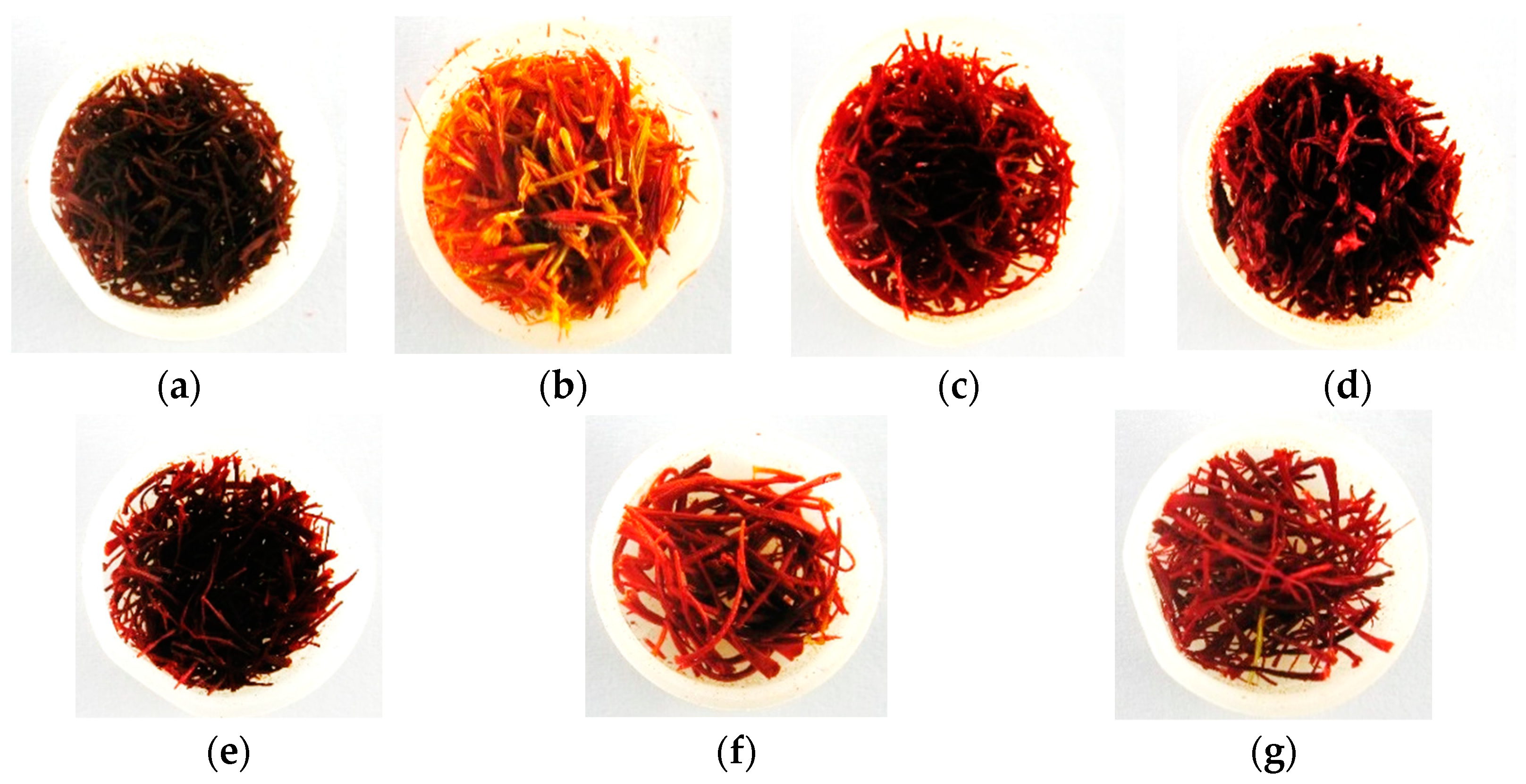

2.1. Sample Preparation

2.2. RGB Images Acquisition

2.2.1. Image Segmentation

2.2.2. Extraction of Color Components

2.2.3. Texture Features

2.3. Spectral (Red and NIR) Imaging

2.4. Chemical Analysis

2.5. Classifier Models

2.5.1. Neural Networks

2.5.2. Support Vector Machine (SVM)

2.5.3. K-Nearest Neighbor Classifier (KNN) Algorithm

2.5.4. Evaluation of Classification Performance

2.6. Software

3. Results and Discussion

3.1. The Results of Chemical Analysis

3.2. Detection and Classification Using Vis-NIR Spectral Imaging

3.3. Detection and Classification Using RGB Imaging

3.4. Evaluation of Classifiers Performance

3.5. Design and Evaluation of RBF Neural Network Based on Vis-NIR Results

3.6. Design and Evaluation of RBF Neural Network Based on RGB Results

3.7. Comparison with Similar Works

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Nomenclature | Definition |
|---|---|
| F1 | Dyed citrus blossom |
| F2 | Safflower |
| F3 | Mixed stamen and dyed straw |
| F4 | Fiber |
| G1 | Microwave-dried saffron |
| G2 | Freeze-dried saffron |
| G3 | Hot-air-dried saffron |
| RGB | Red, green, and blue color components, respectively |
| HSI | Hue, saturation, and intensity color components, respectively |
| L*a*b* | Luminance, the red-green axis, and the blue-yellow axis color components, respectively |
| RBF | Radial basis function |
| MLP | Multilayer perceptron |
| KNN | K-nearest neighbor |
| SVM | Support vector machine |
| SOM | Self-organized map |
| LVQ | Learning vector quantization |

References

1. Ebrahimzadeharvanaghi, S.; Arkun, G. Investigating the Chemical Composition of Saffron (Crocus sativus) Growing in Different Geographic Regions. Asian J. Agric. Food Sci. 2018, 6, 1–6.

2. Gresta, F.; Lombardo, G.; Siracusa, L.; Ruberto, G. Saffron, an alternative crop for sustainable agricultural systems. A review. Agron. Sustain. Dev. 2008, 28, 95–112.

3. Kumar, R.; Singh, V.; Devi, K.; Sharma, M.; Singh, M.; Ahuja, P.S. State of art of saffron (Crocus sativus L.) agronomy: A comprehensive review. Food Rev. Int. 2008, 25, 44–85.

4. Shahandeh, H. Soil conditions for sustainable saffron production. In Saffron; Elsevier: Amsterdam, The Netherlands, 2020; pp. 59–66.

5. Jiang, M.; Kulsing, C.; Nolvachai, Y.; Marriott, P.J. Two-dimensional retention indices improve component identification in comprehensive two-dimensional gas chromatography of saffron. Anal. Chem. 2015, 87, 5753–5761.

6. Sereshti, H.; Poursorkh, Z.; Aliakbarzadeh, G.; Zarre, S. Quality control of saffron and evaluation of potential adulteration by means of thin layer chromatography-image analysis and chemometrics methods. Food Control 2018, 90, 48–57.

7. Guijarro-Díez, M.; Castro-Puyana, M.; Crego, A.L.; Marina, M.L. Detection of saffron adulteration with gardenia extracts through the determination of geniposide by liquid chromatography–mass spectrometry. J. Food Compos. Anal. 2017, 55, 30–37.

8. Petrakis, E.A.; Cagliani, L.R.; Tarantilis, P.A.; Polissiou, M.G.; Consonni, R. Sudan dyes in adulterated saffron (Crocus sativus L.): Identification and quantification by 1H NMR. Food Chem. 2017, 217, 418–424.

9. Villa, C.; Costa, J.; Oliveira, M.B.P.; Mafra, I. Novel quantitative real-time PCR approach to determine safflower (Carthamus tinctorius) adulteration in saffron (Crocus sativus). Food Chem. 2017, 229, 680–687.

10. Kiani, S.; Minaei, S.; Ghasemi-Varnamkhasti, M. Integration of computer vision and electronic nose as non-destructive systems for saffron adulteration detection. Comput. Electron. Agric. 2017, 141, 46–53.

11. Mohamadzadeh Moghadam, M.; Taghizadeh, M.; Sadrnia, H.; Pourreza, H.R. Nondestructive classification of saffron using color and textural analysis. Food Sci. Nutr. 2020, 8, 1923–1932.

12. Aghaei, Z.; Jafari, S.M.; Dehnad, D. Effect of different drying methods on the physicochemical properties and bioactive components of saffron powder. Plant Foods Hum. Nutr. 2019, 74, 171–178.

13. Minaei, S.; Kiani, S.; Ayyari, M.; Ghasemi-Varnamkhasti, M. A portable computer-vision-based expert system for saffron color quality characterization. J. Appl. Res. Med. Aromat. Plants 2017, 7, 124–130.

14. Aggarwal, S.; Gupta, S.; Gupta, D.; Gulzar, Y.; Juneja, S.; Alwan, A.A.; Nauman, A. An Artificial Intelligence-Based Stacked Ensemble Approach for Prediction of Protein Subcellular Localization in Confocal Microscopy Images. Sustainability 2023, 15, 1695.

15. Alighaleh, P.; Khosravi, H.; Rohani, A.; Saeidirad, M.H.; Einafshar, S. The detection of saffron adulterants using a deep neural network approach based on RGB images taken under uncontrolled conditions. Expert Syst. Appl. 2022, 198, 116890.

16. Momeny, M.; Neshat, A.A.; Jahanbakhshi, A.; Mahmoudi, M.; Ampatzidis, Y.; Radeva, P. Grading and fraud detection of saffron via learning-to-augment incorporated Inception-v4 CNN. Food Control 2023, 147, 109554.

17. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

18. Wu, D.; Sun, D.-W. Advanced applications of hyperspectral imaging technology for food quality and safety analysis and assessment: A review—Part I: Fundamentals. Innov. Food Sci. Emerg. Technol. 2013, 19, 1–14.

19. D’Archivio, A.A.; Maggi, M.A. Geographical identification of saffron (Crocus sativus L.) by linear discriminant analysis applied to the UV–visible spectra of aqueous extracts. Food Chem. 2017, 219, 408–413.

20. Li, S.; Shao, Q.; Lu, Z.; Duan, C.; Yi, H.; Su, L. Rapid determination of crocins in saffron by near-infrared spectroscopy combined with chemometric techniques. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2018, 190, 283–289.

21. Li, S.; Xing, B.; Lin, D.; Yi, H.; Shao, Q. Rapid detection of saffron (Crocus sativus L.) adulterated with lotus stamens and corn stigmas by near-infrared spectroscopy and chemometrics. Ind. Crops Prod. 2020, 152, 112539.

22. Manley, M. Near-infrared spectroscopy and hyperspectral imaging: Non-destructive analysis of biological materials. Chem. Soc. Rev. 2014, 43, 8200–8214.

23. Verma, B. Image processing techniques for grading & classification of rice. In Proceedings of the 2010 International Conference on Computer and Communication Technology (ICCCT), Allahabad, India, 17–19 September 2010; pp. 220–223.

24. Pazoki, A.; Pazoki, Z. Classification system for rain fed wheat grain cultivars using artificial neural network. Afr. J. Biotechnol. 2011, 10, 8031–8038.

25. Manimekalai, K.; Vijaya, M. Taxonomic classification of Plant species using support vector machine. J. Bioinform. Intell. Control 2014, 3, 65–71.

26. Rohani, A.; Mamarabadi, M. Free alignment classification of dikarya fungi using some machine learning methods. Neural Comput. Appl. 2019, 31, 6995–7016.

27. Amirvaresi, A.; Nikounezhad, N.; Amirahmadi, M.; Daraei, B.; Parastar, H. Comparison of near-infrared (NIR) and mid-infrared (MIR) spectroscopy based on chemometrics for saffron authentication and adulteration detection. Food Chem. 2021, 344, 128647.

28. Sabzi, S.; Abbaspour-Gilandeh, Y.; Garcia-Mateos, G. A fast and accurate expert system for weed identification in potato crops using metaheuristic algorithms. Comput. Ind. 2018, 98, 80–89.

29. Panigrahi, K.P.; Das, H.; Sahoo, A.K.; Moharana, S.C. Maize leaf disease detection and classification using machine learning algorithms. In Progress in Computing, Analytics and Networking; Springer: Berlin/Heidelberg, Germany, 2020; pp. 659–669.

30. Wang, A.; Zhang, W.; Wei, X. A review on weed detection using ground-based machine vision and image processing techniques. Comput. Electron. Agric. 2019, 158, 226–240.

31. Bakhshipour, A.; Jafari, A.; Nassiri, S.M.; Zare, D. Weed segmentation using texture features extracted from wavelet sub-images. Biosyst. Eng. 2017, 157, 1–12.

32. Woods, R.E.; Eddins, S.L.; Gonzalez, R.C. Digital Image Processing Using MATLAB; Gatesmark Publishing: Knoxville, TN, USA, 2009.

33. Ghanei Ghooshkhaneh, N.; Golzarian, M.R.; Mamarabadi, M. Detection and classification of citrus green mold caused by Penicillium digitatum using multispectral imaging. J. Sci. Food Agric. 2018, 98, 3542–3550.

34. Balasundaram, D.; Burks, T.; Bulanon, D.; Schubert, T.; Lee, W. Spectral reflectance characteristics of citrus canker and other peel conditions of grapefruit. Postharvest Biol. Technol. 2009, 51, 220–226.

35. Blasco, J.; Ortiz, C.; Sabater, M.D.; Molto, E. Early detection of fungi damage in citrus using NIR spectroscopy. In Proceedings of the Biological Quality and Precision Agriculture II, Boston, MA, USA, 6–8 November 2000; pp. 47–54.

36. Vakil-Baghmisheh, M.-T.; Pavešić, N. Premature clustering phenomenon and new training algorithms for LVQ. Pattern Recognit. 2003, 36, 1901–1912.

37. Rezaei, M.; Rohani, A.; Heidari, P.; Lawson, S. Using soft computing and leaf dimensions to determine sex in immature Pistacia vera genotypes. Measurement 2021, 174, 108988.

38. Taki, M.; Ajabshirchi, Y.; Ranjbar, S.F.; Rohani, A.; Matloobi, M. Heat transfer and MLP neural network models to predict inside environment variables and energy lost in a semi-solar greenhouse. Energy Build. 2016, 110, 314–329.

39. Taki, M.; Mehdizadeh, S.A.; Rohani, A.; Rahnama, M.; Rahmati-Joneidabad, M. Applied machine learning in greenhouse simulation; new application and analysis. Inf. Process. Agric. 2018, 5, 253–268.

40. Boser, B.E.; Guyon, I.M.; Vapnik, V.N. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, Pittsburgh, PA, USA, 27–29 July 1992; pp. 144–152.

41. Sengupta, S.; Lee, W.S. Identification and determination of the number of immature green citrus fruit in a canopy under different ambient light conditions. Biosyst. Eng. 2014, 117, 51–61.

42. Khamis, H.S.; Cheruiyot, K.W.; Kimani, S. Application of k-nearest neighbour classification in medical data mining. Int. J. Inf. Commun. Technol. Res. 2014, 4, 121–128.

43. Yu, Z.; Chen, H.; Liu, J.; You, J.; Leung, H.; Han, G. Hybrid k-nearest neighbor classifier. IEEE Trans. Cybern. 2015, 46, 1263–1275.

44. Kumar, R.; Goyal, M.K.; Ahmed, P.; Kumar, A. Unconstrained handwritten numeral recognition using majority voting classifier. In Proceedings of the 2012 2nd IEEE International Conference on Parallel, Distributed and Grid Computing, Solan, India, 6–8 December 2012; pp. 284–289.

45. ISO. Spices—Saffron (Crocus sativus L.); The International Organization for Standardization: Geneva, Switzerland, 2010; p. 38.

46. Heidari, P.; Rezaei, M.; Rohani, A. Soft computing-based approach on prediction promising pistachio seedling base on leaf characteristics. Sci. Hortic. 2020, 274, 109647.

47. Lu, H.; Jiang, W.; Ghiassi, M.; Lee, S.; Nitin, M. Classification of Camellia (Theaceae) species using leaf architecture variations and pattern recognition techniques. PLoS ONE 2012, 7, e29704.

48. Heidarbeigi, K.; Mohtasebi, S.S.; Foroughirad, A.; Ghasemi-Varnamkhasti, M.; Rafiee, S.; Rezaei, K. Detection of adulteration in saffron samples using electronic nose. Int. J. Food Prop. 2015, 18, 1391–1401.

49. Heidarbeigi, K.; Mohtasebi, S.S.; Rafiee, S.; Ghasemi-Varnamkhasti, M.; Rezaei, K.; Rodriguez-Mendez, M.L. An electronic tongue design for the detection of adulteration in saffron samples. Iran. J. Biosyst. Eng. 2015, 46, 405–413.

50. Aiello, D.; Siciliano, C.; Mazzotti, F.; Di Donna, L.; Athanassopoulos, C.M.; Napoli, A. A rapid MALDI MS/MS based method for assessing saffron (Crocus sativus L.) adulteration. Food Chem. 2020, 307, 125527.

| Color Space | Channel | Transformation from RGB |
|---|---|---|
| rgb | R | r = R/(R + G + B) |
| | G | g = G/(R + G + B) |
| | B | b = B/(R + G + B) |
| XYZ | X | X = 0.607R + 0.174G + 0.200B |
| | Y | Y = 0.299R + 0.587G + 0.114B |
| | Z | Z = 0.066G + 1.116B |
| HSI | H | |
| | S | |
| | I | |
| L*a*b* | L* | |
| | a* | |
| | b* | |
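The rgb and XYZ rows of the table above are simple per-pixel formulas, so they can be illustrated directly. The following is a minimal sketch, not the authors' implementation: the function names `normalized_rgb` and `rgb_to_xyz` are hypothetical, and returning zeros for a black pixel (where R + G + B = 0) is an assumption that the table does not address. The HSI and L*a*b* rows are not covered because their formulas are not given in the table.

```python
def normalized_rgb(R, G, B):
    """Normalized rgb: each channel divided by the channel sum R + G + B."""
    total = R + G + B
    if total == 0:
        # Assumption (not from the source): a black pixel maps to zeros
        # rather than raising a division-by-zero error.
        return 0.0, 0.0, 0.0
    return R / total, G / total, B / total


def rgb_to_xyz(R, G, B):
    """XYZ from RGB using the linear coefficients listed in the table."""
    X = 0.607 * R + 0.174 * G + 0.200 * B
    Y = 0.299 * R + 0.587 * G + 0.114 * B
    Z = 0.066 * G + 1.116 * B
    return X, Y, Z


if __name__ == "__main__":
    pixel = (200, 60, 40)  # hypothetical reddish pixel, 8-bit channel values
    print("rgb:", normalized_rgb(*pixel))
    print("XYZ:", rgb_to_xyz(*pixel))
```

Because the normalized channels always sum to 1 for a non-black pixel, they describe color independently of overall brightness, which is presumably why they are listed alongside the raw RGB values.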

| Training Algorithm | Symbol | Function |
|---|---|---|
| Levenberg–Marquardt backpropagation | T1 | trainlm |
| Bayesian regularization | T2 | trainbr |
| Scaled conjugate gradient backpropagation | T3 | trainscg |
| Resilient backpropagation (Rprop) | T4 | trainrp |
| Variable learning rate backpropagation | T5 | traingdx |
| Gradient descent with momentum backpropagation | T6 | traingdm |
| Gradient descent with adaptive learning rate backpropagation | T7 | traingda |
| Gradient descent backpropagation | T8 | traingd |
| BFGS quasi-Newton backpropagation | T9 | trainbfg |
| Powell–Beale conjugate gradient backpropagation | T10 | traincgb |
| Fletcher–Powell conjugate gradient backpropagation | T11 | traincgf |
| Polak–Ribiere conjugate gradient backpropagation | T12 | traincgp |
| One step secant backpropagation | T13 | trainoss |

| Classifier | RGB Features: Train (%) | RGB Features: Test (%) | RGB Features: Total (%) | NIR Features: Train (%) | NIR Features: Test (%) | NIR Features: Total (%) |
|---|---|---|---|---|---|---|
| RBF | 99.70 | 98.81 | 99.52 | 94.78 | 94.82 | 94.79 |
| MLP | 99.78 | 97.32 | 99.29 | 89.66 | 89.66 | 89.58 |
| KNN | 100 | 88.10 | 97.62 | 100 | 79.31 | 95.83 |
| SVM | 100 | 71.43 | 94.29 | 99.13 | 75.86 | 94.44 |
| SOM | 89.29 | 89.29 | 89.29 | 80 | 72.41 | 78.47 |
| LVQ | 52.68 | 52.38 | 52.62 | 43.48 | 40 | 43.4 |

| Set | F1 | F2 | F3 | F4 | G1 | G3 | G2 | All |
|---|---|---|---|---|---|---|---|---|
| Train | 100 | 96.87 | 87.5 | 90.62 | 90.62 | 97.14 | 100 | 94.78 |
| Test | 100 | 100 | 87.5 | 87.5 | 87.5 | 100 | 100 | 94.82 |
| Total | 100 | 97.5 | 87.5 | 90 | 90 | 97.72 | 100 | 94.79 |

| Training Size (%) | Train | Test | Total |
|---|---|---|---|
| 80 | 94.78 | 94.82 | 94.79 |
| 70 | 95.05 | 93.02 | 94.44 |
| 60 | 94.19 | 93.1 | 93.75 |
| 50 | 93.75 | 90.97 | 92.36 |

| Features | Train | Test | Total |
|---|---|---|---|
| All | 99.70 | 98.81 | 99.52 |
| Selected (14 features) | 99.70 | 98.81 | 99.52 |
| HSI | 99.11 | 98.81 | 99.05 |
| RGB | 92.26 | 91.76 | 92.14 |
| L*a*b* | 89.88 | 92.86 | 90.48 |
| HSIstd | 88.39 | 88.1 | 88.33 |
| HSIave | 88.39 | 83.33 | 87.38 |
| RGBave | 82.14 | 85.71 | 82.86 |
| L*a*b*ave | 79.46 | 89.29 | 81.43 |
| RGBstd | 77.98 | 82.14 | 78.81 |
| L*a*b*std | 69.94 | 70.24 | 70.00 |
| Texture | 62.50 | 65.48 | 63.10 |

| Set | F1 | F2 | F3 | F4 | G1 | G3 | G2 | All |
|---|---|---|---|---|---|---|---|---|
| Train | 100 | 100 | 97.92 | 100 | 100 | 100 | 100 | 99.70 |
| Test | 100 | 100 | 91.67 | 100 | 100 | 100 | 100 | 98.81 |
| Total | 100 | 100 | 96.67 | 100 | 100 | 100 | 100 | 99.52 |

| Training Size (%) | Train | Test | Total |
|---|---|---|---|
| 80 | 99.70 | 98.81 | 99.52 |
| 70 | 99.66 | 98.41 | 99.29 |
| 60 | 99.21 | 98.25 | 98.83 |
| 50 | 98.57 | 97.62 | 98.10 |
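A quick consistency check on the table above: if the Total column is the accuracy over the pooled training and test samples, it should equal the average of the Train and Test accuracies weighted by the split fractions. The sketch below tests that reading. It assumes the split fractions are exactly the nominal training sizes, which the table does not state, and the names `RGB_ROWS`, `weighted_total`, and `max_deviation` are illustrative.

```python
# Rows copied from the table above:
# (training fraction, train accuracy %, test accuracy %, reported total %)
RGB_ROWS = [
    (0.80, 99.70, 98.81, 99.52),
    (0.70, 99.66, 98.41, 99.29),
    (0.60, 99.21, 98.25, 98.83),
    (0.50, 98.57, 97.62, 98.10),
]


def weighted_total(train_fraction, train_acc, test_acc):
    """Pooled accuracy as the split-weighted mean of train and test accuracy."""
    return train_fraction * train_acc + (1.0 - train_fraction) * test_acc


def max_deviation(rows):
    """Largest absolute gap between the recomputed and reported totals."""
    return max(
        abs(weighted_total(frac, train, test) - total)
        for frac, train, test, total in rows
    )


if __name__ == "__main__":
    for frac, train, test, total in RGB_ROWS:
        recomputed = weighted_total(frac, train, test)
        print(f"{int(frac * 100)}% training: recomputed {recomputed:.2f}, reported {total:.2f}")
    print(f"max deviation: {max_deviation(RGB_ROWS):.3f}")
```

Under these assumptions the recomputed totals agree with the reported ones to within rounding, which supports reading Total as accuracy over all samples. The same check can be applied to the Vis-NIR training-size table by substituting its rows.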

| Method | Objective | Method Type | Accuracy | Reference |
|---|---|---|---|---|
| Near-infrared spectroscopy | Determination of crocin | Destructive | 93.4–96.3% | [20] |
| Computer vision | Saffron color quality characterization | Non-destructive | 99% | [13] |
| Deep learning | Detection of saffron adulteration | Non-destructive | 99.8% | [15] |
| Electronic nose | Detection of saffron adulteration | Destructive | 86.87–100% | [48] |
| Electronic tongue | Detection of saffron adulteration | Destructive | 86.21–96.15% | [49] |
| Near-infrared spectroscopy and chemometrics | Detection of saffron adulteration | Destructive | 99% | [21] |
| Matrix-assisted laser desorption ionization mass spectrometry (MALDI-MS/MS) | Detection of saffron adulteration | Destructive | 99% | [50] |
| Proposed method | Genuine and fake saffron classification | Non-destructive | 99.52% | This work |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alighaleh, P.; Pakdel, R.; Ghanei Ghooshkhaneh, N.; Einafshar, S.; Rohani, A.; Saeidirad, M.H. Detection and Classification of Saffron Adulterants by Vis-Nir Imaging, Chemical Analysis, and Soft Computing. Foods 2023, 12, 2192. https://doi.org/10.3390/foods12112192
